Chatbots are one of the easiest entry points into AI. They help teams access information faster and reduce routine workload. But their value stops at the conversation. They don’t understand your workflows, can’t execute tasks or integrate deeply into your systems, and don’t improve based on operational outcomes. As a result, they can’t move a business process forward.

Meanwhile, many companies are already shifting from conversational interfaces to AI capabilities that live within business operations: assistants embedded in tools, agents that automate multi-step tasks, and early multi-agent systems that coordinate entire workflows. The competitive advantage comes from this deeper operational integration.

After three years of experimenting with 900 AI pilots, Johnson & Johnson now prioritizes the 10–15% of initiatives that deliver meaningful operational value. The company’s CIO said, “We had the right plan three years ago, but we matured our plan based on three years of understanding.”

They believed in AI, invested in custom experiments, and analyzed results. This helped them shape a unique AI strategy. Shortly after this strategic shift became public in Q2 2025, J&J reported a 5.8% year-over-year increase in Q2 sales. That growth can’t be attributed solely to AI. However, it aligns with J&J’s broader goal of using AI to accelerate innovation and strengthen overall performance.

Despite the impressive results of large enterprises, stepping out of the chatbot comfort zone can feel risky for some organizations. You may think, “We don’t have the time or budget for 900 pilots.” But you don’t need that scale, because each business operates under different constraints. As AI technologies mature, they open up more ways to introduce advanced AI safely, so teams can test new capabilities, validate them, and roll back without disrupting core infrastructure. This creates a practical path beyond chatbots and toward AI that understands workflows, acts autonomously within guardrails, and improves as operations evolve.

This guide will help you determine whether advanced AI is viable for your business by examining:

- approaches for integrating AI into business workflows beyond chatbots;

- use cases where each approach is the most beneficial;

- AI system development strategies;

- methods for optimizing AI deployment and use to ensure quick and stable ROI;

- the value from integrating advanced AI solutions.

When chatbots stop delivering value

Chatbots like ChatGPT, Claude, and Gemini provide context-rich responses to human queries. They excel at retrieving information, summarizing content, and assisting with text generation. These capabilities make them useful for knowledge workers and can save up to 4 hours of routine work each week. A recent survey shows that employees find AI chatbots helpful for speeding up their work, but less impactful on improving the quality of work.

Despite their convenience, chatbots operate within a single interaction loop: they wait for a prompt, generate an answer, and return control to the user. This model is helpful for supporting work, but it cannot run a workflow. Business processes depend on multi-step execution, validations, approvals, and coordination across systems. A chatbot can guide an employee through the process of filing an insurance claim, but it cannot process it end-to-end. This reveals a fundamental divide between “explaining work” and “doing work.”

Passive outputs

The request–response mechanism limits a chatbot’s usefulness in operations. Once it completes an answer, it stops. It cannot follow through on tasks, trigger downstream actions, or track progress across systems. Processes that require sequencing, such as onboarding, invoice handling, supply chain updates, or compliance reporting, remain manual because the chatbot cannot execute them.
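To make the difference concrete, here is a minimal sketch that contrasts a single request-response call with a process that keeps track of its own steps. The `OnboardingWorkflow` class, its step names, and the `chatbot_reply` function are hypothetical and exist only for illustration; a real system would call HR or IT APIs where the comment indicates.

```python
from dataclasses import dataclass, field
from typing import Optional


def chatbot_reply(prompt: str) -> str:
    """Request-response: one answer is returned and nothing happens afterwards."""
    return f"Here is how you could handle: {prompt}"


@dataclass
class OnboardingWorkflow:
    """Hypothetical multi-step process that tracks its own progress."""

    steps: list = field(
        default_factory=lambda: ["create_account", "assign_equipment", "schedule_training"]
    )
    completed: list = field(default_factory=list)

    def run_next(self) -> Optional[str]:
        """Execute the next pending step and record it; return None when done."""
        pending = [s for s in self.steps if s not in self.completed]
        if not pending:
            return None
        step = pending[0]
        # A real system would call HR or IT APIs here (assumed, not shown).
        self.completed.append(step)
        return step


print(chatbot_reply("onboarding a new hire"))  # the chatbot stops here

workflow = OnboardingWorkflow()
while (step := workflow.run_next()) is not None:  # the workflow keeps going
    print(f"completed step: {step}")
```

The point of the sketch is the loop at the bottom: the chatbot hands control back after one answer, while the workflow object knows what remains to be done and continues until nothing is pending.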

Memory limitations

This gap becomes even more visible when you consider memory. Chatbots don’t remember past interactions or decisions unless you provide that info each time. But enterprise operations depend on persistence. This includes business rules, past actions, exceptions, service-level agreements, and audit trails. Without the ability to remember and reason over this context, a chatbot can’t own or reliably execute any workflow.
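As a rough illustration of what persistence can look like, the sketch below stores workflow events in an append-only audit log using Python’s built-in `sqlite3` module. The `WorkflowMemory` class, the table layout, and the event names are assumptions made for this example, not a description of any specific product.

```python
import json
import sqlite3
from datetime import datetime, timezone


class WorkflowMemory:
    """Hypothetical persistent store for workflow events (an audit trail)."""

    def __init__(self, path: str = ":memory:") -> None:
        # ":memory:" keeps the example self-contained; pass a file path to persist.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS audit_log ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "workflow_id TEXT, event TEXT, payload TEXT, created_at TEXT)"
        )

    def record(self, workflow_id: str, event: str, payload: dict) -> None:
        """Append one event; existing rows are never updated or deleted."""
        self.conn.execute(
            "INSERT INTO audit_log (workflow_id, event, payload, created_at) "
            "VALUES (?, ?, ?, ?)",
            (workflow_id, event, json.dumps(payload),
             datetime.now(timezone.utc).isoformat()),
        )
        self.conn.commit()

    def history(self, workflow_id: str) -> list:
        """Return (event, payload) pairs for a workflow in insertion order."""
        rows = self.conn.execute(
            "SELECT event, payload FROM audit_log WHERE workflow_id = ? ORDER BY id",
            (workflow_id,),
        ).fetchall()
        return [(event, json.loads(payload)) for event, payload in rows]


memory = WorkflowMemory()
memory.record("claim-001", "received", {"amount": 1200})
memory.record("claim-001", "exception_granted", {"approved_by": "supervisor"})
print(memory.history("claim-001"))
```

An agent that reads `history()` before acting can take earlier exceptions and decisions into account, which a stateless chat session cannot do on its own.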

Lack of integrations

Another barrier is integration depth. Chatbots usually interact at the surface: answering questions, summarizing documents, or fetching bits of information. They aren’t embedded into the internal enterprise systems, such as ERP, CRM, financial tools, or internal databases.

Chatbots stop delivering value when the business expects them to behave like operational AI. This is where the next layer of intelligence (embedded AI, task assistants, workflow engines, and agents) takes companies from “using AI” to running business functions with it.

Chatbots vs. advanced artificial intelligence solutions

Advanced AI becomes useful the moment operational complexity increases. As companies grow, processes become interconnected: procurement affects finance → finance affects the supply chain → the supply chain affects customer experience. A chatbot can’t manage these dependencies.

Its job is to answer questions. Advanced AI systems, on the other hand, perform tasks across systems. They make recommendations, apply business rules, and improve over time. This happens through structured feedback and retraining. As a result, AI becomes an integral part of business workflows.

| Dimension | Traditional AI chatbots | Advanced AI solutions |
|---|---|---|
| Decision scope | Single query → single response | Multi-step reasoning, branching logic, and workflow orchestration |
| Context retention | Session-limited; loses context between interactions | Persistent memory across workflows, tasks, and system states |
| System integration | Simple API calls triggered by user requests | Deep, autonomous AI coordination across multiple systems, tools, and data sources |
| Learning mechanism | Static training; improves only with new model versions | Continuous adaptation from operational data, feedback loops, and performance outcomes |
| Actionability | Can provide an answer or suggestion | Can take actions across systems, trigger and streamline processes, update records, and enforce rules |
| Error handling | Fails silently or asks the user for clarification | Detects anomalies, retries, escalates, logs decisions, and applies guardrails |
| Workflow awareness | No understanding of business processes | Understands process stages, dependencies, approvals, and constraints |
| Operational impact | Improves user productivity in conversations | Automates business operations and decision cycles |
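The “Error handling” row in the table above can be sketched in a few lines. This is a simplified illustration, assuming a hypothetical `run_with_guardrails` helper and treating `ConnectionError` as the only retryable failure; production systems typically add backoff, alerting, and richer escalation paths.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent")


def run_with_guardrails(action: Callable[[], str], max_attempts: int = 3) -> str:
    """Retry a failing action, log every decision, and escalate when retries run out."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = action()
            log.info("attempt %d succeeded", attempt)
            return result
        except ConnectionError as exc:
            log.warning("attempt %d failed: %s", attempt, exc)
    log.error("escalating to a human after %d attempts", max_attempts)
    return "escalated_to_human"


calls = {"count": 0}


def flaky_crm_update() -> str:
    """Hypothetical system call that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("CRM timeout")
    return "record_updated"


print(run_with_guardrails(flaky_crm_update))
```

Unlike a chatbot that fails silently, the wrapper leaves a log entry for each attempt and hands the task to a person instead of dropping it.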

Incremental progress from conversational AI to advanced AI is one of the best strategies for naturally enhancing business operations. It follows the crawl-walk-run framework, where chatbots represent the “crawl” stage, AI assistants the “walk” stage, and agentic systems and networks the “run” stage.

What differentiates each level is the ability not just to respond but to act, and eventually to learn from actions.

As organizations mature, the question shifts from “How do we use chatbots?” to “How do we build AI that operates our workflows?” That is the moment when advanced AI starts creating meaningful business value.

Integrating AI systems: The roadmap from chatbots to agentic networks

The path from basic chat interfaces to autonomous, workflow-driven AI is a four-level maturity curve. Each level builds on the previous one, expanding the extent to which AI contributes to daily operations.

This roadmap helps organizations understand where they stand today and what is required to progress toward systems that act, coordinate, and learn from real business workflows.

Level 1: Assisted intelligence

This type of intelligence includes chatbots as information partners. It’s best suited for workflows in which humans need AI assistance but still do all the work themselves. AI simply optimizes processes, answers queries, and generates content.

Core technologies under the hood: Generative AI, conversational AI, and natural language processing (NLP).

Out-of-the-box solutions: ChatGPT, Claude, Perplexity, DeepSeek, Gemini chat interfaces.

How to customize: Integrate with internal knowledge bases and communication platforms like Slack and Microsoft Teams.

Workflow example: A customer service chatbot that unburdens the support team and provides customers with quick answers.

Business impact: Faster responses and lower support costs, but no meaningful transformation of business processes.

Level 2: Augmented intelligence

At this stage, AI becomes a lightweight decision-support companion. AI copilots and assistants suggest next steps, handle basic tasks, and point out opportunities. However, humans are still responsible for approval and execution. With this level of intelligence, AI augments workflows without owning them.

Core technologies under the hood: Machine learning, generative AI, retrieval augmented generation (RAG), predictive modeling, data analytics, and recommendation engines.

Out-of-the-box solutions: GitHub Copilot for code, HubSpot AI Assistants.

How to customize: Define custom workflows, tune prompts, configure guardrails, and embed copilots directly into internal tools.

Workflow example: A sales copilot recommending outreach sequences or drafting highly personalized emails.

Business impact: Employee productivity improvement with limited impact on business processes.

Level 3: Autonomous workflows

This is the first level where AI moves from supporting work to performing it. Agents can run complete workflows from start to finish within clear limits. They pull data from systems, make decisions, trigger actions, and hand edge cases or high-risk situations over to humans when needed.

Core technologies under the hood: AI agents, workflow engines, structured tool-calling, API orchestration, event-driven automation, vector databases (for context and memory).

Out-of-the-box solutions: Azure AI Agents, Amazon Bedrock Agents, LangChain Agents.

How to customize: Connect via custom APIs, set guardrails, and add evaluation pipelines for quality control.

Workflow example: Automated invoice processing, where an agent extracts data, validates it, checks rules, updates systems, and triggers payments.
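The invoice example above could be sketched roughly as follows. Everything here is illustrative: the `Invoice` fields, the `KNOWN_VENDORS` set, and the `APPROVAL_LIMIT` threshold are assumptions, and the `extract` step stands in for whatever OCR or LLM extraction a real system would use.

```python
from dataclasses import dataclass

APPROVAL_LIMIT = 10_000.0                # hypothetical auto-approval threshold
KNOWN_VENDORS = {"ACME Corp", "Globex"}  # hypothetical vendor master data


@dataclass
class Invoice:
    vendor: str
    amount: float
    po_number: str


def extract(raw: dict) -> Invoice:
    """Stand-in for OCR/LLM extraction; here the fields are already structured."""
    return Invoice(raw["vendor"], float(raw["amount"]), raw.get("po_number", ""))


def validate(invoice: Invoice) -> list:
    """Return a list of problems; an empty list means the invoice is valid."""
    problems = []
    if invoice.amount <= 0:
        problems.append("non-positive amount")
    if not invoice.po_number:
        problems.append("missing PO number")
    if invoice.vendor not in KNOWN_VENDORS:
        problems.append("unknown vendor")
    return problems


def process(raw: dict) -> str:
    """Extract, validate, check rules, then pay or hand over to a human."""
    invoice = extract(raw)
    problems = validate(invoice)
    if problems:
        return "escalate: " + ", ".join(problems)
    if invoice.amount > APPROVAL_LIMIT:
        return "escalate: amount above approval limit"
    # A real agent would update the ERP and call the payment API here (assumed).
    return "payment_triggered"


print(process({"vendor": "ACME Corp", "amount": "420.50", "po_number": "PO-77"}))
print(process({"vendor": "Initech", "amount": "99", "po_number": ""}))
```

The two escalation branches are the “clear limits” described above: routine invoices flow through untouched, while edge cases and high-value payments go to a person.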

Business impact: Significant operational efficiency gains with higher consistency and fewer delays.

Level 4: Agentic networks

At the highest maturity level, multiple specialized agents collaborate to manage interconnected business processes. They communicate through standardized protocols, share context, request actions from one another, and collectively optimize outcomes. Humans shift from task-level supervision to strategic oversight.

Core technologies under the hood: Model Context Protocol (MCP) servers, the Agent-to-Agent (A2A) protocol, shared memory layers, policy-driven orchestration.

Out-of-the-box solutions: Multi-agent frameworks like CrewAI or AutoGen.

How to customize: Design agents around business functions, implement shared context stores, standardize communication, add governance, and human-in-the-loop layers.

Workflow example: Cross-department supply chain optimization, where agents across business departments synchronize decisions in real time:

- procurement agent tracks supplier delays

- logistics agent adjusts delivery routes

- planning agent recalculates inventory needs

- finance agent updates cash-flow forecasts
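A toy version of the four agents listed above might look like this. It is a deliberately simplified sketch: the agents are plain functions that share context through an in-process dictionary rather than through MCP or A2A, and all field names and numbers are made up for illustration.

```python
def procurement_agent(ctx: dict) -> None:
    """Tracks supplier delays and publishes the delay it observed."""
    ctx["delay_days"] = max(0, ctx["actual_lead_days"] - ctx["promised_lead_days"])


def logistics_agent(ctx: dict) -> None:
    """Adjusts the delivery route based on the reported delay."""
    ctx["route"] = "expedited" if ctx["delay_days"] > 0 else "standard"


def planning_agent(ctx: dict) -> None:
    """Recalculates the extra inventory needed to cover the delay."""
    ctx["extra_stock_units"] = ctx["delay_days"] * ctx["daily_demand_units"]


def finance_agent(ctx: dict) -> None:
    """Updates the cash-flow forecast with the cost of the extra stock."""
    ctx["cash_flow_adjustment"] = -ctx["extra_stock_units"] * ctx["unit_cost"]


# Order matters: each agent reads what the previous ones wrote.
AGENTS = [procurement_agent, logistics_agent, planning_agent, finance_agent]


def coordinate(ctx: dict) -> dict:
    """Run every agent once over the shared context and return the result."""
    for agent in AGENTS:
        agent(ctx)
    return ctx


shared_context = {
    "promised_lead_days": 10,
    "actual_lead_days": 15,
    "daily_demand_units": 100,
    "unit_cost": 2.5,
}
print(coordinate(shared_context))
```

In a production agentic network, each function would be a separate service with its own tools and policies, and the shared dictionary would be replaced by a governed context store and a standardized communication protocol.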

Business impact: Deep operational transformation, new service capabilities, and entirely new business models enabled by AI-driven orchestration.

To tap into advanced AI (augmented or agentic), you need to know typical AI development approaches. This includes understanding their business impacts, requirements, technologies, costs, and ROI.

Developing AI systems with learning capabilities: Fine-tuning, no-code, low-code, and code

To develop AI solutions that learn from your workflows and get better over time, you can either fine-tune existing models’ capabilities or develop custom AI solutions from scratch with the help of no-code, low-code, or code-based tools. Each method differs in flexibility, long-term scalability, and adaptability to operational data.

The right choice depends on your workflow complexity, compliance requirements, data maturity, and timeline for achieving meaningful ROI.

AI development with no-code/low-code tools

No-code and low-code platforms such as Microsoft Power Platform, Retool, Glide, Mendix, and Airtable AI enable businesses to build AI-powered apps quickly, without a whole engineering team. These tools support workflow logic, API integrations, vector-based search, document ingestion, and lightweight model prompting. AI can improve as users refine prompts, enhance datasets, or adjust workflow rules.

You interact with these systems via user interfaces that let you compose a custom AI solution from a wide range of drag-and-drop functionality. This way, you focus on the system’s features rather than how it operates under the hood.

No-code/low-code tools improve results over time via:

- iterative prompt optimization (users can refine prompts over time based on what works or doesn’t)

- adding examples (“few-shot” learning) for in-context understanding

- refining workflow rules based on real-time performance analytics

- connecting extra data sources as operations expand
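Behind the drag-and-drop interface, the “adding examples” technique from the list above usually amounts to assembling a prompt from a handful of labeled cases. The sketch below shows the general idea; the `build_few_shot_prompt` helper and the ticket-routing examples are hypothetical, and individual platforms format prompts in their own ways.

```python
def build_few_shot_prompt(instruction: str, examples: list, query: str) -> str:
    """Combine an instruction, labeled examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # The final "Output:" is left empty for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


examples = [
    ("My invoice shows the wrong amount", "billing"),
    ("I can't log in to the portal", "technical_support"),
]

prompt = build_few_shot_prompt(
    "Classify each support ticket into a department.",
    examples,
    "Please update the card on my account",
)
print(prompt)
```

Refining the system over time then means editing the `examples` list as teams see which cases the model gets wrong, with no retraining involved.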

Pros:

- Fastest time-to-value (in hours or days)

- Low learning curve for business users

- Cost-efficient, with no infrastructure or MLOps required

- Supports incremental improvement, letting teams experiment safely before scaling

- Easy integration with SaaS systems (CRM, ERP, analytics)

Cons:

- Limited flexibility for complex or high-stakes workflows

- Restricted access to the underlying model logic

- Scalability may hit a ceiling as workflows become more advanced

- Less control over security, data lineage, and custom reasoning logic

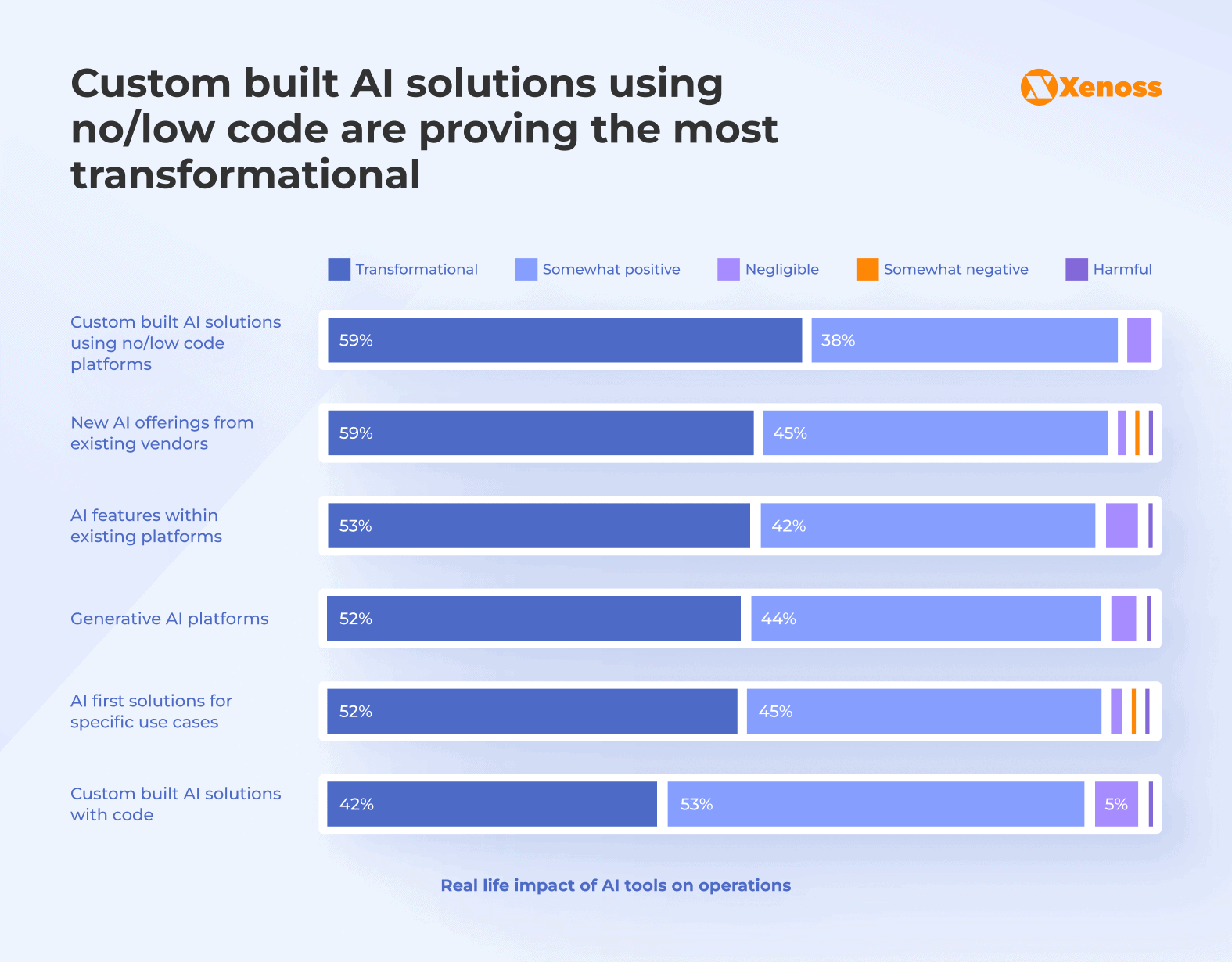

Real-life example: In a survey of 1,000 business leaders, 59% said that custom AI solutions built with no-code and low-code tools proved the most transformative for their business operations. This makes sense from an ROI perspective: these tools let teams quickly automate high-volume, repetitive tasks at low cost, without waiting for engineering resources or long development cycles.

Since the investment is small and the time-to-value is quick, even slight efficiency gains lead to rapid, compounding returns. This makes no-code and low-code options very cost-effective for entering advanced AI.

When to choose: Choose no-code/low-code platforms when you want to step beyond simple AI workflows like chatbots quickly and without heavy development overhead. With these platforms, teams can develop custom AI systems that combine flexibility with speed-to-market, experiment, and spin up workflows quickly.

Fine-tuning existing foundation models

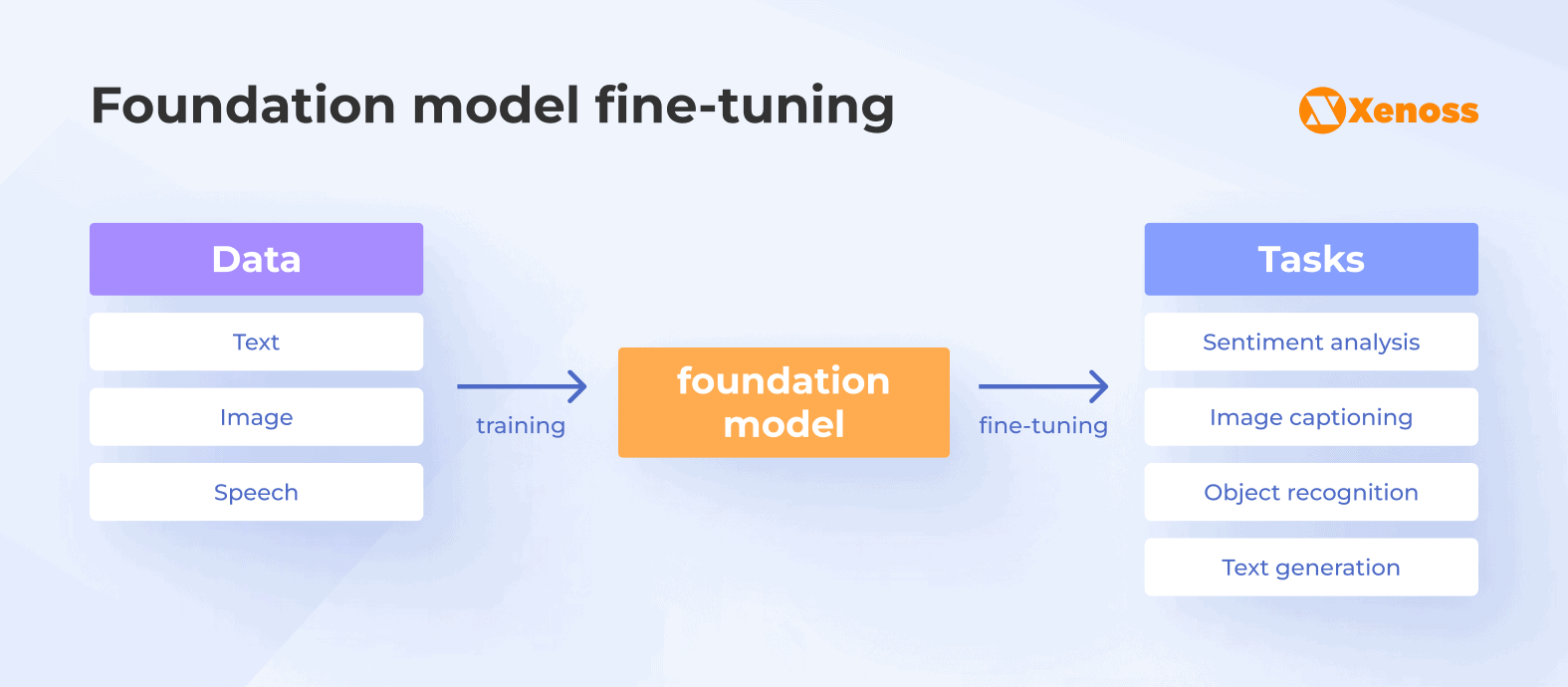

Foundation models are key to generative AI. They include large language models (LLMs), computer vision models, and generative adversarial networks (GANs). While these models are powerful out of the box, they’re still generalized systems trained on broad internet-scale data. To be truly effective for your business, they often need fine-tuning: adjusting the model’s parameters by training it on custom, high-quality datasets to optimize performance and improve output accuracy for a specific domain.

That’s why model fine-tuning requires:

- establishing a reliable data infrastructure with a single source of truth;

- role-based access controls;

- real-time data pipelines.

The most common model fine-tuning methods are:

- supervised fine-tuning (SFT) using labeled instruction data;

- reinforcement learning from human feedback (RLHF).

With these methods, models produce context-aware outputs, follow instructions, and perform domain-specific tasks.
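For supervised fine-tuning, “labeled instruction data” typically means pairs of an input and the desired output, often stored one JSON object per line (JSONL). The sketch below prepares such a file body. The `prompt` and `completion` field names and the sample records are assumptions; each training framework or provider defines its own expected schema, so check the relevant documentation before use.

```python
import json


def to_jsonl(records: list) -> str:
    """Serialize prompt/completion pairs to JSONL, rejecting incomplete records."""
    lines = []
    for index, record in enumerate(records):
        if not record.get("prompt") or not record.get("completion"):
            raise ValueError(f"record {index} is missing a prompt or completion")
        lines.append(
            json.dumps({"prompt": record["prompt"], "completion": record["completion"]})
        )
    return "\n".join(lines)


# Hypothetical domain examples; real datasets need many more.
training_records = [
    {
        "prompt": "Classify the claim: 'Water damage from a burst pipe in the kitchen.'",
        "completion": "property_damage",
    },
    {
        "prompt": "Classify the claim: 'Rear-end collision at a traffic light.'",
        "completion": "auto_collision",
    },
]

print(to_jsonl(training_records))
```

Validating records before training is a small step, but it reflects why the data infrastructure requirements above matter: the model can only learn what the labeled examples actually contain.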

Pros: Fine-tuning an existing model is more cost-efficient than training one from scratch. Plus, with the model trained on your proprietary data, you receive more accurate results than with generalized open-source AI solutions and retain full ownership of a custom solution.

Cons: Fine-tuned models may lack access to up-to-date enterprise data or may “forget” knowledge they learned earlier, a problem known as catastrophic forgetting. To address this, hybrid approaches such as retrieval-augmented fine-tuning (RAFT) have emerged, providing models with continuous access to relevant data while maintaining a high level of explainability through chain-of-thought reasoning.

Real-life example: The Hugging Face team helped the investment firm Capital Fund Management (CFM) implement fine-tuned small language models (SLMs) to solve a financial named-entity recognition (NER) problem.

Although LLMs provide high performance, smaller models are more cost-efficient. Hugging Face used LLMs only to assist with data labeling and ran training and inference with SLMs. This helped the investment company reduce model maintenance costs (SLM inference costs $0.10 per hour, compared with $4.00–$8.00 per hour for LLMs) and achieve high output accuracy.
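To put the hourly rates above in perspective, here is a quick back-of-the-envelope calculation. It assumes the model runs around the clock for a 30-day month (720 hours), which is an assumption for illustration and not a figure from the case study.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: always-on inference for a 30-day month

SLM_RATE = 0.10                        # USD per hour, from the example above
LLM_RATE_LOW, LLM_RATE_HIGH = 4.00, 8.00  # USD per hour, from the example above


def monthly_cost(hourly_rate: float) -> float:
    """Cost of running one always-on inference endpoint for a month."""
    return round(hourly_rate * HOURS_PER_MONTH, 2)


def cost_ratio(llm_rate: float, slm_rate: float) -> float:
    """How many times more expensive the LLM is than the SLM."""
    return round(llm_rate / slm_rate, 1)


print(f"SLM: ${monthly_cost(SLM_RATE):,.2f} per month")
print(f"LLM: ${monthly_cost(LLM_RATE_LOW):,.2f} to ${monthly_cost(LLM_RATE_HIGH):,.2f} per month")
print(f"LLM is {cost_ratio(LLM_RATE_LOW, SLM_RATE)}x to {cost_ratio(LLM_RATE_HIGH, SLM_RATE)}x more expensive")
```

Under these assumptions, the SLM costs about $72 per month, while the LLM costs between $2,880 and $5,760, a 40x to 80x difference per endpoint.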

When to choose: Ideal for specialized workflows such as claims processing, contract review, fraud detection, financial analysis, and medical summarization. Businesses benefit from precision and consistency that generic models cannot deliver.

Custom AI system development with code

Building AI systems with languages such as Python, R, Java, C++, or Rust provides complete control over model behavior, data pipelines, guardrails, evaluation logic, and agent orchestration. Developers can implement reinforcement loops, custom model evaluation, automatic retraining triggers, and multi-agent architectures, developing AI systems that continuously learn from real operational data.

Just like model fine-tuning, custom model development involves establishing a data foundation and building custom data pipelines. The difference is that you also handle model training, hyperparameter tuning, evaluation, and versioning yourself, and you’re responsible for integration, deployment, and model governance.

To enable all of this, you’ll need a dedicated team (machine learning engineers, data engineers, and subject matter experts) and compute infrastructure for model retraining, fine-tuning, and inference (GPUs/TPUs, storage).

Pros:

- Maximum customization and control

- Deep integration into enterprise systems

- Supports advanced guardrails and compliance logic

- Enables faster system evolution into multi-agent or autonomous workflows

Cons:

- The highest initial cost and longer development cycles

- Requires engineering and MLOps expertise

- Ongoing maintenance is needed for scaling, monitoring, and retraining

Real-life example: With direct access to the model’s Python code, a developer built a continuously self-learning AI system. Using Python libraries, a memory buffer, and algorithms such as model-agnostic meta-learning (MAML), he developed an incremental learning loop that enables a model to improve in real time without the need for regular data updates or retraining.

He integrated the system with a real-world fraud-detection mechanism at a financial services company. As a result, the model continuously adapted to emerging fraud patterns and detected new fraud behaviors in real time.
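The general shape of an incremental learning loop with a memory buffer can be shown in plain Python. Note that this sketch is not the developer’s system and does not implement MAML: it swaps in a much simpler technique, online logistic regression with replay of recent examples, and trains on synthetic data. The class name, features, and labeling rule are all hypothetical.

```python
import math
import random
from collections import deque


class OnlineFraudScorer:
    """Logistic regression updated one transaction at a time (not MAML)."""

    def __init__(self, n_features: int, lr: float = 0.1, buffer_size: int = 200) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.lr = lr
        self.buffer = deque(maxlen=buffer_size)  # most recent labeled examples

    def predict_proba(self, x: list) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        z = max(-30.0, min(30.0, z))  # clamp to keep exp() numerically safe
        return 1.0 / (1.0 + math.exp(-z))

    def _sgd_step(self, x: list, y: float) -> None:
        error = self.predict_proba(x) - y  # gradient of log loss w.r.t. z
        self.weights = [w - self.lr * error * xi for w, xi in zip(self.weights, x)]
        self.bias -= self.lr * error

    def learn(self, x: list, y: float, replay: int = 4) -> None:
        """Update on the new example, then replay a few remembered ones."""
        self._sgd_step(x, y)
        self.buffer.append((x, y))
        k = min(replay, len(self.buffer))
        for old_x, old_y in random.sample(list(self.buffer), k):
            self._sgd_step(old_x, old_y)


random.seed(42)
scorer = OnlineFraudScorer(n_features=2)
for _ in range(500):
    amount = random.random()                      # normalized transaction amount
    foreign = 1.0 if random.random() < 0.5 else 0.0
    label = 1.0 if amount > 0.6 else 0.0          # synthetic rule, illustration only
    scorer.learn([amount, foreign], label)

print("large transaction:", round(scorer.predict_proba([0.95, 1.0]), 3))
print("small transaction:", round(scorer.predict_proba([0.05, 1.0]), 3))
```

Replaying buffered examples alongside each new one is a common way to keep an online model from drifting too far toward the most recent data. A production fraud system would also need labeled feedback, monitoring, and safeguards against learning from mislabeled cases.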

When to choose: Suitable for mission-critical AI systems that run complex, multi-system workflows or autonomous agents. This AI development method offers the best long-term ROI. It’s designed to fit your processes, compliance rules, and proprietary data. Ideal for companies needing strong system integration, custom guardrails, scalable automation, or multi-agent orchestration.

AI development approaches: Comparison table, costs, and ROI timelines

| Approach | Key business implications | Requirements | Core technologies | Cost range (realistic) | ROI timeline |
|---|---|---|---|---|---|
| No-code AI (Glide, Airtable AI, PowerApps AI App Builder) | Fastest experimentation; low risk; ideal for prototypes and lightweight workflows | Developers with minimal IT oversight | Prebuilt AI blocks, connectors, and form logic | $30–$150 per user/month or $5k–$30k/year for team plans | 2–8 weeks |
| Low-code AI (Mendix, OutSystems, Retool, Power Platform) | Good for operational tools; scalable with moderate customization; faster than full-code | Business analysts and engineers | Workflow engines, API connectors, ML integrations | $20k–$150k/year, depending on seats and environments | 2–4 months |
| Custom code development (Python, Java, Node, Rust backends, ML pipelines) | Full control; highest flexibility; needed for complex, mission-critical systems | Engineering team; DevOps; MLOps infrastructure | Model serving, vector DBs, orchestration frameworks | $150k–$500k+ on the initial development; $10k–$80k/month on maintenance | 6–18 months |
| Fine-tuning foundation models (OpenAI FT, HuggingFace, Google Vertex AI, Azure AI) | Domain-specific accuracy, competitive moat, and improved complex reasoning | High-quality data, ML engineers, and GPU availability | LLMs, adapters, SFT, RLHF, RAFT, and evaluation pipelines | $50k–$300k per fine-tuned model depending on data & GPUs | 3–9 months |

You can integrate each AI development approach into your workflows in different ways. No-code and low-code might offer less flexibility, but you can still adapt them to fit your processes, data structures, and brand guidelines. Advanced methods, like custom code and fine-tuning, enable deep system integration and autonomous behavior, making them suitable for end-to-end workflow orchestration and multi-agent architectures.

AI model deployment

To deploy AI models, you can integrate via API with cloud providers or AI vendors, use an aggregator or LLM routing service to switch between models, or run models on-premises. The right approach depends on your budget, workload type, latency requirements, privacy constraints, and the degree of autonomy your AI systems need.

| AI model deployment strategy | How you pay | What you’re paying for | Best for |
|---|---|---|---|
| Model hosting via APIs (OpenAI, Anthropic, Google Vertex, Azure OpenAI) | Usage-based token billing (input and output tokens) | Volume, not users; scales with automation demand | Backend services, high-throughput workloads, agentic AI systems |
| Aggregators (e.g., Mammoth.ai, OpenRouter, LangSmith routing) | Flat subscription that abstracts multiple model providers | Routing, unified API, lower combined price than using each model provider separately | Teams requiring reliability, model switching, or cost optimization without overhead |
| Local models (running on laptop/workstation/edge) | Hardware and electricity (compute time) | Open-source models are free; the cost is setup, maintenance, and GPU/CPU time | Privacy-sensitive workloads, offline environments, rapid experimentation, and cost savings |

For instance, the go-to approach can be fine-tuning LLMs to fit your niche and then deploying them in the cloud via APIs to avoid the overhead. A mid-sized company could fine-tune a base model like Llama or Mistral on its own data (customer transcripts, contracts, historical cases, or product specifications) to improve accuracy on domain-specific tasks such as classification, forecasting, or compliance checks.

Once the model is fine-tuned, it can be hosted on a managed platform (Azure AI, AWS Bedrock, GCP Vertex, Hugging Face Inference Endpoints), where the vendor handles scaling, uptime, security patches, and GPU provisioning. This reduces your infrastructure responsibilities to almost zero and turns AI into a predictable, pay-as-you-go operational expense.
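Usage-based token billing and model routing, both mentioned in the deployment table above, can be estimated with simple arithmetic. The sketch below uses placeholder model names and prices that do not correspond to any real provider’s rates, and a hypothetical 1–3 complexity score to decide which model handles a task.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    input_price_per_1m: float   # USD per 1M input tokens (placeholder value)
    output_price_per_1m: float  # USD per 1M output tokens (placeholder value)
    max_complexity: int         # highest task complexity (1-3) this model handles


# Hypothetical catalog; substitute your providers' current price sheets.
CATALOG = [
    ModelOption("small-model", 0.20, 0.80, 1),
    ModelOption("medium-model", 1.00, 4.00, 2),
    ModelOption("large-model", 5.00, 20.00, 3),
]


def request_cost(model: ModelOption, input_tokens: int, output_tokens: int) -> float:
    """Token billing: input and output tokens are priced separately."""
    return (
        input_tokens * model.input_price_per_1m
        + output_tokens * model.output_price_per_1m
    ) / 1_000_000


def route(complexity: int) -> ModelOption:
    """Pick the cheapest model that is allowed to handle the task."""
    eligible = [m for m in CATALOG if m.max_complexity >= complexity]
    if not eligible:
        raise ValueError(f"no model can handle complexity {complexity}")
    return min(eligible, key=lambda m: m.input_price_per_1m + m.output_price_per_1m)


for level in (1, 2, 3):
    model = route(level)
    cost = request_cost(model, input_tokens=2_000, output_tokens=500)
    print(f"complexity {level}: {model.name}, about ${cost:.4f} per request")
```

Because cost scales with volume rather than seats, routing routine requests to a cheaper model is one of the simplest levers for keeping an agentic workload’s operating cost predictable.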

Benefits of integrating AI systems beyond chatbots

Let’s see how a workflow of processing a customer query could look using only an AI chatbot compared to using an agent.

| Step in the workflow | Before (chatbot) | After (AI agent) |
|---|---|---|
| Customer inquiry arrives | An employee reads and interprets the email | Automatically reads and parses the email |
| Order lookup | Chatbot tells the employee how to check the order | Queries the order management system directly |
| Identify the cause of the delay | Chatbot provides general policies or guesses based on text | Pulls real shipment data and determines the exact cause |
| Customer context | Employee manually checks CRM history | Fetches CRM history automatically |
| Decision-making | Employee reviews policies and decides what action to take | Applies business rules (refund? escalate? reship?) |
| Response drafting | Chatbot drafts text; employee edits | Drafts or sends the final personalized email |
| System updates | Employee updates CRM/ticket by hand | Updates CRM records automatically |
| Follow-up actions | Employee sets reminders or tasks | Schedules follow-ups or triggers next steps |
| Human involvement | A complete workflow requires manual effort | Human only reviews exceptions or approvals |
| Overall outcome | Faster information access, but no process automation | A complete end-to-end workflow execution with minimal human effort |

From this table, we can derive the following benefits:

- Less human intervention. With a chatbot, there is constant back-and-forth communication and validation, whereas an agent is autonomous and performs most tasks by itself, requiring human validation only at the end.

- Faster end-to-end resolution. AI-powered chatbots speed up answers, but they don’t speed up the process. Agents shorten the entire workflow, from identifying the issue to updating systems, resulting in faster resolution times.

- Higher accuracy with fewer errors. Agents rely on real system data rather than surface-level text, reducing manual mistakes such as incorrect updates, missed steps, or misinterpreted policies.

- Consistent decision-making. Where a human might change their judgment, agents apply business rules consistently, improving fairness, compliance, and operational predictability.

- Scalable process automation. Agents scale entire workflows, allowing the business to handle more operational load without adding headcount.
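Consistent decision-making comes from encoding policy as explicit rules that the agent evaluates the same way every time. The sketch below illustrates the “refund? escalate? reship?” decision from the comparison table; the `DelayedOrder` fields and every threshold are hypothetical, not recommended policy.

```python
from dataclasses import dataclass

HIGH_VALUE_THRESHOLD = 1_000.0  # hypothetical: orders above this go to a human
MAX_DAYS_LATE = 14              # hypothetical: beyond this, refund automatically


@dataclass
class DelayedOrder:
    days_late: int
    order_value: float
    customer_is_vip: bool
    item_in_stock: bool


def decide(order: DelayedOrder) -> str:
    """Apply the same rules, in the same order, to every delayed order."""
    if order.order_value > HIGH_VALUE_THRESHOLD or order.customer_is_vip:
        return "escalate"  # high-stakes cases stay with a human
    if order.days_late > MAX_DAYS_LATE:
        return "refund"
    if order.item_in_stock:
        return "reship"
    return "refund"        # nothing to reship, so refund


print(decide(DelayedOrder(days_late=3, order_value=80.0, customer_is_vip=False, item_in_stock=True)))
print(decide(DelayedOrder(days_late=20, order_value=80.0, customer_is_vip=False, item_in_stock=True)))
print(decide(DelayedOrder(days_late=3, order_value=2_500.0, customer_is_vip=False, item_in_stock=True)))
```

Keeping the rules in one reviewable function also makes changes auditable: when policy shifts, the thresholds change in one place, and every subsequent decision follows the new rule.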

Moving beyond chatbots doesn’t mean completely replacing human employees with AI. It’s about helping people across many departments perform, reason, and act more freely in complex workflows.

Bryce Hall, an Associate Partner at McKinsey, explains the collaboration of AI and humans this way:

“AI is rarely a stand-alone solution. Instead, companies capture value when they effectively enable employees with real-world domain experience to interact with AI solutions at the right points. The combination of AI solutions alongside human judgment and expertise is what creates real ‘hybrid intelligence’ superpowers and real value capture. AI leaders adopt a set of other practices that point in this same direction, including fully embedding AI solutions into business workflows and having senior leaders actively engaged in driving adoption at scale.”

Final takeaway

The aim of this article wasn’t to convince you to abandon chatbots altogether. They remain extremely valuable for frontline communication, internal Q&A, and fast information access. Chatbots are often the first safe, low-risk step in exploring AI.

But the real, tangible business benefits come from gradually transitioning from conversational AI to workflow-embedded AI and doing so in a structured, measured way to align with your business priorities, risk tolerance, and technical maturity.

The future of AI belongs to multi-agent, multimodal enterprise AI systems that can reason, act, collaborate, and learn across your business workflows. Those who wait will eventually adopt AI out of necessity, while those who start now will adopt it out of opportunity.

With deep expertise in enterprise data engineering and agentic AI, Xenoss helps businesses make this transition safely and strategically, building systems that mature with your operations and position you ahead of the curve.