Generative AI could add $2.6 trillion to $4.4 trillion in annual value to the global economy, more than the entire GDP of the United Kingdom. A significant portion of this economic opportunity stems from empowering employees with AI knowledge bases that provide instant answers to complex questions and automate knowledge-intensive tasks.

McKinsey research indicates that about 75% of generative AI’s value falls across four key areas: customer operations, marketing and sales, software engineering, and research and development. These capabilities position AI-powered systems to either streamline or fully automate the estimated 25% of daily work activities that require natural language understanding.

However, enterprise leaders are discovering that off-the-shelf LLMs like ChatGPT fail to deliver a transformative business impact. While these models excel at general knowledge queries, they lack access to proprietary organizational data and cannot provide answers that align with specific corporate policies, recent process changes, or current strategic objectives.

The solution lies in building enterprise-specific knowledge bases that augment LLMs with real-time access to internal documentation, procedures, and institutional knowledge. These systems ensure AI responses reflect current organizational realities while maintaining compliance with industry regulations and security requirements.

This article examines three distinct approaches to building LLM-powered enterprise knowledge bases using retrieval-augmented generation (RAG): Vanilla RAG, GraphRAG, and agentic RAG architectures.

Vanilla RAG: The foundational approach for enterprise knowledge bases

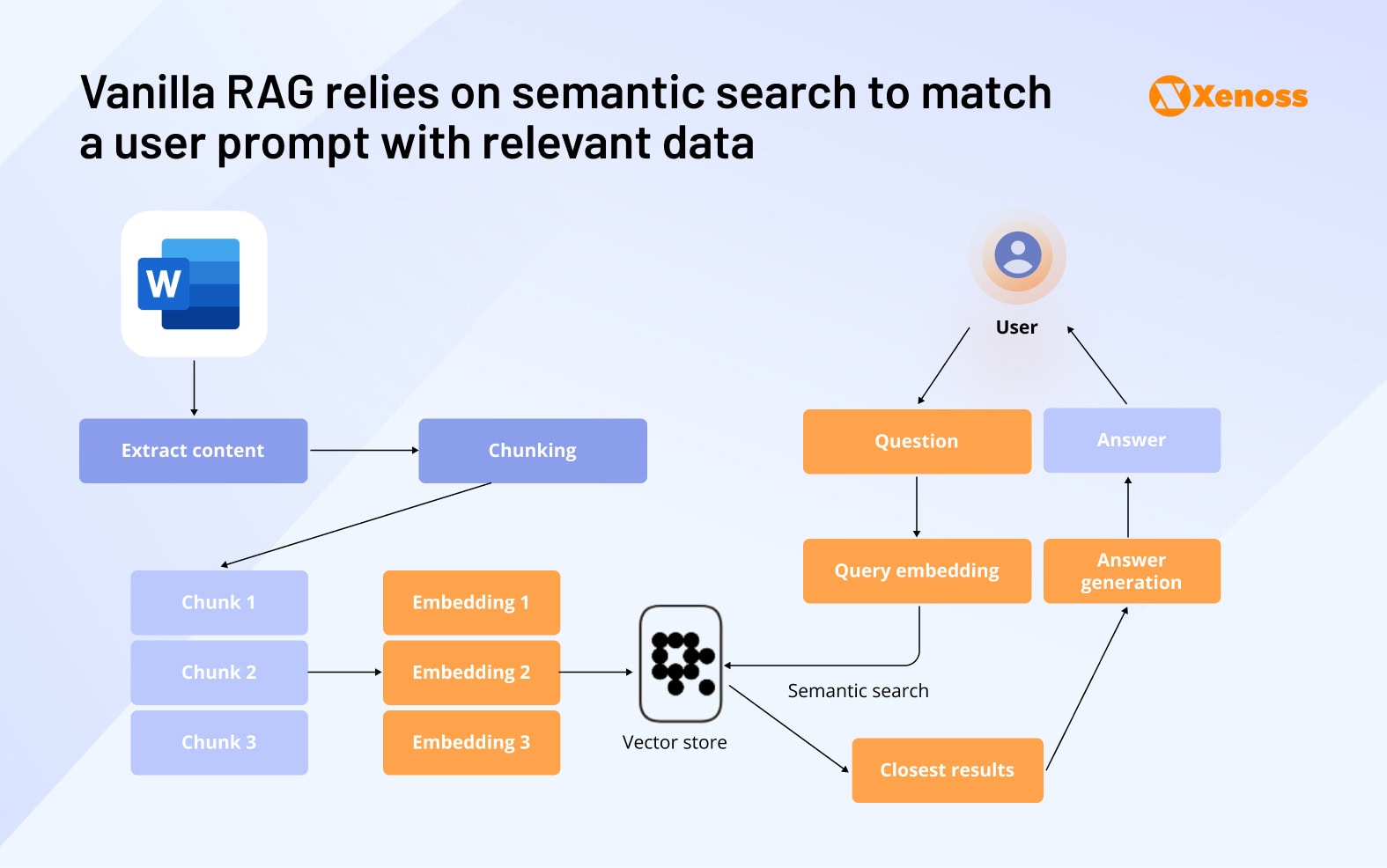

Vanilla RAG represents the traditional methodology for implementing retrieval-augmented generation in enterprise environments. This approach leverages vector databases to store document embeddings and retrieve contextually relevant information. With its proven track record and flexible architecture, Vanilla RAG remains the preferred choice for organizations requiring straightforward, fact-based question-answering capabilities.

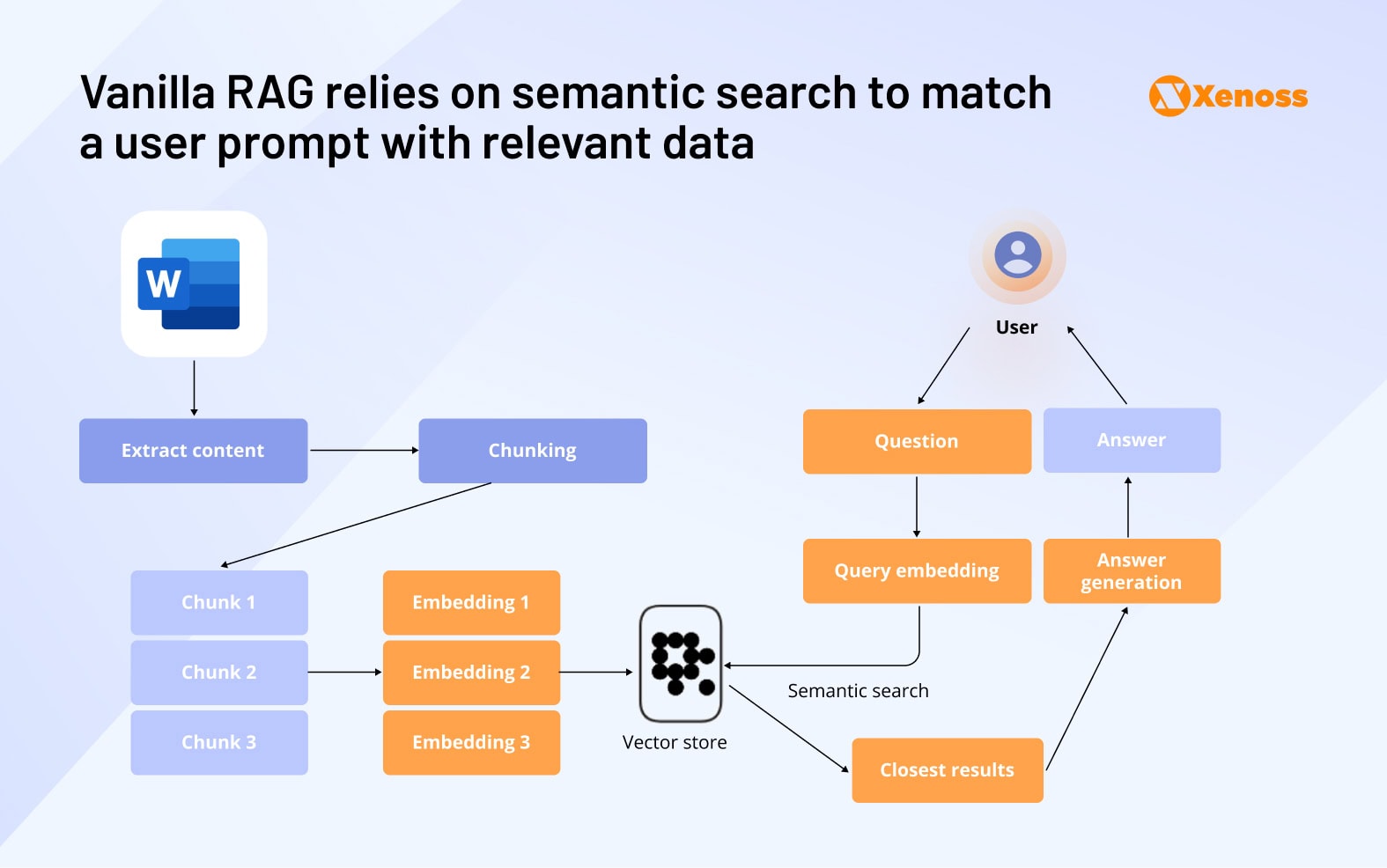

The Vanilla RAG pipeline: five essential components

- Data ingestion and preprocessing

Enterprise documents (policies, procedures, technical documentation, and existing knowledge bases) are loaded into the system. This step determines the scope and quality of information available for user queries.

- Document chunking and embedding creation

Large documents are segmented into smaller, semantically meaningful fragments. These fragments are then transformed into high-dimensional numerical vectors (embeddings) that capture semantic relationships and contextual meaning.

- Vector database implementation

Embeddings are stored in specialized vector databases such as LanceDB, Pinecone, or Weaviate, enabling rapid similarity searches that directly impact query performance and system scalability.

- Semantic data retrieval

When users submit queries, the system converts questions into embeddings and performs similarity searches against the vector database. Retrieved fragments are combined with the original query to create enriched prompts for the LLM.

- Contextual answer generation

The language model processes RAG-augmented prompts containing both user questions and relevant context, generating accurate, up-to-date responses grounded in enterprise-specific knowledge.
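The five steps above can be sketched end to end in a few lines. This is a minimal, offline illustration rather than a production design: the bag-of-words `embed` function stands in for a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for a vector database, and the sample documents are invented.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 40) -> list[str]:
    """Split a document into fixed-size word windows (a naive chunker)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def add(self, fragments: list[str]) -> None:
        self.rows.extend((f, embed(f)) for f in fragments)

    def search(self, query: str, top_k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [fragment for fragment, _ in ranked[:top_k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved fragments with the user question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Ingest, chunk, and index two invented policy snippets.
store = InMemoryVectorStore()
store.add(chunk("Expense reports must be submitted within 30 days of purchase."))
store.add(chunk("Production deployments require approval from the on-call lead."))

# Retrieve the best match and assemble the prompt that would go to the LLM.
question = "Who approves production deployments?"
top_hit = store.search(question, top_k=1)
prompt = build_prompt(question, top_hit)
```

The final generation step is left out on purpose: `prompt` is what would be sent to whichever LLM the team uses.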

Architecture considerations for building a Vanilla RAG knowledge base

Building a RAG copilot comes with challenges that data engineering teams have to address.

Hallucinations

Xenoss engineers recommend implementing multi-layered verification systems that validate LLM responses against source documents in real time. This includes confidence scoring mechanisms, source attribution requirements, and automated fact-checking workflows.
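As a rough illustration of confidence scoring with source attribution, the sketch below scores each sentence of an answer by its token overlap with the retrieved sources. Token overlap is a deliberately crude proxy chosen for this example; the function name, threshold, and sample texts are assumptions, and a real verification layer would likely use a stronger entailment or fact-checking model.

```python
import re


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounding_report(answer: str, sources: dict[str, str], threshold: float = 0.6) -> list[dict]:
    """Score each answer sentence by its best token overlap with any source.

    Each record holds the sentence, the best-matching source id (attribution),
    the overlap score, and whether the score clears the threshold.
    """
    report = []
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    for sentence in sentences:
        sent_tokens = _tokens(sentence)
        best_id, best_score = None, 0.0
        for source_id, text in sources.items():
            overlap = len(sent_tokens & _tokens(text)) / max(len(sent_tokens), 1)
            if overlap > best_score:
                best_id, best_score = source_id, overlap
        report.append({
            "sentence": sentence,
            "source": best_id,
            "confidence": round(best_score, 2),
            "supported": best_score >= threshold,
        })
    return report


# Invented example: the second sentence has no support in the source.
sources = {"policy-7": "Remote employees may expense up to 50 USD per month for internet."}
answer = "Remote employees may expense up to 50 USD per month. Gym memberships are fully covered."
report = grounding_report(answer, sources)
```

Sentences flagged as unsupported could be removed, rewritten, or shown to the user with a warning, depending on how strict the deployment needs to be.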

Enterprise data security and access control

Organizations must establish rigorous document curation processes before ingesting content into RAG databases. Best practices include implementing role-based access controls, excluding personally identifiable information (PII), and maintaining separate knowledge bases for different security clearance levels.
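One way to enforce role-based access is to tag every fragment with the roles allowed to read it and filter before similarity search runs, so restricted text never reaches the prompt. The sketch below assumes a hypothetical `Fragment` type and invented role names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    text: str
    allowed_roles: frozenset[str]  # roles permitted to see this fragment


def visible_fragments(fragments: list[Fragment], user_roles: set[str]) -> list[Fragment]:
    """Keep only fragments the user may read; run this before similarity search."""
    return [f for f in fragments if f.allowed_roles & user_roles]


corpus = [
    Fragment("Office holiday calendar.", frozenset({"employee", "hr"})),
    Fragment("Salary bands by level.", frozenset({"hr"})),
]
engineer_view = visible_fragments(corpus, {"employee"})
hr_view = visible_fragments(corpus, {"hr"})
```

Filtering before retrieval, rather than after generation, means a restricted fragment cannot leak through a paraphrased answer.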

User experience

Successful enterprise knowledge bases feature intuitive interfaces with advanced search capabilities, source citation linking, and contextual navigation tools. Implementing continuous feedback loops allows data teams to monitor system performance and iteratively improve response quality.

Vanilla RAG implementations: Uber and Mercari case studies

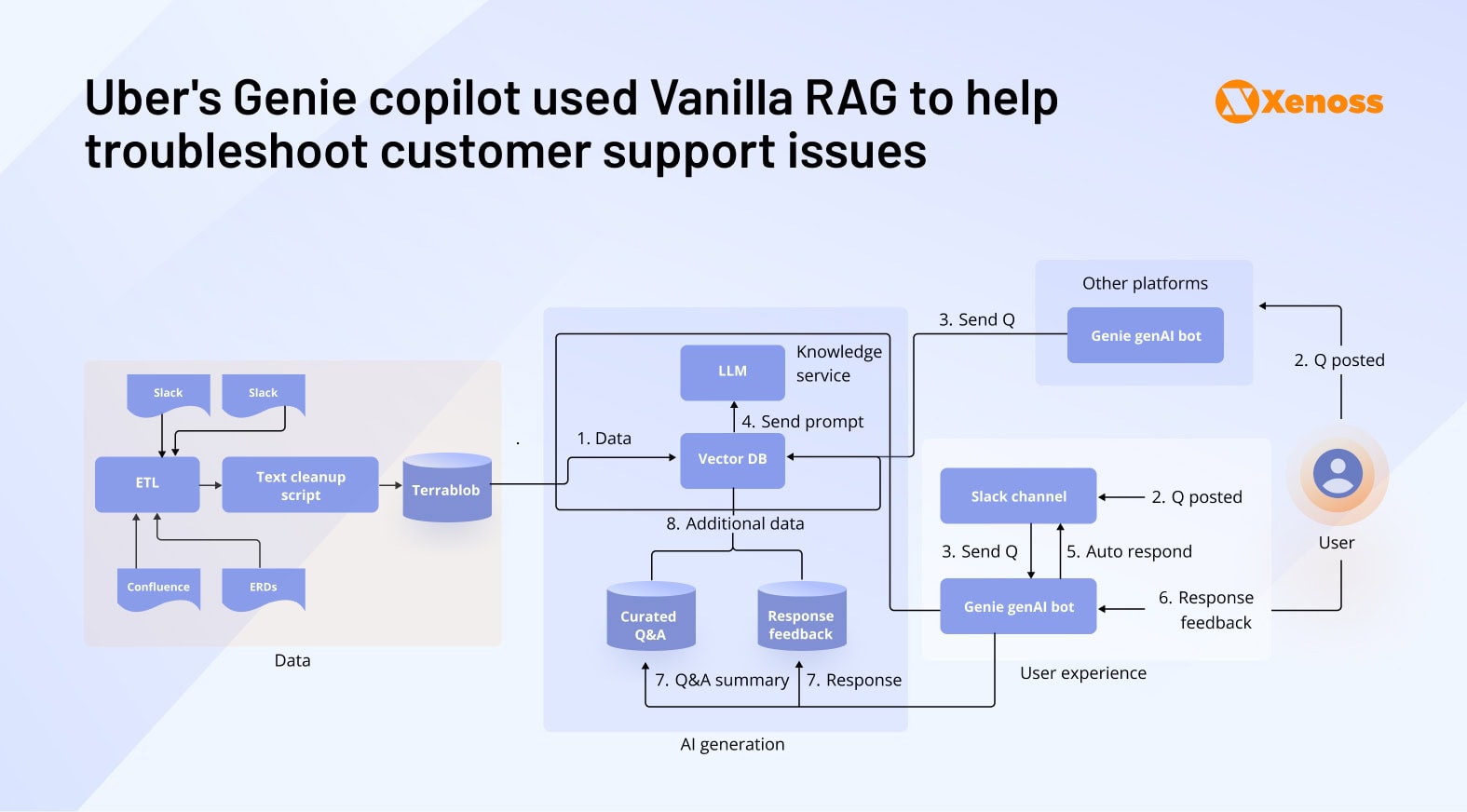

Enterprise organizations across industries have successfully deployed Vanilla RAG systems to streamline internal knowledge management. Two notable implementations, Uber’s Genie copilot and Mercari’s incident management system, demonstrate practical architecture patterns for large-scale deployments.

Uber’s Genie copilot exemplifies effective Vanilla RAG implementation for managing internal support operations. The system follows a streamlined four-step workflow: data engineers scrape internal wikis and Stack Overflow content, transform documents into vector embeddings, store them in databases, and process employee queries through Slack integration for real-time responses.

Data preparation

Uber data engineers developed a custom Spark application with dedicated executors for scalable ETL handling.

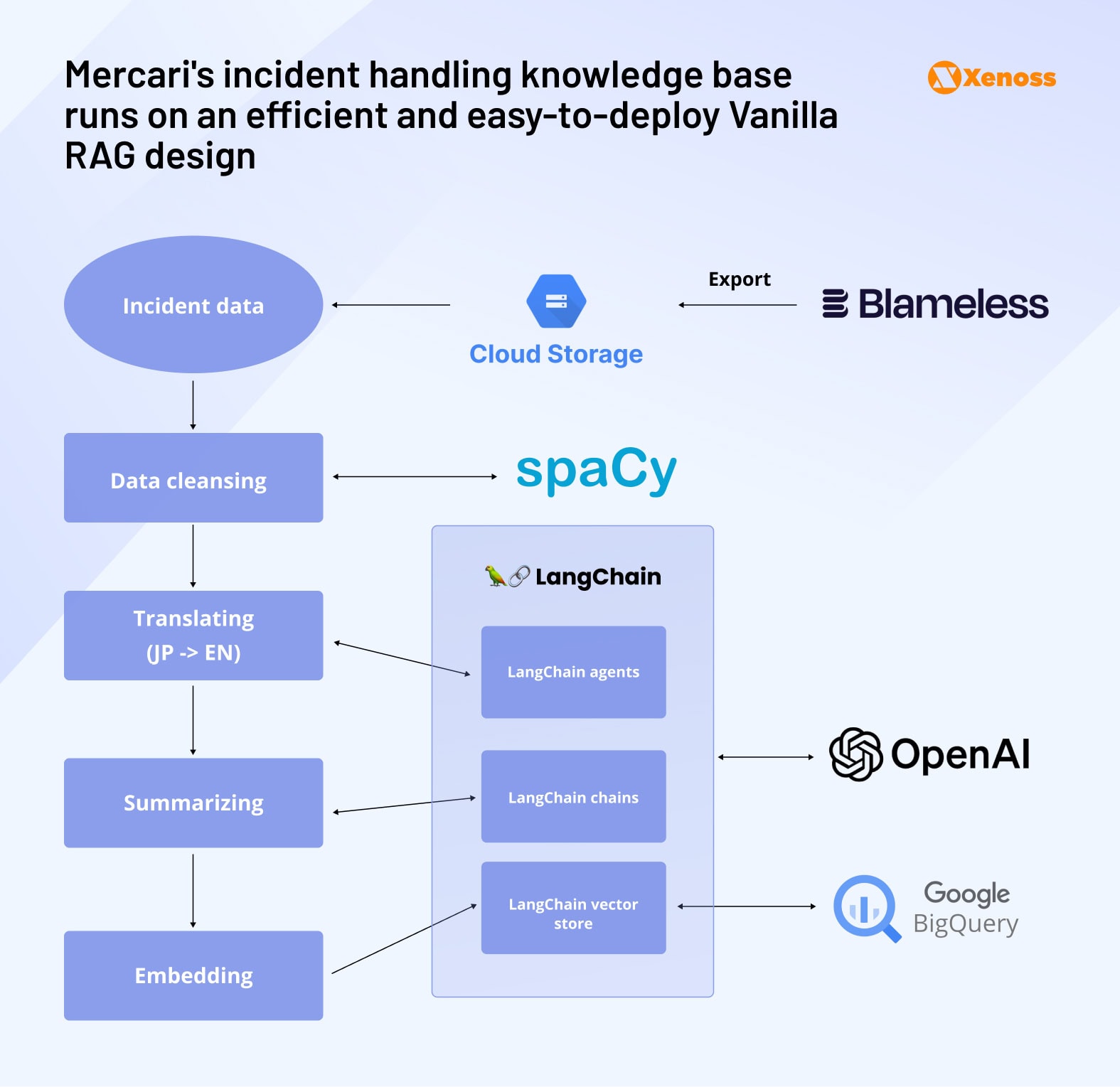

A Japanese e-commerce company, Mercari, implemented a serverless RAG architecture for incident management using Google Cloud infrastructure. The system exports incident data via Blameless, processes it through Google Cloud Scheduler, and runs on Cloud Run Jobs for optimal cost-efficiency.

Data cleansing and PII protection

Ingested data requires preprocessing before embedding creation to ensure vector search precision and eliminate personally identifiable information. Effective cleansing directly impacts cosine similarity accuracy during retrieval operations while maintaining enterprise compliance standards.

Tool selection depends on data formats. Mercari processed Markdown-formatted incident reports using LangChain’s Markdown Splitter function while applying spaCy NLP models to detect and remove PII-associated terms. The team deployed these cleansing operations on Google Cloud Run Functions for serverless scalability and cost optimization.
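The cleansing step can be approximated with the standard library alone. The sketch below is not Mercari's pipeline: it swaps LangChain's splitter for a simple heading-based split and swaps spaCy's NLP models for two regular expressions, so it only catches pattern-shaped PII (emails and phone-like numbers) and would miss names. The sample report is invented.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def split_markdown(doc: str) -> list[dict]:
    """Split a Markdown document into one redacted section per heading."""
    sections, current = [], {"heading": "", "body": []}
    for line in doc.splitlines():
        if line.startswith("#"):
            # Close the previous section unless it is the empty initial one.
            if current["heading"] or current["body"]:
                sections.append(current)
            current = {"heading": line.lstrip("#").strip(), "body": []}
        else:
            current["body"].append(line)
    sections.append(current)
    return [
        {"heading": s["heading"], "body": redact_pii("\n".join(s["body"]).strip())}
        for s in sections
    ]


report_md = """# Summary
Checkout latency spiked for 20 minutes.
# Contacts
Escalate to jane.doe@example.com or +1 415 555 0100.
"""
sections = split_markdown(report_md)
```

Splitting by heading keeps each incident section intact as a retrieval unit, and redacting before embedding ensures PII never enters the vector store.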

Embedding generation and security controls

Cleansed data is transformed into high-dimensional vector embeddings before database storage. Uber engineers leveraged OpenAI embedding models with PySpark UDFs for scalable processing, while Mercari’s team implemented GPT-4-based LangChain translation to convert Japanese incident reports into English before embedding creation.

The embedding process generates structured dataframes that map document fragments to their corresponding vector representations, enabling efficient similarity searches during query processing. Enterprise security requires strict access controls—Uber restricts embedding access to designated Slack channels, ensuring proper governance while maintaining system functionality.

Vector database management

All embeddings are stored in a vector database that can be either an off-the-shelf product like BigQuery or built in-house, like Uber’s internal vector database Sia.

Uber engineers set up two Spark jobs to manage data retrieval.

These jobs are triggered to build and merge the data index. Spark communicates directly with Terrablob, which syncs and downloads each snapshot and index to the Leaf.

Conversation management

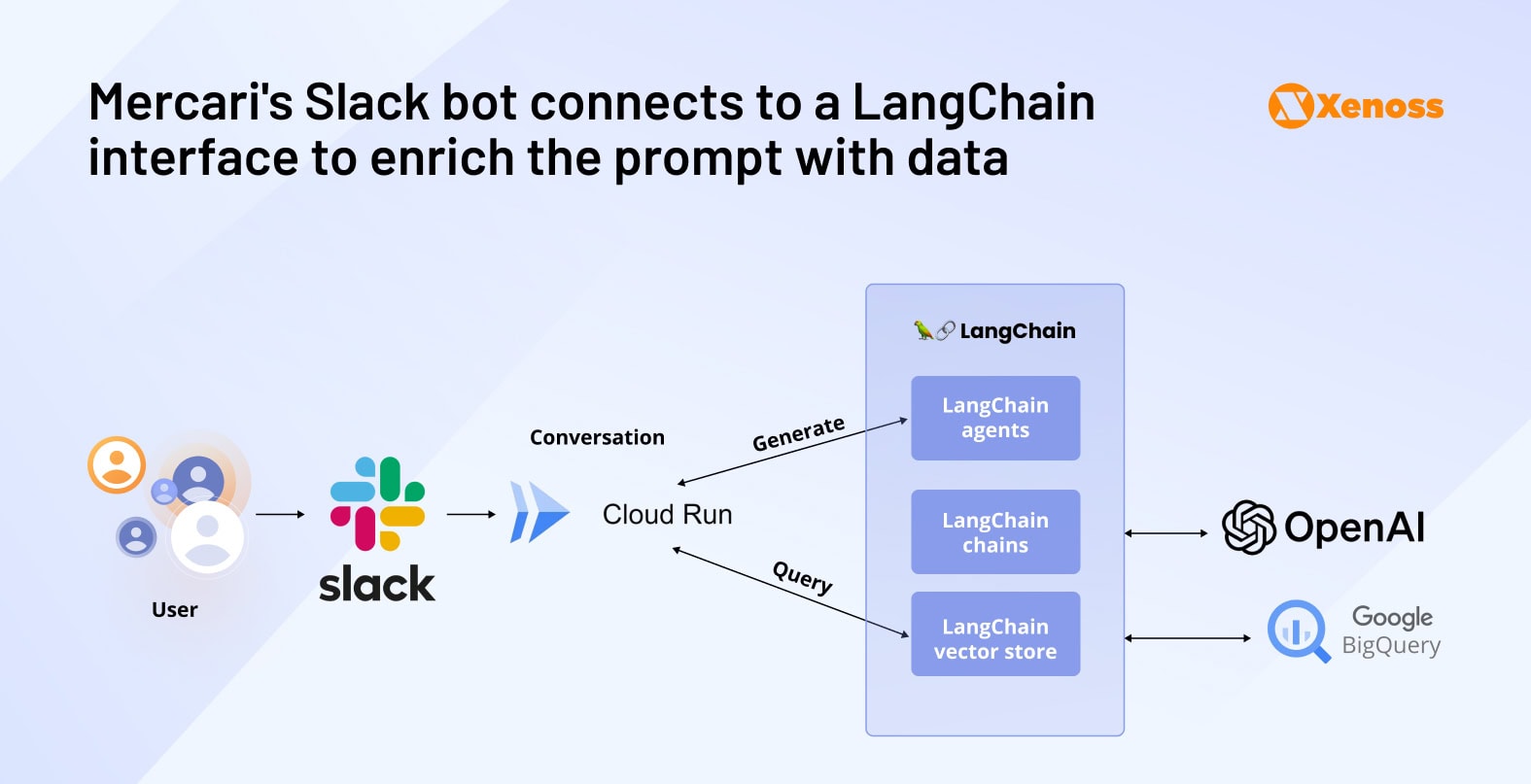

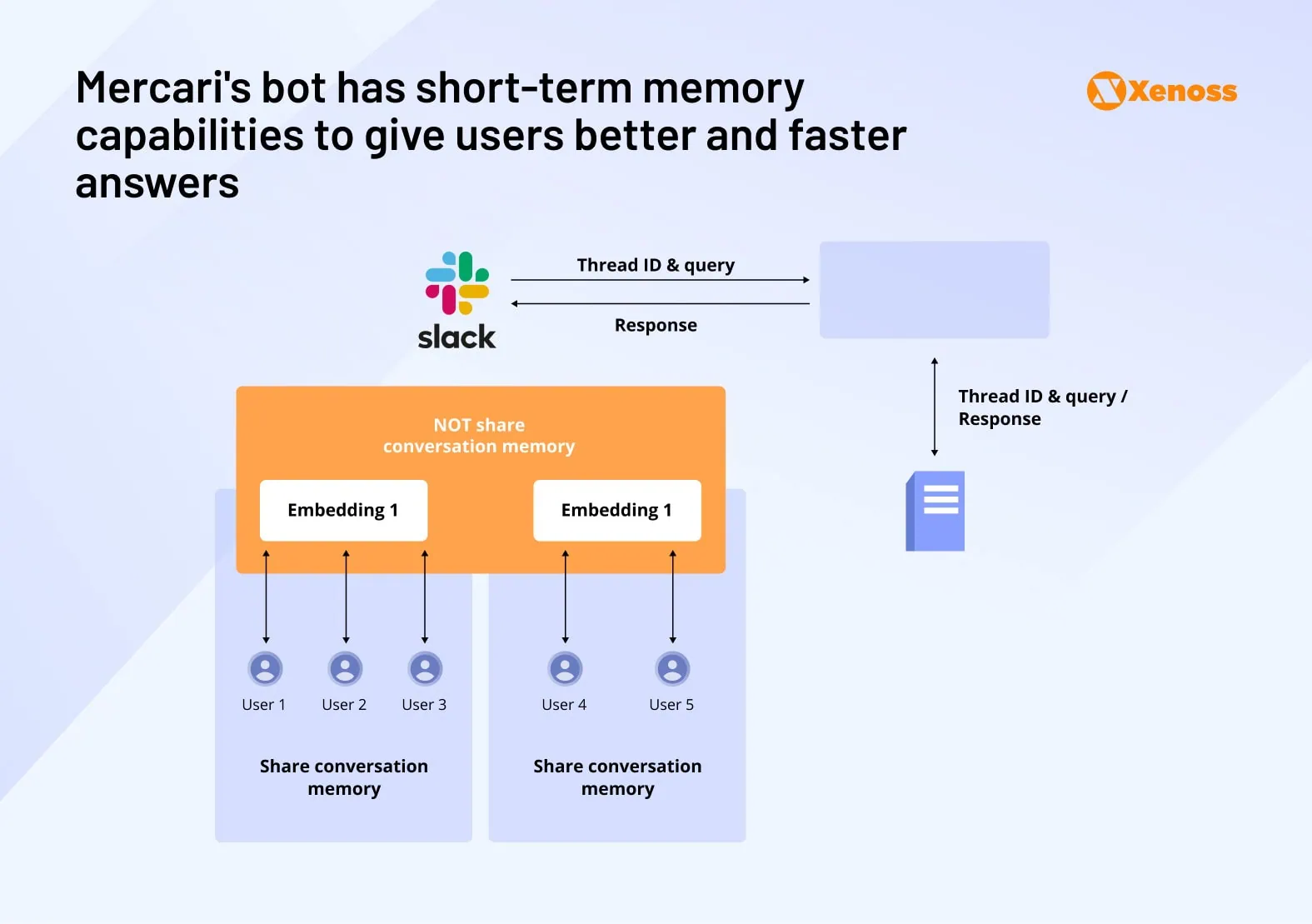

A RAG-enabled internal knowledge base needs a system for constantly listening to the Slack channels teams use to ask questions. Mercari engineers built a server on Google Cloud Run that connects the company’s Slack channel with the BigQuery vector database.

Every incoming query is converted into an embedding, connected to matching database items, and sent over to the LLM as a prompt.

To improve the LLM’s retention of user interactions, Mercari engineers added short-term memory to the model.

They used LangChain’s memory features to store user queries and LLM outputs for specific users. This way, the LLM keeps track of chat history and can refer to its earlier answers.

The caveat to this setup is that the memory feature is version-specific. When a new version of the copilot is released, the model loses its answer history.
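A per-user short-term memory can be sketched without any framework. The class below is an illustration only, not LangChain's memory API: `ShortTermMemory`, its method names, and the sample turns are hypothetical.

```python
from collections import defaultdict, deque


class ShortTermMemory:
    """Keeps the last few (question, answer) turns per user."""

    def __init__(self, max_turns: int = 3) -> None:
        # Each user gets a bounded deque; the oldest turn drops out automatically.
        self._turns = defaultdict(lambda: deque(maxlen=max_turns))

    def record(self, user_id: str, question: str, answer: str) -> None:
        self._turns[user_id].append((question, answer))

    def as_prompt_prefix(self, user_id: str) -> str:
        """Render stored turns so they can be prepended to the next prompt."""
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self._turns[user_id])


memory = ShortTermMemory(max_turns=2)
memory.record("u1", "Where is the runbook?", "In the SRE wiki.")
memory.record("u1", "Who owns it?", "The platform team.")
memory.record("u1", "When was it updated?", "Last week.")
history = memory.as_prompt_prefix("u1")
```

Because this sketch keeps state in process memory, everything is lost when the service restarts. Persisting turns in an external store would be one way to keep history across releases.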

Cost tracking

If the RAG platform relies on third-party APIs with a cost-per-interaction pricing model, a cost-tracking mechanism helps control infrastructure expenses.

Uber’s data engineers built a flow where a Universally Unique Identifier (UUID) is passed to the Knowledge Service whenever an employee calls the copilot. The Knowledge Service passes the UUID to the Michelangelo Gateway, which acts as the gateway to the LLM. The cost of each interaction is then added to the LLM audit log.
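The core idea of tagging each call with a UUID and logging its cost can be sketched as follows. This is not Uber's implementation: `AuditLog` is a hypothetical class, and the per-token price is a placeholder, since real rates depend on the provider and model.

```python
import uuid
from dataclasses import dataclass, field

# Hypothetical price; real per-token rates depend on the provider and model.
PRICE_PER_1K_TOKENS_USD = 0.01


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, request_id: str, prompt_tokens: int, completion_tokens: int) -> float:
        """Append one cost entry keyed by the request's UUID and return its cost."""
        cost = (prompt_tokens + completion_tokens) / 1000 * PRICE_PER_1K_TOKENS_USD
        self.entries.append({"request_id": request_id, "cost_usd": cost})
        return cost

    def total(self) -> float:
        """Sum the cost of every logged interaction."""
        return sum(e["cost_usd"] for e in self.entries)


log = AuditLog()
request_id = str(uuid.uuid4())  # generated when the employee calls the copilot
log.record(request_id, prompt_tokens=1500, completion_tokens=500)
```

Keying entries by request ID makes it possible to join cost records with usage data later, for example to attribute spend to a team or Slack channel.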

Performance tracking

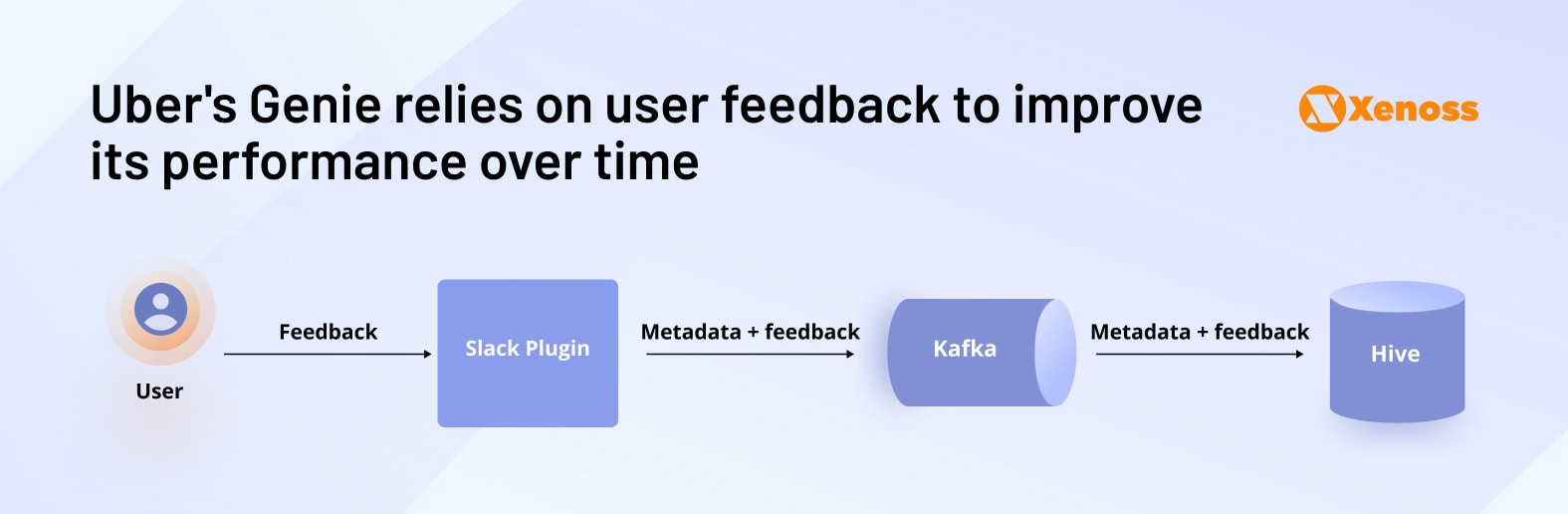

A feedback loop helps improve the quality of LLM answers. After each interaction, the user can be asked “How helpful was the response?” and given the following options:

- Resolved: The answer fully resolved the problem.

- Helpful: The answer was useful, but did not solve the problem on its own.

- Unhelpful: The answer was wrong or irrelevant and did not solve the employee’s problem.

In Uber’s knowledge base, user answers are recorded by the Slack plugin, uploaded to corresponding Kafka topics, and streamed into a Hive table that keeps all feedback records.
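Once feedback records land in a table, a simple aggregation shows how often the copilot actually resolves questions. The sketch below assumes records are available as plain dictionaries with a `rating` field; the field names, channel names, and sample data are invented.

```python
from collections import Counter

VALID_RATINGS = {"resolved", "helpful", "unhelpful"}


def summarize_feedback(records: list[dict]) -> dict[str, float]:
    """Return the share of each rating among valid feedback records."""
    counts = Counter(r["rating"] for r in records if r["rating"] in VALID_RATINGS)
    total = sum(counts.values())
    if not total:
        return {}
    return {rating: counts[rating] / total for rating in sorted(VALID_RATINGS)}


feedback = [
    {"channel": "#help-data", "rating": "resolved"},
    {"channel": "#help-data", "rating": "helpful"},
    {"channel": "#help-infra", "rating": "resolved"},
    {"channel": "#help-infra", "rating": "unhelpful"},
]
shares = summarize_feedback(feedback)
```

Tracking these shares per channel or per release makes it easier to spot where retrieval quality has regressed.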

Vanilla RAG limitations

Vanilla RAG delivers impressive results for straightforward enterprise use cases. Uber’s Genie implementation demonstrates this effectiveness. Since launching in 2023, the system has processed over 70,000 Slack questions across 154 channels, saving approximately 13,000 engineering hours through automated knowledge retrieval.

However, three fundamental limitations constrain Vanilla RAG’s enterprise applicability:

- Uniform data source weighting. The system treats all documents equally, lacking mechanisms to prioritize critical sources, such as regulatory guidelines, over supplementary materials, like user guides—a significant limitation for heavily regulated industries.

- Semantic similarity assumptions. Vanilla RAG employs fixed Top-K retrieval strategies that equate semantic similarity with relevance, failing to adapt based on user behavior patterns or contextual query nuances.

- Static query interpretation. The approach assumes single queries perfectly capture user intent, making it less effective for complex, multifaceted questions that require iterative clarification or context building.

These architectural constraints have driven enterprise teams toward more sophisticated RAG methodologies. GraphRAG, which leverages knowledge graph structures for enhanced reasoning capabilities, has emerged as a leading alternative for complex enterprise knowledge management scenarios.

GraphRAG: Advanced knowledge base architecture for complex enterprise queries

In RAG applications, knowledge graphs significantly improve AI memory and contextual understanding.

GraphRAG addresses Vanilla RAG’s core limitations through three key architectural advantages:

- Enhanced relational reasoning. GraphRAG enables LLMs to retrieve information not explicitly mentioned in datasets by analyzing interconnected data relationships, supporting complex inference and reasoning tasks beyond simple semantic matching.

- Context-aware source prioritization. The system dynamically ranks and prioritizes data sources based on query context, ensuring critical documents like compliance guidelines receive appropriate weighting over supplementary materials.

- Unified structured and unstructured data processing. GraphRAG seamlessly integrates diverse data formats, ranging from structured databases to unstructured documents, creating comprehensive and nuanced responses that reflect the complexity of enterprise data.
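To make the idea of source prioritization concrete, the sketch below re-ranks retrieved hits by multiplying similarity with a per-source weight. This is a static simplification used only for illustration: the weights, field names, and sample hits are invented, and a GraphRAG system would derive priority from graph structure and query context rather than from a fixed table.

```python
# Hypothetical weights per source type; values are illustrative only.
SOURCE_WEIGHTS = {"regulation": 1.5, "policy": 1.2, "user_guide": 0.8}


def rerank(hits: list[dict], weights: dict[str, float] = SOURCE_WEIGHTS) -> list[dict]:
    """Order hits by similarity multiplied by the weight of their source type."""
    return sorted(
        hits,
        key=lambda h: h["similarity"] * weights.get(h["source_type"], 1.0),
        reverse=True,
    )


hits = [
    {"id": "guide-12", "source_type": "user_guide", "similarity": 0.82},
    {"id": "reg-3", "source_type": "regulation", "similarity": 0.64},
]
ranked = rerank(hits)
```

Here the regulation outranks the user guide despite its lower raw similarity, which is the behavior the prioritization bullet describes.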

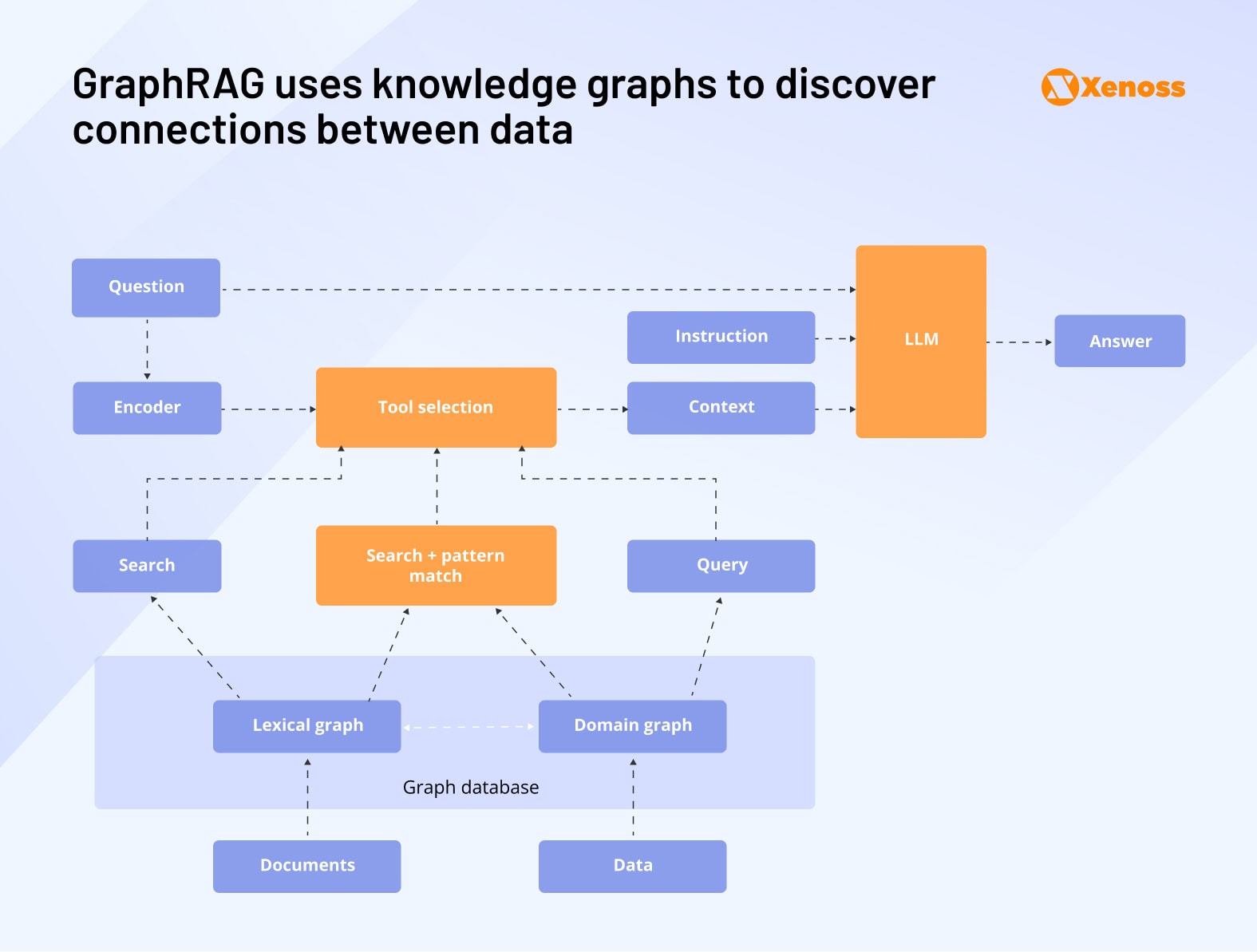

Here is a schematic breakdown of the GraphRAG workflow.

- Dual processing: User queries route simultaneously to the LLM for prompt preparation and to encoders for RAG enrichment

- Embedding transformation: Questions are converted into vector representations using standard embedding techniques

- Graph pattern matching: Queries match against both lexical graphs (unstructured data) and domain graphs (structured relationships)

- Context packaging: Retrieved patterns and relationships are compiled into structured context blocks

- Enhanced prompting: LLMs receive user prompts, task instructions, and GraphRAG-enriched contextual information

- Informed response generation: Systems generate answers leveraging both direct content and inferred relationships
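The graph matching and context packaging steps can be sketched with a toy domain graph. Everything here is an assumption made for illustration: the graph contents are invented, entity matching is a plain substring check rather than embedding-based matching, and the traversal is a simple breadth-first walk.

```python
# Hypothetical domain graph: entity -> list of (relation, neighbor) edges.
GRAPH = {
    "vpn access": [("requires", "security training"), ("approved by", "it team")],
    "security training": [("renewed every", "12 months")],
    "it team": [("contact via", "helpdesk portal")],
}


def match_entities(query: str, graph: dict) -> list[str]:
    """Find graph entities mentioned verbatim in the query (a crude pattern match)."""
    q = query.lower()
    return [entity for entity in graph if entity in q]


def collect_context(entities: list[str], graph: dict, hops: int = 2) -> list[str]:
    """Walk outward from matched entities and render each edge as a fact."""
    facts, frontier, seen = [], list(entities), set(entities)
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for relation, neighbor in graph.get(node, []):
                facts.append(f"{node} {relation} {neighbor}")
                if neighbor not in seen:
                    seen.add(neighbor)
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return facts


query = "How do I get VPN access?"
facts = collect_context(match_entities(query, GRAPH), GRAPH)
prompt = "Context:\n" + "\n".join(facts) + f"\n\nQuestion: {query}"
```

The second hop surfaces the training renewal period even though the query never mentions training, which illustrates how following relationships can retrieve information a pure similarity search would likely miss.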

GraphRAG implementation: TotalEnergies EU AI Act case study

TotalEnergies’ Digital Factory initiative demonstrates practical GraphRAG deployment for regulatory compliance use cases. Their EU AI Act knowledge base implementation provides valuable insights for enterprise teams evaluating GraphRAG architecture decisions.

Data preparation

GraphRAG accommodates a diverse range of data types, including structured spreadsheets, semi-structured JSON/XML, and unstructured documents. TotalEnergies converted PDF regulations to text using the Python pypdf library while maintaining standard data validation practices, including format standardization and duplicate removal.
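A preparation step along these lines might look like the sketch below. The `pdf_to_text` helper uses pypdf's `PdfReader` and `extract_text`; the normalization and deduplication helpers are assumptions about what "format standardization and duplicate removal" could mean in practice, not the team's actual code.

```python
import hashlib
import re


def pdf_to_text(path: str) -> str:
    """Extract text from every page of a PDF (requires `pip install pypdf`)."""
    from pypdf import PdfReader  # imported lazily so the helpers below work without it

    reader = PdfReader(path)
    return "\n".join(page.extract_text() or "" for page in reader.pages)


def normalize(text: str) -> str:
    """Collapse whitespace so formatting differences do not hide duplicates."""
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(passages: list[str]) -> list[str]:
    """Drop passages whose normalized, case-folded text has been seen already."""
    seen, unique = set(), []
    for passage in passages:
        clean = normalize(passage)
        digest = hashlib.sha256(clean.casefold().encode("utf-8")).hexdigest()
        if clean and digest not in seen:
            seen.add(digest)
            unique.append(clean)
    return unique


passages = ["Article 1\n  Subject matter", "article 1 subject matter", "Article 2  Scope"]
unique = deduplicate(passages)
```

Hashing the normalized text keeps the duplicate check cheap even for large corpora, at the cost of only catching exact duplicates after normalization.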

Modeling the knowledge graph

All cleansed data is imported into a graph. Data elements should be mapped to graph nodes, which allows building relationships between adjacent nodes.

A knowledge graph is considered complete when every node is mapped to a dataset.

A TotalEnergies blog post points out that data formats deserve attention. The team used JSON for raw data logs, GraphML for visualization, and Parquet for optimized graph data storage.

Query modes

GraphRAG supports two distinct query approaches: global searches provide comprehensive, broad-context answers while local queries deliver specific, targeted responses. This dual-mode capability enables organizations to optimize responses based on user intent and information requirements.
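One plausible reading of the two modes is that global search draws on precomputed summaries covering the whole corpus, while local search pulls facts attached to entities named in the query. The sketch below follows that assumption; the data structures, names, and sample content are invented.

```python
# Hypothetical precomputed artifacts: summaries per community and facts per entity.
COMMUNITY_SUMMARIES = {
    "access management": "VPN and badge access both depend on completed security training.",
    "procurement": "Purchases above the approval limit need sign-off from finance.",
}
ENTITY_FACTS = {
    "vpn access": ["vpn access requires security training"],
    "purchase order": ["purchase order approved by finance"],
}


def build_context(query: str, mode: str) -> list[str]:
    """Return LLM context: all summaries (global) or matched entity facts (local)."""
    if mode == "global":
        return list(COMMUNITY_SUMMARIES.values())
    if mode == "local":
        q = query.lower()
        return [fact for entity, facts in ENTITY_FACTS.items() if entity in q for fact in facts]
    raise ValueError(f"unknown mode: {mode!r}")


local_ctx = build_context("How do I request VPN access?", "local")
global_ctx = build_context("What themes recur across our policies?", "global")
```

Broad, thematic questions suit the global path, while questions about a specific entity are usually cheaper to answer through the local path.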

Comparing performance

Comparative testing of the question “Which elements can be self-conflicting in the AI Act?” demonstrated GraphRAG’s superior analytical capabilities, delivering more detailed and conclusive results than traditional Vanilla RAG implementations across both global and local query modes.

Cost considerations for GraphRAG

GraphRAG’s enhanced capabilities come with significant cost implications. TotalEnergies’ basic EU AI Act implementation required 20x more tokens than an equivalent Vanilla RAG system due to multiple LLM calls for data prioritization, clustering, and summarization. Enterprise teams should expect proportionally higher maintenance costs for complex datasets.

Query latency trade-offs

GraphRAG processing times increase approximately 2x compared to Vanilla RAG, with latency directly correlating to dataset complexity. Organizations must balance enhanced reasoning capabilities against performance requirements for time-sensitive applications.
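For budgeting purposes, the 20x token and 2x latency multipliers quoted in this section can be applied to a team's own Vanilla RAG baseline. The helper below is simple arithmetic; the baseline figures are hypothetical, and actual multipliers will vary with dataset complexity.

```python
def graphrag_estimate(vanilla_tokens: int, vanilla_latency_s: float,
                      token_multiplier: float = 20.0,
                      latency_multiplier: float = 2.0) -> dict:
    """Scale Vanilla RAG baselines by the multipliers quoted in this section."""
    return {
        "tokens": int(vanilla_tokens * token_multiplier),
        "latency_s": vanilla_latency_s * latency_multiplier,
    }


# Hypothetical baseline: a workload using 2,000,000 tokens at 1.5 seconds per query.
estimate = graphrag_estimate(vanilla_tokens=2_000_000, vanilla_latency_s=1.5)
```

With that baseline, the estimate comes to 40,000,000 tokens and 3.0 seconds per query.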

Agentic RAG: Scalable multi-agent knowledge base architecture

The emergence of AI agents has introduced a revolutionary approach to enterprise knowledge management through multi-agent systems. Agentic RAG deploys specialized agents responsible for distinct domain areas, delivering targeted, contextually relevant responses while maintaining autonomous navigation capabilities across internal documents and corporate tools.

AI agents operate independently while sharing data and coordinating operations to build collective intelligence over time. This “shared brain” concept enables continuous learning from user interactions, improving system-wide knowledge retention and response accuracy.

Unlike Vanilla RAG and GraphRAG architectures that require infrastructure modifications for expansion, agentic RAG systems scale through simple agent addition. Organizations can deploy new domain-specific agents without disrupting existing workflows or requiring architectural overhauls.

Each agent focuses on a specific area of expertise, such as compliance, technical documentation, or customer support, enabling deeper domain knowledge and more precise responses than generalist knowledge base approaches.

The agentic approach modifies traditional RAG processing through agent-mediated data access. Input data undergoes standard embedding transformation, but instead of storing vectors in databases or knowledge graphs, specialized AI agents directly access and share relevant information with LLMs based on query context and domain requirements.

This architecture enables dynamic data retrieval where agents collaborate to assemble comprehensive responses, leveraging their specialized knowledge domains while maintaining real-time access to enterprise information systems.

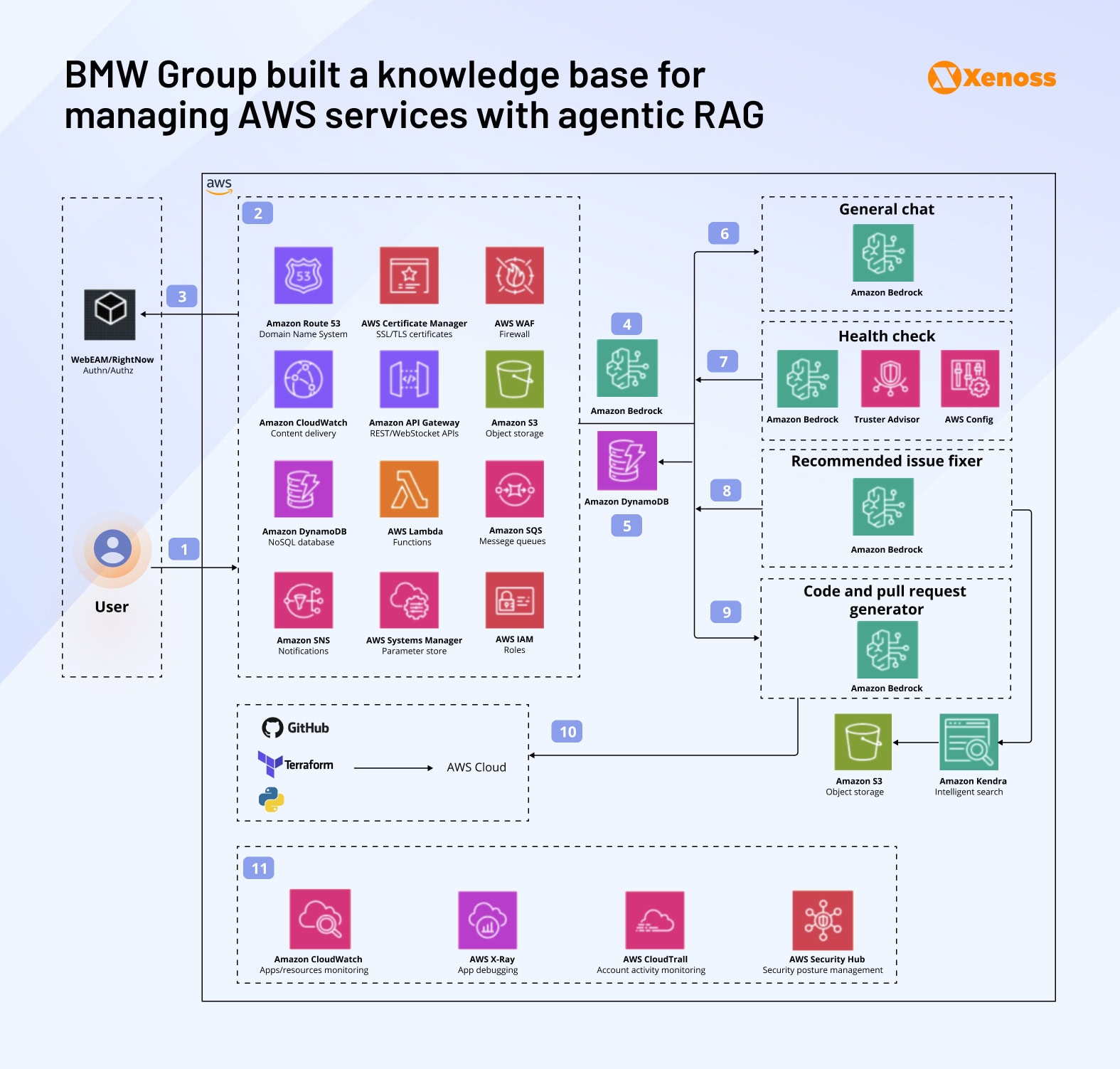

Agentic RAG implementation: BMW Group’s multi-agent AWS copilot

BMW Group’s internal copilot demonstrates enterprise-scale agentic RAG deployment, supporting DevOps engineers across four critical operational areas: AWS service information retrieval, real-time infrastructure monitoring, cost optimization, and automated code deployment across multiple AWS accounts.

System architecture and workflow

The system processes natural language queries through Amazon Bedrock for intent recognition, routing requests to specialized agents for general support, health monitoring, pull request generation, or troubleshooting. MongoDB stores conversational memory, enabling cross-agent knowledge sharing and context retention.

BMW’s core innovation lies in agent collaboration through shared conversational history and real-time data exchange, creating a continuously improving knowledge ecosystem that adapts to engineering team needs.
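The routing and shared-history pattern described above can be sketched in plain Python. This is not BMW's implementation: keyword matching stands in for LLM-based intent recognition, an in-memory list stands in for the conversational memory store, and the agent functions are hypothetical stubs that return placeholder text.

```python
from typing import Callable

SharedMemory = list[dict]  # conversation turns visible to every agent


def health_agent(query: str, memory: SharedMemory) -> str:
    return "health: would compile an infrastructure report here"


def resolver_agent(query: str, memory: SharedMemory) -> str:
    # Reads the shared history to find what the health agent reported earlier.
    earlier = [t["answer"] for t in memory if t["agent"] == "health"]
    return f"resolver: proposing fixes for {len(earlier)} earlier health report(s)"


def chat_agent(query: str, memory: SharedMemory) -> str:
    return "chat: would answer from indexed documentation here"


# Registry of agents; adding a domain means adding one entry here.
AGENTS: dict[str, Callable[[str, SharedMemory], str]] = {
    "health": health_agent,
    "resolve": resolver_agent,
    "chat": chat_agent,
}
KEYWORDS = {"health": ("health", "status"), "resolve": ("fix", "resolve")}


def classify_intent(query: str) -> str:
    """Keyword stand-in for LLM-based intent recognition."""
    q = query.lower()
    for intent, words in KEYWORDS.items():
        if any(w in q for w in words):
            return intent
    return "chat"


def handle(query: str, memory: SharedMemory) -> str:
    """Route the query to one agent and record the turn in shared memory."""
    intent = classify_intent(query)
    answer = AGENTS[intent](query, memory)
    memory.append({"agent": intent, "query": query, "answer": answer})
    return answer


memory: SharedMemory = []
handle("Run a health check on the staging account", memory)
reply = handle("How do I fix the issues you found?", memory)
```

Because every agent reads and writes the same history, the resolver can build on the health agent's earlier output without the user repeating it. Registering a new agent only requires a new function and a new entry in `AGENTS`.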

Data ingestion

Amazon Kendra indexes AWS documentation and internal resources, while AWS Trusted Advisor provides real-time account analysis for health monitoring agents. Amazon Cognito manages secure identity and access controls, with API Gateway coordinating Lambda function invocations through REST API integration.

Building a conversational interface

The BMW Group engineering team used Amazon Cognito to ensure secure customer identity and access management. All incoming requests are passed through the Amazon API Gateway, which invokes AWS Lambda functions.

The interface integrates with AI agents through REST APIs and acts as a centralized hub that manages all interactions.

Designing multi-agent workflows

Data engineers built four autonomous agents and outlined their individual workflows.

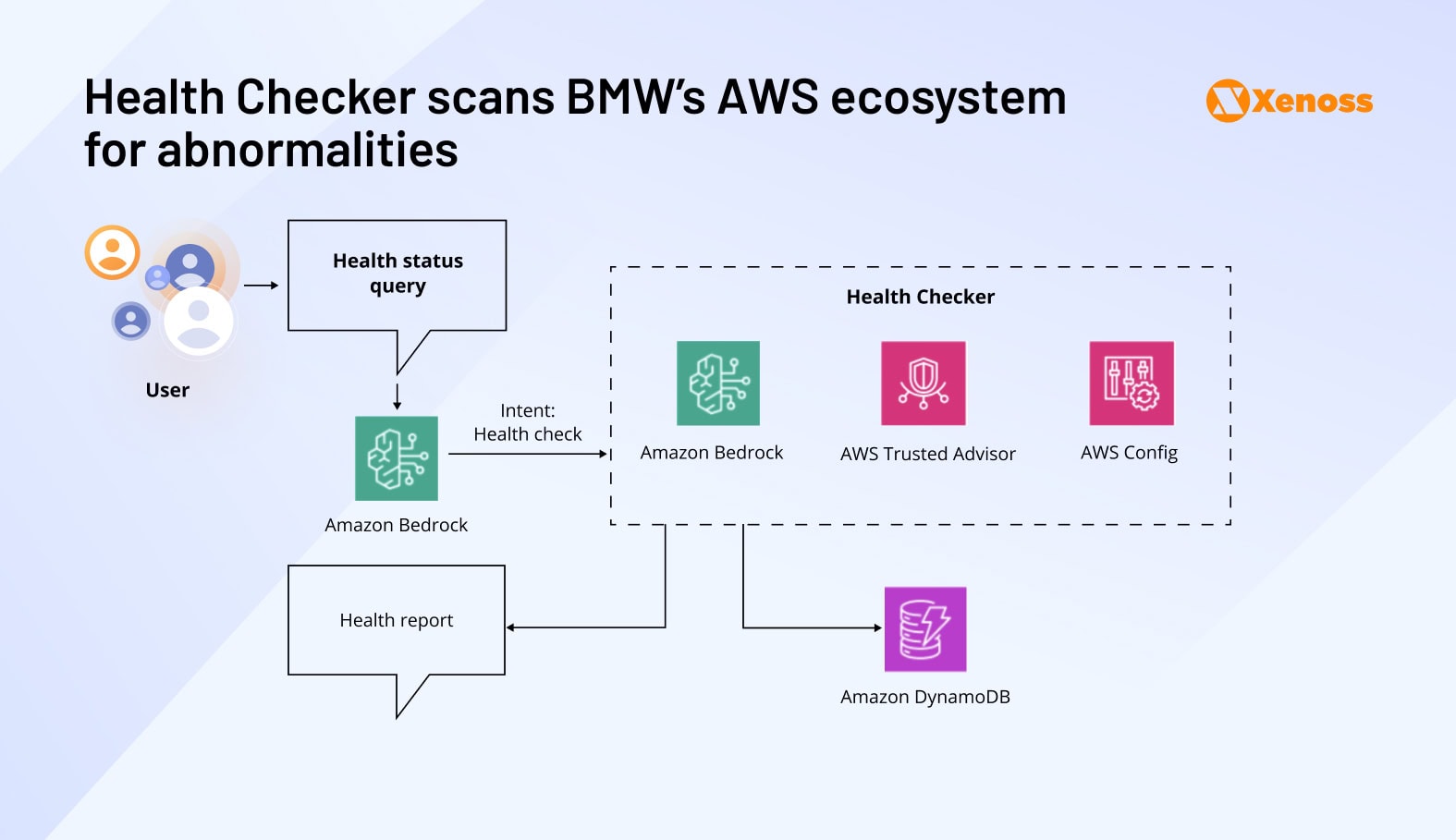

Health check agent

This agent accesses AWS accounts via AWS Config and AWS Trusted Advisor to create real-time infrastructure reports.

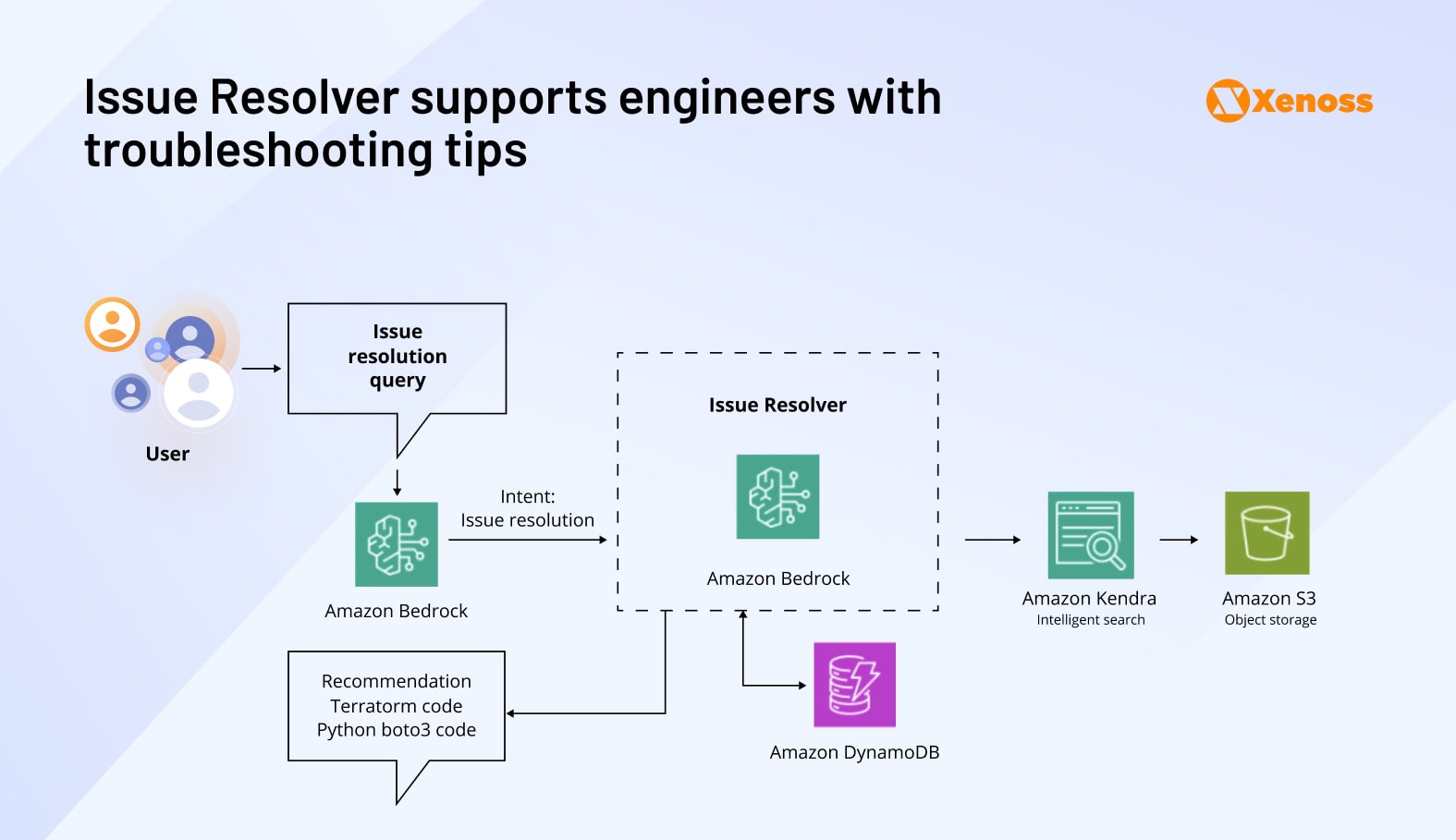

Issue resolver

This agent offers actionable solutions to the problems identified by the health check agent. It provides recommendations both in natural language and as code snippets.

Code and pull request generator

Facilitates conversational codebase management, automatically deploying Terraform and Python boto3 changes across selected environments.

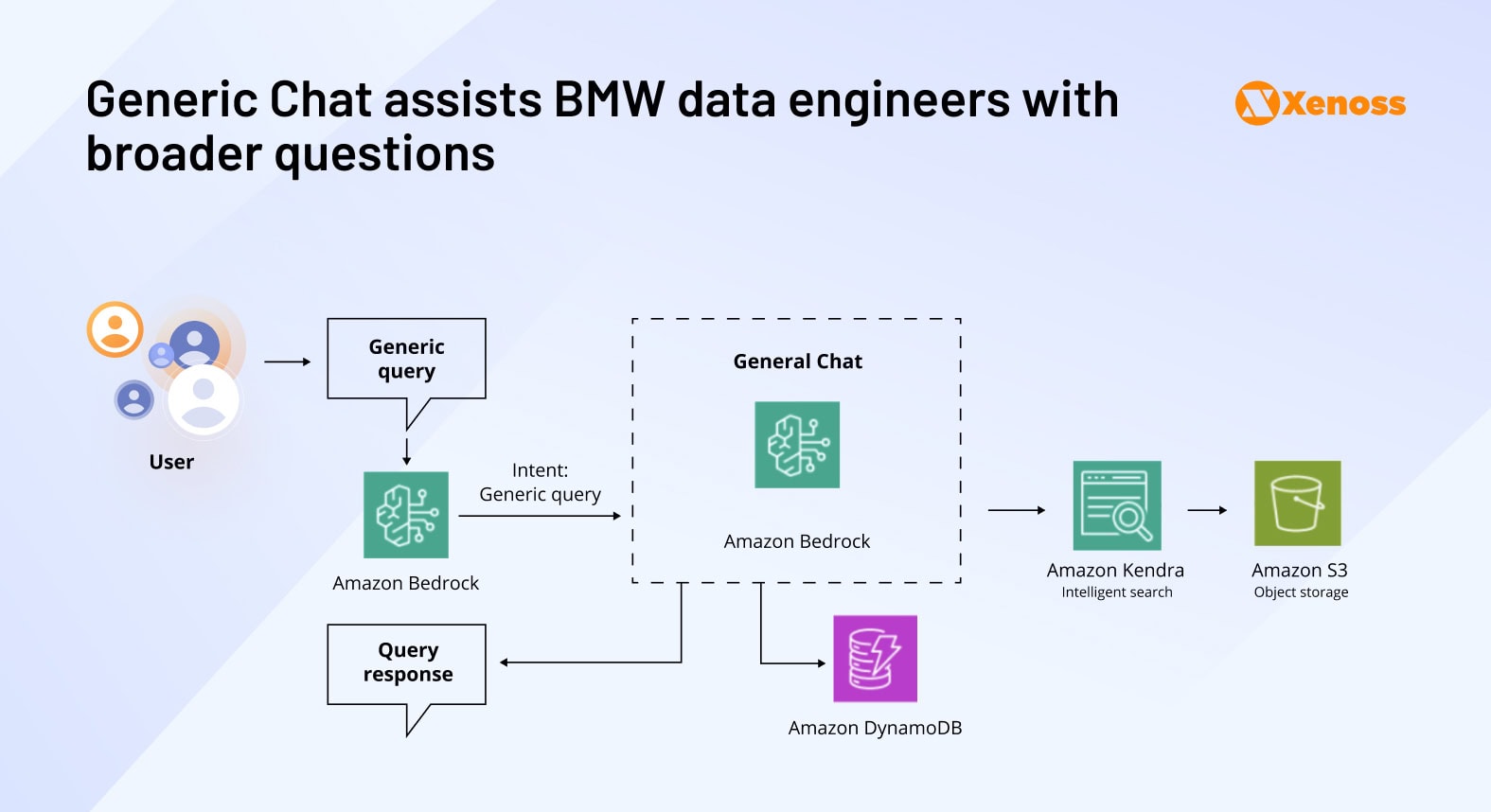

Generic chat

Handles broad AWS-related queries using Amazon Kendra’s knowledge pipeline for comprehensive service information.

Continuous inter-agent communication through a centralized orchestrator ensures real-time system updates and coordinated assistance. Agents share conclusions, data insights, and query histories, maintaining contextual awareness across all user interactions and supporting BMW’s engineering teams with relevant, specialized expertise.

Making the right choice: Enterprise knowledge base architecture decisions

While off-the-shelf LLMs generate market attention, they deliver limited enterprise value without proprietary organizational knowledge. Custom knowledge bases augmented with RAG workflows present substantial opportunities for accelerating employee onboarding and streamlining daily operations.

Architecture selection criteria

Choosing optimal knowledge base architecture requires evaluating four critical factors: use case complexity, technical expertise, budget considerations, and performance requirements.

Use case complexity. Vanilla RAG suits straightforward questions, GraphRAG excels at relationship analysis, and agentic RAG provides scalable domain specialization.

Technical expertise. GraphRAG and agentic systems demand building specialized ML capabilities, while Vanilla RAG offers accessible implementation for teams with limited AI experience.

Budget considerations. Vanilla RAG provides cost-effective deployment, GraphRAG requires roughly 20x the token consumption, and agentic systems need ongoing agent development resources.

Performance requirements. Real-time applications favor Vanilla RAG’s speed, while analytical use cases can accommodate GraphRAG’s roughly 2x processing delays in exchange for enhanced reasoning.

Xenoss implementation recommendations

At Xenoss, our data engineers recommend starting with Vanilla RAG for initial deployments, then evolving the architecture based on proven ROI and organizational needs. This phased approach minimizes risk while building internal capabilities for advanced implementations.

Successful enterprise knowledge bases require comprehensive data governance, security frameworks, and change management strategies that align with broader digital transformation objectives.