This overview of AI regulations in the US is the first article in our series of blog posts on global artificial intelligence legislation.

For a 360-degree view of the AI regulations around the world, see our review of AI policies adopted in Canada, the EU, the UK, and Asia-Pacific.

As of 2025, the U.S. still hasn’t passed a comprehensive federal AI law. Instead, it leans on agency guidance and existing copyright, privacy, and discrimination statutes to do the heavy lifting.

Individual states have taken a more proactive stance toward AI regulation. California, Colorado, New York, and Illinois have introduced numerous artificial intelligence laws and compliance requirements.

Additionally, sector-specific rules apply across healthcare, finance, and advertising, creating a layered and often fragmented regulatory landscape.

This article breaks down the three layers of AI compliance in the U.S.—federal, state, and industry-specific—and highlights the most important laws, penalties, and upcoming proposals organizations should track in 2025.

Federal AI legislation

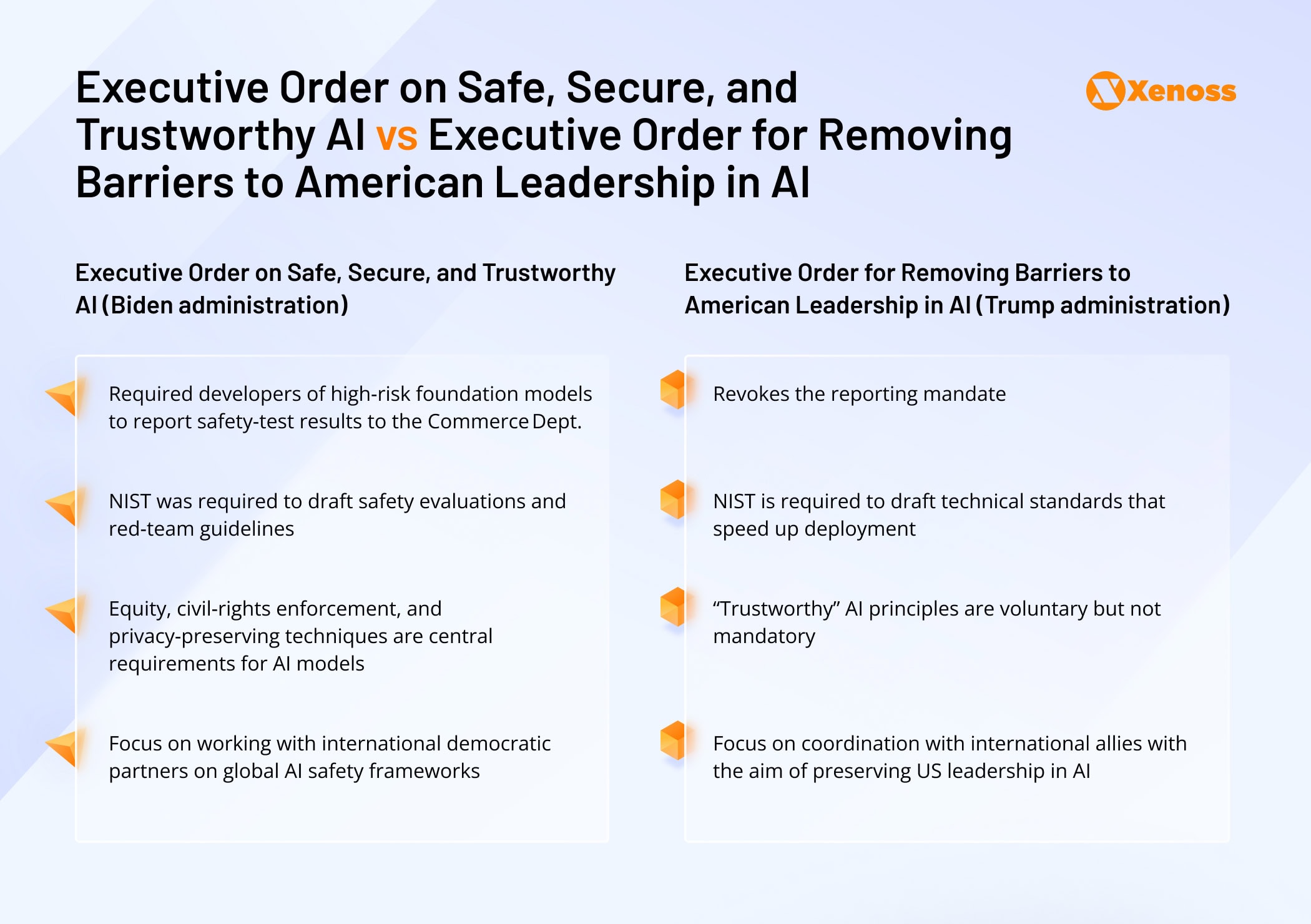

Under the Biden administration, the White House issued non-binding AI frameworks like the Blueprint for an AI Bill of Rights and the Executive Order on Safe, Secure, and Trustworthy AI. Both aimed to promote responsible AI development and establish safety tests for advanced AI models.

Executive order for removing barriers to American leadership in AI (2025)

In January 2025, however, President Trump replaced Biden’s restriction-focused executive order with a more permissive one. The Executive Order for Removing Barriers to American Leadership in AI calls for federal agencies to “revise or rescind all policies, directives, regulations, and other actions taken by the Biden administration.”

The following changes under the new Executive Order are particularly relevant to machine learning teams:

- No mandatory federal red-teaming or pre-deployment filing (unless required by sector-specific regulators like the FDA or CFPB).

- No watermarking requirement for AI-generated content at the federal level.

- No model cards, incident reports, or bias audits are required by the White House.

However, enterprise clients, insurers, and institutional investors may still expect these practices as part of due diligence, even if not federally mandated.

TAKE IT DOWN Act (April 2025)

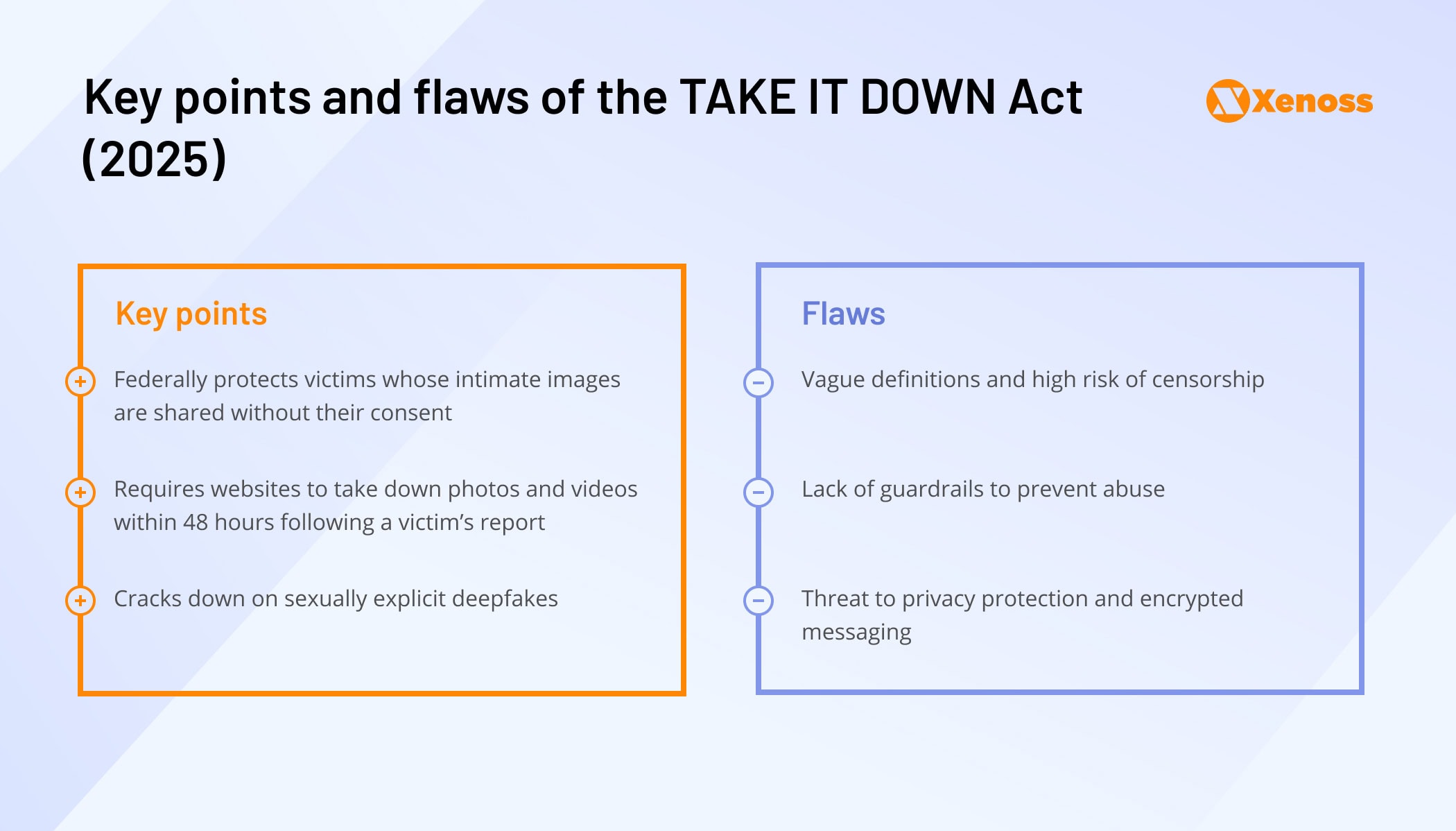

On April 28, 2025, Congress passed the TAKE IT DOWN Act, which criminalizes the nonconsensual publication of intimate imagery, including AI-generated deepfakes, and requires covered platforms to remove such content within 48 hours of a valid request.

In theory, the Act tackles the deepfake problem that puts female celebrities (and, on closer inspection, all women) at risk of objectification.

In practice, the filters it recommends are not very selective and could be used to flag legal content or silence political opposition. There’s growing concern that the Act could become a way to bypass the First Amendment and limit free speech online.

The CREATE AI Act (under Congress review)

The CREATE AI Act is designed to streamline access to shared AI computational resources, datasets, and AI testbeds.

Although initially introduced in 2023, the bill has not yet received a floor vote and remains under congressional review as of mid-2025.

Since its reintroduction, the Act has gained strong backing from leading universities, tech associations, and AI research groups, significantly improving its chances of passage.

Key points of the CREATE AI Act:

- Establishes the National AI Research Resource (NAIRR) to give researchers, educators, nonprofits, and small businesses affordable access to the compute, data, software, and testbeds needed for cutting‑edge AI work.

- Places NAIRR under the National Science Foundation, guided by an inter‑agency steering committee; day‑to‑day operations are run by a competitively selected nonprofit, university consortium, or FFRDC.

- Awards resource time through a merit‑review process, particularly for under‑resourced institutions and under‑represented groups in STEM.

- Authorizes multi‑year appropriations through NSF (other agencies may contribute in‑kind resources) and requires periodic progress reports to Congress on innovation impact, workforce development, and responsible‑AI safeguards.

Other pending proposals

A few other notable proposals are the REAL Political Advertisements Act, which aims to regulate AI use in political campaigning, and the Stop Spying Bosses Act, which would curb employers’ use of AI and machine learning for employee surveillance.

Federal agencies are also releasing AI guidance. The U.S. National Institute of Standards and Technology (NIST) has issued several recommendation documents, including:

- AI Risk Management Framework (Jan 2023) offers guidelines for governance, transparency, and risk mitigation measures for AI systems.

- Trustworthy and Responsible AI Report (March 2025) provides voluntary methods for securing AI systems against adversarial manipulations and attacks.

- Cyber AI Profile (April 2025, at the conceptual stage) outlines a set of risk management approaches for protecting AI systems against existing and emerging cyber threats.

Overall, US artificial intelligence laws and regulations at the federal level rely on a “soft law” approach, using voluntary standards and agency guidance. This leaves state legislators more room to set their own rules.

State AI legislation

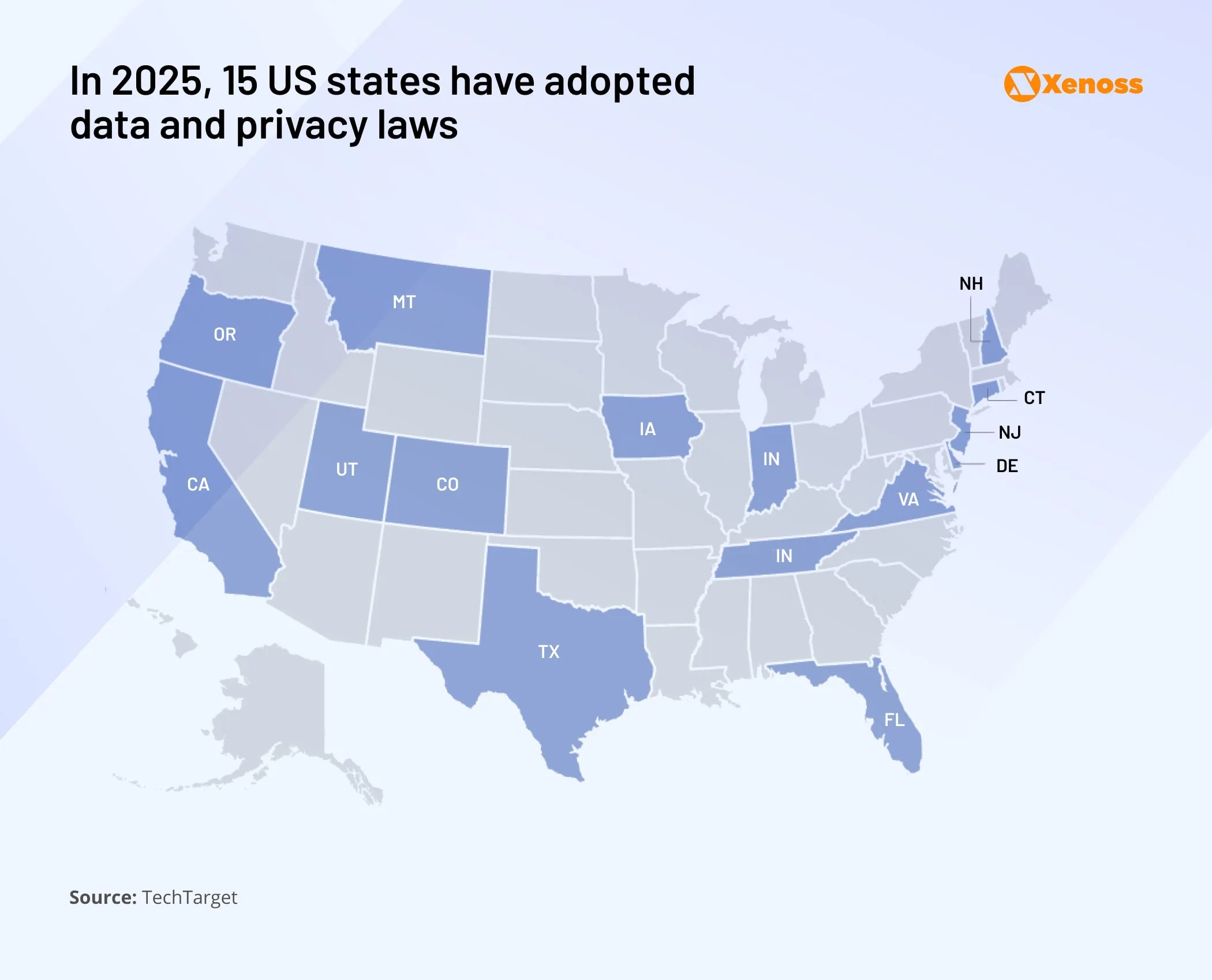

While the federal approach to AI safety regulations is “light touch,” many states are filling in the gaps with their own laws.

The most active states—California, New York, Colorado, Illinois, and Utah—have introduced robust frameworks shaping compliance standards for AI developers nationwide.

California

California AI laws offer companies a detailed framework to follow when building machine learning projects.

Assembly Bill 2655 requires large online platforms to identify and block deepfakes and label certain content as inauthentic, fake, or false before and after state elections.

Another law, Assembly Bill 1836, requires consent from the representatives of deceased celebrities before replicating them digitally.

In January 2026, the State of California will begin enforcing the California AI Transparency Act, which requires providers of AI systems with more than 1 million monthly visitors in California to offer AI detection tools and content disclosures.

Companies that fail to comply with the AI Transparency Act face fines of $5,000 per day of violation.

The Generative AI: Training Data Transparency Act is another generative AI regulation coming into effect in 2026. It will require developers to provide a “high-level summary” of the datasets used to develop and train generative AI systems.

Aside from AI-specific regulations, teams need to keep their machine learning use cases compliant with the California Consumer Privacy Act (CCPA). CCPA violations related to AI profiling or targeted advertising can result in civil penalties of up to $2,500 per violation or $7,500 per intentional violation.
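To see how quickly these per-violation and per-day figures add up, the sketch below multiplies the penalty amounts quoted above by hypothetical counts. The function names and the example counts are assumptions for illustration only; real exposure depends on how a regulator or court counts violations.

```python
# Penalty figures as quoted in this article (USD).
CCPA_PER_VIOLATION = 2_500
CCPA_PER_INTENTIONAL_VIOLATION = 7_500
AI_TRANSPARENCY_ACT_PER_DAY = 5_000


def ccpa_exposure(violations: int, intentional: int = 0) -> int:
    """Upper-bound CCPA civil penalty for a number of violations.

    `intentional` is the subset of `violations` treated as intentional.
    """
    if violations < 0 or not 0 <= intentional <= violations:
        raise ValueError("counts must satisfy 0 <= intentional <= violations")
    unintentional = violations - intentional
    return (unintentional * CCPA_PER_VIOLATION
            + intentional * CCPA_PER_INTENTIONAL_VIOLATION)


def transparency_act_exposure(days_noncompliant: int) -> int:
    """Fine accrued under the AI Transparency Act at the daily rate."""
    if days_noncompliant < 0:
        raise ValueError("days_noncompliant must be non-negative")
    return days_noncompliant * AI_TRANSPARENCY_ACT_PER_DAY


# Hypothetical scenario: 1,000 affected consumers, 50 of them intentional.
print(ccpa_exposure(violations=1_000, intentional=50))  # 2750000
# Hypothetical scenario: 30 days without the required disclosures.
print(transparency_act_exposure(30))  # 150000
```

Even a modest profiling issue affecting a thousand users can reach seven figures under these assumptions, which is why per-violation counting matters more than the headline amount.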

New York

The NYC AI law (Local Law 144), in effect since 2023, laid the foundation for protecting job candidates and employees from race-, ethnicity-, or sex-based bias in AI-powered hiring tools.

In 2025, New York legislators expanded on AI regulation in the workplace, introducing the New York AI Act Bill and the New York Consumer Protection Act Bill to the Senate and State Assembly.

The proposed bills specify requirements for bias audits of AI tools used in recruitment, the use of employee data, and bias mitigation practices.
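Bias audits of hiring tools are commonly built around per-group selection rates and impact ratios. The sketch below shows that calculation on made-up numbers. The group labels, the counts, and the 0.8 flagging threshold (the "four-fifths" rule of thumb from U.S. employment guidance) are assumptions for illustration, not the method any of the bills above prescribes.

```python
from typing import Dict, List, Tuple


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Return each group's selection rate divided by the highest group's rate.

    `outcomes` maps a group label to (selected, total_applicants).
    """
    rates = {}
    for group, (selected, total) in outcomes.items():
        if total <= 0 or not 0 <= selected <= total:
            raise ValueError(f"invalid counts for {group!r}")
        rates[group] = selected / total
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """List groups whose impact ratio falls below the chosen threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening results: (candidates advanced, candidates screened).
sample = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
ratios = impact_ratios(sample)
print(flag_groups(ratios))  # ['group_b']
```

A flagged group is a prompt for further review rather than proof of discrimination; a real audit would also consider sample sizes and intersectional categories.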

Other US states with AI regulation frameworks

- Utah: HB 452 requires companies that offer “mental health chatbots” to disclose chatbot interactions and any advertisements presented through them. It also prohibits the sale or sharing of users’ chatbot inputs or health information. The follow-up SB 226 mandates that users be supplied with disclosures of all interactions if asked. The Utah Division of Consumer Protection fines breaches of the Utah Artificial Intelligence Policy Act at $2,500 per day.

- Illinois: The Biometric Information Privacy Act (BIPA) restricts the use of biometric data in AI systems. Statutory damages can be up to $1,000 per negligent violation or $5,000 per intentional violation. This has led to class action settlements for companies using AI on fingerprints or faceprints without consent.

- Colorado: The Colorado AI Act established a “high-risk” classification for AI systems and respective requirements for risk management, consumer disclosures, and discrimination prevention, similar to European AI regulation.

AI providers must also comply with the data privacy regulations enacted in 15 states as of 2025.

Sectoral AI regulations: Healthcare, finance, advertising

Beyond federal and state-level laws, AI developers in the U.S. must comply with industry-specific regulations in sectors like healthcare, finance, education, and advertising. These frameworks are often more mature, with strict compliance protocols, disclosure mandates, and approval workflows.

AI regulations in healthcare

In 2025, companies that operate in the Software as a Medical Device (SaMD) market, with or without AI, must obtain FDA marketing authorization through 510(k) clearance, De Novo classification, or Premarket Approval (PMA), depending on the device’s risk level.

In 2024, the FDA issued guiding principles for ensuring transparency in ML-powered medical devices. The main goals are to ensure proper patient disclosures, ongoing risk management, and the responsible use of AI-generated information. Later in the year, the regulator followed up with guidance on “Predetermined Change Control Plans” for AI systems that evolve over time.

To comply, US-based healthtech companies seeking authorization must outline how future model updates will be managed and validated.

Existing frameworks like Good Machine Learning Practices (GMLP) and Good Clinical Practices (GCP) also govern AI use in pharma.

Compliance doesn’t end at the federal level. California’s Health Care Services: Artificial Intelligence Act, for example, mandates that any use of generative AI in healthcare communications must be clearly disclosed, and providers must offer patients a way to speak directly with a human professional.

Utah has enacted similar requirements for all regulated occupations, including healthcare, legal, and financial services.

For non-compliance with FDA requirements, the regulator can order product recalls, seek injunctions, or pursue more severe penalties for selling or marketing unsafe devices.

Regulations on AI in finance

AI use in finance is monitored by regulators such as the Federal Reserve, SEC, CFPB, OCC, and FDIC. These institutions mainly focus on credit scoring, fraud detection, algorithmic trading, and customer targeting.

The Fair Credit Reporting Act (FCRA) and Equal Credit Opportunity Act (ECOA) require AI systems to be explainable and ban discrimination in lending decisions. FCRA violations related to AI misuse in credit scoring or lending incur statutory damages of up to $1,000 per consumer, plus potential settlements from class action suits.

The SEC monitors AI usage in algorithmic trading to prevent market manipulation and systemic risks. Standard anti-money laundering (AML) and Know Your Customer (KYC) regulations also apply to financial products with AI features.

Extra regulations for AI applications in finance and banking also crop up at the state level. The New York State Assembly has a bill under review that mandates disclosure of AI usage in credit decisions and institutes mandatory bias audits.

AI regulations in advertising and marketing

AI use in advertising and marketing is regulated by the Federal Trade Commission (FTC).

AdTech and MarTech vendors must ensure that all AI output, such as personalized ads, targeting suggestions, or generated content, is fair, transparent, and not misleading.

In 2024, the FTC began cracking down on companies that overstated AI products’ capabilities, fabricated AI-generated endorsements or reviews, or concealed the use of synthetic media like deepfakes. FTC violations carry a civil penalty of up to $50,120 per violation (adjusted annually), along with mandatory monitoring and reporting.

Some have already gotten into trouble:

- Software provider accessiBe received a $1 million fine for claiming its AI tool could make any website compliant with the Web Content Accessibility Guidelines (WCAG).

- Workado got a warning for overstating its AI content detection tools’ capabilities. The company must remove all claims, notify users, and submit compliance reports to the FTC within one year of the order being issued and every year for the following three years.

- DoNotPay, an “AI legal robot company,” has to pay $193,000 and provide a notice to consumers who subscribed to the service between 2021 and 2023, warning them about the limitations of law-related features on the service.

Companies offering any type of dynamic pricing optimization, ML-based real-time bidding (RTB) optimization, or advanced customer segmentation need to ensure fair and transparent practices to avoid FTC fines.

When building a dynamic pricing algorithm for a major advertising publisher, the Xenoss team focused equally on ad revenue optimization and compliance.

Xenoss ML engineers developed a system that balances revenue and fill rates using Gradient Boosting Machine (GBM) techniques.

The engineering team aimed for high interpretability and performance on tabular data, which helped the client deliver accurate, real-time predictions without risking compliance.
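To illustrate why gradient boosting suits tabular data and stays inspectable, here is a minimal pure-Python sketch of gradient boosting with one-split regression stumps under squared-error loss. It is not the system described above: the features (`hour`, `floor_price`), the toy data, and the hyperparameters are all hypothetical, and a production model would normally rely on an established library rather than hand-written trees.

```python
from typing import List, Tuple

Row = List[float]
Stump = Tuple[int, float, float, float]  # (feature index, threshold, left value, right value)
Model = Tuple[float, float, List[Stump]]  # (base prediction, learning rate, stumps)


def fit_stump(X: List[Row], residuals: List[float]) -> Stump:
    """Find the single split that minimizes squared error on the residuals."""
    mean_all = sum(residuals) / len(residuals)
    # Fallback when no split helps: predict the overall mean everywhere.
    best: Stump = (0, float("-inf"), mean_all, mean_all)
    best_err = sum((r - mean_all) ** 2 for r in residuals)
    for j in range(len(X[0])):
        # Skip the largest value so both sides of the split are non-empty.
        for t in sorted({row[j] for row in X})[:-1]:
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if err < best_err:
                best, best_err = (j, t, lv, rv), err
    return best


def stump_predict(stump: Stump, row: Row) -> float:
    j, t, lv, rv = stump
    return lv if row[j] <= t else rv


def fit_gbm(X: List[Row], y: List[float], n_rounds: int = 50,
            learning_rate: float = 0.3) -> Model:
    """Each round fits a stump to the current residuals and adds a damped copy."""
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is just the residual.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, row)
                 for p, row in zip(preds, X)]
    return base, learning_rate, stumps


def predict(model: Model, row: Row) -> float:
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, row) for s in stumps)


def split_counts(model: Model, n_features: int) -> List[int]:
    """How often each feature was chosen for a split: a crude importance measure."""
    counts = [0] * n_features
    for feature, _, _, _ in model[2]:
        counts[feature] += 1
    return counts


def mse(model: Model, X: List[Row], y: List[float]) -> float:
    return sum((predict(model, row) - yi) ** 2 for row, yi in zip(X, y)) / len(y)


# Hypothetical toy data: [hour, floor_price] -> revenue per thousand impressions.
X = [[float(h), f] for h in (0, 6, 12, 18) for f in (0.5, 1.0, 1.5)]
y = [2.0 * f + (1.0 if h >= 12 else 0.0) for h, f in X]

model = fit_gbm(X, y)
baseline: Model = (sum(y) / len(y), 0.3, [])  # mean-only model for comparison
print("baseline MSE:", mse(baseline, X, y))
print("boosted MSE:", mse(model, X, y))
print("splits per feature [hour, floor_price]:", split_counts(model, 2))
```

Because every stump is a single readable rule (feature, threshold, two values), the fitted model can be audited split by split, which is one reason tree ensembles are a common choice when a pricing decision may need to be explained.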

Bottom line

U.S. AI regulation isn’t arriving as a single law—it’s emerging in overlapping layers: federal guidance, state legislation, and industry-specific mandates.

Even though the US uses a soft-law approach to AI regulations, companies entering the market should track the latest regulations to avoid federal fines (which can exceed $50,000 per violation) and product recalls.

Hiring experienced AI consulting and MLOps partners can help navigate the fragmented compliance landscape.

Xenoss engineers have built compliant AI applications in advertising, marketing, pharma, and other heavily regulated industries.

To run a compliance audit of your AI platforms and build an actionable, step-by-step strategy, reach out to Xenoss engineers.