This rundown of the state of AI legislation in Canada is Part 2 of our series on AI regulations.

For a global overview of the regulatory landscape, read our articles on the US, EU, UK, and APAC.

As of May 2025, Canada has no AI-specific regulatory framework in force.

For the last three years, the government has been working on the Artificial Intelligence and Data Act (AIDA), which aims to regulate AI applications at the federal level.

Bill C-27, the bill that carried AIDA, died on the Order Paper when Parliament was prorogued in January 2025, ahead of the federal election held on April 28, 2025. Now that the Liberal Party has been returned to government, AIDA will likely be reintroduced in some form.

In the meantime, AI applications in Canada are still subject to existing data privacy laws and voluntary frameworks. This article explores what’s already in place, what’s coming next, and what non-compliance could mean for AI teams targeting the Canadian market.

Artificial Intelligence & Data Act (AIDA): Canada’s first proposed law focused explicitly on regulating AI

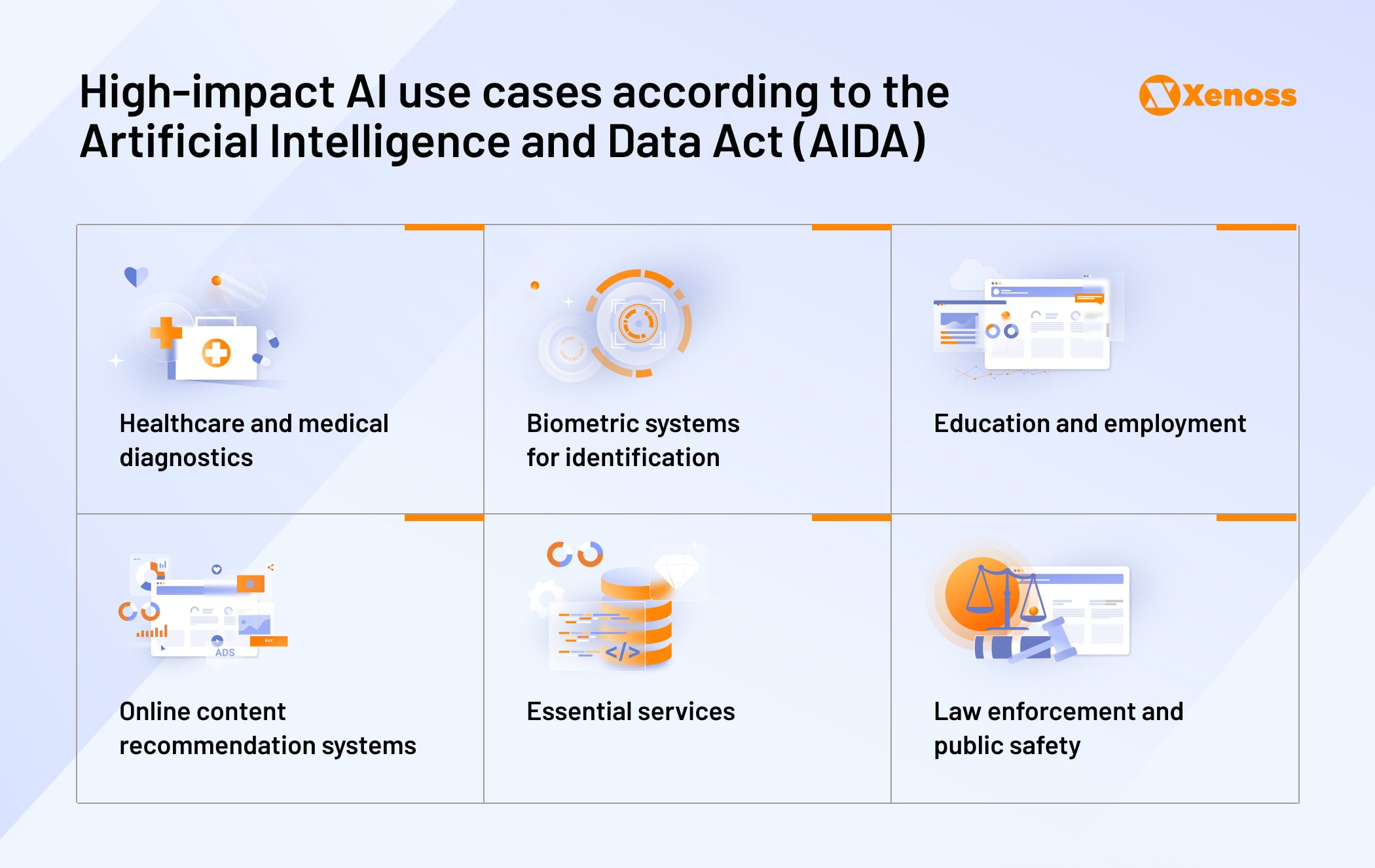

AIDA focuses on minimizing the risks of AI for high-impact use cases.

The Canadian government grouped these applications into seven categories:

- Healthcare and medical diagnostics

- Biometric systems for identification

- Employment and workforce management

- Essential services (e.g., banking, housing, insurance)

- Education (e.g., admissions, grading, or student monitoring)

- Law enforcement and public safety

- Online content recommendation systems that can significantly shape public opinion

Systems in these categories would have to meet requirements designed to minimize a “range of harms to individuals” and to “address the adverse impacts of systemic bias in AI systems in a commercial context”:

- Measures to identify and mitigate the risks of AI, including bias, discrimination, and other forms of public harm

- Transparent disclosures on how the system will be used and the possible risks it poses

- Record-keeping duties: maintaining training datasets, documenting training methods, describing intended purposes, and recording risk-management measures

- Incident reporting to designated regulators following a serious malfunction or data breach

- Cooperation with audits and with a regulator’s requests for documentation

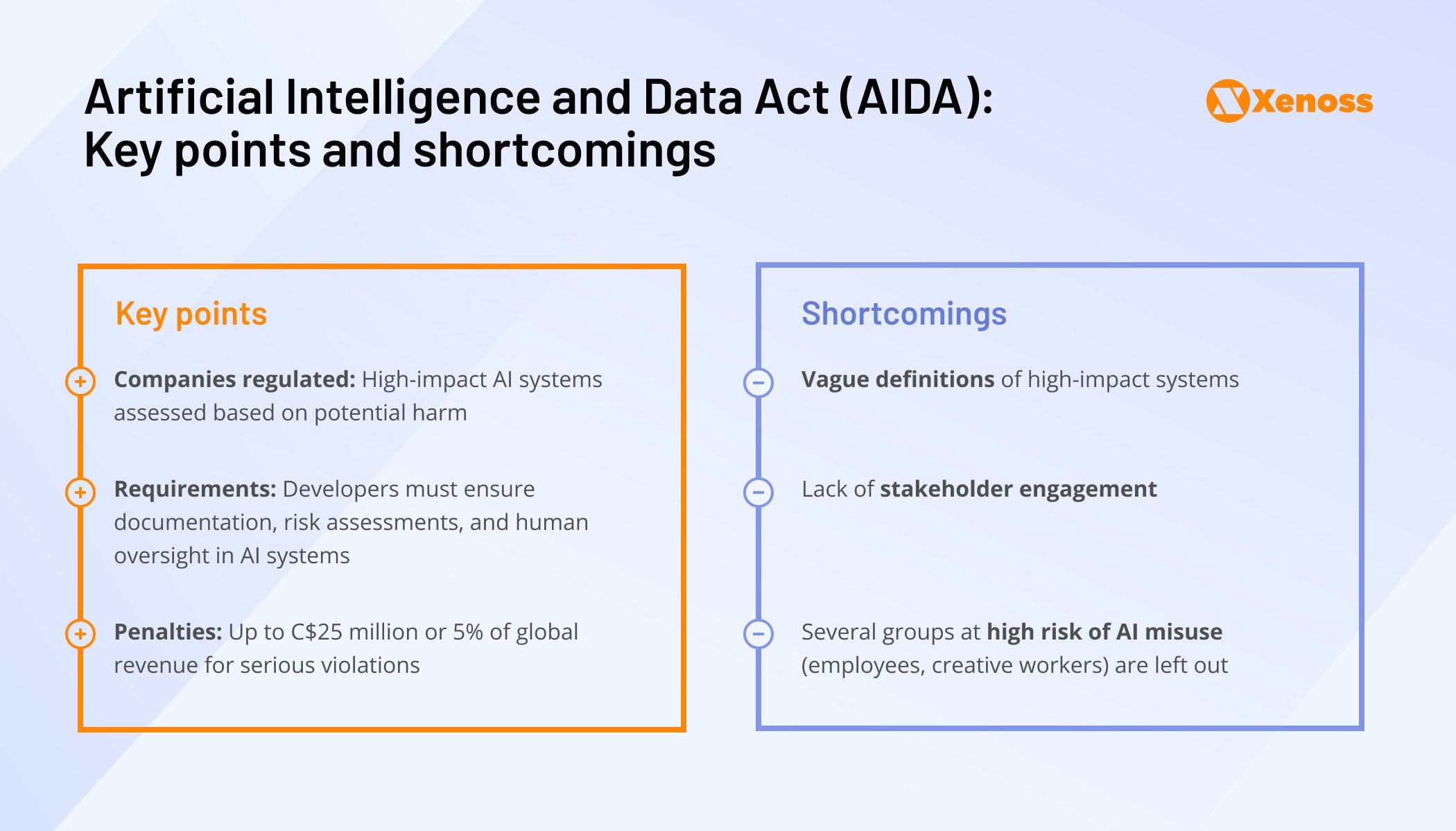

The original AIDA proposal sets fines for the most serious offenses at up to C$25 million or 5% of gross global revenue, whichever is greater. In addition, using illegally obtained personal information to build AI systems, deploying AI systems that cause serious harm, and using AI fraudulently would be criminal offenses under the Act.

Note that the Artificial Intelligence and Data Act does not precisely define a “high-impact system”; instead, it leaves the definition open to change. AI companies that do not fall under any of the seven categories today may therefore be covered by the time the Act is passed.

Although Parliament has yet to pass AIDA, AI companies targeting Canada should prepare now by assessing the transparency and explainability of their AI systems.

Criticism of AIDA: Gaps in protection and processes

The initial draft of the Artificial Intelligence and Data Act (AIDA) faced sharp criticism from both policymakers and civil society groups. The House of Commons Standing Committee on Industry and Technology (INDU) conducted a review that included testimony from over 130 witnesses, many of whom raised serious concerns about the bill’s scope, transparency, and stakeholder inclusion.

The main critiques included:

- Vague requirements: the obligations for high-impact AI systems were too vague to reliably protect against the misuse of machine learning models.

- Lack of stakeholder engagement: AIDA was largely developed behind closed doors, with input mainly from a select group of industry representatives.

- Weak protection for workers: the Canadian Labour Congress criticized AIDA’s lack of focus on labor rights and demanded that the law be “reconceived from a human, labor, and privacy rights-based perspective.”

- Gaps for creative industries: the Directors Guild of Canada, Writers Guild of Canada, Music Canada, and other industry organizations criticized the bill for failing to protect creators’ rights.

A common thread in these critiques was that the Artificial Intelligence and Data Act failed to protect the sectors and groups most at risk of AI-driven job disruption.

Existing laws and frameworks that apply to AI in Canada

Voluntary Code for generative AI

Alongside AIDA, the Government of Canada published a Voluntary Code of Conduct on the Responsible Development and Management of Advanced Generative AI Systems in September 2023.

Adherence is not mandatory, but following the code helps Canadian companies demonstrate a commitment to developing and deploying generative AI systems responsibly until formal regulations for generative AI are established.

PIPEDA: Personal Information Protection and Electronic Documents Act

Businesses must also comply with the Personal Information Protection and Electronic Documents Act (PIPEDA), which is already in force and governs how personal information is collected, used, and disclosed, including in AI systems. Certain PIPEDA offenses carry fines of up to C$100,000, and the law applies to AI systems that improperly collect or misuse customer data.

Additional legal exposure

AI systems that produce discriminatory outcomes (e.g., bias in hiring, housing, or service delivery) can lead to orders for damages and corrective measures under the Canadian Human Rights Act and provincial human rights codes.

The Competition Bureau, which enforces fair business practices, can seek penalties against businesses of up to C$10 million for a first order and C$15 million for subsequent orders (or more, where penalties are based on the benefit obtained or on global revenue) for misleading representations or anti-competitive conduct involving AI.

Future of AI regulation in Canada

Canada was once a global leader in AI research, but by 2025 it had been overtaken by countries such as the U.S., China, France, Germany, and the UK, due in large part to policy inertia and the delayed implementation of national AI legislation.

Mark Carney, the new Prime Minister, focused part of his campaign on bringing Canada back into the AI race, favoring innovation over stringent regulation.

His team announced plans to upskill workers in priority sectors, investing C$15,000 per worker.

The Liberals also pledged a C$2.5 billion investment in chips and data centers over the next two years to accelerate AI adoption nationwide.

With Parliament back in session, previously stalled legislation, most notably AIDA, is expected to return to the table. But AIDA won’t be the only framework shaping AI compliance. Three other bills are poised to impact machine learning teams:

- Consumer Privacy Protection Act (CPPA), which would require transparency, accountability, and meaningful consent in AI systems that process personal information. It would require organizations to be able to explain decisions made by automated decision-making systems and to inform individuals about the use and logic behind these systems.

- Critical Cyber Systems Protection Act, which would require operators of critical cyber systems in federally regulated sectors to protect the integrity, availability, and confidentiality of those systems, including against threats posed by AI-driven attacks or vulnerabilities.

- Bill C-63 (the Online Harms Act), which aimed to curb harmful content online through a newly created Digital Safety Commission. While it does not explicitly regulate AI systems, it would require platforms to act against harmful content, including AI-generated deepfakes.

Future versions of these bills will likely address AI use cases, so tech companies building machine learning tools for the Canadian market should follow the debates around them.

Bottom line

Canada’s regulatory framework for AI is still forming, but the intent is clear. With AIDA likely to return to the agenda and enforcement already happening through PIPEDA, the Canadian Human Rights Act, and competition law, the cost of waiting is rising.

Companies building AI tools for the Canadian market need to anticipate regulatory changes, not just react to them. That means building explainability, transparency, and compliance readiness into the systems now.