Marketplace advertising is a booming sector. In 2024 alone, Amazon’s ad business generated over $56 billion, more than 1.5 times YouTube’s ad revenue.

But those profits don’t come easily. Behind the numbers lies an invisible battle for more precise real-time bidding (RTB) in programmatic advertising. Marketplaces face mounting pressure to deliver a higher return on ad spend (ROAS), yet many still support only hardcoded fixed bids or struggle with crippling latency in real-time bid processing due to outdated tech stacks.

The good news? Investing in RTB optimization with machine learning algorithms for advertising can drive massive gains. Viant Technology, part of our client portfolio, leveraged deep learning to build its AI Bid Optimizer, delivering 100% performance gains and 24% to 40% cost reductions for its clients compared to manual bidding systems. Coty, a multinational beauty company, improved its ROAS from Amazon ads by 28% and its total digital purchase view rate by 48% through a bid modifier API integration.

More and more media buyers realize they can’t compete with ML-based bidding optimization algorithms in speed or precision. So they now expect their demand-side partners to step up their game with better tech (or risk being replaced).

However, many companies have been slow to respond because of the challenges associated with RTB bidding automation: high-dimensional data, ultra-fast bid response requirements, and a general lack of expertise in implementing online ad optimization with machine learning.

Does this sound like your case? In this post, you’ll discover:

- The fundamental Fair Price bidding problem in RTB that complicates campaign optimization

- RTB optimization challenges specific to machine learning like class imbalance, cold start problem, and concept drift

- Proven solutions to both, illustrated with case studies from top online advertising marketplaces

Core challenges in RTB campaign optimization

In theory, RTB campaign optimization is a straightforward prediction problem: estimate the conversion probability, set a bid, win the impression.

But on large online marketplaces, it’s anything but that. The underlying algorithms must adapt to skewed data, changing behaviors, and scale-driven complexity to generate each bid.

Here’s a breakdown of the biggest technical challenges standing in the way of ML-powered programmatic bidding.

Fair price bidding: A more innovative RTB strategy

At its core, real-time bidding (RTB) is an economic optimization problem. For every single ad impression, a programmatic RTB platform has to answer one deceptively simple question:

Given its potential to convert, what’s the maximum we should pay for this impression?

This is the “Fair Price” problem. And many real-time bidding algorithms fail to solve it in probabilistic environments like retail media networks.

Let’s say you’re running a retargeting eCommerce advertising campaign for a popular pair of sneakers. An impression becomes available for a user who:

- Visited the product page for the sneakers 2 hours ago

- Added the item to the cart but didn’t check out

- Bought a similar item about a month ago

Now, here’s the question: How much should your DSP bid for this impression?

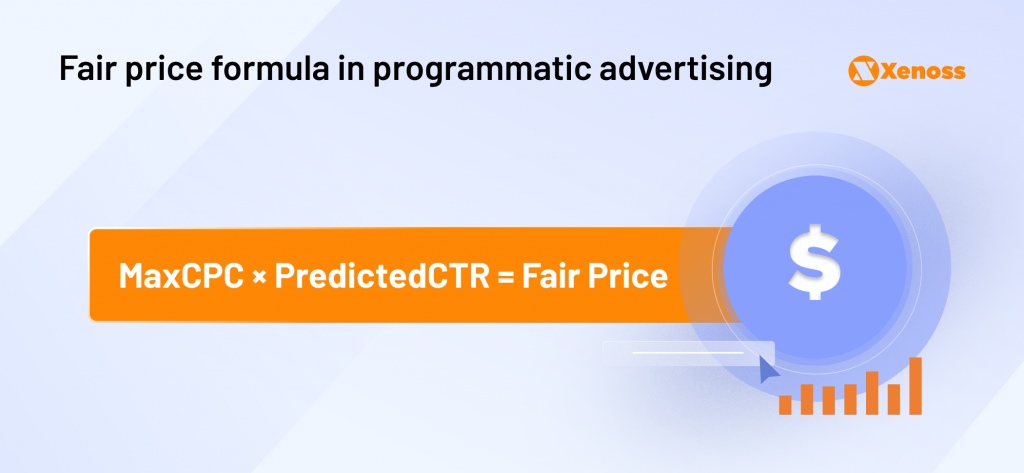

Machine learning programmatic advertising algorithms will dynamically estimate the probabilistic value of every impression based on two factors:

- MaxCPC is the advertiser’s maximum willingness to pay for a click

- PredictedCTR is the estimated probability that this impression will result in a click

Knowing these two parameters, you can calculate the Fair Price using this formula:

$$\text{FairPrice} = \text{MaxCPC} \times \text{PredictedCTR}$$

Let’s assume your max CPC is $5 (based on ROAS targets). The underlying machine learning advertising model estimated a CTR of 5% based on user behavior signals. In this case, the best bid would be $0.25 per impression.
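The arithmetic above fits in a few lines of Python. This is a minimal sketch, not a production bidder: the function names are hypothetical, and the CPM helper reflects the fact that OpenRTB bid prices are quoted per thousand impressions even though each bid buys a single impression.

```python
# Minimal sketch of the Fair Price rule: bid = MaxCPC x PredictedCTR.
# Assumption: fair_price() returns a price per single impression; to_cpm()
# converts it to the per-thousand (CPM) unit in which OpenRTB bid prices are quoted.


def fair_price(max_cpc: float, predicted_ctr: float) -> float:
    """Expected value of one impression for an advertiser paying up to max_cpc per click."""
    if max_cpc < 0:
        raise ValueError("max_cpc must be non-negative")
    if not 0.0 <= predicted_ctr <= 1.0:
        raise ValueError("predicted_ctr must be a probability in [0, 1]")
    return max_cpc * predicted_ctr


def to_cpm(price_per_impression: float) -> float:
    """Convert a per-impression price to a price per thousand impressions."""
    return price_per_impression * 1000


bid = fair_price(max_cpc=5.00, predicted_ctr=0.05)
print(f"fair bid: ${bid:.2f} per impression (${to_cpm(bid):.2f} CPM)")
# prints: fair bid: $0.25 per impression ($250.00 CPM)
```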

By using an ML-powered programmatic bidding strategy, you can avoid the pitfalls of fixed bidding strategies like:

- Overpriced bad impressions. Not all ad impressions are created equal. A user who bounces in 0.5 seconds with no scroll activity has zero conversion potential. A fixed $0.50 bid on that impression? You’re overpaying for junk inventory.

- Lost valuable impressions. On the flip side, you can only convert a high-intent user (e.g., a returning visitor with a recent product page view or a high basket value) if you outbid competitors for the impression. A fixed bid that’s too low (say, $0.10) means you lose the auction and a conversion opportunity.

- No context, no efficiency. Fixed bids don’t account for device type, time of day, user recency, creative performance, or even page category — all of which meaningfully impact conversion probability.

The “Fair Price” model is a game changer for preventing marketplace ad waste. But implementing it in real time and at scale opens up a new can of worms.

ML challenges in implementing fair price bidding

The fair price formula provides a clear theoretical framework for optimizing RTB programmatic buying. However, implementing it in an AdTech platform is far from trivial.

Accurate bidding depends entirely on the quality of the underlying prediction models — and building those models at scale introduces significant ML and data engineering complexity.

The class imbalance problem

Conversion probability predictions are complicated because the positive class (clicks and conversions) is vanishingly rare. The average CTR for retail media advertising is just 0.39%, and Amazon marketplace advertising averages 0.42%. Conversion rates can be even lower, depending on product category, funnel depth, and user behavior.

As a result, machine learning algorithms for advertising are mostly trained on highly imbalanced datasets, which causes two problems:

- Overprediction of the majority class. The algorithm defaults to labeling most ad impressions as non-converting because that’s statistically the safest bet in an imbalanced dataset.

- Suppression of the minority class. To minimize false positives, the model becomes overly conservative and fails to identify genuine conversion signals.

In other words, a machine learning media buying model may never learn to recognize the right patterns, even while showing a high accuracy or AUC score on a monitoring dashboard.

The business result? Underbidding on high-value impressions, which means lost revenue, and overbidding on low-propensity users, which means wasted ad budget, multiplied across billions of RTB bids.
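To make the imbalance problem concrete, here is a small Python sketch. It shows why accuracy looks excellent for a model that never predicts a click, and it illustrates one widely used mitigation, swapped in here for illustration: downsampling negatives for training, then recalibrating the predicted probabilities so the Fair Price formula still receives a correctly scaled CTR. The helper names and the 10% sampling rate are hypothetical.

```python
import random

# Assumptions: the 0.39% base rate is the retail media CTR quoted above; negative
# downsampling plus recalibration is a common CTR-modeling technique shown for
# illustration, not necessarily what any platform named in this article uses.


def accuracy_of_all_negative(labels):
    """Accuracy of a 'model' that predicts no click for every impression."""
    return labels.count(0) / len(labels)


def downsample_negatives(rows, rate, rng):
    """Keep every positive (label 1) and each negative with probability `rate`."""
    return [(x, y) for x, y in rows if y == 1 or rng.random() < rate]


def recalibrate(p_sampled, rate):
    """Undo the downsampling bias: q = p / (p + (1 - p) / rate)."""
    return p_sampled / (p_sampled + (1.0 - p_sampled) / rate)


labels = [1] * 39 + [0] * 9_961          # 0.39% CTR over 10,000 impressions
print(accuracy_of_all_negative(labels))  # 0.9961, yet it never predicts a click

rows = list(enumerate(labels))
sample = downsample_negatives(rows, rate=0.1, rng=random.Random(42))
# Positives now make up roughly 4% of the training sample instead of 0.39%.
# A model trained on it outputs inflated probabilities; map them back before bidding:
print(round(recalibrate(0.04, rate=0.1), 4))  # about 0.0041
```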

High-dimensional data

To accurately value each impression, machine learning advertising models must take into account a heap of variables about the user and the product, plus the merchant, ad format, device, time of day, and real-time behavioral context.

In an average retail media network, that’s millions of potential features per impression. Most of these are categorical variables with high cardinality, meaning they don’t play nicely with many standard ML models.

The following problems emerge:

- Oversized feature matrices. When one-hot encoding high-cardinality variables (e.g., 100K+ user IDs), the feature matrix becomes too big for efficient training and storage.

- Complex data dependencies. The trifecta of user-merchant-product interactions creates different CTR and conversion probabilities, which also change depending on the pricing, season, or user demographics.

- Challenging dimensionality reduction. Naive approaches can discard high-impact features from the model or introduce bias, leading to skewed performance.

- High resource requirements. Advanced RTB programmatic advertising models need feature store architectures that serve features at sub-10ms latency, which means high cloud infrastructure costs and complex GPU memory optimization.

To overcome these problems, data science teams need to compress high-cardinality categorical variables (e.g., user ID, product ID) into low-dimensional dense vectors using the right embedding techniques.

Marketplaces like Alibaba use trainable embedding tables to represent high-cardinality entities. These dense vector representations are optimized via backpropagation during model training and fed into neural models to support CTR prediction.

Another strategy is to build sparse feature engineering pipelines with feature hashing or target encoding. This way, you avoid maintaining up-to-date embedding vectors for every possible user or product. Instead, the model fetches learned embeddings for frequent entities and hashed representations for rare or unseen ones, improving the speed of programmatic bids.
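A minimal Python sketch of the hybrid lookup described above, assuming a vocabulary of the most frequent IDs plus a fixed number of shared hash buckets for everything else. The class name and the top_k, num_buckets, and dim values are hypothetical, and the vectors are random placeholders; in a real model they would be trained by backpropagation as part of the CTR network.

```python
import hashlib
import random
from collections import Counter

# Assumptions: top_k, num_buckets, and dim are hypothetical; the vectors are random
# here, whereas a real CTR model would learn them by backpropagation.


class HybridEmbedding:
    """Dedicated rows for frequent IDs, shared hashed rows for rare or unseen IDs."""

    def __init__(self, id_counts, top_k, num_buckets, dim, seed=0):
        frequent = [entity for entity, _ in Counter(id_counts).most_common(top_k)]
        self.vocab = {entity: row for row, entity in enumerate(frequent)}
        self.num_buckets = num_buckets
        rng = random.Random(seed)
        # Rows [0, len(vocab)) are dedicated; the next num_buckets rows are hash buckets.
        n_rows = len(self.vocab) + num_buckets
        self.table = [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(n_rows)]

    def _bucket(self, entity):
        # Stable hash: Python's built-in hash() is salted per process for strings.
        digest = hashlib.blake2b(str(entity).encode("utf-8"), digest_size=8).digest()
        return int.from_bytes(digest, "little") % self.num_buckets

    def row_index(self, entity):
        if entity in self.vocab:
            return self.vocab[entity]
        return len(self.vocab) + self._bucket(entity)

    def lookup(self, entity):
        return self.table[self.row_index(entity)]


counts = {"user_1": 5_000, "user_2": 3_200, "user_3": 4}  # impressions per user ID
emb = HybridEmbedding(counts, top_k=2, num_buckets=1_000, dim=8)
print(emb.row_index("user_1"), emb.row_index("user_2"))  # 0 1 (dedicated rows)
print(emb.row_index("brand_new_user") >= 2)               # True (shared hash bucket)
```

The table size is bounded by top_k + num_buckets no matter how many new users or products appear, at the cost of occasional collisions among rare IDs.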

Real-time constraints

In RTB, everything — including the fair price calculation — has to happen in real time. So, model speed is paramount.

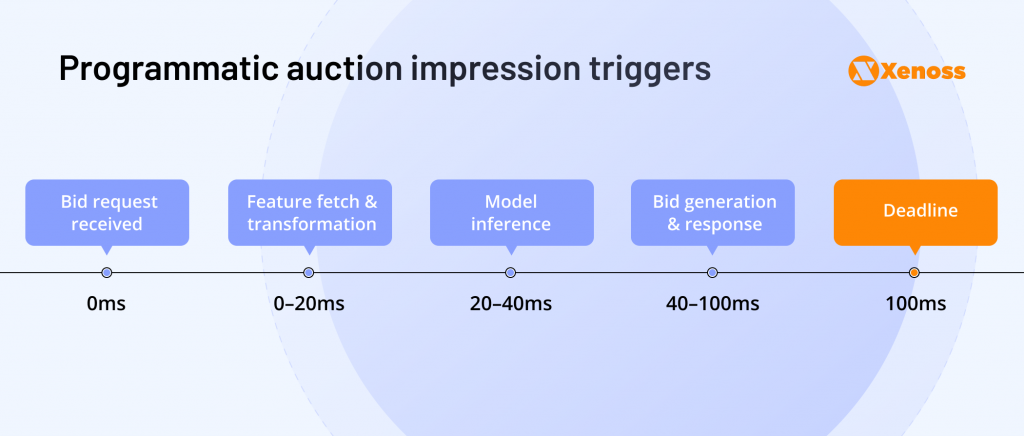

Every impression triggers a programmatic auction, and every DSP has a hard deadline to respond to the bid request. Under the IAB OpenRTB 2.x specification, the exchange states this limit in the tmax field of each bid request, and it is often around 100ms. Miss that window? Your bid is dropped.

During this small window, the real-time bidding programmatic model must also compute an optimized bid:

- Predict the probability of a click or conversion

- Analyze the current state of campaign pacing and budget

- Apply frequency capping, brand safety, merchant priorities, etc.
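The steps above can be sketched as a single Python function that tracks its own time budget. This is an illustration under stated assumptions: tmax_ms mirrors the OpenRTB tmax value, the 30ms safety margin (reserved for network transit) and the pacing multiplier are hypothetical, brand-safety checks are omitted, and predict_ctr stands in for whatever model the DSP actually serves.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional

# Assumptions: tmax_ms mirrors OpenRTB's tmax; safety_margin_ms, pacing_multiplier,
# and frequency_cap are hypothetical; brand-safety checks are left out for brevity.


@dataclass
class Campaign:
    max_cpc: float
    pacing_multiplier: float  # < 1.0 when spending ahead of plan, > 1.0 when behind
    frequency_cap: int


def compute_bid(
    features: dict,
    campaign: Campaign,
    impressions_seen_by_user: int,
    predict_ctr: Callable[[dict], float],
    tmax_ms: float = 100.0,
    safety_margin_ms: float = 30.0,
) -> Optional[float]:
    """Return a bid per impression, or None for a no-bid."""
    start = time.perf_counter()
    budget_s = (tmax_ms - safety_margin_ms) / 1000.0

    # Cheap rule checks first, so they can short-circuit before model inference.
    if impressions_seen_by_user >= campaign.frequency_cap:
        return None

    ctr = predict_ctr(features)  # 1. click probability
    bid = campaign.max_cpc * ctr * campaign.pacing_multiplier  # 2. fair price, paced

    # 3. No-bid if we ran past our internal budget: a late response is dropped anyway.
    if time.perf_counter() - start > budget_s:
        return None
    return bid


campaign = Campaign(max_cpc=5.00, pacing_multiplier=0.9, frequency_cap=3)
print(compute_bid({"user": "u1"}, campaign, impressions_seen_by_user=1,
                  predict_ctr=lambda f: 0.05))
```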

This is where it gets tricky: More complex models — like deep neural networks with embedding layers and cross-feature interactions — generally offer better predictive bid performance. But they’re also slower to compute, especially under live traffic.

So, every online advertising marketplace has to consider tradeoffs between model accuracy and tolerable latency levels. AdTech engineers must make hard decisions:

- Do you serve precomputed predictions?

- Do you trim down features at inference time?

- Can you cache predictions for certain user segments?

- Should you use a faster approximation model (e.g., tree-based or linear) instead of deep learning?

These questions become more acute as the platform volume increases. The biggest marketplaces must make tens of millions of predictions per second — each of which needs to be completed in under 10ms, including network round-trip time, feature lookup, model inference, and response generation. When your model is too slow, you lose revenue opportunities.
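One answer to the caching question is a per-segment prediction cache with a short time-to-live (TTL). The Python sketch below assumes a hypothetical segment key (device, category, behavior) and a 60-second TTL; a production system would more likely use a shared in-memory store than a per-process dictionary.

```python
import time
from typing import Callable, Dict, Hashable, Tuple

# Assumptions: the segment key format, the 60-second TTL, and slow_model are
# hypothetical; the per-process dict stands in for a shared in-memory store.


class SegmentPredictionCache:
    def __init__(self, model: Callable[[Hashable], float], ttl_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.model = model
        self.ttl_s = ttl_s
        self.clock = clock
        self._store: Dict[Hashable, Tuple[float, float]] = {}  # key -> (expires_at, ctr)
        self.hits = 0
        self.misses = 0

    def predict(self, segment: Hashable) -> float:
        now = self.clock()
        cached = self._store.get(segment)
        if cached is not None and cached[0] > now:
            self.hits += 1
            return cached[1]
        self.misses += 1
        ctr = self.model(segment)  # slow path: full model inference
        self._store[segment] = (now + self.ttl_s, ctr)
        return ctr


calls = []


def slow_model(segment):
    calls.append(segment)  # stands in for full model inference
    return 0.012


cache = SegmentPredictionCache(slow_model, ttl_s=60.0)
for _ in range(1_000):
    cache.predict(("mobile", "sneakers", "cart_abandoner"))
print(cache.hits, cache.misses)  # 999 1
```

The TTL bounds how stale a cached CTR can get, which matters because, as the next sections show, user behavior and auction dynamics keep shifting.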

Pro tip from our MLOps team: Try the XGBoost algorithm

XGBoost models are highly optimized for low-latency predictions, which makes them well suited to sub-millisecond scoring per impression. They also handle sparse inputs natively, without heavy preprocessing. Compared to deep learning models, XGBoost models are lightweight, making them a good fit for edge deployment or in-memory scoring at scale in RTB.
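To show why tree ensembles score quickly and cope with sparse inputs, here is a hand-written two-tree ensemble in plain Python. It is not the XGBoost library; it only mimics how XGBoost-style trees are evaluated: each split sends a feature value left when it is below the threshold, a learned default direction handles missing features, and the summed leaf values pass through a sigmoid. Every feature name, threshold, and leaf value is hypothetical.

```python
import math

# Assumption: a toy, hand-written ensemble (NOT a trained XGBoost model).
# Internal node: (feature, threshold, left, right, default_left); leaves are floats.
TREES = [
    ("minutes_since_product_view", 180.0,
     ("added_to_cart", 0.5, -4.5, -3.2, True),
     -6.0,
     False),
    ("past_purchases_in_category", 0.5, -0.3, 0.4, True),
]


def score_tree(node, features):
    """Walk one tree: a few comparisons per impression, no matrix math."""
    while not isinstance(node, float):
        feature, threshold, left, right, default_left = node
        value = features.get(feature)  # sparse input: an absent key means missing
        if value is None:
            node = left if default_left else right
        else:
            node = left if value < threshold else right
    return node


def predict_ctr(features, base_margin=0.0):
    """Sum leaf values (raw margin) and squash them into a click probability."""
    margin = base_margin + sum(score_tree(tree, features) for tree in TREES)
    return 1.0 / (1.0 + math.exp(-margin))


cart_abandoner = {"minutes_since_product_view": 120.0, "added_to_cart": 1.0,
                  "past_purchases_in_category": 1.0}
print(round(predict_ctr(cart_abandoner), 4))  # margin -3.2 + 0.4 = -2.8
print(round(predict_ctr({}), 4))              # all features missing: default branches
```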

Cold start problem

When an ad bidding model doesn’t have enough historical data to estimate CTR and conversion probability, we have a cold start problem.

The PredictedCTR term in the Fair Price bidding formula becomes unreliable (or impossible) to calculate when the processed impression involves:

- A new merchant with no past campaign performance

- A new product without browsing or engagement history

- A new campaign targeting novel audiences or ad placements

In such cases, the model will either produce a flat, uninformed CTR (leading to underbidding or overbidding) or not bid at all — missing impressions that may have performed well.

The problem gets worse in marketplace advertising because inventory constantly changes, new merchants are onboarded frequently, and short-term campaigns don’t produce enough training data. All of this makes cold start the default state for large portions of traffic.

To overcome this shortcoming, some AI programmatic advertising platforms use meta-learning techniques to transfer knowledge from past campaigns.

WeChat, for example, developed a two-stage framework called Meta Hybrid Experts and Critics (MetaHeac) to enhance audience expansion in their advertising system. The company first trained a general model using data from existing advertising campaigns to capture the relationships among various tasks from a meta-learning perspective. Then, for each new campaign, a customized model is derived from the general model using the provided seed set. This approach allows the system to adapt quickly to new campaigns by leveraging insights gained from previous ones.

Another approach is to create similarity-based models that infer the likely CTR of a new item from similar products, merchants, or customer segments. A recent paper showcases a Graph Meta Embedding (GME) model that uses two information sources (the new ad and existing old ads) to improve prediction performance in both cold-start (i.e., no training data is available) and warm-up (i.e., a small number of training samples are collected) scenarios across different deep learning-based CTR prediction models.
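Full meta-learning systems like MetaHeac or GME are beyond a short example, but the core idea of borrowing strength from similar items can be sketched with a simpler technique, swapped in here for illustration: empirical-Bayes shrinkage toward a category-level CTR. The prior_strength value (how many "virtual impressions" the prior is worth) is a hypothetical tuning knob.

```python
from statistics import mean

# Assumption: a simple empirical-Bayes stand-in, NOT MetaHeac or GME.
# prior_strength (hypothetical) is the number of "virtual impressions" the prior counts for.


def category_prior(similar_item_ctrs):
    """Average CTR of similar items that already have enough data."""
    return mean(similar_item_ctrs)


def smoothed_ctr(clicks, impressions, prior_ctr, prior_strength=1000.0):
    """Posterior mean of a Beta(alpha, beta) prior updated with observed clicks.

    alpha = prior_ctr * prior_strength, beta = (1 - prior_ctr) * prior_strength.
    """
    alpha = prior_ctr * prior_strength
    beta = (1.0 - prior_ctr) * prior_strength
    return (clicks + alpha) / (impressions + alpha + beta)


prior = category_prior([0.004, 0.005, 0.006])    # CTRs of similar, warm sneaker listings
print(round(smoothed_ctr(0, 0, prior), 4))       # no data yet: falls back to the prior (0.005)
print(round(smoothed_ctr(30, 1_000, prior), 4))  # early data pulls the estimate up (0.0175)
```

As impressions accumulate, the observed clicks dominate and the estimate converges to the item's own CTR, so the prior only matters during the cold-start window.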

The key to solving the cold start problem is striking a balance between exploration and exploitation. Over-exploit, and your system stagnates. Over-explore, and you waste money.

Effective programmatic ad platforms dynamically balance both — adjusting exploration rates based on budget pacing, merchant goals, and model confidence scores.
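Thompson sampling is one standard way to let model confidence drive exploration, shown here as an illustration rather than the method any platform above uses. Each candidate ad keeps a Beta posterior over its CTR; uncertain candidates occasionally draw high samples and get explored, while proven ones win most of the traffic. The ad names and simulated CTRs are hypothetical.

```python
import random

# Assumption: Thompson sampling with Beta(1, 1) priors, chosen for illustration;
# arm names and the simulated true CTRs are hypothetical.


class ThompsonSampler:
    def __init__(self, arms, prior_alpha=1.0, prior_beta=1.0, seed=None):
        self.rng = random.Random(seed)
        self.alpha = {arm: prior_alpha for arm in arms}  # 1 + observed clicks
        self.beta = {arm: prior_beta for arm in arms}    # 1 + observed non-clicks

    def choose(self):
        # Sample a plausible CTR per arm; wide (uncertain) posteriors sometimes draw high.
        draws = {arm: self.rng.betavariate(self.alpha[arm], self.beta[arm])
                 for arm in self.alpha}
        return max(draws, key=draws.get)

    def update(self, arm, clicked):
        if clicked:
            self.alpha[arm] += 1
        else:
            self.beta[arm] += 1


def simulate(true_ctrs, rounds, seed=0):
    """Serve `rounds` impressions and count how often each arm is chosen."""
    env = random.Random(seed + 1)
    sampler = ThompsonSampler(list(true_ctrs), seed=seed)
    pulls = {arm: 0 for arm in true_ctrs}
    for _ in range(rounds):
        arm = sampler.choose()
        pulls[arm] += 1
        sampler.update(arm, env.random() < true_ctrs[arm])
    return pulls


pulls = simulate({"established_ad": 0.02, "new_ad": 0.08}, rounds=5_000)
print(pulls)  # most traffic shifts to the better arm once its posterior firms up
```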

Concept drift and model decay

User behaviors, product demand, and competitive bidding patterns constantly change. That means a model calibrated for yesterday’s data is already drifting off-course today.

Fair Price model drift happens due to:

- User behavior shifts. Customers’ interests change (e.g., they’re no longer into skincare), or their device usage patterns evolve (e.g., they start shopping more from an iPad).

- Marketplace dynamics. New brands, SKUs, and categories introduce nonstationary data, while supply/demand fluctuations (e.g., stockouts) affect impression volume and pricing.

- Seasonality effects. For many products, conversion rates aren’t the same throughout the year. Holidays (e.g., Christmas, Thanksgiving, and Black Friday) also alter CTR baselines.

- Competitive pressures. Aggressive budget increases from other advertisers distort the auction landscape. Also, new brands may cannibalize your audience segments.

All of these change the underlying relationships that your model learned — between user intent, product type, and likelihood of conversion. This is known as concept drift — and it’s inevitable in programmatic RTB systems.
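Drift of this kind is usually caught by comparing the distribution of a feature or model score at training time with its live distribution. The Python sketch below uses the Population Stability Index (PSI), one common choice; the 10 quantile bins and the 0.2 alert threshold are rule-of-thumb assumptions, not values taken from the platforms discussed here.

```python
import math
from bisect import bisect_right

# Assumptions: 10 quantile bins and a 0.2 alert threshold are rule-of-thumb choices,
# not values used by any platform mentioned in this article.


def psi(expected, actual, bins=10, eps=1e-6):
    """PSI = sum((a_i - e_i) * ln(a_i / e_i)) over bins defined on `expected`."""
    ordered = sorted(expected)
    # Bin edges at the quantiles of the reference (training-time) distribution.
    edges = [ordered[int(len(ordered) * k / bins)] for k in range(1, bins)]

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # eps avoids log(0) when a bin is empty in one of the samples.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drifted(expected, actual, threshold=0.2):
    return psi(expected, actual) > threshold


reference = [i / 1000 for i in range(1000)]           # scores at training time
this_week = [min(v + 0.3, 0.999) for v in reference]  # scores after a shift
print(drifted(reference, reference), drifted(reference, this_week))  # False True
```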

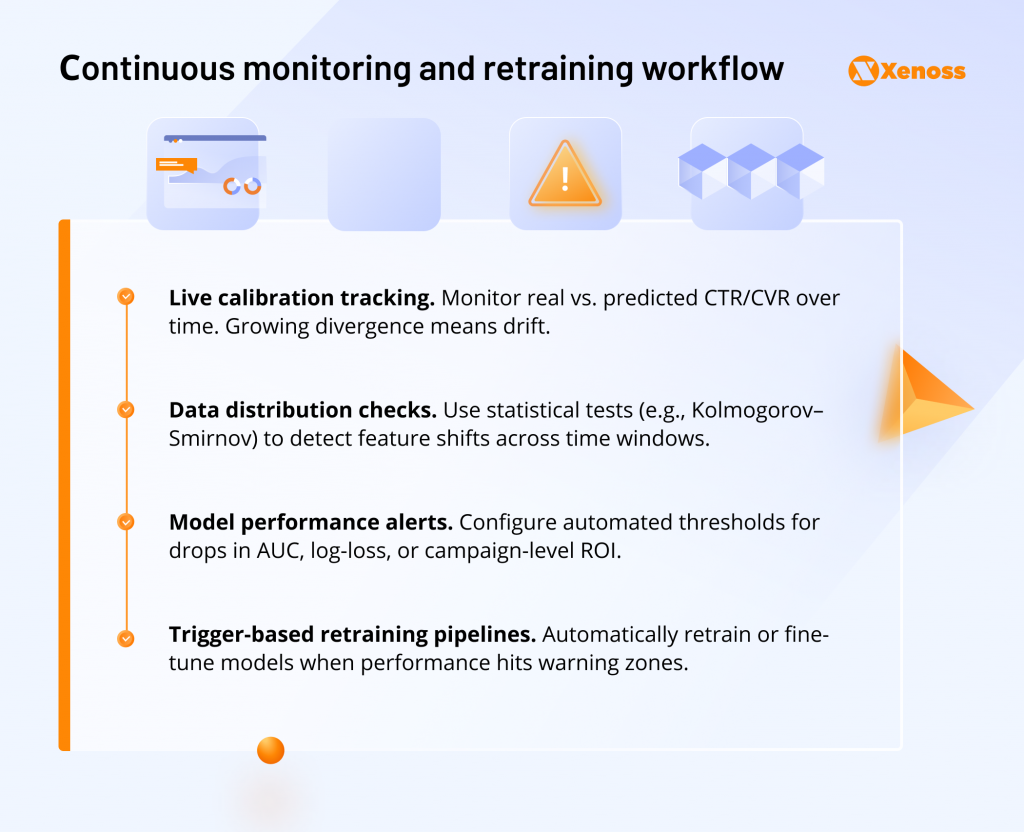

To counter model degradation, you have to implement continuous monitoring and retraining workflows.

Some platforms also use more advanced techniques to prevent machine learning drift. Pinterest has integrated a Multi-Task Learning (MTL) technique to optimize its ads conversion models. MTL combines multiple conversion objectives into a unified model, leveraging abundant onsite actions to enhance training for sparse conversion objectives.

This approach allows the model to adapt to new data incrementally, reducing the odds of drifting. Additionally, Pinterest employs ensemble models that combine different feature interaction modules, such as DCNv2 and transformers, to capture diverse data patterns effectively and use them in programmatic campaigns.

Solution: The strategic advantage of advanced RTB optimization

Building an effective machine learning programmatic advertising system for a marketplace can feel like a minefield of edge cases, system constraints, and modeling tradeoffs.

But effective solutions exist, and our programmatic software development team has successfully implemented them. By applying systematic, end-to-end optimization strategies to our client’s platform, we achieved double-digit improvements in CPC and CTR, along with a massive reduction in operating costs:

- 27% CPC reduction compared to initial rule-based bidding strategies

- 18% CTR lift and 9% CR lift through better identification of high-intent audiences

- 45% reduction in operating costs, thanks to a fully automated model maintenance cycle that significantly reduced the number of campaign management tasks

Here are two approaches we used to achieve these results:

Multi-model architectures

In a large-scale marketplace, using a single machine learning model to optimize all bids rarely works well. Different product categories attract fundamentally different user behaviors, intent signals, and conversion patterns, and one-size-fits-all modeling fails to capture this complexity.

That’s why we encouraged our client, one of the largest retail marketplaces in Central and Eastern Europe (CEE), to move to a multi-model architecture. A multi-model AI optimization system classifies products into behavioral clusters (e.g., by category taxonomy or brand patterns) and trains separate models for each group.

This approach helps address class imbalance. Each model is trained on a homogeneous data subset, which yields a cleaner signal-to-noise ratio. It also reduces high-dimensional data complexity, as feature distributions become narrower and more predictable within a specific category.

Lastly, such models can be fine-tuned to category-specific KPIs, latency tradeoffs, or budget pacing rules, offering greater future-proofing.
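Routing in such a multi-model setup can be as simple as a lookup from category to cluster to model, with a global model as the fallback for unmapped or brand-new categories. In this Python sketch, the category names, cluster names, and constant-CTR stand-in models are hypothetical; each real entry would be a model trained only on its cluster's data.

```python
from typing import Callable, Dict

# Assumptions: categories, clusters, and the constant-CTR lambdas are hypothetical
# stand-ins for models trained per behavioral cluster.
Model = Callable[[dict], float]


class ClusterRouter:
    def __init__(self, category_to_cluster: Dict[str, str],
                 cluster_models: Dict[str, Model], fallback: Model):
        self.category_to_cluster = category_to_cluster
        self.cluster_models = cluster_models
        self.fallback = fallback  # global model for unmapped or brand-new categories

    def predict_ctr(self, impression: dict) -> float:
        cluster = self.category_to_cluster.get(impression.get("category", ""))
        model = self.cluster_models.get(cluster, self.fallback)
        return model(impression)


router = ClusterRouter(
    category_to_cluster={"sneakers": "fashion", "laptops": "electronics"},
    cluster_models={"fashion": lambda imp: 0.006, "electronics": lambda imp: 0.002},
    fallback=lambda imp: 0.004,
)
print(router.predict_ctr({"category": "sneakers"}))  # 0.006 (fashion model)
print(router.predict_ctr({"category": "garden"}))    # 0.004 (global fallback)
```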

Automated model retraining pipelines

The sheer volume of changing data points renders manual model retraining absolutely ineffective. That’s why modern RTB platforms adopt self-healing ML systems. Automated pipelines:

- Monitor model performance in real time (e.g., AUC, CTR lift, cost per action).

- Automatically detect degradation due to drift or data distribution changes.

- Trigger on-demand retraining or model replacement using recent data windows or pre-trained category-specific baselines.

This approach doesn’t just mitigate concept drift — it also helps solve the cold start problem. When a new campaign launches, the system can pull from a repository of pre-trained models, fine-tune on limited data, and bootstrap the learning process autonomously.
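The detect-and-trigger logic above can be expressed as a small policy function. This Python sketch is illustrative only: the 5% relative AUC drop, the 10,000-impression minimum, and the action names are hypothetical thresholds, not our client's actual rules.

```python
from dataclasses import dataclass
from typing import Optional

# Assumptions: thresholds and action names are hypothetical policy choices.


@dataclass
class ModelHealth:
    baseline_auc: Optional[float]  # None for a brand-new campaign with no model yet
    recent_auc: Optional[float]
    recent_samples: int


def next_action(h: ModelHealth, max_relative_drop=0.05, min_samples=10_000) -> str:
    if h.baseline_auc is None:
        # Cold start: begin from a pre-trained category baseline, then fine-tune.
        return "bootstrap_from_category_baseline"
    if h.recent_auc is None or h.recent_samples < min_samples:
        return "keep"  # not enough fresh data to judge degradation reliably
    drop = (h.baseline_auc - h.recent_auc) / h.baseline_auc
    if drop > max_relative_drop:
        return "retrain_on_recent_window"
    return "keep"


print(next_action(ModelHealth(baseline_auc=0.80, recent_auc=0.74, recent_samples=50_000)))
# retrain_on_recent_window (a 7.5% relative drop)
```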

In the case of our client, automated model retraining pipelines enabled:

- Gradual model rollouts with traffic splitting and live A/B testing

- Performance-based decisions for model swaps — not just on schedule but based on actual campaign-level KPIs

- Zero manual ops even across thousands of active models, leading to greater operational efficiencies
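Gradual rollouts usually depend on a deterministic traffic split, so that each user consistently sees the same model while the candidate's share is ramped up. A minimal Python sketch, assuming users are split by ID with a hypothetical experiment salt:

```python
import hashlib

# Assumptions: splitting by user ID and the salt "ctr-model-v2" are illustrative choices.


def assign_variant(user_id: str, candidate_share: float, salt: str = "ctr-model-v2") -> str:
    """Return 'candidate' for roughly `candidate_share` of users, else 'control'."""
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be in [0, 1]")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # 48 bits keep the division exact in a float, so bucket is always < 1.0.
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "candidate" if bucket < candidate_share else "control"


share = sum(assign_variant(f"user_{i}", 0.10) == "candidate" for i in range(10_000)) / 10_000
print(share)  # close to 0.10
```

Because each user's bucket is fixed, raising candidate_share from 0.1 to 0.5 keeps every user who was already on the candidate model there, which keeps the A/B comparison clean.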

Ultimately, automated retraining turns RTB modeling from a brittle, manual process into a robust feedback loop — where models continuously self-adapt, learn, and optimize.

Conclusion

RTB bidding for marketplaces isn’t just another machine learning challenge — it’s a multi-dimensional problem that spans economics, system design, model engineering, and real-time constraints. The complexity is undeniable, from predicting fair prices to handling concept drift, cold starts, and massive feature spaces.

But it’s also solvable. The difference between theoretical success and real-world results lies in the implementation details — how you structure your model architectures, monitor performance, automate retraining, and scale decision-making across thousands of campaigns. This is where practical ML expertise and system-level thinking make all the difference.

For a deeper dive, check out our detailed case study showcasing how a leading European marketplace with 128,000 merchants implemented this approach to achieve remarkable results, including a 27% reduction in CPC, an 18% CTR lift, and a 45% reduction in operating costs.