Data is the backbone of enterprise infrastructure, and the number of data tools organizations use grows every year.

Managing, processing, and extracting value from large data volumes is pivotal, especially as companies shift to AI-based workflow automation (with 70% of data teams using AI) and advanced analytics that hinge on high-quality data.

Scalable, cost-effective data pipelines have become a critical enabler of automation, personalization, and long-term competitiveness. And the impact is measurable:

- Back Market reduced change data capture (CDC) costs by 90% and cut data processing time in half by simplifying its data pipeline and migrating to BigQuery.

- Burberry built a real-time, event-driven data pipeline that reduced clickstream latency by 99%, enabling near-real-time analytics and personalization.

- Ahold Delhaize, a food retail group, introduced a self-service data ingestion and orchestration platform that now runs over 1,000 ingestion jobs per day, accelerating AI-driven forecasting and personalization initiatives.

Optimizing data pipeline performance and infrastructure costs starts with understanding the key components of a high-performance data pipeline and the technical decisions engineering teams make at each step of data processing.

This guide walks through the core components of a modern data pipeline that enables AI-driven analytics, backed by real-world use cases and technical decision points your team should consider.

What is a modern data pipeline?

A data pipeline is a structured set of processes and technologies that automate data movement, transformation, and processing.

A modern data pipeline makes raw data, such as server logs, sensor readings, or transaction history arriving in various formats, usable for storage, analysis, reporting, and AI-based data analysis. It scales up and down as data volumes fluctuate, so performance stays consistent under changing loads.

To understand how data moves through each step of a data pipeline, let’s examine how a retailer could use one to collect, process, and apply customer data to plan marketing campaigns and improve retention.

Step 1. Ingestion: Collecting sales transactions from point-of-sale (POS) systems.

Step 2. Transformation: Cleaning the data and merging it with inventory records.

Step 3. Loading: Loading the processed data into a cloud-based warehouse.

Step 4. Application: Querying customer data to model a marketing campaign.
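To make the four steps concrete, here is a minimal Python sketch. The sample records, field names, and the in-memory SQLite database (standing in for a cloud warehouse) are illustrative assumptions, not a production design.

```python
import sqlite3

# Hypothetical sample data standing in for a POS feed and an inventory system.
POS_TRANSACTIONS = [
    {"txn_id": "t1", "customer_id": "c1", "sku": "A100", "amount": "19.99"},
    {"txn_id": "t2", "customer_id": "c2", "sku": "B200", "amount": " 5.00"},
    {"txn_id": "t2", "customer_id": "c2", "sku": "B200", "amount": " 5.00"},  # duplicate
    {"txn_id": "t3", "customer_id": None, "sku": "A100", "amount": "19.99"},  # missing customer
]
INVENTORY = {"A100": "Outerwear", "B200": "Accessories"}  # sku -> product category


def ingest():
    """Step 1: collect raw sales transactions (here, from an in-memory list)."""
    return list(POS_TRANSACTIONS)


def transform(rows):
    """Step 2: clean the data and merge it with inventory records."""
    seen, clean = set(), []
    for row in rows:
        if row["customer_id"] is None or row["txn_id"] in seen:
            continue  # drop incomplete and duplicate transactions
        seen.add(row["txn_id"])
        category = INVENTORY.get(row["sku"], "Unknown")
        clean.append((row["txn_id"], row["customer_id"], category, float(row["amount"])))
    return clean


def load(conn, rows):
    """Step 3: load processed rows into the warehouse (SQLite as a stand-in)."""
    conn.execute(
        "CREATE TABLE sales (txn_id TEXT, customer_id TEXT, category TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", rows)


def query_for_campaign(conn):
    """Step 4: total spend per customer and category, an input for campaign targeting."""
    return conn.execute(
        "SELECT customer_id, category, SUM(amount) FROM sales "
        "GROUP BY customer_id, category ORDER BY customer_id"
    ).fetchall()


conn = sqlite3.connect(":memory:")
load(conn, transform(ingest()))
result = query_for_campaign(conn)
print(result)
```

In a real pipeline, each function would be a separate job reading from and writing to external systems, but the division of responsibilities stays the same.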

This is a simplified but effective way to conceptualize the components of a typical enterprise data pipeline.

From business intelligence to advanced analytics: Embedding AI into data pipelines

A modern, reliable data pipeline is also a critical component of machine learning operations (MLOps) and AI-driven analytics.

While business intelligence tools are designed to aggregate historical data and support reporting, AI systems depend on pipelines that continuously supply high-quality, timely data to models operating in production.

In a BI context, delays and minor data inconsistencies often result in nothing more than a stale dashboard. In AI-driven solutions, the same issues can degrade model performance, introduce bias, or trigger incorrect decisions.

As a result, data pipelines evolve from linear data flows into learning systems with feedback loops, where data quality, freshness, and lineage directly influence business outcomes.

To maintain efficient data flow that enables AI capabilities, engineers increasingly develop custom APIs and automated ingestion mechanisms that feed models directly from governed data sources. This approach reduces manual intervention, minimizes data inconsistencies, and ensures that AI systems operate on trusted, production-grade data rather than ad hoc extracts.

To support AI-driven workflows, organizations should choose data pipeline architectures that balance governance, flexibility, and performance. Within that choice, the distinction between ETL and ELT is a critical design decision.

Data pipeline types: ETL vs ELT

The aim of the data pipeline is to bring data from the source to storage for further analysis. But the flow can vary depending on data types (structured, unstructured, and semi-structured), data ingestion speed, and analytics requirements.

For that reason, data pipelines can be of two main types: extract, transform, load (ETL) and extract, load, transform (ELT). They differ in the order of data processing: ETL workloads first clean and preprocess data before loading it into the data warehouse or a database, whereas ELT workloads first load extracted data into the destination data storage and then clean and preprocess it when needed.
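The difference in ordering can be illustrated with a small Python sketch that runs the same cleanup both ways. It assumes hypothetical order records and uses SQLite as a stand-in for the destination, relying on SQLite’s built-in JSON functions (available in most modern Python builds). In the ELT path, the raw payload is loaded untouched and the transformation is expressed in SQL and executed inside the destination.

```python
import json
import sqlite3

# Hypothetical raw records extracted from a source system.
RAW = [
    {"order_id": 1, "country": " us ", "total": "120.50"},
    {"order_id": 2, "country": "DE", "total": "80"},
]


def clean(record):
    """Transformation: trim and upper-case country codes, cast totals to numbers."""
    return (record["order_id"], record["country"].strip().upper(), float(record["total"]))


def run_etl(conn):
    # ETL: transform inside the pipeline first, then load only the cleaned rows.
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, country TEXT, total REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", [clean(r) for r in RAW])


def run_elt(conn):
    # ELT: load raw JSON as-is, then transform inside the destination with SQL.
    conn.execute("CREATE TABLE orders_raw (payload TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?)", [(json.dumps(r),) for r in RAW])
    conn.execute("""
        CREATE VIEW orders_elt AS
        SELECT json_extract(payload, '$.order_id') AS order_id,
               UPPER(TRIM(json_extract(payload, '$.country'))) AS country,
               CAST(json_extract(payload, '$.total') AS REAL) AS total
        FROM orders_raw
    """)


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_rows = conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
print(etl_rows == elt_rows)
```

Both paths produce the same cleaned rows, but only the ELT path keeps the raw payload, so the transformation can later be changed and re-run without extracting the data again.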

ETL pipelines explained

Traditional ETL pipelines process structured data and load it into a data warehouse or lakehouse, such as Snowflake, BigQuery, or Databricks. Data and business intelligence engineers can then query already transformed data for analysis.

Newer patterns such as reverse ETL and AI ETL extend traditional ETL pipelines. Reverse ETL syncs insights from the data warehouse back into operational systems, such as CRM or ERP, so teams can act on data-driven decisions quickly. AI ETL, in turn, accelerates the traditional ETL pipeline by automating data transformation, schema mapping, and data quality management.

With the help of change data capture (CDC) services, ETL pipelines continuously receive up-to-date information about changes in the source systems’ databases (inserts, deletes, and updates).
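Conceptually, a CDC consumer replays the source database’s change log against a target table in log order. The sketch below assumes a simplified, hypothetical event format (a log sequence number, an operation, a primary key, and the new row image); real CDC tools define their own event schemas, and this is not any specific tool’s API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ChangeEvent:
    """A simplified, hypothetical CDC event: one row change read from the source log."""
    lsn: int                    # position in the source log; events apply in this order
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the changed row
    row: Optional[dict] = None  # new row image (None for deletes)


def apply_changes(target: dict, events: List[ChangeEvent], last_applied_lsn: int = 0) -> int:
    """Apply change events to a target table (a dict keyed by primary key).

    Events at or below last_applied_lsn are skipped, so replaying the same
    batch after a restart does not apply any change twice.
    """
    for event in sorted(events, key=lambda e: e.lsn):
        if event.lsn <= last_applied_lsn:
            continue
        if event.op in ("insert", "update"):
            target[event.key] = event.row  # upsert the latest row image
        elif event.op == "delete":
            target.pop(event.key, None)
        else:
            raise ValueError(f"unknown op: {event.op}")
        last_applied_lsn = event.lsn
    return last_applied_lsn  # checkpoint to persist alongside the target


replica = {}
events = [
    ChangeEvent(1, "insert", 10, {"id": 10, "status": "new"}),
    ChangeEvent(2, "insert", 11, {"id": 11, "status": "new"}),
    ChangeEvent(3, "update", 10, {"id": 10, "status": "shipped"}),
    ChangeEvent(4, "delete", 11),
]
checkpoint = apply_changes(replica, events)
checkpoint = apply_changes(replica, events, checkpoint)  # replay changes nothing
print(replica, checkpoint)
```

Tracking the last applied position is what lets incremental loads resume safely instead of re-copying whole tables.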

Business benefits of ETL:

- Strong data governance and schema control

- High data quality and consistency for reporting

- Predictable performance for BI workloads

- Easier auditing, lineage tracking, and compliance

- Lower risk of inconsistent or misinterpreted metrics

ELT pipelines explained

ELT jobs extract and load data directly into a data warehouse, data lake, or lakehouse, where transformations are applied later using scalable compute resources.

This approach allows teams to store raw, unmodified data and postpone transformation decisions until they need to perform analysis or model training. ELT pipelines are particularly effective for handling semi-structured and unstructured data, such as logs, events, text, images, and sensor data.

Since modern enterprises increasingly rely on these data types for advanced analytics and AI use cases, ELT pipelines are gaining traction. They enable faster experimentation, support evolving data models, and allow multiple teams to apply different transformations to the same underlying data without re-ingestion.
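The schema-on-read idea behind ELT can be sketched in a few lines of Python: raw JSON events are stored once, exactly as ingested, and two teams derive different datasets from them without re-ingesting anything. The event fields and both transformations are hypothetical.

```python
import json

# Raw, semi-structured events kept exactly as ingested (hypothetical clickstream).
RAW_EVENTS = [
    '{"user": "u1", "type": "view", "item": "sku-1", "ts": "2024-05-01T10:00:00"}',
    '{"user": "u1", "type": "purchase", "item": "sku-1", "price": 30.0, "ts": "2024-05-01T10:05:00"}',
    '{"user": "u2", "type": "view", "item": "sku-2", "ts": "2024-05-01T11:00:00", "device": "mobile"}',
]


def read(raw_lines):
    """Schema-on-read: structure is applied only when the data is queried."""
    return [json.loads(line) for line in raw_lines]


def revenue_by_user(events):
    """Analytics team's transformation: total purchase revenue per user."""
    totals = {}
    for e in events:
        if e["type"] == "purchase":
            totals[e["user"]] = totals.get(e["user"], 0.0) + e["price"]
    return totals


def view_features(events):
    """ML team's transformation: per-user view counts and device, tolerating missing fields."""
    features = {}
    for e in events:
        f = features.setdefault(e["user"], {"views": 0, "device": "unknown"})
        if e["type"] == "view":
            f["views"] += 1
        f["device"] = e.get("device", f["device"])
    return features


events = read(RAW_EVENTS)
revenue = revenue_by_user(events)
features = view_features(events)
print(revenue, features)
```

Note that the events do not share a fixed schema (only some carry `price` or `device`); each consumer decides how to interpret the fields it needs.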

Business benefits of ELT:

- Greater flexibility for analytics and machine learning

- Faster time to insight through on-demand transformations

- Lower data loss risk by preserving the raw source data

- Scalable performance using cloud-native compute

The comparison table below summarizes the key distinctions between ETL and ELT and covers the possibility of using a hybrid approach.

ETL vs ELT vs hybrid pipeline

| Dimension | ETL | ELT | Hybrid (ETL + ELT) |
|---|---|---|---|
| Transformation timing | Before loading into storage | After loading into storage | Both, depending on the use case |
| Primary data types | Structured, relational | Semi-structured and unstructured | Mixed |
| Schema strategy | Schema-on-write | Schema-on-read | Dual |
| Compute location | ETL engine | Data warehouse/lakehouse | ETL tools + warehouse/lakehouse |
| Governance & compliance | Strong, centralized | Requires additional controls | Strong with flexibility |
| Data freshness | Near-real-time with CDC | Real-time to near-real-time | Optimized per workload |
| Cost profile | Predictable, transformation-heavy | Storage-heavy, elastic compute | Balanced |
| BI reporting | Excellent | Good | Excellent |
| AI/ML feature engineering | Limited flexibility | High flexibility | High flexibility with guardrails |
| Experimentation speed | Slower | Fast | Fast where needed |
| Typical tools | Informatica, Talend, AWS Glue | Fivetran, Matillion, Airbyte, MuleSoft, Azure Data Factory | A combination of both |

When to choose each approach

- Choose ETL for financial reporting, compliance-driven analytics, and stable KPIs where data correctness and auditability matter most.

- Opt for ELT for AI-heavy workloads, feature engineering, exploratory analytics, and large-scale processing of unstructured data.

- Adopt a hybrid approach when you need ETL for governed reporting and ELT for data science and machine learning.

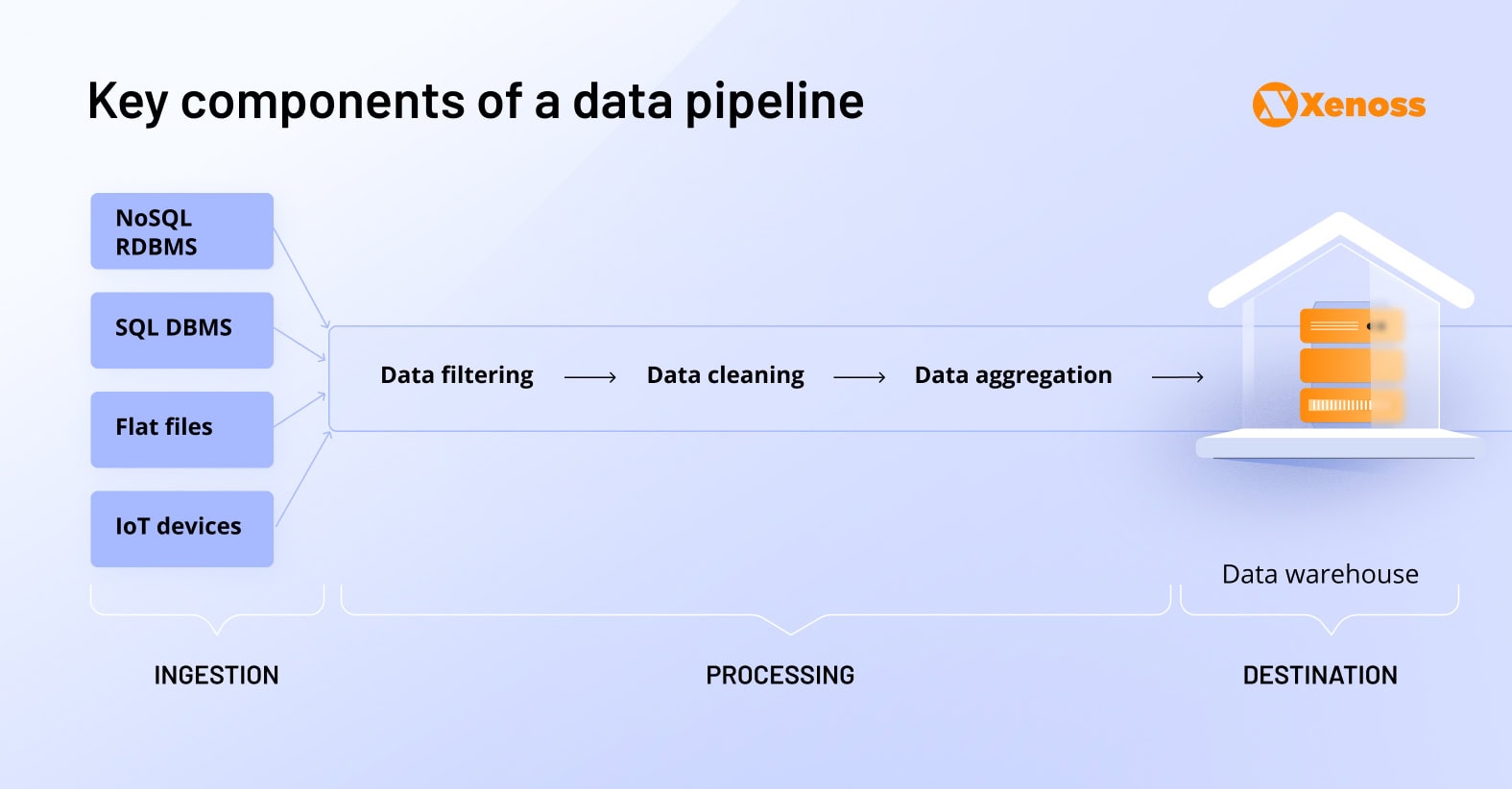

Key components of a data pipeline

In practice, modern data pipelines include more building blocks than these four steps, because they must manage input data that often arrives in different formats (CSV, JSON, XML, Parquet, among others) from several sources.

Let’s break down the key data pipeline components.

Data sources

Data pipelines process inputs from different sources, including relational and NoSQL databases, data warehouses, APIs, file systems, and third-party platforms (e.g., social media).

If a pipeline ingests data from multiple sources, discrepancies in data type (structured or unstructured), format, and field definitions across sources are likely.

To ensure consistent data flow across the pipeline, data engineers use source selection and standardization techniques such as reliability scoring, relevance filtering, schema enforcement, and normalization.
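Schema enforcement and normalization often come down to mapping each source’s fields onto one target schema and rejecting records that don’t fit. A minimal sketch, assuming two hypothetical sources (a CRM export and a web app) that use different field names and date formats:

```python
from datetime import datetime

# Hypothetical per-source mappings: source field name -> target field name.
FIELD_MAP = {
    "crm": {"email_address": "email", "signup": "signup_date"},
    "web": {"email": "email", "created_at": "signup_date"},
}
DATE_FORMATS = {"crm": "%d/%m/%Y", "web": "%Y-%m-%dT%H:%M:%S"}
REQUIRED = {"email": str, "signup_date": str}  # target schema: field -> expected type


def normalize(source: str, record: dict) -> dict:
    """Rename fields, enforce the target schema, and standardize values."""
    out = {target: record[src] for src, target in FIELD_MAP[source].items() if src in record}
    # Schema enforcement: required fields must exist and have the expected type.
    missing = [field for field in REQUIRED if field not in out]
    if missing:
        raise ValueError(f"{source}: missing required fields {missing}")
    for field, expected in REQUIRED.items():
        if not isinstance(out[field], expected):
            raise TypeError(f"{source}: {field} must be {expected.__name__}")
    # Normalization: one casing for emails, one ISO 8601 format for dates.
    out["email"] = out["email"].strip().lower()
    parsed = datetime.strptime(out["signup_date"], DATE_FORMATS[source])
    out["signup_date"] = parsed.date().isoformat()
    return out


print(normalize("crm", {"email_address": " Ana@Example.com ", "signup": "03/02/2024"}))
print(normalize("web", {"email": "bo@example.com", "created_at": "2024-02-03T09:30:00"}))
```

Records that raise an error would typically be routed to a quarantine table for review rather than silently dropped.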

A “good” source should also score high across data quality dimensions:

- Accuracy: Data correctly represents the real-world value or event.

- Completeness: All required data is present with no missing values.

- Consistency: Data is uniform across different systems or datasets.

- Timeliness: Data is up-to-date and available when needed.

- Validity: Data conforms to defined formats, rules, or standards.

- Uniqueness: No duplicates exist; each record is distinct.

- Integrity: Relationships among data elements are correctly maintained.
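Several of these dimensions can be measured automatically on every batch. A minimal sketch covering completeness, uniqueness, and validity; the records, the key field, and the simplified email rule are assumptions for illustration, and uniqueness is approximated as distinct key values divided by total records.

```python
import re
from typing import Callable, Dict, List

# Simplified validity rule (assumption): "name@domain.tld" with no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_email(value) -> bool:
    return isinstance(value, str) and EMAIL_RE.match(value) is not None


def quality_report(records: List[dict], key: str, required: List[str],
                   validators: Dict[str, Callable]) -> Dict[str, float]:
    """Score a batch of records from 0.0 to 1.0 on three quality dimensions."""
    n = len(records)
    if n == 0:
        return {"completeness": 1.0, "uniqueness": 1.0, "validity": 1.0}
    # Completeness: share of records with every required field populated.
    complete = sum(all(r.get(f) not in (None, "") for f in required) for r in records)
    # Uniqueness: distinct key values relative to the number of records.
    distinct = len({r.get(key) for r in records})
    # Validity: share of records whose fields pass every validation rule.
    valid = sum(all(check(r.get(f)) for f, check in validators.items()) for r in records)
    return {"completeness": complete / n, "uniqueness": distinct / n, "validity": valid / n}


records = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": "not-an-email", "country": "DE"},
    {"id": 2, "email": "b@example.com", "country": ""},
    {"id": 3, "email": None, "country": "FR"},
]
report = quality_report(records, key="id", required=["id", "email", "country"],
                        validators={"email": is_email})
print(report)
```

A pipeline might compare each score against a threshold and block or quarantine batches that fall short.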

Data ingestion

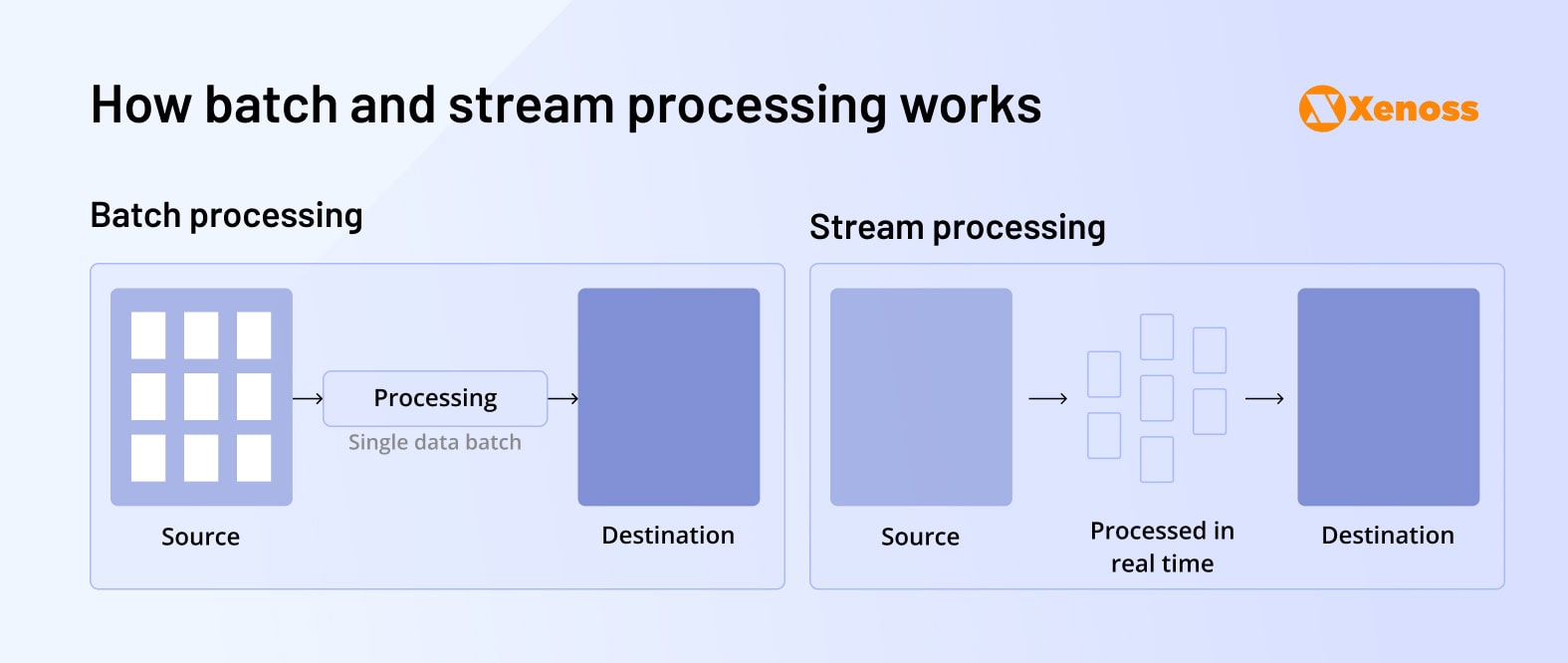

Data ingestion is the process of moving data from its source into the pipeline. It can happen in two primary ways: batch processing and stream processing.

Batch processing

Batch processing handles chunks of data, or batches, at set intervals. It suits pipelines for projects that do not require real-time processing.

For example, an insurance enterprise can use batch processing to identify suspicious claims or classify incidents by severity. This method enables ingesting large data volumes from claim records and the book of policies.
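A minimal batch job sketch in Python: records are processed in fixed-size chunks, and each chunk passes through a screening rule. The claim and policy fields and the “amount exceeds coverage limit” rule are hypothetical stand-ins for real fraud or severity logic.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batches(records: Iterable, size: int) -> Iterator[list]:
    """Yield fixed-size chunks so each step works on a bounded amount of data."""
    it = iter(records)
    while chunk := list(islice(it, size)):
        yield chunk


def flag_suspicious(batch: list, policies: dict) -> List[str]:
    """Hypothetical rule: flag claims that exceed the policy's coverage limit."""
    return [c["claim_id"] for c in batch
            if c["amount"] > policies[c["policy_id"]]["coverage_limit"]]


def run_nightly_job(claims: Iterable, policies: dict, batch_size: int = 1000) -> List[str]:
    """Process all claims accumulated since the last run, one batch at a time."""
    flagged = []
    for batch in batches(claims, batch_size):
        flagged.extend(flag_suspicious(batch, policies))
    return flagged


policies = {"P1": {"coverage_limit": 5000}, "P2": {"coverage_limit": 20000}}
claims = [
    {"claim_id": f"C{i}", "policy_id": "P1" if i % 2 else "P2", "amount": i * 1000}
    for i in range(10)
]
flagged = run_nightly_job(claims, policies, batch_size=3)
print(flagged)  # ['C7', 'C9']
```

A scheduler would trigger a job like this at a fixed interval, for example nightly, which is what distinguishes batch from stream processing.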

Stream processing

Stream processing is an ingestion technique that enables real-time data processing. It is typically used for real-time finance analytics, media recommendation engines, and traffic monitoring.
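The key difference from batch processing is that results update as each event arrives. A minimal sketch of a tumbling-window aggregation, a common stream processing pattern, in plain Python. It assumes events arrive in timestamp order as (epoch seconds, account, amount) tuples; in production, the stream would come from a platform such as Apache Kafka, and the processor would also handle late and out-of-order events, which this sketch does not.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple

Event = Tuple[int, str, float]  # (epoch_seconds, account_id, amount): hypothetical shape


def tumbling_window_totals(stream: Iterable[Event], window_s: int) -> Iterator[Tuple[int, dict]]:
    """Emit per-account totals each time a fixed, non-overlapping time window closes."""
    current_window, totals = None, defaultdict(float)
    for ts, account, amount in stream:
        window = ts - ts % window_s  # start of the window this event belongs to
        if current_window is not None and window != current_window:
            yield current_window, dict(totals)  # previous window is complete
            totals.clear()
        current_window = window
        totals[account] += amount
    if current_window is not None:
        yield current_window, dict(totals)  # flush the last open window


events = [(0, "a", 10.0), (30, "b", 5.0), (59, "a", 1.0), (60, "a", 2.0), (125, "b", 4.0)]
for window_start, totals in tumbling_window_totals(iter(events), window_s=60):
    print(window_start, totals)
```

Because the function is a generator, it consumes one event at a time and never needs the full dataset in memory.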

Nationwide Building Society, the largest building society in the United Kingdom, created a real-time data pipeline to reduce back-end system load, comply with regulations, and handle increasing transaction volumes.

The data engineering team built the architecture on Apache Kafka, CDC, the Confluent platform, and microservices.

Data processing

At the processing stage, data engineers verify input accuracy, filter out incorrect data, and check format consistency across data points.

For advanced analytics with AI/ML capabilities, engineers can use modern data processing tools such as Polars, a DataFrame library written in Rust. Instead of processing data row by row, Polars stores and processes data in a columnar format, which is faster and more memory-efficient for the analytical operations common in ML workflows. Such tools also parallelize work across all available CPU cores to speed up preprocessing of large datasets.

Using such tools, engineers:

- Analyze the incoming data to identify outliers, missing values, skewed distributions, or inconsistencies that could negatively impact downstream analytics or model training.

- Clean and standardize the data by normalizing numerical values, encoding categorical variables, aligning timestamps, and reconciling schema differences across sources. For AI workloads, these steps are critical, as models are highly sensitive to data inconsistencies.

- Enrich the data and prepare it for consumption by analytics engines or machine learning pipelines. Enrichment may involve joining datasets, adding derived features, aggregating granular events, or integrating external reference data.
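The three steps above can be sketched in plain Python. The field names, the 1.5 * IQR outlier rule, median imputation, min-max scaling, and the store-to-region lookup are all hypothetical choices for illustration. A tool like Polars would express the same steps as vectorized, columnar operations rather than Python loops.

```python
import statistics
from datetime import datetime, timezone


def profile(values: list) -> dict:
    """Analyze: count missing values and flag outliers with the 1.5 * IQR rule."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)  # needs at least two values
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "missing": len(values) - len(present),
        "outliers": [v for v in present if v < low or v > high],
    }


def clean_and_enrich(rows: list, store_regions: dict) -> list:
    """Clean, standardize, and enrich rows for analytics or model training."""
    amounts = [r["amount"] for r in rows if r["amount"] is not None]
    median = statistics.median(amounts)
    lo, hi = min(amounts), max(amounts)
    channels = sorted({r["channel"] for r in rows})
    out = []
    for r in rows:
        amount = r["amount"] if r["amount"] is not None else median  # impute missing
        out.append({
            # Normalize: min-max scale amounts into [0, 1].
            "amount_scaled": (amount - lo) / (hi - lo) if hi > lo else 0.0,
            # Encode: one-hot columns for the categorical sales channel.
            **{f"channel_{c}": int(r["channel"] == c) for c in channels},
            # Align timestamps: parse ISO 8601 with offsets and convert to UTC.
            "ts_utc": datetime.fromisoformat(r["ts"]).astimezone(timezone.utc).isoformat(),
            # Enrich: join reference data on store_id.
            "region": store_regions.get(r["store_id"], "unknown"),
        })
    return out


print(profile([10, None, 12, 500, 11, 12, 13]))
rows = [
    {"amount": 10.0, "channel": "web", "ts": "2024-05-01T12:00:00+02:00", "store_id": "s1"},
    {"amount": None, "channel": "store", "ts": "2024-05-01T10:30:00+00:00", "store_id": "s2"},
    {"amount": 30.0, "channel": "web", "ts": "2024-05-01T06:00:00-04:00", "store_id": "s9"},
]
prepared = clean_and_enrich(rows, {"s1": "EU", "s2": "UK"})
print(prepared)
```

In practice, outliers found during profiling would be capped or removed before scaling, since a single extreme value can compress all other scaled values toward zero.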

Data transformation

At this stage, raw data needs to be transformed into a unified structure and format to become usable across systems. Transformation ensures consistency, simplifies querying, and enables cross-platform analysis.

This step is especially critical when consolidating data from disparate sources with different schemas or structures.

Here are a few industry-specific examples of data transformation.

- Business intelligence: Raw data is aggregated, filtered, and shaped into structured dashboards and reporting views.

- Machine learning: Data is encoded, normalized, and structured to train models effectively and improve prediction accuracy.

- Cloud migration: Moving from on-premises systems to cloud lakehouses such as Snowflake and Databricks often requires format conversion, field mapping, and restructuring to ensure compatibility.

Whether for analytics, modeling, or storage, transformation makes raw data analysis-ready.

Data storage

Once transformed, unified data needs to be stored in a destination system. Depending on the use case, this is typically an online transaction processing (OLTP) database, a data lake, a data warehouse, or a data lakehouse.

OLTP

An OLTP system supports high-volume, low-latency transactional workloads. It prioritizes fast inserts, updates, and deletes, enabling applications to handle concurrent user interactions while maintaining strong consistency guarantees.

OLTP databases typically store highly structured data and enforce strict schemas to ensure data integrity. While they are not optimized for analytical queries, they act as the primary source of truth for most enterprise systems.

Modern data pipelines often rely on CDC mechanisms to extract incremental updates from OLTP systems without impacting application performance, keeping analytical and AI systems aligned with real-time operational data.

Data warehouse

A data warehouse is a centralized repository optimized for analytical workloads and business intelligence. It stores structured, curated data that has been cleaned, transformed, and organized for fast querying and reporting.

By enforcing schema-on-write and relying on precomputed aggregations, data warehouses provide predictable performance and consistency for dashboards, financial reporting, and executive KPIs.

Recent advancements have expanded their capabilities to handle semi-structured data and support machine learning workloads, but their primary strength remains high-performance analytics on well-defined datasets.

Data lake

A data lake is a scalable storage system designed to hold large volumes of raw, semi-structured, and unstructured data at low cost. Unlike data warehouses, data lakes apply schema-on-read, allowing teams to store data first and define structure later based on analytical or machine learning needs.

Such flexibility makes data lakes particularly valuable for exploratory analytics, log processing, and training machine learning models on historical data. However, without governance mechanisms, data lakes can become challenging to manage. To address this, modern data lakes increasingly incorporate metadata layers and data catalogs to improve reliability, discoverability, and query performance.

Data lakehouse

A data lakehouse combines the best of both worlds: the cost-efficient storage of a data lake for raw and unstructured data, and the atomicity, consistency, isolation, and durability (ACID) guarantees of a data warehouse. The latter is made possible by open table formats (OTFs) such as Apache Iceberg, Apache Hudi, and Delta Lake.

With the help of OTFs, organizations can store large amounts of data while standardizing data querying and enabling data engineers to run BI and ML jobs using the same data storage. Therefore, a data lakehouse is a particularly suitable data repository for large-scale data analytics.

How to choose the right data storage

There is no cookie-cutter approach to choosing the right data storage platform: the best approach depends on many variables.

- The purpose of the data (analytics, machine learning, real-time processing).

- The type and structure of ingested data.

- Processing throughput requirements. High-load AdTech data pipelines, for example, have to process hundreds of thousands of queries per second.

- The geographic scale of data distribution.

- Additional performance, governance, or integration needs.

Xenoss engineers find it helpful to break data storage selection requirements into “functional” and “non-functional”.

Functional requirements define what a system should do, including the specific behaviors, operations, and features it must support to fulfill business needs.

Functional requirements

| Criteria | Questions to ask |
|---|---|
| Size | - How large are the entities to store? - Will the entities be stored in a single document or split across different tables or collections? |
| Format | What type of data is the organization storing? |
| Structure | Do you plan on partitioning your data? |
| Data relationships | - What relationships do data items have: One-to-one vs one-to-many? - Are relationships meaningful for interpreting the data your organization is storing? - Does the data you are storing require enrichment from third-party datasets? |
| Concurrency | - What concurrency mechanism will the organization use to upload and synchronize data? - Does the pipeline support optimistic concurrency controls? |
| Data lifecycle | - Do you manage write-once, read-many data? - Can the data be moved to cold or cool storage? |
| Need for specific features | Does the organization need specific features like indexing, full-text search, schema validation, or others? |

Non-functional requirements describe how a system should perform, focusing on attributes like performance, scalability, reliability, and usability rather than specific behaviors.

Non-functional requirements

| Criteria | Questions to ask |
|---|---|
| Performance | - What are your data performance requirements? - What data ingestion and processing rates do you expect? - What is your target response time for data querying and aggregation? |
| Scalability | - What scale do you expect the data store to reach? - Are your workloads read-heavy or write-heavy? |
| Reliability | - What level of fault tolerance does the data pipeline require? - What backup and data recovery capabilities does the organization need? |
| Replication | - Will your organization’s data be distributed across multiple regions? - What data replication features does the data pipeline need? |
| Limits | Do your data stores have limits that could hinder the scalability and throughput of your data pipeline? |

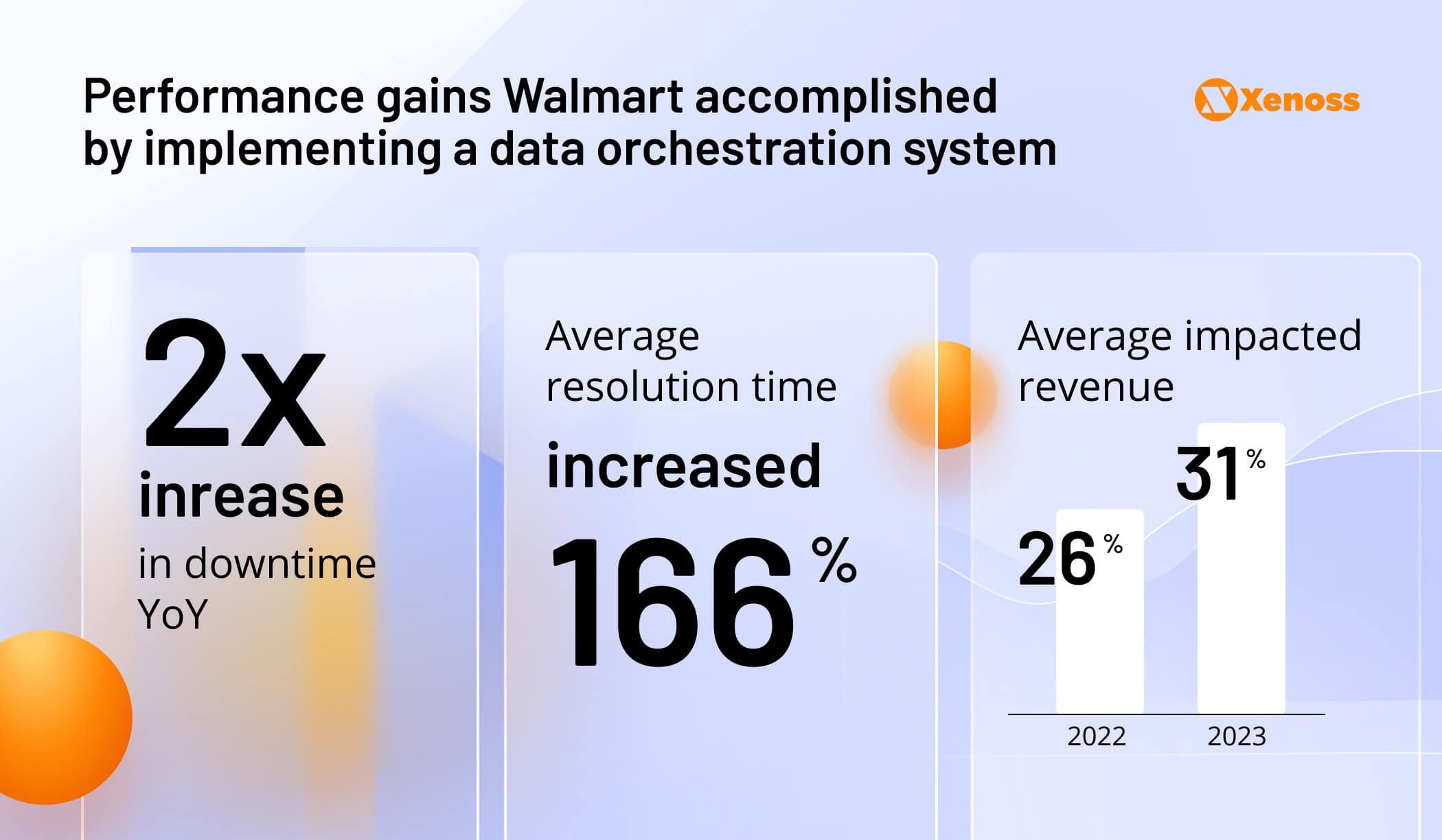

Data orchestration

Data orchestration coordinates the tasks in a data pipeline so that data moves between systems in the right order and reaches every domain team that needs it.

Consider a retailer that collects customer orders from its website, inventory data from its warehouses, and shipping updates from delivery partners. Orchestration connects these sources in a single pipeline: it pulls the order data, checks inventory in real time, updates shipping status, and sends everything to a central dashboard.

This way, a retailer can track the entire customer journey without manually stitching together data from different systems.
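At its core, an orchestrator runs tasks in dependency order and decides what happens when a task fails. A minimal sketch using Python’s standard-library `graphlib` (Python 3.9+); the task names mirror the retailer example and are hypothetical, and dedicated orchestration platforms add scheduling, parallel execution, and monitoring on top of this idea.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
PIPELINE = {
    "pull_orders": set(),
    "pull_inventory": set(),
    "pull_shipping_updates": set(),
    "check_inventory": {"pull_orders", "pull_inventory"},
    "update_shipping_status": {"check_inventory", "pull_shipping_updates"},
    "publish_dashboard": {"update_shipping_status"},
}


def run(pipeline: dict, tasks: dict, max_retries: int = 2) -> list:
    """Run tasks in dependency order, retrying each failing task up to max_retries times."""
    completed = []
    for name in TopologicalSorter(pipeline).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # stop here so downstream tasks never run on missing inputs
        completed.append(name)
    return completed


# Placeholder task bodies; real tasks would call source systems and the dashboard.
tasks = {name: (lambda name=name: print(f"running {name}")) for name in PIPELINE}
order = run(PIPELINE, tasks)
```

Declaring dependencies instead of hard-coding a sequence means new sources or steps can be added without reordering the whole workflow.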

Leading enterprises such as Walmart have introduced similar orchestration workflows to connect data points in real time.

In finance, JP Morgan implemented an end-to-end data orchestration solution to provide investors with accurate, continuous insights. The platform links data points through associations and common identifiers to ensure interoperability.

Whether coordinating batch jobs, triggering real-time updates, or syncing systems across departments, orchestration is what turns raw data movement into reliable, automated workflows.

Monitoring and logging

An enterprise data pipeline should be monitored 24/7 to detect abnormalities and reduce downtime.

Logs capture a detailed record of events across the pipeline, covering ingestion, transformation, storage, and output. They are essential for root cause analysis during incidents, auditing pipeline activity, debugging, and optimizing pipeline performance.

Together, monitoring and logging form the operational backbone of observability, helping engineering teams maintain data integrity, meet SLAs, and resolve issues before they escalate.
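One common way to make logs useful for monitoring is to emit one structured record per pipeline stage and to check data freshness against an SLA. A minimal sketch with Python’s standard `logging` module; the field names, run ID, table name, and the one-hour freshness threshold are assumptions, and in practice these records would be shipped to a log or metrics platform for alerting.

```python
import json
import logging
import time
from contextlib import contextmanager
from datetime import datetime, timedelta, timezone
from typing import Optional

logger = logging.getLogger("pipeline")


@contextmanager
def logged_stage(stage: str, run_id: str):
    """Emit one structured (JSON) log record per stage with its status and duration."""
    start = time.monotonic()
    status = "success"
    try:
        yield
    except Exception:
        status = "failed"
        raise  # re-raise so the orchestrator still sees the failure
    finally:
        logger.info(json.dumps({
            "run_id": run_id,
            "stage": stage,
            "status": status,
            "duration_s": round(time.monotonic() - start, 3),
        }))


def is_fresh(last_loaded_at: datetime, max_age: timedelta,
             now: Optional[datetime] = None) -> bool:
    """Freshness check: True if the latest load falls within the allowed age."""
    now = now or datetime.now(timezone.utc)
    return now - last_loaded_at <= max_age


logging.basicConfig(level=logging.INFO, format="%(message)s")
with logged_stage("transform", run_id="nightly-2024-05-01"):
    pass  # transformation work would run here
if not is_fresh(datetime.now(timezone.utc) - timedelta(hours=3), timedelta(hours=1)):
    logger.warning("orders table is stale; alerting on-call")  # hypothetical table name
```

Structured fields such as `run_id` and `status` let teams filter, aggregate, and alert on logs instead of searching free-form text.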

Security and compliance

Data-driven organizations should implement privacy-preserving practices, such as end-to-end encryption of sensitive data and access controls, to build pipelines that comply with privacy laws (GDPR, the California Consumer Privacy Act) and industry-specific regulations and standards (HIPAA and PCI DSS).
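Encryption in transit and at rest is usually handled by TLS and the storage platform, but pipelines often add two application-level controls as well: pseudonymizing direct identifiers and restricting which columns each role can see. A minimal sketch with Python’s standard `hmac` and `hashlib` modules; the key handling, roles, and column lists are hypothetical, and pseudonymization complements encryption rather than replacing it.

```python
import hashlib
import hmac

# Assumption: in production, the key comes from a secrets manager, never from source code.
PSEUDONYMIZATION_KEY = b"replace-with-a-managed-secret"

# Hypothetical role-based column permissions.
ALLOWED_COLUMNS = {
    "analyst": {"customer_ref", "country", "total_spend"},
    "support": {"customer_ref", "email", "country"},
}


def pseudonymize(value: str, key: bytes = PSEUDONYMIZATION_KEY) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so joins still work,
    but tokens cannot be linked back to the original values without the key.
    """
    return hmac.new(key, value.strip().lower().encode(), hashlib.sha256).hexdigest()


def add_customer_ref(record: dict) -> dict:
    """Attach a pseudonymous customer reference derived from the email."""
    out = dict(record)
    out["customer_ref"] = pseudonymize(out["email"])
    return out


def view_for(role: str, record: dict) -> dict:
    """Column-level access control: return only the columns a role may see."""
    allowed = ALLOWED_COLUMNS.get(role, set())  # unknown roles see nothing
    return {k: v for k, v in record.items() if k in allowed}


record = add_customer_ref({"email": "Ana@Example.com", "country": "PT", "total_spend": 120.0})
print(view_for("analyst", record))  # no raw email for analysts
```

Defaulting unknown roles to an empty column set keeps the access check fail-closed.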

A focus on compliance is particularly relevant to finance and healthcare organizations that store sensitive data. For instance, Citibank partnered with Snowflake, leveraging the vendor’s data-sharing and granular permission controls to reduce the risk of privacy fallout.

Bottom line

Well-architected data pipelines help enterprise organizations connect all data sources and extract maximum value from the insights they collect.

Designing a scalable, high-performing, and secure data pipeline to support enterprise-specific use cases requires technical skills and domain knowledge.

Xenoss data engineers have a proven track record of building enterprise data engineering and AI solutions. We deliver scalable real-time data pipelines for advertising, marketing, finance, healthcare, and manufacturing industry leaders.

Contact Xenoss engineers to learn how tailored data engineering expertise can streamline internal workflows and improve operations within your enterprise.