Clinical development, which spans four phases of clinical trials, is the last, riskiest, and most expensive step of drug R&D. An average pivotal clinical trial (usually Phase III) costs $48 million, and over 90% of drugs fail.

To address scientific and operational bottlenecks, research teams are exploring technologies like machine learning to enroll patients, collect data, and manage ongoing studies.

With AI in healthcare surpassing $21 billion in market value, new tools for streamlining clinical research are emerging rapidly.

This post is a snapshot of AI use cases in clinical research, companies fueling innovation in the sector, and the current state of VC funding.

This is the final part of the series covering AI applications in drug R&D. Explore our overview of machine learning use cases in drug discovery and preclinical research.

Why clinical trials fail

Nine out of 10 drug candidates don’t make it past clinical research. Despite multiple attempts to fine-tune preclinical research so that it yields only promising leads, high failure rates have persisted for decades.

The most cited reasons why clinical trials fail are lack of clinical efficacy (40-50% of reported cases), high toxicity (30% of surveyed RCTs), suboptimal drug-like properties (10-15%), and lack of market demand or poor planning (10%).

While each of these issues stems from different parts of the R&D pipeline, together they highlight a critical need for more predictive tools, better trial design, and smarter patient matching, all areas where AI is starting to make a tangible difference.

The balance between efficacy and toxicity

The failure to reach clinical efficacy is the primary reason why drug candidates do not go past Phase I clinical trials. That does not necessarily mean that the tested molecules are ineffective per se; rather, they fail to show therapeutic benefit at the maximum tolerated dose (MTD).

At higher concentrations, many drug candidates would be effective, but at those doses they become toxic to healthy organs. Development turns into a balancing act between maximizing potency and reducing side effects.

This particular aspect tends to be overlooked in drug discovery and clinical development because it is hard to predict why two structurally similar molecules can yield drastically different clinical outcomes.

For example, the analysis of over 600 RCTs conducted on FDA-approved or failed selective estrogen receptor modulators (SERMs) showed that slight structural changes, which did not alter the compounds’ drug-like properties, led to significant variations in efficacy and toxicity.

Having better visibility of patient response to a drug and the ability to identify early signs of drug toxicity would help reduce the rate of clinical failure.

Suboptimal drug properties

Traditional drug candidate screening often begins with large-scale testing of chemical libraries, but the criteria used are typically generic and overlook how different patients, diseases, and tissues interact with the compound.

Researchers report that the lack of personalization makes assessing drug properties “a shot in the dark” and contributes to candidates failing in the clinic.

The research team behind Remdesivir, a prospective COVID-19 therapeutic, failed to consider the importance of drug exposure to different tissues.

While preclinical tests of the compound showed promise, in clinical research, it achieved little exposure in the target tissue, the lung, yet tended to precipitate and cause high toxicity in the kidney.

Cases like this underscore the importance of developing tools that can quantify tissue-specific drug exposure and predict pharmacokinetic behavior under real-world conditions.

AI-powered models now offer promising capabilities to simulate drug distribution, analyze how molecules behave in diverse biological environments, and tailor compound selection to patient-specific profiles, making trial outcomes far less random.

Operational bottlenecks

Even when a drug shows potential, operational breakdowns often prevent it from reaching approval. These issues aren’t about the compound’s efficacy or safety; they stem from how trials are designed, funded, and executed.

Here are the most impactful operational bottlenecks in clinical research:

- Patient recruitment. In the field of cancer research, 25% of trials fail to enroll the needed number of participants, and only about 2-5% of the total population of adult patients participate in clinical research.

- Lack of guidance on inclusion/exclusion criteria. Clearly defined eligibility criteria help match the patient profile used in research to the target population. Yet, in many therapeutic areas, there are no clear-cut diagnostic criteria to begin with. For instance, there is high variability in diagnosing heart failure with preserved ejection fraction (HFpEF). Some researchers use EF > 50% as a cut-off, while others choose different values. This ambiguity creates difficulties in clearly defining a target patient profile.

- Financial strain. While the costs of clinical trials vary from study to study, they may reach up to $2.5 billion. It is unsurprising, then, that 22% of aborted Phase III studies fail due to lack of funding. While some of these expenses, such as site and patient management costs, are harder to cut, optimizing processes and shortening clinical research could help address the underfunding challenge. McKinsey notes that cutting the duration of clinical studies by a year would add over $400 million to a sponsor’s portfolio.

Discovering ways to automate operations, build stable clinician-patient relationships, and slash costs would help increase the completion rate of clinical trials.
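To make the eligibility-criteria problem concrete, here is a minimal sketch of how the choice of ejection-fraction cut-off changes the size of the eligible HFpEF population. The cohort readings, the alternative thresholds of 40% and 45%, and the function names are illustrative assumptions, not figures from any study.

```python
from typing import Dict, Iterable, List

# Hypothetical ejection-fraction readings (%) for a synthetic cohort.
COHORT_EF: List[float] = [38, 42, 44, 46, 48, 49, 51, 53, 55, 58, 60, 62]


def eligible_count(ef_values: Iterable[float], cutoff: float) -> int:
    """Count patients whose ejection fraction is strictly above the cut-off."""
    return sum(1 for ef in ef_values if ef > cutoff)


def cutoff_sensitivity(ef_values: Iterable[float],
                       cutoffs: Iterable[float]) -> Dict[float, int]:
    """Map each candidate cut-off to the number of eligible patients."""
    values = list(ef_values)
    return {cutoff: eligible_count(values, cutoff) for cutoff in cutoffs}


if __name__ == "__main__":
    # 40 and 45 are assumed alternatives to the EF > 50% cut-off.
    for cutoff, n in cutoff_sensitivity(COHORT_EF, [40, 45, 50]).items():
        print(f"EF > {cutoff}%: {n} of {len(COHORT_EF)} patients eligible")
```

In this toy cohort, moving the threshold from 40% to 50% nearly halves the eligible pool, which is why an unsettled diagnostic definition makes the target patient profile hard to pin down.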

How AI is improving and fast-tracking clinical research

Clinical research is entering a new phase where AI doesn’t just support the process, but actively accelerates it. Early adopters of machine learning are already reporting both scientific and operational wins.

McKinsey reports that research teams have successfully used AI to optimize trial sites, boost patient enrollment by up to 20%, and predict enrollment trends to attract new sources of funding.

Using AI across the research pipeline helped compress the duration of a randomized clinical trial by an average of 6 months. This is a massive gain in a field where time equals both cost and patient outcomes.

But even greater efficiencies are emerging with generative AI. By automating time-consuming manual tasks, from drafting regulatory documents to assisting with decision-making and compliance communications, genAI has the potential to cut process costs by up to 50%.

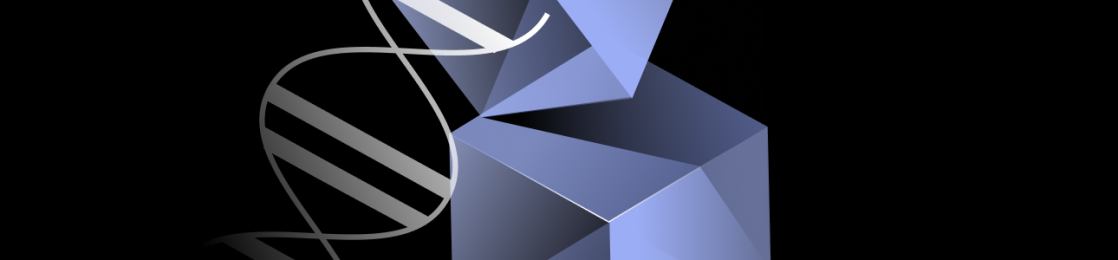

Biotech companies are driving this change by building ML-enabled solutions that streamline all key areas of clinical research.

Here is a snapshot of promising AI applications and innovators to watch.

Patient enrollment

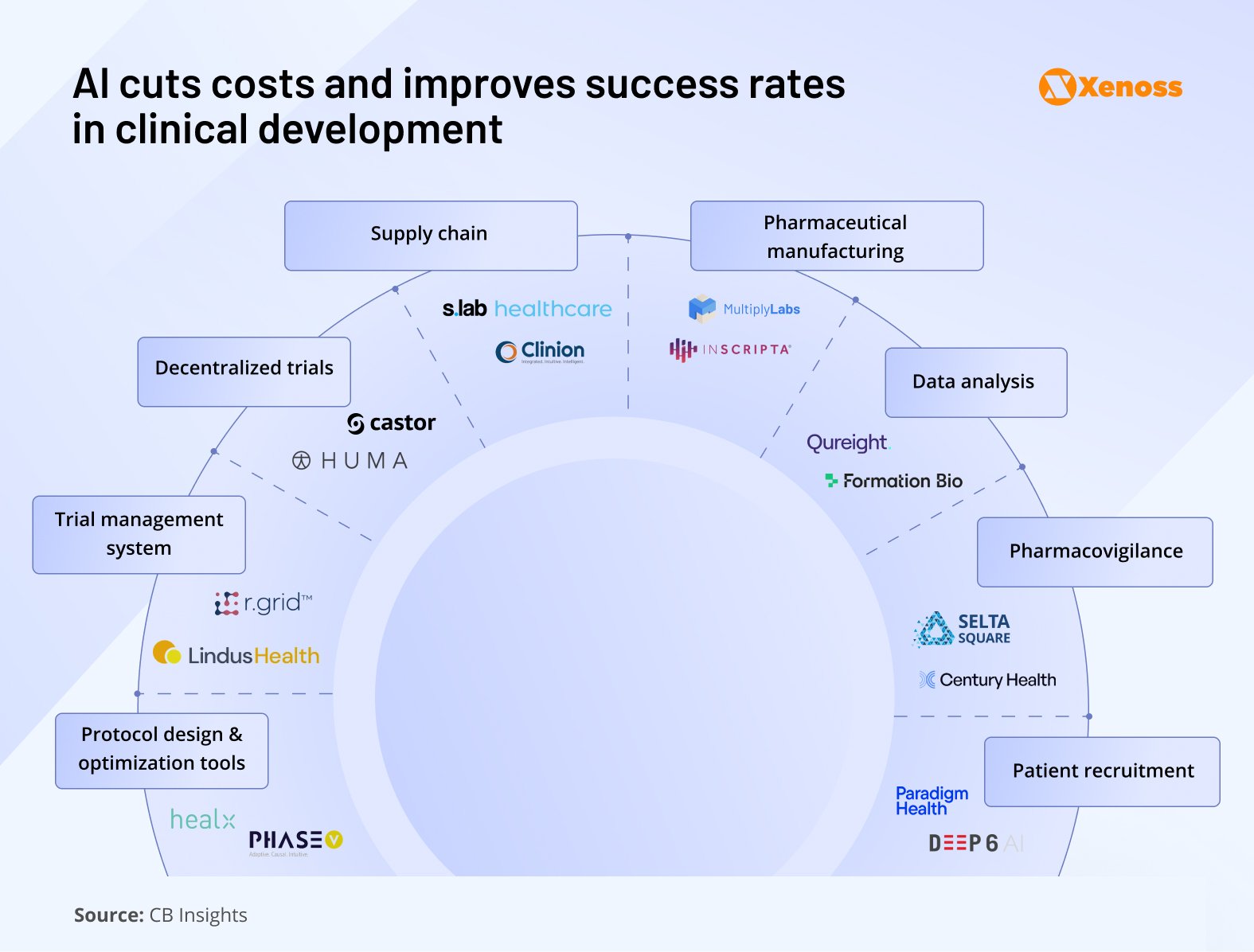

AI platforms help process providers’ patient data records and identify populations that meet the inclusion and exclusion criteria of the trial.

Machine learning algorithms help research teams organize unstructured EHR data to identify potential matches. In 2024, the NIH built a matching engine to connect volunteers to relevant clinical trials. The algorithm was trained on the RCT records kept at ClinicalTrials.gov.
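Once EHR data has been structured, the matching step itself can be pictured as a filter over patient records. The sketch below is a plain rule-based screen, not the NIH engine or any vendor's algorithm. The `Patient` and `TrialCriteria` types, the field names, and the sample records are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Patient:
    patient_id: str
    age: int
    diagnoses: Set[str] = field(default_factory=set)
    medications: Set[str] = field(default_factory=set)


@dataclass
class TrialCriteria:
    min_age: int
    max_age: int
    required_diagnoses: Set[str]
    excluded_medications: Set[str]


def is_eligible(patient: Patient, criteria: TrialCriteria) -> bool:
    """Check the inclusion criteria, then rule out any exclusion criteria."""
    in_age_range = criteria.min_age <= patient.age <= criteria.max_age
    has_diagnoses = criteria.required_diagnoses <= patient.diagnoses
    on_excluded_drug = bool(criteria.excluded_medications & patient.medications)
    return in_age_range and has_diagnoses and not on_excluded_drug


def screen(patients: List[Patient], criteria: TrialCriteria) -> List[str]:
    """Return the IDs of patients who pass every criterion."""
    return [p.patient_id for p in patients if is_eligible(p, criteria)]


# Synthetic records; "drug_x" is a placeholder, not a real medication.
EXAMPLE_PATIENTS = [
    Patient("P1", 67, {"heart failure"}, {"drug_x"}),
    Patient("P2", 54, {"heart failure", "hypertension"}),
    Patient("P3", 82, {"heart failure"}),
    Patient("P4", 60, {"diabetes"}),
]
EXAMPLE_CRITERIA = TrialCriteria(40, 80, {"heart failure"}, {"drug_x"})

if __name__ == "__main__":
    print(screen(EXAMPLE_PATIENTS, EXAMPLE_CRITERIA))
```

The hard part in practice is upstream of this filter: turning free-text notes into the structured fields it relies on, which is where the machine learning models described above come in.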

Smaller institutions with limited data access can still use machine learning to automate patient screening.

Altru, a North Dakota-based healthcare service provider, partnered with Paradigm Health to build a data-driven patient screening system. Paradigm’s technology used machine learning to assess each patient admitted to Altru as a potential RCT candidate.

This ML-enabled approach helped increase the annual clinical trial enrollment rate from 4% to 11%.

AI startups innovating patient enrollment:

- Paradigm Health analyzes each provider’s patient population and incoming study protocols with LLMs to “source smarter” and pick trials that truly fit local patients.

- Deep6 AI created algorithms to score, qualify, and prioritize matches across a 1,000-site research ecosystem. The platform gives research teams real-time visibility into where they can find recruitable patients.

Protocol design and optimization

On average, principal investigators on a research team need between 150 and 200 hours to write a clinical trial protocol that lays out the objectives, key steps, and criteria for patient inclusion/exclusion.

Standardizing and automating this process with AI reduces this strain.

Xenoss engineers help clinical trial teams automate protocol design by building proprietary large-language models trained on real-world protocol data, as well as the pre-clinical records kept by the research team.

These models follow structured prompts from clinical teams and generate protocol drafts aligned with industry standards and regulatory expectations, cutting turnaround times and minimizing manual revisions.

AI startups that streamline protocol design:

- Healx mines literature, biomedical data, and patient-reported insight to support protocol writers with outcome measures for small-patient, rare-disease trials.

- PhaseV delivers real-time dashboards to help teams accurately estimate sample size, add arms, or pivot endpoints.

Clinical trial management

McKinsey reports that industry-standard “control towers,” centralized systems for aggregating patient data across trial sites, capture only 70–80% of actual clinical progress. The remaining 20–30% gap represents missed signals, delays, and manual overhead that slow down decision-making and risk regulatory setbacks.

Machine learning can automate patient data gathering and processing to close the gaps in understanding a patient’s response to the drug.

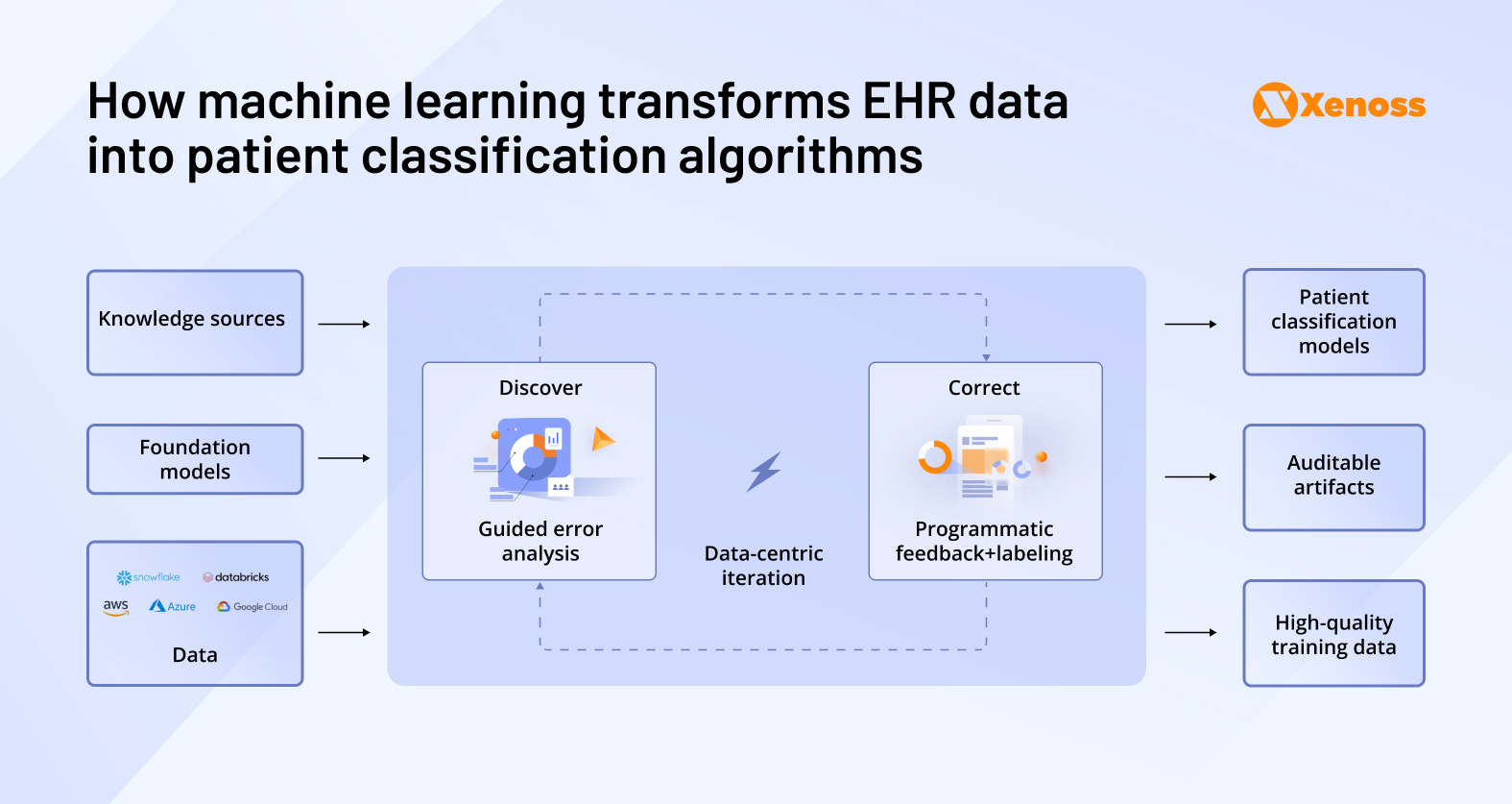

Furthermore, there is a spike in enthusiasm around using agentic AI solutions to automate complex workflows like email exchanges between the clinical and research teams, patient communication oversight, progress tracking, and reporting.

Research teams can now build multi-agent systems that collectively oversee data aggregation, patient monitoring, fact-checking, and reporting outcomes. All agents report to the coordinator agent and have shared access to RCT data.
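The coordinator pattern described above can be sketched in a few lines. This is a deliberately simplified illustration in which the "agents" are ordinary functions rather than LLM-backed services. The class names, the task functions, and the visit records are hypothetical.

```python
from typing import Callable, Dict, List

# Shared RCT data store that every agent can read (synthetic records).
SharedData = Dict[str, List[dict]]


class Agent:
    """A worker with one responsibility and read access to the shared data."""

    def __init__(self, name: str, task: Callable[[SharedData], str]):
        self.name = name
        self.task = task

    def run(self, data: SharedData) -> str:
        return self.task(data)


class Coordinator:
    """Hands the shared data to each agent and collects their reports."""

    def __init__(self, data: SharedData, agents: List[Agent]):
        self.data = data
        self.agents = agents

    def collect_reports(self) -> Dict[str, str]:
        return {agent.name: agent.run(self.data) for agent in self.agents}


def count_visits(data: SharedData) -> str:
    """Data aggregation: summarize how many site visits were logged."""
    return f"{len(data['visits'])} visits logged"


def flag_missed_visits(data: SharedData) -> str:
    """Patient monitoring: list patients with at least one missed visit."""
    missed = sorted({v["patient"] for v in data["visits"] if not v["attended"]})
    return "missed visits: " + (", ".join(missed) or "none")


EXAMPLE_DATA: SharedData = {
    "visits": [
        {"patient": "P1", "attended": True},
        {"patient": "P2", "attended": False},
        {"patient": "P3", "attended": True},
    ]
}

if __name__ == "__main__":
    coordinator = Coordinator(
        EXAMPLE_DATA,
        [Agent("aggregator", count_visits), Agent("monitor", flag_missed_visits)],
    )
    for name, report in coordinator.collect_reports().items():
        print(f"{name}: {report}")
```

Keeping every agent behind the same `run` interface means a fact-checking or reporting agent can be added without changing the coordinator.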

AI startups optimizing clinical trial management:

- Lindus Health built SPROUT, an internal LLM tool that shortens protocol creation from weeks to hours by drafting and red-lining new protocols based on historical RCT and KOL data.

- R.grid uses ML to monitor task queues, flag bottlenecks, and set up robotic process automations. The platform’s users report up to 45% cost cuts and months of timeline reduction.

Decentralized clinical trials

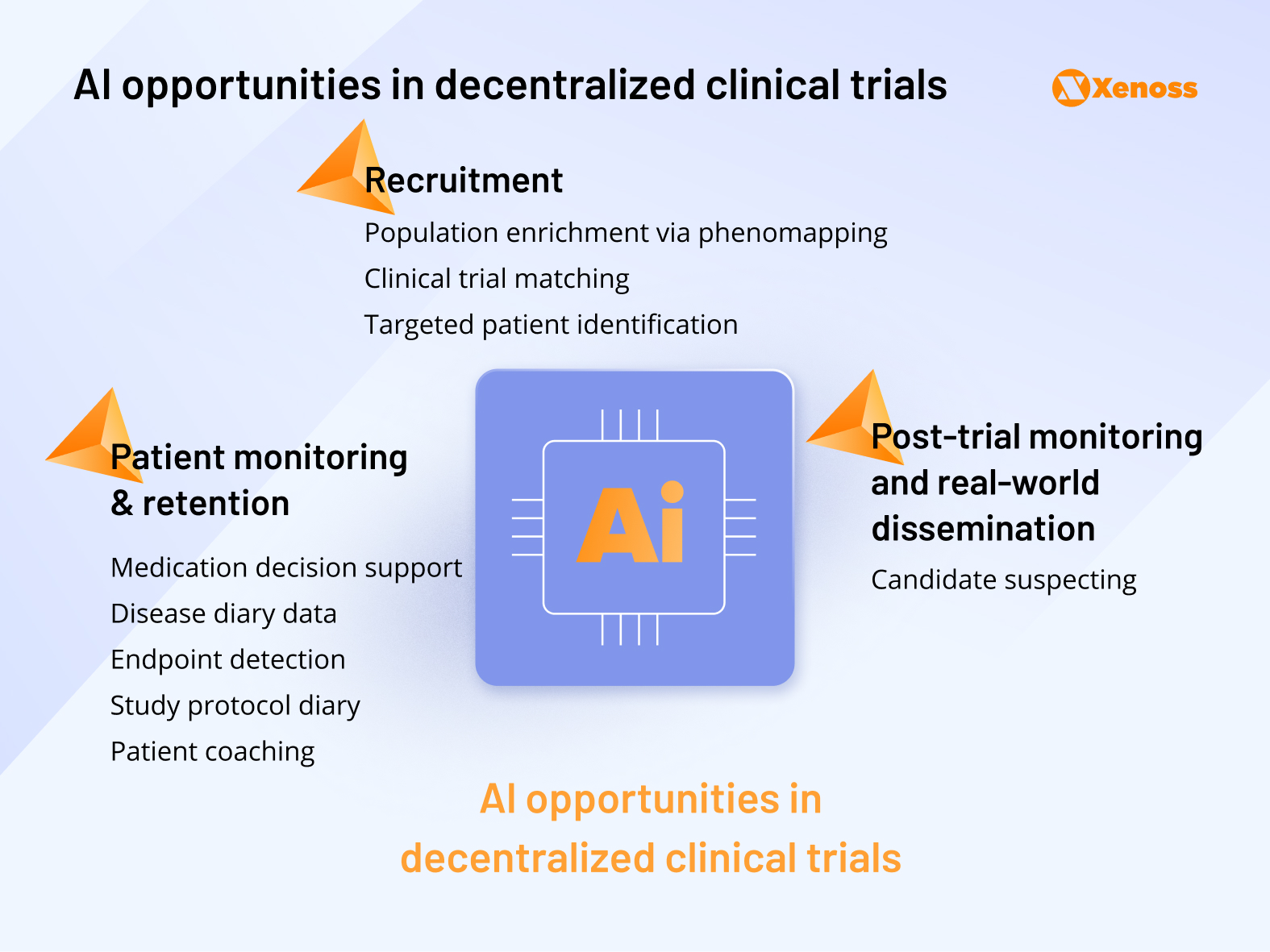

Following the COVID-19 pandemic and the surge of telemedicine, there has been increased interest in running decentralized clinical trials. If they were to become an industry standard, clinicians and researchers would have more ways to enroll stay-at-home patients and monitor their progress.

At the moment, decentralized trials are met with friction due to compliance, patient monitoring, around-the-clock data gathering, and outcome reporting challenges.

Machine learning makes the process more sustainable by improving recruitment, retention, and post-trial monitoring of DCT patients.

Research teams report using phenomapping algorithms to identify ideal patient profiles for decentralized trials.

Filling in surveys, logging events, and writing disease diaries place a significant burden on patients enrolled in remote trials. That burden currently contributes to a 30% dropout rate and causes 40% of patients to stop adhering to the protocol within 150 days of the study. Using generative AI to automate these tasks lifts much of that load.

Some teams successfully trained patient-specific deep learning models to offer DCT participants personalized guidance and promote compliance.
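A quick back-of-the-envelope calculation shows why dropout matters so much for recruitment. The sketch below uses the standard inflation rule (target completers divided by the retention rate). The target of 200 completers and the 15% comparison rate are made-up numbers for illustration.

```python
def required_enrollment(target_completers: int, dropout_pct: int) -> int:
    """Patients to enroll so that `target_completers` remain after dropout.

    Uses integer arithmetic to round up without floating-point error.
    """
    if not 0 <= dropout_pct < 100:
        raise ValueError("dropout_pct must be between 0 and 99")
    retained_pct = 100 - dropout_pct
    # Ceiling division: -(-a // b) rounds a / b up to the next integer.
    return -(-(target_completers * 100) // retained_pct)


if __name__ == "__main__":
    # Hypothetical target of 200 completers at two dropout rates.
    for pct in (30, 15):
        print(f"{pct}% dropout: enroll {required_enrollment(200, pct)} patients")
```

Under these assumptions, a 30% dropout rate means enrolling 286 patients to finish with 200, while halving dropout to 15% brings that down to 236.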

Biotech companies leveraging AI for decentralized clinical trials:

- Castor uses human-in-the-loop AI on Microsoft Azure to read eSource files, push clean data into the EDC, and auto-complete Source Data Verification. This system eliminates up to 70% of manual data entry.

- Huma combined continuous sensor ingestion with ML models to create digital biomarkers and risk scores. The use of the platform in the DeTAP atrial-fibrillation trial helped researchers reach 94% recruitment in 12 days and raise medication adherence from 85% to 96%.

Supply chain

According to Deborah Knoll, Director of Clinical Supply Optimization Services at Thermo Fisher Scientific, clinical trial supply planners typically face supply chain challenges in three areas:

- Demand vs. delivery speed: In some cases, the production rate does not match the pace of the study.

- Regulatory burdens related to labeling, documentation, export, and import of drug candidates.

- Environmental bottlenecks, such as changing weather conditions, can delay delivery.

Machine learning helps optimize supply chains in all three areas.

For one, AI models can analyze historical supply chain data and identify trends and best practices for future shipments. Later, this insight can be used by other models to run real-time simulations of the entire supply chain and identify risks before they manifest in real-world conditions.
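As a rough illustration of what "running simulations to identify risks" can look like, here is a minimal Monte Carlo sketch that estimates how often a trial site would run out of drug kits when a resupply shipment is delayed. It is a toy model, not any vendor's method. The stock levels, the demand rate, and the uniformly distributed delay (standing in for weather or customs hold-ups) are all assumptions.

```python
import random


def simulate_stockout(initial_stock: int, weekly_demand: int, resupply_qty: int,
                      resupply_week: int, max_delay_weeks: int, weeks: int,
                      rng: random.Random) -> bool:
    """Return True if the site runs out of kits during one simulated trial."""
    # The shipment arrives on schedule plus a random delay of 0..max weeks.
    arrival_week = resupply_week + rng.randint(0, max_delay_weeks)
    stock = initial_stock
    for week in range(1, weeks + 1):
        if week == arrival_week:
            stock += resupply_qty
        stock -= weekly_demand
        if stock < 0:
            return True
    return False


def stockout_probability(runs: int = 10_000, seed: int = 0, **scenario) -> float:
    """Estimate stockout risk as the share of simulated trials that run dry."""
    rng = random.Random(seed)
    hits = sum(simulate_stockout(rng=rng, **scenario) for _ in range(runs))
    return hits / runs


# Hypothetical scenario: 40 kits on site, 10 dispensed per week,
# 100-kit resupply scheduled for week 4 of a 12-week study.
SCENARIO = dict(initial_stock=40, weekly_demand=10, resupply_qty=100,
                resupply_week=4, weeks=12)

if __name__ == "__main__":
    for delay in (0, 1, 3):
        risk = stockout_probability(max_delay_weeks=delay, **SCENARIO)
        print(f"max delay {delay} weeks: stockout risk {risk:.0%}")
```

In this scenario the site tolerates a one-week delay, but anything longer causes a stockout. A real planning model would also randomize demand and draw delay distributions from historical shipment data.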

Companies applying AI to the pharma supply chain:

- S lab healthcare predicts both the probability and precise timing of temperature excursions by analyzing multiple supply-chain variables.

- Clinion employs generative AI modules to model complex supply scenarios, such as testing routing and allocation strategies in silico before trial start-up.

Pharmaceutical manufacturing

Companies using AI to streamline pharmaceutical manufacturing:

- MultiplyLabs empowers imitation-learning robots that watch expert scientists performing tasks (e.g., resuspending cell flasks) and copy those motions. The platform then “auto-programs” itself for new cell-therapy processes.

- Inscripta blends Directed Evolution, CRISPR+ (royalty-free MAD7 nuclease), and machine learning models to accelerate DBTL cycles and lower the cost per variant to ≈$0.10.

Data analysis

Clinical trials used to rely on retrospective data analysis, but this approach comes with limitations. If there are errors in clinical data, there is no way to go back and correct the parameters of the study after the fact, and by the time the errors surface, valuable time has been lost.

Integrating real-time data into clinical research, on the other hand, allows research teams to proactively respond to protocol deviations, recruitment delays, or other bottlenecks along the pipeline.

That said, the need for advanced analytics capabilities is a barrier to collecting and processing large volumes of clinical data in real time.

Machine learning can streamline analytics by harmonizing the data that enters the system from different sources (patient-reported outcomes, wearables, EHRs). By automating data cleaning and validation, researchers no longer need to run repetitive sorting and can focus on high-value analysis.
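The harmonization step can be pictured as mapping each source's records onto one shared schema and then validating the result. The sketch below does this with hand-written rules for a single measurement, body weight. Production systems would learn or configure these mappings, and every field name, unit, and range check here is a hypothetical example.

```python
from typing import List, Tuple

LB_TO_KG = 0.45359237  # exact definition of the pound in kilograms


def harmonize(record: dict) -> dict:
    """Map a source-specific record onto a common schema (weight in kg)."""
    source = record["source"]
    if source == "ehr":
        pid, weight = record["mrn"], float(record["weight_kg"])
    elif source == "epro":
        pid, weight = record["participant"], float(record["weight_lb"]) * LB_TO_KG
    elif source == "wearable":
        pid, weight = record["device_user"], float(record["weight_g"]) / 1000
    else:
        raise ValueError(f"unknown source: {source}")
    return {"patient_id": pid, "weight_kg": round(weight, 1), "source": source}


def is_valid(row: dict) -> bool:
    """Assumed plausibility range for adult body weight, in kilograms."""
    return 20 <= row["weight_kg"] <= 300


def clean(records: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Harmonize all records and split them into accepted and rejected rows."""
    accepted, rejected = [], []
    for record in records:
        row = harmonize(record)
        (accepted if is_valid(row) else rejected).append(row)
    return accepted, rejected


# Synthetic inputs: three sources, three naming conventions, three units.
RAW_RECORDS = [
    {"source": "ehr", "mrn": "P1", "weight_kg": 82.4},
    {"source": "epro", "participant": "P2", "weight_lb": "176"},
    {"source": "wearable", "device_user": "P3", "weight_g": 7000},
]

if __name__ == "__main__":
    accepted, rejected = clean(RAW_RECORDS)
    print("accepted:", accepted)
    print("rejected:", rejected)
```

The implausible wearable reading is routed to a rejected list rather than silently dropped, so a human can review it. That is the kind of repetitive sorting the paragraph above suggests automating.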

Companies using AI for clinical data analysis:

- Qureight uses computer vision to segment CT scans into fibrotic, vascular, and airway compartments and turn raw scans into quantitative biomarkers for safety read-outs.

- Formation Bio ingests all trial sources (EDC, ePRO, RWD, documents) into its Universal Data Platform. Then LLM/agentic AI pipelines auto-clean, standardize, and push data to dashboards, replacing manual data entry.

Pharmacovigilance

After the drug hits the market, it is essential to continue monitoring patient response and tracking previously unknown adverse effects.

Pharmacovigilance efforts struggle to scale due to a massive underreporting problem. On average, the therapeutic responses to a chosen course of treatment are off researchers’ radar for 94% of patients.

Machine learning helps overcome pharmacovigilance limitations by generating automated reports from EHRs, wearable sensors, and other data sources.

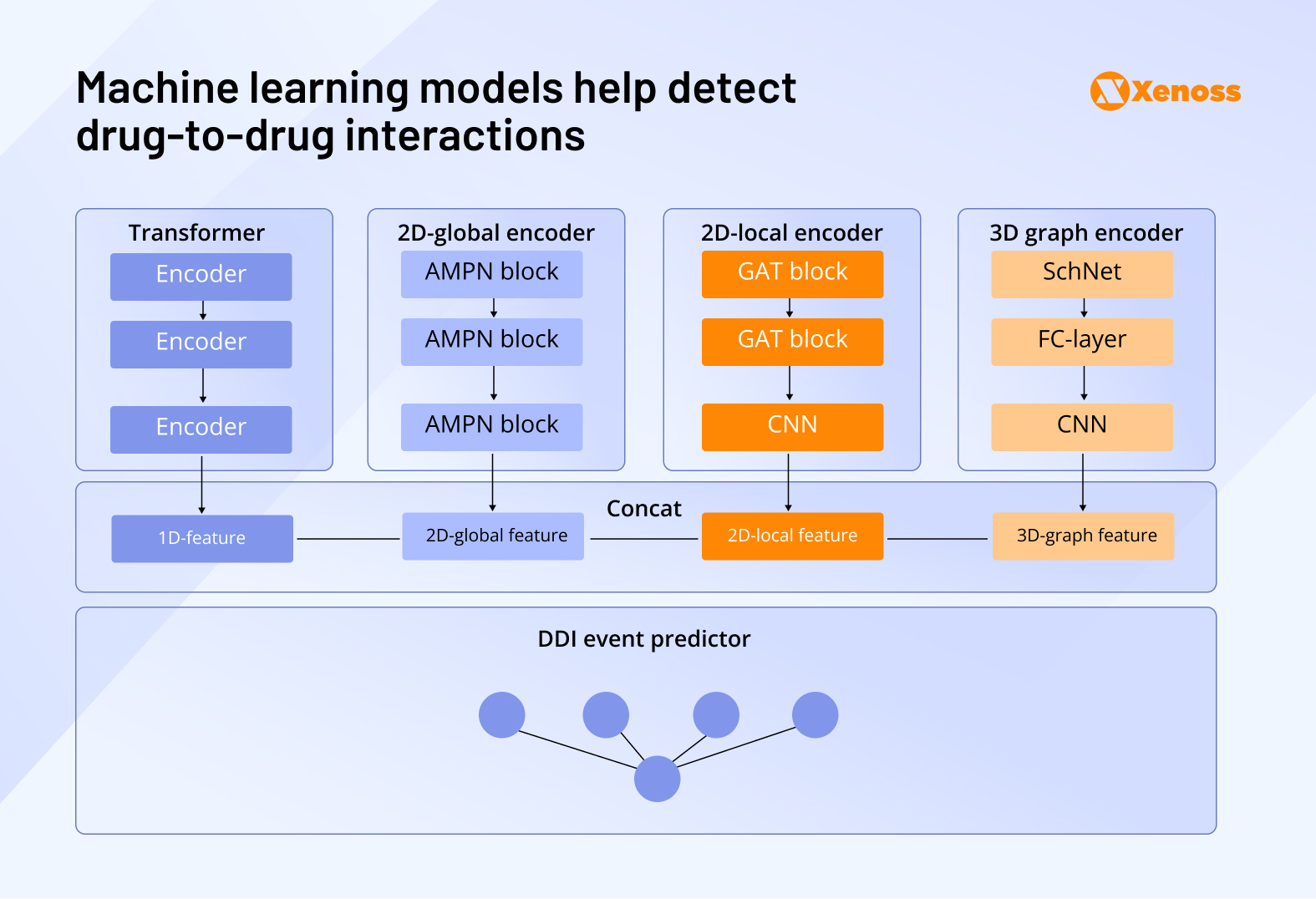

Clinical teams are already applying AI to detect drug-drug interactions, detect early signs of adverse drug effects, and improve communication between the patient and the clinician. Deep learning models like the Multi-Dimensional Feature Fusion (MDFF) model analyze the structural properties of drugs patients are prescribed in polytherapy and identify the probability of DDIs and ADRs.

Biotech companies using AI in pharmacovigilance:

- Century Health abstracts unstructured EHR notes, imaging, and -omics into standardized OMOP/SNOMED formats to create accurate chart reviews and longitudinal patient records.

- Selta Square runs a PV-as-a-service platform that uses IBM Robotic Process Automation to automate global literature and database surveillance for adverse drug reactions.

Funding trends in AI-enabled clinical research

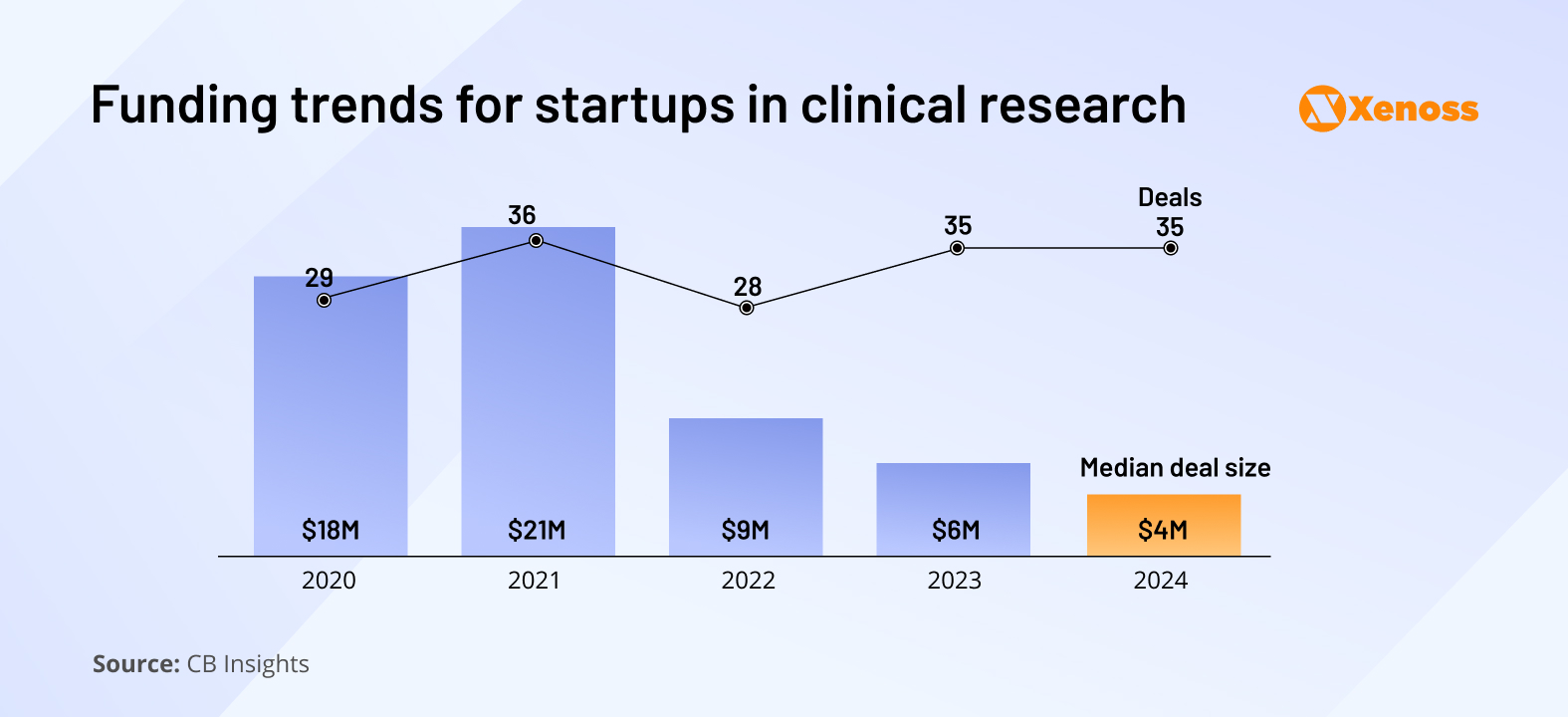

Whereas drug discovery has seen increased investor interest over the last eighteen months, the scale of funding for AI projects in clinical research has been dropping consistently since 2021. Interestingly, the number of deals has remained stable, but the average deal size has shrunk.

Despite a slower stream of venture capital, startups in the sector are showing consistent operational growth. Headcounts at AI clinical development startups grew by 14% last year, and in some markets, like protocol design and optimization tools, they increased by nearly 40%.

The combination of operational growth and declining funding may suggest that the sector is maturing, with companies generating their own revenue and becoming less dependent on VC funding.

Several indicators point to an upcoming wave of IPOs and acquisitions:

- There are now six unicorns in the AI-for-clinical space, with Huma being the most recent (2024)

- Owkin and Inscripta lead the field in IPO readiness, with CB Insights assigning them IPO probabilities of 21% and 13%, respectively

If these firms successfully transition from private unicorns to public companies, it would validate the commercial promise of AI in clinical development. It could trigger a fresh wave of investor confidence across the field.

Bottom line

Among the stages of drug R&D, clinical research is the most mission-critical, expensive, and time-consuming.

There’s a clear need for innovation that would move the needle on the high failure rate of clinical trials. At the same time, heavy regulatory oversight makes innovating in this space challenging, so VC engagement in the sector is moderate.

Thus, clinical research is somewhat stuck between a rock and a hard place. The good news is that emerging technologies like genAI and agents have found a way to trickle into the space. The pace of innovation is not yet rapid enough to make a statistically significant difference, but the adoption rate is growing, and launched AI pilots tend to perform well.

AI won’t replace clinical research. But it will make it faster, smarter, and more resilient.