Worldwide AI spending will reach $1.5 trillion by the end of 2025. By contrast, the global enterprise software market reached $316.69 billion in 2025. This means enterprises are spending nearly five times more on AI than on the software that runs their core operations.

AI total cost of ownership differs fundamentally from traditional enterprise software economics through technical factors that create hidden cost multipliers:

- Computational resource scaling with model parameter growth

- Continuous data pipeline processing overhead

- Real-time model performance monitoring requirements

- Multi-environment deployment complexity

- Regulatory compliance automation

- Legacy system integration challenges

Because of these factors, AI investments are far harder to budget than conventional enterprise software. Business leaders often lack a comprehensive understanding of the total cost of ownership (TCO) of developing, deploying, maintaining, and scaling an AI model, which helps explain why 85% of organizations misestimate AI project costs by more than 10%.

Understanding where your AI budget goes is critical. This guide breaks down AI development cost into six components that determine long-term ROI:

- Infrastructure: GPU clusters, auto-scaling, multi-cloud ($200K-$2M+ annually)

- Data engineering: Pipeline processing, quality monitoring (25-40% of total spend)

- Talent acquisition and retention: Specialized engineers ($200K-$500K+ compensation)

- Model maintenance: Drift detection, retraining automation (15-30% overhead)

- Compliance and governance: penalty risk of up to 7% of global annual turnover

- Integration complexity: 2-3x implementation premium

Segmenting AI costs this way gives you visibility and control, and lets you make informed trade-offs among speed, accuracy, and efficiency so that systematic TCO management translates into measurable enterprise impact.
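Some of these components are quoted as absolute dollars and others as a share of total spend, which makes them awkward to add up. A minimal sketch of one way to roll them into a single annual figure, assuming (our modelling choice, not the article's) that maintenance overhead applies to infrastructure plus talent, and that data engineering must make up a fixed share of the final total. All inputs are hypothetical; compliance penalties and the integration premium are left out because they are risks and multipliers rather than annual line items.

```python
def estimate_annual_tco(
    infrastructure: float,
    talent: float,
    maintenance_overhead: float,
    data_engineering_share: float,
) -> dict:
    """Roll hypothetical component estimates up into an annual TCO.

    infrastructure, talent: absolute annual costs in dollars.
    maintenance_overhead: fraction added on top of infrastructure + talent
        (an assumption about what the 15-30% overhead applies to).
    data_engineering_share: fraction of the *total* budget spent on data work.
    """
    if not 0 <= data_engineering_share < 1:
        raise ValueError("data_engineering_share must be in [0, 1)")
    base = infrastructure + talent
    maintenance = base * maintenance_overhead
    # If data work must be X% of the total, the total is everything else / (1 - X).
    total = (base + maintenance) / (1 - data_engineering_share)
    return {
        "infrastructure": infrastructure,
        "talent": talent,
        "maintenance": maintenance,
        "data_engineering": total * data_engineering_share,
        "total": total,
    }


# Hypothetical inputs: $500K infrastructure, three engineers at $300K,
# 20% maintenance overhead, data engineering at 30% of total spend.
breakdown = estimate_annual_tco(500_000, 3 * 300_000, 0.20, 0.30)
for component, cost in breakdown.items():
    print(f"{component:>17}: ${cost:,.0f}")
```

The division by `(1 - share)` is the step that is easy to miss: adding 30% to the other costs would understate the total, because data engineering is 30% of the final budget, not of the remainder.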

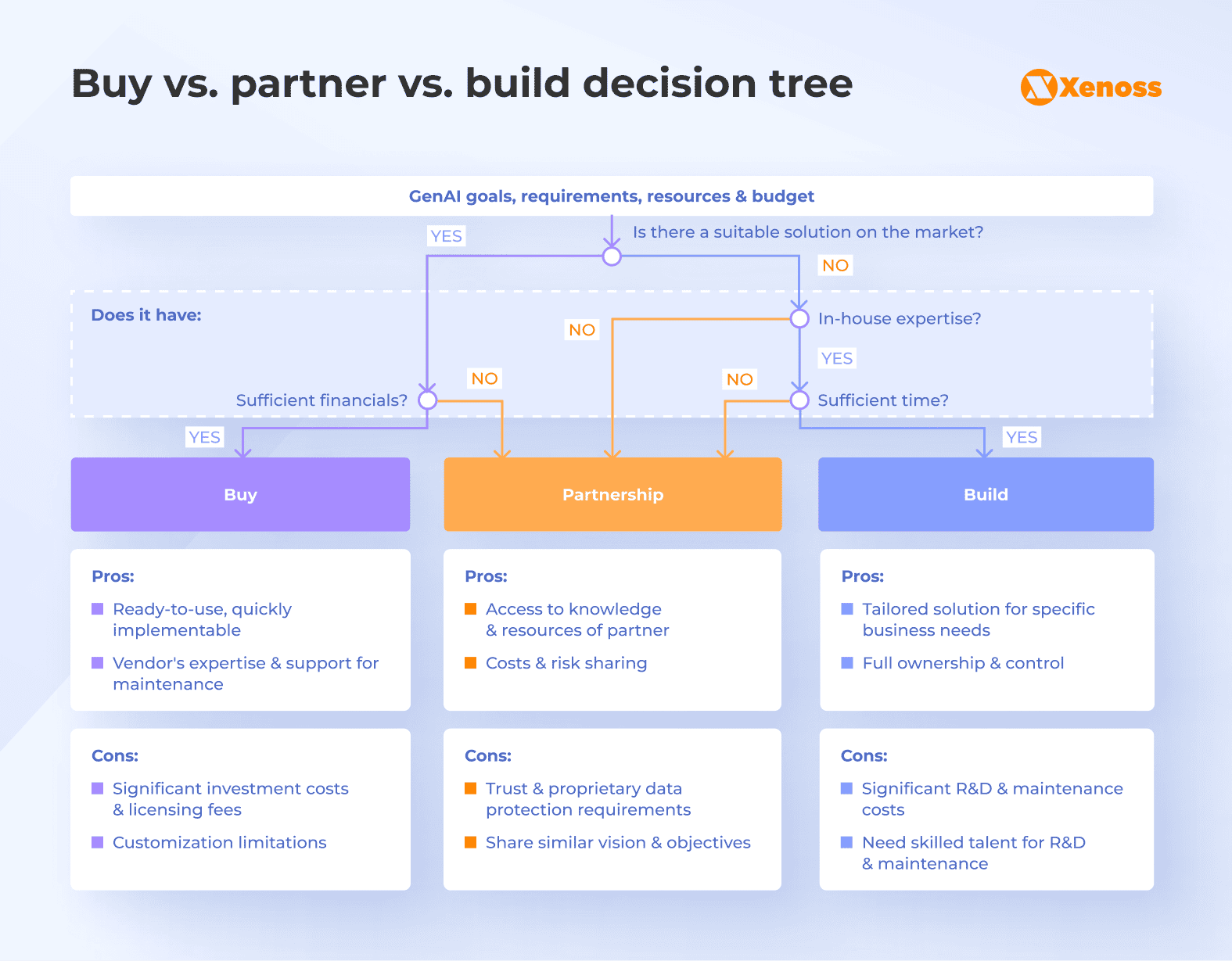

Build vs. partner vs. buy: Decision tree for cost-efficient AI adoption

Before committing to a cost model for AI development, organizations should assess the factors that shape long-term TCO:

- Is this a proof of concept or a long-term goal? The answer shows how much commitment is required to see the project through.

- What’s our acceptable threshold for going over budget on an AI project? This defines a clear stopping point and prevents uncontrolled spending.

- Do we have a defined business problem and clear KPIs to measure AI performance? These align technical execution with business results and keep your AI initiatives accountable and measurable.

These questions help you determine whether AI is a tactical experiment to find where it would be most effective or a strategic infrastructure investment needed to solve a budget-draining enterprise problem.

With this understanding, you can decide whether to integrate a ready-made solution, partner with AI vendors, or build an AI solution in-house from scratch.

Architecture strategy comparison

| Approach | Initial investment | Ongoing costs | Control level | Time to value | Risk profile |
|---|---|---|---|---|---|
| Custom development | High ($500,000–$2 million) | High (30–40% annually) | Maximum | 12–24 months | High technical risk |
| Strategic partnership | Medium ($100,000–$500,000) | Medium (15–25% annually) | Shared | 6–12 months | Medium implementation risk |
| Commercial platform | Low ($50,000–$200,000) | Low-medium (10–20% annually) | Limited | 3–6 months | Low technical risk, high vendor risk |
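Upfront price alone hides how the options diverge over time. A minimal sketch that projects cumulative cost over several years from the ranges in the table above, assuming the "ongoing costs" percentages are a yearly share of the initial investment (the table doesn't say what they are a percentage of, so treat this as our interpretation):

```python
# Ranges from the architecture strategy table: (initial low, high), (ongoing rate low, high).
# Assumption: the ongoing rate is charged each year on the initial investment.
APPROACHES = {
    "Custom development": ((500_000, 2_000_000), (0.30, 0.40)),
    "Strategic partnership": ((100_000, 500_000), (0.15, 0.25)),
    "Commercial platform": ((50_000, 200_000), (0.10, 0.20)),
}


def multi_year_tco(initial: tuple, ongoing_rate: tuple, years: int) -> tuple:
    """Return (low, high) cumulative cost: initial + years * rate * initial."""
    low = initial[0] * (1 + ongoing_rate[0] * years)
    high = initial[1] * (1 + ongoing_rate[1] * years)
    return low, high


for name, (initial, rate) in APPROACHES.items():
    low, high = multi_year_tco(initial, rate, years=3)
    print(f"{name}: ${low:,.0f} to ${high:,.0f} over 3 years")
```

Changing `years` shows how ongoing costs come to dominate the initial investment for custom builds, which is why the time horizon matters as much as the sticker price.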

Each approach carries a different cost profile and TCO. A ready-made solution is, of course, cheaper upfront than custom development. However, don’t focus only on what’s cheaper; consider how AI costs align with your business goals. For instance, you may find that solving your current business problem requires a significant upfront investment, but the potential AI ROI justifies it.

The decision tree lays out the pros and cons of each option, along with the chain of questions that leads to the final decision.

Out-of-the-box purchases work for experiments. Long-term goals require trusted partnerships or in-house expertise.
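The rule of thumb above can be expressed as a tiny function. This is a deliberately simplified sketch: the `has_in_house_ai_team` flag is our hypothetical stand-in for "in-house expertise", and a real decision would also weigh the budget-overrun threshold and KPIs discussed earlier.

```python
from enum import Enum


class Approach(Enum):
    BUY = "Commercial platform"
    PARTNER = "Strategic partnership"
    BUILD = "Custom development"


def recommend_approach(is_long_term: bool, has_in_house_ai_team: bool) -> Approach:
    """Simplified reading of the rule of thumb.

    Experiments -> buy off the shelf. Long-term goals -> build if you already
    have in-house AI expertise, otherwise work with a trusted partner.
    """
    if not is_long_term:
        return Approach.BUY
    return Approach.BUILD if has_in_house_ai_team else Approach.PARTNER


print(recommend_approach(is_long_term=True, has_in_house_ai_team=False).value)
```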

Once you’ve chosen a direction, you can analyze each TCO component in this article with a clear view of which costs you control and which depend on your partners or vendors.

#1. AI infrastructure stack: Training, inference, and hosting costs

Every respondent to IBM’s study said they had cancelled or postponed at least one of their GenAI projects due to rising compute expenses. To avoid this in the future, 73% of respondents plan to implement centralized monitoring solutions to analyze every aspect of AI computing.

But why do AI infrastructure costs spiral out of control? Because they exhibit non-linear scaling patterns:

- Model drift: performance degrades over time, requiring retraining and revalidation that add, on average, 15–25% in compute overhead.

- Parameter growth and memory scaling: large language models with 70B+ parameters need 140 GB+ of GPU memory just to hold their weights for inference at 16-bit precision (2 bytes per parameter).

- Data decay: outdated or low-quality data inflates processing and storage costs.

- Hallucination control: continuous monitoring, evaluation, and guardrails add operational load.

- Hardware wear and capacity limits: GPU utilization declines as workloads scale and diversify, incurring 20–40% utilization penalties compared to dedicated deployments.

Each of these factors adds new layers of compute, storage, and monitoring demands that compound month after month.
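The 140 GB figure for 70B-parameter models is simple arithmetic: parameters × bytes per parameter. A minimal sketch of that estimate, assuming weights dominate memory and reserving a hypothetical 20% overhead for KV cache, activations, and runtime buffers (the real overhead depends on batch size and context length):

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for the model weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus(num_params: float, gpu_memory_gb: float, precision: str = "fp16",
             overhead: float = 0.2) -> int:
    """GPUs needed to hold the weights plus a hypothetical `overhead` fraction
    reserved for KV cache, activations, and runtime buffers."""
    needed = weight_memory_gb(num_params, precision) * (1 + overhead)
    return math.ceil(needed / gpu_memory_gb)


for precision in ("fp16", "int8", "int4"):
    print(precision, weight_memory_gb(70e9, precision), "GB for weights,",
          min_gpus(70e9, gpu_memory_gb=80, precision=precision), "x 80 GB GPUs")
```

The same arithmetic shows why quantization is a cost lever: halving bytes per parameter roughly halves the GPU memory, and often the number of GPUs, needed to serve the model.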

Where and how you host your models (cloud, on-premises, or hybrid), and how you split training and inference workloads between those environments, determine the long-term cost of implementing AI.

Model training vs. inference costs

Google spends 10 or even 20 times more on inference than on model training. The gap is so wide because training is a bounded, upfront investment: you pay for enough computing power to process the training datasets, and that spending ends when the training run does.

During inference, costs accumulate and increase over time. The more people use the model and the more outputs it produces, the more computing power it needs, and eventually, the more money the company pays.
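To see how quickly recurring inference spend overtakes a one-off training bill, you can accumulate monthly inference costs until they pass the training cost. A minimal sketch with hypothetical inputs, assuming inference spend grows at a constant monthly rate as usage rises:

```python
from typing import Optional


def months_until_inference_exceeds_training(
    training_cost: float,
    first_month_inference: float,
    monthly_growth: float,
    max_months: int = 120,
) -> Optional[int]:
    """First month in which cumulative inference spend exceeds a one-off training cost.

    Inference spend starts at `first_month_inference` and grows by
    `monthly_growth` (e.g. 0.10 = 10%) each month as usage increases.
    Returns None if the crossover doesn't happen within `max_months`.
    """
    cumulative = 0.0
    monthly = first_month_inference
    for month in range(1, max_months + 1):
        cumulative += monthly
        if cumulative > training_cost:
            return month
        monthly *= 1 + monthly_growth
    return None


# Hypothetical figures: a $1M training run, $50K of inference in month one,
# usage growing 10% per month.
print(months_until_inference_exceeds_training(1_000_000, 50_000, 0.10))
```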

The cost of training and inference for your business depends on the model type, the number of parameters, and the size of your data. To reduce training costs, you can purchase pre-trained models and expand their capabilities with enterprise knowledge bases based on retrieval-augmented generation (RAG).

You can’t skip inference, since the model has to run in production within your organization. But you can reduce its cost by choosing where to host your system.

Cloud vs. on-premises model hosting

Enterprises can host AI solutions in the cloud or on-premises, each with distinct cost implications. Platforms like Amazon Bedrock, Azure AI, and Google Vertex AI simplify deployment by providing managed infrastructure and ready access to pretrained models.

Cloud hosting reduces setup complexity but introduces unpredictable expenses. While spot instances make training and retraining more affordable, inference workloads often drive “cloud bill shocks”, with costs spiking 5–10x due to idle GPU instances or overprovisioning. As Christian Khoury, CEO of EasyAudit, puts it: “Inference workloads are the real cloud tax; companies jump from $5K to $50K a month overnight.”

A hybrid approach often balances cost and control: running training in the cloud and inference on-premises. Khoury notes: “We’ve helped teams shift to colocation for inference using dedicated GPU servers that they control. It’s not sexy, but it cuts monthly infra spend by 60–80%.”

As of 2025, renting an NVIDIA H100 GPU in the cloud costs $0.58–$8.54 per hour or $5,000–$75,000 per year if used continuously, rivaling the $25,000–$30,000 purchase price of on-premises hardware.

However, on-premises setups require spending on power, cooling, and maintenance, which can add 20–40% to ownership costs unless utilization stays high. The larger and more complex the model, the higher the end-of-the-month bill. But the obvious advantage is that you’re in control of how many GPUs to buy, how many workloads to run, and when to stop or completely change direction without any extra fees.
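The rent-versus-buy trade-off above reduces to a break-even calculation. A minimal sketch, assuming (as a simplification) that power, cooling, and maintenance are a one-off uplift of `ownership_overhead` on the purchase price; the $27,500 price, $2.50 hourly rate, and 60% utilization in the example are hypothetical mid-range picks, not quotes:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def annual_rental_cost(hourly_rate: float, utilization: float = 1.0) -> float:
    """Cloud cost for one GPU rented for `utilization` share of the year."""
    return hourly_rate * HOURS_PER_YEAR * utilization


def breakeven_years(purchase_price: float, hourly_rate: float,
                    utilization: float = 1.0, ownership_overhead: float = 0.3) -> float:
    """Years of renting that would cost as much as owning one GPU.

    Simplification: power, cooling, and maintenance are modelled as a one-off
    `ownership_overhead` uplift (20-40% per the text) on the purchase price.
    """
    if utilization <= 0:
        raise ValueError("utilization must be positive")
    owned_cost = purchase_price * (1 + ownership_overhead)
    return owned_cost / annual_rental_cost(hourly_rate, utilization)


# The text's $0.58-$8.54/hour range, rented around the clock for a year.
print(round(annual_rental_cost(0.58)), round(annual_rental_cost(8.54)))
print(f"{breakeven_years(27_500, 2.50, utilization=0.6):.1f} years to break even")
```

Lower utilization pushes the break-even point further out, which matches the text’s caveat that on-premises savings depend on keeping utilization high.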

Cloud vs. on-premises vs. hybrid architecture economics

| Deployment model | Training workloads | Inference workloads | Data governance | Scalability | Total cost impact |
|---|---|---|---|---|---|
| Public cloud | Optimal for burst capacity | High per-request costs | Limited control | Unlimited | 2–4x premium for production scaling |
| On-premises | High capital investment | Predictable operating costs | Maximum control | Hardware limited | 40–60% lower at high utilization |
| Hybrid architecture | Cloud training and edge inference | Optimized cost structure | Balanced control | Selective scaling | 30–50% cost optimization |

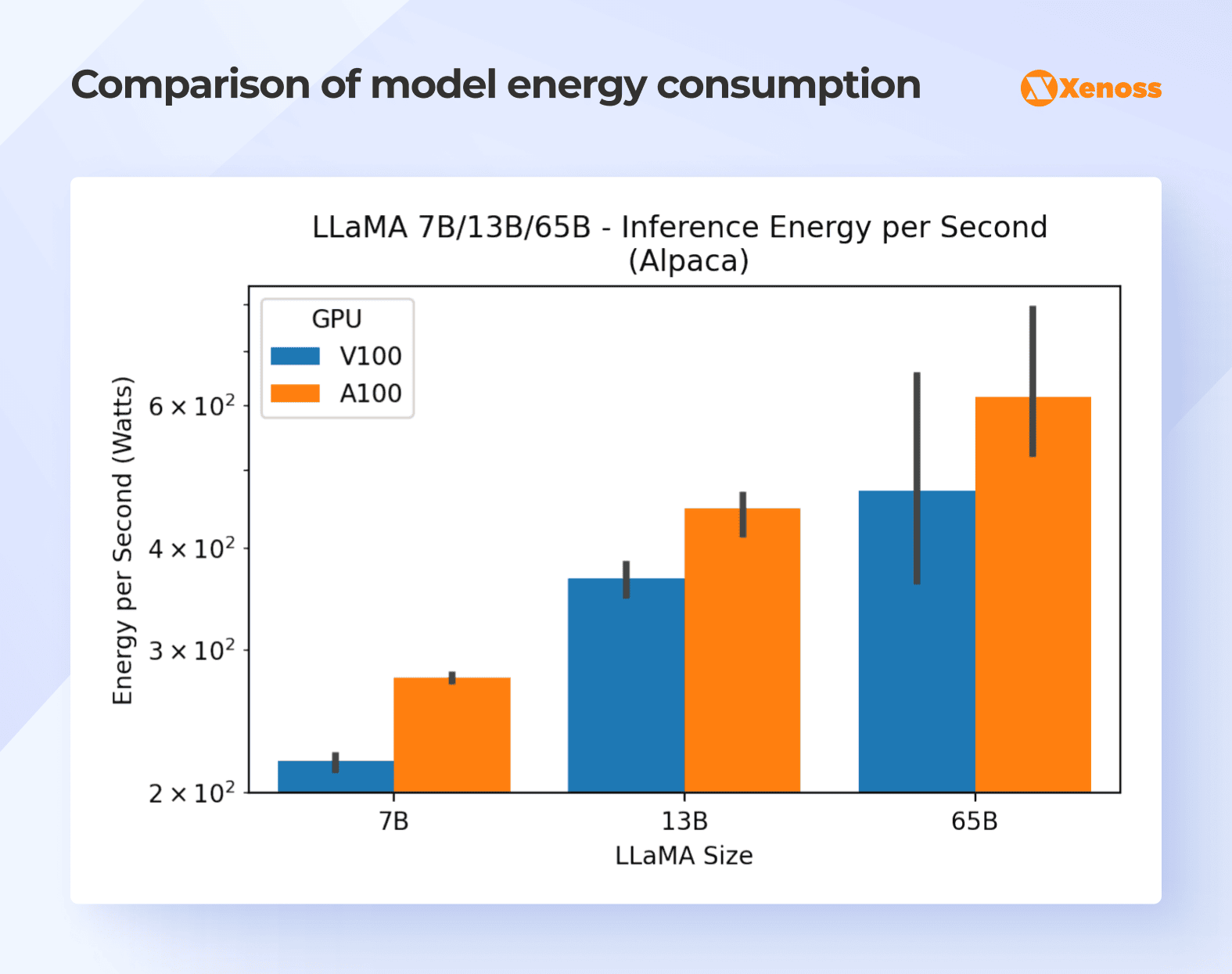

Research comparing LLaMA models (7B, 13B, 65B) found that less powerful GPUs (like V100s) draw less power but take longer to complete inference, so they don’t necessarily use less energy per request. Real efficiency comes from optimizing both energy use and model performance.
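The point about slower GPUs comes down to energy = power × time. A minimal sketch with hypothetical power and latency figures (illustrative numbers, not measurements from the cited research):

```python
def energy_per_request_wh(avg_power_watts: float, latency_seconds: float) -> float:
    """Energy for one inference request: power (W) x time (s), converted to Wh."""
    return avg_power_watts * latency_seconds / 3600


# Hypothetical numbers: a lower-power GPU that is slower versus a
# higher-power GPU that finishes the same request sooner.
slow_gpu = energy_per_request_wh(avg_power_watts=250, latency_seconds=4.0)
fast_gpu = energy_per_request_wh(avg_power_watts=400, latency_seconds=1.5)
print(f"slower GPU: {slow_gpu:.3f} Wh/request, faster GPU: {fast_gpu:.3f} Wh/request")
```

With these inputs the lower-wattage card uses more energy per request, because its longer runtime outweighs its lower power draw.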

#2. Data engineering costs: From manual to automated data processing

The more autonomous you expect your AI system or agent to be, the more data it needs. Yet 49% of organizations view integration with enterprise data as the main bottleneck to AI scaling.

As a result, companies often fall into one of two extremes, as described in ISG research:

- Boil the ocean: large data transformation projects, costing millions of dollars, to update all data management systems and practices at once.

- Bypass the mess: use siloed, unprepared data pipelines to get the project going, but end up with tech debt.

Both extremes are costly and inefficient. The balance, as always, lies in between: data transformation is essential, but only to the extent a particular AI project and business problem require.

Collection, cleaning, and labeling at enterprise scale

Data preparation accounts for up to 13.2% of AI project costs, and 43% of chief data officers (CDOs) see data quality, completeness, and readiness as the main obstacles to AI adoption.

Here’s a cost breakdown of different data preparation stages with real-life use cases:

| Data requirement | Description | Real-life use cases | Estimated costs |
|---|---|---|---|
| Data collection & integration | Aggregating information from fragmented internal systems and external APIs to build training datasets. Often includes custom pipeline development, API connectors, and ETL workflows. | A logistics company integrating IoT sensor feeds, warehouse ERP, and shipment tracking APIs into a unified lakehouse for predictive maintenance. | $150K–$500K per year, depending on the number of data sources and pipeline complexity. |
| Data cleaning & preprocessing | Preparing raw data for analysis, removing duplicates, resolving inconsistencies, enriching metadata, and ensuring schema compatibility. | A retail chain cleaning millions of sales and inventory records to train demand forecasting models. | $25K–$30K per data analyst annually; 10–20% of total AI budget on preprocessing efforts. |
| Data labeling & annotation | Tagging text, image, or video data for supervised training or fine-tuning. Costs vary by complexity, domain expertise, and quality assurance. | A healthcare company labeling 200,000 MRI scans for a diagnostic model. | $0.05–$5 per label (simple → complex); $5K–$10K per project via SaaS platforms like Labelbox, Scale AI, or Labellerr. |
| Data storage & lifecycle management | Storing structured and unstructured data (including model inputs/outputs) and applying tiered retention policies to control cost. | A pharma company managing high-resolution microscopy and genomic datasets for drug-discovery models, requiring fast retrieval and secure access controls. | $23–$80 per TB/month (cloud storage) |
| Data governance & compliance | Implementing access control, lineage tracking, and regulatory compliance (GDPR, HIPAA, AI Act). Requires metadata management and policy enforcement. | A financial institution using Databricks Unity Catalog and Collibra to ensure customer data traceability and model audit readiness. | $100K–$300K annually for governance tools and personnel |
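Per-label pricing scales linearly with volume, so the spread between simple and expert labels dominates the budget. A minimal sketch applying the per-label range from the table to the MRI example; `labels_per_item` and `qa_overhead` (extra review or consensus passes) are hypothetical parameters, not figures from the source:

```python
def labeling_cost_range(num_items: int, low_per_label: float, high_per_label: float,
                        labels_per_item: int = 1, qa_overhead: float = 0.0) -> tuple:
    """(low, high) annotation cost.

    labels_per_item: hypothetical number of labels each item needs.
    qa_overhead: hypothetical fraction added for review/consensus passes.
    """
    labels = num_items * labels_per_item
    factor = 1 + qa_overhead
    return labels * low_per_label * factor, labels * high_per_label * factor


# 200,000 MRI scans at $0.05-$5 per label, one label per scan, no QA uplift.
low, high = labeling_cost_range(200_000, 0.05, 5.00)
print(f"${low:,.0f} to ${high:,.0f}")
```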

Costs vary depending on the complexity of the use case and the maturity of the infrastructure. To control them, leaders are choosing synthetic data generation, automated labeling and data ingestion tools, data-quality monitoring systems, and data contract enforcement. Platforms like AWS Glue DataBrew can reduce data preparation time by up to 80%, freeing engineers to focus on model development rather than data cleanup.

By investing in automation and ongoing data-quality control, enterprises cut redundant labor and costs while strengthening the reliability of their AI models.

Data storage and governance

Storing multimodal big data (text, audio, image, and sensor streams) drives continuous spending on storage and compute. Each redundant pipeline increases the total cost of AI development, while poor data lifecycle management results in wasted storage and compliance risks.

Planning for a cost-efficient storage architecture starts with understanding data value over time. Not all data needs to live in the fastest or most expensive tier. Frequently accessed data should be stored in high-performance environments, while historical or low-priority data can be archived in lower-cost tiers.

To ensure AI/ML models have consistent access to relevant structured and unstructured data, enterprises often build consolidated repositories such as data lakes, data warehouses, or hybrid data lakehouses. These architectures simplify data access for analytics and AI pipelines while maintaining scalability.

For example, a global manufacturing company running predictive maintenance models implemented a tiered storage strategy using Amazon S3 and Glacier: real-time sensor data stayed in S3 Standard for instant access, while readings older than 90 days were automatically moved to S3 Glacier. This shift cut storage costs by over 60% without affecting model performance.
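A 90-day tiering policy like this is typically expressed as an S3 lifecycle rule. A minimal sketch, assuming a hypothetical `sensor-readings/` key prefix; the dict follows the shape accepted by boto3’s `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=...)`, but the sketch only builds it and does not call AWS. The cost helper uses the $23/TB hot-tier figure from the earlier table and a hypothetical $4/TB archive price, and it ignores retrieval fees and minimum storage durations:

```python
# Lifecycle rule: move objects under a (hypothetical) prefix to Glacier after 90 days.
# Shape matches boto3's s3_client.put_bucket_lifecycle_configuration(
#     Bucket=..., LifecycleConfiguration=LIFECYCLE_CONFIGURATION).
LIFECYCLE_CONFIGURATION = {
    "Rules": [
        {
            "ID": "archive-sensor-readings-after-90-days",
            "Filter": {"Prefix": "sensor-readings/"},  # hypothetical key prefix
            "Status": "Enabled",
            "Transitions": [{"Days": 90, "StorageClass": "GLACIER"}],
        }
    ]
}


def monthly_storage_cost(total_tb: float, archived_fraction: float,
                         hot_price_per_tb: float, archive_price_per_tb: float) -> float:
    """Blended monthly cost when `archived_fraction` of the data sits in the archive tier."""
    hot_tb = total_tb * (1 - archived_fraction)
    archived_tb = total_tb * archived_fraction
    return hot_tb * hot_price_per_tb + archived_tb * archive_price_per_tb


# Hypothetical inputs: 500 TB, 75% of it older than 90 days.
before = monthly_storage_cost(500, 0.0, 23.0, 4.0)
after = monthly_storage_cost(500, 0.75, 23.0, 4.0)
print(f"${before:,.0f} -> ${after:,.0f} per month ({1 - after / before:.0%} lower)")
```

How much a policy like this saves depends mostly on the share of data that ages past the threshold and on how often archived data has to be retrieved.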

| Architecture type | Cost structure | AI compatibility | Optimization potential |
|---|---|---|---|
| Data warehouse | High ETL processing overhead and rigid schema management | Limited support for unstructured data and constrained to batch processing | 20–30% cost reduction possible through warehouse automation, though scalability remains limited |
| Data lake | Storage-optimized but compute-intensive for large-scale data processing | Excellent support for multimodal and unstructured data with flexible schema evolution | 50–80% savings in storage costs are achievable through tiered storage and data processing optimization |
| Data lakehouse | Balanced storage and compute economics with built-in transactional capabilities | Native integration with ML workflows supporting both real-time and batch processing | Up to 30% reduction in data management costs through a unified architecture that eliminates redundant data movement |

#3. Model maintenance and retraining: The hidden tax of model drift

AI systems require continuous lifecycle management to maintain accuracy, ensure regulatory compliance, and optimize computational efficiency.

Model drift and performance degradation

Over time, AI models tend to lose accuracy as real-world data drifts away from the data they were initially trained on. This divergence, known as model drift, causes models to misinterpret new patterns, “forget” previously learned relationships, and deliver unreliable predictions. Left unchecked, drift can quietly erode ROI by increasing false outputs, compliance risks, and customer dissatisfaction.

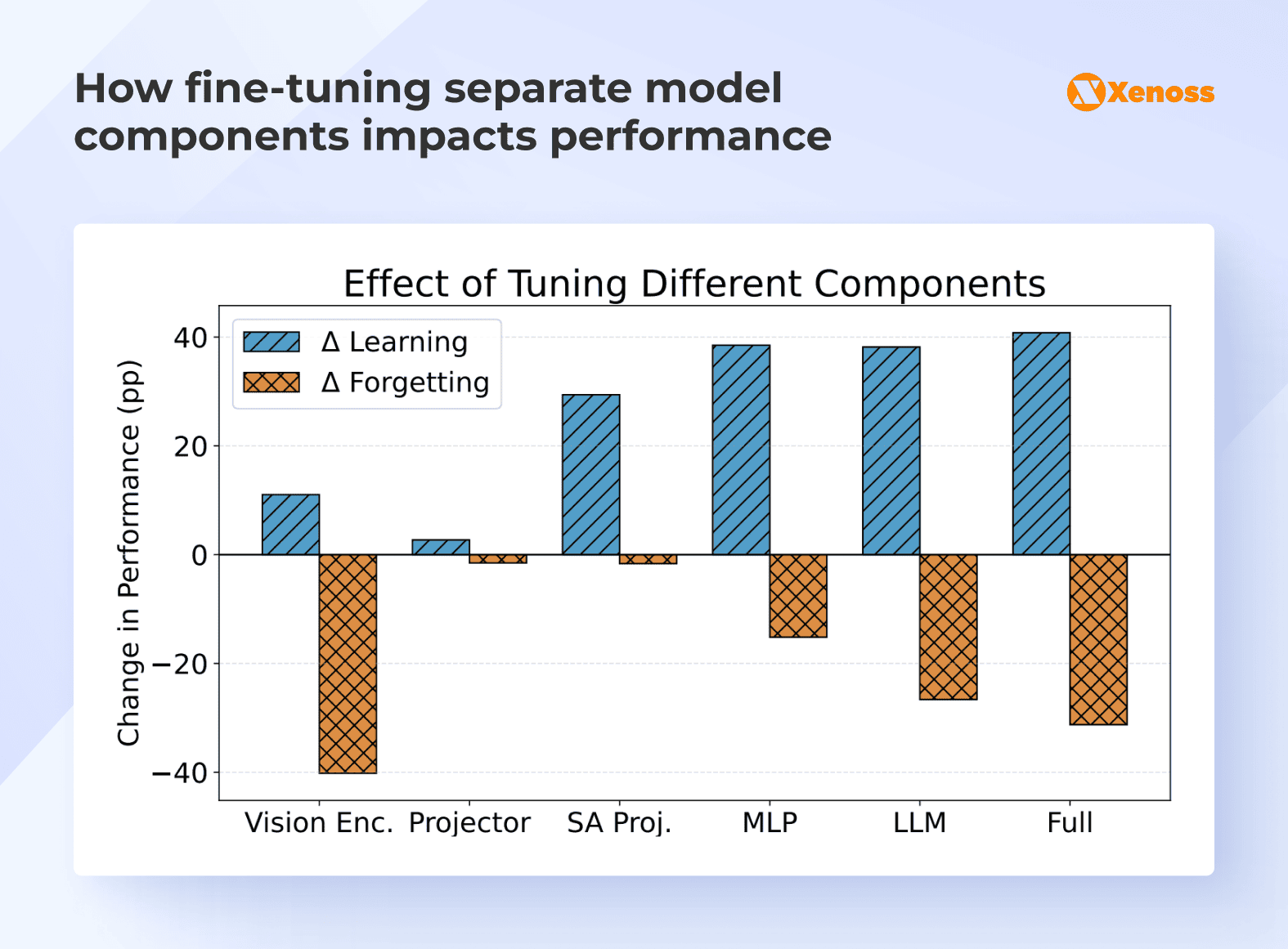

One way to mitigate model drift is to fine-tune only some parts of the model rather than invest heavily in full retraining. The chart shows what happens when you fine-tune different parts of an AI model:

- When you retrain the entire model (far right), it learns the new task well but forgets much of what it knew before.

- When you fine-tune only specific layers, such as the self-attention projector (SA Proj.), you still achieve substantial learning gains (+30 points) while losing very little of the original performance.

In business terms, this means not all model updates are worth the cost. Full retraining delivers short-term gains but causes “AI amnesia,” forcing extra rounds of validation, retraining, and maintenance later. Targeted fine-tuning, on the other hand, preserves past accuracy and keeps infrastructure and compute costs lower.

Version control and rollback infrastructure

Maintaining version control for AI models can add another 5-10% to annual maintenance costs. Organizations need a robust MLOps infrastructure to:

- Track model versions and their performance metrics

- Store model artifacts and training configurations

- Enable quick rollbacks when new versions underperform

- Manage A/B testing between model versions

- Document changes and maintain audit trails

Tools like MLflow, Weights & Biases, and vendor-specific solutions (AWS SageMaker, Azure ML, Google Vertex AI) provide these capabilities but require dedicated resources for setup and maintenance.
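To make the version-tracking and rollback requirements concrete, here is a toy in-memory registry. It is a hypothetical sketch, not the API of MLflow or any other tool: it tracks versions with their metrics and artifact locations, keeps a promotion history so production can roll back, and writes an audit log. The artifact paths and the 2-point `max_drop` threshold are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str            # where weights and training config live
    metrics: Dict[str, float]    # offline evaluation results


@dataclass
class ModelRegistry:
    """Toy in-memory registry: version tracking, promotion, rollback, audit log."""
    versions: Dict[int, ModelVersion] = field(default_factory=dict)
    promotions: List[int] = field(default_factory=list)  # production history
    audit_log: List[str] = field(default_factory=list)

    @property
    def production(self) -> Optional[int]:
        return self.promotions[-1] if self.promotions else None

    def register(self, artifact_uri: str, metrics: Dict[str, float]) -> int:
        version = len(self.versions) + 1
        self.versions[version] = ModelVersion(version, artifact_uri, metrics)
        self.audit_log.append(f"registered v{version} {metrics}")
        return version

    def promote(self, version: int) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown version v{version}")
        self.promotions.append(version)
        self.audit_log.append(f"promoted v{version}")

    def rollback(self) -> int:
        if len(self.promotions) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        bad = self.promotions.pop()
        self.audit_log.append(f"rolled back v{bad} -> v{self.production}")
        return self.promotions[-1]

    def rollback_if_underperforming(self, live_metric: float, metric: str = "accuracy",
                                    max_drop: float = 0.02) -> bool:
        """Roll back when the live metric falls more than `max_drop` below offline."""
        if self.production is None or len(self.promotions) < 2:
            return False
        current = self.versions[self.production]
        if current.metrics[metric] - live_metric > max_drop:
            self.rollback()
            return True
        return False


registry = ModelRegistry()
v1 = registry.register("models/churn/v1", {"accuracy": 0.91})  # hypothetical paths
registry.promote(v1)
v2 = registry.register("models/churn/v2", {"accuracy": 0.93})
registry.promote(v2)
registry.rollback_if_underperforming(live_metric=0.88)  # v2 underperforms live
print(registry.production, registry.audit_log[-1])
```

Managed tools add what this sketch leaves out (artifact storage, lineage, access control, A/B routing), which is where the setup and maintenance effort mentioned above goes.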

Security updates and vulnerability management

As models move into production, every layer of the stack becomes an attack surface: APIs, data pipelines, vector databases, and model endpoints.

The hidden risk lies in how often AI models interact with sensitive or proprietary data. 99% of enterprises inadvertently expose confidential data to AI tools, primarily through third-party integrations and shadow AI usage. Fixing such leaks involves not only technical remediation but also incident response, re-training security teams, and updating governance policies, all of which add both direct and opportunity costs.

Each update, patch, or access policy review adds operational overhead but also reduces the risk of multimillion-dollar compliance fines or reputational damage.

Forward-thinking enterprises are now integrating continuous AI security monitoring, combining model-level access control, encrypted inference, and real-time anomaly detection, to keep systems both compliant and resilient without derailing ROI.

#4. Talent acquisition and team training: The $200K+ per specialist reality

Human capital is among the most important factors in AI TCO, encompassing both the technical specialists who architect and maintain AI systems and the end users who integrate AI capabilities into business workflows.

Beyond salary: The full cost of AI teams

According to Levels.fyi data, compensation for AI specialists in the US by role and experience level is as follows:

- Entry-level AI engineers: $150,000-$200,000

- Mid-level AI engineers (3-5 years): $200,000–$300,000

- Senior AI engineers (7-10 years): $300,000–$500,000

- Principal/Staff AI researchers: $500,000–$1,000,000

- Applied research scientists: $250,000–$350,000

Beyond direct salaries, the true cost of AI teams includes recruitment premiums, retention bonuses, and the ongoing cost of upskilling talent to keep pace with rapidly evolving frameworks and infrastructure.

The competition for senior and applied research talent drives companies to offer equity packages, relocation support, and signing bonuses that can add another 20–30% to total annual spend per employee.

Add to that the cost of turnover, which can reach 50–60% of annual salary when accounting for recruitment, onboarding, and lost productivity. For smaller firms, maintaining a full in-house AI department may be unsustainable without clear ROI metrics or external support partners.
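Salary bands understate what a specialist actually costs. A minimal sketch of a fully loaded annual cost per employee, using the 20–30% premium and 50–60% turnover-cost ranges from the text; the attrition probability is a hypothetical input you would replace with your own retention data:

```python
def fully_loaded_annual_cost(base_salary: float, premium_rate: float = 0.25,
                             annual_attrition: float = 0.0,
                             turnover_cost_rate: float = 0.55) -> float:
    """Base pay + recruitment/retention premiums + expected turnover cost.

    premium_rate: equity, bonuses, relocation (20-30% per the text).
    annual_attrition: hypothetical probability the person leaves this year.
    turnover_cost_rate: replacement cost as a share of salary (50-60% per the text).
    """
    premiums = base_salary * premium_rate
    expected_turnover = base_salary * annual_attrition * turnover_cost_rate
    return base_salary + premiums + expected_turnover


# A mid-level engineer at $250K with a hypothetical 15% chance of leaving.
print(f"${fully_loaded_annual_cost(250_000, annual_attrition=0.15):,.0f}")
```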

As a result, many enterprises now balance internal expertise with strategic AI partners, outsourcing model optimization, MLOps, or compliance work while retaining only core AI leadership roles in-house. This hybrid staffing model cuts operational costs while preserving technical ownership.

Change management and team training

35% of organizations plan to invest in employee training as a future priority to help employees use AI more efficiently. Expenses on team training span the following areas:

- Department-specific training. The Workplace AI Institute offers courses for different business functions at $498 each (sometimes discounted by 50%). Assuming one course per participant, training 10 teams of about 10 people each costs around $50,000.

- Executive leadership training. The Oxford Management Centre offers a 10-day course on AI literacy and strategic planning for C-suite executives at $11,900.

- Cross-departmental integration. AI high performers are 3 times more likely than less progressive enterprises to redesign workflows to maximize AI value. Redesign can involve hiring new people, integrating human-in-the-loop processes, automating tasks, and retraining teams. Overall, costs for cross-departmental AI integration can range from $150,000 to $500,000 (depending on AI system complexity and headcount).

- Custom development of AI training materials. To increase AI adoption, you can invest $100,000 – $300,000 in custom training software with gamified or interactive components.

Investments in change management and team training initiatives pay off within 6-12 months as teams get up to speed with AI tools, improve productivity, and contribute to increased company profits.

#5. AI compliance and governance: The 40-80% cost multiplier for regulated industries

Regulations such as HIPAA, GDPR, CCPA, PCI DSS, ISO 27001, and the EU AI Act require that every stage of the AI lifecycle (from data collection and storage to model inference and human oversight) be transparent, traceable, and well-documented. This means extra layers of governance: maintaining data lineage, setting access permissions, logging model decisions, and ensuring the right to explanation for automated outputs.

Here’s how violation types and maximum fines compare across GDPR, the EU AI Act, and PCI DSS:

| Regulation | Violation type | Maximum fines |
|---|---|---|
| GDPR | Breach of data protection obligations (consent, data processing, security, etc.) | Up to €20 million or 4% of global annual turnover, whichever is higher |
| EU AI Act | Non-compliance with high-risk AI obligations (e.g., lack of transparency, risk management, or human oversight) | Up to €15 million or 3% of global annual turnover, whichever is higher |
| EU AI Act | Use of prohibited AI systems (e.g., social scoring, manipulative surveillance) | Up to €35 million or 7% of global annual turnover, whichever is higher |
| PCI DSS | Non-compliance with payment card data security standards, or breach by a non-compliant merchant | $5,000–$100,000 per month, escalating with time |

To minimize regulatory risk, invest early in a unified AI governance framework that balances transparency, data protection, and human oversight. It’s far cheaper to prevent a compliance breach than to pay for one, especially given penalties that can reach up to 7% of global turnover.
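The "fixed amount or percentage of turnover, whichever is higher" rule means the exposure grows with company size. A minimal sketch of the ceiling calculation using the caps from the table above; the two turnover values are hypothetical, and the sketch ignores special rules such as the lower caps that apply to SMEs under the AI Act:

```python
def max_fine(annual_turnover: float, fixed_cap: float, turnover_share: float) -> float:
    """Upper bound of a 'fixed amount or % of turnover, whichever is higher' fine."""
    return max(fixed_cap, annual_turnover * turnover_share)


# Fine ceilings: (fixed cap in EUR, share of global annual turnover).
CAPS = {
    "GDPR": (20_000_000, 0.04),
    "EU AI Act (high-risk obligations)": (15_000_000, 0.03),
    "EU AI Act (prohibited practices)": (35_000_000, 0.07),
}

for turnover in (100_000_000, 2_000_000_000):  # hypothetical company sizes
    for regulation, (cap, share) in CAPS.items():
        print(f"turnover EUR {turnover:,}: {regulation} "
              f"max EUR {max_fine(turnover, cap, share):,.0f}")
```

For the smaller company the fixed cap binds; for the larger one the turnover percentage does.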

#6. Complexity of AI integration into legacy systems

Enterprises still run many legacy systems that have operated for decades and are too valuable to abandon for the sake of AI. Integrating modern AI with them is a real engineering challenge, and completely re-architecting legacy software can make your AI project bill skyrocket.

Rather than rip out core systems, Xenoss advocates for incremental and cost-efficient integration approaches:

- Hybrid architecture: Train models in the cloud while deploying inference on-premises to keep data close and latency low.

- Middleware layers: Use API gateways or a central AI middleware that links legacy systems and multiple AI services without altering core applications.

- Modular AI microservices: Build focused AI capabilities (e.g., document classification, anomaly detection) as independent modules that integrate via standard APIs and leave legacy logic untouched.

These incremental strategies are 2–3x more cost-efficient than full-stack modernization. Instead of spending millions to replace legacy systems, companies can integrate AI into existing systems in stages.
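The middleware and microservice patterns share one idea: put a thin layer between the legacy system and the AI module so neither has to change. A minimal sketch of that idea; every class here is hypothetical, and the keyword classifier is a placeholder for a real model exposed through its own API:

```python
from typing import Dict, Protocol


class LegacyInvoiceSystem:
    """Stand-in for a decades-old system whose interface we must not change."""

    def fetch_invoice_record(self, invoice_id: str) -> str:
        # Legacy systems often return fixed-format, delimited records.
        return f"{invoice_id}|ACME Corp|1200.00|OVERDUE"


class DocumentClassifier(Protocol):
    def classify(self, text: str) -> str: ...


class KeywordClassifier:
    """Placeholder for a real model served behind its own API."""

    def classify(self, text: str) -> str:
        return "collections" if "OVERDUE" in text else "standard"


class AIMiddleware:
    """Links the legacy system to an AI module; neither side knows about the other."""

    def __init__(self, legacy: LegacyInvoiceSystem, classifier: DocumentClassifier):
        self.legacy = legacy
        self.classifier = classifier

    def classify_invoice(self, invoice_id: str) -> Dict[str, str]:
        raw = self.legacy.fetch_invoice_record(invoice_id)
        customer = raw.split("|")[1]
        return {"invoice_id": invoice_id, "customer": customer,
                "label": self.classifier.classify(raw)}


gateway = AIMiddleware(LegacyInvoiceSystem(), KeywordClassifier())
print(gateway.classify_invoice("INV-1042"))
```

Because the classifier is injected, you can swap in a better model later without touching the legacy code or the callers, which is what keeps staged integration cheaper than a rewrite.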

Measuring AI ROI: From short-term to long-term business impact

Once you know how much AI costs, the next question is whether it’s worth it. The only way to answer is through measurable ROI. Start with small, contained pilots and track how AI affects key financial, operational, and strategic metrics, from direct savings to decision-making speed.

| Category | Metric | What it measures |
|---|---|---|
| Financial | Direct savings | Reduction in labor, infrastructure, or operational costs after AI implementation |
| | Opportunity cost | Lost revenue or efficiency from delayed or failed AI adoption |
| | Capital efficiency | ROI on infrastructure spending (GPUs, cloud, data stack) |
| Operational | Time to First Value (TTFV) | How quickly AI delivers its first tangible outcome (e.g., faster reporting, reduced workload) |
| | Time to Value (TTV) | When AI achieves full operational impact across teams |
| | Automation rate | Share of workflows or tasks automated by AI |
| | Decision speed | Time saved in insights, reporting, and execution |
| | Error reduction | Drop in rework, compliance issues, or output errors |
| | Employee productivity | Increase in throughput or value delivered per employee |
| Strategic | Total Economic Impact (TEI) | Overall ROI factoring in flexibility, risk, and payback period |
| | Customer Lifetime Value (CLV) | Growth in long-term customer revenue due to personalization or retention |
| | Net Promoter Score (NPS) | Improvement in customer satisfaction and brand loyalty driven by AI-enabled experiences |

Together, these metrics form a balanced view of how AI delivers value, from short-term productivity to long-term revenue impact.
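For a pilot, the financial metrics usually start with two numbers: simple ROI and the payback month. A minimal sketch with hypothetical pilot figures, where savings ramp up as adoption grows:

```python
from typing import Iterable, Optional


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """(benefit - cost) / cost, e.g. 0.5 means a 50% return."""
    return (total_benefit - total_cost) / total_cost


def payback_month(upfront_cost: float, monthly_net_benefits: Iterable[float]) -> Optional[int]:
    """First month in which cumulative net benefit covers the upfront cost."""
    cumulative = 0.0
    for month, benefit in enumerate(monthly_net_benefits, start=1):
        cumulative += benefit
        if cumulative >= upfront_cost:
            return month
    return None  # not paid back within the period provided


# Hypothetical pilot: $120K upfront, monthly net savings ramping up with adoption.
savings = [5_000, 10_000, 15_000, 20_000, 20_000, 20_000, 20_000, 20_000]
print(payback_month(120_000, savings), f"{simple_roi(sum(savings), 120_000):.0%}")
```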

Bottom line

Most organizations focus on headline costs like API usage or GPU hours but overlook the dozens of small, recurring expenses that quietly erode ROI over time: data preparation that never ends, retraining cycles caused by model drift, cloud bills inflated by idle instances, or compliance audits that turn into six-figure line items.

This article shows that AI cost estimation becomes predictable once costs are visible. When you break AI spending into components, it becomes clear that the most expensive part isn’t always the technology itself.

Data engineering, security updates, and people-related costs often outweigh software licensing fees and API bills. For instance, data collection and cleaning can account for 10–15% or more of total AI budgets, while senior AI engineers command $300,000–$500,000 in annual compensation. Meanwhile, maintaining accuracy through retraining and vulnerability patching can add 15–30% to your operational costs each year.

Therefore, the real challenge is sustaining AI use rather than affording the technology. Xenoss provides a detailed estimate of your AI systems’ potential TCO and develops a strategic roadmap to help you stay on budget.