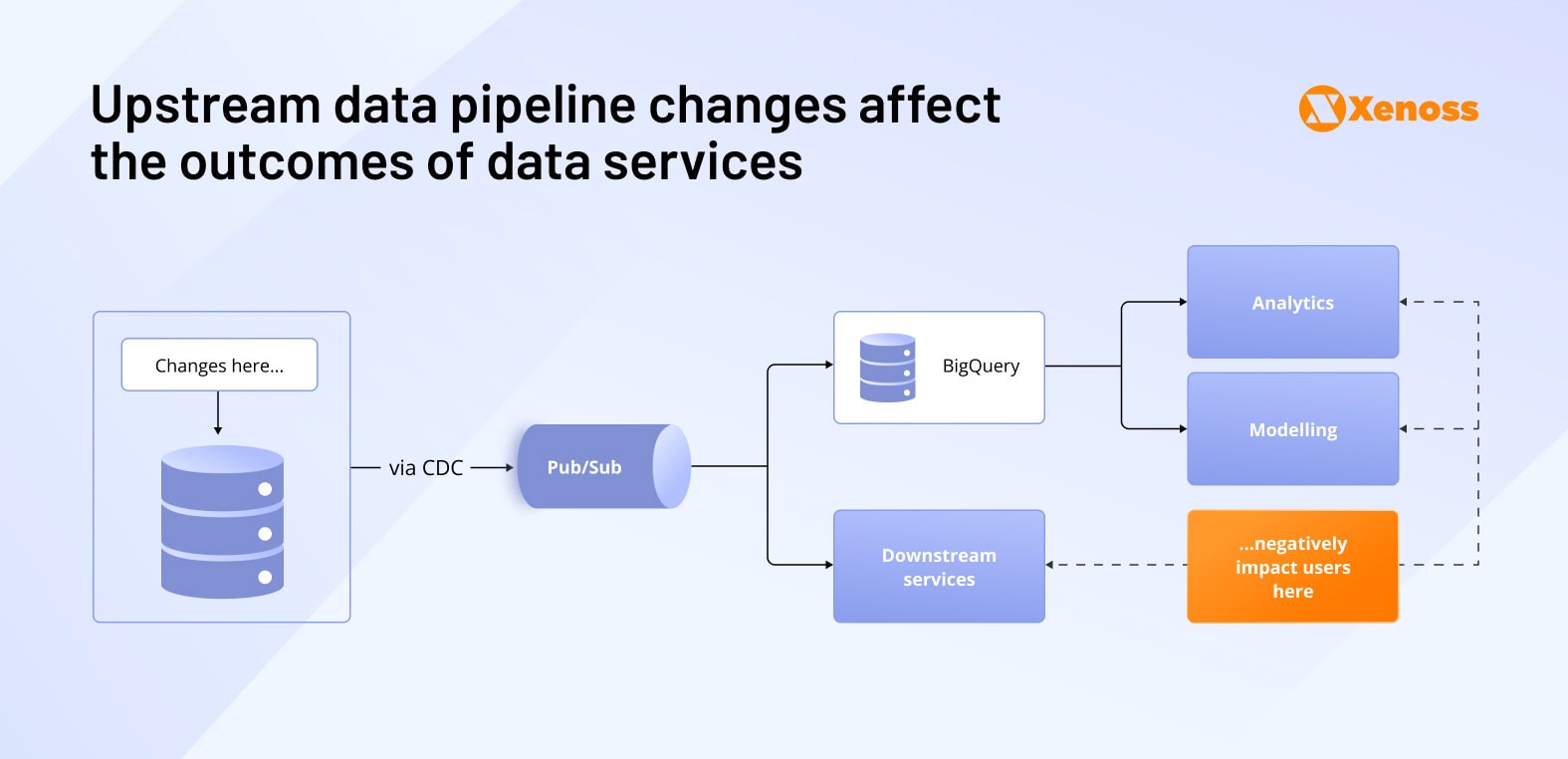

Data contracts solve a problem every data engineering team knows too well: upstream changes breaking downstream systems without warning. One team drops a database field, and suddenly three different dashboards stop working. Another team changes a data type, and machine learning models start producing garbage outputs.

The confusion around data contracts comes from mixed messaging. Some people treat schemas as contracts (they’re not). Others think data contracts are just governance frameworks with fancy names. Many engineers assume the whole concept is too theoretical to work in production environments.

What makes this worse is that modern data stacks are inherently fragile. Unlike the old days when everything lived in one database with clear SQL schemas, today’s systems involve dozens of microservices, streaming platforms, and data lakes. A small change anywhere can cascade through the entire pipeline.

This guide shows you how to implement data contracts. We’ll walk through schema registry setups that prevent breaking changes, automated testing frameworks that catch problems early, and notification systems that keep everyone informed when changes happen.

Data contracts: Why engineers are treating data like an API

Early data systems were simple. Teams stored everything in one database and used JDBC connections with SQL schemas to control access. Everyone knew exactly what data looked like and where to find it.

Then distributed systems changed everything. Organizations split data across microservices, data lakes, and streaming platforms. Suddenly, teams had dozens of data sources with no consistent way to validate quality or track changes between systems.

Andrew Jones, the data engineer who coined the term “data contract”, found that the most effective way to keep quality in check was to define rules at ingestion, so that poor-quality data never makes it into the pipeline.

He also observed that an upstream service change, such as dropping a field, can break an end-user dashboard far downstream, and that engineers are often unaware of how small tweaks affect the rest of the pipeline.

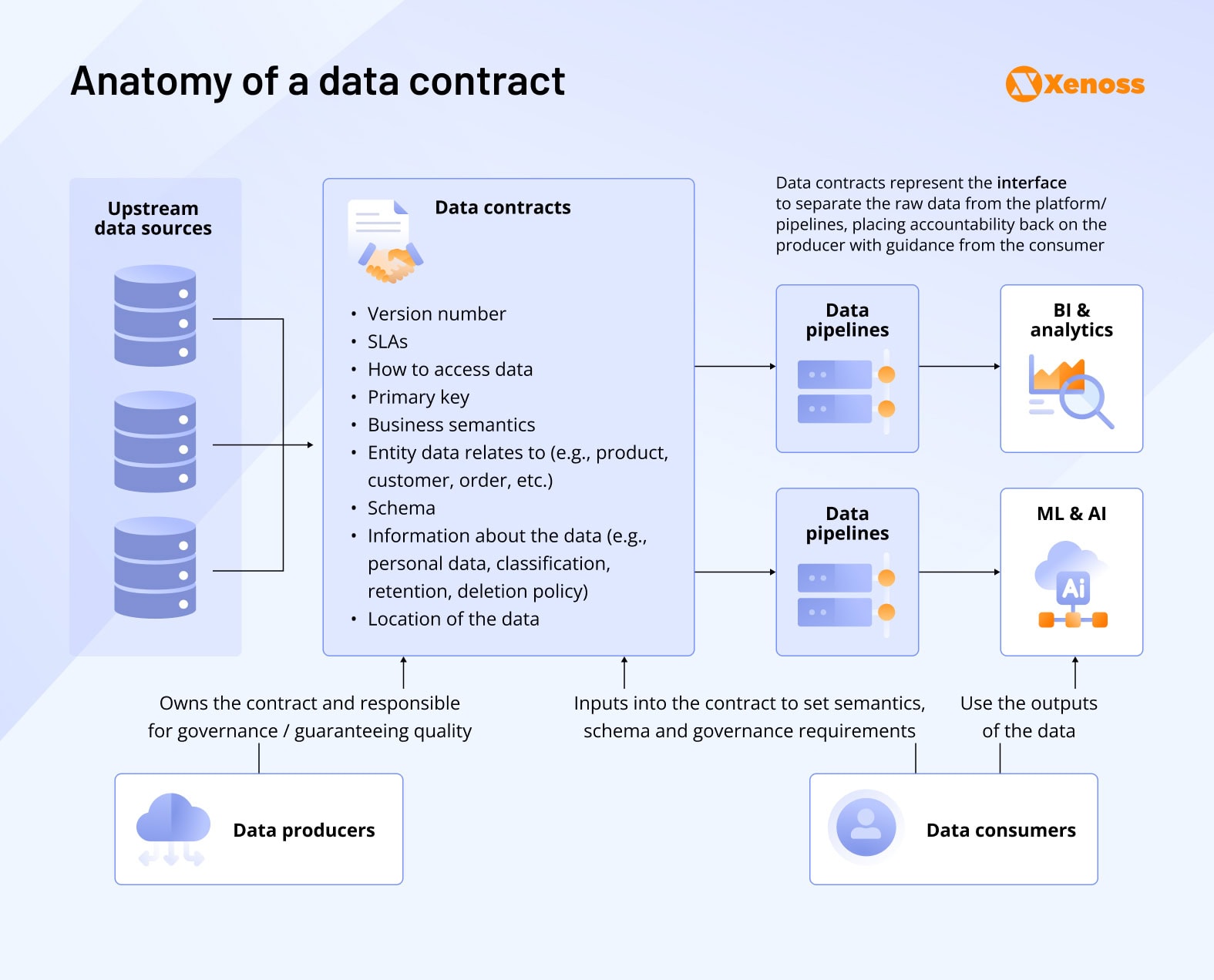

For Jones, a solution to this problem was creating a standardized interface between data generators and data consumers, a “data contract”. If the concept sounds familiar, that’s likely because this is exactly what APIs accomplish in software.

Early APIs, much like modern data services, were poorly documented, changed unexpectedly, and broke compatibility without warning. Over time, conventions, tools like Postman and Swagger, managed services like Amazon API Gateway, and the OpenAPI Specification standardized the API ecosystem and helped it mature.

Data contracts extend the same mindset to data.

In essence, they are agreements between data producers and consumers that describe how teams should use the data they ingest, define which data is exposed to each service, and set access rules.

Every data contract includes core components that define the relationship between producers and consumers:

- Version control for tracking schema evolution

- Service level agreements specifying uptime and latency requirements

- Access permissions determining who can read or modify data

- Primary keys establishing unique identifiers

- Data relationships connecting entities across systems

- Schema definitions specifying structure and types

- Metadata providing context and documentation

- Data location indicating storage and access paths
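
To make the list concrete, here is a minimal sketch of a contract modeled as a Python object. The class and attribute names (`DataContract`, `FieldSpec`, `readers`, and so on), the `orders` example, and the S3 path are hypothetical; real contracts are usually written in YAML against a published spec, which uses its own field names.

```python
from dataclasses import dataclass, field


@dataclass
class FieldSpec:
    name: str
    type: str                 # logical type, e.g. "string", "decimal", "timestamp"
    required: bool = True


@dataclass
class DataContract:
    # Hypothetical structure: attribute names are illustrative, not from any standard.
    name: str
    version: str                                   # version control for schema evolution
    schema: list[FieldSpec]                        # schema definitions
    primary_key: list[str]                         # unique identifiers
    location: str                                  # where the data lives
    sla: dict[str, float] = field(default_factory=dict)          # e.g. uptime, freshness
    readers: set[str] = field(default_factory=set)               # access permissions
    writers: set[str] = field(default_factory=set)
    relationships: dict[str, str] = field(default_factory=dict)  # column -> "entity.column"
    metadata: dict[str, str] = field(default_factory=dict)       # owner, description, ...

    def __post_init__(self) -> None:
        # A primary key may only reference columns the schema actually declares.
        missing = set(self.primary_key) - {f.name for f in self.schema}
        if missing:
            raise ValueError(f"primary key references unknown fields: {sorted(missing)}")

    def can_read(self, principal: str) -> bool:
        # Writers are implicitly allowed to read their own data.
        return principal in self.readers or principal in self.writers


orders_contract = DataContract(
    name="orders",
    version="1.2.0",
    schema=[
        FieldSpec("order_id", "string"),
        FieldSpec("customer_id", "string"),
        FieldSpec("amount", "decimal"),
        FieldSpec("coupon_code", "string", required=False),
    ],
    primary_key=["order_id"],
    location="s3://example-bucket/orders/",    # hypothetical path
    sla={"uptime_pct": 99.9, "freshness_minutes": 15},
    readers={"analytics-team"},
    writers={"checkout-team"},
    relationships={"customer_id": "customers.customer_id"},
    metadata={"owner": "checkout-team", "description": "One row per completed order"},
)
```

The `__post_init__` check shows the kind of internal consistency a contract can enforce on itself before any data flows: a primary key must point at declared columns.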

Because data contracts address this long-standing misalignment between producers and consumers, they are quickly becoming a standard practice in enterprise data engineering.

Netflix enforces data contracts to stay consistent across the company’s multiple pipelines. The engineering team shared that setting clear expectations helps teams “iterate at exceptional speed” and “deliver many launches on time”.

Miro also turned to data contracts to align internal analytics teams and reach agreements between data producers and consumers.

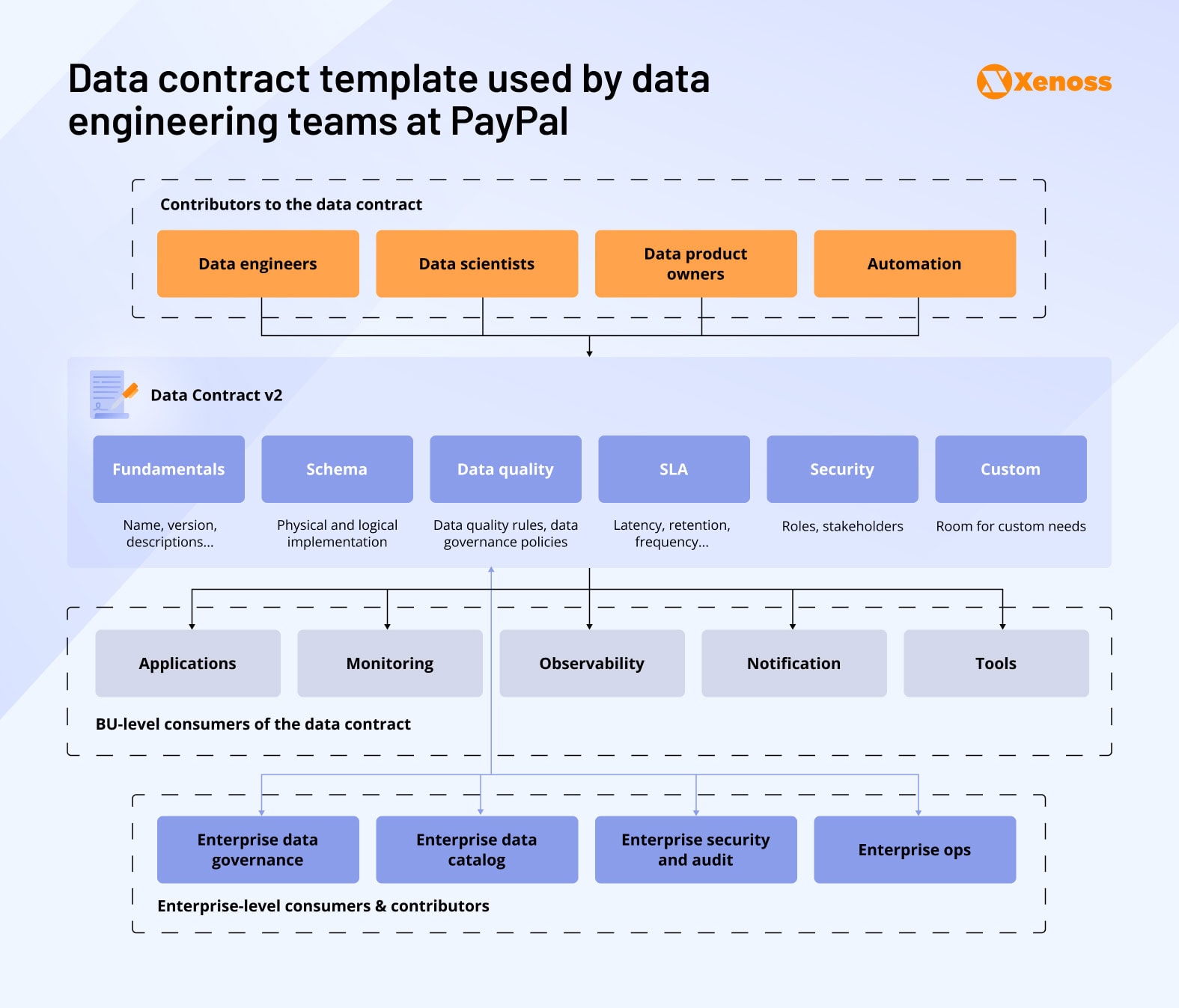

PayPal’s data mesh architecture is also governed by a set of data contracts that define user expectations and SLA details for each “data quantum”.

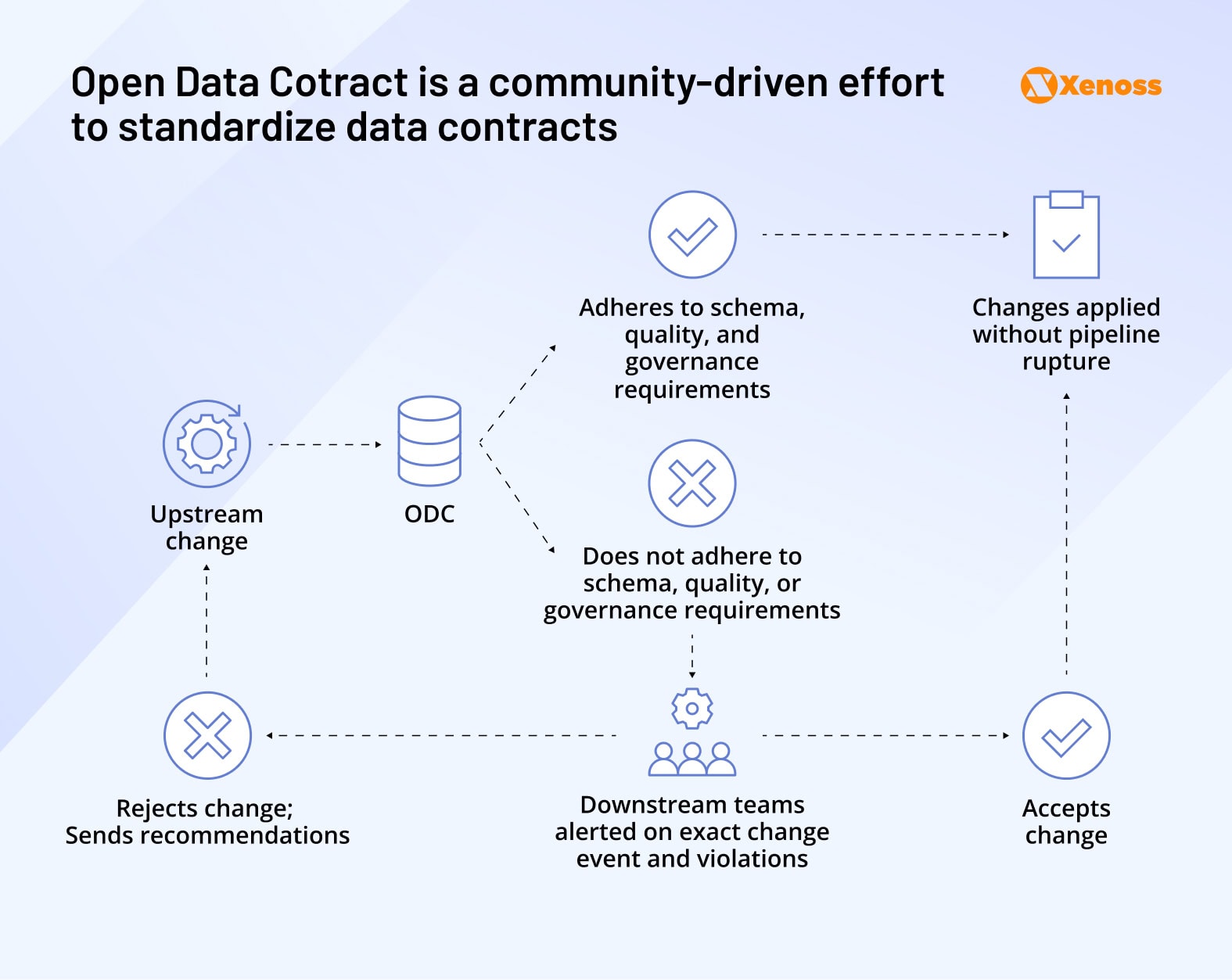

The standardization movement is accelerating. The Open Data Contract Standard (ODCS) initiative develops a community-driven specification that organizations can customize for their specific requirements, similar to how OpenAPI transformed API development.

Data contract enforcement: Schema registry implementation patterns

Event-driven architectures dominate modern data pipelines: when database state changes, the resulting events automatically trigger downstream services to process the new information. As systems grow, data volumes explode and schema management becomes a nightmare.

Schemas prevent chaos by standardizing data structures across services. They define exactly what each payload should contain, including field types, formats, and required elements. Without schemas, every service would need custom logic to handle data from every other service.
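
The sketch below illustrates what a schema pins down: field names, types, and which fields are required. It uses a hypothetical, hand-rolled schema format (`ORDER_SCHEMA`, `validate`) to stay dependency-free; in practice you would express the same rules in JSON Schema, Avro, or Protobuf and let a library do the checking.

```python
from typing import Any

# Hypothetical schema format: field name -> (expected Python type, required?)
ORDER_SCHEMA: dict[str, tuple[type, bool]] = {
    "order_id": (str, True),
    "amount": (float, True),
    "currency": (str, True),
    "coupon_code": (str, False),
}


def validate(payload: dict[str, Any], schema: dict[str, tuple[type, bool]]) -> list[str]:
    """Return a list of violations; an empty list means the payload conforms."""
    errors = []
    for name, (expected_type, required) in schema.items():
        if name not in payload or payload[name] is None:
            if required:
                errors.append(f"missing required field '{name}'")
            continue  # optional field absent: nothing else to check
        if not isinstance(payload[name], expected_type):
            errors.append(
                f"field '{name}' should be {expected_type.__name__}, "
                f"got {type(payload[name]).__name__}"
            )
    # Fields the schema does not declare are rejected too.
    for name in sorted(payload.keys() - schema.keys()):
        errors.append(f"unexpected field '{name}'")
    return errors
```

Every service that consumes orders can run the same check instead of writing its own parsing logic for each producer.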

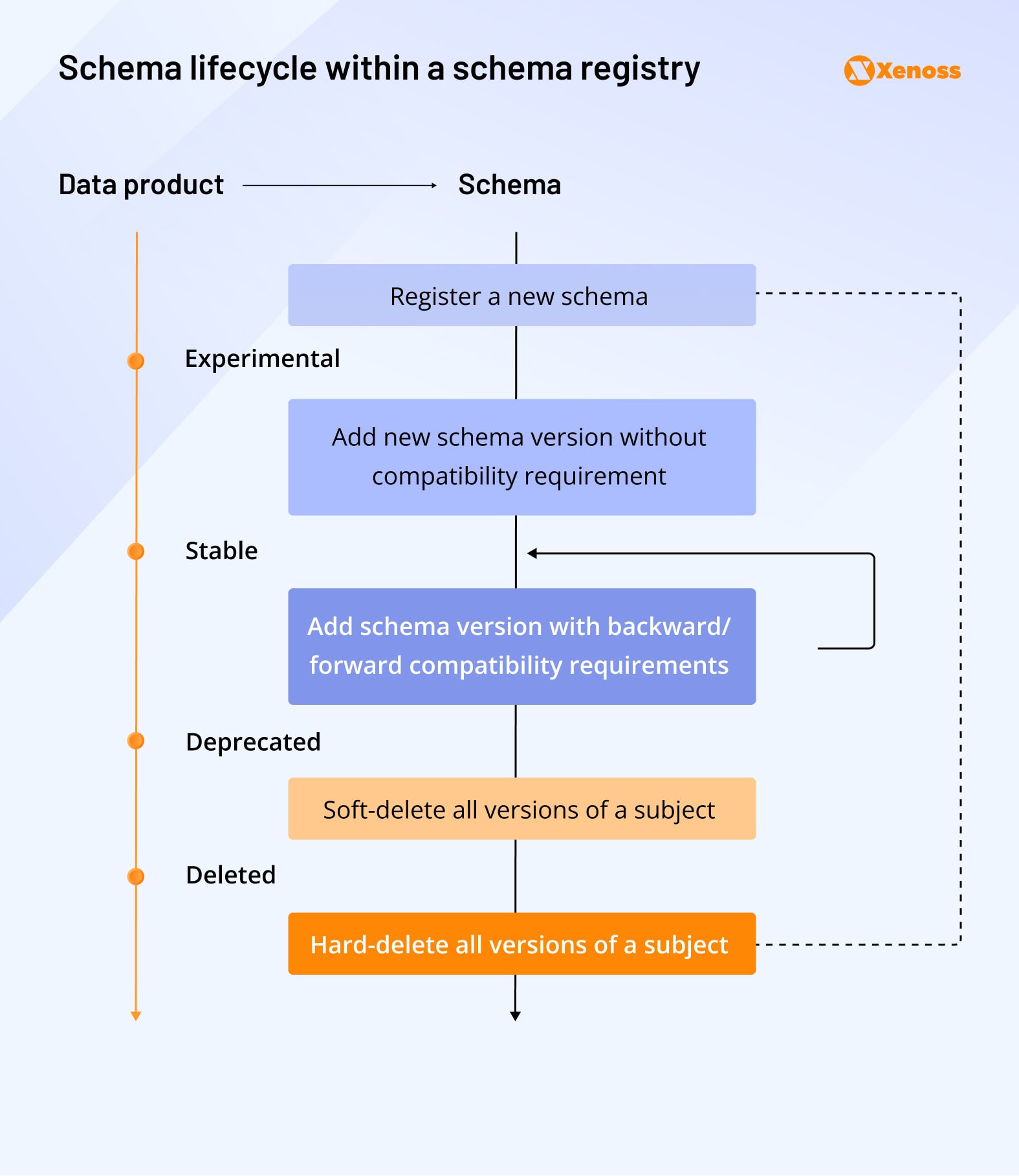

Enterprise pipelines can have hundreds of schemas per data stream. Teams needed a central system to store, version, and validate all these schema files. Schema registries emerged as the solution, providing not just storage but also compatibility checking and lifecycle management.

Understanding schema registries requires knowing three key concepts:

- Schema formats determine how you define data structure. JSON Schema is the most common, but Apache Avro, a compact binary format, handles schema evolution better, while Google’s Protocol Buffers (Protobuf) optimize for performance and cross-language compatibility.

- Compatibility modes control how schemas can evolve over time. BACKWARD compatibility lets new schemas read old data (the default approach). FORWARD compatibility allows old schemas to read new data, useful when producers update more frequently than consumers. FULL compatibility supports both directions, while NONE disables all compatibility checks.

- Subjects group all versions of a schema under a unique identifier. Think of subjects as schema families that track evolution over time.
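
Compatibility modes are easier to reason about as code. The following is a simplified sketch of Avro-style schema resolution, assuming a schema is a flat map of field names to types with optional defaults. It ignores nested types, type promotion, and the transitive variants of each mode, so it is not the algorithm any registry actually ships.

```python
from typing import Any

Schema = dict[str, dict[str, Any]]  # field name -> {"type": ..., optional "default": ...}


def can_read(reader: Schema, writer: Schema) -> bool:
    """Simplified Avro-style resolution: can `reader` decode data written with `writer`?"""
    for name, spec in reader.items():
        if name in writer:
            if writer[name]["type"] != spec["type"]:
                return False  # type changes count as breaking (no promotion rules here)
        elif "default" not in spec:
            return False      # reader expects a field the writer never wrote, with no fallback
    return True  # extra writer fields are simply ignored by the reader


def is_compatible(new: Schema, old: Schema, mode: str) -> bool:
    mode = mode.upper()
    if mode == "NONE":
        return True
    if mode == "BACKWARD":   # consumers on the new schema read old data
        return can_read(reader=new, writer=old)
    if mode == "FORWARD":    # consumers on the old schema read new data
        return can_read(reader=old, writer=new)
    if mode == "FULL":
        return can_read(new, old) and can_read(old, new)
    raise ValueError(f"unknown compatibility mode: {mode}")
```

With these rules, adding a field that has a default passes FULL, adding a field without a default fails BACKWARD (new readers cannot fill it in for old data), and dropping a field without a default fails FORWARD (old readers still expect it).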

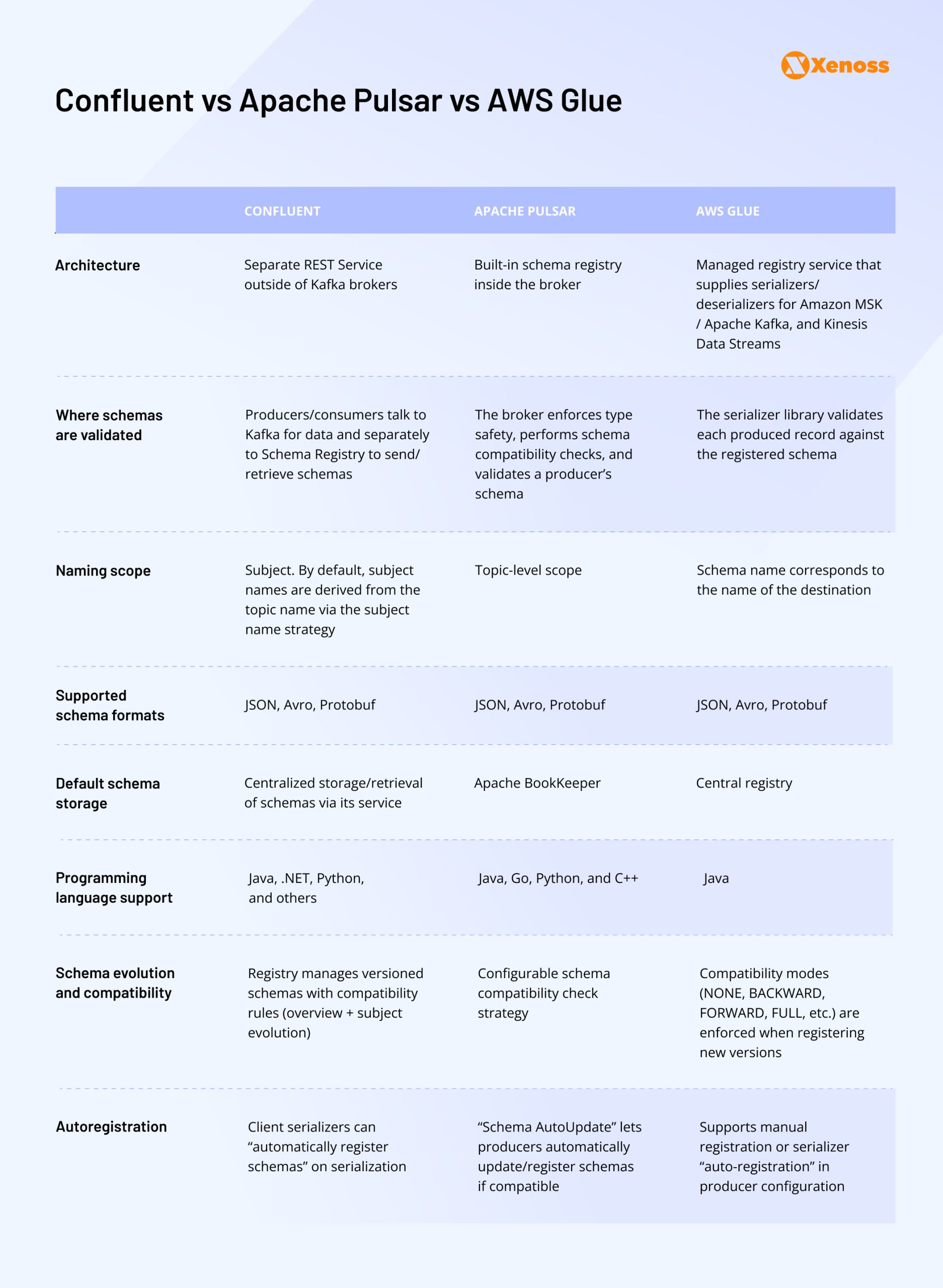

Among the schema registries on the market, Confluent Schema Registry (from Confluent, the company founded by the original creators of Apache Kafka), Apache Pulsar’s built-in registry, and AWS Glue Schema Registry are the leading options.

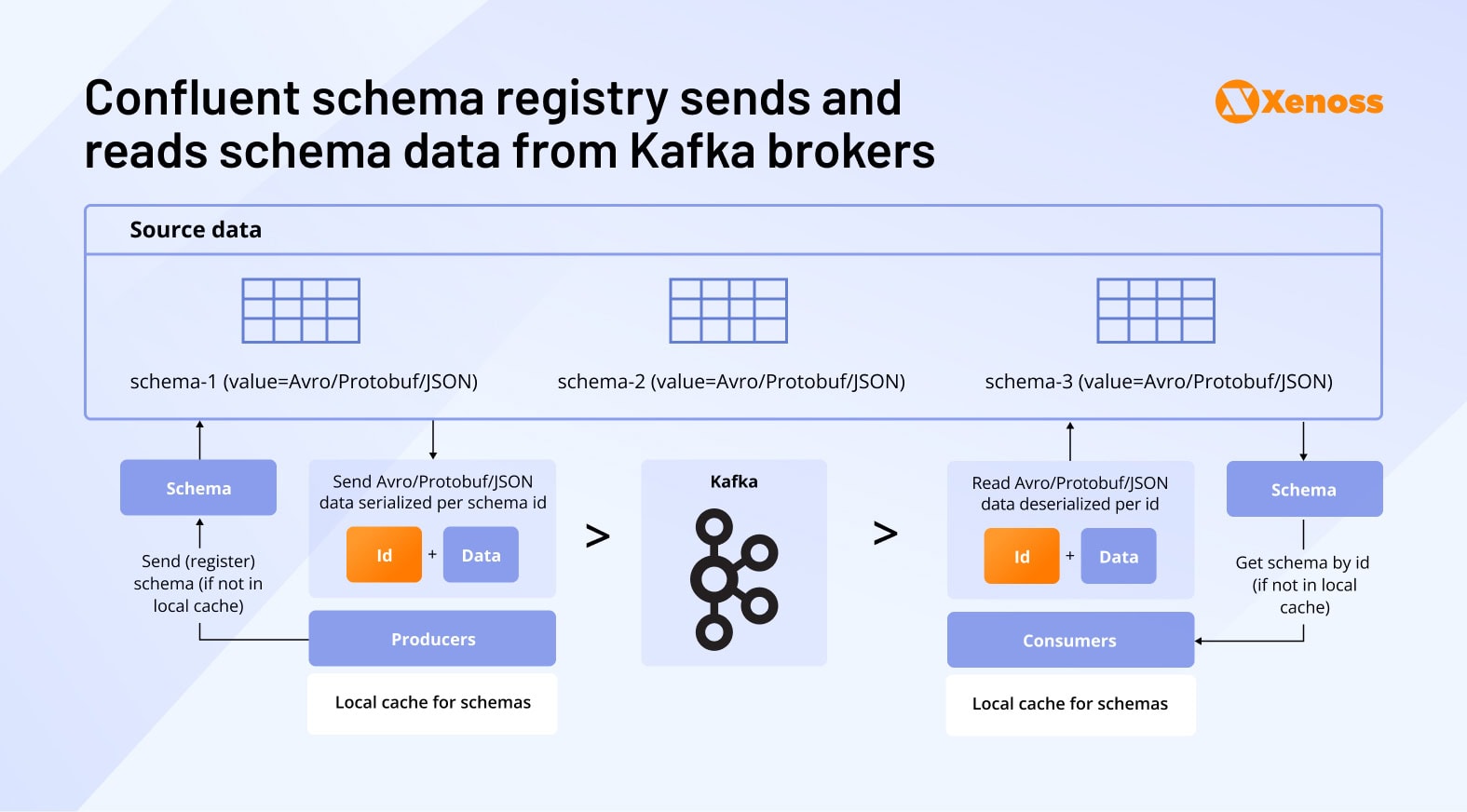

Confluent schema registry: REST-based validation

Confluent Schema Registry runs as a standalone REST service, separate from the Kafka brokers (it persists schemas in an internal Kafka topic). This separation gives you more control but adds architectural complexity. The registry supports JSON Schema, Avro, and Protobuf, along with all of the compatibility modes described above.
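
Because the registry is a plain REST service, any HTTP client can talk to it. This sketch builds requests for three Confluent Schema Registry endpoints (register a schema version, test compatibility against the latest version, and set a subject's compatibility level) using only the standard library. The registry URL assumes a local instance on the default port 8081, the helper names are hypothetical, and the subject `orders-value` follows the default `<topic>-value` naming.

```python
import json
import urllib.request

REGISTRY_URL = "http://localhost:8081"  # assumption: local Schema Registry on its default port
CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"


def _request(method: str, path: str, body: dict) -> urllib.request.Request:
    return urllib.request.Request(
        REGISTRY_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": CONTENT_TYPE},
        method=method,
    )


def register_schema_request(subject: str, schema: dict, schema_type: str = "AVRO"):
    # The registry expects the schema itself as a JSON-encoded *string*.
    return _request("POST", f"/subjects/{subject}/versions",
                    {"schema": json.dumps(schema), "schemaType": schema_type})


def check_compatibility_request(subject: str, schema: dict, schema_type: str = "AVRO"):
    return _request("POST", f"/compatibility/subjects/{subject}/versions/latest",
                    {"schema": json.dumps(schema), "schemaType": schema_type})


def set_compatibility_request(subject: str, level: str):
    return _request("PUT", f"/config/{subject}", {"compatibility": level})


def send(req: urllib.request.Request) -> dict:
    """Send a prepared request and decode the JSON response (needs a running registry)."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Against a running registry, `send(check_compatibility_request("orders-value", schema))` returns a body such as `{"is_compatible": true}`, which a CI job can use to fail a build before an incompatible schema reaches production.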

Producers and consumers connect to both Kafka for data and the schema registry for validation. Confluent’s serializers handle this dual connection automatically, checking schemas before processing messages.
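
The serializers keep the two connections loosely coupled through a small framing convention: each Kafka message starts with a magic byte (0) followed by the 4-byte, big-endian ID of the schema it was written with, so a consumer can fetch (and cache) that schema from the registry before decoding. The sketch below shows only that framing step, with the payload treated as opaque bytes; the function names are illustrative, and the Protobuf serializer additionally writes message-index bytes that are not modeled here.

```python
import struct

MAGIC_BYTE = 0


def frame(schema_id: int, payload: bytes) -> bytes:
    """Prefix a serialized payload with the 5-byte header: magic byte + big-endian schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + payload


def unframe(message: bytes) -> tuple[int, bytes]:
    """Split a framed message into (schema_id, payload), rejecting unknown framing."""
    if len(message) < 5:
        raise ValueError("message too short for schema-registry framing")
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unknown magic byte: {magic}")
    return schema_id, message[5:]
```

Since only the ID travels with each message, the full schema is transferred once per consumer and then served from cache.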

Programming language support includes Java, .NET, Python, and several other popular languages for enterprise development.

Apache Pulsar: Built-in schema management

Apache Pulsar takes a different approach by building schema management directly into the broker. Instead of running a separate service, schemas live inside Pulsar’s architecture and get enforced at the topic level.

Schema registration happens automatically when you create a producer. As soon as you initialize a Producer with a schema, Pulsar uploads and registers it without additional configuration. You can also manually register schemas through the REST API for more control.

The broker handles all validation, making the system simpler but less flexible. Pulsar enforces type safety and compatibility checks directly, so producers can’t send incompatible data to topics.
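
The toy model below captures the idea of broker-side enforcement: the first producer implicitly registers its schema, later producers with compatible schemas add new versions, and incompatible ones are rejected at connection time. `ToyTopic` and the `only_additions` rule are hypothetical stand-ins, not Pulsar's client API or its actual compatibility strategies.

```python
from typing import Callable

Schema = dict[str, str]  # field name -> type name


def only_additions(new: Schema, old: Schema) -> bool:
    """Toy compatibility rule: every existing field must survive with the same type."""
    return all(new.get(name) == ftype for name, ftype in old.items())


class ToyTopic:
    """Toy model of broker-side schema enforcement (not Pulsar's API)."""

    def __init__(self, name: str, is_compatible: Callable[[Schema, Schema], bool]):
        self.name = name
        self.is_compatible = is_compatible
        self.versions: list[Schema] = []   # registered schema versions, oldest first

    def connect_producer(self, schema: Schema) -> int:
        """Return the schema version the producer is bound to, or reject the producer."""
        if schema in self.versions:
            return self.versions.index(schema)      # already registered
        if self.versions and not self.is_compatible(schema, self.versions[-1]):
            raise PermissionError(f"incompatible schema rejected on topic '{self.name}'")
        self.versions.append(schema)                # first or compatible schema: auto-register
        return len(self.versions) - 1
```

Because the check happens when the producer connects, an incompatible producer never gets the chance to publish a single message.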

Language support includes Java, Go, Python, and C++, covering most enterprise development needs.

AWS Glue Schema registry: Serverless validation

AWS Glue Schema Registry is Amazon’s managed solution designed for serverless architectures. It integrates seamlessly with Kafka, Kinesis Data Streams, Lambda functions, and Amazon Managed Service for Apache Flink, making it ideal for AWS-native data pipelines.

The biggest advantage is cost: the registry itself comes at no additional charge. AWS absorbs the operational overhead, and you pay only for the underlying AWS services that use the registry.

Current limitations center on language support. While Glue supports all standard formats (JSON Schema, Avro, Protobuf), its serializer and deserializer libraries only work with Java applications right now. According to the AWS documentation, there will be more “data formats and languages to come”.

Advanced features include ZLIB compression for storage optimization and metadata-based schema sourcing that helps reduce storage costs at scale. Like Confluent, it uses serializers and deserializers to integrate with Kafka clients.

Comparing the three registries side by side (deployment model, supported formats, client languages, and cost) can help you decide which one fits your next data project best.

Data quality testing for contract validation

Creating data contracts is only half the battle. You also need to verify that these agreements actually hold in production. That’s where contract testing comes in: automated checks that validate whether your data meets the standards you’ve defined.

The testing approach has fundamentally shifted in recent years. Traditional pipelines relied on post-processing validation, catching problems after data had already moved through systems. Modern teams implement in-process checks with CI/CD automation, stopping bad data before it causes downstream issues.

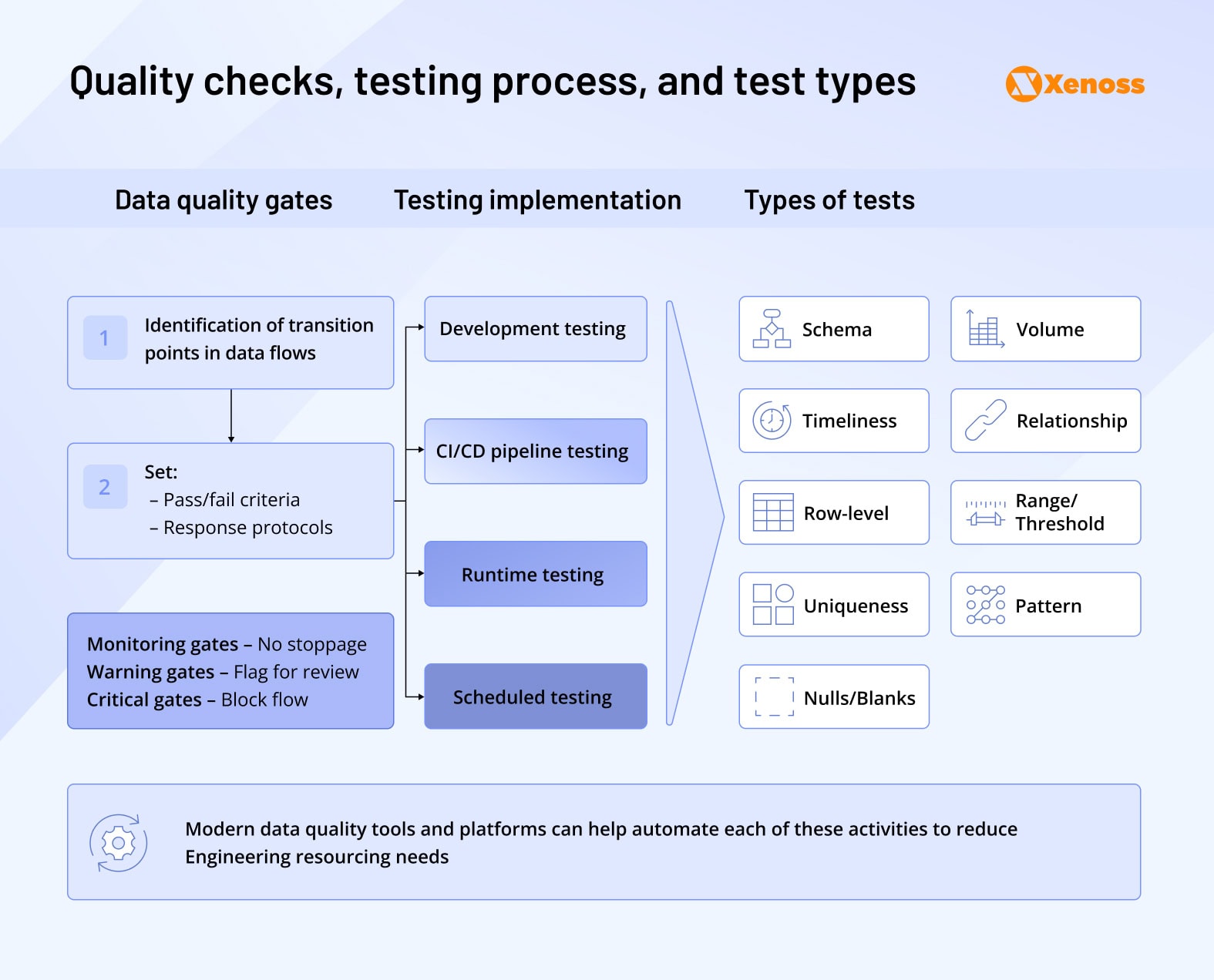

Dylan Anderson, founder of The Data Ecosystem newsletter, breaks effective contract testing into three components: quality gates that stop bad data, testing stages that cover the entire lifecycle, and specific test types that validate different data characteristics.

1. Building data quality gates

Quality gates act as checkpoints throughout your data pipeline. Think of them as automated bouncers that examine data and decide whether it can proceed to the next stage.

Not every gate has the same enforcement level. Critical gates stop the pipeline entirely when data fails: records with missing required fields or wrong data types can’t proceed. Warning gates flag potential issues but allow data to continue flowing. Monitoring gates simply track metrics without blocking anything.

This three-tier approach gives you comprehensive coverage:

- Critical gates prevent pipeline failures

- Warning gates catch future problems early

- Monitoring gates provide observability and performance insights
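
A minimal sketch of the three tiers, assuming each gate is a named predicate over a batch of records. `Gate`, `Level`, `run_gates`, `PipelineHalted`, and the example thresholds are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Level(Enum):
    CRITICAL = "critical"   # failure stops the pipeline
    WARNING = "warning"     # failure is reported, data keeps flowing
    MONITOR = "monitor"     # result is only recorded as a metric


class PipelineHalted(Exception):
    """Raised when a critical gate fails."""


@dataclass
class Gate:
    name: str
    level: Level
    check: Callable[[list[dict]], bool]  # True when the batch passes


def run_gates(batch: list[dict], gates: list[Gate]) -> dict[str, list[str]]:
    report: dict[str, list[str]] = {"warnings": [], "metrics": []}
    for gate in gates:
        passed = gate.check(batch)
        if gate.level is Level.CRITICAL and not passed:
            raise PipelineHalted(f"critical gate '{gate.name}' failed")
        if gate.level is Level.WARNING and not passed:
            report["warnings"].append(gate.name)
        if gate.level is Level.MONITOR:
            report["metrics"].append(f"{gate.name}={'pass' if passed else 'fail'}")
    return report


# Hypothetical gates for an orders feed.
GATES = [
    Gate("order_id_present", Level.CRITICAL, lambda b: all(r.get("order_id") for r in b)),
    Gate("amount_below_10k", Level.WARNING, lambda b: all(r.get("amount", 0) < 10_000 for r in b)),
    Gate("batch_not_empty", Level.MONITOR, lambda b: len(b) > 0),
]
```

Only the critical tier can stop the run; warnings and metrics end up in a report that can feed alerts and dashboards.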

Implementing quality gates follows a straightforward process:

Identify critical checkpoints where validation adds the most value, typically at data ingestion points, before expensive transformations, and prior to serving data to end users.

Define pass/fail criteria that align with your data contract requirements: null checks, data type validation, range constraints, and business rule compliance.

Configure failure handling to determine what happens when tests fail: block pipeline execution, route records to error queues, trigger alerts, or allow data through with warnings.

Build an automated testing infrastructure using data quality platforms like Great Expectations, dbt tests, or custom validation frameworks that integrate with your existing pipeline orchestration.
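
Failure handling is where a gate's verdict turns into an action. The following sketch, assuming record-level rules written as Python predicates, sends failing records to an error queue together with the names of the rules they broke, then picks one of the responses listed above based on an error-rate threshold. The rule names, `route`, and the 5% default threshold are hypothetical; tools such as Great Expectations or dbt tests provide their own equivalents.

```python
from typing import Callable

# (rule name, predicate returning True when the record passes): hypothetical rules
Rule = tuple[str, Callable[[dict], bool]]

RULES: list[Rule] = [
    ("order_id_not_null", lambda r: r.get("order_id") is not None),
    ("amount_is_number", lambda r: isinstance(r.get("amount"), (int, float))),
    ("amount_non_negative", lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
]


def route(records: list[dict], rules: list[Rule], max_error_rate: float = 0.05):
    """Split records into accepted rows and an error queue, then choose a response."""
    accepted, error_queue = [], []
    for record in records:
        failed = [name for name, passes in rules if not passes(record)]
        if failed:
            # Keep the failing record and the reasons so it can be inspected or replayed.
            error_queue.append({"record": record, "failed_rules": failed})
        else:
            accepted.append(record)
    error_rate = len(error_queue) / len(records) if records else 0.0
    if error_rate > max_error_rate:
        action = "block"   # too many failures: stop the pipeline and alert
    elif error_queue:
        action = "warn"    # a few bad rows: continue, but flag them
    else:
        action = "pass"
    return accepted, error_queue, action
```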

2. Implementing data checks

As Anderson points out in his blog, data testing is different from typical software testing because it deals with the “inherent variability, volume, and evolving nature of data itself”.

A robust data contract should call for testing at every stage of the data lifecycle:

- Development tests run on sample datasets to surface anomalies that are likely to appear in production data as well. Catching issues before data enters production is a major cost saver; by some estimates, it can cut infrastructure expenses by up to 10 times.

- CI/CD pipeline tests validate the contract itself: they monitor schema evolution, ensure compatibility, and verify that transformations are accurate. They make sure that, once high-quality data enters the pipeline, it is not distorted or misinterpreted along the way.

- Runtime tests confirm data quality at production scale and are supported by Modern Data Stack tools such as dbt, Spark, or Flink. Although development tests aim to catch critical quality errors, some issues only surface once the pipeline is exposed to high volumes of production data. That’s why runtime tests keep validating data while the pipeline runs in production.

- Scheduled tests monitor the quality of data already stored in a company’s warehouse. Over time, even high-quality data drifts (in other words, its statistical properties change), and the models it helps train no longer produce accurate outputs. Scheduling tests helps detect drift so that only reliable data stays in the pipeline.
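
A scheduled drift check usually compares the current distribution of a column against a baseline. One common metric for this, swapped in here as an example rather than something the article prescribes, is the Population Stability Index (PSI): for each bin, (actual share - expected share) × ln(actual share / expected share), summed over all bins. The bin edges are an assumption you would choose per column, and `psi` is a hypothetical helper name.

```python
import math
from bisect import bisect_right


def psi(baseline: list[float], current: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `baseline`, using fixed bin edges."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    def shares(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)          # interior bins plus the two open-ended ones
        for v in values:
            counts[bisect_right(edges, v)] += 1  # index of the bin that v falls into
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

Identical distributions score 0; a frequently quoted rule of thumb treats values above roughly 0.25 as significant drift worth alerting on.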

3. Choosing data quality test types and validation methods

Data contracts require testing across multiple dimensions to catch different types of quality issues. Schema validation alone isn’t enough—you need comprehensive checks that cover data relationships, business rules, and statistical patterns that emerge from your datasets.

Six core data quality dimensions are widely recognized, and every contract should address them:

- Timeliness tests ensure data freshness meets business requirements. Stale data can make real-time applications useless or cause models to make decisions based on outdated information.

- Uniqueness validation prevents duplicate records from corrupting analysis. Primary keys, user IDs, and other identifier fields must be unique, or downstream systems will double-count metrics and produce incorrect results.

- Accuracy tests verify that data correctly represents real-world entities. Customer addresses should match actual locations, revenue figures should align with financial systems, and measurement data should fall within expected ranges.

- Completeness checks catch missing fields and null values before they break pipelines. This often becomes a critical quality gate since missing required data typically causes immediate system failures.

- Consistency validation ensures all data follows the same schema and formatting rules. Mixed date formats, inconsistent naming conventions, or schema drift can break downstream processing logic.

- Validity tests confirm that data values match their expected formats and constraints. Date fields should contain valid dates, email addresses should follow proper syntax, and numeric fields should stay within acceptable ranges.

Effective testing combines multiple dimensions rather than checking each in isolation. A comprehensive approach might validate that customer records are complete (all required fields present), unique (no duplicate IDs), timely (recently updated), and accurate (addresses match postal databases).
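
Here is a sketch of that combined check for a batch of customer records, assuming each row is a dict with `customer_id`, `email`, `postal_code`, and a timezone-aware `updated_at`. The postal-code set stands in for a real postal database, and the field names, one-day freshness window, and loose email regex are all hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical contract rules for a customer table.
REQUIRED = ("customer_id", "email", "postal_code", "updated_at")
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose syntax check


def check_customers(
    rows: list[dict],
    known_postal_codes: set[str],           # stand-in for a postal reference database
    now: Optional[datetime] = None,
    max_age: timedelta = timedelta(days=1),
) -> dict[str, list]:
    """Return failing customer IDs per dimension; an empty dict means every check passed."""
    now = now or datetime.now(timezone.utc)
    failures: dict[str, list] = {}
    seen: set = set()

    def flag(dimension: str, customer_id) -> None:
        failures.setdefault(dimension, []).append(customer_id)

    for row in rows:
        cid = row.get("customer_id")
        # Completeness: all required fields present and non-empty.
        if any(row.get(f) in (None, "") for f in REQUIRED):
            flag("completeness", cid)
            continue  # the remaining checks rely on these fields
        # Uniqueness: each customer ID appears once.
        if cid in seen:
            flag("uniqueness", cid)
        seen.add(cid)
        # Timeliness: updated within the freshness window.
        if now - row["updated_at"] > max_age:
            flag("timeliness", cid)
        # Validity: email follows basic syntax.
        if not EMAIL_RE.match(row["email"]):
            flag("validity", cid)
        # Accuracy: postal code exists in the reference data.
        if row["postal_code"] not in known_postal_codes:
            flag("accuracy", cid)
    return failures
```

Reporting failures per dimension, rather than a single pass/fail flag, tells the producer which part of the contract a record broke.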

The more test scenarios you consider, the better you’ll catch edge cases before they impact production systems. Proactive quality testing prevents costly pipeline failures and maintains trust in data-driven decision making across your organization.

How data contracts help detect and address breaking changes

Besides keeping data producers and consumers on the same page, setting standards for incoming data, and enforcing ongoing monitoring, data contracts are a helpful tool for change management.

Upstream changes can trigger expensive ripple effects throughout your data ecosystem:

- Model degradation when training data changes unexpectedly

- Service outages as applications fail to process modified data structures

- Pipeline restart costs that multiply when multiple systems break simultaneously

Without a data contract, engineers have to work around breaking changes, adding extra layers on top of an already complex pipeline. With one in place, disruptions can be detected before they reach consumers, and consumers are alerted to the potential risks.

Building breaking change detection systems

Effective change detection requires comprehensive database event capture. Your system needs to monitor table creation, column modifications, data deletion, schema updates, and permission changes across all data sources.

Event classification separates breaking changes from routine updates. Automated analysis categorizes each database event based on its potential impact on downstream systems and existing data contracts.
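
As an illustration of event classification, the sketch below diffs two versions of a table's column definitions and labels each change from a consumer's point of view. The rules are an assumption, not a standard: dropped columns, type changes, and columns that become nullable are treated as breaking, while new columns are not. Permission changes and the other event types mentioned earlier are left out, and `classify` and `Impact` are hypothetical names.

```python
from enum import Enum


class Impact(Enum):
    BREAKING = "breaking"
    NON_BREAKING = "non_breaking"


# column name -> {"type": SQL type name, "nullable": bool}
Columns = dict[str, dict]


def classify(old: Columns, new: Columns) -> list[tuple[str, str, Impact]]:
    """Diff two table definitions into (event, column, impact) tuples."""
    events = []
    for col in sorted(old.keys() - new.keys()):
        # Consumers selecting this column will fail (a rename also shows up as drop + add).
        events.append(("column_dropped", col, Impact.BREAKING))
    for col in sorted(new.keys() - old.keys()):
        events.append(("column_added", col, Impact.NON_BREAKING))
    for col in sorted(old.keys() & new.keys()):
        if old[col]["type"] != new[col]["type"]:
            events.append(("type_changed", col, Impact.BREAKING))
        elif new[col]["nullable"] and not old[col]["nullable"]:
            # Consumers may rely on the old NOT NULL guarantee.
            events.append(("became_nullable", col, Impact.BREAKING))
    return events
```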

When breaking changes occur, contracts transition from VALID to BROKEN status. The system logs the breaking event, notifies affected consumers, and triggers validation workflows to restore stability.
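
The status transition can be modeled as a tiny state machine. In this sketch, `ContractMonitor`, its `notify` hook, and `revalidate` are hypothetical names: each breaking event is logged, flips the contract from VALID to BROKEN, and notifies every consumer, while a later validation run moves it back to VALID once it passes.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class ContractMonitor:
    contract: str
    consumers: list[str]
    notify: Callable[[str, str], None]      # hypothetical hook: (consumer, message) -> None
    status: str = "VALID"
    breaking_log: list[dict] = field(default_factory=list)

    def on_event(self, description: str, breaking: bool) -> None:
        if not breaking:
            return  # routine changes leave the contract untouched
        self.breaking_log.append(
            {"contract": self.contract, "event": description, "at": datetime.now(timezone.utc)}
        )
        self.status = "BROKEN"
        for consumer in self.consumers:
            self.notify(consumer, f"[{self.contract}] breaking change: {description}")

    def revalidate(self, passes: bool) -> str:
        """Record the outcome of a validation run triggered after the break."""
        if self.status == "BROKEN" and passes:
            self.status = "VALID"
        return self.status
```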

Custom vs. open-source breaking change detection tools

Enterprise teams often build custom detection systems for maximum integration. Custom solutions integrate seamlessly with existing data infrastructure and provide complete control over detection logic and response workflows.

Open-source tools offer faster implementation for smaller projects or proof-of-concept work. DataContract provides a Python library, CLI, and CI/CD integration options for automated change detection. Proto Break offers Google’s approach to schema evolution monitoring with built-in alerting capabilities.

The choice depends on your infrastructure complexity and integration requirements. Custom systems provide maximum flexibility but require significant development investment. Open-source tools accelerate deployment but may require adaptation for enterprise-specific workflows.

Setting up a breaking change notification system

Detection without notification creates a false sense of security. Your system might catch breaking changes perfectly, but if the right people don’t know about them quickly, downstream systems still fail, and consumers lose trust in your data.

Effective notification systems balance immediate alerts with comprehensive reporting. Teams need instant awareness of critical issues plus detailed context for making informed decisions about how to respond.

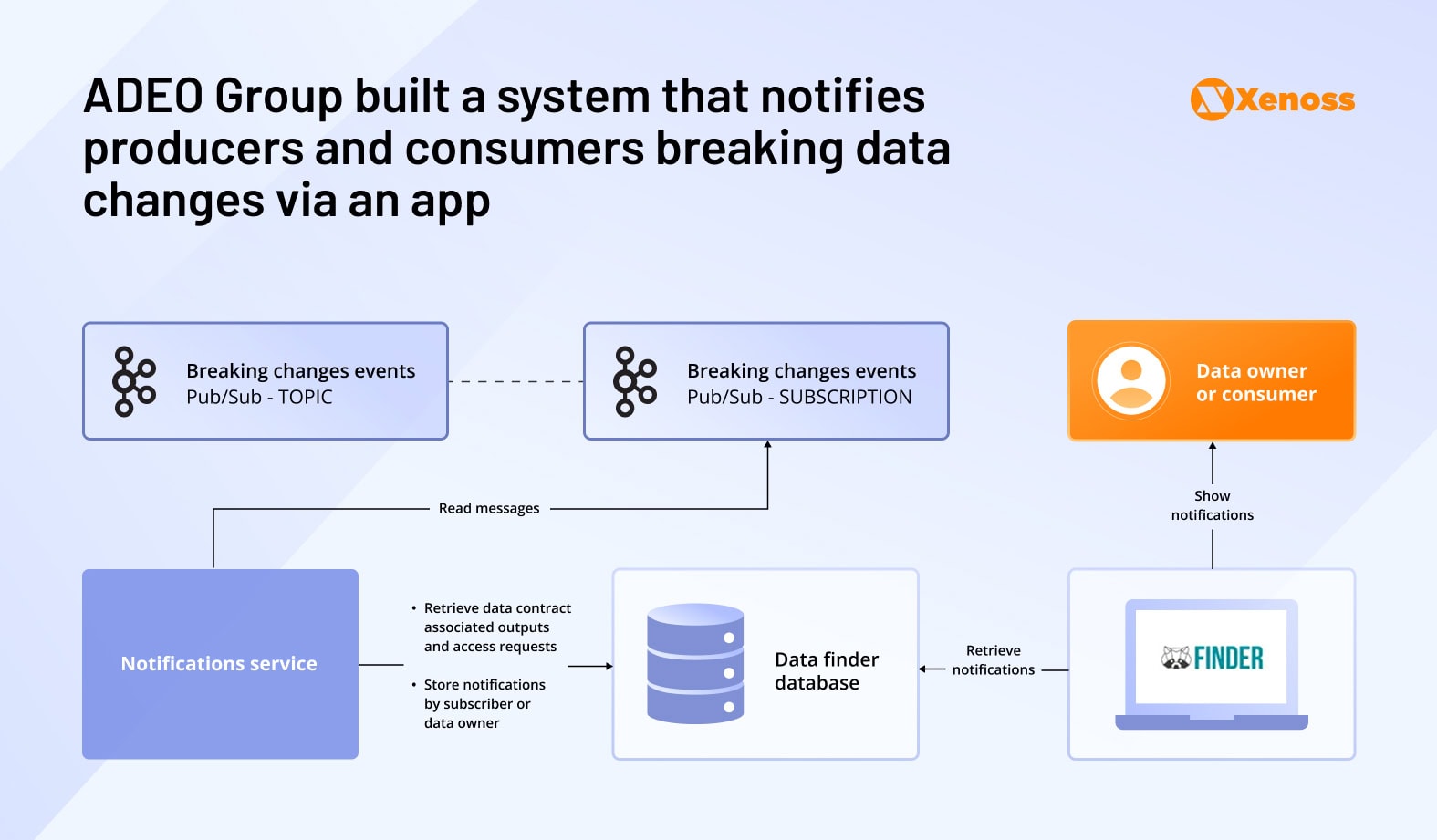

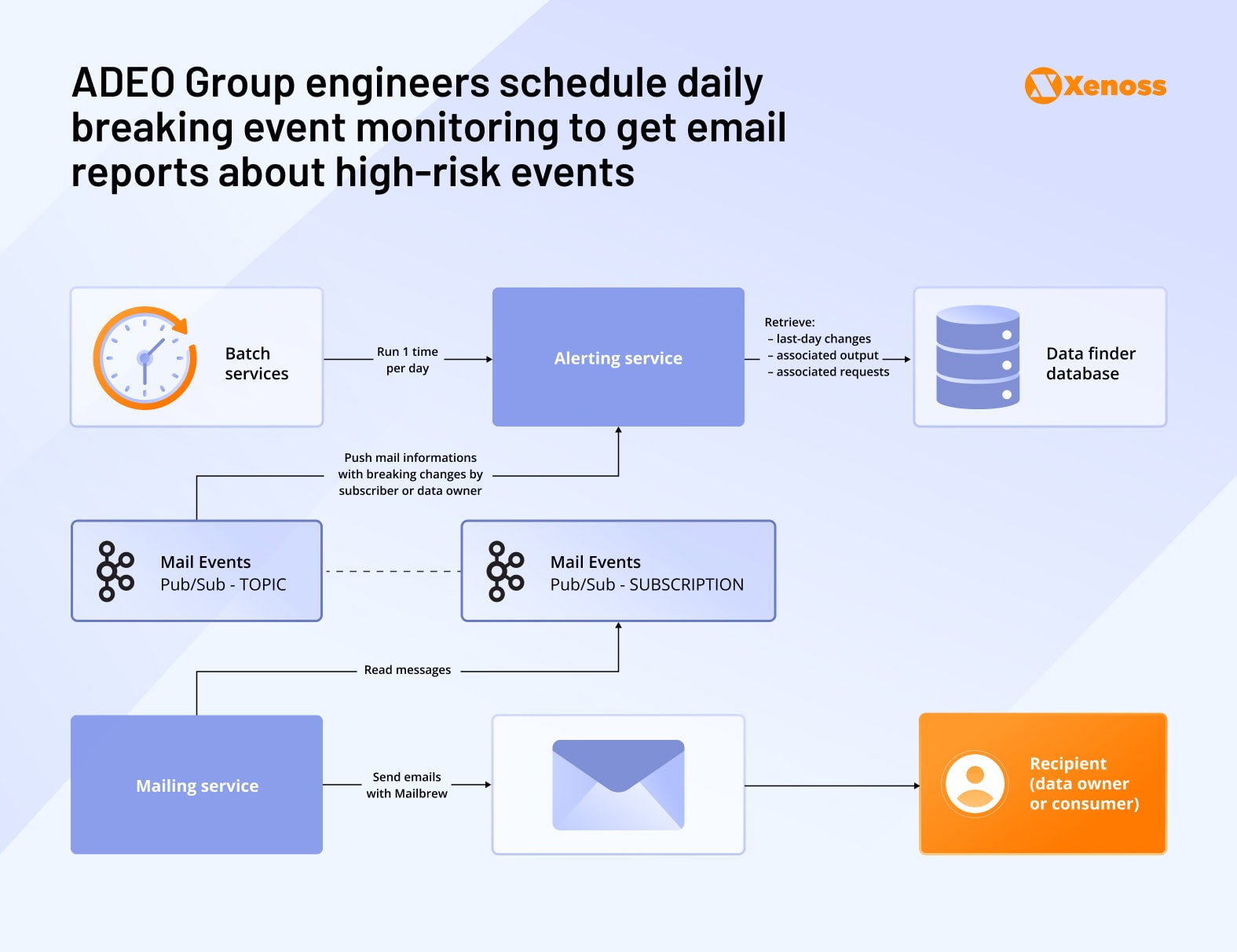

ADEO Groupe (formerly Leroy Merlin) implemented a two-tier notification approach that addresses both needs:

Real-time alerts provide immediate awareness of breaking changes. Their notification service monitors breaking event logs continuously and sends brief in-app alerts to both data owners and consumers as soon as issues are detected. These alerts include essential information: what changed, which systems are affected, and the severity level.

Daily digest reports offer comprehensive change summaries. A scheduled job collects all breaking changes from the previous 24 hours and compiles them into detailed email reports. These reports provide complete context: technical details, affected downstream systems, recommended actions, and historical trends.
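
Both tiers can be sketched in a few lines, assuming breaking events are logged as dicts with `dataset`, `change`, `severity`, `affected`, and `at` fields. This is not ADEO's implementation: the event shape and function names are hypothetical, and delivery (in-app messages, email) is left out. `realtime_alert` formats the brief alert, and `daily_digest` groups the previous 24 hours by dataset.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def realtime_alert(event: dict) -> str:
    """Brief in-app alert: severity, what changed, and which systems are affected."""
    return (f"[{event['severity'].upper()}] {event['change']} on {event['dataset']} "
            f"affects {', '.join(event['affected'])}")


def daily_digest(events: list[dict], now: datetime) -> str:
    """Group the last 24 hours of breaking events by dataset into a plain-text report."""
    since = now - timedelta(hours=24)
    by_dataset: dict[str, list[dict]] = defaultdict(list)
    for e in events:
        if e["at"] >= since:
            by_dataset[e["dataset"]].append(e)
    lines = [f"Breaking changes {since:%Y-%m-%d %H:%M} to {now:%Y-%m-%d %H:%M}"]
    for dataset in sorted(by_dataset):
        lines.append(f"{dataset}: {len(by_dataset[dataset])} change(s)")
        for e in by_dataset[dataset]:
            lines.append(f"  - {e['change']} -> {', '.join(e['affected'])}")
    return "\n".join(lines)
```

Keeping both outputs derived from the same event log means the alert and the digest never disagree about what happened.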

Key takeaways for enterprise data contract success

The producer-consumer alignment problem has plagued data engineering since distributed systems became the norm. In enterprise environments, this challenge becomes critical; a single upstream change can cascade through dozens of downstream systems, breaking dashboards, corrupting analytics, and degrading user experiences.

Software engineering solved similar challenges through API standardization. When application integrations were unreliable and poorly documented, the industry developed OpenAPI specifications, versioning standards, and contract-based development that transformed how systems communicate.

Data contracts apply these same principles to data systems. Instead of hoping upstream teams won’t break your pipelines, you establish formal agreements that define expectations, validate quality, and manage changes systematically.

Successful enterprise data contract enforcement rests on four foundational components:

- Schema registry implementation

- Comprehensive quality testing at critical pipeline junctions

- Automated breaking change detection

- Notifications that alert stakeholders immediately when contracts are violated

Organizations that implement these practices can see immediate operational benefits. Data teams spend less time firefighting pipeline failures. Product teams gain confidence in data-driven features. Business stakeholders can trust analytics for strategic decision-making.

Most importantly, data contracts transform reactive data management into proactive data governance. Instead of discovering problems when dashboards break or models fail, teams prevent issues through systematic validation and change management.

The investment in contract enforcement pays dividends across the entire data ecosystem—more reliable pipelines, higher quality analytics, and organizational confidence in data as a strategic asset.