Most CTOs face the same strategic challenge: legacy systems that power critical operations but struggle to support modern AI capabilities. It’s a classic catch-22: you need AI to stay competitive, but implementing it risks disrupting the very systems that keep your business running.

Recent SnapLogic research puts numbers to this dilemma: 64% of companies rely on legacy platforms for over 25% of their operations, and 75% of these systems can’t effectively integrate with AI tools. For technology leaders, the question isn’t whether to adopt enterprise AI, but how to do it without breaking what already works.

Think of your organization as a tree. The legacy stack forms the roots: deep, established, and critical for stability. These systems anchor operations, hold historical knowledge, and support everything above. AI isn’t here to replace the roots; it’s a new branch. One that grows outward, extends reach, and responds to the current environment. With the right architecture, enterprise AI can thrive without disturbing the foundation and, over time, become a vital part of the tree’s evolution.

This guide draws on real-world enterprise implementations to provide practical strategies for integrating AI into legacy systems, avoiding common pitfalls, and establishing a foundation for long-term digital transformation, all while maintaining stable operations.

Embracing your legacy: Examine your current stack and lay the groundwork for AI

Below are four not-so-obvious steps to take before considering the integration of enterprise artificial intelligence:

Step 1. Audit legacy software

Define which solutions are here to stay and which can be iteratively updated, migrated, or modernized. At this stage, you should also determine which systems will benefit from integrating enterprise AI solutions and which should remain unchanged. For example, your customer-facing data analytics solution can benefit from AI-powered predictions or natural language querying, but a rigid payroll processing system may remain as-is.

Step 2. Assess the total cost of ownership (TCO) of your legacy stack

According to IDC surveys, 49% of organizations cite legacy system maintenance as the main reason for overspending their digital infrastructure budgets. To prevent further overspending, define the cost and value of each legacy system. Then you’ll be able to compare those findings against the projected TCO of AI integration. Where can AI reduce operational overhead or open new value streams? Validate that investing in the enterprise AI strategy can deliver more long-term value than maintaining the status quo.
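Here is a minimal sketch of that comparison. It assumes you can estimate one-time costs, yearly running costs, and yearly value for each option. Every figure below is a hypothetical placeholder, not a benchmark, so replace each one with numbers from your own audit.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Hypothetical cost model for one option; all amounts in the same currency."""
    upfront: float       # one-time costs: licenses, migration, integration work
    annual_run: float    # yearly maintenance, hosting, and support
    annual_value: float  # yearly value delivered: savings plus new revenue


def net_value(profile: CostProfile, years: int) -> float:
    """Total value minus total cost of ownership (TCO) over the given horizon."""
    tco = profile.upfront + profile.annual_run * years
    return profile.annual_value * years - tco


# Placeholder numbers for illustration only.
status_quo = CostProfile(upfront=0, annual_run=400_000, annual_value=500_000)
with_ai = CostProfile(upfront=300_000, annual_run=350_000, annual_value=650_000)

for years in (1, 3, 5):
    delta = net_value(with_ai, years) - net_value(status_quo, years)
    print(f"{years}-year advantage of AI integration: {delta:,.0f}")
```

With these inputs, the AI option loses money in year one and pulls ahead by year three. The evaluation horizon you choose therefore matters as much as the inputs.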

Step 3. Specify business success metrics for AI adoption

Defining business success criteria early ensures you’re clear on why you’re investing and what outcomes matter. Is “improved customer satisfaction” your ultimate goal? Or maybe “saved working hours”? “Faster delivery with fewer resources”? Your business needs and pain points should be decisive factors when integrating AI.

Step 4. Evaluate AI tools

Either with your in-house team or external AI consultants, define the AI tools and services suitable for integration. Analyze their potential gains and limitations for your legacy stack. For instance, if you’re considering OpenAI’s GPT-4 for summarizing legacy documentation, you’ll want to evaluate the model’s performance and quality using metrics such as precision and recall.
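For summaries, precision and recall are often measured as word overlap with a human-written reference, which is the idea behind ROUGE. The sketch below is a simplified ROUGE-1 (single words, whitespace tokenization, no stemming). It does not replace an established evaluation package, and the sample sentences are invented.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict[str, float]:
    """Unigram-overlap precision, recall, and F1 (a simplified ROUGE-1)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped matches: min count per word
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


reference = "the batch job recalculates interest nightly for all savings accounts"
candidate = "the job recalculates interest nightly"
print(rouge1(candidate, reference))
```

Every word in the generated summary appears in the reference (precision 1.0), but the summary covers only half of the reference (recall 0.5). That pattern typically points to a summary that is accurate but incomplete.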

Where does AI fit in legacy systems?

Your legacy software isn’t too outdated for AI integration, despite what many technology vendors might suggest. The key is understanding where AI adds real value rather than trying to transform everything at once.

Implementation of AI agents for automated workflows

Choosing task-specific AI solutions is gaining ground because they deliver faster returns with less risk than massive AI overhauls. While comprehensive AI ecosystems sound impressive, they typically require significant upfront investment and can take years to show tangible results.

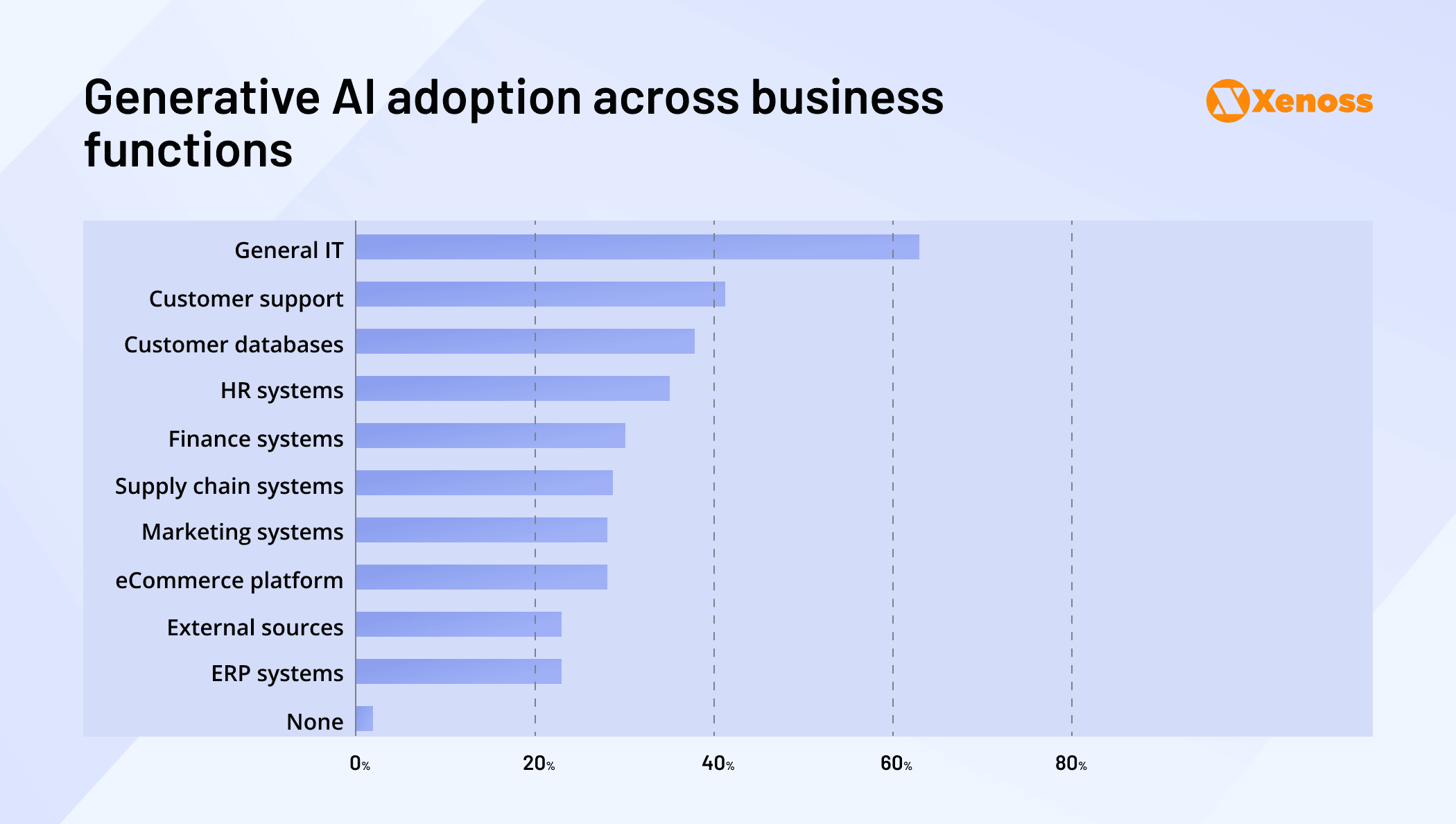

Gartner predicts that by 2027, enterprises will implement function-specific AI models and agents three times more often than general-purpose LLMs. Most current AI implementations focus on targeted, internal use cases such as general IT, customer support, and customer databases.

The SnapLogic report data below shows exactly where business leaders are seeing the most success.

Today’s AI agents are particularly effective at solving two persistent legacy system challenges. First, they can bridge the data gaps that have frustrated IT teams for years, creating intelligent connectors that:

- Automatically translate between different database formats

- Sync information across disconnected systems

- Eliminate the manual data reconciliation work that eats up valuable resources
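To make the first point concrete, here is a minimal sketch of the translation and reconciliation logic such a connector performs. The record layout, field names, and tolerance are hypothetical. In an AI-assisted setup, an agent might propose the field mapping from sample records, but the mapping is hard-coded here so the behavior is easy to check.

```python
# Hypothetical fixed-width layout of a legacy customer record: (field, start, end).
LEGACY_LAYOUT = [("customer_id", 0, 8), ("name", 8, 28), ("balance_cents", 28, 38)]


def parse_legacy_record(line: str) -> dict:
    """Translate one fixed-width legacy line into a normalized dict."""
    record = {field: line[start:end].strip() for field, start, end in LEGACY_LAYOUT}
    record["balance"] = int(record.pop("balance_cents")) / 100  # cents -> currency units
    return record


def reconcile(legacy: list[dict], crm: dict[str, dict]) -> list[str]:
    """Compare legacy records with CRM records keyed by customer_id and list discrepancies."""
    issues = []
    for rec in legacy:
        other = crm.get(rec["customer_id"])
        if other is None:
            issues.append(f"{rec['customer_id']}: missing in CRM")
        elif abs(other["balance"] - rec["balance"]) > 0.005:
            issues.append(f"{rec['customer_id']}: balance {rec['balance']} vs {other['balance']} in CRM")
    return issues


lines = [
    f"{'C0000001':<8}{'Acme Ltd':<20}{125050:010d}",
    f"{'C0000002':<8}{'Globex':<20}{990000:010d}",
]
legacy = [parse_legacy_record(line) for line in lines]
crm = {"C0000001": {"balance": 1250.50}}
print(reconcile(legacy, crm))
```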

Second, AI agents are transforming how organizations handle customer support and system troubleshooting. Instead of relying on human staff for every issue, advanced routing agents can:

- Intelligently categorize incoming requests

- Resolve common problems instantly

- Escalate complex situations to the right specialists
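The categorize, resolve, or escalate flow can be sketched as follows. Keyword matching stands in here for the categorization model, and the category names, keywords, and queue names are hypothetical.

```python
import re

# Hypothetical categories and trigger words. A production routing agent would
# typically use an LLM or a trained classifier rather than keyword matching.
ROUTES = {
    "password_reset": {"password", "locked", "login"},
    "billing": {"invoice", "refund", "charge"},
}
SELF_SERVICE = {"password_reset"}  # categories the agent may resolve without a human


def route(ticket: str) -> tuple[str, str]:
    """Return (category, action) for an incoming request."""
    words = set(re.findall(r"[a-z]+", ticket.lower()))
    for category, keywords in ROUTES.items():
        if words & keywords:
            if category in SELF_SERVICE:
                return category, "auto_resolve"
            return category, f"assign:{category}_team"
    return "unknown", "escalate:tier2_specialist"


print(route("I'm locked out after a password change"))
print(route("Please check the duplicate charge on my card"))
print(route("Nightly export job crashes with error 0x80"))
```

Anything the agent cannot categorize goes to a human specialist by default. That fallback is what keeps this kind of automation safe to introduce gradually.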

These systems work around the clock and integrate smoothly with existing helpdesk and CRM platforms, often without requiring any changes to the underlying infrastructure.

You can start small, prove value, and expand from there: exactly the kind of measured approach that makes sense when you’re working with mission-critical legacy systems.

Migrating to an improved frontend

When your legacy applications need a user experience refresh but you’re not ready for a complete architectural overhaul, AI-powered frontend migration offers a smart middle path. Modern AI development tools like Cursor, Windsurf, and Claude Code can automate much of the heavy lifting, though you’ll want to set them up for success with clear style guides and well-organized code repositories.

AI agents can handle UI updates, test migrations, and API integration patterns, with reported time savings of up to 90% compared to traditional manual development. They’re particularly effective at converting legacy JavaScript to modern frameworks or updating deprecated styling patterns while preserving core functionality.

How to ensure successful frontend code migration:

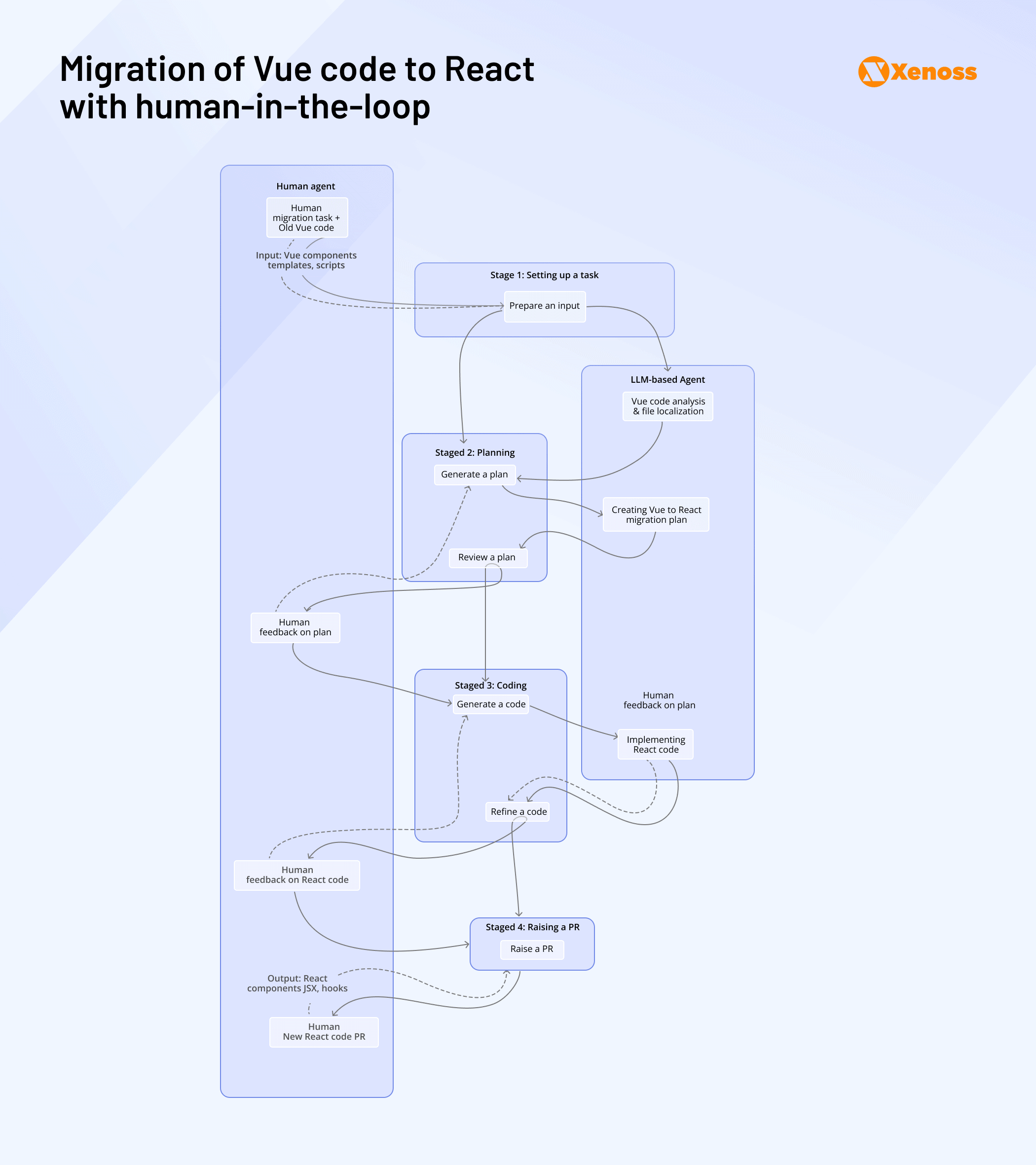

- Leverage a human-in-the-loop approach at each stage to maintain proper code quality and eliminate costly workarounds in the production environment.

- Opt for iterative migration by assigning AI agents one well-scoped task at a time. Where tasks are independent, use parallelization through either sectioning (breaking a task into independent subtasks that run in parallel) or voting (running the same task several times and comparing the outcomes).

- Use Model Context Protocol (MCP) servers, such as Figma’s Dev Mode MCP server, to help agents perform accurate UI component conversions.
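The voting pattern can be sketched in a few lines. The sketch assumes each agent run returns the converted component as a string, and a simulated agent replaces a real one.

```python
import itertools
from collections import Counter
from typing import Callable, Optional


def vote(task: Callable[[], str], runs: int = 5, quorum: float = 0.6) -> Optional[str]:
    """Run a nondeterministic task several times and accept the most common output
    only if it reaches the quorum; otherwise return None to flag it for human review."""
    outputs = [task() for _ in range(runs)]
    winner, count = Counter(outputs).most_common(1)[0]
    return winner if count / runs >= quorum else None


# Simulated agent: four of five runs produce the same converted component.
simulated = itertools.cycle(["<Button primary />"] * 4 + ["<Button type='primary' />"])
print(vote(lambda: next(simulated)))
```

Exact string comparison is a simplification. In a real migration, you might compare the results of component tests instead. A `None` result is the natural hand-off point for the human-in-the-loop review described above.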

The diagram below illustrates a typical Vue-to-React migration workflow, showing how AI agents systematically convert components while human developers validate critical functionality and handle edge cases that require technical judgment.

This approach delivers the user experience improvements your stakeholders want while preserving the backend systems that power your business operations, giving you the best of both worlds without the risk of a full-stack replacement.

Legacy code modernization

Beyond frontend improvements, AI delivers transformational value at the codebase level, directly addressing technical debt that constrains enterprise agility. Atlassian’s latest State of Developer Experience report reveals that 70% of engineering managers have reclaimed over 25% of their time through AI assistants, enabling teams to focus on architectural improvements and code quality rather than repetitive maintenance tasks.

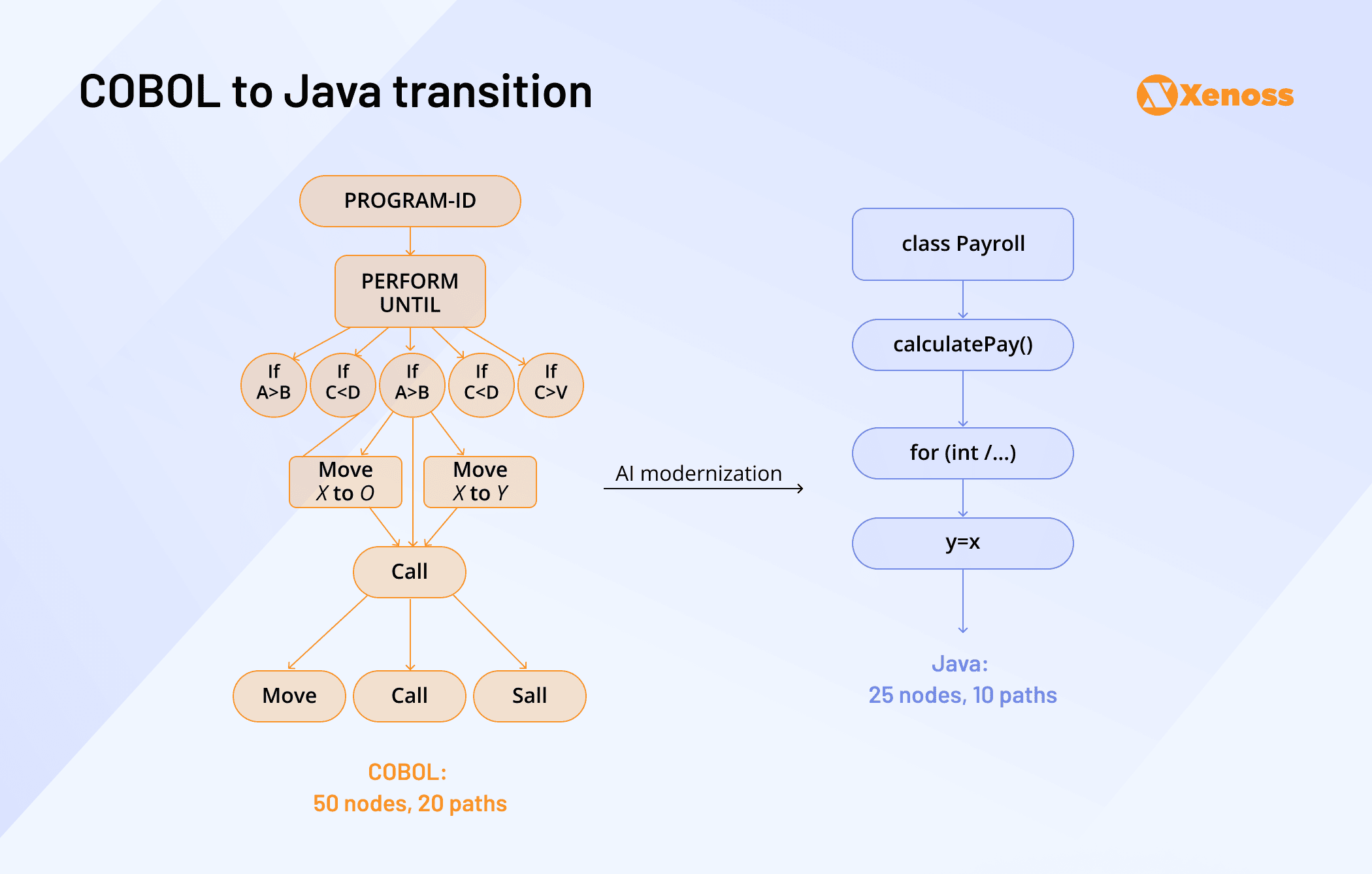

For example, one study examines the AI-driven conversion of COBOL code into Java as part of a legacy system modernization project. In its pipeline, AI suggests upgrades, Java handles parsing, and React visualizes the results. The method achieved 93% accuracy and reduced code complexity by 35% and coupling by 33%, offering a scalable path to reviving COBOL systems.

The visualization below illustrates how AI parses COBOL logic into abstract syntax trees, enabling systematic conversion to modern Java patterns while preserving business logic integrity. This approach offers particular value for banking and insurance organizations where COBOL systems still manage critical financial operations but require integration with modern application ecosystems.
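The parse-then-generate idea can be illustrated with a toy translator that handles only two COBOL statement forms. This sketch rests on heavy simplifying assumptions and does not reproduce the study's method. A real converter needs a full COBOL grammar (PICTURE clauses, PERFORM, file handling). It should also map fixed-point COBOL arithmetic to a type such as Java’s BigDecimal rather than emitting the plain assignments shown here.

```python
import re
from dataclasses import dataclass


@dataclass
class Move:  # AST node for: MOVE <source> TO <target>.
    source: str
    target: str


@dataclass
class Add:  # AST node for: ADD <operand> TO <target>.
    operand: str
    target: str


STATEMENT = re.compile(r"^(MOVE|ADD)\s+(\S+)\s+TO\s+(\S+)\.$")


def to_camel(name: str) -> str:
    """Convert a COBOL data name such as WS-TOTAL-AMT to Java-style wsTotalAmt."""
    head, *rest = name.lower().split("-")
    return head + "".join(part.capitalize() for part in rest)


def parse(line: str):
    """Parse one supported statement into an AST node."""
    match = STATEMENT.match(line.strip())
    if not match:
        raise ValueError(f"unsupported statement: {line!r}")
    verb, first, target = match.groups()
    return Move(first, target) if verb == "MOVE" else Add(first, target)


def emit_java(node) -> str:
    """Generate a Java statement from an AST node."""
    def operand(token: str) -> str:
        return token if token.isdigit() else to_camel(token)

    if isinstance(node, Move):
        return f"{to_camel(node.target)} = {operand(node.source)};"
    return f"{to_camel(node.target)} += {operand(node.operand)};"


for line in ["MOVE 0 TO WS-TOTAL-AMT.", "ADD WS-FEE TO WS-TOTAL-AMT."]:
    print(emit_java(parse(line)))
```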

AI can tackle the technical archaeology that human developers often avoid: mission-critical but poorly documented systems that everyone is afraid to touch. By automating the analysis and conversion process, organizations can finally modernize their most stubborn legacy code with fewer of the usual risks and resource requirements.

Examples of AI solutions coexisting with the legacy stack

AI doesn’t require a full-stack system overhaul to deliver tangible results.

Case #1. Automated patient follow-up in healthcare

A Heart Failure Unit at a European hospital was drowning in manual follow-up calls and struggling to maintain consistent patient engagement after discharge. The traditional approach, where nurses manually called patients and documented responses on paper forms, consumed enormous resources while delivering inconsistent results.

The hospital’s IT team implemented an AI-powered voice assistant that could perform follow-up calls based on individual patient conditions and automatically log responses into their existing EHR system. The key was maintaining zero disruption to clinical workflows that medical staff had relied on for years.

The implementation unfolded over six weeks using a careful phased approach.

Core stages of the integration process:

- Pilot program to validate initial ROI, with patient lists downloaded directly into the AI agent’s system as CSV files and FHIR as the default interoperability standard

- Automated, scheduled data exchange between the EHR and an agent server via a secure file transfer (SFTP) workflow, with minimal changes to the EHR

- Full integration with the EHR via real-time APIs built on FHIR and secured with OAuth 2.0, enabling bi-directional data exchange and real-time updates for timely interventions
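In the final stage, a FHIR REST search is an HTTP GET against a resource endpoint, authorized with an OAuth 2.0 bearer token. The sketch below builds such a request and extracts Patient IDs from the returned searchset Bundle. The server URL and token are placeholders, and obtaining the token (for example, through a SMART on FHIR authorization flow) is out of scope.

```python
import json
import urllib.parse
import urllib.request


def build_search_request(base_url: str, token: str, params: dict) -> urllib.request.Request:
    """Build a FHIR search (GET [base]/Patient?...) carrying an OAuth 2.0 bearer token."""
    query = urllib.parse.urlencode(params)
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/Patient?{query}",
        headers={"Authorization": f"Bearer {token}", "Accept": "application/fhir+json"},
    )


def patient_ids(bundle: dict) -> list[str]:
    """Extract Patient resource IDs from a FHIR searchset Bundle."""
    return [
        entry["resource"]["id"]
        for entry in bundle.get("entry", [])
        if entry.get("resource", {}).get("resourceType") == "Patient"
    ]


# Placeholder endpoint; sending the request would be urllib.request.urlopen(request).
request = build_search_request(
    "https://ehr.example.org/fhir", "ACCESS_TOKEN", {"_lastUpdated": "ge2024-01-01"}
)
print(request.full_url)

# Abbreviated response shape, parsed locally instead of fetched over the network.
sample = json.loads("""{"resourceType": "Bundle", "type": "searchset",
  "entry": [{"resource": {"resourceType": "Patient", "id": "p-001"}},
            {"resource": {"resourceType": "OperationOutcome", "id": "warn-1"}}]}""")
print(patient_ids(sample))
```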

During the post-implementation period, the hospital achieved the following outcomes:

- 54.7% reduction in heart failure readmissions

- 492 nursing hours saved over 8 months

- 89% patient adherence

Most importantly, doctors and nurses continued working exactly as before. The AI simply handled the time-consuming follow-up work in the background.

Case #2. Anomaly detection model for predictive maintenance in oil and gas

A global oil and gas company headquartered in Asia set out to improve its upstream operations by increasing the reliability of its gas turbine compressors (GTCs). The existing GTC monitoring system couldn’t effectively prioritize alerts from the more than 150 sensors installed on each GTC or provide prescriptive insights based on those alerts.

The inefficiency was staggering: experienced technicians were essentially playing detective with massive amounts of unstructured data, a task that required considerable time and deep expertise that was becoming harder to find. The company recognized the need for a smarter approach and decided to integrate a machine learning solution with its existing industrial systems. The project took six weeks to unify four years of operational data and deploy predictive analytics at enterprise scale.

Core stages of the integration process:

- Ingestion of 1 billion rows of data from the proprietary Failure Mode Library and from data sources containing asset hierarchy, telemetry, and anomaly events to create a unified object model and data image

- Training an anomaly detection model to run at scale on the company’s proprietary data pipelines

- Building a model-driven architecture with data virtualization and AI governance layers

- Development of a custom application with an adaptive UI framework to visualize AI alerts and prescriptive insights on KPI-focused dashboards with advanced filtering capabilities
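The case description doesn’t name the model, so the sketch below uses a deliberately simple stand-in: a rolling z-score per sensor. It shows the core idea of scoring each reading against recent history and ranking alerts by severity, so technicians see the worst deviations first. Sensor names, window size, and threshold are hypothetical.

```python
import statistics


def zscore(history: list[float], value: float) -> float:
    """How many standard deviations value lies from the mean of recent history."""
    if len(history) < 2:
        return 0.0
    stdev = statistics.stdev(history)
    if stdev == 0:
        return 0.0
    return (value - statistics.fmean(history)) / stdev


def prioritize(readings: dict[str, list[float]], window: int = 20,
               threshold: float = 3.0) -> list[tuple[str, float]]:
    """Score each sensor's latest reading against its preceding window and return
    the sensors that breach the threshold, most severe first."""
    alerts = []
    for sensor, values in readings.items():
        history, latest = values[-window - 1:-1], values[-1]
        z = zscore(history, latest)
        if abs(z) >= threshold:
            alerts.append((sensor, round(z, 1)))
    return sorted(alerts, key=lambda alert: abs(alert[1]), reverse=True)


readings = {
    "discharge_temp": [100, 102] * 5 + [130],
    "bearing_temp": [60, 62] * 5 + [56],
    "vibration": [5.0, 5.2] * 5 + [5.1],
}
print(prioritize(readings))
```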

AI integration results:

- $40 million in annual savings from a 4.7% reduction in non-productive time

- 99% reduction in false alarms

- 88% accuracy in predicting compressor failures after six months

The decision to integrate AI with legacy systems and databases is often driven by a real business need that’s costing the company time and money. Each of the above implementation approaches is unique and tailored to the company’s existing infrastructure.

From tech debt to trust: Barriers to AI adoption in legacy environments

Let’s address the elephant in the room: the real barriers that make CTOs hesitant about AI integration. Understanding these challenges and, more importantly, how to navigate them often makes the difference between successful implementation and expensive false starts.

Technical debt

A global CTO survey identifies technical debt as the primary barrier to growth. SnapLogic’s report supports this: 63% of surveyed businesses report that technical debt has severe adverse effects on their technology stacks. The primary cause is legacy software.

When you’re already drowning in technical debt, AI integration can feel like adding complexity to an already bloated data stack. It’s a legitimate concern, but it doesn’t have to be a roadblock.

To ease concerns about technical debt among your teams, implement AI as isolated, API-connected services that don’t alter core legacy code and can be easily reversed if needed. Use sandbox environments or parallel systems to test AI functionality before connecting it to production workloads. This helps contain risk and keeps your technical debt from growing further.
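One way to apply the parallel-system idea is shadow mode. The AI service receives the same inputs as the legacy code path, but only the legacy result is used, and any disagreements are logged for review. Here is a minimal sketch, assuming both paths can be called as Python functions. The rule functions and thresholds are hypothetical.

```python
import logging
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("ai_shadow")


def shadow(legacy: Callable[..., T], candidate: Callable[..., T],
           enabled: bool = True) -> Callable[..., T]:
    """Run an AI-backed candidate alongside a legacy function without changing it.

    The legacy result is always returned. The candidate's output is only compared and
    logged, and any exception it raises is contained. enabled=False is the rollback switch.
    """
    def wrapper(*args, **kwargs) -> T:
        result = legacy(*args, **kwargs)
        if enabled:
            try:
                proposal = candidate(*args, **kwargs)
                if proposal != result:
                    log.info("mismatch args=%r legacy=%r candidate=%r", args, result, proposal)
            except Exception:
                log.exception("candidate failed; legacy result kept")
        return result
    return wrapper


def legacy_review_rule(amount: float) -> str:
    return "review" if amount > 10_000 else "approve"


def ai_review_rule(amount: float) -> str:  # stand-in for a call to a hypothetical AI service
    return "review" if amount > 8_000 else "approve"


classify = shadow(legacy_review_rule, ai_review_rule)
print(classify(9_000))  # approve: the legacy decision stands; the disagreement is logged
```

The wrapper never alters the legacy function, so removing it (or setting `enabled=False`) fully reverses the integration. The logged mismatches then serve as evidence when deciding whether the AI path is ready for production.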

What matters most is having a clear, step-by-step roadmap for AI integration, enabling you to roll out AI infrastructure in stages, from pilot to full-scale deployment. Measuring ROI in this context should go beyond financial metrics. Include operational KPIs like shorter sales cycles, faster decision-making, or lower error rates.

The goal isn’t to eliminate technical debt overnight, but to ensure AI helps reduce rather than increase your maintenance burden.

Data quality and management issues

Data quality concerns represent the single largest barrier to enterprise AI adoption, with 67% of CEOs citing potential errors in AI/ML solutions as their primary implementation concern. For CTOs, this translates into a dual challenge: ensuring data reliability while preventing data management bottlenecks from derailing AI initiatives entirely.

The apparent contradiction of executives doubting their own data infrastructure reflects the velocity of modern data growth. Enterprise data volumes double every 12-18 months, transforming yesterday’s reliable datasets into today’s governance challenges. Even CTOs confident in their current data quality must constantly question whether their infrastructure can handle tomorrow’s AI demands.

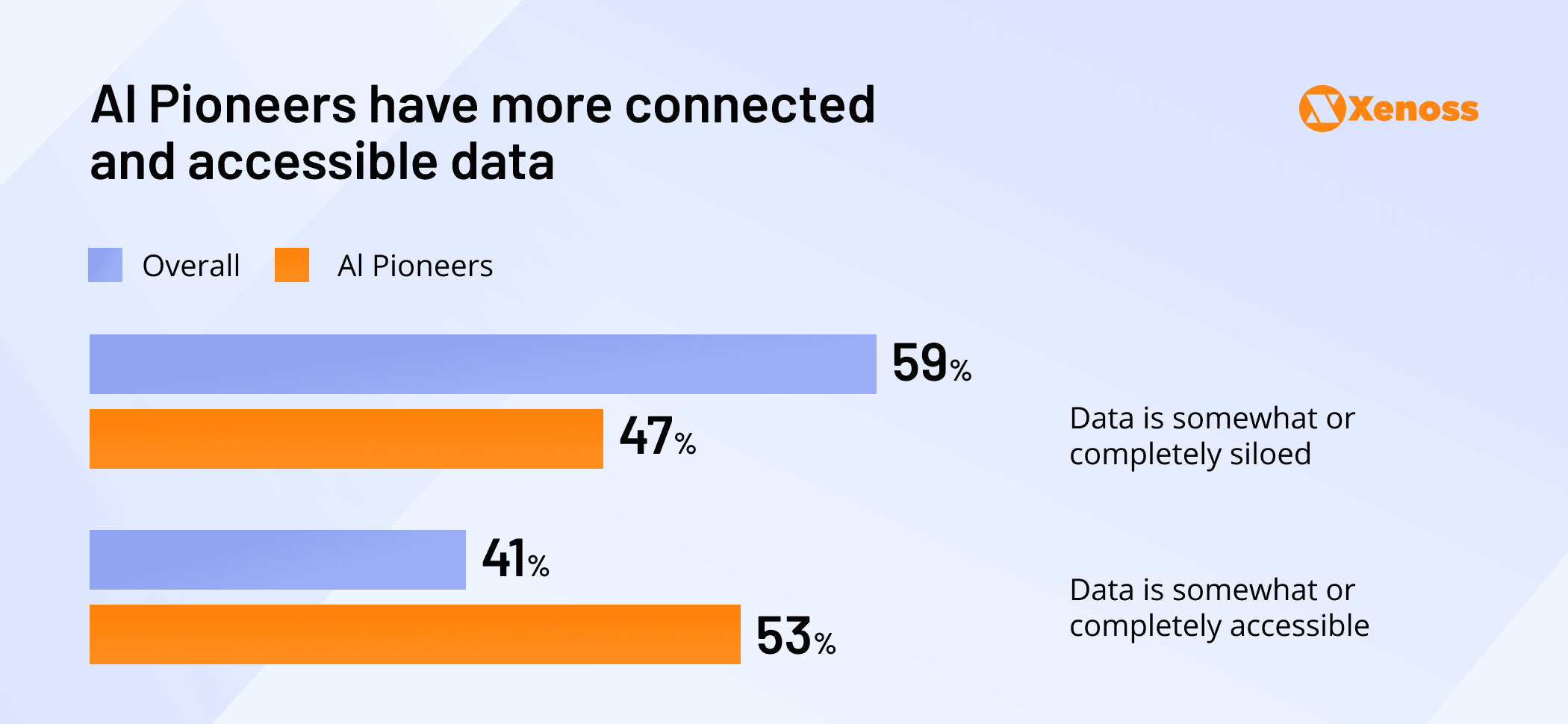

Data challenges typically manifest in three critical areas that directly impact AI readiness:

- Departmental data silos prevent the cross-functional insights that AI requires

- The absence of centralized data architecture, whether through modern data lakes or data mesh approaches, limits AI model training and validation

- Insufficient data governance creates uncertainty about data ownership and access rights, paralyzing AI initiatives before they begin

AI adoption often becomes the catalyst that forces organizations to finally tackle their data management challenges. Building an enterprise knowledge base with LLMs, for instance, requires extracting data from multiple sources, establishing relationships between previously disconnected data points, and developing unified approaches to data management that benefit the entire organization.

The chart above illustrates a compelling pattern: organizations that commit to AI implementation consistently improve their data management practices, while those that delay AI adoption often remain stuck with fragmented data infrastructure.

CTOs should treat data concerns as motivation rather than as roadblocks. Begin with a comprehensive data infrastructure audit that identifies governance gaps, quantifies data quality issues, and prioritizes remediation efforts.
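Quantifying data quality issues can start with simple per-source metrics. Here is a minimal sketch, assuming records arrive as Python dicts. The field names and sample rows are hypothetical, and a full audit would also cover validity, freshness, and cross-system consistency.

```python
from collections import Counter


def profile(rows: list[dict], key: str) -> dict:
    """Quantify two basic data quality issues: missing values per column and duplicate keys."""
    if not rows:
        return {"rows": 0, "missing_ratio": {}, "duplicate_keys": []}
    columns = sorted({col for row in rows for col in row})
    missing = {
        col: sum(1 for row in rows if row.get(col) in (None, "")) / len(rows)
        for col in columns
    }
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = [k for k, n in key_counts.items() if n > 1]
    return {"rows": len(rows), "missing_ratio": missing, "duplicate_keys": duplicates}


customers = [
    {"id": "1", "email": "a@example.com", "region": "EU"},
    {"id": "2", "email": "", "region": "US"},
    {"id": "2", "email": "b@example.com"},
    {"id": "3", "email": "c@example.com", "region": None},
]
print(profile(customers, key="id"))
```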

Security concerns

Security remains one of the most significant barriers to enterprise AI adoption in organizations that rely on legacy systems. Many of these systems still depend on outdated protocols, hardcoded credentials, and unpatched software components, making them vulnerable to exploits such as ransomware or privilege escalation. Security concerns about putting their infrastructure at risk are stopping 28% of CTOs from pursuing digital transformation.

In regulated industries such as finance and healthcare, the pressure is even greater. GDPR, HIPAA, PCI DSS, and CCPA require strict data governance, auditability, and explainability. Off-the-shelf models used for fraud detection or patient risk scoring may fail to meet these requirements due to a lack of traceable decision logic or audit trails.

Successful implementations start with zero-trust security models that assume no system or user can be trusted by default. Every AI service request gets authenticated and validated, while role-based access controls ensure AI systems only access data they actually need for specific tasks.

DevSecOps practices become critical here, integrating security testing directly into AI development cycles rather than bolting it on later. When connecting AI tools to legacy systems, IT teams rely on industry-standard protocols such as TLS to encrypt data in transit, and they maintain detailed logs of every interaction.
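The zero-trust and role-based access principles above can be sketched as a deny-by-default check that runs on every AI service request and records each decision. Authentication itself (for example, validating an OAuth 2.0 access token or a client certificate) is assumed to happen upstream and is represented here by a boolean flag. The roles and dataset names are hypothetical.

```python
import json
import logging
from datetime import datetime, timezone

audit_log = logging.getLogger("ai_audit")

# Hypothetical role-to-dataset grants. Anything not listed is denied.
GRANTS = {
    "support_agent_bot": {"tickets", "kb_articles"},
    "forecasting_service": {"sales_history"},
}


def authorize(request: dict) -> bool:
    """Deny by default: allow only authenticated callers whose role grants the dataset.
    Every decision, allowed or not, is written to the audit log as a JSON line."""
    allowed = bool(request.get("authenticated")) and (
        request.get("dataset") in GRANTS.get(request.get("role"), set())
    )
    audit_log.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "role": request.get("role"),
        "dataset": request.get("dataset"),
        "allowed": allowed,
    }))
    return allowed


print(authorize({"authenticated": True, "role": "support_agent_bot", "dataset": "tickets"}))
print(authorize({"authenticated": True, "role": "support_agent_bot", "dataset": "payroll"}))
print(authorize({"authenticated": False, "role": "forecasting_service", "dataset": "sales_history"}))
```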

Infrastructure constraints

Legacy systems that have been running on-premises for years weren’t designed for modern AI workloads, which demand low-latency, high-throughput networks. According to Cisco’s survey, 73% of business leaders are concerned about falling behind the competition in AI integration, primarily because of their outdated network infrastructure. Implementing AI on-premises or in a hybrid environment can be a viable solution.

The choice of the infrastructure setup largely depends on your use case and the complexity of the task AI should solve. Consider the scenarios below.

Scenario #1. For lightweight AI applications like chatbots, document processing, or basic analytics, on-premises deployment often makes perfect sense. Small language models can run effectively on existing server infrastructure, especially when you add API-based middleware layers that let AI systems access your legacy databases without requiring major system modifications.

As your AI usage grows:

- On-premises workloads can be distributed across GPU clusters and orchestrated efficiently.

- Tools like Kubernetes, Ray, or NVIDIA Triton Inference Server help manage resource allocation and prevent bottlenecks, thereby enhancing overall system performance.

Scenario #2. For more demanding applications, such as large language model implementations or complex machine learning pipelines, hybrid environments typically offer the best balance. For instance, to run the latest Llama 4 large language models, it’s recommended to have:

- An NVIDIA GPU with more than 48GB of VRAM and at least 64GB of system RAM

- A multi-GPU setup with more than 80GB of VRAM per GPU for large-scale implementations
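Requirements like these follow from a common rule of thumb: the memory needed for model weights is roughly the parameter count times the bytes per parameter, plus headroom for the KV cache and activations. The sketch below applies this rule to a hypothetical 70-billion-parameter model. The 20% overhead factor is an assumption, and real usage depends on context length, batch size, and the serving stack. For mixture-of-experts models such as Llama 4, use the total parameter count, since all experts normally have to be loaded even though only some are active per token.

```python
def estimate_vram_gb(params_billions: float, bits_per_param: int,
                     overhead: float = 0.2) -> float:
    """Rough inference memory in GB: weights plus a fractional overhead for the
    KV cache, activations, and runtime buffers. A rule of thumb, not a sizing guarantee."""
    weights_gb = params_billions * bits_per_param / 8  # 1e9 params x bytes per param = GB
    return round(weights_gb * (1 + overhead), 1)


for bits in (16, 8, 4):
    print(f"70B parameters at {bits}-bit precision: ~{estimate_vram_gb(70, bits)} GB")
```

Quantizing from 16-bit to 4-bit cuts the estimate from about 168 GB to about 42 GB. That is the difference between needing a multi-GPU server and fitting on a single large-memory GPU.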

Due to these performance and resource requirements, hybrid environments (where some AI workloads are processed in the cloud while core business systems remain on-premises) often strike the best balance between scale, speed, infrastructure costs, and data control.

Approaches to AI implementation in legacy systems

We’ve researched the enterprise AI market and identified the following opportunities for low-risk AI integration.

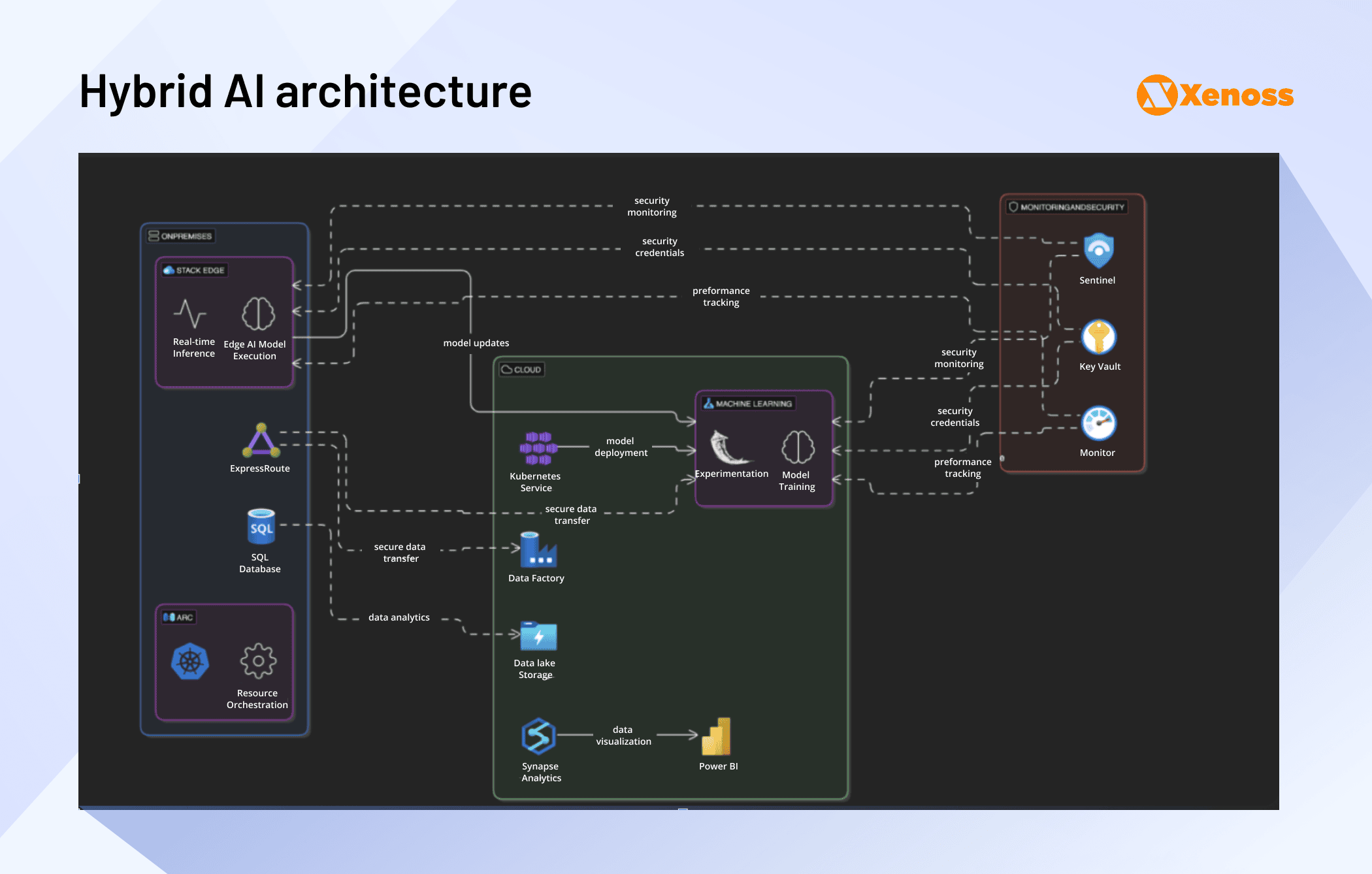

Hybrid architecture

A hybrid setup distributes AI workloads strategically, running computationally intensive tasks like model training in the cloud while keeping sensitive data processing and inference on-premises. This approach gives you the best of both worlds: cloud scalability when you need it, data control when it matters most.

Consider how a manufacturing company might implement demand forecasting. They could train predictive models in the cloud using anonymized historical production data, taking advantage of virtually unlimited computational resources and the latest ML frameworks. Once trained, those models get deployed on-premises where they can:

- Process real-time operational data from ERP systems

- Generate immediate forecasts

- Trigger automated supply chain adjustments

All without sensitive business data ever leaving the corporate network.

Making hybrid architectures work:

- You’ll likely need custom-built API gateways to bridge the gap between older systems and modern AI services.

- It makes sense to use a mix of proprietary and open-source models depending on the task and budget.

- It’s possible to route simple tasks to lightweight models and reserve complex requests for higher-performing ones.

Hybrid approaches make sense when your organization values cloud flexibility and cutting-edge AI capabilities but can’t compromise on data sovereignty or regulatory compliance. They’re particularly popular in industries such as healthcare, finance, and manufacturing, where strict data residency requirements must be balanced against the demanding performance needs of AI.

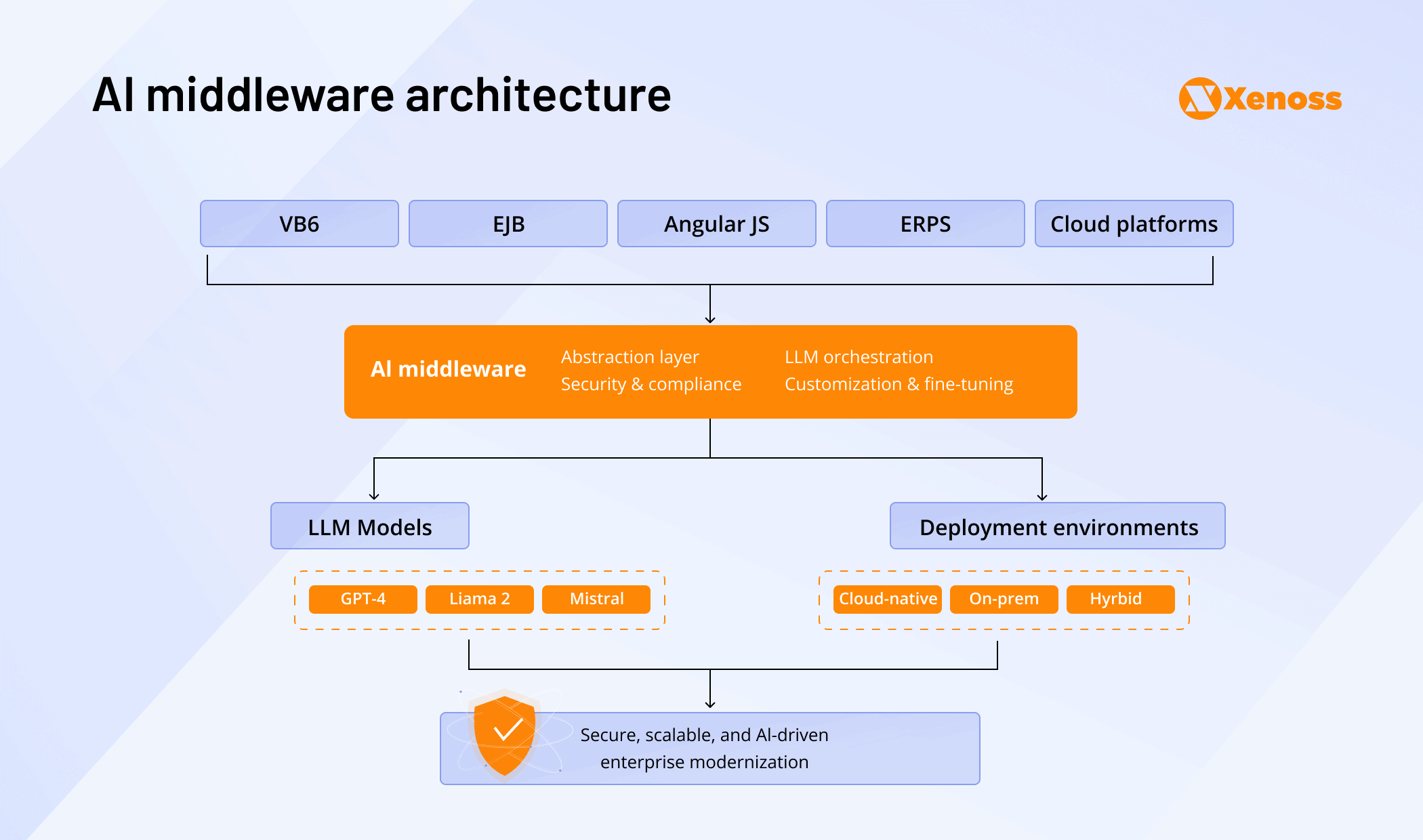

AI middleware architecture

AI middleware serves as a bridge, enabling you to integrate multiple external AI tools into various legacy systems. Instead of hard-coding integrations with specific AI services, your legacy applications communicate with a single middleware layer that can route requests to different AI providers based on performance, cost, or capability requirements. If you need ChatGPT for content generation but Claude for analysis, the middleware handles that seamlessly.

Deployment options match your security preferences: Kubernetes for elastic scaling, virtual machines for familiar management, or on-premises servers for maximum data control.

A well-designed middleware layer also simplifies observability. By routing all AI-related interactions through a single point, you gain clear visibility into model behavior, performance, usage patterns, and costs across the organization. This level of visibility is crucial when managing multiple AI services that evolve at varying rates.
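Here is a minimal sketch of the routing and observability roles, assuming each provider can be wrapped in a function that takes and returns text. The provider names are hypothetical stubs. In practice, each would wrap a vendor’s SDK, and switching the provider for a task would mean editing `routes` rather than the legacy application.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AIMiddleware:
    """Single entry point between legacy applications and AI providers."""
    providers: dict[str, Callable[[str], str]]  # provider name -> client function
    routes: dict[str, str]                      # task type -> provider name
    metrics: list[dict] = field(default_factory=list)

    def handle(self, task: str, prompt: str) -> str:
        """Route a request by task type and record basic usage metrics."""
        provider = self.routes.get(task)
        if provider is None:
            raise KeyError(f"no provider configured for task {task!r}")
        start = time.perf_counter()
        reply = self.providers[provider](prompt)
        self.metrics.append({
            "task": task,
            "provider": provider,
            "latency_s": round(time.perf_counter() - start, 4),
            "prompt_chars": len(prompt),
        })
        return reply


# Stub clients stand in for real vendor SDK calls (one for generation, one for analysis).
middleware = AIMiddleware(
    providers={
        "provider_a": lambda prompt: f"draft: {prompt}",
        "provider_b": lambda prompt: f"analysis: {prompt}",
    },
    routes={"generate": "provider_a", "analyze": "provider_b"},
)
print(middleware.handle("analyze", "Q3 churn by region"))
print(middleware.metrics)
```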

The middleware approach works particularly well when you need clean separation between fragile legacy code and rapidly evolving AI tools.

This architecture is ideal for organizations that want to experiment with different AI capabilities without vendor lock-in or disruption to legacy systems.

Modular AI architecture

Modular architecture treats each AI capability as an independent microservice that integrates with legacy systems through standardized interfaces. Rather than deploying monolithic AI solutions, you build focused services for specific functions: computer vision for quality control, recommendation engines for customer engagement, or predictive analytics for forecasting, each operating independently while sharing data through secure APIs.

The power lies in surgical scalability. When your risk scoring engine needs higher accuracy, you can update or retrain just that module while leaving customer-facing systems unchanged. This approach particularly suits organizations building sophisticated AI environments, such as enterprise-level multi-agent systems, where multiple AI capabilities must coordinate while maintaining operational independence.

Technical implementation leverages modern containerization and orchestration. Modules:

- Communicate via standardized RESTful APIs and message brokers for parallel processing

- Can be containerized and deployed through Kubernetes or serverless platforms for precise resource allocation

- Maintain stateless operations with shared data stores, ensuring consistency across services
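Here is a minimal sketch of one such module, assuming JSON messages. The in-process `queue.Queue` stands in for a message broker such as Kafka or RabbitMQ, a dict stands in for the shared data store, and the scoring rule is a hypothetical placeholder. Because the handler keeps no state between messages, you could run several copies in parallel or swap in a retrained version without touching other modules.

```python
import json
import queue


def risk_scoring_v2(message: dict) -> dict:
    """Hypothetical stateless module: the output depends only on the input message."""
    score = message["amount"] / 50_000 + (0.3 if message["new_customer"] else 0.0)
    return {"order_id": message["order_id"], "risk": round(min(score, 1.0), 2), "model": "risk-v2"}


def run_worker(inbox: queue.Queue, store: dict, handler) -> None:
    """Drain JSON messages from the broker stand-in and write results to a shared store."""
    while True:
        try:
            raw = inbox.get_nowait()
        except queue.Empty:
            return
        result = handler(json.loads(raw))
        store[result["order_id"]] = result


inbox: queue.Queue = queue.Queue()
for order in ({"order_id": "A1", "amount": 10_000, "new_customer": True},
              {"order_id": "A2", "amount": 60_000, "new_customer": False}):
    inbox.put(json.dumps(order))

shared_store: dict = {}
run_worker(inbox, shared_store, risk_scoring_v2)
print(shared_store)
```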

Modular architectures work best for organizations with strong DevOps capabilities and long-term AI strategies. The complexity trade-off favors this approach when AI requirements span multiple business functions or when different capabilities require different update cycles and performance characteristics.

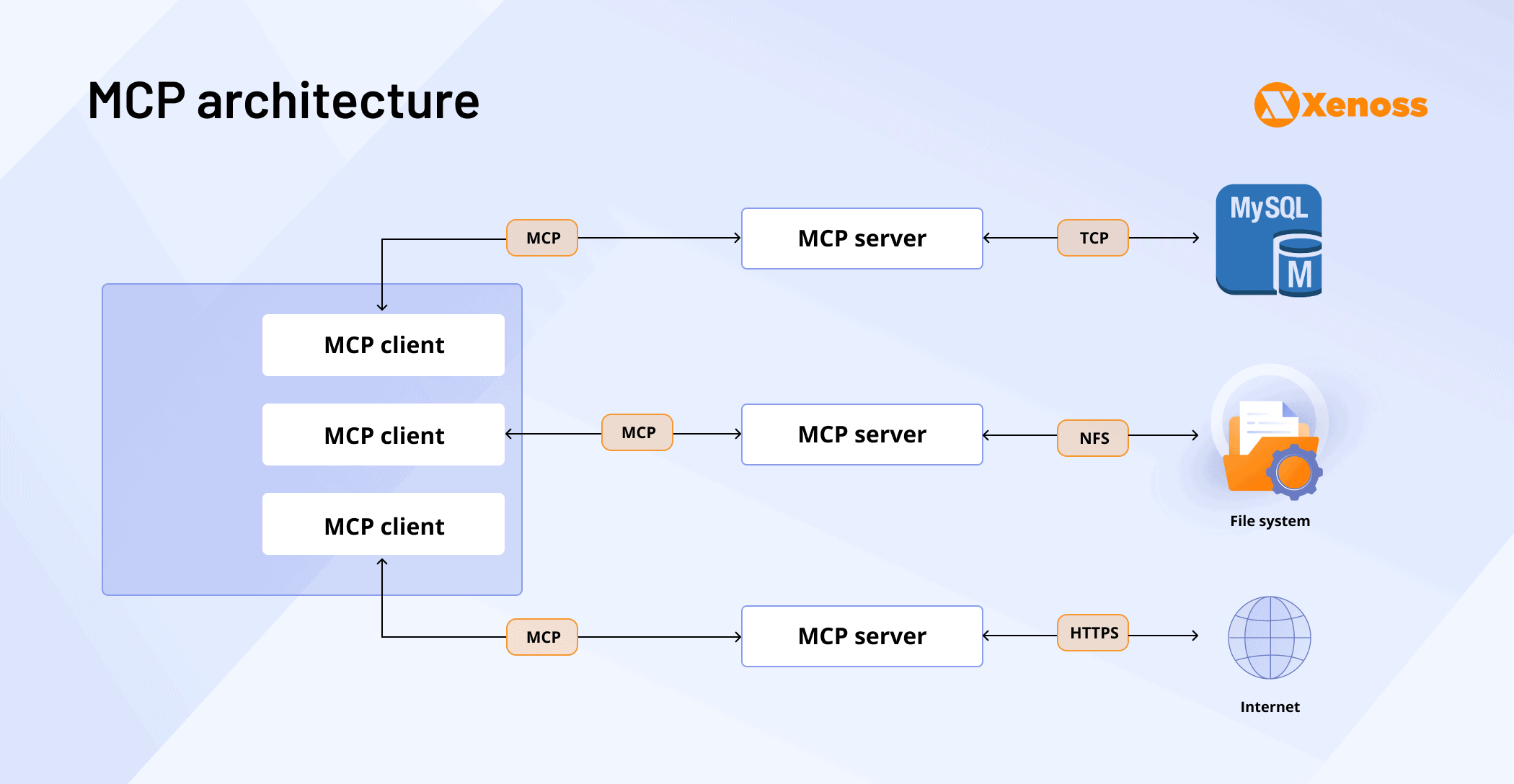

MCP server

MCP (Model Context Protocol) is an open standard for connecting AI agents and LLM applications to external tools, APIs, and data sources, which makes it useful for legacy software modernization. In MCP terms, an AI application (the MCP host) uses MCP clients to connect to MCP servers, each of which exposes a system’s data and functions through a common interface. Instead of building a separate integration for every combination of AI tool and legacy system, you wrap a system in an MCP server once, and any MCP-compatible AI application can use it. This makes MCP a more straightforward way to start using AI solutions, even with outdated software.
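MCP messages use JSON-RPC 2.0. The sketch below shows the shape of a server that exposes one hypothetical legacy lookup as a tool through the `tools/list` and `tools/call` methods. It is deliberately simplified: it omits the initialization handshake, capability negotiation, and transport that a compliant server needs, all of which the official MCP SDKs handle. The order data is invented.

```python
import json

# Hypothetical data from a legacy order system, exposed to AI agents as one tool.
LEGACY_ORDERS = {"100045": "SHIPPED", "100046": "ON HOLD"}

TOOLS = [{
    "name": "get_order_status",
    "description": "Look up an order's status in the legacy order system.",
    "inputSchema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}]


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP tools/list or tools/call method."""
    request = json.loads(raw)
    method, params = request.get("method"), request.get("params", {})
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    if method == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif method == "tools/call" and params.get("name") == "get_order_status":
        order_id = params.get("arguments", {}).get("order_id", "")
        status = LEGACY_ORDERS.get(order_id)
        reply["result"] = {
            "content": [{"type": "text", "text": status or f"Order {order_id} not found"}],
            "isError": status is None,
        }
    elif method == "tools/call":
        reply["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(reply)


print(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/call", '
             '"params": {"name": "get_order_status", "arguments": {"order_id": "100045"}}}'))
```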

MCP is a viable solution for quickly testing AI capabilities in your legacy systems, but it’s no silver bullet. Common challenges include the overhead of running a growing number of servers, which can affect system performance. MCP is also a relatively new standard with a still-maturing ecosystem of compatible hosts and servers, so it’s essential to first validate its effectiveness for your IT infrastructure.

The beauty of these architectural approaches is their flexibility; they’re not mutually exclusive. A modular architecture can integrate AI agents via middleware for enhanced security or combine with custom API gateways for optimized performance.

Plan ahead: Benefits of long-term AI maturity

The most meaningful results of AI integration typically emerge 18 to 24 months after implementation. That’s when workflows stabilize, teams adapt to AI-supported decision-making, and models begin delivering higher-value outcomes based on actual usage and feedback loops. Mature AI programs are enabling organizations to reduce operational costs by 20–30% and accelerate time to market by up to 40%.

The path forward depends on your specific challenges and infrastructure reality. Your choice might be a small language model (SLM) trained to perform a specific task, such as a custom chatbot integrated into your legacy CRM, or an LLM that solves complex production issues across multiple locations. For each scenario, Xenoss can find an optimal solution that won’t leave you inundated with technical debt, ruinous infrastructure bills, or fines due to non-compliance.