Data has become the defining competitive advantage for modern enterprises. Companies across industries are racing to acquire, process, and activate data assets, driving unprecedented investment in validation and labeling platforms.

Meta recruited Scale AI’s Alexander Wang to head its Superintelligence team while acquiring a 49% stake in the company. Meanwhile, Surge AI, Scale’s primary competitor, is targeting a $1 billion funding round.

Leading AI labs employ fundamentally different validation strategies. High-Flyer Capital Management, the firm behind DeepSeek, reportedly chose synthetic data generation, using ChatGPT-generated content to train and refine its models.

OpenAI takes the opposite approach, recruiting PhD-level experts in biology, physics, and specialized technical domains to establish quality benchmarks. The company reportedly employs over 300 domain specialists at $100 per hour to generate doctorate-level training data.

While conclusive performance data remains limited, industry experts believe human expertise will remain essential. Amjad Hamza, strategic project lead at Mercor, argues that “talent will always be needed as long as there is a gap between the frontier lab or model and a top human in that field.”

Academic research supports human-computer collaboration in data validation. Human-in-the-loop (HITL) approaches helped malware analysts achieve 8x more effective Android threat detection compared to automated-only systems.

Healthcare applications demonstrate similar advantages, with HITL validation achieving 99.5% precision in breast cancer detection, significantly outperforming AI-only (92%) and human-only (96%) approaches.

This guide provides data and product teams with practical frameworks for implementing HITL data quality monitoring in production AI systems. We’ll explore cost-effective architectures for data validation and drift prevention using open-source tools, including Great Expectations and Apache Airflow.

Human-in-the-loop data validation architecture in three steps

Data quality has evolved from a nice-to-have into a business-critical requirement. As event-driven architectures replace traditional reporting pipelines, the stakes for data accuracy have never been higher.

In legacy systems, data validation occurred during database loading. A single data type error would trigger downstream pipeline failures, forcing immediate attention. System constraints naturally enforced data correctness through built-in accountability.

The modern data stack operates differently. Big data platforms became more tolerant of individual errors, removing the forcing function that once kept engineers accountable for data quality. Meanwhile, data volumes exploded to terabytes per second, dramatically increasing error probability.

AI applications across industries now depend on high-quality data for both internal analytics and customer-facing features. Poor data quality directly impacts model accuracy and business outcomes.

Traditional data validation relied on non-expert crowdsourced workers to examine and label every dataset point. As large language models now match or exceed crowdsourced annotator performance, human-in-the-loop validation has emerged as the superior approach.

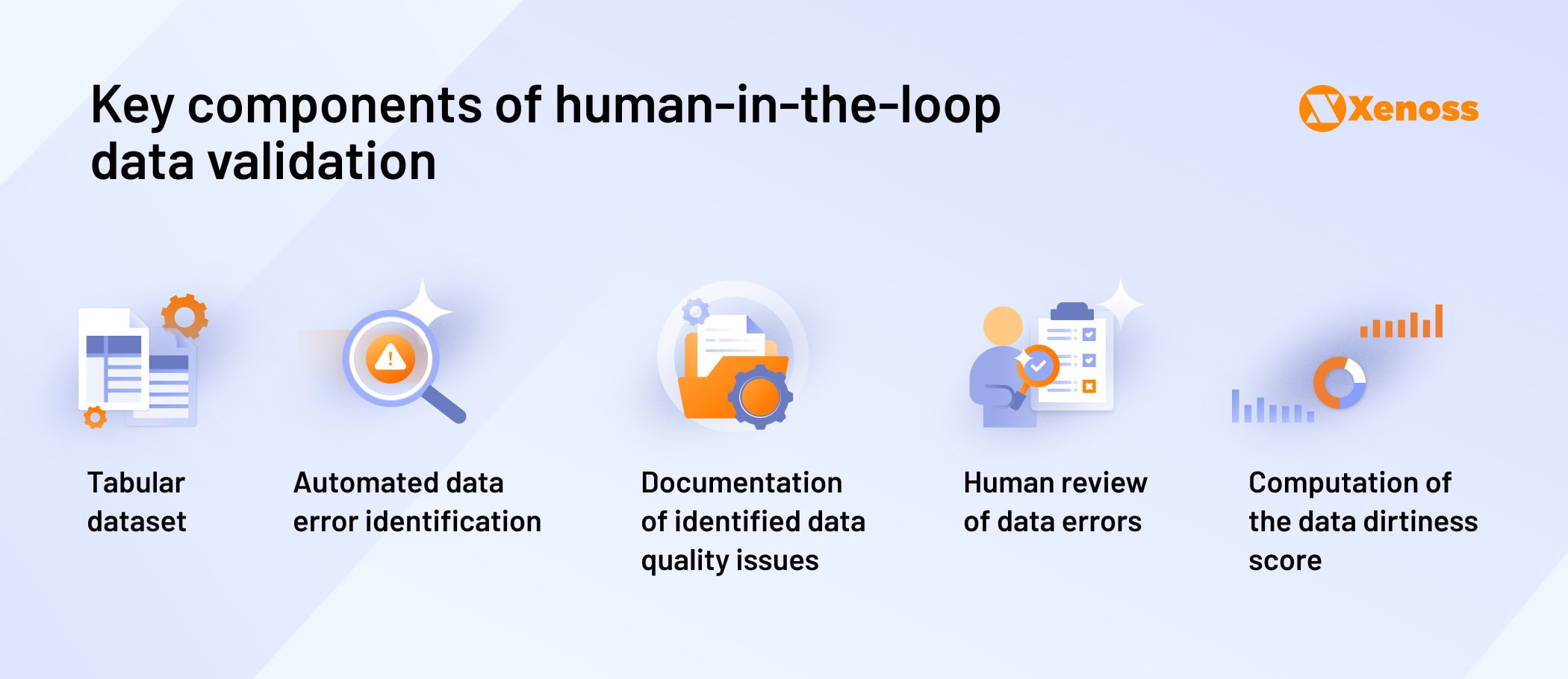

Engineering teams implement HITL validation through three complementary layers, each serving a specific role in maintaining data integrity.

1. Automated flagging

Data engineering teams establish custom validation rules for all incoming data, identifying errors before they enter production pipelines. Automated flagging systems track detected issues and trace them back to root causes, enabling proactive problem resolution.
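As a minimal sketch of rule-based flagging, the snippet below runs a list of named rules against each incoming record and keeps the names of the failed rules so that issues can be traced later. The record fields (`quantity`, `currency`, `customer_id`) and the rules themselves are hypothetical and only illustrate the pattern.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass(frozen=True)
class Rule:
    name: str                        # label reported when the rule fails
    check: Callable[[Record], bool]  # returns True when the record passes


def _positive_int(value: Any) -> bool:
    # bool is a subclass of int in Python, so it is excluded explicitly.
    return isinstance(value, int) and not isinstance(value, bool) and value > 0


# Hypothetical rules for an illustrative "orders" feed.
RULES = [
    Rule("quantity_is_positive_int", lambda r: _positive_int(r.get("quantity"))),
    Rule("currency_is_known", lambda r: r.get("currency") in {"USD", "EUR", "GBP"}),
    Rule("customer_id_present", lambda r: bool(r.get("customer_id"))),
]


def flag_records(records: list[Record], rules: list[Rule] = RULES):
    """Split records into clean rows and flagged rows with the failed rule names."""
    clean, flagged = [], []
    for index, record in enumerate(records):
        failed = [rule.name for rule in rules if not rule.check(record)]
        if failed:
            flagged.append({"index": index, "record": record, "failed_rules": failed})
        else:
            clean.append(record)
    return clean, flagged
```

Keeping the failed rule names next to each flagged record is what makes root-cause tracing possible: counting failures per rule quickly shows which upstream source or field is responsible.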

Automated data flagging has gained widespread adoption because it solves three critical challenges in traditional validation approaches:

Cost efficiency: Human-only validation requires expensive domain experts and specialized analysts to identify data quality issues. Training automated systems on company-specific data patterns delivers comparable accuracy at significantly lower operational costs.

Engineering focus: Data cleansing ranks among the least popular tasks in data engineering. Automation allows teams to redirect their efforts toward higher-value work like architecture design, modeling, and strategic analytics.

Specialized expertise gaps: Most data engineering training programs don’t emphasize quality assurance methodologies. Automated systems can detect complex, systemic issues that human reviewers might overlook without specialized training.

Implementation approaches

Teams implement automated flagging through three primary strategies, depending on their resources and requirements.

LLM-based detection offers a quick-start approach for teams seeking immediate results. Organizations like DeepSeek use large language models to scan datasets and flag suspicious samples. Data engineer Simon Grah documented this methodology in a detailed Towards Data Science implementation guide.

While LLM-based detection is cost-effective and simple to deploy, general-purpose models lack domain-specific expertise. GPT or Claude may miss industry-specific quality requirements that specialized validation systems would catch.
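The sketch below shows one way LLM-based flagging could be wired up. It is not the method from the referenced guide: the prompt wording, the JSON reply format, and the `ask_model` callable are all assumptions. `ask_model` stands in for whichever model client a team uses; it takes a prompt string and returns the model's text reply.

```python
import json
from typing import Any, Callable

# Hypothetical prompt; the doubled braces produce literal braces after .format().
PROMPT_TEMPLATE = (
    "You are a data quality reviewer. Decide whether the record below looks "
    "suspicious. Reply with JSON only: "
    '{{"suspicious": true or false, "reason": "<short reason>"}}\n\n'
    "Record:\n{record}"
)


def flag_with_llm(records: list[dict[str, Any]], ask_model: Callable[[str], str]):
    """Ask the model about each record and return the ones it marks as suspicious."""
    flagged = []
    for index, record in enumerate(records):
        prompt = PROMPT_TEMPLATE.format(record=json.dumps(record, sort_keys=True))
        reply = ask_model(prompt)
        try:
            verdict = json.loads(reply)
            suspicious = bool(verdict["suspicious"])
            reason = str(verdict.get("reason", ""))
        except (json.JSONDecodeError, KeyError, TypeError):
            # Replies that cannot be parsed are flagged so that a human sees them.
            suspicious, reason = True, "unparseable model reply"
        if suspicious:
            flagged.append({"index": index, "record": record, "reason": reason})
    return flagged
```

Treating unparseable replies as suspicious is a deliberate choice here: it fails toward human review rather than silently accepting data the model could not assess.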

Data flagging tools provide robust alternatives for teams without custom development capacity. Data engineering teams most commonly rely on the following:

- Great Expectations: Open-source framework enabling human-readable validation tests and pipeline integrity monitoring.

- Monte Carlo: A data observability platform that automatically detects anomalies, missing data, and schema changes across modern data stacks.

- Deequ: AWS-built Apache Spark library for scalable data quality checks within ETL workflows.

Custom systems represent the gold standard for enterprise organizations handling sensitive data. Building validation logic trained on company-specific error patterns delivers superior accuracy while maintaining complete security control. Custom approaches eliminate third-party data sharing concerns and provide full customization for unique business requirements.

2. Human review

Automated error detection is improving rapidly, but humans remain the end users of model outputs. Involving a human reviewer in data validation ensures that the algorithm is built “by humans for humans.”

Edge case handling represents the most critical area for human intervention. High-performance machine learning models must accurately interpret outlier data that falls outside normal distribution patterns.

Automated systems struggle with edge cases due to their rule-based nature. Without sufficient exposure to similar outlier patterns, these systems either reject valid edge cases or accept problematic anomalies. Human reviewers provide the contextual judgment needed to properly classify borderline samples.

Organizations implement human review through two primary workflows, depending on scale and resource constraints.

Hybrid flagging systems combine automated detection with human oversight. LLMs or rule-based systems identify potentially problematic data samples and route them to human validators for final decisions. This approach reduces reviewer workload by filtering out clear-cut cases while ensuring human expertise handles ambiguous situations.
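A minimal sketch of this routing logic follows, assuming the automated detector returns a label and a confidence score for each sample. The `Prediction` type, the `"valid"` label value, and the 0.9 threshold are illustrative assumptions rather than settings from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    sample_id: str
    label: str         # label proposed by the automated check: "valid" or "invalid"
    confidence: float  # detector's confidence in that label, from 0.0 to 1.0


def route(predictions: list[Prediction], threshold: float = 0.9):
    """Auto-resolve confident cases and queue the rest for human validators."""
    auto_accepted, auto_rejected, human_queue = [], [], []
    for prediction in predictions:
        if prediction.confidence < threshold:
            human_queue.append(prediction)
        elif prediction.label == "valid":
            auto_accepted.append(prediction)
        else:
            auto_rejected.append(prediction)
    # Put the most uncertain samples at the front of the review queue.
    human_queue.sort(key=lambda p: p.confidence)
    return auto_accepted, auto_rejected, human_queue
```

Raising the threshold sends more samples to reviewers and lowers the risk of an automated mistake; lowering it does the opposite, so the value is usually tuned against reviewer capacity.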

Crowdsourced validation platforms like Amazon Mechanical Turk provide scalable human review for organizations lacking internal domain expertise. MTurk connects companies with freelance reviewers for data labeling, content moderation, and quality assessment tasks.

While crowdsourced platforms offer cost efficiency and rapid scaling, they lack the domain-specific knowledge that internal experts provide. Organizations handling sensitive or highly technical data typically prefer internal review processes despite higher costs.

3. Expert validation

While automated flagging systems achieve 95th percentile performance, expert validation remains the final checkpoint for achieving near-absolute accuracy in production AI systems.

Research consistently demonstrates expert validation superiority. A 2009 study compared machine annotations, Mechanical Turk workers, and domain experts at rating machine translations across five languages. Expert validation outperformed both automated algorithms and crowdsourced non-expert assessments.

Although machine learning capabilities have advanced significantly since 2009, expert review still serves as the critical final checkpoint for model data validation.

Machine learning teams face a fundamental challenge: establishing ground truth for validation when no single correct answer exists. The industry has developed three primary approaches to address this uncertainty:

Majority voting establishes validation benchmarks through consensus. Multiple experts review samples, and the most common assessment becomes the accepted standard.

Probabilistic graph modeling incorporates task difficulty, reviewer expertise, and confidence levels to predict optimal answers. Advanced algorithms weigh expert opinions based on historical accuracy and domain knowledge.

Inter-annotator agreement measures consensus levels across expert reviewers. Samples with higher agreement rates receive priority for model training datasets.
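Majority voting and agreement-based selection can be sketched in a few lines. The example uses each sample's share of votes for the winning label as a simple stand-in for agreement; chance-corrected statistics such as Cohen's kappa or Krippendorff's alpha are the more rigorous choice. The 0.8 cutoff and the decision to escalate ties are assumptions made for illustration.

```python
from collections import Counter


def majority_vote(labels: list[str]):
    """Return (winning_label, agreement); the label is None when the top vote is tied."""
    ranked = Counter(labels).most_common()
    top_label, top_votes = ranked[0]
    agreement = top_votes / len(labels)
    if len(ranked) > 1 and ranked[1][1] == top_votes:
        return None, agreement  # tie: no consensus, escalate instead of guessing
    return top_label, agreement


def select_for_training(annotations: dict[str, list[str]], min_agreement: float = 0.8):
    """Keep only samples whose experts agree strongly enough on a single label."""
    selected = {}
    for sample_id, labels in annotations.items():
        label, agreement = majority_vote(labels)
        if label is not None and agreement >= min_agreement:
            selected[sample_id] = label
    return selected
```

Samples that fall below the cutoff are not necessarily bad data; they are often the ambiguous cases worth a second look or a guideline update.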

Embracing expert disagreement

Lilian Weng, a former OpenAI research scientist, challenges traditional consensus-seeking approaches in expert validation. She references “Truth is a Lie: Crowd Truth and Seven Myths of Human Annotation” by Aroyo and Welty, which advocates for embracing expert disagreement.

The research argues that single correct answers often don’t exist in complex validation scenarios. Diverse expert perspectives add nuance and improve long-term data quality rather than forcing artificial consensus.

Expert disagreement provides additional benefits by encouraging validation teams to refine review guidelines and reassess task definitions. When disagreement becomes acceptable, teams can implement more nuanced validation approaches that capture data subjectivity rather than forcing binary decisions.
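One way to capture subjectivity instead of forcing a binary decision is to store the distribution of expert labels as a soft label and to measure how split the experts are. The sketch below is an illustration of that idea, not a method prescribed by the cited paper; the function names are hypothetical.

```python
import math
from collections import Counter


def soft_label(labels: list[str]) -> dict[str, float]:
    """Turn raw expert votes into a probability distribution over labels."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def disagreement_entropy(distribution: dict[str, float]) -> float:
    """Shannon entropy in bits: 0.0 means full agreement, higher means more split."""
    return sum(-p * math.log2(p) for p in distribution.values() if p > 0)


votes = ["toxic", "toxic", "fine", "fine"]
distribution = soft_label(votes)          # {"toxic": 0.5, "fine": 0.5}
entropy = disagreement_entropy(distribution)  # 1.0 bit for an even two-way split
```

High-entropy samples are natural candidates for the guideline reviews mentioned above, since persistent splits often point to an ambiguous task definition rather than careless reviewers.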

Implementing human-in-the-loop data validation: an Apache Airflow and Great Expectations setup

Human-in-the-loop data validation requires an architecture where automated systems handle routine checks while enabling humans to easily interpret results and troubleshoot complex data errors.

PepsiCo exemplifies this approach through its Apache Airflow and Great Expectations implementation. Data engineers at the company operate over 800 active self-hosted Airflow pipelines, ingesting data from 50+ sources across multiple teams.

While high data volumes and cross-team collaboration power PepsiCo’s business intelligence tools and internal knowledge bases, they create distinct data quality challenges:

- Schema inconsistencies emerge when multiple teams modify shared pipelines without coordination.

- Data removal is complex because fixing quality issues requires production changes and custom alterations to pipeline logic.

- Business context gaps occur when technical validation lacks domain-specific quality requirements.

- Scale detection challenges involve identifying duplicates and missing data across massive ingested volumes.

Global organizations need flexible, granular data validation systems integrated with existing pipeline orchestrators. Great Expectations has emerged as the leading solution due to its comprehensive toolset: web interface, validation engine, webhook notifications, and native Airflow integration.

Great Expectations validates data quality through four hierarchical levels:

- Context: The platform considers all configurations and components of the project.

- Sources: Great Expectations tracks interactions and configurations between data sources.

- Expectations: Data tests and descriptions.

- Checkpoints: Validation metrics against which incoming data is tested.

Once a dataset is past the “checkpoint” stage, it is ready for production.

PepsiCo’s validation workflow

PepsiCo’s implementation demonstrates practical HITL validation through six coordinated steps:

- Apache Airflow detects the ingestion of new data. This triggers the validation pipeline.

- In Great Expectations, data quality rules like “values must be positive integers” are defined.

- The newly set expectations are applied to the dataset.

- Validation results get exported in JSON or HTML. These files are then passed on to humans or downstream systems for review and fixes.

- Automated resolution systems address minor issues, like file naming errors.

- Data quality problems that require business input or rewriting the pipeline logic are rerouted to humans for review and troubleshooting.
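The six steps above can be sketched as a framework-agnostic flow in plain Python. This is not PepsiCo's code: in a real deployment each function would typically be an Airflow task and `validate` would be a Great Expectations checkpoint. The `quantity` column, the file-name fix, and the status strings are hypothetical.

```python
import json
from typing import Any


def _positive_int(value: Any) -> bool:
    return isinstance(value, int) and not isinstance(value, bool) and value > 0


def validate(rows: list[dict[str, Any]]) -> dict[str, Any]:
    """Steps 2-3: apply one expectation, 'quantity must be a positive integer'."""
    failures = [
        {"row": index, "column": "quantity", "value": row.get("quantity")}
        for index, row in enumerate(rows)
        if not _positive_int(row.get("quantity"))
    ]
    return {"success": not failures, "failures": failures}


def normalise_filename(name: str) -> str:
    """Step 5: a minor issue that is safe to resolve automatically."""
    return name.strip().lower().replace(" ", "_")


def run_pipeline(filename: str, rows: list[dict[str, Any]]) -> dict[str, Any]:
    """Step 1 is the trigger: this runs whenever a new file is ingested."""
    fixed_name = normalise_filename(filename)
    result = validate(rows)
    report = json.dumps(result, indent=2)  # step 4: export results for review
    # Step 6: anything that failed validation goes to a human reviewer.
    status = "ready_for_production" if result["success"] else "needs_human_review"
    return {
        "filename": fixed_name,
        "auto_fixed": fixed_name != filename,
        "status": status,
        "report": report,
    }
```

The key design point is the split between issues the pipeline may fix on its own and issues that only change status and wait for a person; the boundary between the two is a business decision, not a technical one.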

This architecture ensures comprehensive quality control while optimizing human expertise for complex decision-making. PepsiCo achieves production-ready data accuracy through systematic validation that combines automated efficiency with human judgment.

Off-the-shelf data quality tools vs custom software

Although tools like Great Expectations handle most data validation requirements, these platforms have inherent limitations that become apparent at enterprise scale.

Data engineers frequently cite setup complexity as a primary concern with Great Expectations. Additional complaints include inadequate documentation and complex API ecosystems that slow implementation.

Every off-the-shelf validation platform encounters team-specific use cases beyond its scope. Enterprise engineering teams increasingly build custom validation frameworks to avoid vendor lock-in and maintain complete control over data quality processes.

Airbnb’s Wall framework

Airbnb developed Wall, a custom data quality framework built on Apache Airflow. The architecture operates across three coordinated stages:

- Development Time: Users define data quality checks, input/output tables, and ETL metadata using Wall Configs and Wall API.

- DAG Parse Time: The API Manager and Config Manager generate Airflow sensors, dependencies, and tasks based on the configs.

- Runtime: Airflow executes the generated tasks using Jinja substitution and runtime context to perform the defined checks.
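Wall's internals are not reproduced here, but the general config-driven pattern can be sketched in plain Python: checks are declared as data, turned into callables at "parse time", and executed at "run time". The config schema, check names, and `listing_id` column are all hypothetical; in an Airflow-based system the generated callables would become tasks.

```python
from typing import Any, Callable

Rows = list[dict[str, Any]]
Check = Callable[[Rows], bool]


def not_null(column: str) -> Check:
    return lambda rows: all(row.get(column) is not None for row in rows)


def unique(column: str) -> Check:
    def check(rows: Rows) -> bool:
        values = [row.get(column) for row in rows]
        return len(values) == len(set(values))
    return check


def min_row_count(minimum: int) -> Check:
    return lambda rows: len(rows) >= minimum


# Registry of check types that config entries may refer to by name.
CHECK_TYPES = {"not_null": not_null, "unique": unique, "min_row_count": min_row_count}

# Development time: checks are declared as data, with no custom code.
CONFIG = [
    {"name": "listing_id_not_null", "type": "not_null", "args": {"column": "listing_id"}},
    {"name": "listing_id_unique", "type": "unique", "args": {"column": "listing_id"}},
    {"name": "has_rows", "type": "min_row_count", "args": {"minimum": 1}},
]


def build_checks(config: list[dict[str, Any]]) -> dict[str, Check]:
    """Parse time: turn each config entry into an executable check."""
    return {entry["name"]: CHECK_TYPES[entry["type"]](**entry["args"]) for entry in config}


def run_checks(checks: dict[str, Check], rows: Rows) -> dict[str, bool]:
    """Run time: execute every generated check against the data."""
    return {name: check(rows) for name, check in checks.items()}
```

Because new checks are added by editing configuration rather than code, non-engineering roles can contribute tests without touching pipeline logic.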

Thanks to its gentle learning curve and organization-specific focus, Wall has become a data quality standard at Airbnb and a bridge between engineering and domain teams:

- The framework helped data engineering teams standardize data checks and anomaly detection mechanisms.

- The config-driven nature of Wall enables running no-code data tests.

- The framework is intrinsically scalable and allows new teams to add as many checks as they need.

- Wall’s straightforward onboarding makes it usable for business roles, which fosters communication between technical and non-technical teams.

Stripe’s financial data validation

Stripe operates a custom data quality platform ensuring accurate financial reporting across all business lines. The system evaluates fund flow data using three critical metrics:

- Clearing: Is the flow complete?

- Timeliness: Did the data come in on time?

- Completeness: Is the representation of the data ecosystem complete?

Based on these metrics, the system calculates a data quality score that reflects the system’s accuracy and reliability.
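Stripe's actual scoring formula is not described here, so the following is only an illustration of how three metrics could be combined into one score. It assumes each metric is expressed as a fraction between 0 and 1 and that the score is a weighted average; both the function name and the weighting scheme are hypothetical.

```python
def quality_score(
    clearing: float,
    timeliness: float,
    completeness: float,
    weights: tuple[float, float, float] = (1.0, 1.0, 1.0),
) -> float:
    """Weighted average of three metrics, each given as a fraction in [0, 1]."""
    metrics = (clearing, timeliness, completeness)
    if any(not 0.0 <= metric <= 1.0 for metric in metrics):
        raise ValueError("each metric must be a fraction between 0 and 1")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(m * w for m, w in zip(metrics, weights)) / total_weight
```

With equal weights the score is a plain mean; a team that cares most about one dimension, for example on-time arrival, can raise that weight without changing the rest of the calculation.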

Custom validation systems align closely with their organizations’ unique business logic. Tailored frameworks deliver accuracy and flexibility that are hard to match with off-the-shelf platforms.

Hence, if you are contemplating building a data validation system or choosing a tool already on the market, the table below recaps key considerations.

Bottom line

As AI and machine learning become central to both internal operations and customer-facing products, data quality has transformed from a technical concern into a business-critical requirement. The accuracy of training data directly impacts model performance, user experience, and strategic decision-making across organizations.

Enterprise teams face a spectrum of validation approaches, each with distinct trade-offs. Fully automated validation offers speed and cost efficiency but may miss nuanced errors that require contextual understanding. Human-only review provides superior accuracy for complex cases but lacks scalability for modern data volumes.

While commercially successful AI systems like DeepSeek demonstrate the potential of automated validation, these applications typically handle general-purpose tasks with well-defined success criteria. Enterprise organizations face a different challenge: using machine learning to solve complex, domain-specific business problems where inaccurate data can cascade into strategic missteps affecting multiple teams and initiatives.

Human-in-the-loop validation emerges as the optimal approach for enterprise AI applications. This methodology combines the efficiency and consistency of automated systems with the contextual judgment and domain expertise that human reviewers provide. Organizations implementing HITL validation achieve the dual benefits of scalable data processing and reliable quality assurance for high-stakes decision-making.

The frameworks and tools explored in this guide provide actionable pathways for implementing production-ready HITL validation systems. Whether through open-source tools like Great Expectations or custom solutions like Airbnb’s Wall, successful data quality architectures share common principles: automated flagging for efficiency, human review for edge cases, and expert validation for final quality assurance.