While Western media focused on xAI’s Grok 4 release and its associated controversies, a potentially more significant development emerged from China. Moonshot AI released Kimi K2, an open-source language model that matches or outperforms established players like Claude and DeepSeek on several key benchmarks.

Early adopters in the AI engineering community quickly recognized K2’s potential, integrating it into their development workflows and comparing its performance against industry standards. For organizations evaluating AI models for production use, the timing couldn’t be more relevant.

The market response has been telling: Kimi K2 has already surpassed Grok 4 in API usage on OpenRouter, suggesting developers prefer the Chinese model for its performance and cost efficiency.

The timing adds another layer of significance: OpenAI announced that its first open-weight model since GPT-2 would be delayed, just as Kimi K2 demonstrated that high-performance, open-source AI is not only possible but cost-effective.

It didn’t take long for the community to connect the dots and speculate that OpenAI’s model couldn’t match K2’s benchmark sweep.

A non-reasoning model focused on coding and agentic tasks

Kimi K2 uses a mixture-of-experts (MoE) architecture: the model is split into many expert subnetworks, and a router sends each token to only a few of them. Think of it like having a team of specialists rather than one generalist. K2 has 1 trillion total parameters, roughly 1.5 times DeepSeek’s 671 billion, but only 32 billion of them are active for any given token. That means faster responses and lower costs.
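To make the routing idea concrete, here is a toy top-k mixture-of-experts layer in plain Python. It's a minimal sketch under stated assumptions, not Moonshot AI's implementation. The linear router, the four tiny "experts" that just scale their input, and `top_k=2` are all hypothetical choices made for illustration. What it does show is the property described above: every expert exists in memory, but only the top-scoring few actually run for a given token. Compute therefore tracks active parameters, not total parameters. The parameter constants are the rounded figures quoted in the paragraph.

```python
import math

# Rounded figures quoted in the article
TOTAL_PARAMS = 1_000_000_000_000   # ~1 trillion total (Kimi K2)
ACTIVE_PARAMS = 32_000_000_000     # ~32 billion active per token
DEEPSEEK_PARAMS = 671_000_000_000  # DeepSeek's 671 billion


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, router_weights, experts, top_k=2):
    """Toy top-k MoE layer: score every expert, but run only the best top_k."""
    # Router logit per expert: dot product of the token with that expert's router vector
    logits = [sum(t * w for t, w in zip(token, wv)) for wv in router_weights]
    # Indices of the top_k highest-scoring experts
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Gate weights are renormalised over the chosen experts only
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        expert_out = experts[i](token)  # unchosen experts never execute
        out = [o + gate * v for o, v in zip(out, expert_out)]
    return out, chosen


# Hypothetical 4-expert layer: expert i simply scales its input by (i + 1)
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(4)]
router_weights = [[1, 0], [0, 1], [1, 1], [-1, 0]]

out, chosen = moe_forward([1.0, 2.0], router_weights, experts, top_k=2)
print(chosen)                                    # [2, 1]
print(f"{ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")     # 3.2%
print(f"{TOTAL_PARAMS / DEEPSEEK_PARAMS:.2f}x")  # 1.49x
```

The last two lines carry out the arithmetic behind the paragraph. About 3.2% of K2's weights participate in each token. The "1.5 times" comparison with DeepSeek is more precisely about 1.49x.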

Moonshot AI released K2 in two flavors:

Kimi K2 Base is the raw foundation model that engineering teams can customize and fine-tune for their specific needs. Perfect for companies with AI teams who want full control over their implementation.

Kimi K2 Instruct is the ready-to-use version that works right out of the box as either a chatbot or an AI assistant that can handle complex, multi-step tasks. This one’s for teams who want to get started quickly without heavy customization.

Here’s what makes K2 different: it doesn’t show its work like reasoning models do. Instead of generating a visible chain of thought, it just gives you the answer directly. This actually has some real advantages: faster responses, less computational overhead, and more predictable behavior in production. All things that matter when you’re running AI systems at scale.
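The speed argument comes down to simple arithmetic. Generation time grows with every token a model emits, and that includes reasoning tokens the user may never see. The sketch below uses hypothetical numbers: a constant 50 tokens per second, a 300-token answer, and 2,000 reasoning tokens. These are not measurements of K2 or any other model, and the sketch ignores prompt-processing time.

```python
def generation_time_s(answer_tokens: int, reasoning_tokens: int = 0,
                      tokens_per_s: float = 50.0) -> float:
    """Rough decode time: every emitted token, visible or hidden, costs time."""
    return (answer_tokens + reasoning_tokens) / tokens_per_s


# Hypothetical workload, not a benchmark of any real model
direct = generation_time_s(answer_tokens=300)
with_reasoning = generation_time_s(answer_tokens=300, reasoning_tokens=2000)
print(f"direct answer: {direct:.1f} s, with reasoning: {with_reasoning:.1f} s")
# prints: direct answer: 6.0 s, with reasoning: 46.0 s
```

The same proportionality applies to per-token API billing. Skipping a long chain of thought therefore tends to cut cost as well as latency.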

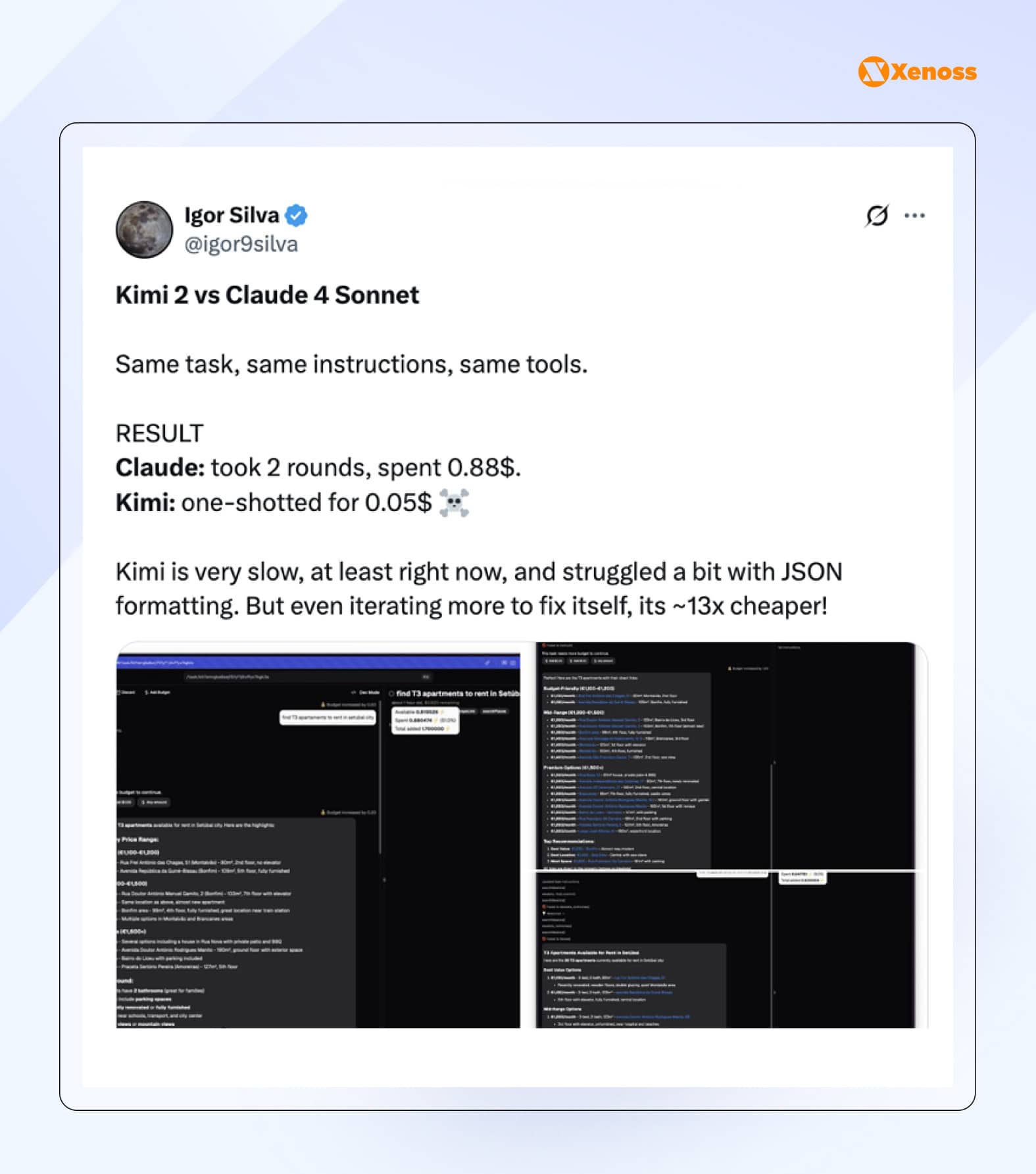

The AI engineering community has been putting K2 through its paces against models like Claude, and the results are pretty impressive. In many coding and automation tasks, K2 matches or even beats Anthropic’s models. These real-world tests give us a better picture of how the model performs beyond just benchmark scores.

Kimi K2 leads across key benchmarks

Kimi K2 has basically blown past DeepSeek by a huge margin, setting a new bar for what’s possible with open-source models. It either tied with or came very close to Claude Opus, while clearly outperforming both Claude Sonnet and GPT 4.1.

Grok 4 wasn’t included in the official benchmark tests, so we can’t directly compare.

Here’s how K2 stacks up in the numbers, pulled straight from Moonshot AI’s release notes.

Coding benchmarks

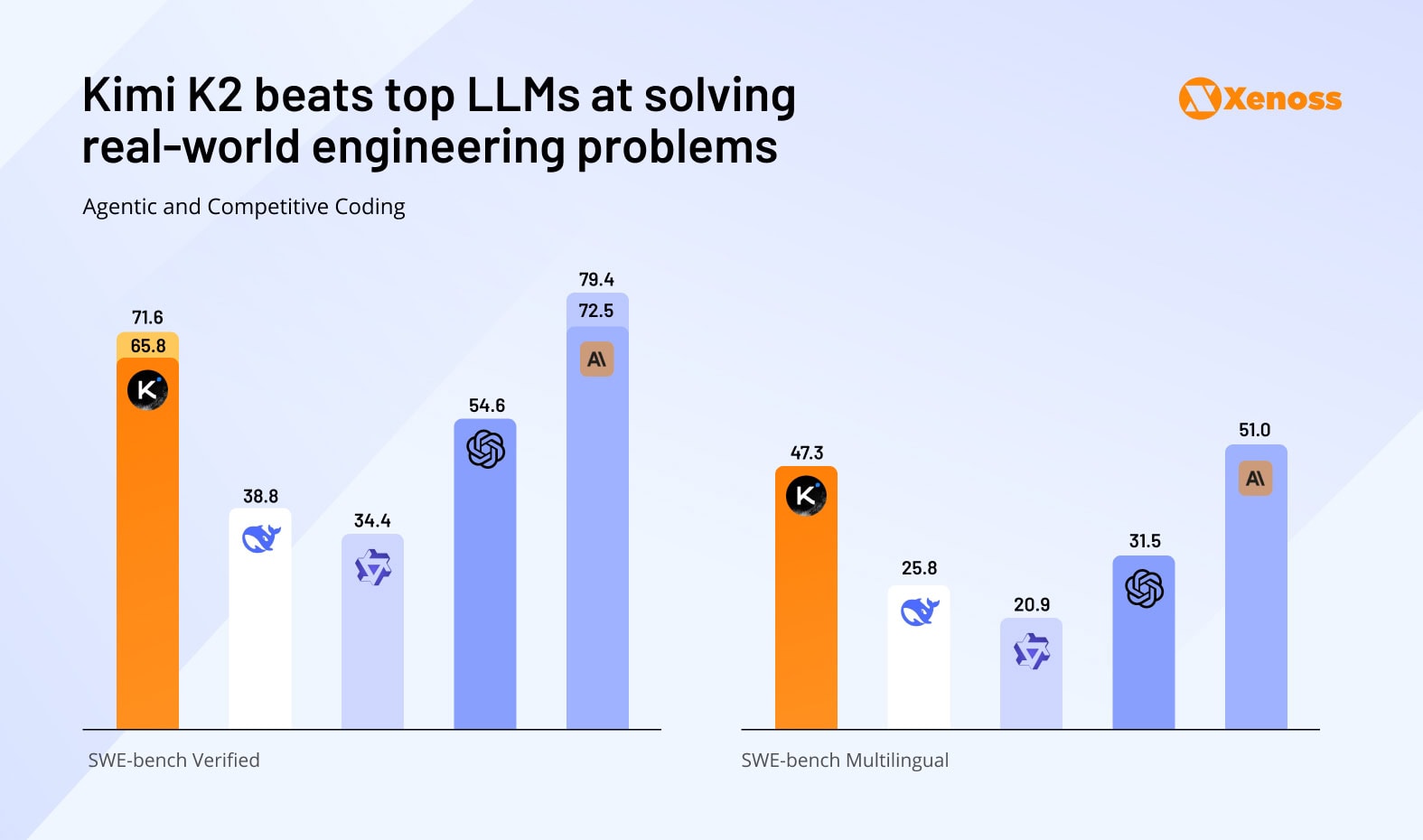

SWE-bench Verified tests how well models can tackle real engineering problems pulled directly from GitHub repositories. Think of it as the ultimate test of whether an AI can help with the messy, real-world coding issues developers face every day.

- Kimi K2: 65.8

- DeepSeek: 38.8

- Claude Opus: 72.5

So K2 sits comfortably in second place here, which is pretty solid for a new player.

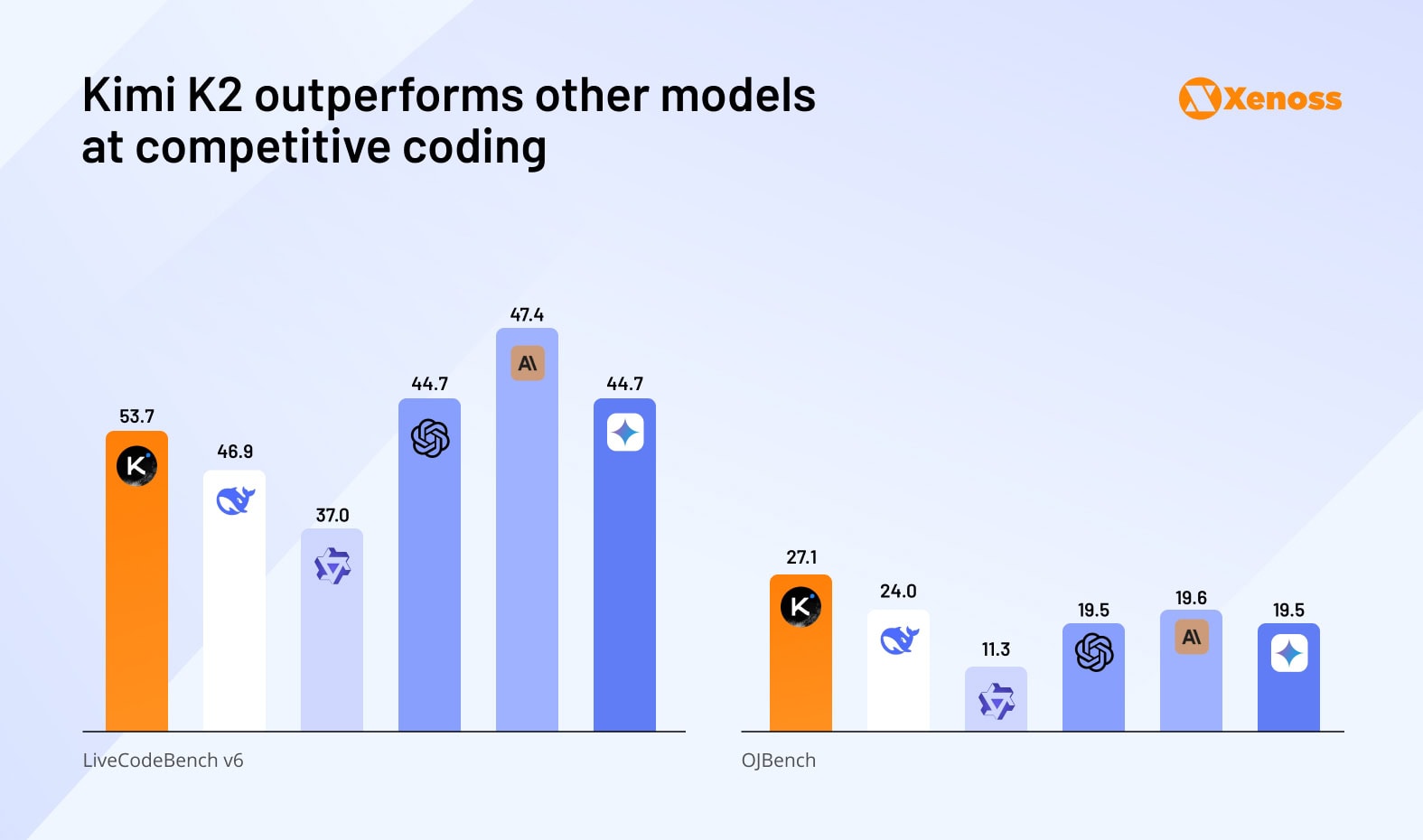

LiveCodeBench measures coding ability on problems collected continuously from recent programming contests. This reduces the chance that a model has already seen them during training.

OJBench tests how well a model solves competitive-programming problems of the kind found on online judges. These are similar to the LeetCode-style puzzles that are an industry standard in engineering job interviews.

On both of these benchmarks, Kimi K2 outperformed Claude Opus, GPT 4.1, and Gemini 2.5.

- Kimi K2: 53.7 in LiveCodeBench, 27.1 in OJBench.

- Claude Opus: 47.4 in LiveCodeBench, 19.6 in OJBench.

- GPT 4.1: 44.7 in LiveCodeBench, 19.5 in OJBench.

- Gemini 2.5: 44.7 in LiveCodeBench, 19.5 in OJBench.

What’s interesting here is that K2 posts its clearest wins on the contest-style coding benchmarks while staying competitive on the real-world repository fixes in SWE-bench. That suggests it could be useful for everyday development work, not just algorithmic puzzles.

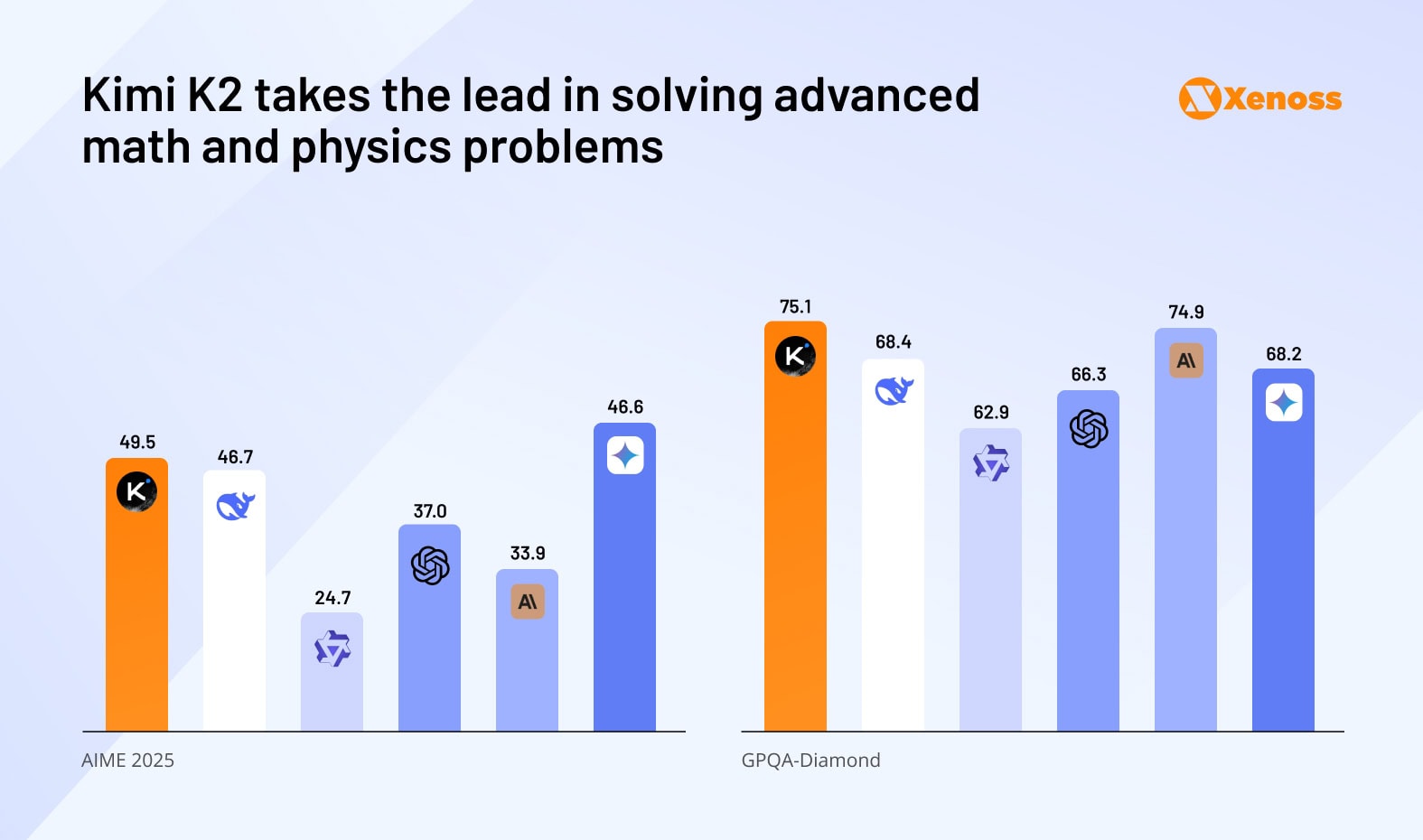

Reasoning benchmarks

K2 also performs strongly on two particularly challenging benchmarks that are widely used to assess how well LLMs can reason through complex problems.

AIME 2025 throws American Invitational Mathematics Examination problems at these models, the kind of abstract, symbolic reasoning challenges that would make most of us reach for a calculator (and probably still fail). These aren’t your typical math word problems.

How Kimi K2 scores against other LLMs:

- Kimi K2: 49.5

- GPT 4.1: 37.0

- Claude Opus: 33.9

- Gemini 2.5: 46.6

GPQA-Diamond is even more brutal. It tests graduate-level knowledge in physics, chemistry, and biology, including mind-bending topics like relativity and quantum mechanics. The questions are written so that even skilled non-experts with web access struggle to answer them.

How LLMs perform at GPQA-Diamond:

- Kimi K2: 75.1

- GPT 4.1: 66.3

- Claude Opus: 74.9

- Gemini 2.5: 68.2

K2 barely edges out Claude Opus here (75.1 vs. 74.9), but both are well ahead of GPT 4.1 and Gemini 2.5. What’s really striking is that K2 reaches this level of reasoning without generating an explicit chain of thought.

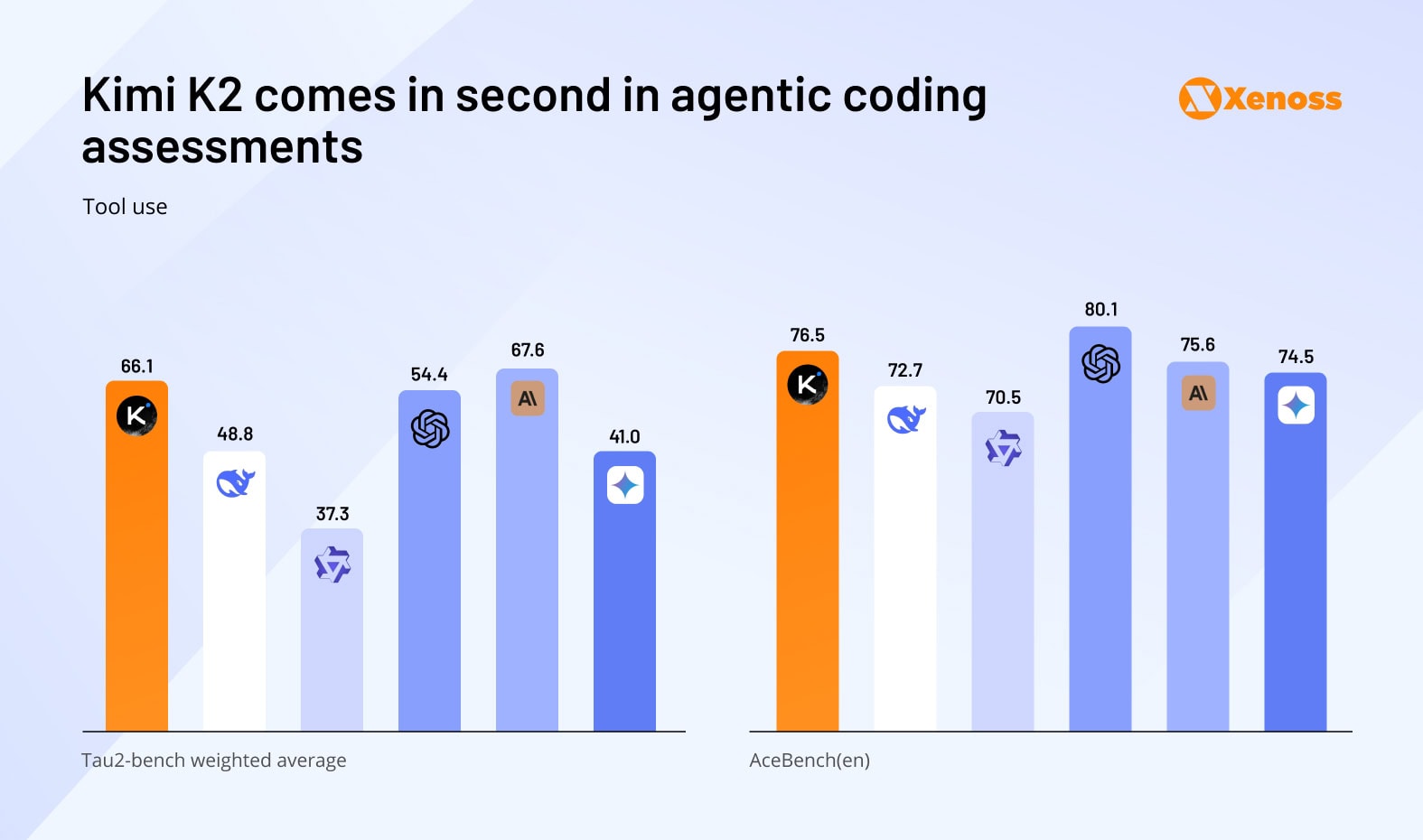

Tool calling benchmarks

When it comes to acting like an actual AI agent that can juggle multiple tools and tasks, K2 holds its own pretty well against both GPT and Claude.

Tau2-bench tests whether a model can work as a conversational agent. It has to call the right tools and follow domain policies while helping a simulated user in scenarios such as airline, retail, and telecom customer support. Think of it like watching a support agent juggle a booking system, a policy manual, and an impatient customer at the same time.

Tau2 scores for Kimi K2 compared to other foundation models:

- Kimi K2: 66.1

- Claude Opus: 67.6

- GPT 4.1: 54.4

- Gemini 2.5: 41.0

K2 comes in a very close second to Claude Opus, which is impressive. Both leave GPT and Gemini in the dust on this one.

AceBench takes a broader look at tool use. It checks whether a model calls functions correctly across single-turn, multi-turn, and agent-style scenarios, including tricky cases such as incomplete or ambiguous instructions.

K2 scored a solid second place with 76.5 points:

- GPT 4.1: 80 points

- Claude Opus: 75.6 points

- Gemini 2.5: 74.5 points

What’s cool here is that K2 beats Claude Opus on this broader tool-use test, even though Claude edged it out on Tau2-bench. Together, the two results suggest K2 is a capable tool user across a range of agentic scenarios, which matters for teams building multi-step automation.
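To check the "first or close second" claims against the numbers, here is a small sketch. It loads the scores reported in this article and computes K2's rank and its margin over the best competing model on each benchmark. One caveat matters: the ranks cover only the models this article lists for each benchmark (DeepSeek appears only under SWE-bench). They are not ranks in Moonshot AI's full results table. The dictionary layout and the helper names `rank` and `margin` are illustrative choices.

```python
# Scores as reported in this article (sourced from Moonshot AI's release notes)
SCORES = {
    "SWE-bench": {"Kimi K2": 65.8, "DeepSeek": 38.8, "Claude Opus": 72.5},
    "LiveCodeBench": {"Kimi K2": 53.7, "Claude Opus": 47.4, "GPT 4.1": 44.7, "Gemini 2.5": 44.7},
    "OJBench": {"Kimi K2": 27.1, "Claude Opus": 19.6, "GPT 4.1": 19.5, "Gemini 2.5": 19.5},
    "AIME 2025": {"Kimi K2": 49.5, "GPT 4.1": 37.0, "Claude Opus": 33.9, "Gemini 2.5": 46.6},
    "GPQA-Diamond": {"Kimi K2": 75.1, "GPT 4.1": 66.3, "Claude Opus": 74.9, "Gemini 2.5": 68.2},
    "Tau2-bench": {"Kimi K2": 66.1, "Claude Opus": 67.6, "GPT 4.1": 54.4, "Gemini 2.5": 41.0},
    "AceBench": {"Kimi K2": 76.5, "GPT 4.1": 80.0, "Claude Opus": 75.6, "Gemini 2.5": 74.5},
}


def rank(model, scores):
    """1-based rank among the listed models; tied scores share the better rank."""
    ordered = sorted(scores.values(), reverse=True)
    return ordered.index(scores[model]) + 1


def margin(model, scores):
    """Points ahead of (positive) or behind (negative) the best other listed model."""
    best_other = max(v for m, v in scores.items() if m != model)
    return round(scores[model] - best_other, 1)


for bench, scores in SCORES.items():
    print(f"{bench:<14} rank {rank('Kimi K2', scores)}  margin {margin('Kimi K2', scores):+.1f}")
```

Running it shows K2 first on four of the seven benchmarks and second on the other three. The tightest win is GPQA-Diamond (+0.2), and the widest deficit is SWE-bench (-6.7).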

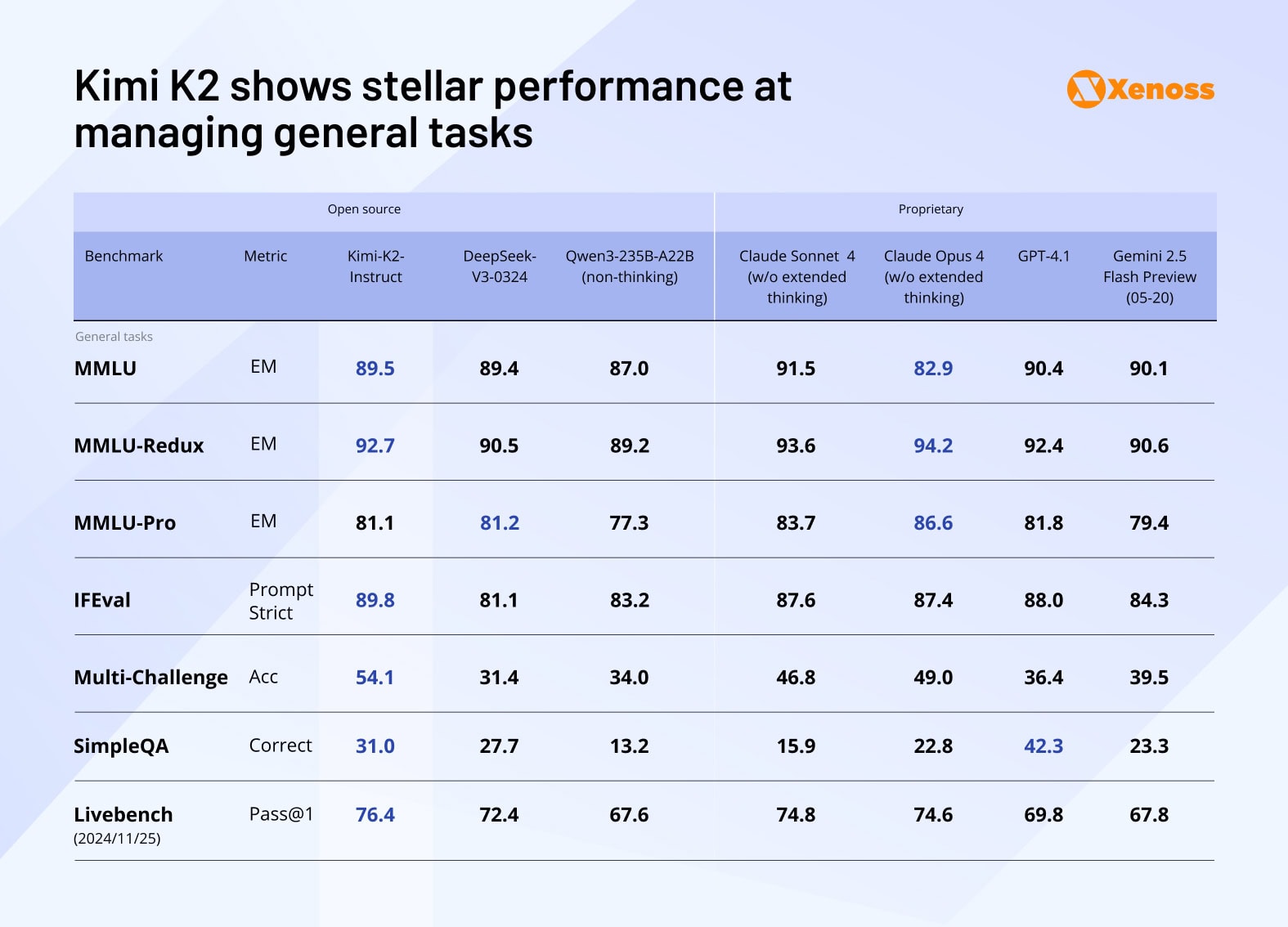

General task benchmarks

Beyond the specialized tests, Moonshot AI put K2 through a bunch of general-purpose benchmarks that test the bread-and-butter stuff like language understanding, how often it gets answers right, and whether it needs multiple attempts to nail a response.

K2 consistently landed in first place or a very close second across the board.

This is actually pretty telling because it shows K2 isn’t just a one-trick pony that’s only good at coding. It’s genuinely well-rounded, which matters a lot if you’re thinking about using it for diverse tasks in a real organization. You don’t want an AI that’s brilliant at debugging code but falls apart when someone asks it to summarize a business report.

The cost of training and implementing Kimi K2

Moonshot AI hasn’t released a technical report yet that breaks down exactly how much it cost to train K2. But after seeing what DeepSeek accomplished, we’re getting a clearer picture that skilled engineering teams can build incredibly effective training pipelines without breaking the bank. K2 looks like it might be following the same playbook.

When it comes to actually using the model, K2 costs a fraction of what you’d pay for competitors, and its weights are openly available. This kind of aggressive pricing means K2 will likely become the go-to choice for companies running AI pilot projects or experimenting with different use cases without committing huge budgets.

For organizations that have been sitting on the sidelines due to cost concerns, K2 could be the entry point they’ve been waiting for. Xenoss engineers can help evaluate whether K2 fits your specific use case and implementation requirements.

The Xenoss take

We’ve been watching this trend for over half a year now: each new LLM release is significantly better than the one before it. K2’s success, coming just days after Grok 4’s impressive showing, really drives home the point that benchmark excellence is becoming commoditized.

Having great benchmark scores alone won’t set you apart anymore.

But here’s where K2 gets interesting. Most top-tier AI models are locked behind closed doors (except Meta’s Llama, which can’t really compete with K2 on performance), so K2 has a real advantage in freedom and flexibility. Sure, Grok’s release meant Moonshot AI didn’t get the same hype wave that DeepSeek enjoyed, but we expect K2’s adoption to keep climbing.

This is the second time this year that a Chinese company has built a genuinely top-tier open-source LLM. That’s starting to challenge the whole idea that American companies dominate machine learning.

American and European AI labs need to take a hard look at where they went wrong with open-source models and adjust their approach. Nathan Lambert, a well-respected researcher in the field, made a similar point in his analysis of K2’s impact. He’s calling for Western leaders to “mobilize funding for great open science projects” to prevent what he calls a third “DeepSeek moment.”

While the Western AI community has legitimate reasons to be concerned about the stream of excellent models coming from China, K2 is actually good news for everyone working in AI.

The fact that K2 appears to replicate DeepSeek’s success, probably at a similar cost, reinforces that you don’t need massive budgets to train world-class models anymore. The LLM space isn’t controlled by whoever has the deepest pockets.

Over the next few years, we’re going to see new players challenging OpenAI, Anthropic, Google, and Meta. If they bring solid engineering skills and creative approaches to the table, they’ve got a real shot at winning.