Your data engineering team is drowning in tools, and it’s costing you more than you think.

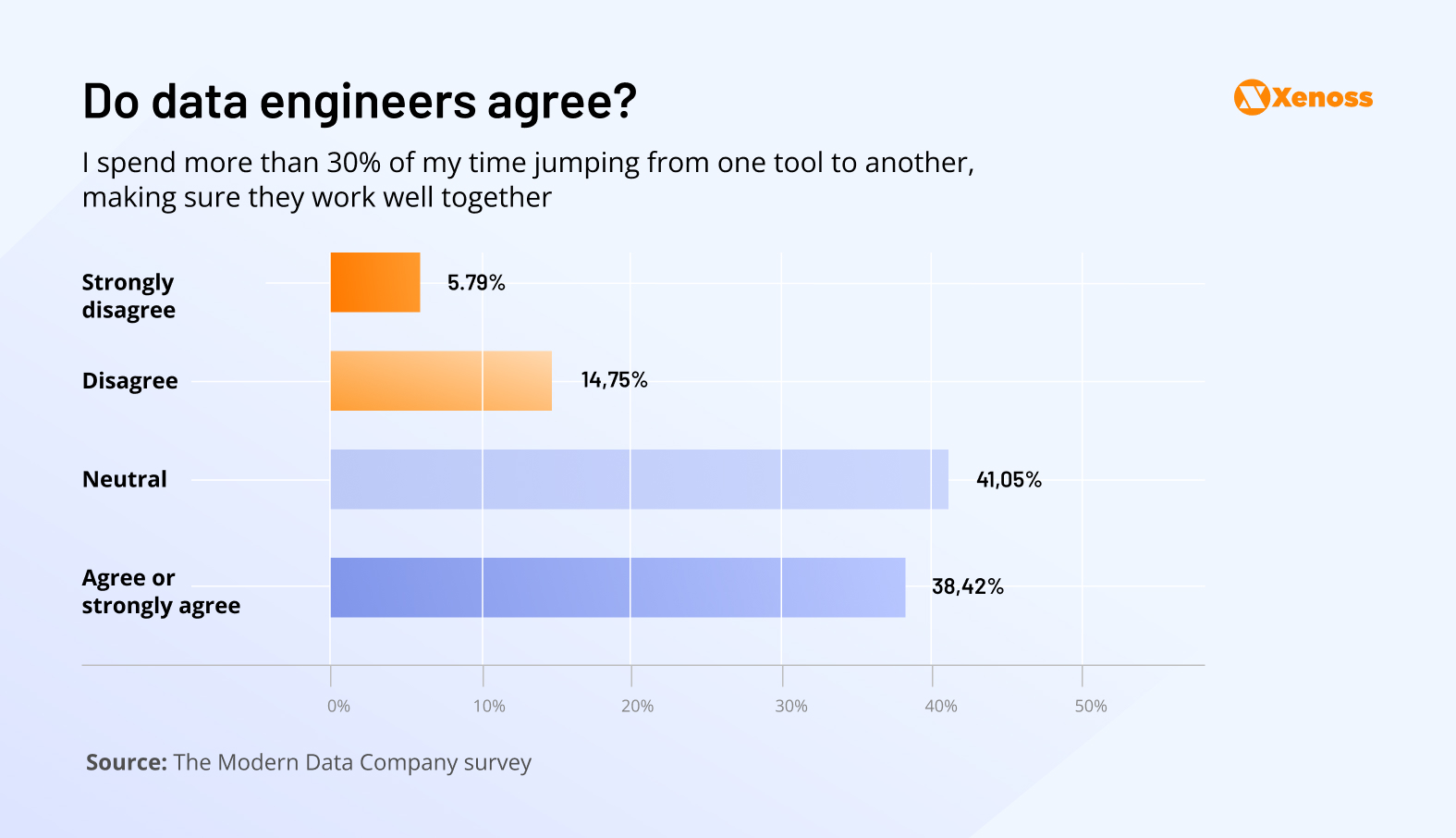

The average enterprise now uses 5-7 different data tools from multiple vendors, with 10% of teams juggling over ten separate platforms for a single project. Data engineers have a library of tools for orchestration, transformation, ETL, ELT, reverse ETL, monitoring, and governance, and the list goes on. Many of them spend one-third of their day switching between interfaces instead of delivering insights that drive business value.

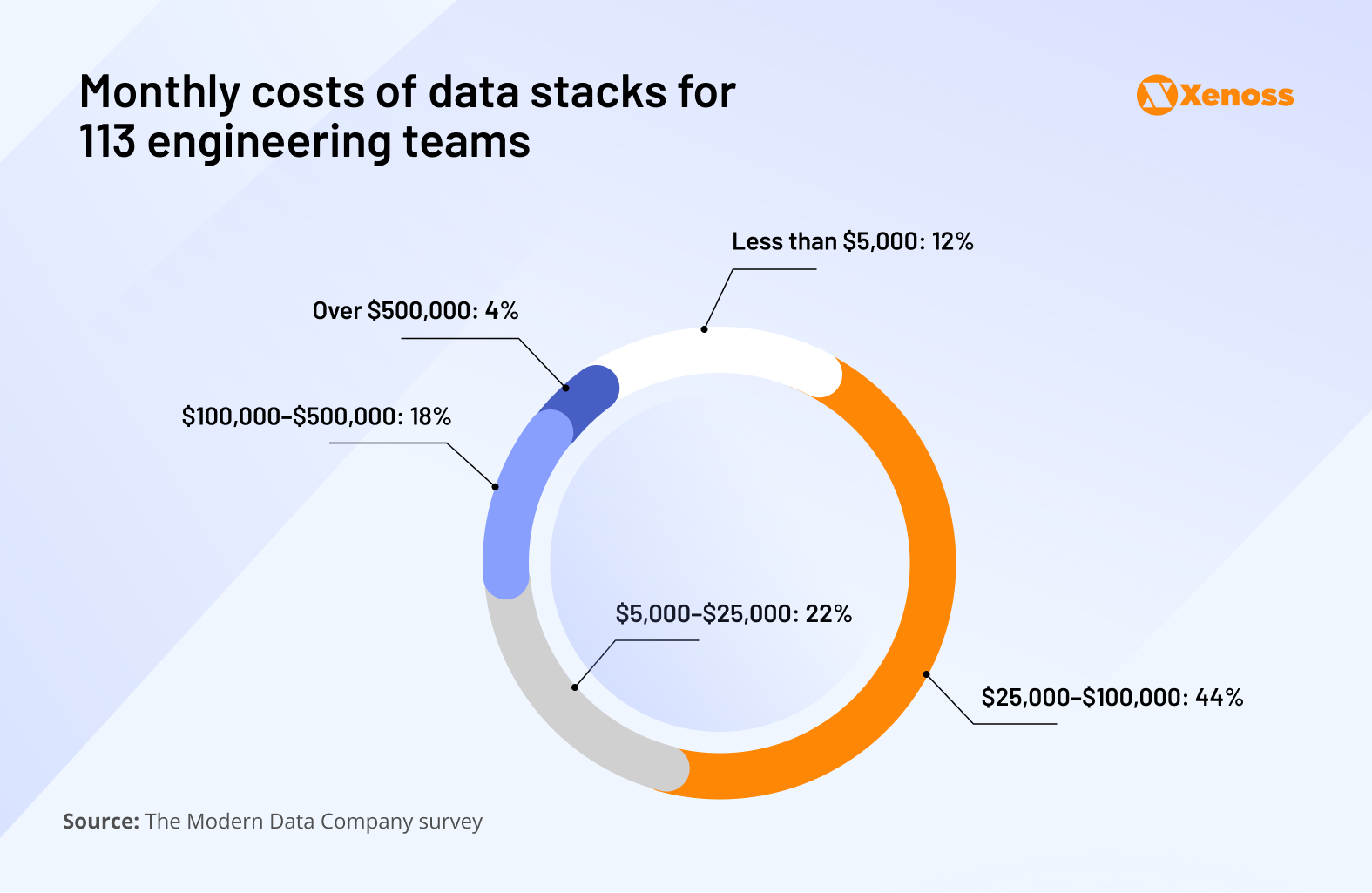

Beyond the productivity loss, nearly half of teams are spending $25,000-$100,000 monthly on data infrastructure, yet only 12% report meaningful ROI. The hidden costs pile up: licensing fees, integration complexity, maintenance overhead, and constant context switching that exhausts your engineering talent.

What started as the “modern data stack” movement promised to simplify cloud-first data architecture. Instead, it created a fragmented ecosystem where every vendor claims to be essential, and engineering teams feel pressured to adopt tools for problems they may not even have.

As Thomas McGeehan put it, with stacks this bloated, teams need a tool “for remembering why you started this project in the first place.”

Here’s how data tool sprawl is draining your budget and how to fix it without sacrificing performance.

How data tool sprawl became the norm

Ten years ago, the “modern data stack” emerged to solve a real problem: distinguishing cloud-native tools from legacy systems retrofitted for the cloud. The concept worked. Companies like Fivetran and dbt built successful businesses by offering genuinely cloud-first solutions that integrated seamlessly.

But success bred excess. As cloud adoption became universal, every vendor started claiming “modern data stack” status. The term lost its meaning, becoming a marketing buzzword rather than a useful distinction.

This created three critical problems that persist today:

Vendor proliferation without proven value. The low barrier to entry meant new tools could claim market share without demonstrating clear ROI or network effects. Engineering teams struggle to assess which platforms actually solve their specific needs versus which just sound innovative.

Short-term fixes over strategic solutions. Instead of redesigning pipelines from the ground up, teams began patching immediate problems with point solutions. Pipelines kept running, but the underlying architecture became increasingly unsustainable.

Scale envy without scale needs. Teams started building “Netflix-scale” infrastructure because that’s what industry leaders recommended, regardless of whether they processed Netflix-scale data. Entire software categories became “must-haves” for teams that didn’t need them.

By 2025, tool fragmentation has become so normalized that it’s part of the business model. Vendors form partnerships, cross-sell complementary services, and create integration marketplaces, all designed to make multiple tools feel like a cohesive strategy.

Engineering teams now create full-time positions just to manage tool integrations, monitor the monitoring tools, and coordinate alerts across platforms. Each new tool doesn’t just add licensing costs; it multiplies operational complexity.

The hidden costs of data tool sprawl

When teams calculate the cost of their data infrastructure, they typically focus on licensing fees. But tool sprawl creates expenses that extend far beyond monthly subscriptions, often doubling or tripling the true cost of ownership.

Time and productivity

The Modern Data Company survey shows that 40% of data engineers spend one-third of their workday switching between tools and trying to orchestrate operations.

This time drain happens in three critical areas:

Platform onboarding becomes a recurring expense. Each new tool requires your team to learn interfaces, understand features, and map integration points with existing systems. For senior engineers billing at $150-200 per hour, this learning curve represents thousands in opportunity cost per tool.

Context switching kills productivity and accuracy. Simply changing between interfaces costs hours annually, but the cognitive load is worse. When teams multitask between platforms, they make up to 50% more mistakes compared to working in focused, single-environment sessions.

Integration troubleshooting creates endless loops. Tools from different vendors rarely integrate seamlessly. Engineers spend hours manually tracing data lineage across disconnected platforms, debugging compatibility issues, and building custom workarounds—work that adds zero business value.

The productivity impact compounds over time. As the tool count increases, the share of time spent on actual data engineering keeps shrinking.
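To put a rough number on the time drain, the sketch below estimates what tool switching costs a team per year. The one-third share and the $150 hourly rate come from the figures above; the team size, hours per day, and working days per year are illustrative assumptions, not survey data.

```python
def annual_switching_cost(engineers, hourly_rate, switching_share=1 / 3,
                          hours_per_day=8, working_days=230):
    """Estimate the yearly cost of hours lost to switching between tools.

    switching_share is the fraction of each workday spent moving between
    interfaces (one-third in the survey cited above). hours_per_day and
    working_days are assumed defaults; adjust them for your own team.
    """
    lost_hours = engineers * hours_per_day * working_days * switching_share
    return lost_hours * hourly_rate


# Hypothetical team: five senior engineers at $150 per hour.
cost = annual_switching_cost(engineers=5, hourly_rate=150)
print(f"Estimated annual cost of tool switching: ${cost:,.0f}")
```

Even with conservative inputs, the estimate is usually large enough to justify an audit of how many interfaces each engineer touches in a typical day.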

Direct expenses: licensing and maintenance

The numbers are staggering: 44% of engineering teams now spend $25,000-$100,000 monthly on their data stack, or up to $1.2 million annually. Yet only 12% report seeing tangible ROI from this investment.

This cost explosion isn’t accidental. Tool sprawl creates a compounding expense structure where each additional platform multiplies your total infrastructure spend:

Licensing fees scale unpredictably. Most data tools use consumption-based pricing that grows with usage in ways that are hard to forecast. What starts as a $500/month pilot can balloon to $15,000 monthly as data volumes increase, often without corresponding business value.

Integration costs dwarf licensing costs. Connecting disparate tools requires custom development, API management, and ongoing maintenance. Teams typically spend 2-3x their licensing fees on integration work—costs that vendors rarely disclose upfront.

Hidden operational overhead compounds over time. Each tool requires monitoring, security compliance, backup procedures, and vendor management. Enterprise teams often hire dedicated platform engineers at $150K+ annually just to manage tool relationships and prevent system failures.

Redundant capabilities create waste. Tool sprawl leads to feature overlap, where teams pay multiple vendors for similar functionality. A typical enterprise might have three different monitoring solutions, four data transformation tools, and six ways to move data, each with separate licensing, support, and maintenance costs.

As a result, infrastructure spending grows faster than data value, creating a budget crisis that forces difficult choices between innovation and cost control.
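The cost layers described above can be combined into a simple total-cost-of-ownership estimate. The sketch below is a rough model, not a pricing formula: the 2x integration multiplier and the $150,000 platform engineer salary reflect the ranges quoted in this section, while the tool names and licence prices are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    monthly_license: float  # USD per month


def annual_tco(tools, integration_multiplier=2.0,
               platform_engineers=1, engineer_salary=150_000):
    """Break annual cost into licensing, integration, and staffing.

    integration_multiplier models integration work as a multiple of
    licensing spend (2-3x in the text above). All defaults are assumptions.
    """
    licensing = 12 * sum(t.monthly_license for t in tools)
    integration = licensing * integration_multiplier
    staffing = platform_engineers * engineer_salary
    return {
        "licensing": licensing,
        "integration": integration,
        "staffing": staffing,
        "total": licensing + integration + staffing,
    }


# Hypothetical two-tool stack.
stack = [Tool("ingestion_tool", 5_000), Tool("bi_tool", 3_000)]
for item, amount in annual_tco(stack).items():
    print(f"{item:>12}: ${amount:,.0f}")
```

Running the model with your own licence invoices makes it easier to see how much of the budget sits outside the subscription line items.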

Tool sprawl shifts focus from data to maintenance

The most damaging cost of tool sprawl goes beyond the financial impact. Engineering teams stop solving data problems and start managing tools that were supposed to solve data problems.

This shift creates a maintenance-first culture where engineers spend more time orchestrating platforms than extracting business value. The consequences compound quickly:

Data quality degrades as complexity increases. When lineage becomes difficult to track across multiple tools, engineers start duplicating data rather than fixing the underlying issues. These duplicated datasets inevitably drift out of sync, creating corrupt records that undermine pipeline reliability and business trust.

Constant tool management replaces strategic work. Each platform has its own design philosophy, interface quirks, and learning curve. Engineering teams find themselves in perpetual onboarding mode, tweaking workflows, debugging integrations, and managing vendor relationships instead of building solutions that drive business outcomes.

Productivity becomes unpredictable. The cognitive overhead of managing multiple tools makes it impossible to estimate project timelines accurately. What should be simple data transformations become complex orchestration challenges that can take days to resolve.

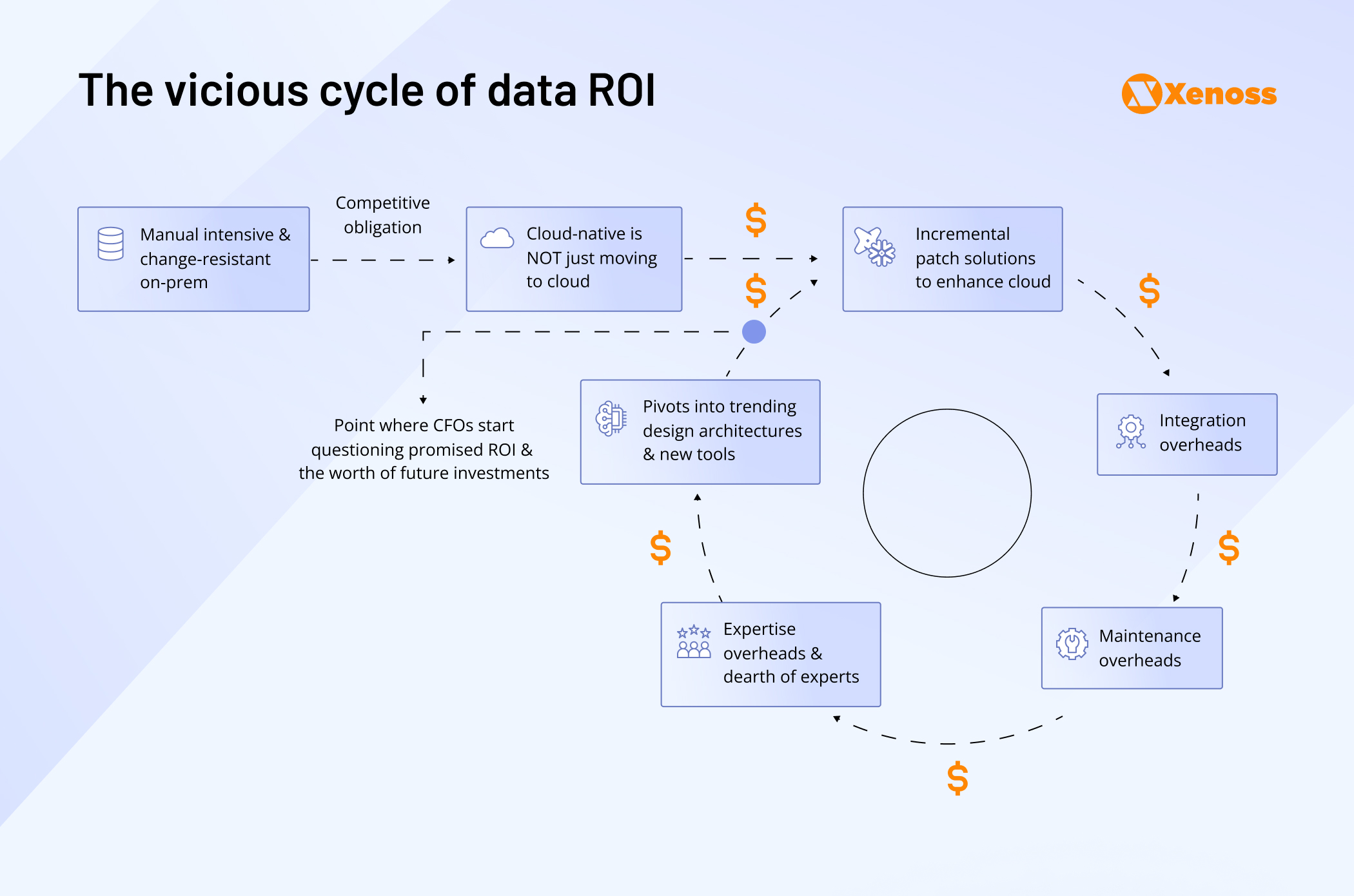

This operational drift explains why many Chief Data Officers and CFOs are questioning their cloud investments. After years of increasing infrastructure costs, they’re asking whether the promised benefits of flexibility, scalability, and revenue growth have materialized, or if they’ve simply traded on-premises complexity for multi-vendor complexity.

The answer, for most organizations, reveals a troubling pattern: higher costs, lower productivity, and diminished data trust.

How to reduce data sprawl without sacrificing performance

It’s hard to find an engineer who, in their training and career, has not heard of the “Keep It Simple, Stupid” rule. Implementing it seems straightforward enough: freeze investments in new tools and “do more with less.”

In practice, talent shortages and pressing deadlines create pressure to add quick fixes. Organizations that keep sprawl in check stick to frameworks that prioritize long-term efficiency over short-term convenience.

To keep data stacks from bloating, we offer a framework that helps match every new tool to a specific purpose, intentionally assess vendors, and harness the benefits of the consolidation trend.

1. Plan with the outcome in mind

Most tool sprawl begins when engineers optimize for technical perfection instead of business results. Teams get pulled into improving every data transformation, regardless of whether these improvements support actual business goals.

Here are a few industry-standard practices data teams follow for successful right-to-left planning, that is, planning backward from the desired outcome.

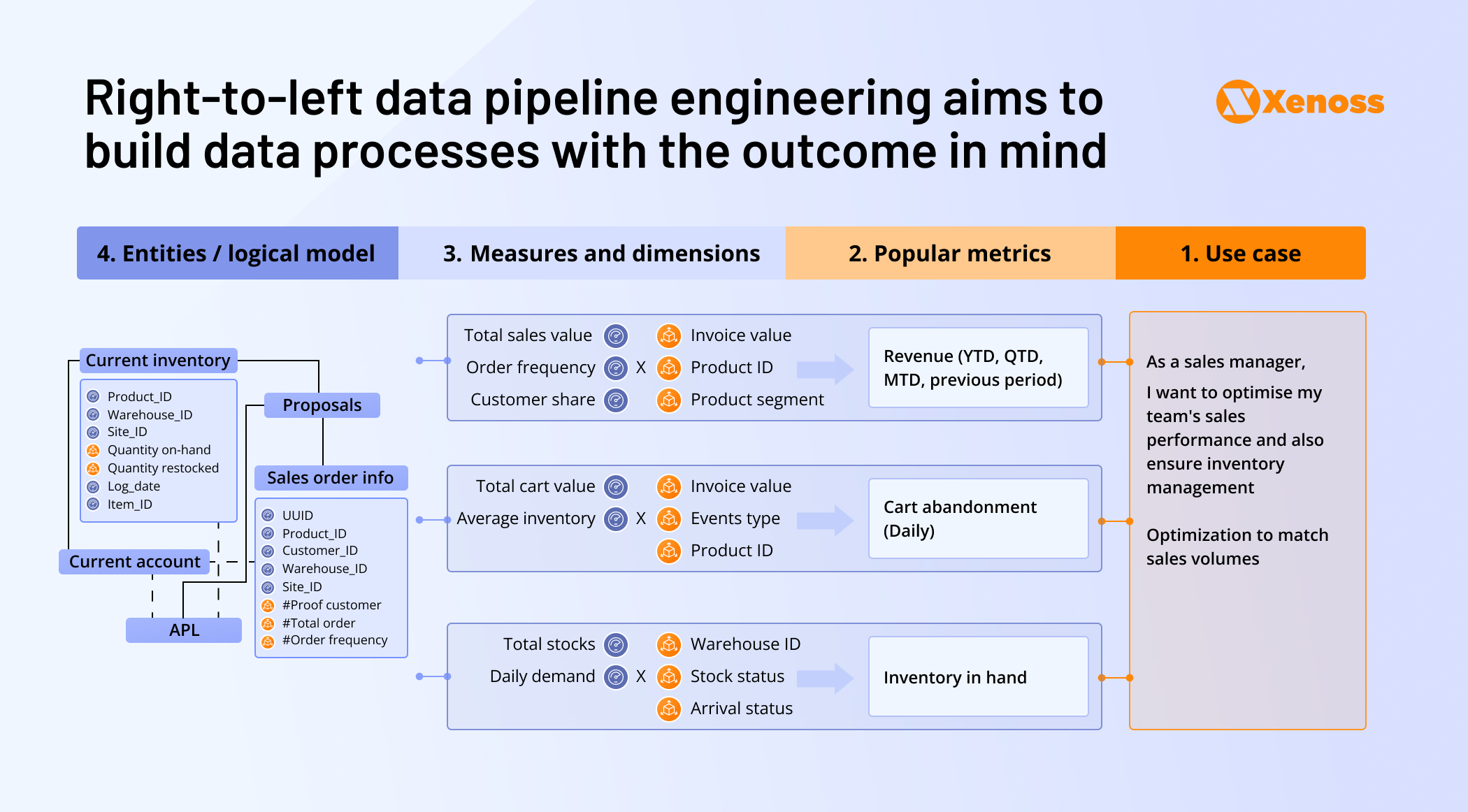

Successful consolidation starts with outcome-focused planning. Define the specific business problem your pipeline solves before selecting any tools. For customer segmentation, that means identifying the exact metrics that drive business decisions, such as average cart size per segment or customer lifetime value by cohort. For dynamic pricing in AdTech, focus on mean eCPM improvements and bid optimization rates.

Once you’ve defined success metrics, work backward to identify the minimum number of processes needed to generate those outcomes. This reverse engineering approach naturally eliminates tools that don’t directly contribute to business value.

Create an architecture diagram that maps every tool interaction and data flow. This visual representation makes redundancies obvious and helps identify consolidation opportunities. If three tools perform similar functions, you likely need only one.

The goal is to build the simplest possible architecture that delivers the required business outcomes at the necessary performance levels.
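The redundancy check behind the architecture diagram can also be done in a few lines of code. The sketch below assumes you have already listed which capabilities each tool provides; the tool and capability names are placeholders, and a real inventory would come from your own architecture map.

```python
from collections import defaultdict


def find_overlaps(stack):
    """Return every capability that more than one tool provides.

    stack maps a tool name to the set of capabilities it covers.
    """
    providers = defaultdict(list)
    for tool, capabilities in stack.items():
        for capability in capabilities:
            providers[capability].append(tool)
    return {
        capability: sorted(tools)
        for capability, tools in providers.items()
        if len(tools) > 1
    }


# Hypothetical inventory of a small data stack.
stack = {
    "tool_a": {"ingestion", "transformation"},
    "tool_b": {"transformation", "orchestration"},
    "tool_c": {"monitoring"},
}
for capability, tools in find_overlaps(stack).items():
    print(f"{capability}: covered by {', '.join(tools)}")
```

Any capability that appears in the output is a candidate for consolidation; the list does not decide which tool to keep, only where to look.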

2. Develop standardized vendor assessment protocols

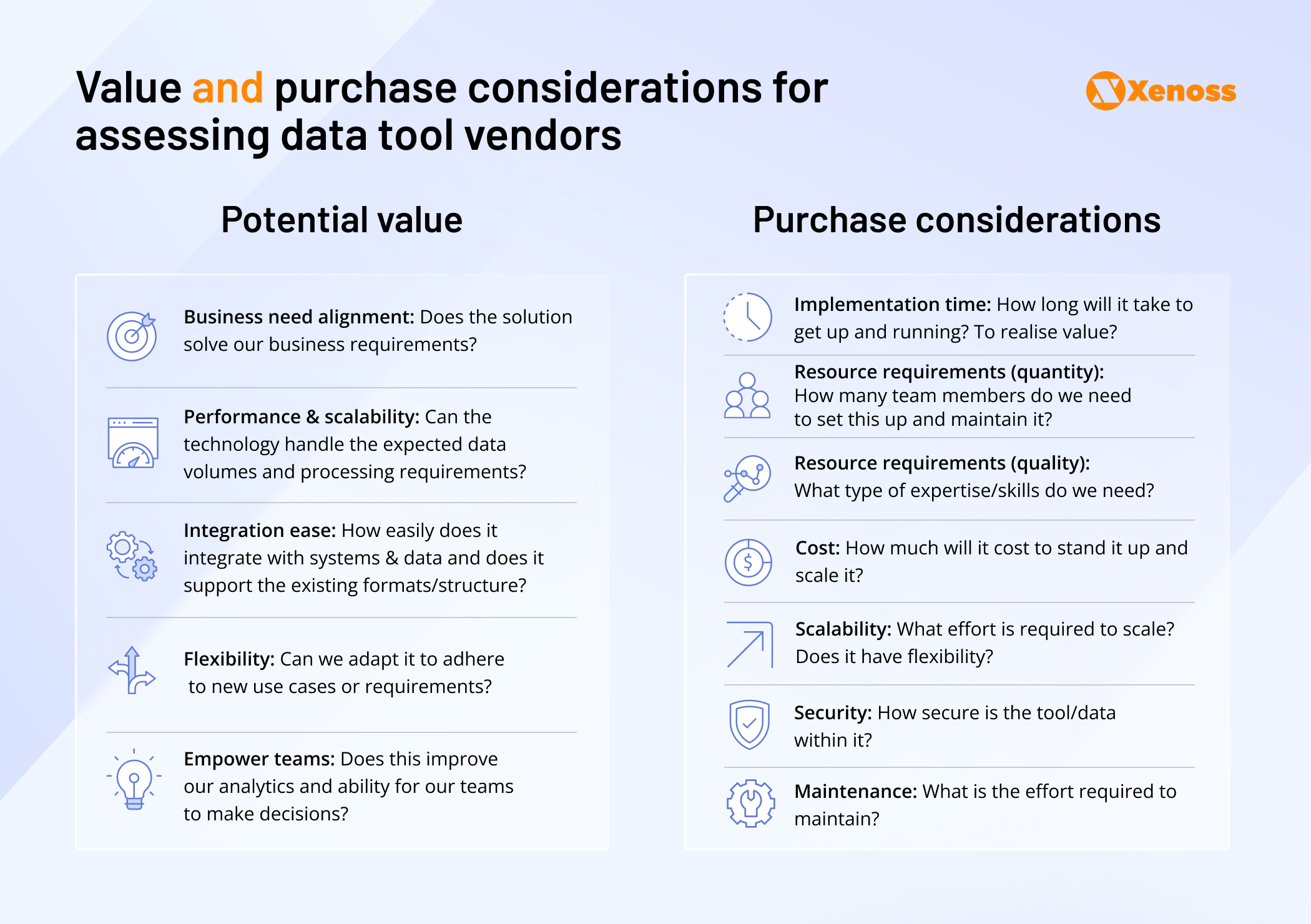

Not all data tools deliver equal value. Some solve narrow problems with minimal business impact, while others consolidate multiple capabilities under a single platform. The key to avoiding tool sprawl is distinguishing between vendors who create dependencies and those who reduce them.

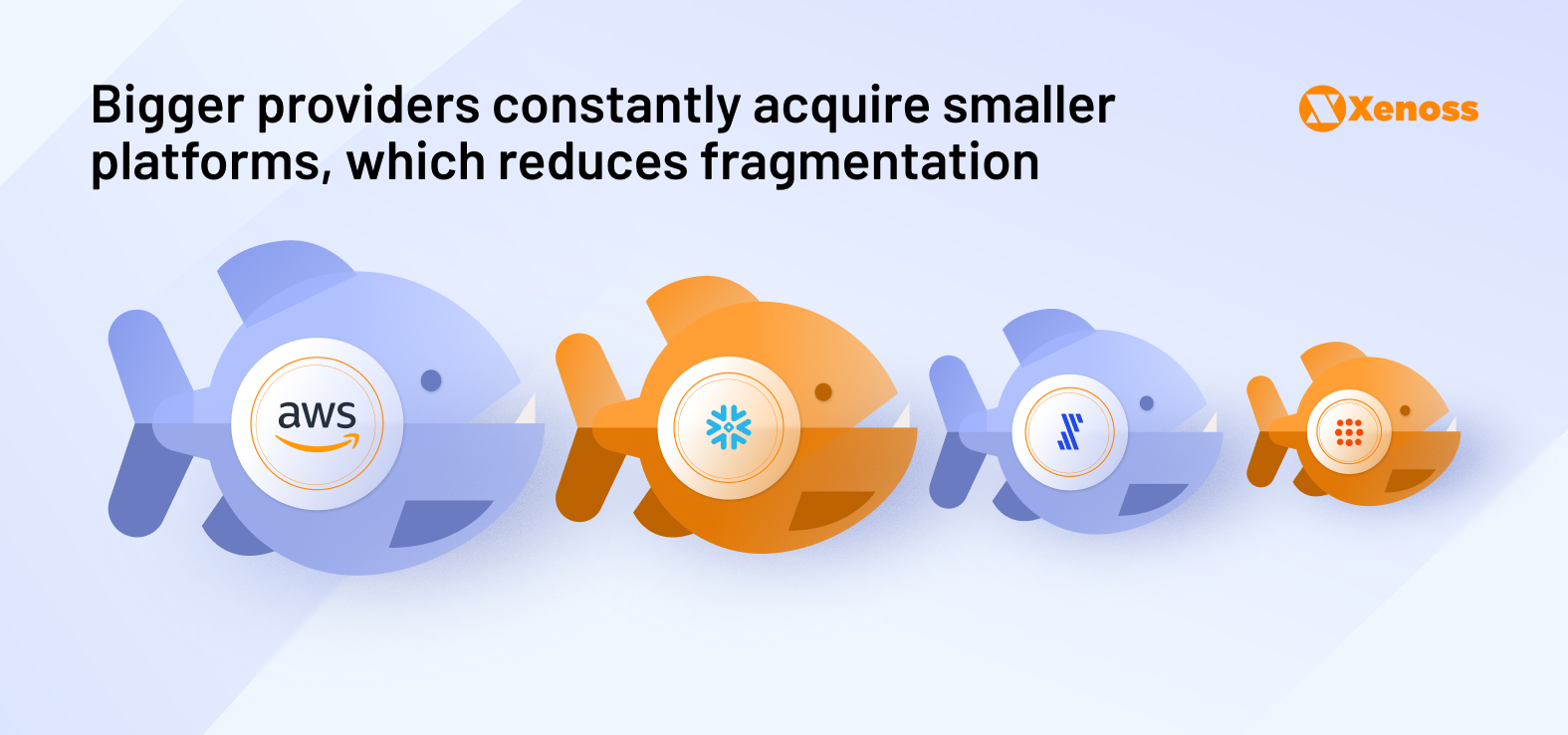

Successful teams prioritize platform vendors over point solutions. These vendors continuously acquire smaller tools and integrate their capabilities, allowing you to meet most data needs through one relationship. This approach reduces integration complexity without creating true vendor lock-in, provided your architecture remains portable.

Smart procurement means choosing a primary vendor you trust, plus two backup alternatives for critical services. When Oracle discontinued its AdTech suite in 2024, teams with migration plans adapted quickly, while those with single-vendor dependencies faced costly emergency transitions.

Two-stage vendor evaluation prevents expensive mistakes

Stage 1: Business value assessment

- Business needs alignment: Validate if the solution solves the team’s data problem

- Scalability and performance: Ensure the technology can handle high data loads

- Ease of integrations: Make sure the vendor has a battle-tested API ecosystem, detailed documentation, and support for the data formats your team uses.

- Flexibility: Can one tool solve multiple problems?

- Team empowerment: Check if the tool improves or slows down internal productivity and decision-making capabilities of the engineering team.

Stage 2: Total cost analysis

- Implementation time: How much time does it take to set up the platform and onboard the team?

- Resource requirements (quantity and quality): How many people, and at what seniority levels, are required to set up and maintain the new tool?

- Cost: Licensing costs and in-app features your team may need down the line.

- Scalability: How steeply will spending on the tool grow as data loads increase over time?

- Maintenance: Are there hidden maintenance fees (e.g., “pay-per-update” pricing models)?

Teams that implement structured evaluation frameworks catch integration challenges, resource requirements, and cost escalations before they become expensive problems. This upfront diligence typically prevents 60-70% of tool sprawl decisions that teams later regret.
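One way to make the two stages repeatable is to encode them as a small scoring script. The sketch below is an illustration under stated assumptions: the 1-5 scoring scale, the pass thresholds, and the first-year cost formula are not part of the framework above, and the criterion keys are simply shorthand for the Stage 1 checklist.

```python
STAGE1_CRITERIA = (
    "needs_alignment",
    "scalability",
    "integrations",
    "flexibility",
    "team_empowerment",
)


def passes_stage1(scores, minimum=3, required_average=3.5):
    """Stage 1 gate: every criterion scored 1-5 by the evaluating team.

    A vendor passes only if no single score falls below `minimum` and the
    average reaches `required_average` (both thresholds are assumptions).
    """
    missing = [c for c in STAGE1_CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing scores: {missing}")
    values = [scores[c] for c in STAGE1_CRITERIA]
    return min(values) >= minimum and sum(values) / len(values) >= required_average


def first_year_cost(setup_hours, hourly_rate, monthly_license,
                    monthly_maintenance=0.0):
    """Stage 2 estimate: implementation effort plus 12 months of fees."""
    return setup_hours * hourly_rate + 12 * (monthly_license + monthly_maintenance)


# Hypothetical vendor scored by the team.
scores = {c: 4 for c in STAGE1_CRITERIA}
if passes_stage1(scores):
    cost = first_year_cost(setup_hours=80, hourly_rate=150,
                           monthly_license=2_000, monthly_maintenance=500)
    print(f"Vendor passes Stage 1; estimated first-year cost: ${cost:,.0f}")
```

Running Stage 2 only for vendors that clear Stage 1 keeps the cost analysis focused on tools that actually solve the team's problem.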

3. Leverage open-source alternatives to cut infrastructure costs

Open-source alternatives can reduce your data infrastructure spend by 40-60% while often providing superior flexibility and performance. Before licensing any commercial platform, evaluate whether open-source solutions meet your requirements.

The enterprise-grade options available today challenge the assumption that “free” software lacks commercial reliability:

Data integration and ELT platforms. Airbyte offers connectivity comparable to Fivetran at a fraction of the cost. With over 300 pre-built connectors and active community development, it handles most enterprise integration needs while allowing custom connector development when needed.

Business intelligence and visualization. Metabase and Apache Superset deliver dashboard capabilities comparable to Tableau, but with a lower total cost of ownership. Hosting these platforms on cloud VMs or containers typically costs 70-80% less than commercial BI licensing, especially as user counts grow.

Data warehousing solutions. ClickHouse and DuckDB provide high-performance analytics capabilities that rival Snowflake for many use cases. ClickHouse excels at real-time analytics with massive datasets, while DuckDB offers simplified analytics for smaller-scale operations.

Monitoring and observability. The Prometheus and Grafana combination can replace expensive solutions like Datadog while providing more granular control over metrics and alerting. This approach works particularly well for teams that need custom monitoring capabilities.

Beyond cost savings, open-source tools offer strategic advantages that commercial platforms cannot match. You gain complete control over feature development, can customize solutions for specific business needs, and benefit from community-driven innovation that often outpaces commercial development cycles.

The key consideration is to ensure your team has the technical capability to implement and maintain these solutions, or partner with specialists who can provide that expertise.

4. Implement unified observability to reduce tool complexity

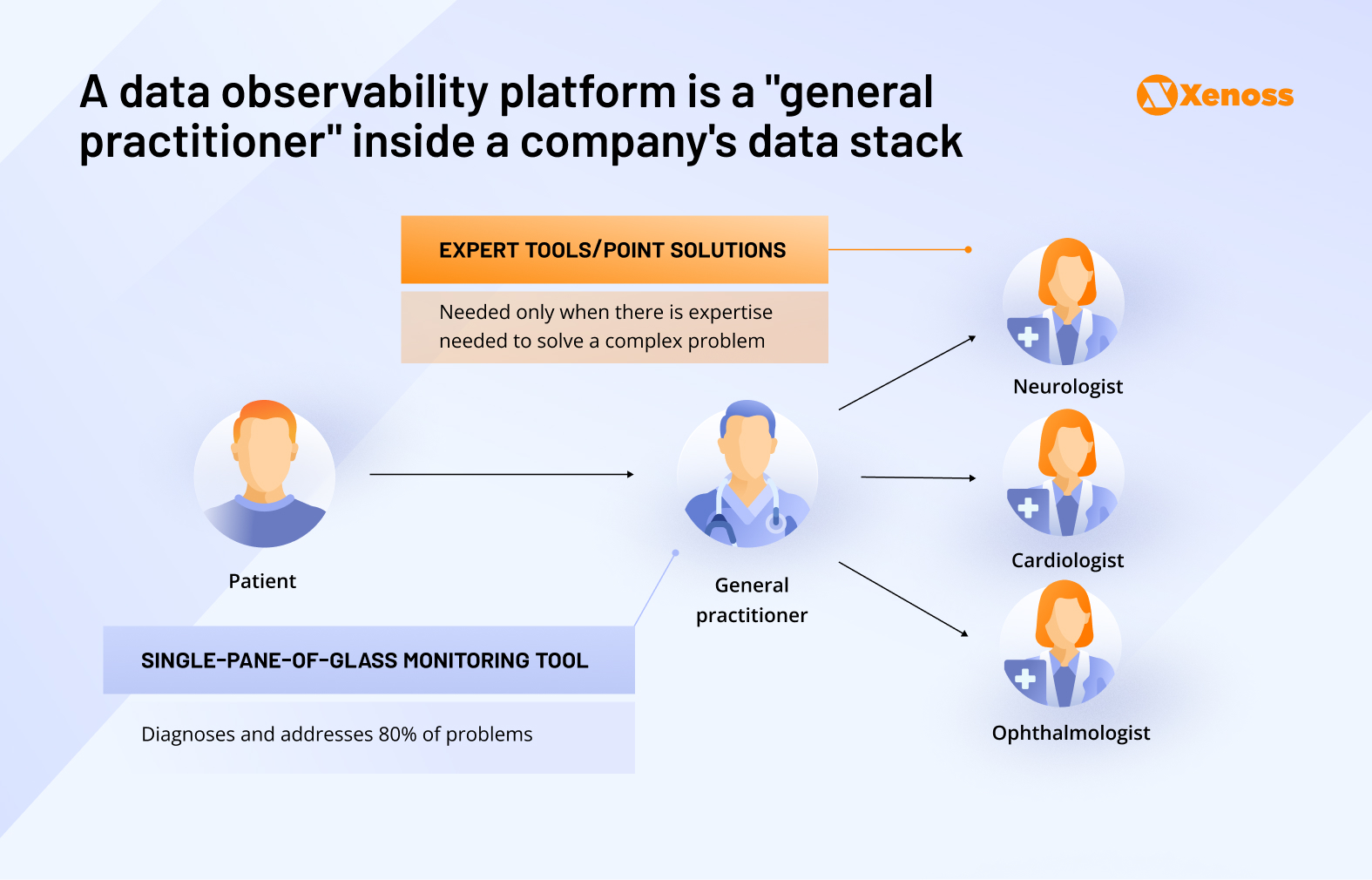

Tool sprawl creates a paradox: the more monitoring tools you add to track your data infrastructure, the harder it becomes to monitor effectively. Unified observability platforms solve this by providing a single interface to understand your entire data ecosystem.

These platforms prevent the most expensive form of tool duplication: buying new capabilities that already exist in your current stack. By providing complete visibility into what each tool does and how well it performs, observability platforms help teams maximize existing investments before adding new ones.

Central visibility prevents redundant tool purchases

Instead of managing separate dashboards for each data platform, unified observability gives you comprehensive insight into pipeline performance, data quality, and resource utilization across all tools. This visibility reveals optimization opportunities that typically remain hidden in fragmented monitoring setups.

Teams using centralized observability report 30-40% reductions in tool acquisition because they can clearly see which capabilities they already own. When gaps do exist, the platform provides detailed requirements analysis that leads to more targeted, cost-effective purchases.

The implementation approach that works: Start with monitoring tools you already have rather than buying new observability platforms. Most data infrastructure includes basic monitoring capabilities that can be consolidated and enhanced. Tools like Grafana can aggregate metrics from multiple sources, while custom dashboards can provide the unified view your team needs.
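As a rough illustration of what a unified view means in practice, the sketch below rolls run statistics reported by several tools into one failure rate per pipeline. The `PipelineMetric` record, the tool names, and the numbers are all hypothetical; in a real setup these values would be pulled from the monitoring endpoints your tools already expose.

```python
from dataclasses import dataclass


@dataclass
class PipelineMetric:
    tool: str          # which platform reported the numbers
    pipeline: str      # logical pipeline the runs belong to
    failed_runs: int
    total_runs: int


def unified_summary(metrics):
    """Combine per-tool run counts into one failure rate per pipeline."""
    totals = {}
    for m in metrics:
        failed, total = totals.get(m.pipeline, (0, 0))
        totals[m.pipeline] = (failed + m.failed_runs, total + m.total_runs)
    return {
        pipeline: (failed / total if total else 0.0)
        for pipeline, (failed, total) in totals.items()
    }


# Hypothetical readings from two tools that touch the same pipelines.
readings = [
    PipelineMetric("scheduler", "orders", failed_runs=1, total_runs=10),
    PipelineMetric("warehouse", "orders", failed_runs=1, total_runs=10),
    PipelineMetric("scheduler", "clicks", failed_runs=0, total_runs=5),
]
for pipeline, rate in unified_summary(readings).items():
    print(f"{pipeline}: {rate:.0%} of runs failed across all tools")
```

The design choice here is to key everything by pipeline rather than by tool, so the view answers "is this data product healthy?" instead of "is this vendor's dashboard green?".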

For enterprise-scale operations, consider platforms that combine monitoring, cataloging, and lineage tracking in one interface. ServiceNow, for example, automated its internal infrastructure management by implementing unified process monitoring that reduced both operational overhead and tool redundancy.

The goal is operational clarity that enables better decisions about which tools to keep, optimize, or eliminate from your data stack.

Take action before tool sprawl becomes unmanageable

Data tool sprawl will only accelerate as AI infrastructure adds new layers of complexity to already fragmented data stacks. Organizations that act now to consolidate their platforms will maintain a competitive advantage, while those that continue adding point solutions will find themselves trapped in increasingly expensive and inefficient architectures.

The path forward requires discipline over convenience. Teams succeeding at consolidation focus on three key principles:

Build for current problems, not future possibilities. Most tool sprawl stems from optimizing for edge cases that may never materialize. The infrastructure that serves 80% of your regular workflows determines your data product’s real-world performance and reliability.

Prioritize visibility over features. Before adding any new capability, ensure you can monitor and optimize what you already have. Teams with clear visibility into their existing tools discover they can solve most problems without additional purchases.

Choose consolidation over optimization. Perfect individual tools matter less than seamless tool integration. A simpler architecture with fewer vendor relationships delivers better business outcomes than a complex system with optimized components.

The organizations that master these principles will build data infrastructure that scales efficiently while reducing costs. Those that don’t will find themselves managing tools instead of delivering insights.

Want to reduce your data tool sprawl? Xenoss engineers help enterprise teams consolidate their data infrastructure without sacrificing performance. We’ve guided organizations through complete stack simplification, reducing tool counts by 40-60% while improving pipeline reliability and team productivity. Contact our data engineering team to assess your current infrastructure and develop a consolidation strategy tailored to your business needs.