Maintaining a truly scalable data engineering pipeline is easier said than done.

A common pitfall enterprise organizations face is not designing data pipelines with scale and governance in mind. At earlier stages of the organization’s growth, CSV exports, Python scripts, and manual management helped cover data engineering needs.

Eventually, a point comes when a patchwork of tools and flows can no longer process growing data volumes and requests. At that time, a critical assessment and optimization of data pipelines is unavoidable, and data engineering teams should approach it systematically.

This guide is for both ends of the spectrum: data engineers building smaller pipelines that may scale over the years and the leaders of large teams who need to revamp legacy pipelines so that they meet industry standards.

We’ll walk through the best data pipeline management practices across every critical area:

- Design

- Cost optimization

- Performance

- Data quality

- Data security

Root causes of data pipeline processing issues

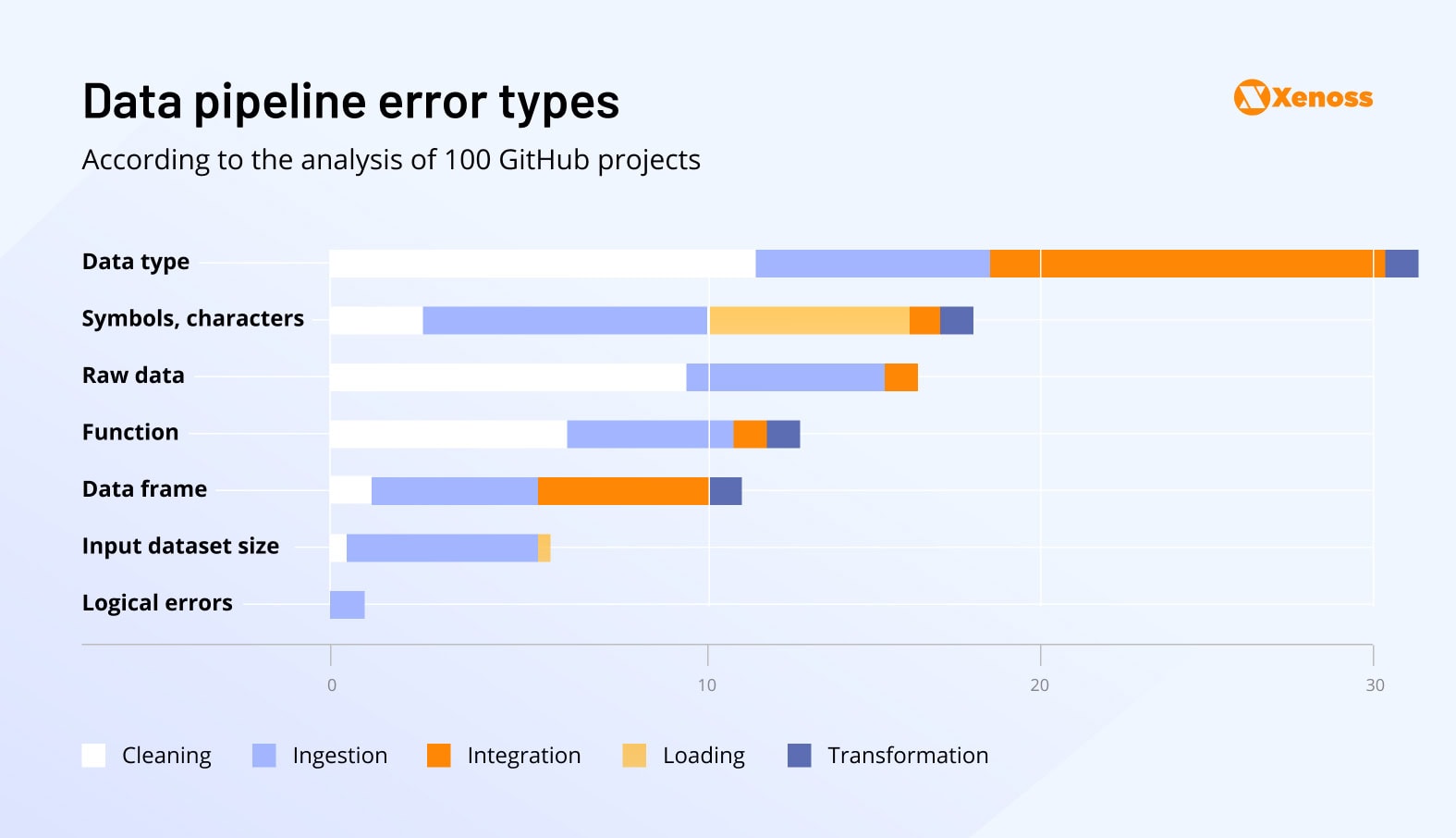

A study of 100 data pipelines hosted as GitHub projects examined the root causes of data pipeline processing issues and classified them into broader groups.

Data type errors

Frequency: 33% of surveyed projects

Data type errors in a data pipeline happen when a piece of data arrives in a different format than the table is designed for. For example, a column expected to hold numbers gets a text value like “abc,” or a date field receives “31-02-2025”.

When data types don’t match, transformations or calculations that follow can fail or crash the pipeline.

Among surveyed GitHub projects, type errors mostly affected single data items or frames and occurred at the cleaning or integration stages.

At scale, data type errors make it difficult for teams to put their data to use. Uber’s data engineering team pointed out that “broken data is the most common cause of problems in production ML systems”.

Addressing data type errors with automated quality control tools helps speed up pipeline deployment and maintain high accuracy of model outputs.
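To make the failure mode concrete, here is a minimal sketch of a type check that runs before any transformation. It uses only the Python standard library; the `SCHEMA` mapping, the column names, and the `validate_row` helper are hypothetical and exist only for illustration.

```python
from datetime import datetime

# Hypothetical schema: column name -> parser that raises ValueError on bad input.
SCHEMA = {
    "amount": float,
    "order_date": lambda s: datetime.strptime(s, "%d-%m-%Y").date(),
}

def validate_row(row):
    """Return (parsed_row, errors); errors lists the columns that failed to parse."""
    parsed, errors = {}, []
    for column, parse in SCHEMA.items():
        try:
            parsed[column] = parse(row[column])
        except (KeyError, ValueError, TypeError):
            errors.append(column)
    return parsed, errors

good = {"amount": "19.99", "order_date": "28-02-2025"}
bad = {"amount": "abc", "order_date": "31-02-2025"}

print(validate_row(good)[1])  # no failing columns
print(validate_row(bad)[1])   # both columns fail: "abc" is not a number, 31 February does not exist
```

Rows with a non-empty error list can be rejected or routed to a review queue instead of crashing the steps that follow.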

Misplaced characters

Frequency: 17% of surveyed projects

Misplaced characters in a data pipeline are stray symbols, like extra commas or quotes, that slip into the data stream, break the expected structure, and cause parsers to misread the data.

In surveyed pipelines, data items contained diacritics, letters from different alphabets, and other characters not supported by the standard encoding system.

A 2019 Microsoft Research paper titled “Speculative Distributed CSV Data Parsing for Big Data Analytics” looked at the ripple effect of a stray quote or comma. A misplaced character led to the creation of two legally parseable but semantically opposite tables. When the pipeline was deployed, wrong Spark queries were generated, and some data jobs crashed altogether.

Microsoft’s solution to the problem was a parallel parser that would detect and quarantine syntax errors.
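The quarantine idea can be illustrated on a much smaller scale. The sketch below is not Microsoft's parallel parser; it is a single-threaded check, built on Python's standard `csv` module, that sets aside rows whose field count differs from the expected one. The function name and sample data are hypothetical. Note that a field-count check only catches structural breakage; it cannot detect a stray quote that still yields a validly parseable table.

```python
import csv
import io

def split_valid_and_quarantined(text, expected_fields):
    """Parse CSV text; rows with an unexpected field count go to quarantine."""
    valid, quarantined = [], []
    reader = csv.reader(io.StringIO(text))
    for row in reader:
        if len(row) == expected_fields:
            valid.append(row)
        else:
            # reader.line_num is the number of source lines read so far
            quarantined.append((reader.line_num, row))
    return valid, quarantined

raw = "id,name,city\n1,Ann,Oslo\n2,Bob,Paris,extra\n3,Cy,Rome\n"
valid, quarantined = split_valid_and_quarantined(raw, expected_fields=3)
print(len(valid), quarantined)
```

Keeping the source line number with each quarantined row makes it easier to trace the problem back to the original file.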

Raw data issues

Frequency: 15% of surveyed projects

Raw data issues are an umbrella term that covers missing values, data duplication, corrupted data, and other input data irregularities. Data engineers mostly struggled with these during data ingestion and integration.

On its own, incomplete or duplicate raw data might not be a showstopper for data pipeline performance. It’s when errors pile up that downstream performance bottlenecks appear.

The issue of data cascades, compounding chains of raw data errors that cause negative effects downstream, is well-known. Google, for one, covered it in the “Everyone wants to do the model work, not the data work” paper, which reported that 92% of the AI practitioners it interviewed had experienced at least one data cascade.
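One way to stop raw data errors from piling up is to profile every incoming batch before it moves downstream. The following is a minimal sketch in plain Python; the `profile_records` helper and the field names are assumptions made for this example.

```python
from collections import Counter

def profile_records(records, key_fields, required_fields):
    """Count duplicate keys and missing required values in a batch of dicts."""
    keys = Counter(tuple(r.get(f) for f in key_fields) for r in records)
    duplicates = sum(count - 1 for count in keys.values() if count > 1)
    missing = Counter()
    for r in records:
        for f in required_fields:
            if r.get(f) in (None, ""):
                missing[f] += 1
    return {"rows": len(records), "duplicates": duplicates, "missing": dict(missing)}

batch = [
    {"id": 1, "email": "a@x.io"},
    {"id": 1, "email": "a@x.io"},  # duplicate key
    {"id": 2, "email": ""},        # empty value
    {"id": 3},                     # missing field
]
report = profile_records(batch, key_fields=["id"], required_fields=["email"])
print(report)
```

Tracking these counts per batch gives teams an early signal before small irregularities compound.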

Other data pipeline errors

Less common data-related issues, listed below, had to do with pipeline functionality, data frames, and processing high loads.

- Difficulties managing functions appeared in 13% of the studied pipelines, mostly during data cleansing.

- 11% of errors appeared when creating, merging, and managing data frames.

- Large datasets caused difficulties in reading, uploading, and loading data, and reduced the pipeline’s scalability.

- Logical errors were usually calls to non-existent object attributes or methods.

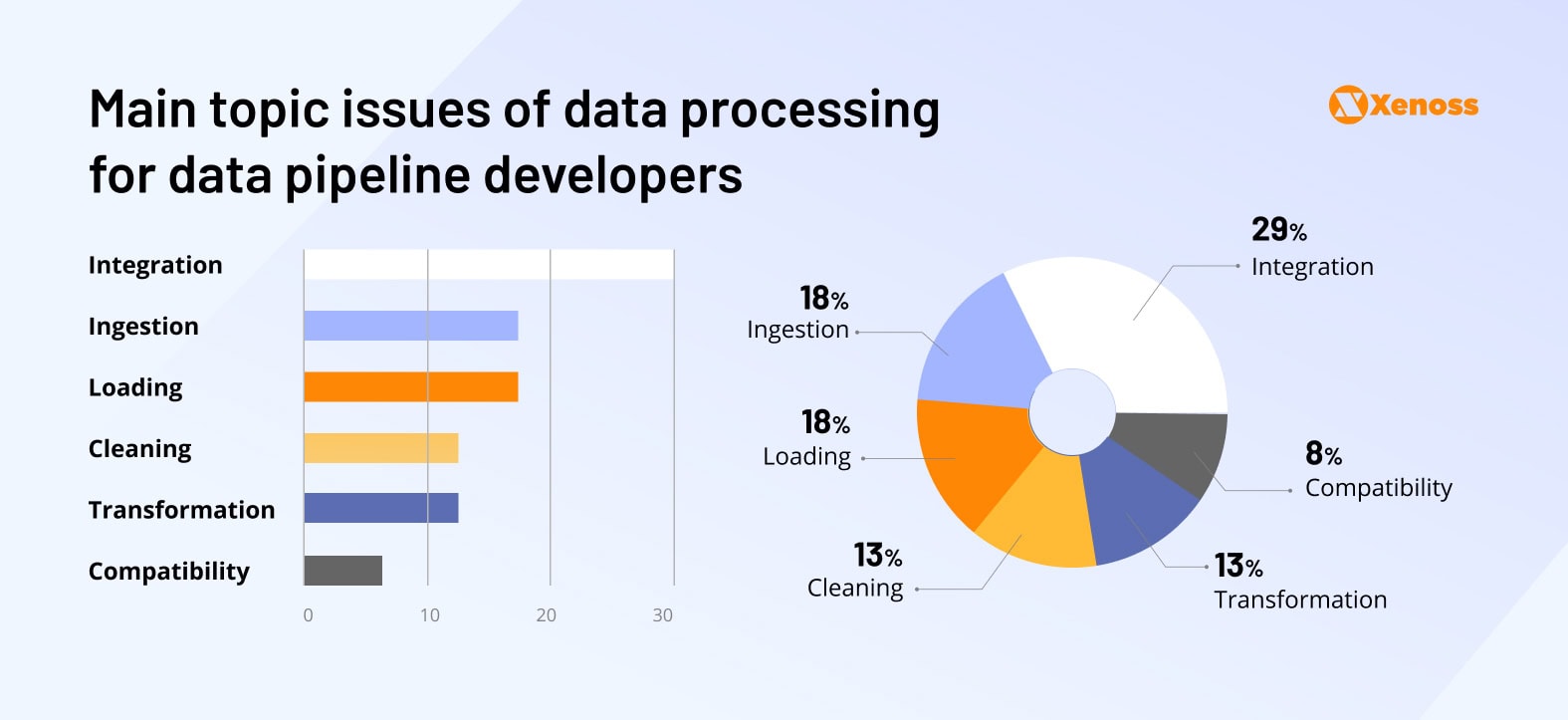

Most error-prone data pipeline workflows

Beyond specific error types, the study also identified which stages of the data pipeline are most vulnerable to failure. The results help highlight where engineering teams should focus their preventive efforts.

- Integration issues were the most common and were seen in 29% of examined projects. Developers reported difficulties transforming data across databases, managing tables and data frames, and aligning platforms and programming languages.

- Ingestion challenges found in 18% of projects were mainly related to uploading specific data types or connecting databases.

- Loading issues accounted for 18% of the total and impacted the efficiency (loading speed, memory usage) and correctness of the loaded data (incorrect or inconsistent outputs).

- Data cleaning issues in 13% of pipelines were limited to handling missing values.

- Transformation challenges resurfaced in 13% of projects and comprised character replacement and handling data types.

- Compatibility issues appeared in 8% of surveyed pipelines, across all stages of the ETL process. They were mostly linked to code performance bottlenecks on different operating systems and differences between programming languages.

Best practices for data pipeline design

Xenoss data engineers help enterprise clients in AdTech, MarTech, finance, and other data-intensive industries design secure, reliable ETL pipelines that process petabytes of data per second.

To maintain service excellence at this scale, our engineering teams follow tried-and-tested practices that ensure pipelines remain scalable, modular, and future-proof. Below is a breakdown of the key areas we focus on with examples and tools you can apply in your own projects.

Modularity

In a modular pipeline, individual components are easier to test, update, and reuse, which increases the speed of deployment and reduces long-term maintenance overhead.

Breaking data pipelines into standardized components, like Transformer, Trainer, Evaluator, and Pusher, helped Google data engineers cut deployment time by months and reduce the reliance on ad-hoc scripts.

Tools that enable data pipeline modularity: Apache Airflow, Dagster, Prefect, dbt (Data Build Tool), Kedro, Luigi, Flyte, Mage, ZenML, Metaflow
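The tools above each have their own component model, but the underlying idea can be sketched without any framework: every step is a small function with the same input and output shape, and the pipeline is an ordered list of steps. The step names and the 25% tax rate below are hypothetical.

```python
from functools import reduce

def drop_empty(rows):
    """Remove rows that have no amount."""
    return [r for r in rows if r.get("amount") not in (None, "")]

def to_float(rows):
    """Cast the amount column from text to float."""
    return [{**r, "amount": float(r["amount"])} for r in rows]

def add_gross(rows, rate=0.25):
    """Add a gross amount using an illustrative tax rate."""
    return [{**r, "gross": round(r["amount"] * (1 + rate), 2)} for r in rows]

def run_pipeline(rows, steps):
    """Feed the output of each step into the next one."""
    return reduce(lambda data, step: step(data), steps, rows)

result = run_pipeline(
    [{"amount": "10"}, {"amount": ""}],
    [drop_empty, to_float, add_gross],
)
print(result)
```

Because each step is independent, it can be unit-tested on its own, reordered, or reused in another pipeline.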

Data quality assurance

Data engineering teams should validate data across all quality dimensions (completeness, accuracy, validity, consistency, integrity) to prevent poor-quality data from offering distorted insight and hindering decision-making.

Enterprise data engineering teams usually automate data quality checks with proprietary evaluators or open-source libraries like Amazon’s Deequ. Libraries like Deequ run automated checks on the data and block Spark jobs whose data does not meet accuracy, completeness, or statistical criteria.

Tools for data quality assurance: Atlan, Collibra, Informatica Data Quality, Talend Data Quality

Documentation

Document all ETL steps to make sure that all stakeholders on your data engineering teams can navigate and manage mission-critical pipelines (e.g., a fraud detection system a bank uses to flag suspicious transactions).

LinkedIn data engineers shared their experience of creating a system for generalized metadata search, which was later open-sourced as DataHub. Introducing process metadata for each ETL step (lineage, execution, lifecycle) improved the scalability and governance of data pipelines.

Since LinkedIn switched to DataHub, it has been used by over 1,500 LinkedIn employees to process over 1 million datasets, track over 25,000 metrics, and support more than 500 AI features.

Tools that facilitate ETL documentation: Apache Atlas, dbt, DataHub, Alation

Logging

Consistent logging in data pipelines simplifies debugging and enables better observability and root-cause analysis.

After entering the gaming market, Netflix saw a sharp increase in the number of batch workflows and real-time data pipelines. Whenever a failure occurred in a workload, data engineers needed to investigate the logs from multiple systems.

To access and manage these logs, the platform used its Pensive system. This tool collects job and cluster logs, classifies stack traces, and automatically retries or repairs broken Spark or Flink pipeline steps.

Tools that manage data pipeline logging: Apache Airflow, Prefect, Dagster, dbt, Fluentd, Datadog
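Whichever tool collects the logs, consistency starts with every step emitting the same fields. Below is a minimal sketch using Python's standard `logging` module that writes one JSON object per log line; the field names (`pipeline`, `step`, `run_id`) and the helper names are assumptions, not a standard.

```python
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each record as a JSON object with a fixed set of fields."""
    def format(self, record):
        payload = {
            "level": record.levelname,
            "message": record.getMessage(),
            "pipeline": getattr(record, "pipeline", None),
            "step": getattr(record, "step", None),
            "run_id": getattr(record, "run_id", None),
        }
        return json.dumps(payload)

def get_step_logger(pipeline, step, run_id):
    """Return a logger that attaches the same context to every message."""
    logger = logging.getLogger(f"{pipeline}.{step}")
    if not logger.handlers:  # avoid adding duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    context = {"pipeline": pipeline, "step": step, "run_id": run_id}
    return logging.LoggerAdapter(logger, context)

log = get_step_logger("orders_etl", "load", run_id="2025-01-01T00:00")
log.info("loaded %d rows", 1200)
```

With a shared `run_id` in every line, logs from several systems can be joined during root-cause analysis.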

Isolated environments

Separating development, staging, and production environments minimizes the risk of downstream errors. The official AWS best-practice handbook explicitly recommends that cloud engineers “establish separate development, test, staging, and production environments.”

Teams are more likely to catch pipeline errors before deployment if the staging environment closely mirrors production.

Tools for building isolated environments: Docker, Kubernetes, Apache Airflow (with KubernetesExecutor or DockerOperator), dbt (with dbt Cloud environments), Terraform

Data sources

It’s becoming an industry standard to avoid working with manually created data sources (e.g., Google Sheets).

However, when no alternative is available, data engineers should implement strict change monitoring with preset protections.

The Deequ library by AWS, used inside AWS Glue and Amazon SageMaker, runs declarative checks on each newly arrived partition, blocking jobs when metrics drift. This way, teams create a unit-test-like guardrail for large datasets.
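The guardrail pattern itself is simple enough to sketch in plain Python. The example below does not use Deequ's API; it is a hypothetical stand-in that computes one metric (completeness) for a new partition and raises an error when the metric drops too far below a baseline. The function names, the exception class, and the 5-point tolerance are all assumptions.

```python
class DataQualityError(Exception):
    """Raised when a partition fails a quality check."""

def completeness(rows, column):
    """Share of rows where `column` is present and non-empty."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if r.get(column) not in (None, ""))
    return filled / len(rows)

def check_partition(rows, column, baseline, max_drop=0.05):
    """Return the metric, or raise if it fell more than `max_drop` below baseline."""
    value = completeness(rows, column)
    if value < baseline - max_drop:
        raise DataQualityError(
            f"{column} completeness {value:.2f} is below baseline {baseline:.2f}"
        )
    return value

partition = [{"email": "a"}, {"email": "b"}, {"email": ""}, {"email": "d"}]
print(check_partition(partition, "email", baseline=0.78))  # within tolerance
```

Raising an exception is what turns the check into a gate: an orchestrator can treat the failure like a failed unit test and stop the downstream job.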

Tools for data source monitoring: Bigeye, Prometheus, Grafana, OpenTelemetry, Databand, Metaplane, Kensu

Best practices and tools for optimizing data pipeline performance

To further enhance pipeline efficiency, we recommend structuring optimization efforts across four domains: cost, performance, data quality, and security, with tailored best practices for each.

Data pipeline cost optimization best practices

Maintenance is the bane of existence for data engineering teams. A Fivetran survey reports that 67% of large, data-centralized enterprises still dedicate over 80% of data engineering resources (both talent and budgets) to maintaining existing pipelines, leaving little capacity for new AI/analytics work.

Examining the efficiency of data pipeline maintenance can thus significantly slash costs. Here are a few tips engineers implement for better resource management.

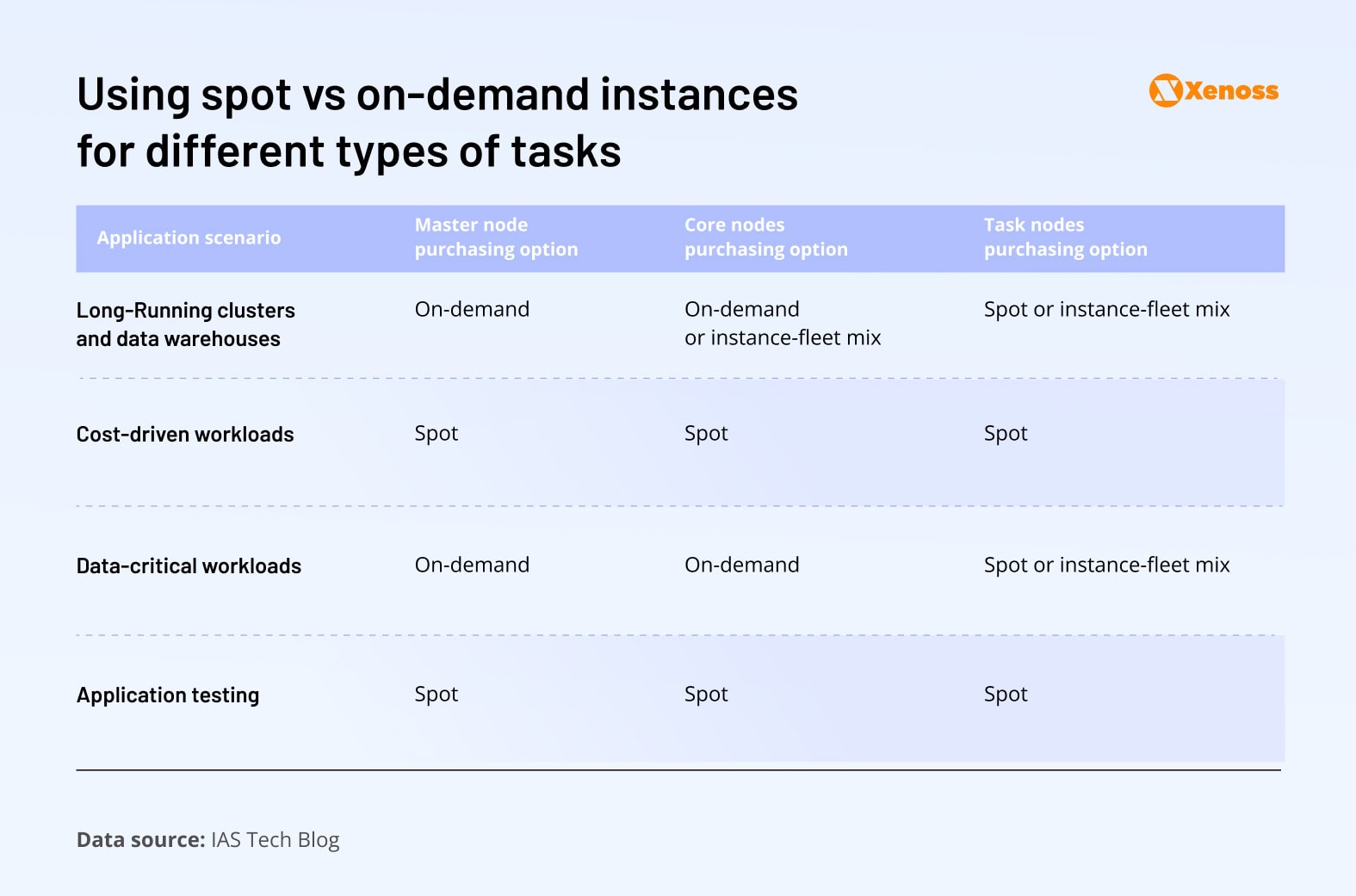

- Using spot instances. These are cheaper to run than on-demand instances but can be reclaimed by the cloud provider at short notice, so they are a good match for interruptible, non-critical tasks. Time-sensitive jobs like real-time data processing or fixed-deadline regulatory tasks should be handled by on-demand nodes, whereas the distributed training of machine learning models or nightly ETL can use spot nodes instead. Knowing which tasks can run on spot nodes helps data engineering teams cut processing costs by up to 90%.

- Auto-scaling. Adjust the computing power a data pipeline uses by deploying managed services on your cloud platform, like AWS Glue, Google Cloud Dataflow, or Azure Data Factory. Auto-scaling allows data engineers to reduce consumption or shut it off during periods of low or no use.

- Data deduplication. Integrate AWS Glue, Apache Spark, or other solutions with data deduplication capabilities into the pipeline and schedule regular data checks. This helps identify and eliminate redundant items, reduce processing load, and save storage space.
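The spot versus on-demand decision from the list above can be written down as an explicit rule so that it is applied consistently. The sketch below is a simplified illustration; the `Job` fields and the `choose_capacity` function are hypothetical, and real schedulers weigh more factors (checkpointing support, retry cost, current spot availability).

```python
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    interruptible: bool      # can the job be stopped and retried or resumed?
    has_hard_deadline: bool  # e.g. real-time processing or regulatory reporting

def choose_capacity(job):
    """Route interruptible jobs without hard deadlines to spot capacity."""
    if job.interruptible and not job.has_hard_deadline:
        return "spot"
    return "on-demand"

jobs = [
    Job("nightly_etl", interruptible=True, has_hard_deadline=False),
    Job("realtime_scoring", interruptible=False, has_hard_deadline=True),
    Job("regulatory_report", interruptible=True, has_hard_deadline=True),
]
for job in jobs:
    print(job.name, "->", choose_capacity(job))
```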

Data pipeline performance best practices

The performance of a data pipeline is critical, especially in user-facing applications, where a slow-loading dashboard can tank engagement. Internally, long queue times delay the completion of data jobs, slowing down data engineering teams.

There’s no one-size-fits-all approach to performance optimization; data engineering teams should consider data types, use cases, and tech stack when building an action plan.

At the same time, several big-picture considerations Xenoss engineers swear by help prevent performance bottlenecks regardless of specific use cases or types of data in the pipeline.

Here is a brief rundown of these practices.

- Parallel processing. Break data jobs into smaller, manageable workflows to improve pipeline performance and ramp up processing capacity. Kafka Streams, AWS Glue, Snowflake, and Hadoop MapReduce all have parallel processing capabilities.

- Split monolith pipelines into a series of ETL tasks. This strategy improves parallelization and allows us to identify and fix bottlenecks quickly.

- Use efficient formats. Determine which formats data pipelines use for each data application. Parquet and other columnar formats are common for analytical queries, while row-oriented formats like Avro or CSV are a better fit for record-by-record workloads such as transactional data.

- Use in-memory processing. Identify pipeline components where in-memory processing is applicable to reduce the time needed to run disk I/O operations and boost processing speed. The tools often used for in-memory processing are Redis, Presto (for interactive in-memory queries), PySpark (supports in-memory caching), and TensorFlow (for in-memory data pipelines in ML workflows).
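As a small-scale illustration of the parallel processing and task-splitting practices above, the sketch below splits a dataset into chunks and processes them concurrently with Python's standard `concurrent.futures` module. The chunk size, worker count, and the doubling transformation are placeholders. A thread pool is used here for simplicity; for CPU-bound work in CPython, a process pool or a distributed engine such as Spark is usually the better choice.

```python
from concurrent.futures import ThreadPoolExecutor

def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]

def transform_chunk(chunk):
    """Placeholder transformation applied to one chunk."""
    return [value * 2 for value in chunk]

def run_parallel(items, chunk_size=3, workers=4):
    """Process chunks concurrently and merge the results in input order."""
    chunks = chunked(items, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(transform_chunk, chunks)  # map preserves chunk order
        return [value for chunk in results for value in chunk]

print(run_parallel(list(range(7))))
```

Because each chunk is processed independently, a slow or failing chunk can be identified and retried without rerunning the whole job.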

Data quality optimization best practices

While awareness of the need for data cleansing and filtering is generally growing, there’s little focus on the fact that, in any pipeline, data quality deteriorates over time. That is why, aside from ensuring that no inaccurate, outdated, or incomplete data is stored in a data lake or warehouse, teams should focus on validating, standardizing, and monitoring data quality at all times.

The following workflows are generally helpful in maintaining high data quality over the entire lifecycle.

- Setting validation rules. Run automated data quality checks with Deequ, Monte Carlo, Informatica Data Quality, or other tools to reduce the risk of human errors and monitor pipelines 24/7.

- Data standardization. Present data from disparate sources in a standardized format to prevent misinterpretation. AWS Glue, dbt, Microsoft SQL Server Integration Services (SSIS), and Apache Spark are go-to standardization tools for many engineering teams.

- Building metadata-based pipelines. This design allows teams to specify data ingestion and transformation requirements as configuration rather than hard-coding them in each pipeline.

- Regular stress testing. Test pipeline performance under high data loads and simulate component failure with tools like AWS Fault Injection Service to confirm that the pipeline recovers from malfunctions.

- Continuous monitoring. Use Prometheus, Grafana, or other tools to set up around-the-clock tracking and reporting, and be confident that only accurate data enters the pipeline.

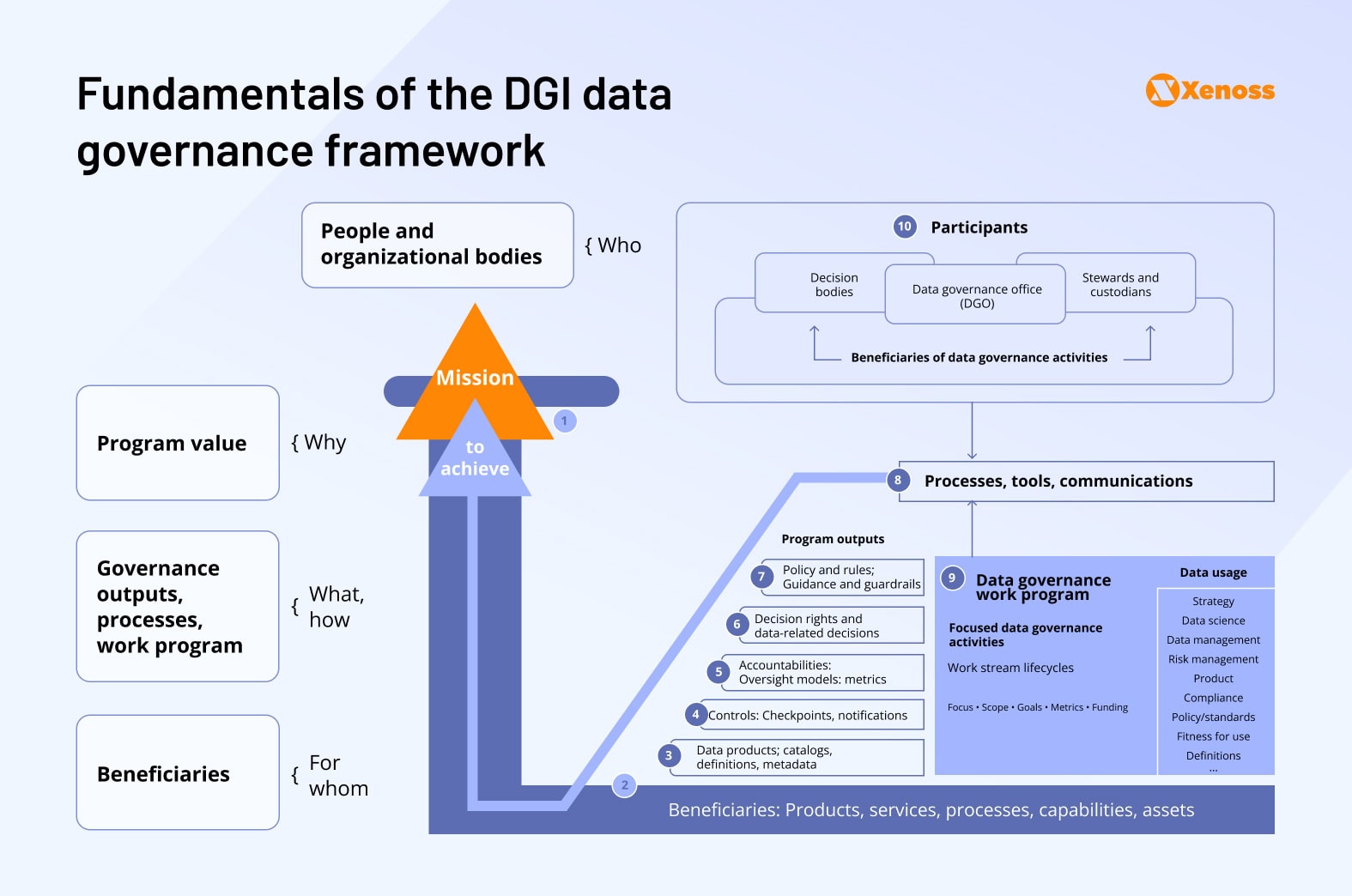

- Building a data governance framework. Standardize data governance by developing an internal governance system.

The Data Governance Institute (DGI) framework, summarized in the accompanying graph, is a solid blueprint for designing a data governance program. It identifies 10 foundational components: mission, beneficiaries, products, controls, accountability watchers, decision rights, policies, tools, workstreams, and participants. Adopting this framework allows data engineering teams to cover their bases and make sure no component of their data strategy is left without oversight.
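Of the workflows listed in this section, metadata-based pipelines are perhaps the easiest to misread, so here is a minimal sketch of the idea. The ingestion and transformation rules live in a metadata entry, and one generic function applies them. The metadata keys (`rename`, `casts`, `required`) and the `apply_metadata` helper are hypothetical.

```python
# Hypothetical metadata: one entry describes how a source should be handled.
ORDERS_META = {
    "source": "orders",
    "rename": {"amt": "amount"},   # source column -> target column
    "casts": {"amount": float},    # target column -> type
    "required": ["order_id"],      # rows without these fields are skipped
}

def apply_metadata(rows, meta):
    """Rename, filter, and cast rows according to a metadata entry."""
    out = []
    for row in rows:
        row = {meta["rename"].get(k, k): v for k, v in row.items()}
        if any(row.get(f) in (None, "") for f in meta["required"]):
            continue  # skip rows that miss a required field
        for column, cast in meta["casts"].items():
            row[column] = cast(row[column])
        out.append(row)
    return out

raw_orders = [{"order_id": "1", "amt": "9.5"}, {"order_id": "", "amt": "3"}]
print(apply_metadata(raw_orders, ORDERS_META))
```

Onboarding a new source then means adding a metadata entry rather than writing a new pipeline.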

Data security best practices

Depending on the types of data a data product is managing, the stringency of security policies will vary.

The implementations below are a good starting point for reducing the impact of potential breaches.

- Use a secrets manager like AWS Secrets Manager, Azure Key Vault, or Google Secret Manager to save and access credentials and keep secrets rotated for tighter security.

- Ensuring reproducibility. Build reproducible pipelines to support disaster recovery and prevent data duplication when replaying the pipeline. Apache Airflow, dbt, and Git all have the toolset for that.

- Enforcing organization-wide security rules (e.g., preventing PII and other sensitive information from entering the pipeline).
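A rule such as "no PII enters the pipeline" is easier to enforce when it is expressed as a check at the ingestion boundary. The sketch below flags and redacts fields that look like email addresses or US Social Security numbers. The two regular expressions are deliberately simple assumptions and will miss many real-world formats; production systems typically rely on dedicated PII detection tooling.

```python
import re

# Simplified, illustrative patterns; not an exhaustive PII definition.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def find_pii(record):
    """Return sorted names of fields whose string values match a PII pattern."""
    flagged = []
    for field, value in record.items():
        if isinstance(value, str) and (EMAIL.search(value) or US_SSN.search(value)):
            flagged.append(field)
    return sorted(flagged)

def redact(record):
    """Return a copy of the record with flagged fields replaced by a marker."""
    clean = dict(record)
    for field in find_pii(record):
        clean[field] = "[REDACTED]"
    return clean

record = {"note": "contact ann@example.com", "amount": 10, "ref": "A-1"}
print(find_pii(record))
print(redact(record))
```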

Bottom line

A thoughtful approach to data pipeline design helps data engineering teams save hundreds of dollars down the line and scale the pipeline without major restructuring or downtime.

Intelligent data pipeline management essentially boils down to understanding its moving parts: types of data the pipeline is handling, use cases organizations plan to support, and tools the engineering team uses to build the pipeline.

Understanding how to choose between batch and streaming processing, where to switch from on-demand to spot nodes, and how to manage metadata and track data lineage helps teams process more data without putting in extra time and resources.

Experienced data teams know how to make intelligent decisions across all areas of pipeline design to optimize performance, data quality, and maintenance cost. To get a free data pipeline assessment from seasoned data engineers and draw up a roadmap for cost, performance, and data quality optimization, contact Xenoss.