In 2023, Jordan Tigani, a founding engineer of Google BigQuery, claimed Big Data could be dead by 2025. At Xenoss, we see it differently, although Tigani’s statement is neither outdated nor surprising. His provocative article drew significant attention in the tech community, and for good reason: his experience building BigQuery gives him valuable insight. His core argument highlights an important reality: most organizations can manage their data effectively with traditional analytics methods. For roughly 99% of companies, basic aggregation queries, curves, histograms, and straightforward statistical analysis are sufficient, provided their data engineering is properly implemented. Only about 1% of data projects truly require advanced machine learning solutions.

However, as AdTech specialists, we operate in that crucial 1%, where hyperscale, high-dimensional, complex, always-on data powers innovation. We know firsthand that Big Data is far from dead. Tigani’s point about past practices such as rollups, summarizations, downsampling, and other tactics to manage scale is valid, but new AI and ML advancements are changing the game. These tools make it possible to process data in its full richness, unlocking Big Data’s true potential. In industries like AdTech, built entirely on data, one thing is clear: Big Data isn’t dead; it’s evolving.

The term “Big Data” has been around for over two decades, but its challenges have shifted. Early technologies no longer meet the demands of today’s enterprise data environments. Advanced solutions are now essential to process and analyze the vast volumes of data businesses generate. Let’s explore the latest Big Data trends and the cutting-edge technologies driving this evolution across industries.

Hyperscale Big Data redefining AI and ML

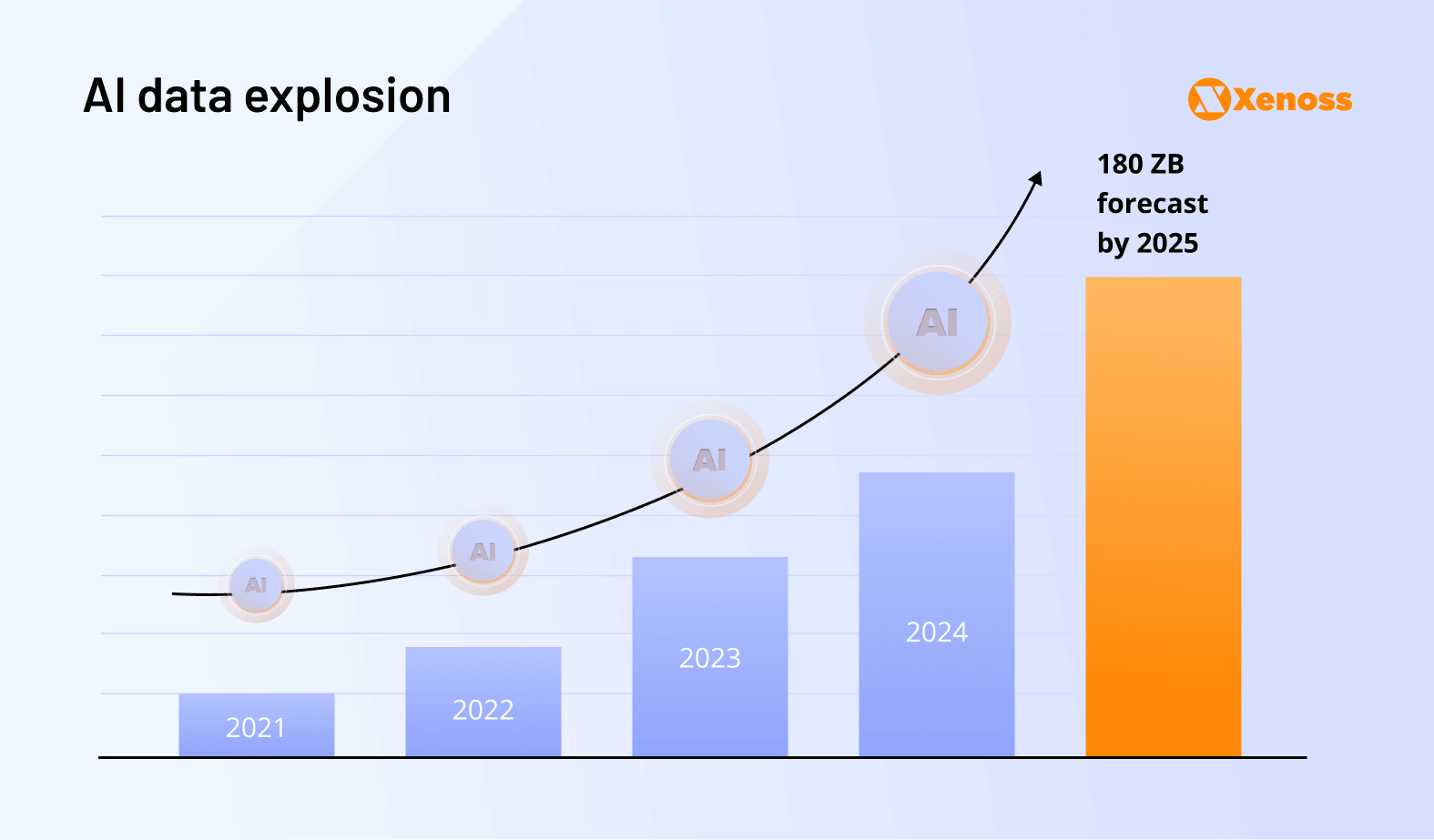

As we move into 2025, big data continues to evolve at an unprecedented scale, fueled by the proliferation of devices, media sources, and AI advancements. Terabytes have given way to petabytes and beyond, and traditional systems are no longer sufficient to handle this deluge of information. Hyperscale data analysis engines, once considered niche, are becoming critical enablers of the next generation of big data applications.

The challenge for the future isn’t just the volume of data but the speed at which insights must be derived. Advances in NVMe SSDs, multi-core CPUs, and high-speed networking are redefining the benchmarks for data processing. These are not just hardware upgrades but foundational shifts that make it possible to convert terabytes of semi-structured data into usable formats in near real time.
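To make “converting semi-structured data into usable formats” concrete, here is a minimal Python sketch that flattens nested JSON Lines records into a column-oriented layout, the shape analytical engines scan most efficiently. The function names, the dotted column-naming convention, and the sample events are illustrative assumptions; a production system would write a columnar format such as Apache Arrow or Parquet rather than Python lists.

```python
import json
from typing import Any


def flatten(record: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten nested objects into dotted column names."""
    flat: dict[str, Any] = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            # Recurse so {"geo": {"country": "US"}} becomes {"geo.country": "US"}.
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def to_columns(json_lines: list[str]) -> dict[str, list[Any]]:
    """Turn JSON Lines text into one list per column; absent fields become None."""
    rows = [flatten(json.loads(line)) for line in json_lines if line.strip()]
    all_keys = sorted({key for row in rows for key in row})
    return {key: [row.get(key) for row in rows] for key in all_keys}


# Hypothetical ad events with an inconsistent schema.
events = [
    '{"user_id": 1, "event": "click", "geo": {"country": "US", "city": "Austin"}}',
    '{"user_id": 2, "event": "view"}',
]
columns = to_columns(events)
print(columns["geo.country"])  # ['US', None]
```

Filling absent fields with `None` keeps every column the same length, which is what lets a columnar engine scan a single field without touching the rest of the record.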

With hyperscale solutions, AI and ML applications are poised to become more precise and prescriptive. The ability to incorporate enriched datasets—combining historical trends with real-time streams—means models aren’t just making predictions but offering actionable strategies tailored to highly specific scenarios.

The real opportunity lies in the questions that can now be asked. Instead of relying on predefined correlations, the scale and granularity of data available in 2025 open the door to discovering entirely new patterns and relationships. This is particularly critical for industries like AdTech, FinTech, and Healthcare, where hyper-personalization and predictive analytics are game-changers.

Zero-party data scaling

Zero-party data—information customers intentionally share—has become a cornerstone for personalization across sectors like Media, Retail, Healthcare, and Finance. In Media, it helps tailor content recommendations and optimize ad targeting. In Retail, search queries, user-generated content, and chatbot interactions reveal purchasing intentions and help refine product offerings. In Healthcare, patient interactions with digital platforms provide insights for personalized care plans, while in Finance, user preferences and feedback improve service delivery and build trust.

AI amplifies the value of zero-party data by transforming unstructured inputs into actionable insights. Conversations with AI chatbots, social media activity, and support interactions are now easier to analyze using advanced tools like large language models (LLMs). These tools help businesses uncover trends, optimize processes, and make data-driven decisions. Combining zero-party data with structured datasets enables hyper-personalized experiences, precise predictions, and improved operational efficiency.
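As a toy illustration of combining zero-party data with structured data, the sketch below scores products using both what a customer declared (zero-party) and what they bought (first-party purchase history). All data, the `stated_weight` bonus, and the scoring rule are hypothetical; real personalization systems would typically learn such weights rather than fix them by hand.

```python
from collections import Counter

# Hypothetical zero-party data: interests each customer explicitly declared.
declared_interests = {"u1": {"running", "outdoor"}, "u2": {"cooking"}}
# Hypothetical structured first-party data: categories of past purchases.
purchase_history = {"u1": ["running", "running", "books"], "u2": ["books"]}
# Hypothetical catalog mapping products to categories.
catalog = {"trail shoes": "running", "tent": "outdoor", "cookbook": "cooking", "novel": "books"}


def rank_products(user: str, stated_weight: float = 2.0) -> list[tuple[str, float]]:
    """Score each product by purchase frequency plus a bonus for declared interests."""
    bought = Counter(purchase_history.get(user, []))
    stated = declared_interests.get(user, set())
    scores = {
        product: bought[category] + (stated_weight if category in stated else 0.0)
        for product, category in catalog.items()
    }
    # Highest score first; ties keep catalog order because sorted() is stable.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


print(rank_products("u2")[0])  # ('cookbook', 2.0)
```

For `u1`, the tent ranks second even though that customer never bought outdoor gear; only the declared interest surfaces it, which is exactly the signal behavioral data alone would miss.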

Scalable, future-ready data platforms are key to unlocking this potential. Organizations should start by focusing on high-impact areas, such as understanding customer intent or refining product recommendations, and gradually expand their strategies.

Adopting unified batch and streaming architectures

As businesses push for faster, data-driven decisions, traditional systems are struggling to keep up. The answer lies in hybrid data pipelines, merging real-time and batch processing to deliver both speed and depth. Real-time processing captures fast-moving data for instant actions, while batch processing tackles larger datasets to ensure accuracy and uncover long-term trends. Together, they meet the growing demand for both agility and reliability.

This approach powers a wide range of use cases:

- IoT data processing: Managing streams from IoT devices, like smart sensors, to detect and act on events as they happen.

- Social media analytics: Tracking sentiment, identifying trending topics, and analyzing user engagement in real time.

- Financial analytics: Monitoring live market data for rapid decisions while running historical analyses for risk management.

- Log and event processing: Analyzing server logs and application events to identify issues and trends before they escalate.

- Recommendation systems: Delivering personalized suggestions by combining real-time behavior with historical preferences.

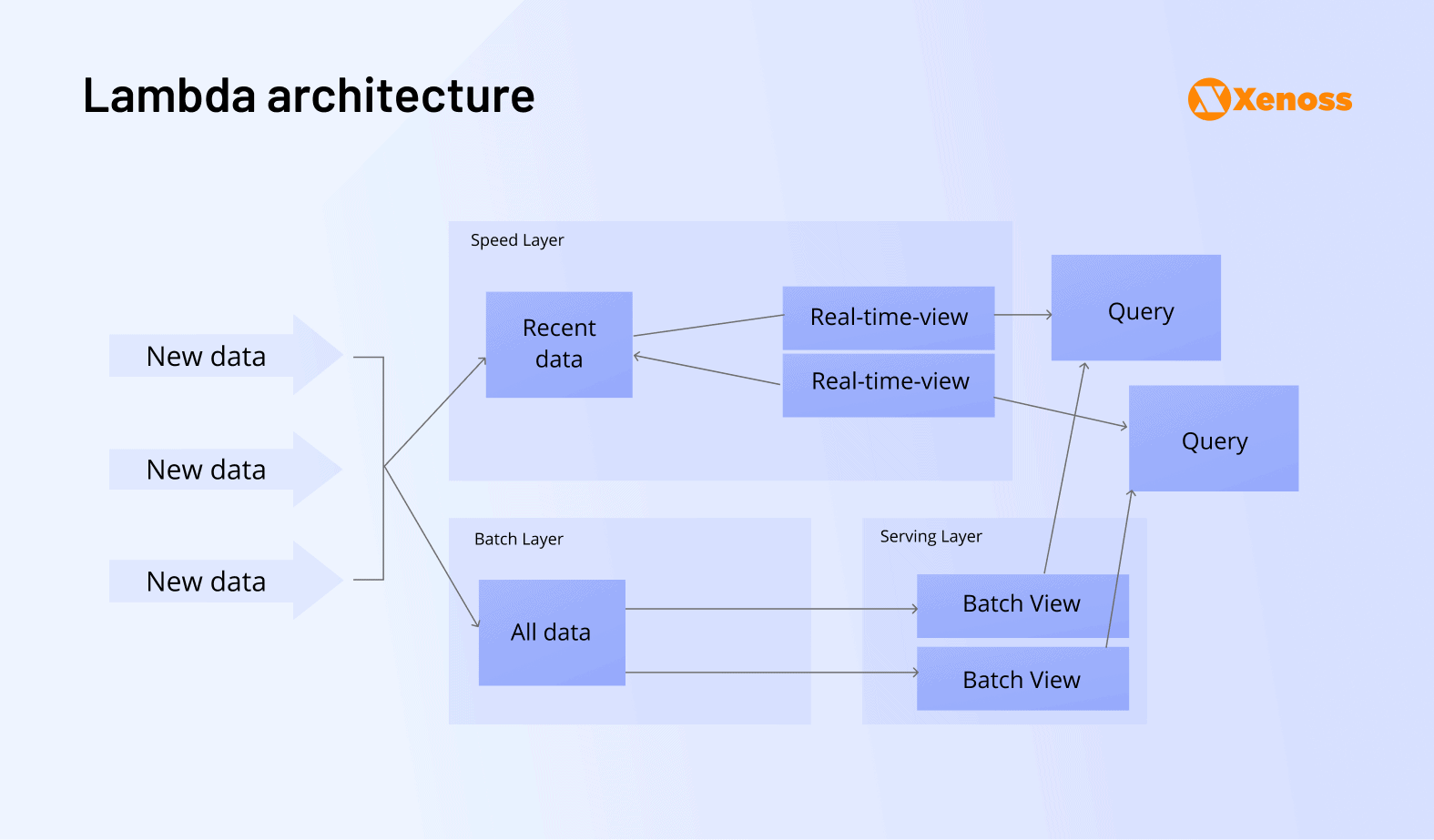

Architectural patterns like the Lambda architecture are leading the way. Lambda splits the workload: a real-time (speed) layer handles streaming data for quick insights, while a batch layer digs into historical data to uncover trends and ensure precision. A serving layer merges both outputs into a unified view, making it easier for businesses to act on fresh, reliable data.
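The three Lambda layers can be sketched in a few lines of Python. This is a minimal in-memory illustration, assuming a simple per-key sum (for example, impressions per campaign) as the metric; the class and function names are hypothetical. In practice the batch layer would run on a distributed engine and the speed layer on a stream processor.

```python
from collections import defaultdict
from typing import Iterable


def batch_view(historical_events: Iterable[tuple[str, int]]) -> dict[str, int]:
    """Batch layer: recompute complete, accurate totals from the full historical dataset."""
    totals: dict[str, int] = defaultdict(int)
    for key, value in historical_events:
        totals[key] += value
    return dict(totals)


class SpeedLayer:
    """Speed layer: incrementally aggregates only events that arrived after the last batch run."""

    def __init__(self) -> None:
        self.recent: dict[str, int] = defaultdict(int)

    def ingest(self, key: str, value: int) -> None:
        self.recent[key] += value

    def reset(self) -> None:
        # Called once a fresh batch view has absorbed these events.
        self.recent.clear()


def serve(key: str, batch: dict[str, int], speed: SpeedLayer) -> int:
    """Serving layer: merge the precomputed batch view with fresh real-time increments."""
    return batch.get(key, 0) + speed.recent.get(key, 0)


batch = batch_view([("campaign_a", 100), ("campaign_b", 40), ("campaign_a", 20)])
speed = SpeedLayer()
speed.ingest("campaign_a", 5)  # arrived after the last batch run
print(serve("campaign_a", batch, speed))  # 125
```

The extra management effort mentioned below shows up here as two code paths computing the same metric, plus the `reset` handoff that must happen exactly when a new batch view goes live.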

While this setup demands more effort to manage, the payoff is clear. Industries like finance, retail, and healthcare are already seeing the value. Real-time insights, backed by robust batch analysis, give them a sharper edge in fast-paced markets. Hybrid pipelines are becoming the blueprint for a future where businesses can act instantly without sacrificing accuracy.

Streamlining data transformation and quality processes

As data volumes grow, traditional ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) pipelines are showing their limits. These processes often create bottlenecks that slow down analytics and leave business intelligence reports out of date. To keep up with real-time demands, companies are rethinking how they handle data transformation and quality management.

The focus is shifting toward advanced ELT pipelines, which optimize workflows by reducing unnecessary steps and enabling faster processing. Techniques like caching intermediate data and filtering irrelevant datasets early in the pipeline are helping to streamline operations.
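The two techniques named above, filtering irrelevant data early and caching intermediate results, can be sketched with Python generators and `functools.lru_cache`. The event schema, the `region_for` lookup table, and the call counter are hypothetical stand-ins for an expensive enrichment step.

```python
from functools import lru_cache
from typing import Iterable, Iterator

lookup_calls = 0  # counts how often the (hypothetical) expensive lookup really runs


@lru_cache(maxsize=10_000)
def region_for(country: str) -> str:
    """Stand-in for a slow reference-data lookup; lru_cache reuses earlier answers."""
    global lookup_calls
    lookup_calls += 1
    return {"US": "NA", "CA": "NA", "DE": "EU"}.get(country, "OTHER")


def transform(events: Iterable[dict], wanted_type: str = "impression") -> Iterator[dict]:
    # Filter early: discard irrelevant rows before any costly enrichment runs.
    relevant = (e for e in events if e["type"] == wanted_type)
    # Enrich only the surviving rows, hitting the cache for repeated keys.
    for event in relevant:
        yield {**event, "region": region_for(event["country"])}


raw = [
    {"type": "impression", "country": "US"},
    {"type": "debug", "country": "FR"},
    {"type": "impression", "country": "DE"},
    {"type": "impression", "country": "US"},
]
enriched = list(transform(raw))
print(len(enriched), lookup_calls)  # 3 2
```

The debug row is dropped before enrichment, so its lookup never runs, and the second `US` row is served from the cache: four input rows cost only two expensive lookups.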

Automation and AI are also transforming big data pipelines. AI-driven tools now handle tasks like data validation and compliance checks, reducing manual effort and ensuring data meets privacy and governance standards. Meanwhile, automated quality checks and anomaly detection are becoming standard, keeping data accurate and reliable throughout the process.
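A minimal sketch of automated quality checks: rule-based validation per record, plus a z-score detector for anomalous values in a batch. The rules and threshold are hypothetical, and the z-score test is a deliberately simple stand-in for the AI-driven detectors described above, not a description of any particular tool.

```python
import statistics
from typing import Any, Callable

# Hypothetical validation rules: field name -> predicate the value must satisfy.
RULES: dict[str, Callable[[Any], bool]] = {
    "user_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and "@" in v,
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
}


def failed_fields(record: dict[str, Any]) -> list[str]:
    """Return fields that are missing or violate their rule."""
    return [f for f, rule in RULES.items() if f not in record or not rule(record[f])]


def zscore_outliers(values: list[float], threshold: float = 3.0) -> list[int]:
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]


print(failed_fields({"user_id": -1, "email": "no-at-sign"}))  # ['user_id', 'email', 'amount']
print(zscore_outliers([100.0] * 19 + [500.0]))  # [19]
```

One caveat on the design: with a single extreme value among n points, its z-score can never exceed √(n−1), so a threshold of 3 cannot fire on batches of 10 or fewer values. Small batches need a lower threshold or a more robust method, such as one based on the median.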

These innovations not only speed up analytics but also enable businesses to act on fresher, more accurate insights. In industries where timing is critical, such as finance and retail, these advancements are proving essential for maintaining a competitive edge. The era of “no-fuss” data transformation is here, and it’s redefining how companies extract value from their data.

Breaking down data silos with orchestration tools

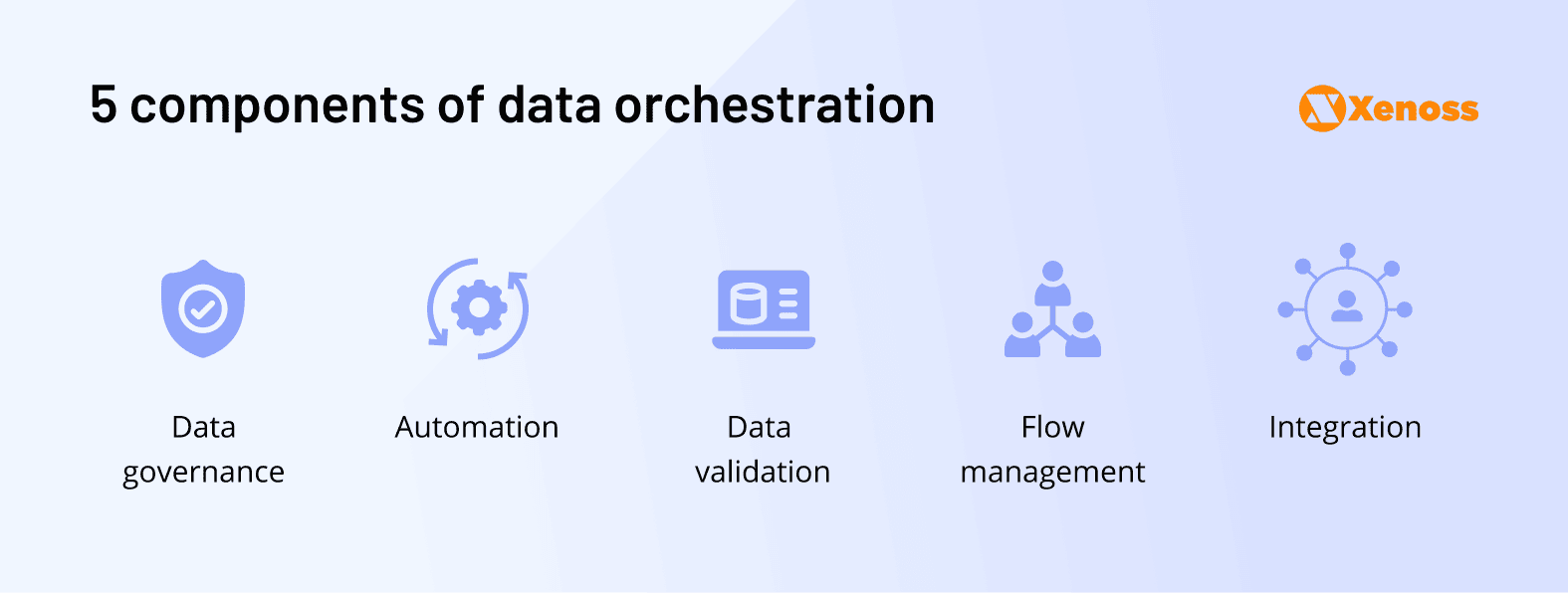

Breaking down data silos is no longer just about integrating systems; it’s about enabling seamless workflows and empowering teams to act on insights without delays. Modern orchestration focuses on five key components: data governance, automation, data validation, flow management, and integration. Together, they keep data moving smoothly between systems while maintaining accuracy and quality, which is critical for real-time insights and self-service data access.
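The core of orchestration, which means running tasks in dependency order with a validation gate and automatic retries, can be sketched with Python’s standard-library `graphlib`. This is not the Apache Airflow or Dagster API. The task names, the success-as-boolean convention, and the retry policy are all hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical pipeline: each task lists the upstream tasks it depends on.
DEPENDENCIES: dict[str, set[str]] = {
    "extract_crm": set(),
    "extract_ads": set(),
    "validate": {"extract_crm", "extract_ads"},  # validation gate before integration
    "join": {"validate"},
    "publish_dashboard": {"join"},
}


def run_pipeline(tasks: dict[str, Callable[[], bool]], max_retries: int = 2) -> list[str]:
    """Run tasks in dependency order, retrying failures and halting if one never succeeds."""
    completed: list[str] = []
    for name in TopologicalSorter(DEPENDENCIES).static_order():
        for _ in range(max_retries + 1):
            if tasks[name]():  # a task returns True on success
                completed.append(name)
                break
        else:  # loop finished without break: every attempt failed
            raise RuntimeError(f"{name!r} failed after {max_retries + 1} attempts")
    return completed


attempts = {"extract_ads": 0}


def flaky_extract_ads() -> bool:
    """Fails on its first call to simulate a transient source outage."""
    attempts["extract_ads"] += 1
    return attempts["extract_ads"] > 1


tasks = {name: (lambda: True) for name in DEPENDENCIES}
tasks["extract_ads"] = flaky_extract_ads
order = run_pipeline(tasks)
print(order[-1])  # publish_dashboard
```

The transient failure is absorbed by a retry, and nothing downstream of `validate` can run before both sources have loaded. Dedicated orchestrators add scheduling, parallel execution, and observability on top of this same dependency-graph idea.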

The rise of self-service BI platforms aligns with this shift. These platforms now offer intuitive interfaces and simplified workflows that let non-technical users explore and analyze data independently. This democratization of data accelerates decision-making and reduces the bottlenecks caused by reliance on IT teams.

AI-driven orchestration is another game-changer. These tools handle error detection, streamline transformations, and improve data quality, reducing the complexity of managing diverse sources. Meanwhile, open-source solutions like Apache Airflow and Dagster are making advanced orchestration accessible to businesses of all sizes.

Enhancing concurrency management for scalability

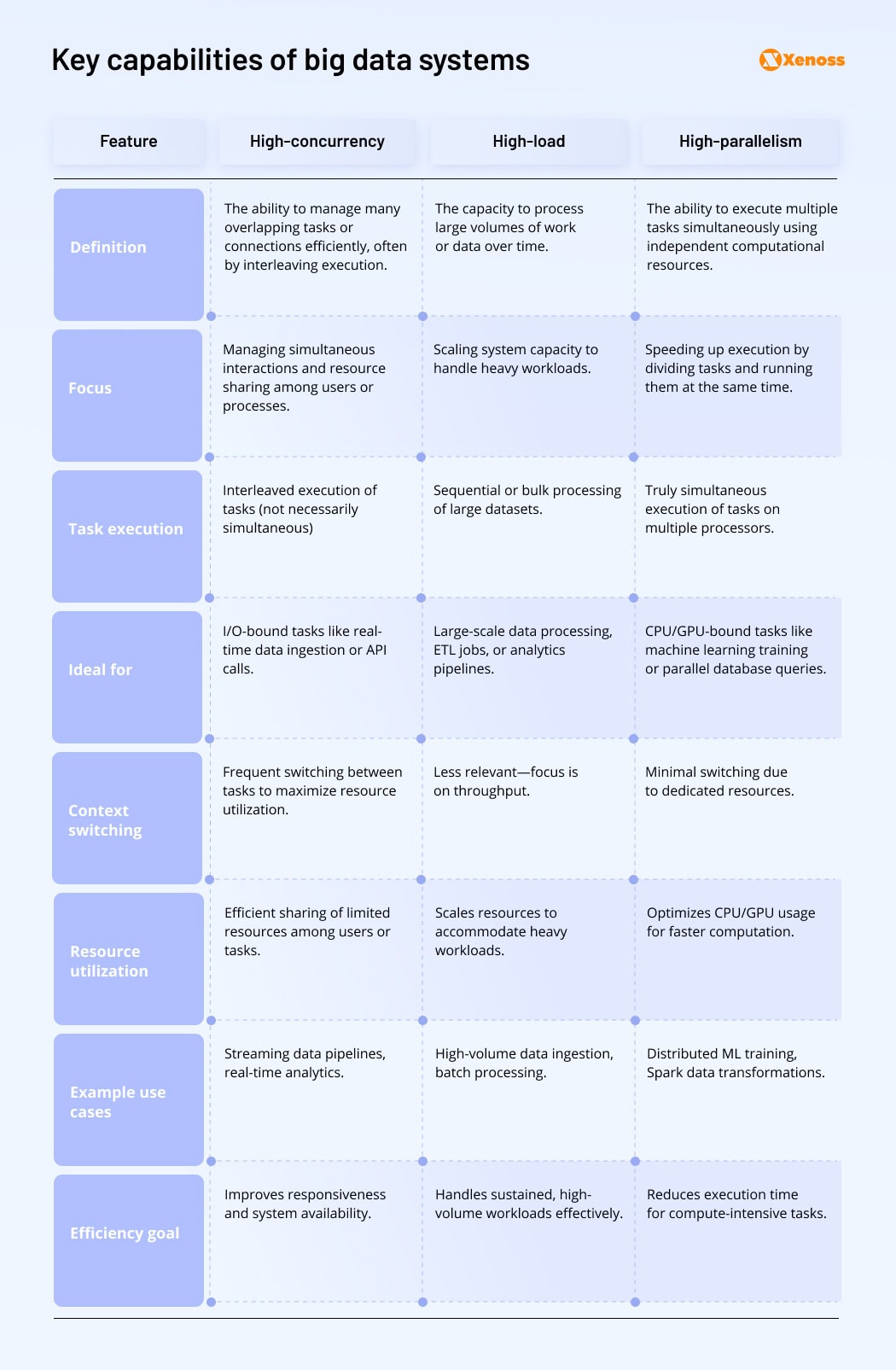

As data systems evolve, handling high concurrency, heavy loads, and parallel processing has become a top priority. High concurrency lets systems serve hundreds of simultaneous user queries without slowing down, a necessity for real-time analytics and responsiveness. Heavy-load capacity lets them process vast data volumes efficiently, so large-scale ETL jobs and batch processes don’t overwhelm the infrastructure. Parallelism optimizes execution by dividing tasks across multiple computational resources, significantly reducing processing time for machine learning and data transformation workloads.
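One common way to serve many simultaneous queries without overloading a backend is admission control: accept every request, but cap how many execute at once. The sketch below uses `asyncio.Semaphore` for this. The query function, its 10 ms simulated latency, and the limit of 16 are hypothetical placeholders.

```python
import asyncio


async def run_query(query_id: int, limiter: asyncio.Semaphore, stats: dict[str, int]) -> int:
    """Simulated query: the semaphore caps how many run at once so the backend is not overloaded."""
    async with limiter:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.01)  # stand-in for I/O-bound query execution
        stats["active"] -= 1
    return query_id * 2  # placeholder result


async def serve_many(n_queries: int, max_concurrent: int) -> tuple[list[int], int]:
    """Submit all queries at once and return their results plus the peak concurrency observed."""
    limiter = asyncio.Semaphore(max_concurrent)
    stats = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(run_query(i, limiter, stats) for i in range(n_queries)))
    return list(results), stats["peak"]


results, peak = asyncio.run(serve_many(n_queries=200, max_concurrent=16))
print(len(results), peak)
```

This pattern fits I/O-bound queries. For CPU-bound work such as feature transformations, parallelism across cores typically comes from processes instead, for example `concurrent.futures.ProcessPoolExecutor`.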

Together, these capabilities are reshaping data environments, ensuring systems remain scalable, responsive, and efficient under increasing demands.

Big Data → Big Ops

The 2020s mark the shift from Big Data, which focused on collecting and storing vast amounts of information, to Big Ops, which emphasizes orchestrating the growing complexity of data-driven operations. Big Ops goes beyond managing data to seamlessly integrating automated processes, apps, and workflows that interact with data in real time.

This transition is driven by the need for organizations to not only collect data but activate it effectively across marketing, sales, service, and operational layers. In a world where 44% of enterprise data remains uncaptured and 43% of captured data goes unused, Big Ops focuses on bringing “dark data” to light and ensuring its value is realized through optimized operations.
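Read together, and assuming both percentages describe the same body of enterprise data, the two figures imply that only about a third of it is both captured and used:

$$
\underbrace{(1 - 0.44)}_{\text{captured}} \times \underbrace{(1 - 0.43)}_{\text{used, if captured}} = 0.56 \times 0.57 \approx 0.32
$$

That leaves roughly 68% of enterprise data either uncaptured or dark, which is the gap Big Ops aims to close.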

The defining measure of success in this new era is orchestration: how effectively companies align their digital operations, from automated tools to human workflows, to deliver consistent, data-informed outcomes. Big Ops transforms static data management into a dynamic, interconnected system that powers agility and competitive advantage in an increasingly complex digital landscape.

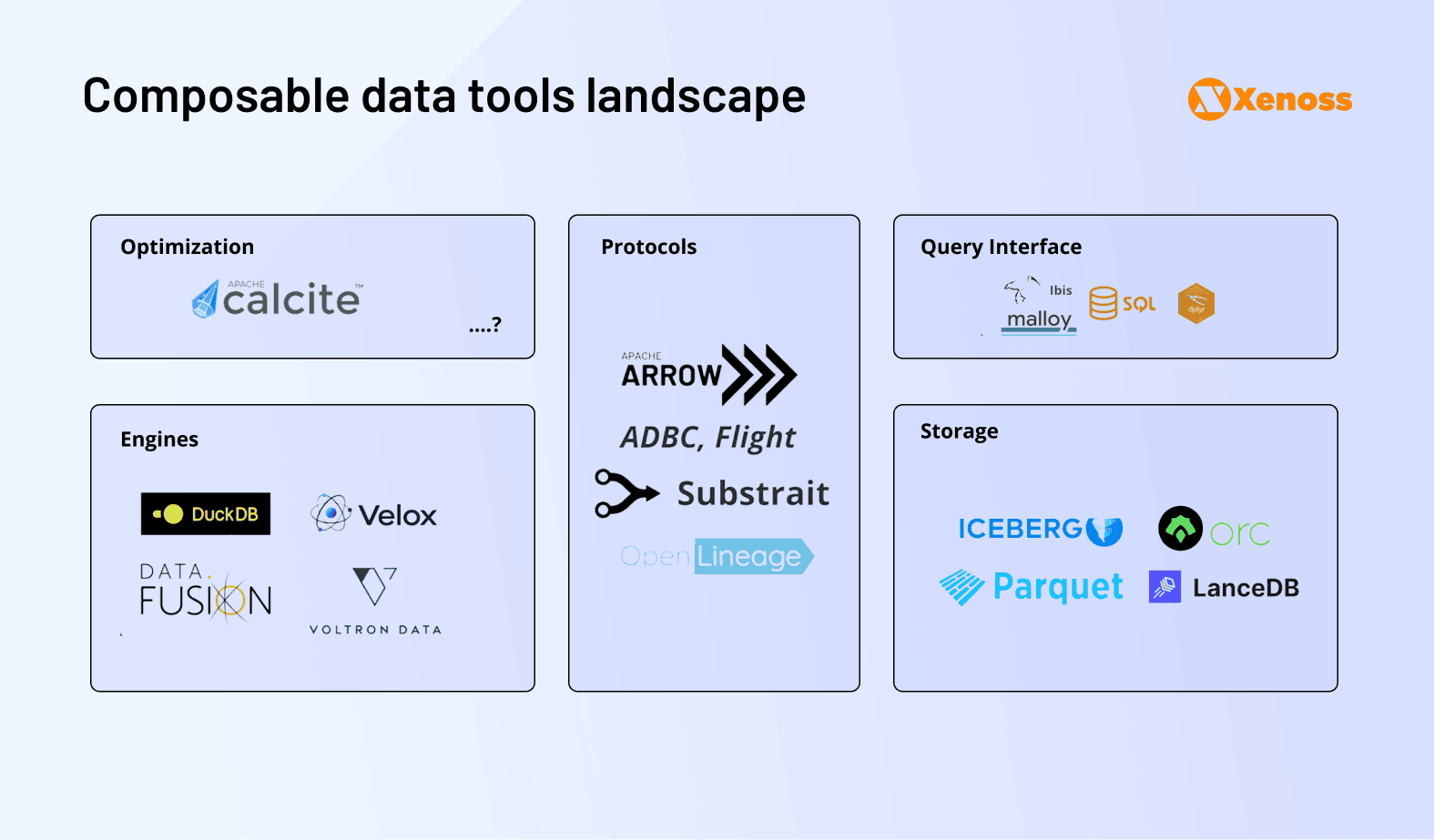

Composable data stacks for Big Data

Composable data stacks are gaining traction as open standards like Apache Arrow, Substrait, and open table formats win widespread support. These modular systems enable integration across engines, languages, and tools, allowing organizations to store data once and choose the most suitable engine for each workload. Lightweight engines like DuckDB handle datasets under 1TB, Spark or Ray manage mid-sized data, and hardware-accelerated engines take on larger volumes.
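The “store once, pick the engine per workload” idea can be sketched as a routing function. Only the roughly 1TB DuckDB threshold comes from the text above. The 100TB upper bound, the table URI, and the engine labels are hypothetical, and the function returns a label rather than calling any engine’s API.

```python
from dataclasses import dataclass

TB = 10**12  # decimal terabyte, matching the "under 1TB" rule of thumb

# Only the ~1 TB DuckDB cut-off comes from the text; the 100 TB bound is a hypothetical choice.
SINGLE_NODE_LIMIT = 1 * TB
DISTRIBUTED_LIMIT = 100 * TB


@dataclass(frozen=True)
class Workload:
    name: str
    table_uri: str  # one shared open-format table, read by whichever engine is chosen
    size_bytes: int


def choose_engine(workload: Workload) -> str:
    """Route a workload to an engine tier by data size; storage never moves."""
    if workload.size_bytes < SINGLE_NODE_LIMIT:
        return "duckdb"
    if workload.size_bytes < DISTRIBUTED_LIMIT:
        return "spark_or_ray"
    return "hardware_accelerated"


table = "s3://example-lake/ad_events"  # hypothetical table location
print(choose_engine(Workload("daily_report", table, 200 * 10**9)))  # duckdb
print(choose_engine(Workload("quarterly_model", table, 5 * TB)))  # spark_or_ray
```

Both workloads point at the same `table_uri`. Because the data sits in an open format, switching engines changes only where compute runs, not where or how the data is stored, which is what sidesteps vendor lock-in.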

This shift addresses challenges like data silos and vendor lock-in, providing modularity, hardware adaptability, and simpler management. It empowers teams to streamline workflows, reduce costs, and deliver consistent user experiences across diverse workloads.

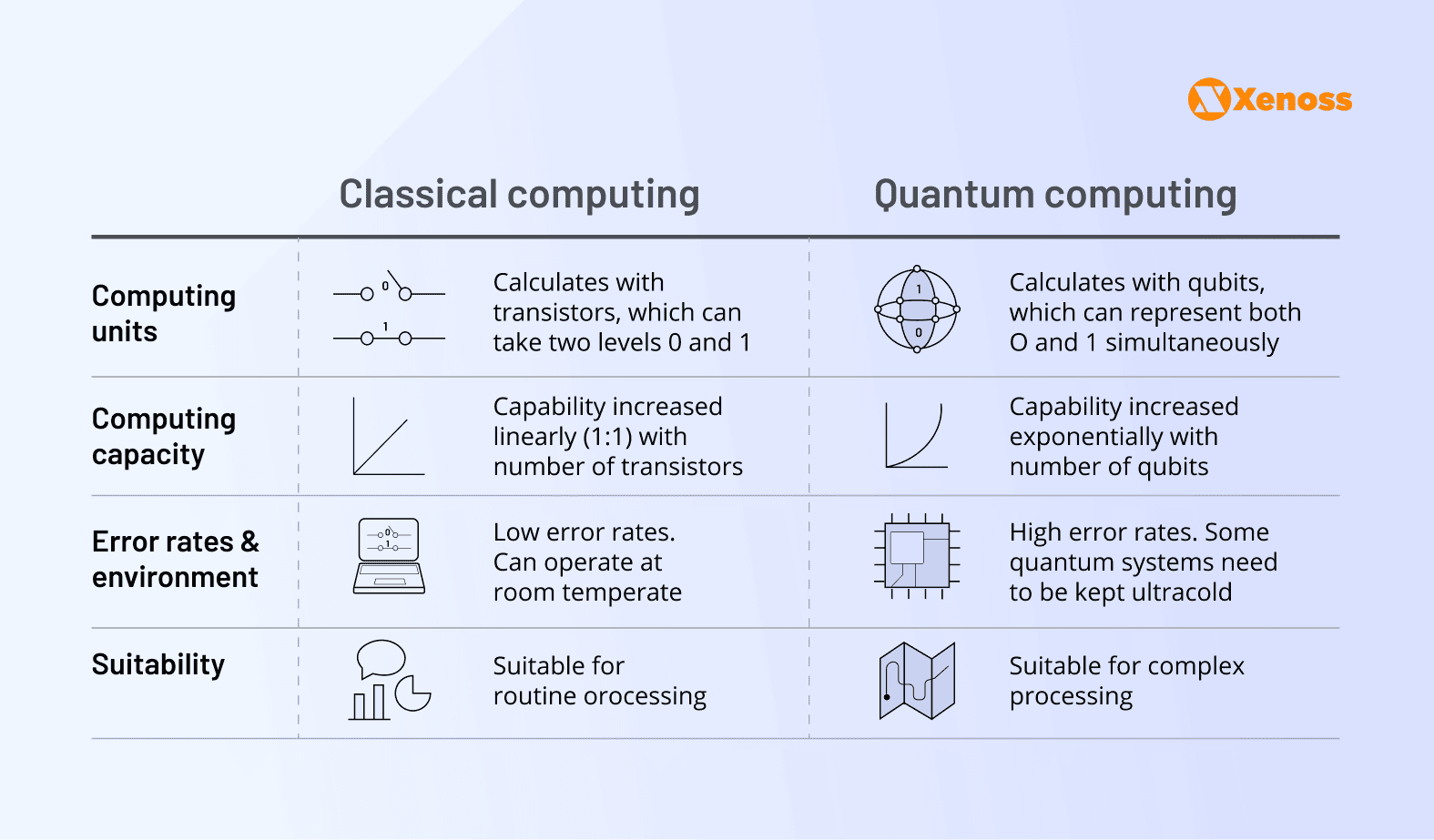

Quantum computing for Big Data

Quantum computing promises to reshape Big Data by tackling problems that are intractable for classical systems. By leveraging quantum bits (qubits) and quantum parallelism, quantum computers could dramatically speed up specific classes of problems, making complex optimization and predictive modeling more efficient.

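For Big Data, this could mean breakthroughs in optimization, data clustering, and machine learning. Quantum algorithms such as Grover’s search, which offers a quadratic speedup for unstructured search, and Shor’s factorization, which offers a superpolynomial speedup over the best known classical methods for factoring large integers, show the theoretical potential, although today’s hardware cannot yet run them at a practically useful scale. Industries like finance, healthcare, and logistics are at the forefront of exploring quantum-powered insights.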
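To make Grover’s quadratic speedup concrete, here is a small classical state-vector simulation in plain Python. It illustrates the algorithm’s math, not quantum hardware. Simulating N amplitudes classically costs O(N) work per step, so the real speedup only materializes on an actual quantum device. The function name and the choice of N = 64 are illustrative.

```python
import math


def grover_success_probability(n_items: int, marked: int) -> tuple[int, float]:
    """Classically simulate Grover's search over n_items and return (iterations, P(marked))."""
    # Start in the uniform superposition: every item has amplitude 1/sqrt(N).
    amps = [1 / math.sqrt(n_items)] * n_items
    # The near-optimal iteration count is about (pi/4) * sqrt(N).
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    # Measurement probability is the squared amplitude.
    return iterations, amps[marked] ** 2


iterations, p = grover_success_probability(n_items=64, marked=42)
print(iterations, round(p, 3))  # 6 0.997
```

With 64 items, six oracle calls find the marked item with about 99.7% probability, whereas a classical scan needs roughly N/2 = 32 lookups on average. The gap widens with scale: for N = 10¹², Grover needs about 785,000 iterations versus about 5 × 10¹¹ classical lookups.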

In finance, companies like Wells Fargo are using quantum computing in collaboration with IBM to improve machine learning models for financial analysis and risk management. Similarly, Crédit Agricole CIB is leveraging quantum-inspired computing for the valuation of derivatives and credit risk anticipation.

In healthcare, organizations like Cleveland Clinic are leveraging the IBM Quantum System One, the first quantum computer dedicated to healthcare research, to accelerate biomedical discoveries and improve patient outcomes. Boehringer Ingelheim is exploring quantum computing for drug discovery, using quantum simulations to analyze molecular compounds and speed up medication development. Meanwhile, Pfizer is utilizing quantum algorithms to simulate molecular interactions more efficiently, aiming to identify therapeutic compounds and advance drug discovery processes.

In logistics, Volkswagen Group has pioneered the use of quantum algorithms to optimize traffic flow and vehicle routing. In collaboration with D-Wave, Volkswagen has demonstrated the ability to use quantum computing for real-time navigation, significantly reducing congestion and improving travel efficiency. This innovation showcases the potential of quantum technology to revolutionize logistics operations and create more sustainable transportation systems.

As quantum hardware advances and becomes more accessible, its integration into Big Data ecosystems will redefine scalability and open new possibilities for data-driven decision-making.

Balancing cost and performance in large-scale data environments

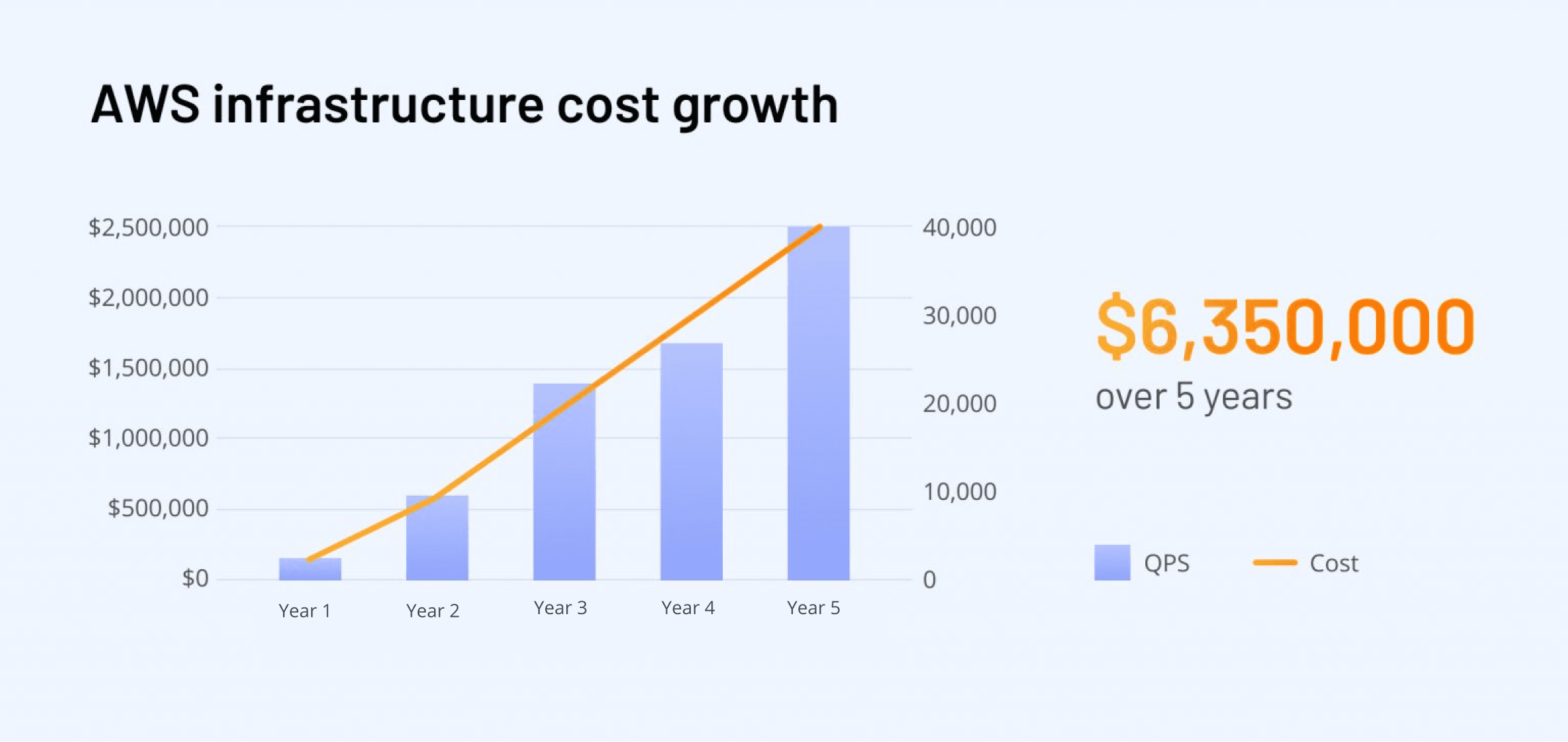

Balancing cost and performance in large-scale data environments has moved from a matter of common sense to a critical priority in the big data era. With data volumes growing and real-time processing demands increasing, infrastructure costs can quickly spiral out of control, threatening profitability and long-term success. Startups and tech-driven companies are particularly vulnerable, since a mismanaged database architecture can cripple their growth.

We’ve seen this firsthand with one of our clients, an ad tech company running a demand-side platform (DSP). Initially, their AWS costs were negligible, but within five years, they had skyrocketed to $2.5 million annually, with over $6 million spent in total. This uncontrolled expense drew resources away from growth-driving initiatives like marketing and product development.

To address this, we executed a comprehensive infrastructure overhaul: we replaced shared-economy services and costly data-transfer tools with more efficient alternatives, deployed an on-premises system, and replaced MongoDB with Aerospike’s in-memory storage. The architecture Xenoss re-engineered cut their annual cloud bill from $2.5 million to $144,000, reduced server requirements from 450 to just 10, and doubled their traffic capacity.
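Expressed in relative terms (simple arithmetic on the figures reported above), the overhaul amounts to:

$$
\frac{2{,}500{,}000 - 144{,}000}{2{,}500{,}000} = \frac{2{,}356{,}000}{2{,}500{,}000} = 94.24\% \text{ lower annual cloud spend}, \qquad \frac{450 - 10}{450} \approx 97.8\% \text{ fewer servers}
$$

That is roughly 2.36 million dollars saved per year, achieved while traffic capacity doubled.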

This experience underscores the importance of a well-planned architecture that scales efficiently. Businesses must prioritize cost-performance optimization from the start. In today’s data-centric landscape, success depends on designing systems that not only handle growth but do so sustainably, balancing the twin demands of performance and cost. For companies ready to scale smarter, the rewards can be transformative.

Final thoughts

That wraps up our list of the top big data trends shaping industries today. What these trends share is a drive toward more scalable, efficient, and intelligent systems that break down silos, deliver real-time insights, and streamline data operations. If your business is feeling the effects of these shifts, connect with Xenoss specialists to explore how we can help future-proof your strategy and harness the full potential of your data. Let’s build a robust, data-driven foundation for your success!