When it comes to deciding how to structure your data stack, there’s no one-size-fits-all answer—the data landscape is fragmented, and every data engineering team faces unique challenges. The choice between building and buying can vary significantly based on which layer of the data stack you are considering.

Some components of a data platform are typically better bought than built internally, and storage is a prime example. Deploying storage infrastructure from scratch demands highly specialized skills and a substantial engineering investment, something only a few organizations are positioned to make. Because building infrastructure at this scale is so challenging, companies that specialize in platform and infrastructure development are best positioned to create storage and compute solutions.

Major cloud providers like Google, Amazon, and Microsoft have both the resources and expertise required to successfully develop these components, with offerings such as Google’s BigQuery and Dataproc, Amazon’s Redshift, Glue, and Athena, and Microsoft’s Synapse and Azure Data Lake. Additionally, other specialized companies, such as Snowflake, Databricks, Onehouse, and Tabular, are uniquely focused on data infrastructure, providing powerful alternatives tailored to specific needs. Each of these companies has distinct capabilities that allow them to successfully develop storage technologies. Consequently, if your organization isn’t already in the business of offering storage as a service, there is little justification for building these fundamental components of your data stack from scratch.

However, the decision isn’t always just about building from scratch or buying fully managed solutions; open-source alternatives offer a middle ground. Numerous open-source tools can empower a data engineering team to set up in-house compute and storage without relying on managed services. For example, Kubernetes or YARN could handle compute, MinIO or HDFS could provide open-source object or file storage, and lakehouse technologies like Apache Hudi or Delta Lake could manage the storage layer on top. More traditional open-source tools from the Hadoop ecosystem, such as Hive and HBase, are also potential options.

This leads us to a fundamental question: Should your team build its storage and compute infrastructure on an open-source platform and dedicate resources to maintaining it in-house, or would it be more beneficial to opt for a managed service—whether from an open-source or cloud-based provider—and allow your engineering team to focus on higher-priority projects?

To find the answer, it’s essential to revisit the initial considerations around cost, time-to-value, and strategic goals and assess how they apply to your storage solution.

Cost considerations and resource assessment

The first step is to assess your budget and what your data engineering team is prepared to invest. An upfront cost that seems steep may ultimately save money by reducing workload, optimizing compute costs, or boosting operational efficiency. The key takeaway: Don’t be deceived by initial costs; the full cost picture is often more complex.

Storage forms the backbone of your data stack, so the cost of building a scalable solution that meets your current and future requirements is crucial. Is it more cost-effective overall to set up and maintain an open-source solution, or do the savings of a managed solution justify the expense? As a data engineering lead, it’s your responsibility to identify that tipping point and optimize accordingly.
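One way to reason about that tipping point is a simple break-even calculation. The sketch below is a minimal illustration, not a full cost model: the `CostProfile` class, the `break_even_month` helper, and every figure in it are hypothetical placeholders that you would replace with your own estimates of setup cost, cloud bills, licences, and engineering time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostProfile:
    """Hypothetical cost model for one option (all figures are illustrative)."""
    upfront: float  # one-time setup cost
    monthly: float  # recurring cost: licences, cloud bills, engineer time

    def cumulative(self, months: int) -> float:
        """Total spend after the given number of months."""
        return self.upfront + self.monthly * months


def break_even_month(build: CostProfile, buy: CostProfile,
                     horizon: int = 60) -> Optional[int]:
    """Return the first month in which building is no more expensive than
    buying, or None if that never happens within the horizon."""
    for month in range(1, horizon + 1):
        if build.cumulative(month) <= buy.cumulative(month):
            return month
    return None


# Made-up numbers: self-hosting costs more upfront but less per month.
self_hosted = CostProfile(upfront=300_000, monthly=20_000)
managed = CostProfile(upfront=20_000, monthly=35_000)

print(break_even_month(self_hosted, managed))
```

If the break-even point falls beyond your planning horizon, or never arrives, that is a signal the managed option is the cheaper path under your assumptions. A linear model like this ignores growth in data volume and team size, so treat it as a starting point for discussion rather than a forecast.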

For example, Airbnb faced the challenge of spiraling cloud costs as their operations scaled. Their Data Quality initiative led to a comprehensive reconstruction of their data warehouse, aimed at addressing inefficiencies and revitalizing their data engineering processes. By strategically implementing data retention policies, optimizing storage tiers, and eliminating unused storage, Airbnb managed to significantly lower their Amazon S3 costs while improving data management efficiency.

In addition, their focus on long-term cost optimization included rethinking storage strategies, embracing Kubernetes, and leveraging AWS effectively. These efforts saved $63.5 million in hosting costs within nine months, proving that targeted investment in custom infrastructure can yield substantial benefits over time.
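Retention policies and storage tiering of the kind described above can be expressed declaratively as an S3 lifecycle configuration. The sketch below is a generic illustration and not Airbnb's actual setup: the `build_lifecycle_rules` and `apply_lifecycle` helpers, the `logs/raw/` prefix, and the day thresholds are all assumptions chosen for the example. The dictionary follows the shape accepted by boto3's `put_bucket_lifecycle_configuration`.

```python
def build_lifecycle_rules(prefix: str, warm_after_days: int,
                          cold_after_days: int, expire_after_days: int) -> dict:
    """Build a lifecycle configuration that moves objects under `prefix`
    to cheaper storage classes over time and eventually deletes them."""
    if not (warm_after_days < cold_after_days < expire_after_days):
        raise ValueError("expected warm < cold < expire")
    rule_id = "tier-and-expire-" + prefix.strip("/").replace("/", "-")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    # Infrequent-access tier first, archival tier later.
                    {"Days": warm_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": cold_after_days, "StorageClass": "GLACIER"},
                ],
                # Retention policy: delete objects after this many days.
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


def apply_lifecycle(bucket: str, config: dict) -> None:
    """Push the configuration to S3. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above has no dependencies

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


# Hypothetical thresholds: 30 days hot, archive at 90, delete after a year.
config = build_lifecycle_rules("logs/raw/", 30, 90, 365)
print(config["Rules"][0]["ID"])
```

Keeping the rule builder separate from the call that applies it means the policy can be reviewed and unit-tested without touching a real bucket.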

Beyond the cost of getting your initial solution up and running, you also need to consider long-term expenses. How much maintenance will be required? Will you need to develop additional in-house tools—such as for data ingestion, orchestration, or monitoring—to work with your self-managed open-source solution? All these costs need to be factored into your build vs. buy decision.

For Venatus, a leading gaming advertising technology platform serving over 900 publishers including Rovio, EA, and Rolling Stone, the challenge was similar to many companies scaling up their operations: balancing cost with long-term value. When they approached Xenoss, they were operating across multiple cloud platforms – using Google Cloud Storage for ad transaction logs and AWS S3 for other partner data and platform-collected information, with data management through BigQuery.

However, as Venatus processed increasing volumes of ad impressions (reaching 1.4B monthly video impressions), managing data across multiple platforms became problematic. The ongoing costs of maintaining data transfer across services and the variable costs associated with cloud services required a strategic overhaul.

Xenoss stepped in to transform their data infrastructure. After a thorough analysis of their tech stack and cloud infrastructure, our team identified potential bottlenecks and developed an optimization strategy. We helped Venatus transition to a single cloud provider (AWS) and implemented ClickHouse for data processing, which significantly reduced storage costs and improved data pipeline efficiency. The optimization efforts included integrating 14 new ad networks and implementing advanced header bidding with Rubicon’s prebid server to increase advertising yield.

The results were transformative: system performance improved by 10x, and Venatus was able to grow its publisher base from 200 to 900. This validated the strategic decision to build a custom solution on open-source technology: Venatus had reached a scale, in terms of both data volume and organizational size, at which building its own solution became the most beneficial option despite the future costs involved. The new tech stack, consisting of AWS, Yandex ClickHouse, React, Java, MongoDB, Rubicon Prebid server, Prebid.js, and Metabase, provided the scalability and performance needed for Venatus’s continued growth.

Interoperability and technical complexity

How well will your storage solution support your future needs? This ties directly into your decision between open-source and managed options because the interoperability of your storage solution will have a significant impact on the total cost of maintaining your data platform.

First, let’s take a look at some managed solutions that are available right out of the box. Managed solutions from providers like Google, Amazon, and Microsoft are typically vertically integrated, offering a complete ecosystem for your data stack. This integration can sometimes limit flexibility but also simplify decision-making down the line. Platforms like Snowflake, which remain cloud-agnostic, promote greater interoperability by supporting a wide range of integrations based on your data needs. Likewise, lake and lakehouse solutions offer increased flexibility due to the adaptable nature of their storage.

How does deploying an open-source solution in-house compare to a managed service? Open-source solutions generally offer better extensibility than managed alternatives but come with added complexity. Implementing an open-source approach often involves research, experimentation, and time dedicated to understanding complex software, which is largely unnecessary with managed services. Additionally, it requires engineers to understand security models and auto-scaling mechanisms, and to troubleshoot system failures themselves.

From that perspective, opting for a managed version of these open-source solutions can often be the easier path since much of the groundwork is already established. Your data team can leverage the benefits of interoperability more quickly, without dealing with the complexities of setting up an in-house solution.

Still, despite the inherent challenges, building a robust storage solution using open-source software could be the right choice for some teams. At a certain scale, the advantages of extensibility can outweigh the downsides of open-source complexity. If your data requirements are highly specialized, the flexibility of open-source tools might be necessary to craft a solution capable of supporting them. In such cases, building in-house might be well worth it.

It’s crucial, however, to recognize that costs aren’t confined to the initial deployment of your solution; maintaining it over time also adds up. The more of your data stack you decide to manage internally, the greater these ongoing costs will be. Your choice will also influence the tools you’ll have available for future use.

The build or buy decision comes down to the specific needs of your platform. It’s not about which option is inherently better but about which one fits best. To scale effectively, it’s important to understand how new tools will integrate and whether they’ll add unnecessary complexity or make things simpler. Sometimes, opting for simplicity by choosing a ready-made solution is the best way forward.

Data engineering expertise and team development

Maintaining a storage solution demands deep expertise in file systems, databases, and related infrastructure—skills that many teams may not possess.

For smaller companies and startups, setting up open-source systems in the cloud can work as an MVP solution. However, as the company scales, maintaining this infrastructure requires a dedicated engineering effort, which often evolves into a role handled by a DevOps team. The choice depends heavily on the skill set of your data engineers. If in-house expertise is lacking, investing in managed services might be necessary until you have a DevOps team that can fully manage that part of the infrastructure.

The conversation becomes particularly interesting when discussing lakehouse infrastructure—a concept pioneered by Databricks that represents a paradigm shift for data platforms. The technologies involved in building lakehouses are advanced and still relatively new. Many data engineers may not yet have the skills required to implement a complete lakehouse solution, though the industry seems to be moving in this direction.

Imagine assembling the components of a data warehouse, and then being responsible not only for managing them but also for troubleshooting any issues that arise. Warehouses have numerous intricate components, and addressing major errors while maintaining interoperability and consistent support demands substantial expertise in systems and infrastructure.

Speed of implementation and ROI

Setting up an in-house storage solution in today’s data landscape is highly challenging, demanding a considerable investment of time from specialized data engineers. Even with a skilled team working tirelessly, it will still take significant time to get a solution like this operational.

The initial learning curve can be steep: your team has to master different system configurations, adapt them to your environment, and implement the right metrics and fail-safes for reliability and robustness. For example, users in some open-source communities have shared that deploying a lakehouse solution can take over six months. Considering these difficulties, it could be a long time before your efforts translate into real value for the business and end-users.

Moreover, as with other aspects of storage solutions, the concern isn’t just about the time it takes to establish your initial setup, but also the ongoing effort required to maintain and improve it over time. Although it’s hard to predict the full scope of future maintenance, understanding these requirements upfront is crucial for informed decision-making. This approach will also help allocate resources effectively for future projects by planning ahead based on your chosen path.

Strategic value and market differentiation

You may have the time, resources, and technical skills to build your storage and compute solution in-house. But is that truly in your company’s best interest?

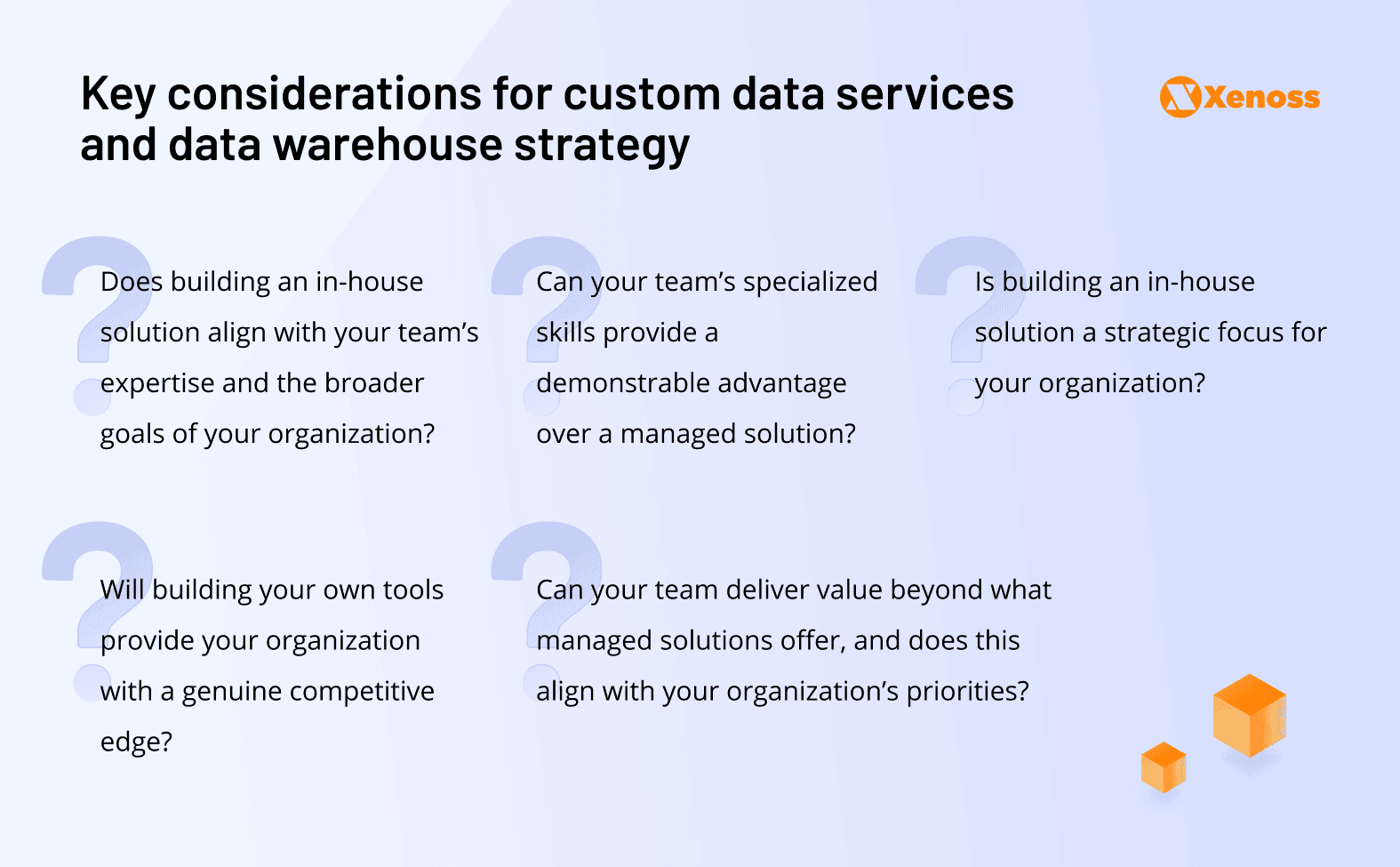

Even if you answered ‘yes’ to all these factors, you still need to ask: Does building an in-house solution offer a distinct strategic advantage for your organization? If the answer is ‘no,’ then pursuing an in-house solution may not be justified.

In some cases, organizations have developed their own data platforms because their requirements exceeded the capabilities of existing solutions, and building in-house offered a unique competitive edge. The state-of-the-art platforms built in such cases enabled near real-time data availability for data scientists, analysts, and engineers, improving capabilities such as fraud detection, experimentation, and overall user experience.

The same can be said for companies like Google or Netflix, which operate on a massive scale with specific, complex requirements. However, for most organizations, this isn’t the reality. Unless the cost of purchasing a managed solution is significantly higher than the engineering effort required to build one, opting to buy is often a more effective way to leverage your data platform for competitive advantage than investing heavily in building an open-source-based solution.

While out-of-the-box platform solutions are increasingly sophisticated, offering extensive feature sets and improved interoperability, the decision between building and buying remains highly context-dependent. For some organizations, managed solutions provide the perfect balance of functionality and efficiency. For others, like Venatus, building custom solutions using open-source technologies can deliver superior value, especially when dealing with unique requirements or specific performance demands.

The key to success lies not just in the platform choice itself, but in how effectively you implement, customize, and optimize your chosen solution. Whether building or buying, your competitive edge will come from how well you understand your specific needs, leverage your data effectively, and align your infrastructure decisions with your long-term business strategy. By carefully weighing the considerations we’ve discussed, from costs and complexity to expertise and strategic value, you can make an informed decision that best serves your organization’s unique circumstances and goals.

Summary

In this blog post, we have taken a closer look at the data storage layer and explored its role in shaping the rest of your infrastructure. It is essential to invest in suitable tools from the outset, as your storage will heavily impact how you design, implement, and utilize other elements of your data stack in the future.

Building could mean starting from scratch or using open-source tools to create a custom solution. The right way to set up your storage solution depends on your team’s specific needs, which ultimately boil down to five key considerations:

- Cost & resources

- Complexity & interoperability

- Expertise & recruiting

- Time to value

- Competitive advantage
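One lightweight way to make these five considerations comparable is a weighted scoring matrix. The following is a minimal sketch under stated assumptions: the weights, the 1-to-5 scores, and the `weighted_score` helper are all hypothetical and should be replaced with values your team agrees on.

```python
from typing import Mapping

# Hypothetical weights for the five considerations (they sum to 1.0).
CRITERIA_WEIGHTS = {
    "cost_and_resources": 0.25,
    "complexity_and_interoperability": 0.20,
    "expertise_and_recruiting": 0.20,
    "time_to_value": 0.20,
    "competitive_advantage": 0.15,
}


def weighted_score(scores: Mapping[str, float],
                   weights: Mapping[str, float] = CRITERIA_WEIGHTS) -> float:
    """Combine per-criterion scores (e.g. 1 = poor, 5 = excellent) into a
    single weighted average."""
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


# Illustrative scores only; a real assessment would come from your team.
build_scores = {
    "cost_and_resources": 2,
    "complexity_and_interoperability": 3,
    "expertise_and_recruiting": 2,
    "time_to_value": 1,
    "competitive_advantage": 5,
}
buy_scores = {
    "cost_and_resources": 4,
    "complexity_and_interoperability": 3,
    "expertise_and_recruiting": 4,
    "time_to_value": 5,
    "competitive_advantage": 2,
}

for option, scores in (("build", build_scores), ("buy", buy_scores)):
    print(option, round(weighted_score(scores), 2))
```

The resulting numbers matter less than the conversation needed to agree on the weights, which forces the team to state explicitly how much each consideration counts.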

The storage layer you choose should align with your organization’s unique use cases, data-sharing policies with cloud providers, and overall cloud or infrastructure architecture. Whether you’re deciding on a warehouse solution or data observability tools, making great choices often involves tough discussions. Embrace these build-versus-buy debates—they drive innovation and progress.