Data-driven organizations face a roster of challenges: exploding data volumes, fragmented sources, and the demand for low-latency insights. To keep control over increasingly complex data flows, engineering teams have had to evolve their data pipelines from static ETL scripts into dynamic, intelligent systems.

To that end, new concepts have emerged, such as data mesh architecture, DataOps, and multi-cloud architecture. These approaches aim to break down silos and speed up pipeline deployment while ensuring data reliability and observability.

This blog post explores how data pipeline trends help companies future-proof their operations for a growing number of AI and advanced analytics use cases.

Trend #1: Data mesh architecture

What is data mesh?

Data mesh is a recent concept coined by Zhamak Dehghani in 2019 to describe a data platform architecture that embraces domain-driven design and a distributed architecture. Instead of centralizing all data in monolithic lakes or warehouses, it encourages a federated model in which data is owned, produced, and consumed within business domains.

In a detailed write-up, Dehghani traces the evolution of data platforms. Enterprise data warehouses, the first data architecture paradigm for many teams, left organizations grappling with a heavy maintenance burden and massive tech debt.

They were succeeded by data lakes, which eased the maintenance of ETL jobs but could only be managed by a hyper-specialized engineering team and struggled to support operations across the business.

For the current generation of data platforms, Dehghani points to a focus on streaming architectures (or a mix of streaming and batch) and the embrace of cloud infrastructure.

Modern data platforms improve on their predecessors in scalability and maintenance cost but still struggle to serve different business functions. The data mesh, roughly the data engineering equivalent of microservice architecture, emerged to solve this problem.

Key components of a data mesh

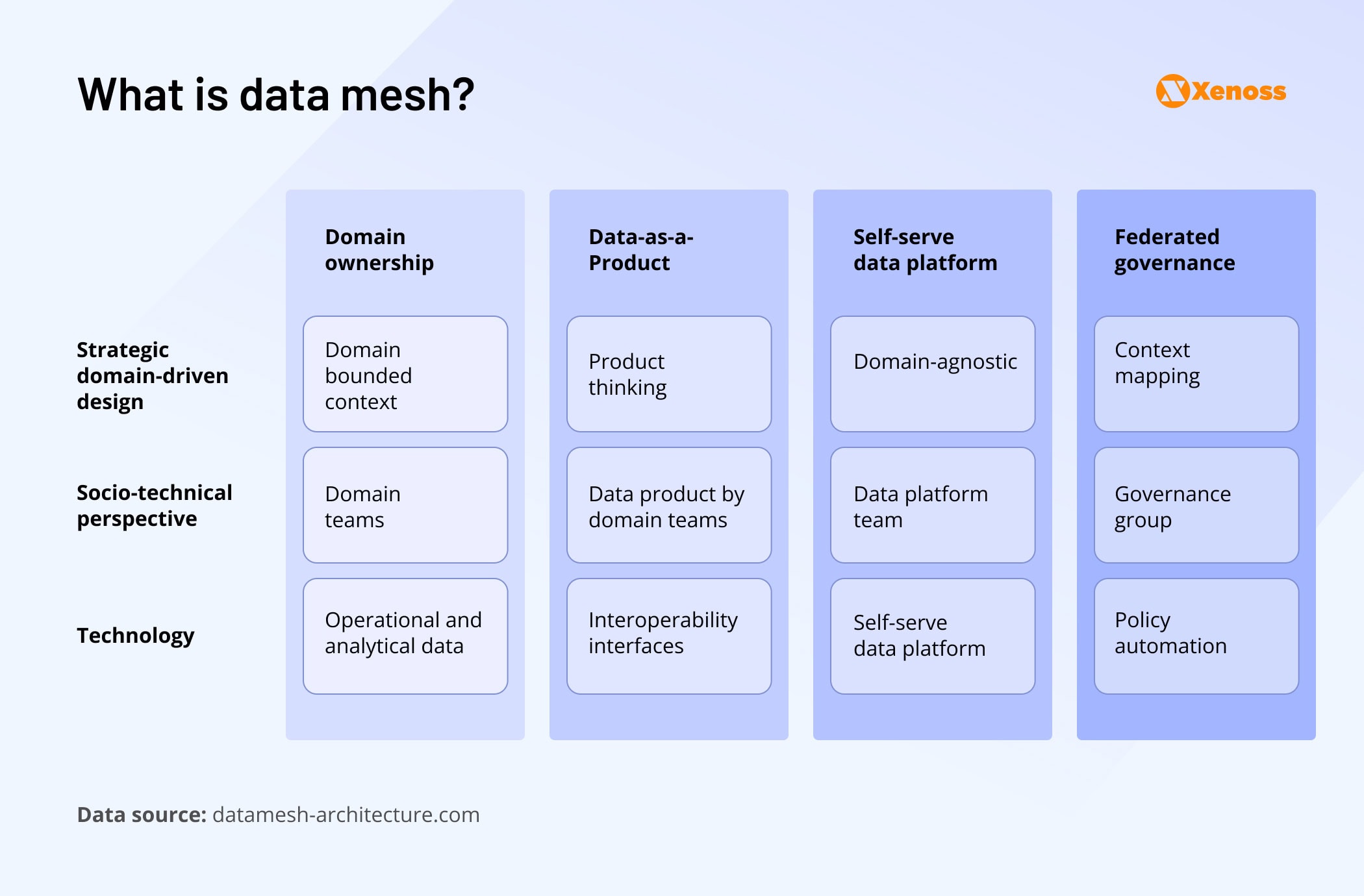

Zhamak Dehghani breaks data mesh architecture into four pillars:

- Domain ownership: Internal teams assume responsibility for their own domain's data pipelines.

- Data-as-a-product: Domain teams treat other teams as potential data consumers, which pushes them to deliver high-quality data products.

- Self-serve data platform: A shared platform gives domain teams the tooling to build and maintain pipelines on their own.

- Federated governance: A governance group made up of domain and platform representatives defines interoperability standards, which are then enforced and monitored across domains.
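To make the data-as-a-product and federated governance pillars more concrete, here is a minimal sketch of a data product descriptor checked against shared policies. The descriptor fields (`owner_domain`, `schema`, `freshness_sla_hours`, `tags`) and the policy rules are hypothetical illustrations, not part of Dehghani's definition or of any specific platform.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor a domain team publishes alongside its data."""
    name: str
    owner_domain: str            # domain ownership: which team answers for this data
    schema: dict[str, str]       # column name -> type: the product's public contract
    freshness_sla_hours: int     # promise made to downstream consumers
    tags: set[str] = field(default_factory=set)


# Hypothetical organization-wide policies agreed on by a federated governance group.
REQUIRED_COLUMNS = {"event_id", "updated_at"}
MAX_FRESHNESS_SLA_HOURS = 24


def governance_violations(product: DataProduct) -> list[str]:
    """Return policy violations; an empty list means the product complies."""
    problems = []
    missing = REQUIRED_COLUMNS - set(product.schema)
    if missing:
        problems.append(f"missing required columns: {sorted(missing)}")
    if product.freshness_sla_hours > MAX_FRESHNESS_SLA_HOURS:
        problems.append("freshness SLA exceeds the organization-wide maximum")
    if "pii" in product.tags and "restricted" not in product.tags:
        problems.append("PII data must also be tagged 'restricted'")
    return problems


orders = DataProduct(
    name="orders_daily",
    owner_domain="checkout",
    schema={"event_id": "string", "updated_at": "timestamp", "amount": "decimal"},
    freshness_sla_hours=6,
    tags={"pii"},
)
print(governance_violations(orders))  # → ["PII data must also be tagged 'restricted'"]
```

Encoding policies as code like this is one way a self-serve platform could apply governance automatically to every domain's products instead of relying on manual review.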

How organizations use data mesh

For a relatively new concept, data mesh has received an enthusiastic response from enterprises with complex data processes.

Netflix was among the early adopters of data mesh.

At one point, the streaming company needed an architecture for accessing and processing Netflix Studio data used by its content production and management teams.

After experimenting with other architectures that helped decouple data movement from the source databases (first batch processing, with data consumers setting up their own ETL jobs, later replaced by event-driven stream processing), the company embraced a data mesh architecture.

Here is how the new architecture powers data movement at Netflix.

- Netflix Studio applications expose GraphQL queries via Studio Edge (a graph that connects data and ensures consistent retrieval).

- Change Data Capture reads database transaction logs and emits change events.

- These events are passed on to the data mesh enrichment processor, which queries Studio Edge to enrich them.

- Enriched data is stored in the Netflix Data Warehouse as Iceberg tables. Teams can use these tables ad hoc for querying and reporting. Using Iceberg enables schema evolution, time travel, and efficient partitioning, allowing domain teams to run reliable, performant queries even as the underlying data changes.
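The flow above can be approximated in a few lines to show why an enrichment step sits between change data capture and the warehouse. This is not Netflix's code: the `ChangeEvent` shape, the `lookup` function standing in for a Studio Edge GraphQL query, and the list standing in for an Iceberg table are all hypothetical simplifications.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical CDC event derived from a database transaction log."""
    table: str
    op: str   # "insert", "update", or "delete"
    key: str  # primary key of the changed row


def enrich(events: list[ChangeEvent], lookup: Callable[[str], dict], sink: list) -> None:
    """Enrichment processor: turn thin change events into full records.

    In this sketch the CDC event carries only the row key, so the processor
    asks the query layer (`lookup`, a stand-in for Studio Edge) for the
    current state of the entity before writing it to the sink.
    """
    for event in events:
        if event.op == "delete":
            # Deleted rows cannot be looked up; record a tombstone instead.
            sink.append({"id": event.key, "deleted": True})
            continue
        record = lookup(event.key)
        sink.append({"id": event.key, "deleted": False, **record})


# Hypothetical stand-ins for the GraphQL layer and the warehouse table.
MOVIES = {"m1": {"title": "Example Film", "status": "in_production"}}
warehouse_table: list = []

events = [ChangeEvent("movies", "update", "m1"), ChangeEvent("movies", "delete", "m2")]
enrich(events, lambda key: MOVIES[key], warehouse_table)
print(warehouse_table)
```

Keeping the enrichment logic in one processor means each consuming team reads ready-to-query rows instead of re-implementing joins against the source systems.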

At Netflix, data mesh was a solution to the problem of increasing volumes of poorly connected and underutilized data. The new infrastructure allowed domain teams to build pipelines without relying on third parties, which helped extract value from data at higher speeds.

Like Netflix, other enterprises that process large data volumes have recognized the benefits of a flexible, disintermediated infrastructure.

That’s why data mesh adoption now spans multiple industries. JPMorgan Chase built a domain-owned data mesh to connect its financial data and maximize its impact. Roche Diagnostics uses a data mesh to connect data across the company’s nine pharma plants.

At this scale of adoption, data mesh has moved from an emerging trend to a battle-tested architectural approach.

Trend #2: DataOps

What is DataOps?

DataOps (short for data operations) is an increasingly popular methodology for facilitating data access, processing, and management.

Its foundational ideas are process automation, integration, and tight-knit collaboration between data scientists, engineers, analysts, IT, and other organizational stakeholders.

Much like DevOps transformed application delivery, DataOps emerged to address the growing complexity of enterprise data environments.

Traditional data management methodologies struggle to keep up with the ever-growing data volumes flowing through enterprise-grade pipelines, and DataOps offers a more agile and scalable alternative.

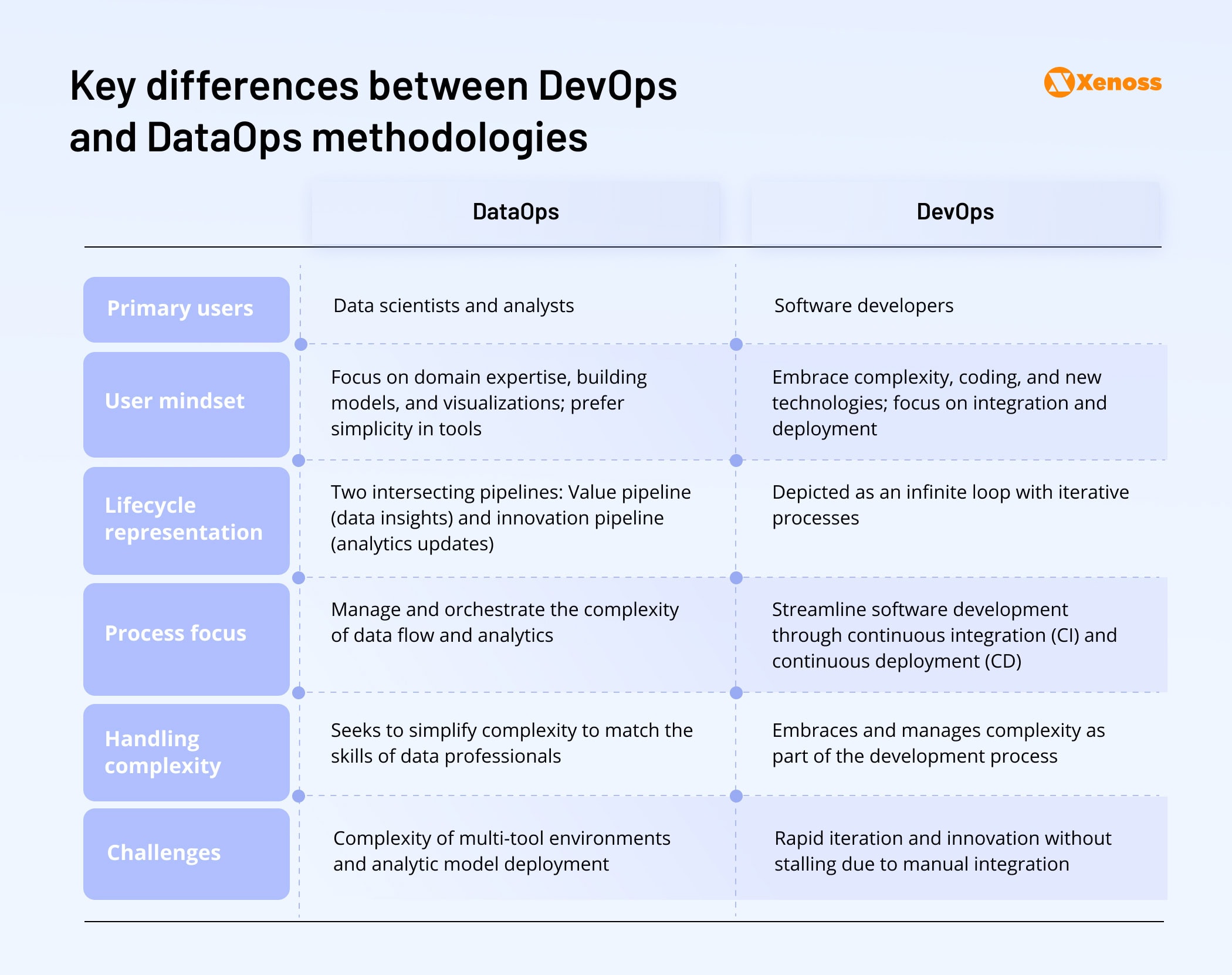

To understand why DataOps became an independent concept rather than simply “DevOps for data”, it helps to look at what sets the two apart.

The table above summarizes the granular differences between DevOps and DataOps.

In broader terms, DevOps aims to create workflows for faster software delivery, while DataOps aims to break down data silos and increase the value of collected data.

For DataOps, speed is secondary, and accessibility is key.

At the same time, observability remains critical in both software and data engineering. Just as software engineers use logs and metrics to prevent incidents or detect them in real time, data engineers monitor the lineage, distribution, and volume of data to keep pipelines under control.
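As a rough illustration of volume monitoring, the sketch below flags a daily load whose row count deviates sharply from recent history using a z-score. The 3-standard-deviation threshold and the sample counts are assumptions chosen for illustration; dedicated observability tools typically use more robust models that account for trends and seasonality.

```python
from __future__ import annotations

import statistics


def volume_anomaly(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Return True if today's row count lies more than `threshold`
    standard deviations from the mean of recent daily counts."""
    if len(history) < 2:
        return False  # not enough history to estimate variability
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean  # perfectly stable history: any change is unusual
    return abs(today - mean) / stdev > threshold


daily_rows = [10_120, 9_980, 10_050, 10_210, 9_940, 10_090, 10_030]
print(volume_anomaly(daily_rows, 10_070))  # → False (a typical day)
print(volume_anomaly(daily_rows, 2_300))   # → True (e.g. an upstream job silently dropped data)
```

A check like this would typically run after each load and feed an alerting channel, so a silent drop in volume surfaces before downstream dashboards consume the incomplete data.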

How organizations use DataOps

DataOps has gained traction across industries, from digital marketplaces to financial services, to reduce friction in data delivery, enforce quality standards, and enable continuous improvement across data workflows.

Airbnb adopted DataOps to manage the growing scale and complexity of its data, which spans hosts, guests, listings, and transactions. The company introduced automated testing, version-controlled data pipelines, and rigorous monitoring practices.

By treating pipelines as code and embedding validation into every workflow stage, Airbnb accelerated deployment cycles while maintaining data trustworthiness, which is critical for downstream analytics, experimentation, and personalization.
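Here is a minimal sketch of what embedding validation into a workflow stage can look like: the transform is a plain, version-controllable function, and a quality gate runs before its output moves on. The `dedupe_bookings` transform, the field names, and the rules in `validate` are hypothetical examples, not Airbnb's actual checks.

```python
from __future__ import annotations


class ValidationError(Exception):
    """Raised when a pipeline stage produces data that breaks a quality rule."""


def validate(rows: list[dict]) -> list[dict]:
    """Quality gate between stages: fail loudly instead of passing bad data on."""
    if not rows:
        raise ValidationError("stage produced no rows")
    ids = [row["booking_id"] for row in rows]
    if len(ids) != len(set(ids)):
        raise ValidationError("duplicate booking_id values")
    if any(row["nights"] <= 0 for row in rows):
        raise ValidationError("nights must be positive")
    return rows


def dedupe_bookings(rows: list[dict]) -> list[dict]:
    """Example transform: keep only the latest version of each booking."""
    latest = {}
    for row in sorted(rows, key=lambda r: r["version"]):
        latest[row["booking_id"]] = row  # later versions overwrite earlier ones
    return list(latest.values())


raw = [
    {"booking_id": "b1", "nights": 3, "version": 1},
    {"booking_id": "b1", "nights": 4, "version": 2},
    {"booking_id": "b2", "nights": 2, "version": 1},
]
clean = validate(dedupe_bookings(raw))
print(clean)
```

Because both the transform and the gate are ordinary functions, they can be unit-tested in CI and reviewed like any other code change, which is the "pipelines as code" idea in miniature.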

The need to work with sensitive information in pharma and finance makes DataOps practices critical for data accessibility and manageability.

At GSK, data-driven decision-making thrives thanks to cross-functional teams. Inside the organization, AI/ML specialists, data engineers, and research scientists work side by side to enrich and interpret complex datasets and apply them to therapeutic objectives.

Capital One created a “You Build, Your Data” platform with Snowflake to enforce DataOps principles. This self-service environment lets engineers auto-generate Snowflake and AWS pipelines and embed data quality services, and it gives product teams full ownership of both their data and the underlying codebase.
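The general self-service pattern can be sketched as a product team writing a short declarative spec that the platform expands into a full pipeline definition, with quality checks attached by default. This sketch is hypothetical and does not reflect Capital One's implementation; `PipelineSpec`, its fields, and the step names are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PipelineSpec:
    """Hypothetical spec a product team writes; the platform fills in the rest."""
    name: str
    source: str        # e.g. an object-storage prefix (illustrative)
    target_table: str  # e.g. a warehouse table (illustrative)
    owner_team: str
    not_null: tuple[str, ...] = ()


def generate_pipeline(spec: PipelineSpec) -> list[dict]:
    """Expand a spec into ordered steps, adding quality checks by default."""
    steps = [{"step": "extract", "from": spec.source}]
    # Baseline check the platform always adds; teams cannot opt out of it.
    steps.append({"step": "check_row_count", "min_rows": 1})
    # Team-requested checks declared in the spec.
    for column in spec.not_null:
        steps.append({"step": "check_not_null", "column": column})
    steps.append({"step": "load", "into": spec.target_table, "owner": spec.owner_team})
    return steps


spec = PipelineSpec(
    name="card_transactions",
    source="s3://example-bucket/transactions/",
    target_table="ANALYTICS.CARD_TRANSACTIONS",
    owner_team="payments",
    not_null=("transaction_id",),
)
for step in generate_pipeline(spec):
    print(step)
```

The design choice here is that quality checks live in the generator rather than in each team's code, so every generated pipeline inherits them while the team still owns its spec.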

The early adopters of DataOps were data-first companies like Netflix and Airbnb, but the benefits of CI/CD principles in data engineering eventually reached global enterprise organizations, like banks and big pharma.

With regulators tightening their grip on data privacy, flagship DataOps practices such as continuous monitoring and real-time anomaly alerts are set to become mainstays of enterprise data pipelines.

Trend #3: Multi-cloud data architecture

Multi-cloud architecture is becoming standard practice in data engineering: 98% of teams surveyed by Oracle have used at least two public clouds.

Data-driven organizations shift to multiple clouds to avoid vendor lock-in and prevent downtime if one vendor’s infrastructure fails. Multi-cloud approaches also allow teams to tap into best-of-breed services across providers, such as using Vertex AI on GCP for ML workloads while leveraging AWS for scalable storage or Azure for enterprise integrations.

Multi-cloud is especially attractive to companies with cross-border operations, where data residency and compliance requirements vary by region.

Types of multi-cloud data pipelines

Two standard approaches to multi-cloud data architecture design are composite and redundant.

In a composite multi-cloud architecture, the application portfolio is distributed across two or more cloud service providers (CSPs). This model lets organizations prioritize data pipeline performance by running each workload on the provider best suited to it.

In a redundant architecture, two or more instances of an application are hosted on different CSPs. If one cloud service fails, another takes over and keeps the application running. This approach lets organizations prioritize resilience and availability.
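The failover logic of a redundant setup can be sketched as trying each deployment in priority order. The provider functions here are hypothetical placeholders that simulate an outage; in practice, failover between clouds is often handled by DNS or load-balancer health checks rather than inside application code, so treat this only as an illustration of the control flow.

```python
from __future__ import annotations

from typing import Callable


class AllProvidersFailed(Exception):
    """Raised when no deployment could run the task."""


def run_with_failover(task: str, providers: list[tuple[str, Callable[[str], str]]]) -> str:
    """Try each cloud deployment in priority order and return the first success."""
    errors = []
    for name, run in providers:
        try:
            return run(task)
        except ConnectionError as exc:  # treat connectivity errors as a provider outage
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def primary_cloud(task: str) -> str:
    raise ConnectionError("region unavailable")  # simulated outage (hypothetical)


def secondary_cloud(task: str) -> str:
    return f"{task} completed on secondary"


print(run_with_failover("nightly_load", [("primary", primary_cloud), ("secondary", secondary_cloud)]))
# → nightly_load completed on secondary
```

Collecting the per-provider errors before giving up makes a total outage easier to diagnose than a single generic failure.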

How organizations leverage multi-cloud data architecture

Enterprise organizations across multiple domains have transitioned to multi-cloud architectures over the past five years.

McGraw Hill, a global provider of learning materials, moved its internal apps to a multi-cloud architecture spanning AWS, Microsoft Azure, and Oracle Cloud Infrastructure (OCI) after closing its on-premises data centers.

The multi-cloud architecture, additionally supported by a unified management interface, helped the company increase the scalability of its pipelines, ensure consistent performance, and address the threat of vendor lock-in.

In finance, Goldman Sachs enabled multi-cloud tenant deployments in its Cloud Entitlements Service. The platform allows data applications to run on both AWS and GCP, depending on latency or cost goals.
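Choosing where a workload runs based on latency or cost goals can be expressed as a small placement rule. This is a hypothetical sketch, not Goldman Sachs' implementation: `CloudOption`, `place_workload`, and the latency and cost figures are made-up inputs, and real placement decisions would also weigh factors such as data residency and egress fees.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CloudOption:
    name: str
    p95_latency_ms: float  # measured latency for this workload (hypothetical)
    cost_per_hour: float   # blended compute cost (hypothetical)


def place_workload(options: list[CloudOption], goal: str, max_latency_ms: float | None = None) -> str:
    """Pick a provider for a workload.

    goal="latency": the lowest-latency option wins.
    goal="cost": the cheapest option that still meets the optional latency ceiling.
    """
    if goal == "latency":
        return min(options, key=lambda o: o.p95_latency_ms).name
    if goal == "cost":
        eligible = [o for o in options if max_latency_ms is None or o.p95_latency_ms <= max_latency_ms]
        if not eligible:
            raise ValueError("no provider meets the latency ceiling")
        return min(eligible, key=lambda o: o.cost_per_hour).name
    raise ValueError(f"unknown goal: {goal}")


options = [CloudOption("aws", 18.0, 4.10), CloudOption("gcp", 25.0, 3.60)]
print(place_workload(options, "latency"))                    # → aws
print(place_workload(options, "cost"))                       # → gcp
print(place_workload(options, "cost", max_latency_ms=20.0))  # → aws
```

The latency ceiling on the cost goal reflects a common trade-off: the cheapest provider only wins if it is still fast enough for the workload.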

Enterprise organizations need to balance the speed of pipeline deployment with resilience and minimal downtime risks.

Multi-cloud architecture helps engineering teams accomplish both by decoupling them from a single provider, helping build more tailored tech stacks, and improving interoperability across partner ecosystems.

Bottom line

Modern data pipelines are becoming smarter, faster, and more adaptable, driven by the growing demand for real-time insights, scalable architectures, and AI-ready data.

Data pipeline trends like data mesh architectures, DataOps, and multi-cloud environments give domain teams more flexibility in accessing strategic insights and improve the scalability and robustness of their pipelines.