Imagine building your entire marketing strategy around something most people now reflexively reject. That’s exactly what’s happening in 2025. Across Europe, fewer than 1 in 4 users accept cookies. Acceptance rates continue falling as consumers grow more privacy-conscious and regulatory pressures intensify.

For marketers, this creates a fundamental challenge. Traditional targeting methods are becoming obsolete. But here’s the counterintuitive reality: you’re not actually running out of data for personalization. Most teams are sitting on mountains of ultra-valuable, privacy-compliant information that customers willingly provide. Customer reviews reveal authentic preferences. Chat logs document real purchase intent. Voice recordings capture emotional nuance. Social posts show genuine brand sentiment. Survey responses detail exact needs.

The challenge lies in the nature of this data. It’s unstructured, messy, and non-tabular. Traditional marketing tools can’t process it effectively, which means most companies aren’t using it at all.

Multimodal AI changes this equation entirely. This technology can transform scattered customer conversations, heart-shaped emoji reactions, and voice note feedback into real-time, trust-based personalization that outperforms traditional tracking methods.

This guide reveals how forward-thinking marketing teams are combining multimodal AI with zero-party data strategies to create personalization that’s more accurate, more compliant, and more sustainable than anything possible with third-party tracking.

Zero-party data: Building the foundation of privacy-first marketing

One of the most overlooked assets in every data stack is information customers give you on purpose, also known as zero-party data.

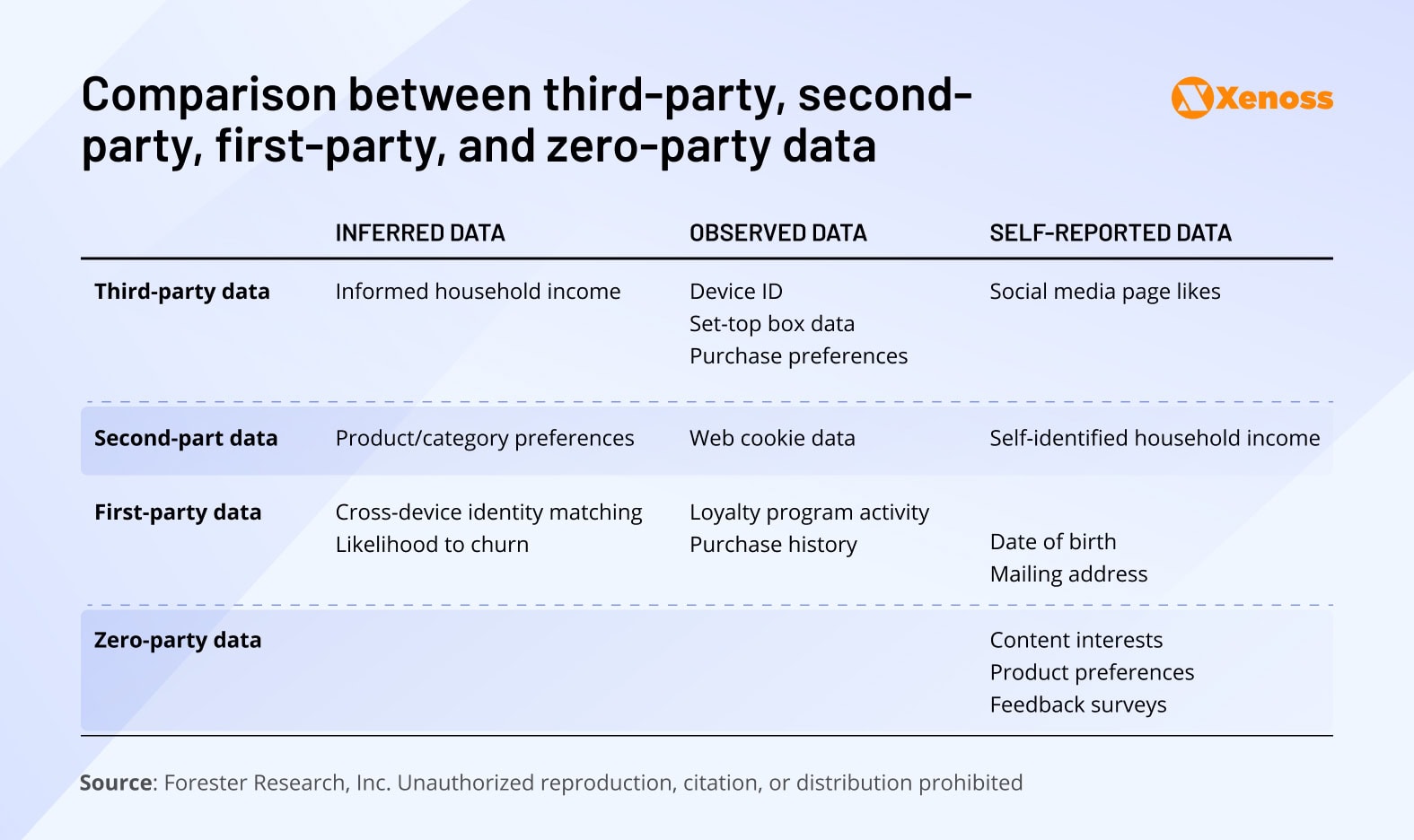

Forrester coined the term zero-party data (ZPD) to describe “data that a consumer intentionally and proactively shares about herself” when interacting with your brand. This could be:

- Preference center data: email frequency, product categories of interest, shipping addresses

- Purchase preferences: feedback on past orders, saved items, favorite delivery windows

- Personal context: content interests, hobbies, lifestyle indicators

- Self-identification cues: “plant mom,” “eco-conscious parent,” “anime fan”

ZPD represents the most accurate information you’ll get about a customer because it’s self-disclosed. Unlike first-party data, which is passively collected through user actions like page views or purchase history, or third-party data purchased from external providers, zero-party data starts with explicit consent rather than implied behavior.

Zero-party data is explicit, not implied. Almost half of consumers are open to telling brands how they want to be seen and served if that leads to better experiences. This makes ZPD a powerful method for engaging and personalizing in a privacy-first world.

Understanding the data spectrum: From third-party to zero-party

The distinction between different data types has become crucial for compliance and effectiveness. Third-party data comes from external sources without direct customer relationships, often through data brokers or tracking networks. Second-party data involves sharing between trusted partners with customer consent. First-party data captures behavioral signals from direct customer interactions. Zero-party data represents intentional customer sharing for better experiences.

Because zero-party data is shared willingly and proactively, its usage helps build trust. Three-quarters of consumers won’t buy from brands with poor data ethics, which typically involves pervasive tracking, ultra-targeted advertising, or a history of data leaks. Zero-party data addresses this by creating a permission-based loop where customers control the conversation.

When implemented correctly, multimodal marketing with zero-party data can unlock hyper-personalization without surveillance concerns. You can optimize content experiences across touchpoints in real time based on self-disclosed customer preferences. You can feed user preferences into real-time bidding optimization models to prioritize higher-value segments for targeting. You can connect profile inputs to conversion optimization tools for more relevant offers.

Effective zero-party data collection methods

The most successful zero-party data collection happens through:

- Onboarding questionnaires that feel helpful rather than intrusive

- Email or app preference centers that give customers control

- Loyalty programs with profile enrichment opportunities

- Style or product discovery quizzes that provide immediate value

- Exit or post-purchase surveys timed for maximum engagement

- “Build your bundle” or configurator tools that capture preferences naturally

- Chatbot interactions that document self-reported intent
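To make these collection methods concrete, here is a sketch of one way a single quiz or preference-center answer could be stored as a zero-party data record that keeps its source and consent attached. It assumes a simple in-house schema. The `ZeroPartyRecord` class, its fields, and the source labels are hypothetical, not the format of any particular customer data platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical labels for the collection methods listed above.
ALLOWED_SOURCES = {
    "onboarding_quiz", "preference_center", "loyalty_profile",
    "discovery_quiz", "post_purchase_survey", "configurator", "chatbot",
}

@dataclass(frozen=True)
class ZeroPartyRecord:
    customer_id: str
    attribute: str                 # e.g. "email_frequency" or "self_identification"
    value: str                     # the answer exactly as the customer gave it
    source: str                    # which collection method produced the answer
    consented_purposes: frozenset  # uses the customer explicitly agreed to
    collected_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self):
        # Reject answers that did not come from a known, customer-facing collection method.
        if self.source not in ALLOWED_SOURCES:
            raise ValueError(f"unknown collection source: {self.source}")
        # Zero-party data starts with explicit consent, so an empty purpose set is invalid.
        if not self.consented_purposes:
            raise ValueError("zero-party data must carry at least one consented purpose")

    def usable_for(self, purpose: str) -> bool:
        """Only use the record for purposes the customer explicitly agreed to."""
        return purpose in self.consented_purposes

record = ZeroPartyRecord(
    customer_id="c-123",
    attribute="self_identification",
    value="plant mom",
    source="discovery_quiz",
    consented_purposes=frozenset({"personalization"}),
)
print(record.usable_for("personalization"))  # True
print(record.usable_for("ad_targeting"))     # False
```

Keeping the consented purposes on the record itself lets downstream tools check permission before using an answer, which helps keep the value exchange transparent.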

However, operationalizing zero-party data presents unique challenges. The datasets are smaller and harder to scale compared to automated tracking. Participation is voluntary, which means you must earn attention and trust before requesting information. When the exchange feels one-sided, users will opt out quickly.

To maximize zero-party data value, you need to combine it with first-party behavioral data obtained with proper consent. This combination enriches customer profiles, refines customer journey mapping approaches, and enables smarter automations across key touchpoints while maintaining privacy compliance.
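Here is a minimal sketch of that combination. It assumes declared preferences arrive as a dictionary and behavioral events arrive as simple `(customer_id, event_type, category)` tuples. The function name, the event types, and the boolean consent flag are illustrative assumptions. A real pipeline would read consent status from your consent management platform.

```python
from collections import Counter

def build_profile(customer_id, declared, events, behavioral_consent):
    """Merge declared (zero-party) preferences with observed (first-party) behavior.

    declared: dict of self-reported attributes, e.g. {"interests": [...]}
    events: iterable of (customer_id, event_type, category) tuples
    behavioral_consent: whether the customer agreed to behavioral data collection
    """
    profile = {"customer_id": customer_id, "declared": dict(declared), "observed": {}}
    if not behavioral_consent:
        # No consent for behavioral data: personalize on declared preferences only.
        return profile

    mine = [(etype, cat) for cid, etype, cat in events if cid == customer_id]
    viewed = Counter(cat for etype, cat in mine if etype == "view")
    purchased = {cat for etype, cat in mine if etype == "purchase"}
    profile["observed"] = {
        "top_viewed": [cat for cat, _ in viewed.most_common(3)],
        "purchased": sorted(purchased),
    }
    # Declared interests with no supporting behavior yet.
    interests = set(declared.get("interests", []))
    profile["unconfirmed_interests"] = sorted(interests - set(viewed) - purchased)
    return profile

declared = {"interests": ["outdoor", "kitchen"], "email_frequency": "weekly"}
events = [
    ("c-1", "view", "outdoor"), ("c-1", "view", "outdoor"), ("c-1", "view", "books"),
    ("c-1", "purchase", "outdoor"), ("c-2", "view", "kitchen"),
]
profile = build_profile("c-1", declared, events, behavioral_consent=True)
```

In this example, `kitchen` is declared but not yet reflected in behavior. Gaps like that could be natural candidates for a follow-up question rather than an assumption.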

Unstructured first-party data: The complementary asset hiding in plain sight

About 80% to 90% of all information produced today is unstructured. This includes customer service transcripts, support chat logs, social media comments, product reviews, voice recordings, and video interactions.

These assets often hold the context, rationale, and emotional nuance behind customer decisions. Structured data can tell you what happened. Unstructured data can tell you why it happened.

Yet unstructured data remains vastly underused due to persistent data silos and suboptimal IT infrastructure.

Leaders struggle to integrate structured and unstructured data because the two rarely share a common storage architecture. Structured data lives in SQL-based warehouses, while unstructured data resides in data lakes and object stores. Customer data integration becomes complex when these systems don’t communicate effectively.

Moreover, unstructured insights require advanced extraction, transformation, and modeling before they can be fed to standard marketing automation tools. This technical barrier has prevented most organizations from capitalizing on their unstructured data assets.
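To illustrate what that extraction step produces, the sketch below turns one free-text interaction into a flat row containing a sentiment score and any detected intents. It deliberately uses toy keyword lists and regular expressions as a stand-in for a trained NLP or multimodal model. The lexicons, intent names, and scoring rule are all assumptions.

```python
import re

# Toy lexicons and patterns (assumptions); a production system would use a trained model.
NEGATIVE = {"broken", "late", "refund", "cancel", "disappointed", "frustrated"}
POSITIVE = {"love", "great", "perfect", "recommend", "fast"}
INTENT_PATTERNS = {
    "churn_risk": re.compile(r"\b(cancel|close my account|switch(ing)? to)\b", re.I),
    "purchase_intent": re.compile(r"\b(buy|order|in stock|price)\b", re.I),
}

def extract_features(customer_id, text):
    """Turn one free-text interaction into a flat row a marketing tool can ingest."""
    tokens = re.findall(r"[a-z']+", text.lower())
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return {
        "customer_id": customer_id,
        # Ranges from -1.0 (all negative hits) to 1.0 (all positive hits); 0.0 if none.
        "sentiment": (pos - neg) / max(pos + neg, 1),
        "intents": sorted(name for name, pat in INTENT_PATTERNS.items() if pat.search(text)),
        "word_count": len(tokens),
    }

row = extract_features("c-42", "The package arrived late and broken. I want to cancel my subscription.")
```

A keyword approach like this is brittle: it misses sarcasm, negation, and context. That is the gap model-based extraction is meant to close.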

The untapped value in unstructured customer data

There’s significant upside to investing in streaming data pipelines and a data lakehouse architecture that can process both structured and unstructured data.

According to Deloitte, companies that use first-party data:

- Get 8X higher ROAS from targeted ads

- Drive 10% or higher lift in sales

- Are 44% more likely to beat revenue goals

When combined with zero-party data, these results can be sustained and amplified in the data protection era, thanks to new-generation AI marketing tools that can process both structured preferences and unstructured behavioral signals.

Customer service transcripts reveal frustration patterns and feature requests that structured surveys miss. Social media interactions capture authentic brand sentiment and community conversations. Voice recordings contain emotional nuance, including tone, pace, and hesitation that text-based data loses completely. Product reviews include detailed use cases, comparison criteria, and decision-making factors. Chat logs document real-time purchase intent and consideration processes. Email conversations show relationship progression and engagement patterns over time.

The challenge has been extracting actionable insights from this messy, unformatted information at scale. Traditional analytics tools struggle with the variability and context-dependent nature of unstructured data, leaving valuable customer intelligence trapped in isolated systems.

Multimodal AI: The technology that unifies zero-party and unstructured data

Combined zero-party and first-party data offer richer context. One is volunteered, the other observed. But neither lives in the clean, structured rows that most MarTech tools can process effectively. Multimodal AI solves this integration challenge.

Multimodal AI models can process and synthesize different modalities, including text, audio, video, images, and all connected metadata, within the same model. Single-modality generative AI models are built to excel at one type of data, such as text-only language models or image generators like Stable Diffusion. Multimodal models, by contrast, aren’t limited to a single input-output pairing.

They can be fed different data modalities and prompted to produce output in different formats, based on a comprehensive analysis across multiple signal sources.

The industry is moving rapidly in this direction. Gartner predicts that 40% of gen AI solutions will be multimodal by 2027, up from just 1% in 2023.

For marketing, the major advantage of multimodal AI is comprehensive customer behavior analysis. These models don’t treat customer preferences, reviews, voice notes, and chat transcripts as separate channels where context is lost between interactions. Instead, they read them as one continuous conversation with cross-modal validation, reducing ambiguities and knowledge gaps.
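Part of reading interactions as “one continuous conversation” is a data-modeling step that happens before any model is involved: placing every touchpoint for a customer on a single timeline. The sketch assumes each interaction has already been reduced to a short `summary` by a per-modality model, which is a hypothetical field. It only assembles the context. It does not perform cross-modal validation itself.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import groupby

@dataclass
class Interaction:
    customer_id: str
    timestamp: datetime
    channel: str   # e.g. "chat", "call", "review", "survey"
    modality: str  # e.g. "text", "audio", "image"
    summary: str   # hypothetical per-modality model output (transcript, caption, answer)

def unified_timelines(*channels):
    """Merge interactions from separate channels into one chronological timeline per customer."""
    merged = sorted((item for channel in channels for item in channel),
                    key=lambda item: (item.customer_id, item.timestamp))
    # groupby needs input sorted by the grouping key, which the sort above guarantees.
    return {cid: list(items)
            for cid, items in groupby(merged, key=lambda item: item.customer_id)}

chat = [Interaction("c-7", datetime(2025, 3, 2, 10, 5), "chat", "text", "asks about return policy")]
calls = [Interaction("c-7", datetime(2025, 3, 4, 16, 0), "call", "audio", "frustrated tone, refund request")]
reviews = [
    Interaction("c-7", datetime(2025, 2, 20, 9, 0), "review", "text", "5 stars, loves the fit"),
    Interaction("c-9", datetime(2025, 3, 1, 12, 0), "review", "image", "photo of damaged box"),
]

timelines = unified_timelines(chat, calls, reviews)
for event in timelines["c-7"]:
    print(event.timestamp.date(), event.channel, event.summary)
```

Viewed in order, the shift from a glowing review to a frustrated refund call becomes visible. That context is lost when each channel is analyzed separately.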

Multimodal AI bridges declared and behavioral intent

Multimodal AI creates a unified view of what customers say they want and how they actually behave and feel. This lets marketing teams act on declared and behavioral intent together, powering more human-like, context-aware engagement.

Multimodal AI marketing use cases

- Unified customer identities, based on a mix of quantitative and qualitative data

- AI-powered personalization, driven by past customer support interactions or survey data

- Cross-channel journey mapping by linking chat logs, app interactions, and purchase history

- Early churn prediction based on sentiment changes in emails, call transcripts, and product reviews

- Advanced sentiment analysis, based on social media posts, video reviews, and other UGC

- Ad bidding strategy or creative selection, based on multimodal behavioral analytics

- Visual trend mapping from tagged images, reels, and unstructured community content
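Take early churn prediction from this list. It can start with something as simple as comparing a customer’s recent sentiment with their own baseline. The sketch assumes sentiment scores between -1 and 1 have already been extracted from emails, call transcripts, and reviews. The window size and drop threshold are illustrative defaults, not recommended values.

```python
from statistics import mean

def churn_alert(sentiments, window=3, drop_threshold=0.4):
    """Flag a customer whose recent sentiment has fallen well below their own baseline.

    sentiments: chronological scores in [-1, 1] from emails, call transcripts, reviews, etc.
    window: how many of the most recent scores count as "recent" (assumed default)
    drop_threshold: minimum fall from baseline that triggers an alert (assumed default)
    """
    if len(sentiments) < 2 * window:
        return False  # not enough history to establish a baseline
    baseline = mean(sentiments[:-window])
    recent = mean(sentiments[-window:])
    return baseline - recent >= drop_threshold
```

Comparing each customer with their own history, rather than with a global average, avoids flagging customers who are simply terse by nature.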

In short, multimodal AI unlocks real-time, nuance-driven personalization. By combining structured and unstructured signals, marketers can stop guessing and start creating campaigns that reflect what customers actually think, feel, and say.

Implementation approach: Building the technical foundation for multimodal AI deployment

Serving even conventional machine learning models requires a certain degree of data management and MLOps maturity, and multimodal AI raises the bar. You’ll need to upgrade your current infrastructure to support faster data processing, seamless data sync, and effective multi-GPU orchestration. On top of that, there are cultural shifts to consider.

Data architecture requirements

To run multimodal AI in production, you need the right data infrastructure behind the scenes. Legacy data processing infrastructure based on warehouses and rigid ETL pipelines won’t suffice. You’ll need storage infrastructure with flexible schemas to host video, voice, and clickstreams alongside structured tabular data.

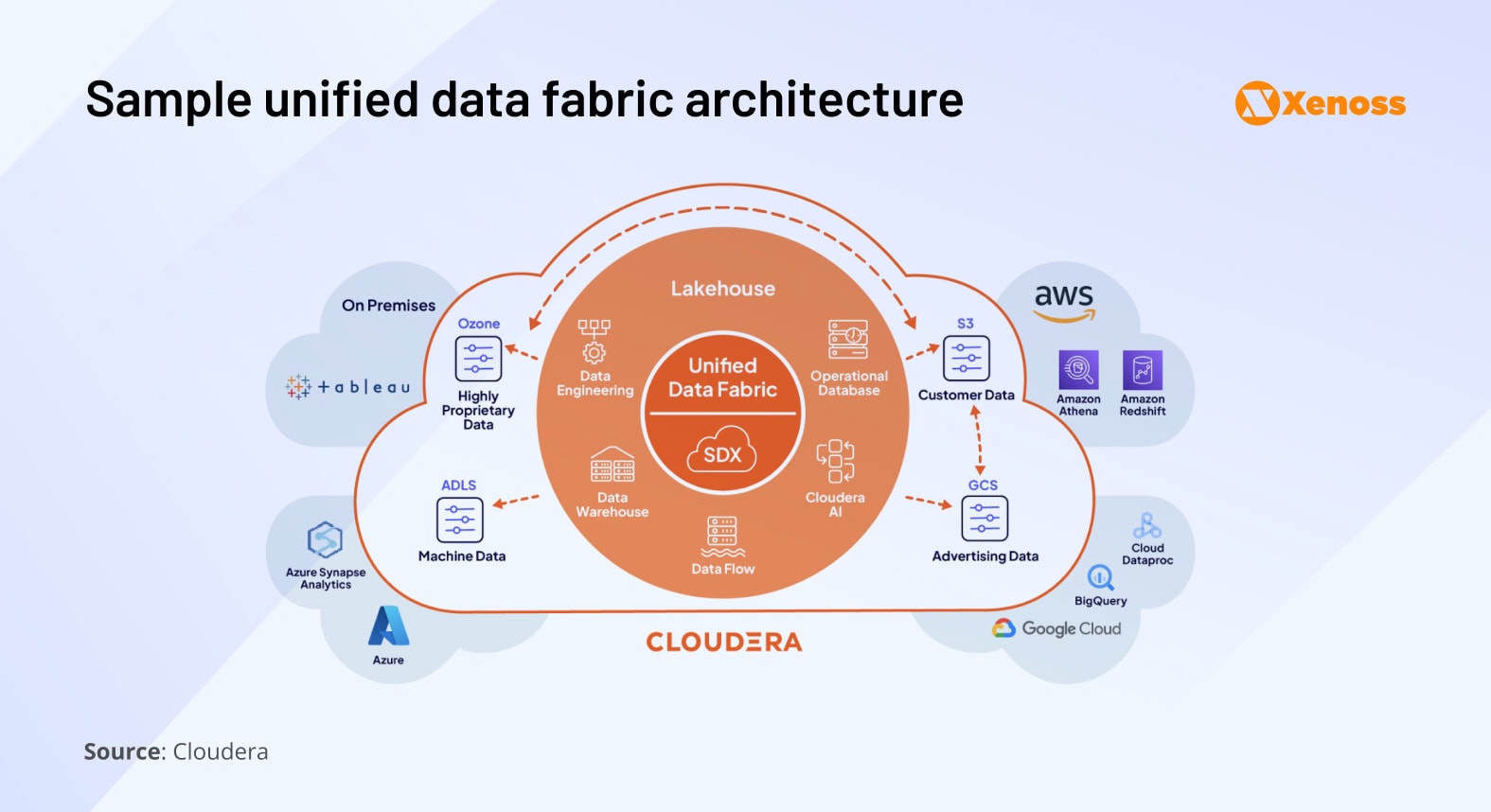

The optimal solution is a data lakehouse architecture. This represents an open data management approach that combines the flexibility and scalability of data lakes with the reliability and governance controls of data warehouses. With a data lakehouse, you can run multimodal AI, predictive analytics, and other AI models on all data types, minimizing information asymmetry and blind spots.

Already, 55% of enterprises run more than half of their analytics on a data lakehouse, and 67% expect to reach the same benchmark within three years as part of their AI adoption strategies. The advantages include greater cost-efficiency, unified data access, and improved ease of use.

The next critical element is a unified data fabric, which connects and governs all your data sources across hybrid and multi-cloud environments. A data fabric links and routes data from every connected source to the tools and models that consume it. It also helps you build unified data catalogs for discovering available data, implement and enforce data privacy, security, and governance policies, and keep data replicated consistently across systems.

Speed matters significantly for multimodal AI applications. These systems often need to make decisions in a fraction of a second to optimize the customer experience. A customer’s rating of a recent purchase or report of an issue must be processed and acted upon immediately. Real-time data processing pipelines, built with tools like Apache Kafka or Apache Flink, enable quick capture of event-driven user signals and data synchronization across services.

Together, these building blocks enable data synchronization, governance, and interoperability at scale, which are necessary for effective multimodal AI deployment.

Cloud infrastructure considerations

Standalone natural language processing or computer vision models already require substantial cloud resources. Multimodal AI amplifies these requirements significantly. Many models require at least 16GB of VRAM for inference operations. You’ll need powerful multi-machine GPU clusters to train and run the models effectively.
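A back-of-the-envelope calculation shows why a 16GB floor is common. Holding model weights alone takes roughly the parameter count times the bytes per parameter, so a 7-billion-parameter model in 16-bit precision needs about 13 GiB before any activations or cached context. The sketch below encodes that arithmetic. The 1.2 overhead multiplier is a rough assumption, and for multimodal models the parameter count should include the vision and audio encoders.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}

def weights_memory_gib(num_params, dtype="fp16"):
    """Memory needed just to hold the model weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3

def fits_on_gpu(num_params, vram_gib, dtype="fp16", overhead=1.2):
    """Rough check of whether a model fits in a single GPU's memory for inference.

    overhead is an assumed multiplier for activations, KV cache, and runtime buffers;
    actual usage depends on batch size, sequence length, and image/audio resolution.
    """
    return weights_memory_gib(num_params, dtype) * overhead <= vram_gib

print(round(weights_memory_gib(7e9), 1))  # 13.0
print(fits_on_gpu(7e9, 16))               # True
print(fits_on_gpu(7e9, 16, "fp32"))       # False
```

The same arithmetic explains why larger multimodal models quickly spill across several GPUs, or even several machines, once their size moves past the tens of billions of parameters.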

Cloud service providers have already expanded their support for multimodal AI deployments. Google Cloud Platform offers access to NVIDIA B200 Tensor Core GPUs and its own Ironwood TPUs, plus managed services like Google Cloud Batch, Vertex AI Training, and GKE Autopilot that minimize the complexity of provisioning and orchestrating multi-GPU environments.

AWS offers GPU instances with up to 8 NVIDIA GPUs each, spanning the latest B200 (Blackwell) and H100 (Hopper) generations as well as the A100 and older V100. For teams using AWS’s own Trainium and Inferentia accelerators, the AWS Neuron SDK helps optimize models for faster training and lower inference latency.

Beyond raw computational power, multimodal AI requires seamless data synchronization. If image preprocessing or text embedding generation becomes a bottleneck, the model won’t be able to produce quality outputs. This creates a risk of modality incompleteness, where one or more modalities are missing during training or deployment, leading to skewed results.

To avoid that, you’ll need to apply rigorous data pipeline optimization to keep data in sync:

- Enforce consistent timestamps across all data streams to align events from text, audio, and video inputs

- Embed metadata (e.g., session IDs, timestamps, source tags) directly into each data object to keep modalities contextually linked rather than siloed

- Use Apache Flink or Kafka Streams for real-time event correlation and time-windowed joins

- Implement event versioning and schema enforcement to ensure compatibility between modalities

- Apply buffer windows or watermarking techniques to account for latency variations across modalities and prevent premature processing

- Configure observability tools to monitor ingestion lag and data skew for early issue detection
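Several of these practices appear together in the small in-memory sketch below: shared session IDs and timestamps, time-windowed joins, watermarking, and explicit handling of missing modalities. It mimics the idea behind windowed joins in Apache Flink or Kafka Streams but does not use their APIs. The `WindowedJoiner` class, the window and lateness values, and the expected modality set are assumptions for illustration.

```python
from collections import defaultdict

EXPECTED_MODALITIES = {"text", "audio", "video"}  # assumption: what a complete session contains

class WindowedJoiner:
    """In-memory sketch of a watermark-driven, tumbling-window join keyed by session_id.

    Illustrates the concept behind Flink / Kafka Streams windowed joins; not their API.
    """

    def __init__(self, window_s, lateness_s):
        self.window_s = window_s      # width of each tumbling window, in seconds
        self.lateness_s = lateness_s  # how far the watermark trails the newest timestamp
        self.max_ts = float("-inf")
        self.open = defaultdict(dict)  # (session_id, window_start) -> {modality: payload}
        self.late = []                 # events that arrived after their window was emitted

    def watermark(self):
        # Events older than this are assumed to have arrived already.
        return self.max_ts - self.lateness_s

    def add(self, session_id, modality, ts, payload):
        start = ts - ts % self.window_s
        if start + self.window_s <= self.watermark():
            self.late.append((session_id, modality, ts))  # window already closed and emitted
            return []
        self.open[(session_id, start)][modality] = payload
        self.max_ts = max(self.max_ts, ts)
        return self._close_ready_windows()

    def _close_ready_windows(self):
        wm = self.watermark()
        ready = [key for key in self.open if key[1] + self.window_s <= wm]
        results = []
        for session_id, start in sorted(ready, key=lambda k: (k[1], k[0])):
            parts = self.open.pop((session_id, start))
            results.append({
                "session_id": session_id,
                "window_start": start,
                "parts": parts,
                # Surface modality incompleteness instead of silently emitting partial data.
                "missing": sorted(EXPECTED_MODALITIES - set(parts)),
            })
        return results

joiner = WindowedJoiner(window_s=10, lateness_s=5)
joiner.add("s1", "text", 1, "transcript chunk")
joiner.add("s1", "audio", 3, "voice clip")
joiner.add("s2", "text", 12, "chat message")
joiner.add("s1", "video", 8, "video frame")
emitted = joiner.add("s2", "audio", 16, "voice clip")  # watermark passes s1's window
```

Waiting for the watermark before emitting lets the video event at `t=8` join its session even though it arrived after a later event. Reporting `missing` modalities explicitly lets downstream models decide how to treat incomplete sessions.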

Lastly, it helps to keep human-in-the-loop validation, especially during the training stage, to catch early failures and build in reliability before deployment.
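One lightweight way to build in that validation is to route low-confidence model outputs to a human review queue instead of sending them straight into campaigns. The `ReviewRouter` class and its 0.8 threshold below are hypothetical. In practice, the threshold would be tuned on your own validation data.

```python
from dataclasses import dataclass, field

@dataclass
class ReviewRouter:
    """Send low-confidence model outputs to people instead of straight into campaigns."""
    threshold: float = 0.8  # assumed cut-off; tune on your own validation data
    auto_approved: list = field(default_factory=list)
    review_queue: list = field(default_factory=list)

    def route(self, item_id, label, confidence):
        """Return True if the output was auto-approved, False if it needs human review."""
        target = self.auto_approved if confidence >= self.threshold else self.review_queue
        target.append((item_id, label, confidence))
        return target is self.auto_approved

    def review_rate(self):
        """Share of outputs that needed a human; 0.0 before anything is routed."""
        total = len(self.auto_approved) + len(self.review_queue)
        return len(self.review_queue) / total if total else 0.0

router = ReviewRouter()
router.route("msg-1", "churn_risk", 0.95)
router.route("msg-2", "positive", 0.55)
```

Tracking the review rate over time gives a simple readiness signal. A rate that stays high suggests the model needs more work before it runs unattended.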

Organizational readiness

Deploying multimodal AI is not only about the technology, but also about the people.

Talent access is a major constraint. The demand for AI experts fluent in multimodal data is outstripping the supply. Over 85% of companies struggle to hire AI developers, with the average time-to-fill for open roles reaching 142 days in 2024.

Even when you partner with experts in generative AI, internal organizational issues must be addressed. Beyond connecting systems, you’ll need to bridge collaboration gaps between teams, which are often the root cause of data silos. You’ll need to build a culture of proactive data sharing and clear data ownership, both of which require new habits of data democratization and data stewardship. Although challenging to implement, data stewardship can help both new and legacy businesses create stronger competitive advantages.

Unilever aims to use AI models to operationalize over a hundred years of proprietary research, including historical formulations, trials, and consumer insights. As Alberto Prado, a global Head of Digital & Partnerships at Unilever, explained: “A century of skincare expertise is now structured, searchable, and ready to be applied. We are using AI to connect the dots across decades of research, accelerating discovery in new materials while simultaneously optimising formulations for specific needs, like different skin types.”

The better news is that even newer entrants can achieve the same advantage by applying multimodal AI to previously untapped data: IoT sensor readings from oil and gas production monitoring systems, geospatial insights from drone mapping surveys, satellite imagery in precision agriculture, or behavioral data from self-service retail kiosks. Cordial, for instance, recently launched a multimodal AI model for optimizing retail brand messaging across brand creative, illustrations, photography, and text. Early customers reported a 2X to 3.2X increase in revenue.

In effect, multimodal AI can operationalize data sources once considered too messy or unstructured to use at scale. But to get there, you’ll need to align people, processes, and platforms around a common vision of data stewardship.

Building a business case for multimodal AI adoption in marketing

Multimodal AI will be a turning point for marketing personalization. It can help businesses recover value from the more than $3 trillion lost to data silos by unlocking a new layer of contextual awareness, precision, and emotional intelligence, driven by a combination of first- and zero-party data.

Getting there won’t be simple. Technical complexity, talent shortages, and cross-team collaboration gaps remain major roadblocks. Privacy and security add another layer of friction. Despite the hype, only 20% to 25% of organizations are actively using custom AI models in production today.

That’s why a strong business case matters. You’ll need to weigh the cost of data infrastructure optimization and model development against possible gains in conversion, retention, and operational efficiency. It also pays to consider wider, harder-to-quantify benefits, like shorter feedback loops, faster campaign pivots, and a deeper understanding of your customers. Most importantly, factor in risk management: by activating zero-party data, you reduce both your compliance burden and your reliance on third-party cookies.
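For the quantifiable part of the business case, a simple model can compare upfront and running costs with estimated monthly gains. The sketch below is illustrative only. Every input is a placeholder, and the model ignores discounting and the harder-to-quantify benefits mentioned above.

```python
def business_case(upfront_cost, monthly_run_cost, monthly_gain, months=24):
    """Simple ROI and payback estimate for a multimodal AI initiative.

    upfront_cost: one-off spend on infrastructure optimization and model development
    monthly_run_cost: ongoing cloud, tooling, and maintenance cost
    monthly_gain: estimated incremental margin from conversion, retention, and efficiency
    """
    total_cost = upfront_cost + monthly_run_cost * months
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    total_gain = monthly_gain * months
    roi = (total_gain - total_cost) / total_cost
    net_monthly = monthly_gain - monthly_run_cost
    # If gains never exceed running costs, the upfront investment is never paid back.
    payback_months = upfront_cost / net_monthly if net_monthly > 0 else float("inf")
    return {"roi": roi, "payback_months": payback_months}

# Placeholder figures, not benchmarks.
case = business_case(300_000, 20_000, 45_000, months=24)
# roi ≈ 0.385, payback_months = 12.0
```

Running the same function under pessimistic, expected, and optimistic gain estimates is a quick way to show stakeholders how sensitive the case is to its assumptions.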

At Xenoss, we help organizations determine the optimal path for multimodal AI deployment. Our team can advise on infrastructure configuration, reducing cloud costs without compromising model performance. Our goal is to help you build both the business case and the technical system for AI that drives measurable ROI. Let’s discuss how multimodal AI can transform your marketing capabilities.