Legacy infrastructure and incompatible field technologies are major drags on production efficiency. Across much of the sector, operations still hinge on siloed systems built in different decades for different purposes, rarely upgraded, and almost never designed for interoperability.

The consequences aren’t abstract. At Pemex, decades of entrenched inefficiencies turned the state-owned giant into one of the world’s most indebted oil companies. Production decreased. Safety eroded. Environmental lapses became routine. And yet, Pemex is not an outlier. Every year, an estimated 177 exajoules of energy, equivalent to nearly $467 billion, are lost to the frictions and blind spots of fossil fuel production and delivery.

These operational breakdowns aren’t just management-related — they’re structural. The rift starts deep in the tech stack, where outdated systems, disconnected edge devices, and siloed telemetry make it nearly impossible to form a cohesive picture of what’s happening across the value chain.

Why oil and gas data fragmentation happens

- Disparate SCADA systems and vendor-specific PLCs. Operators often deploy multiple SCADA platforms across sites, each with proprietary protocols and integration limitations. About 62% of companies name “legacy system integration” as a major operational barrier, with integration costs consuming up to 40% of SCADA deployment budgets.

- Isolated field devices with no centralized access. One offshore platform can monitor over 50,000 I/O points, and a subsea well can track up to 200 sensors to measure pressure, temperature, flow rates, and valve positions. But this data often remains stored locally without upstream connectivity.

- Lack of integration between upstream, midstream, and downstream operations. Each operational layer tends to run its own operational technology stack, creating blind spots in handoff zones. This is particularly acute in LNG supply chains, where production, liquefaction, transport, and regasification often live in separate data environments.

What data fragmentation leads to:

- High energy losses. After extraction, hydrocarbons go through multiple stages, from processing into fuels and electricity to powering devices that deliver heating, mobility, and other end services. Inefficiencies at each step add up to exajoule-scale energy waste and, in turn, lower operating profits.

- Slow incident response. Without a unified view, control room teams operate reactively, and mostly when the incident has already caused damage. For instance, Sunoco LP became aware of a leaking pipe only after the compliance investigation was initiated by local residents.

- Unplanned downtime. Limited access to upstream data delays corrective maintenance, leading to longer downtime. Unplanned downtime costs offshore platforms an average of $38 million annually, with some cases reaching $88 million in losses.

- Manual data consolidation. Engineers often rely on Excel exports and handheld logs to bridge systems. Such data collection and reconciliation processes are time-intensive and error-prone, leading to delayed responses and subpar decision-making.

- Higher digital infrastructure TCO. Duplicate data increases cloud infrastructure costs. The average SCADA platform alone can generate up to 2 TB of data from one offshore platform. If that data gets replicated across multiple hot-tier cloud storage locations, that’s about $520-$600 in wasted costs per day, or over $180K annually.
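The annual figure in the last point follows from the daily estimate by simple multiplication. Here is a quick check, assuming the waste accrues every day of a 365-day year (the function and constant names are illustrative only):

```python
# Annualize the daily wasted-storage estimate quoted in the text.
# Assumption: the cost accrues at a constant rate, 365 days a year.
DAYS_PER_YEAR = 365


def annualize(daily_cost_usd: float, days: int = DAYS_PER_YEAR) -> float:
    """Return the yearly cost for a constant daily cost."""
    return daily_cost_usd * days


low, high = annualize(520), annualize(600)
print(f"${low:,.0f} to ${high:,.0f} per year")
```

Under that assumption, the range works out to roughly $190K to $219K per year, which is consistent with the "over $180K" figure.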

The goal of digital transformation in the oil and gas industry: Unified, real-time production monitoring across assets

If there is one digital transformation imperative for oil and gas executives, it is this: consolidate the data.

In an industry where downtime costs millions and safety lapses carry steep, irreversible consequences, the continued reliance on fragmented pipeline monitoring systems and lagging offshore telemetry is less a risk than a liability.

Modernizing production monitoring brings more agile operations, better compliance, and stronger bottom-line performance. Real-time, IoT-driven visibility across the asset chain is what makes that possible.

At Saudi Aramco, engineers no longer wait for wells to signal trouble. A network of real-time sensors, embedded directly into wellheads, now tracks pressure, temperature, and flow rates across sprawling fields with quiet precision. This constant stream of data feeds into an oil and gas digital twin, a virtual replica of physical operations, that allows engineers to monitor system behavior, catch anomalies before they escalate, and fine-tune production strategies without ever setting foot on site.

France’s TotalEnergies, in turn, created the SmartRoom Real Time Support Center (RTSC) to monitor remote drilling operations across the globe. The system aggregates data in real time from hundreds of IoT oil and gas sensors installed on drilling rigs. Thanks to this data, operators can better deal with complex cases like ultra-deep well drilling, high-pressure/high-temperature conditions, and deviated or horizontal boreholes. The center enhances operational safety, ensures faster anomaly detection, and supports drilling teams with predictive insights powered by AI tools like DrillX.

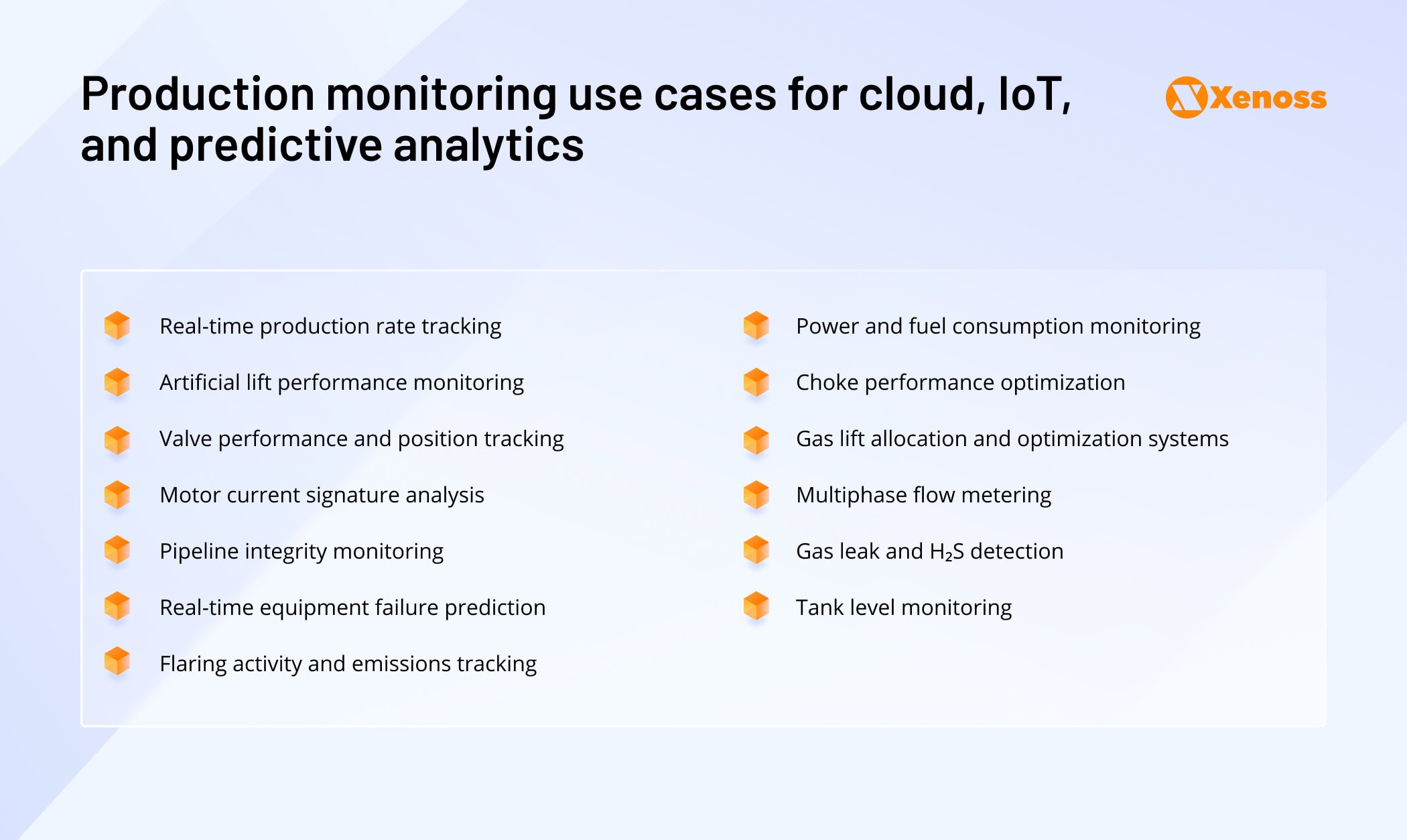

The combination of cloud, IoT, and predictive analytics in the oil and gas industry also enables a host of other production monitoring scenarios.

Components of a scalable IoT data management infrastructure for production monitoring

Digital oil and gas solutions can unlock major efficiency, safety, and performance gains, but only with the right data infrastructure in place. To turn raw sensor streams into actionable insights, your IoT architecture needs to be built for speed, scale, and integration.

Here are the core components modern production monitoring systems should include.

Intelligent edge data ingestion

To achieve real-time production visibility, oil and gas companies must start at the edge, where the data is generated. Traditional polling-based systems collect data from field devices at fixed intervals, often every 5-10 minutes.

But in dynamic environments like wellheads, pipelines, or refineries, that delay is a missed opportunity. Equipment anomalies, pressure fluctuations, or safety-critical changes can go unnoticed for minutes (or hours) simply because the data didn’t arrive fast enough.

Modern edge ingestion shifts the paradigm from passive polling to event-driven streaming. Rather than pulling all data at set times, edge systems push only what’s necessary, when it’s necessary. Lightweight protocols like MQTT and OPC UA, built for low-bandwidth environments, real-time responsiveness, and global asset connectivity, enable this shift. MQTT alone can reduce network load by up to 90% compared to legacy protocols like Modbus.

This unlocks:

- Faster data delivery, even across satellite links or rugged terrains

- Continuous monitoring without overwhelming SCADA systems

- Event-triggered transmission that trims duplication and cloud costs
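One common way to "push only what’s necessary" is report-by-exception: a reading is forwarded only when it moves outside a deadband around the last value sent. The sketch below is a minimal illustration, not a reference implementation. The class name, topic string, and deadband value are assumptions, and the `publish` callback stands in for whatever transport you use (for example, an MQTT client’s publish call).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class DeadbandPublisher:
    """Forward a reading only if it differs from the last sent value by more than `deadband`."""
    publish: Callable[[str, float], None]  # transport callback (hypothetical)
    deadband: float
    last_sent: Dict[str, float] = field(default_factory=dict)

    def on_reading(self, topic: str, value: float) -> bool:
        """Return True if the reading was published, False if it was suppressed."""
        last = self.last_sent.get(topic)
        if last is None or abs(value - last) > self.deadband:
            self.publish(topic, value)
            self.last_sent[topic] = value
            return True
        return False


# Demo: collect "published" messages in a list instead of sending them over a network.
sent: List[Tuple[str, float]] = []
publisher = DeadbandPublisher(publish=lambda t, v: sent.append((t, v)), deadband=0.5)

for psi in [100.0, 100.1, 100.2, 103.0, 103.1, 99.0]:
    publisher.on_reading("field-a/well-7/pressure_psi", psi)

print(len(sent), "of 6 readings published")
```

In this toy run, only the first reading and the two significant pressure changes are forwarded. The small fluctuations never leave the device.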

One oil and gas company that recently adopted the MQTT protocol for data transmission to its SCADA system reduced data latency from 5-10 minutes to several seconds. Fast data transmission enables many predictive analytics scenarios in oil and gas, such as predicting pump failures, well decline rates, or equipment corrosion risk.

When lightweight messaging is combined with smart edge gateways, you gain even more operational advantages.

Smart edge gateways like HPE Edgeline EL300, Siemens Industrial Edge, or the Advantech UNO series act as local processing units, capable of transforming raw sensor readings into structured, contextualized, and prioritized data streams. Such gateways can:

- Translate multiple industrial protocols (e.g., Modbus, OPC UA, HART) into a unified format, such as JSON messages published over MQTT

- Filter out irrelevant data, such as flatlining signals or background noise, to reduce network bandwidth

- Enrich data locally by applying rules, tagging metadata, or performing lightweight analytics (e.g., threshold-based alerts or first-level anomaly detection)

- Buffer and store data during connectivity outages, then sync once reconnected to minimize data loss

- Encrypt data at the edge to ensure secure transmission to cloud or SCADA systems
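To make the translate, filter, and enrich steps concrete, here is a simplified sketch of what a gateway-side normalizer might do. Everything named here is hypothetical: the tag registry, the asset names, the limits, and the JSON field names are placeholders, and a real gateway would read values through actual protocol drivers rather than function arguments.

```python
import json
from typing import Dict, Optional, Tuple

# Hypothetical tag registry: maps (protocol, source address) to asset metadata.
TAG_REGISTRY = {
    ("modbus", "40001"): {"asset": "pump-12", "metric": "discharge_pressure",
                          "unit": "bar", "high_limit": 16.0},
    ("hart", "TT-101"): {"asset": "separator-3", "metric": "temperature",
                         "unit": "degC", "high_limit": 90.0},
}

# Last value forwarded per tag, used to drop flatlining signals.
_last_value: Dict[Tuple[str, str], float] = {}


def normalize(protocol: str, address: str, value: float, ts: float) -> Optional[str]:
    """Return a unified JSON message, or None if the reading should be dropped."""
    key = (protocol, address)
    meta = TAG_REGISTRY.get(key)
    if meta is None:
        return None  # unknown tag: nothing to contextualize
    if _last_value.get(key) == value:
        return None  # unchanged since the last forwarded value: filter it out
    _last_value[key] = value
    message = {
        "asset": meta["asset"],
        "metric": meta["metric"],
        "unit": meta["unit"],
        "value": value,
        "ts": ts,
        "source_protocol": protocol,
        "alert": value > meta["high_limit"],  # simple threshold-based alert flag
    }
    return json.dumps(message, sort_keys=True)


print(normalize("modbus", "40001", 17.2, 1700000000.0))  # enriched message, alert set
print(normalize("modbus", "40001", 17.2, 1700000060.0))  # None: value has not changed
```

The point of the design is that downstream consumers see one message shape regardless of which field protocol produced the reading.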

By deploying smart gateways, you can shift from “send everything, sort it out later” to a more intelligent model where data is curated and contextualized before it ever leaves the field. This not only reduces cloud ingestion costs and SCADA overload but also improves the quality of insights used in real-time decision-making.
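The buffering capability mentioned in the list above is often called store-and-forward. A minimal sketch, assuming a `send` callback that returns `True` on successful delivery (the class, the callback contract, and the buffer size are illustrative assumptions, not any vendor’s API):

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Queue messages locally and deliver them in order once the uplink works."""

    def __init__(self, send: Callable[[str], bool], max_buffered: int = 10_000) -> None:
        self._send = send
        # Bounded buffer: when full, the oldest message is discarded first.
        self._buffer: Deque[str] = deque(maxlen=max_buffered)

    def submit(self, message: str) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Try to deliver buffered messages oldest-first; return how many were sent."""
        delivered_count = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # link is down: keep the message and retry later
            self._buffer.popleft()
            delivered_count += 1
        return delivered_count

    @property
    def pending(self) -> int:
        return len(self._buffer)


# Demo with a simulated uplink that starts offline.
link = {"up": False}
delivered: List[str] = []


def uplink(message: str) -> bool:
    if link["up"]:
        delivered.append(message)
    return link["up"]


gateway = StoreAndForward(send=uplink)
gateway.submit("reading-1")
gateway.submit("reading-2")   # both stay buffered while the link is down
link["up"] = True
gateway.submit("reading-3")   # reconnect: the backlog drains in original order
print(delivered, gateway.pending)
```

A production gateway would typically persist the buffer to disk so that a power cycle during an outage does not lose data. This sketch keeps it in memory for brevity.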

Real-time data pipelines and event-driven architecture

Most data pipelines rely on a batch process, collecting information at set intervals, processing it in bulk, and delivering it with a delay. In oil & gas, that delay could be minutes, hours, or even days, which diminishes visibility.

Real-time streaming data pipelines flip that model. They continuously ingest, process, and deliver data the moment it’s generated, enabling immediate action when something changes in the field.

For production monitoring, this shift is game-changing. Instead of searching through historical records, operators can detect and decipher performance anomalies as they happen using aggregated data from industrial IoT systems. This leads to faster decision-making, shorter mean time to resolution (MTTR), and stronger safety and compliance.
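As a rough illustration of detecting anomalies "as they happen," the sketch below flags readings that deviate sharply from a rolling baseline while consuming the stream one value at a time. The window size, the z-score limit, and the sample flow values are arbitrary assumptions chosen for the example. Real deployments would tune them per signal or use a more robust model.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(readings: Iterable[float], window: int = 5,
                     z_limit: float = 3.0) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings far outside the rolling baseline."""
    recent: Deque[float] = deque(maxlen=window)
    for index, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # sigma == 0 means a perfectly flat baseline; skip scoring in that case.
            if sigma > 0 and abs(value - mu) / sigma > z_limit:
                yield index, value
                continue  # keep the outlier out of the baseline
        recent.append(value)


flow_m3_h = [50.1, 49.9, 50.0, 50.2, 49.8, 50.1, 62.0, 50.0]
print(list(detect_anomalies(flow_m3_h)))
```

Because the function is a generator, it can sit directly on top of a live feed and emit an anomaly the moment the offending reading arrives, rather than after a batch completes.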

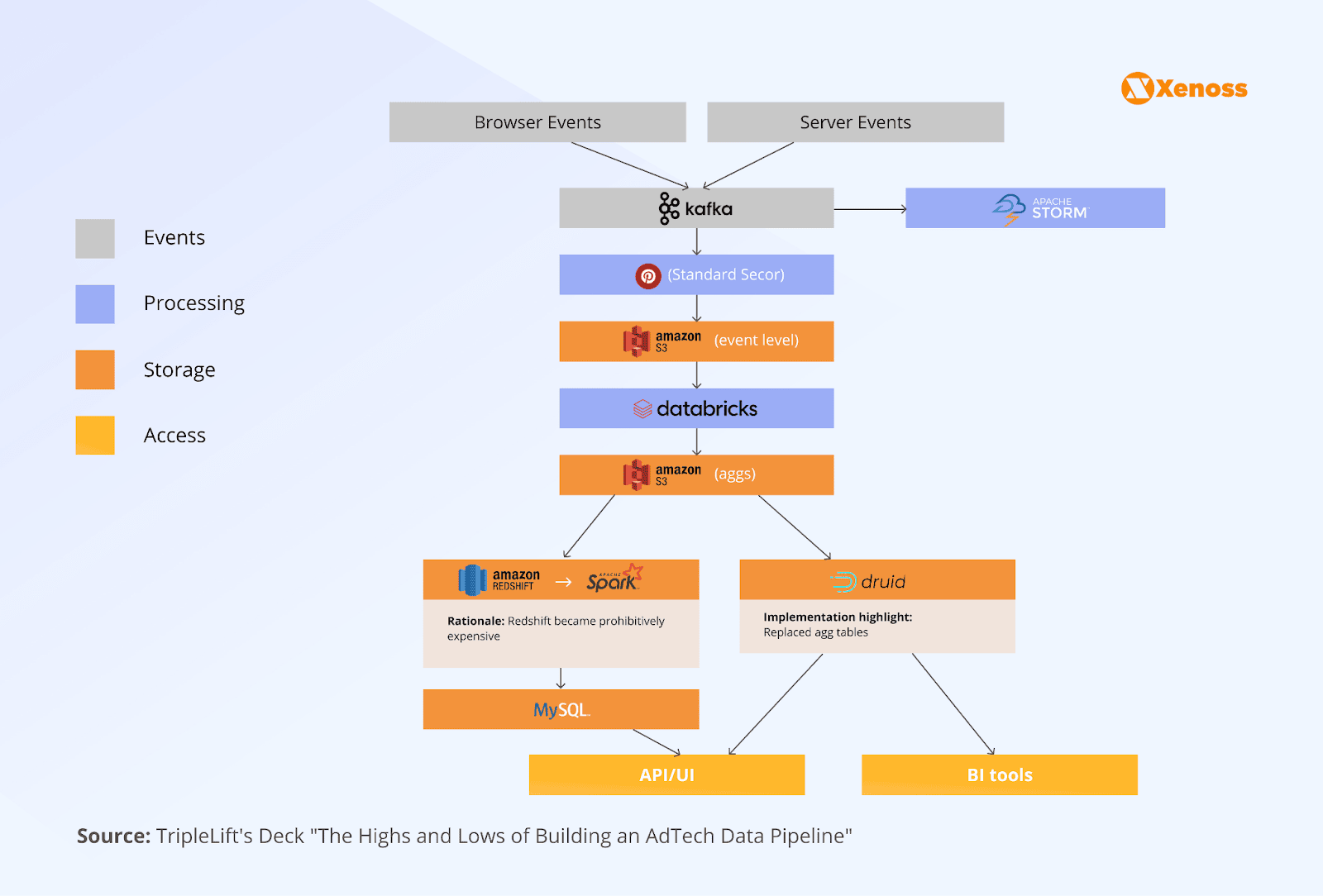

To enable this, oil & gas companies need streaming data infrastructure. Such data pipelines originally scaled in high-volume industries like AdTech and can be effectively adapted for industrial telemetry.

- Event stream platforms like Apache Kafka, Amazon Kinesis, and Azure Event Hubs enable high-throughput, low-latency data ingestion from edge devices.

- Stream processors like Apache Flink, Apache Spark Streaming, and AWS Lambda help filter, transform, and analyze data in motion without storing it first.

- Scalable data storage like Amazon S3 + Amazon Timestream or Databricks Lakehouse can effectively host time-series data, while supporting low-latency queries from BI tools or custom machine learning models.

Combined, these elements create an event-driven architecture: a system design where real-world changes automatically trigger downstream actions. If a vibration sensor reading crosses a defined threshold, for example, the system immediately triggers a pipeline to log the event, alert the operator, and kick off an inspection workflow, with no human needed to pull the data or start the process.
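The vibration example can be sketched with a tiny in-process event bus. This is a toy stand-in for a real event streaming platform. The event name, the threshold value, and the three handlers are assumptions made up for illustration.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Event = Dict[str, object]
Handler = Callable[[Event], None]


class EventBus:
    """Minimal publish/subscribe dispatcher."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def emit(self, event_type: str, event: Event) -> None:
        for handler in self._handlers[event_type]:
            handler(event)


VIBRATION_LIMIT_MM_S = 7.1  # hypothetical threshold for this example

actions: List[str] = []
bus = EventBus()
# Three independent consumers react to the same event.
bus.subscribe("vibration.high", lambda e: actions.append(f"log:{e['asset']}"))
bus.subscribe("vibration.high", lambda e: actions.append(f"alert:{e['asset']}"))
bus.subscribe("vibration.high", lambda e: actions.append(f"inspect:{e['asset']}"))


def on_vibration(asset: str, mm_per_s: float) -> None:
    """Emit an event only when the reading crosses the threshold."""
    if mm_per_s > VIBRATION_LIMIT_MM_S:
        bus.emit("vibration.high", {"asset": asset, "value": mm_per_s})


on_vibration("compressor-4", 3.2)   # below the limit: nothing happens
on_vibration("compressor-4", 9.8)   # above the limit: all three handlers fire
print(actions)
```

The producer knows nothing about who consumes the event, so new reactions (say, a work-order integration) can be added by subscribing another handler without touching the sensor-side code.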

Overall, event-driven architecture better reflects the dynamic nature of oil and gas operations. It reduces data lag, supports proactive maintenance, and improves coordination across upstream, midstream, and downstream assets.

Unified data storage and operational analytics

To support real-time production monitoring at scale, oil & gas companies need a storage setup that balances high-speed ingestion with long-term data availability.

Field telemetry, sensor readings, system logs, and anomaly alerts are all event-based. They arrive in huge volumes and often irregularly.

This calls for a tiered data storage architecture with:

- Hot storage for fresh, high-frequency data needed for real-time dashboards and alerting

- Warm storage for operational context over weeks or months for root cause analysis

- Cold storage for historical trend analysis, compliance audits, and ML model training
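A tiering policy like this usually boils down to a routing rule based on data age. The sketch below assumes cutoffs of 7 days for hot and 180 days for warm. Those numbers are placeholders, since the right boundaries depend on your query patterns and storage pricing.

```python
from datetime import timedelta

# Hypothetical retention boundaries; tune them to your own access patterns.
HOT_MAX_AGE = timedelta(days=7)
WARM_MAX_AGE = timedelta(days=180)


def storage_tier(age: timedelta) -> str:
    """Pick a storage tier for a record based on how old it is."""
    if age <= HOT_MAX_AGE:
        return "hot"
    if age <= WARM_MAX_AGE:
        return "warm"
    return "cold"


for days in (1, 30, 400):
    print(f"{days:>3} days old -> {storage_tier(timedelta(days=days))}")
```

In practice, the same rule is often expressed declaratively as a lifecycle policy in the storage service rather than in application code.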

In practice, the setup for a real-time pipeline monitoring system could look like this:

- Real-time API data ingestion with Apache Kafka or Amazon Kinesis

- Long-term object storage in Amazon S3 or Azure Data Lake

- High concurrency data query layer with Snowflake or ClickHouse

- Time-series databases like InfluxDB or Amazon Timestream for more granular analysis

Effectively, such a setup brings structured (e.g., SCADA metrics), semi-structured (e.g., JSON telemetry), and unstructured (e.g., logs, images) data into a single, accessible architecture, instead of scattering it across point systems and departments.

This allows all operational data — regardless of format or size — to be stored, queried, and visualized from one place, without ETL bottlenecks, costly duplication, or format conversions.

Data unification is essential for sharper operational analytics. If maintenance logs sit in one system, sensor data in another, and AI models in yet another, you can’t run reliable predictive analytics in oil and gas. Unified storage eliminates that fragmentation. It ensures that root-cause analysis, optimization models, and compliance dashboards are all drawing from the same source of truth.
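Here is a small illustration of why co-located data matters: once sensor readings and maintenance logs live in the same queryable store, correlating them is a single query. The example uses an in-memory SQLite database purely as a stand-in for a unified storage layer. The table names, columns, and sample rows are invented for the sketch.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE sensor_readings (asset TEXT, ts TEXT, vibration_mm_s REAL);
CREATE TABLE maintenance_logs (asset TEXT, ts TEXT, note TEXT);
""")
db.executemany("INSERT INTO sensor_readings VALUES (?, ?, ?)", [
    ("pump-12", "2024-03-01T10:00", 3.1),
    ("pump-12", "2024-03-02T10:00", 8.4),
    ("pump-07", "2024-03-02T10:00", 2.9),
])
db.executemany("INSERT INTO maintenance_logs VALUES (?, ?, ?)", [
    ("pump-12", "2024-02-20T08:00", "bearing replaced"),
])

# Peak vibration per asset, side by side with any maintenance note for that asset.
rows = db.execute("""
    SELECT r.asset, MAX(r.vibration_mm_s) AS peak, m.note
    FROM sensor_readings AS r
    LEFT JOIN maintenance_logs AS m ON m.asset = r.asset
    GROUP BY r.asset, m.note
    ORDER BY r.asset
""").fetchall()

for asset, peak, note in rows:
    print(asset, peak, note)
```

With the two datasets in separate systems, the same question would require an export, a manual match on asset IDs, and a reconciliation step, which is exactly the kind of friction unified storage removes.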

Modern analytics platforms — like Microsoft Fabric, Tableau, Power BI, or Grafana — can tap directly into unified storage layers to deliver live insights across the organization. Combined with predictive models and alert systems, this turns your operational data from something you report on into something you act on continuously.

Challenges of deploying IoT solutions for the oil and gas industry

When it comes to industrial IoT solutions, leaders’ ambitions don’t always match the pace of execution, primarily because off-the-shelf platforms don’t address the complex, on-the-ground realities of oilfield operations.

Common limitations include:

- Poor compatibility with hybrid IT/OT environments. Most commercial IoT solutions assume clean, cloud-enabled setups, not the reality of aging field systems alongside modern analytics tools.

- Lack of support for legacy field protocols. Protocols like Modbus, HART, and vendor-specific SCADA interfaces often require custom connectors or costly middleware.

- Rigid deployment architectures. Many industrial IoT systems are designed for isolated use cases and can’t adapt to the cross-functional needs of upstream, midstream, and downstream operations.

As a result, IoT-related oil and gas digital transformation projects stall due to mounting deployment costs, crippling complexity, and meager ROI.

Solution: Custom IoT engineering services

The complexity of oil and gas operations calls for custom IoT solutions. Instead of trying to force generic tools onto complex infrastructure, custom solutions are designed around how your operations actually work, from the edge to the control room.

Specialist IoT development teams bring deep domain expertise to integrate with existing SCADA systems, often without disrupting day-to-day operations. They design and deploy connectors that speak the language of legacy protocols, enabling real-time data capture from equipment that’s been in the field for decades.
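To give a flavor of what a legacy-protocol connector has to handle: Modbus registers are 16 bits wide, so a 32-bit floating-point measurement is typically spread across two registers, and the word order varies between device vendors. The helper below is a hedged sketch of that decoding step only. The function name and the `word_order` parameter are illustrative, and a real connector would also deal with the transport, addressing, and error handling.

```python
import struct
from typing import Sequence


def decode_float32(registers: Sequence[int], word_order: str = "big") -> float:
    """Combine two 16-bit register values into an IEEE 754 32-bit float.

    word_order="big"    -> first register holds the high word
    word_order="little" -> first register holds the low word (word-swapped devices)
    """
    if len(registers) != 2:
        raise ValueError("expected exactly two 16-bit registers")
    if word_order not in ("big", "little"):
        raise ValueError("word_order must be 'big' or 'little'")
    high, low = registers if word_order == "big" else reversed(registers)
    # Pack the two words as big-endian unsigned shorts, then reinterpret as a float.
    return struct.unpack(">f", struct.pack(">HH", high, low))[0]


print(decode_float32([0x41C8, 0x0000]))                       # 25.0
print(decode_float32([0x0000, 0x41C8], word_order="little"))  # 25.0
```

Getting the word order wrong does not raise an error. It silently produces a plausible-looking but wrong number, which is one reason connectors for older equipment need per-device configuration and testing.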

Beyond connectivity, custom engineering enables unified data storage — a critical step for achieving cross-asset production monitoring. With all telemetry centralized and normalized, operators can track upstream, midstream, and downstream performance in one place, rather than jumping between interfaces.

Finally, you can also embed AI-driven alerting and optimization logic into your architecture. With context-aware alarms and real-time production tuning, your data continuously drives efficiency, safety, and value.
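"Context-aware" here means the alarm decision depends on more than the raw value. The sketch below is a simple rule-based stand-in, not an AI model: the pressure limit changes with the equipment’s operating mode, and alarms are suppressed during planned maintenance. The modes, limits, and class names are all assumptions for illustration, and a trained model could replace the lookup table.

```python
from dataclasses import dataclass

# Hypothetical per-mode pressure limits, in bar.
LIMITS_BAR = {"running": 16.0, "startup": 20.0}


@dataclass(frozen=True)
class Reading:
    asset: str
    pressure_bar: float
    mode: str  # "running", "startup", or "maintenance"


def should_alarm(reading: Reading) -> bool:
    """Decide whether to raise an alarm, taking the operating mode into account."""
    if reading.mode == "maintenance":
        return False  # equipment is deliberately offline: suppress nuisance alarms
    # Unknown modes fall back to the strictest configured limit.
    limit = LIMITS_BAR.get(reading.mode, min(LIMITS_BAR.values()))
    return reading.pressure_bar > limit


print(should_alarm(Reading("pump-12", 18.0, "running")))      # True
print(should_alarm(Reading("pump-12", 18.0, "startup")))      # False
print(should_alarm(Reading("pump-12", 25.0, "maintenance")))  # False
```

The same 18 bar reading is an alarm in one context and normal in another, which is what cuts down on alarm fatigue in the control room.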

In a sector where downtime and inefficiency cost millions, real-time production monitoring powered by modern IoT architecture is foundational. At Xenoss, we help oil & gas enterprises unify their operations, modernize their infrastructure, and unlock next-level productivity.