The vector database market is incredibly saturated. Over twenty dedicated vector management systems compete for attention, while traditional databases rapidly add vector support.

With more than $200 million in VC funding raised since 2023, it’s hard to compare these platforms objectively and see which ones deliver real value beyond the hype.

This guide offers a comprehensive framework for selecting a vector database. We examine the market landscape, define selection criteria, and compare three popular players (Weaviate, Qdrant, and Pinecone) side by side, before looking at the long-term future of vector databases beyond the hype.

The rise of vector databases

The surge of generative AI and RAG applications created the perfect moment for vector databases. Enterprise organizations needed tools to connect unstructured internal data, such as corporate documentation, knowledge bases, and customer records, to LLMs.

Making that connection requires transforming raw data into numerical representations called embeddings, which are stored as high-dimensional vectors that vector databases can efficiently manage and query.

Vector search evolved from niche infrastructure into a competitive battleground. New entrants like Qdrant emerged while traditional databases rushed to add vector capabilities.

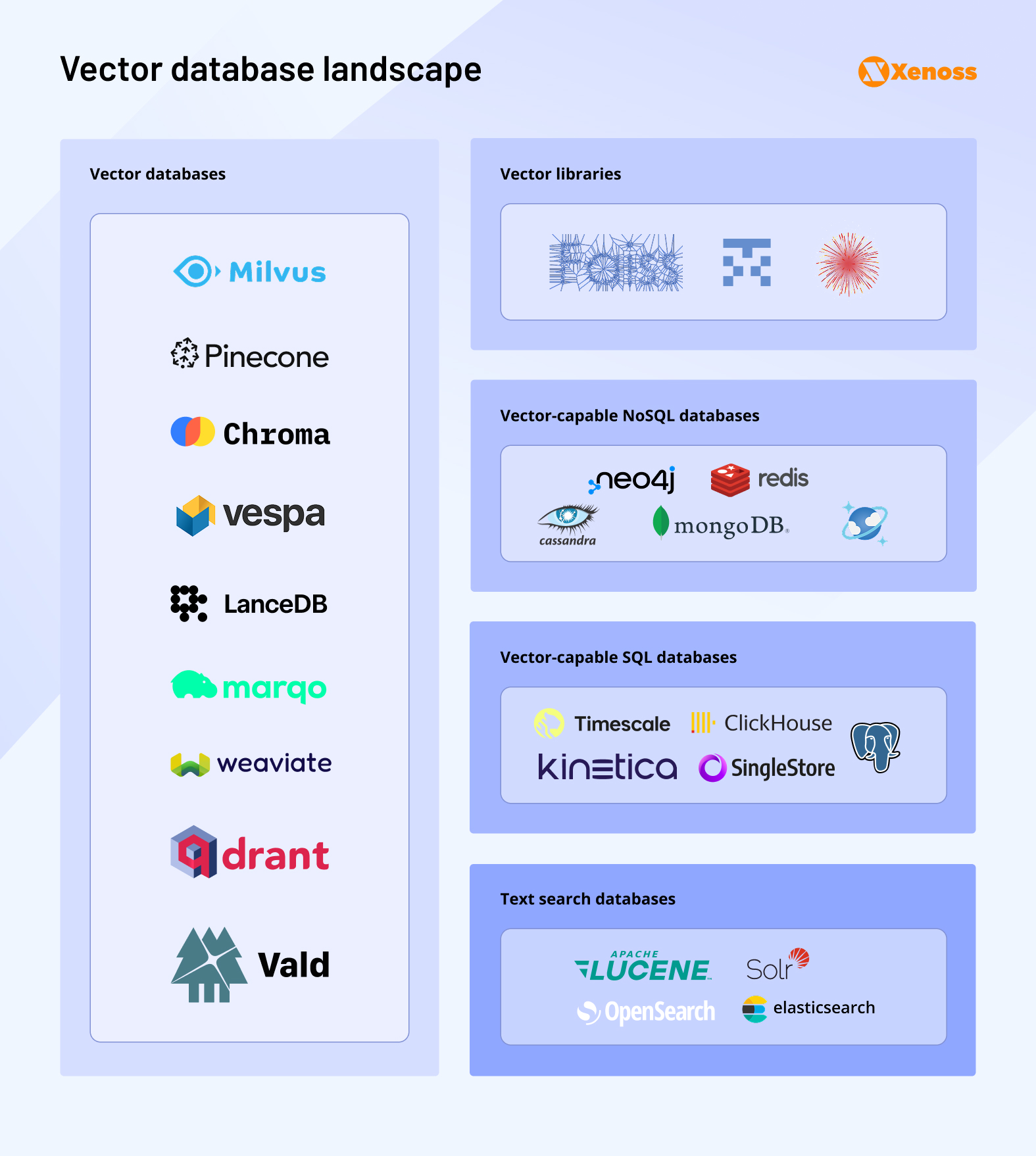

In 2025, the vector database market breaks down roughly into the following categories.

Dedicated vector databases

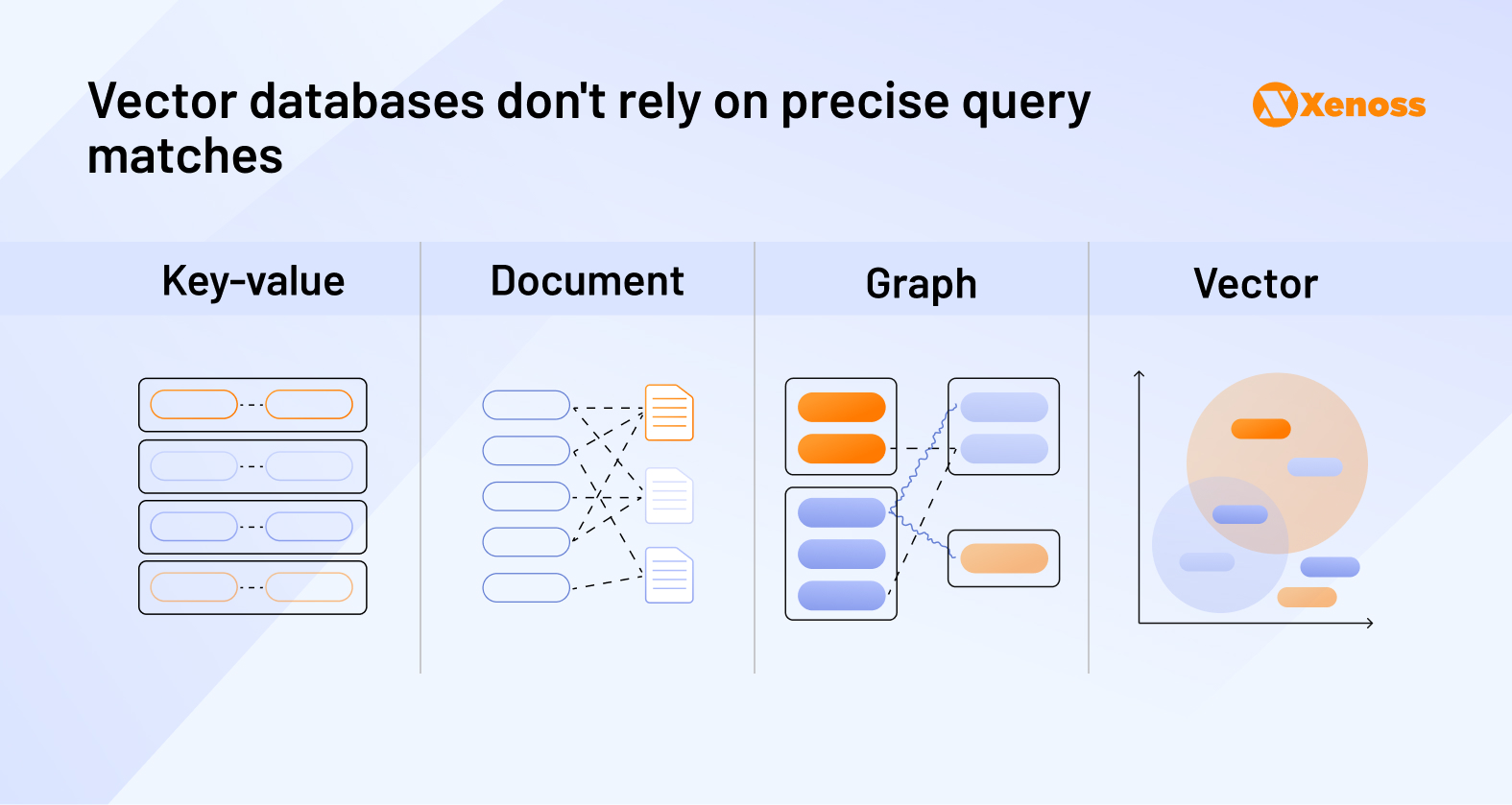

Vector databases enable machine learning teams to search and retrieve data based on similarity between stored items rather than exact matches. Unlike traditional databases that rely on predefined criteria, vector databases group embeddings by semantic and contextual connections.

This means they can find similar content even when it doesn’t share the exact wording or fit precise user-defined criteria.
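To make the idea concrete, here is a minimal sketch of exact (brute-force) similarity search using cosine similarity. The three-dimensional vectors and document names are hypothetical stand-ins for real embeddings, which typically have hundreds of dimensions. Production vector databases replace the full scan with an approximate index.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], items: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact nearest-neighbour search: score every item, keep the best k."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in items.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "embeddings"; real models emit hundreds of dimensions.
docs = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.8, 0.2, 0.1],
    "cast-iron pan": [0.0, 0.1, 0.9],
}
query = [0.85, 0.15, 0.05]  # pretend this embeds the phrase "jogging footwear"

result = top_k(query, docs)
print(result)
```

The query shares no words with "running shoes" or "trail sneakers", yet both outrank the pan because their vectors point in nearly the same direction.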

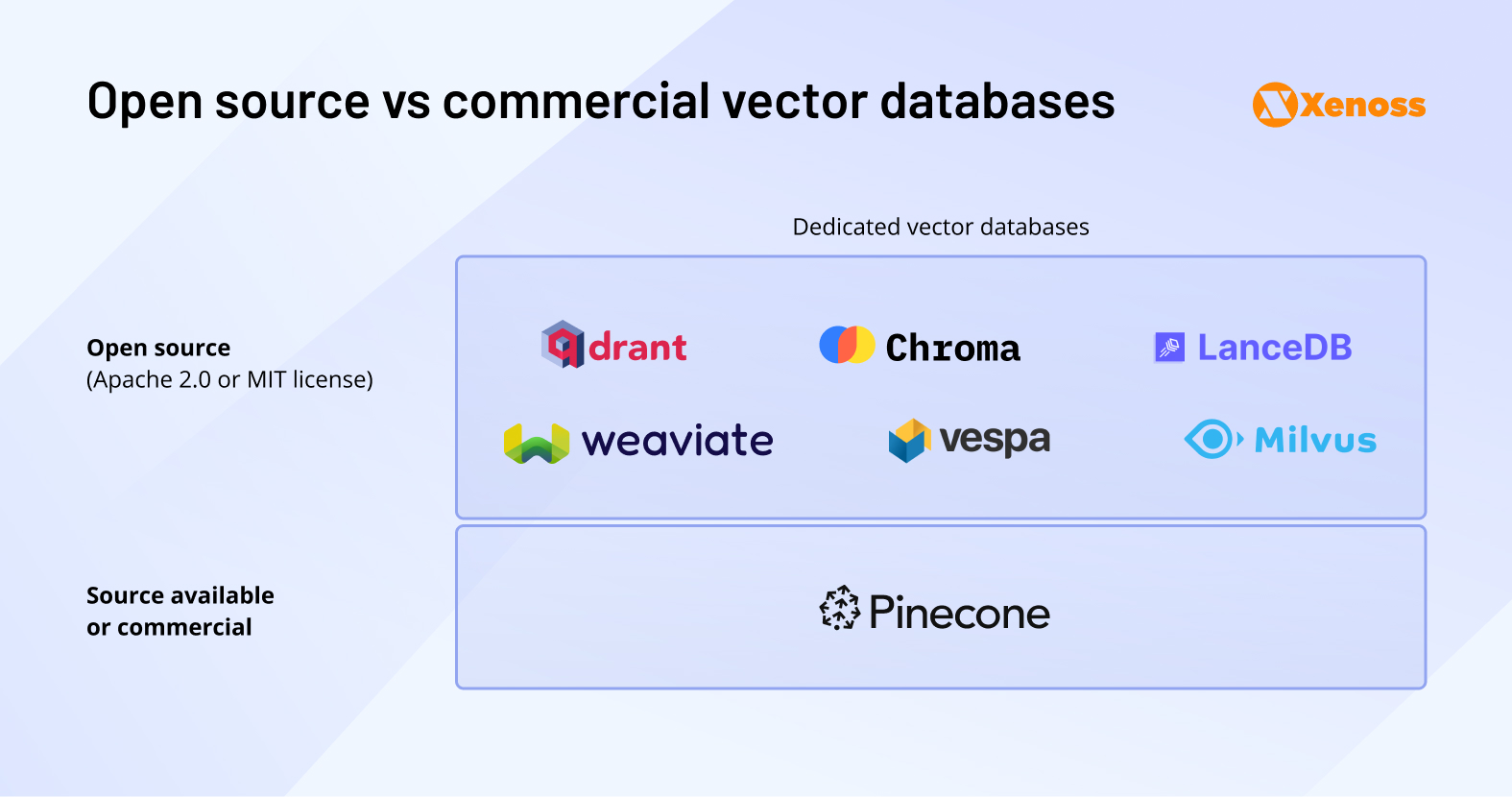

Dedicated vector databases can be further clustered into two buckets: open-source platforms like Qdrant, Weaviate, and Chroma, and commercially licensed solutions like Pinecone.

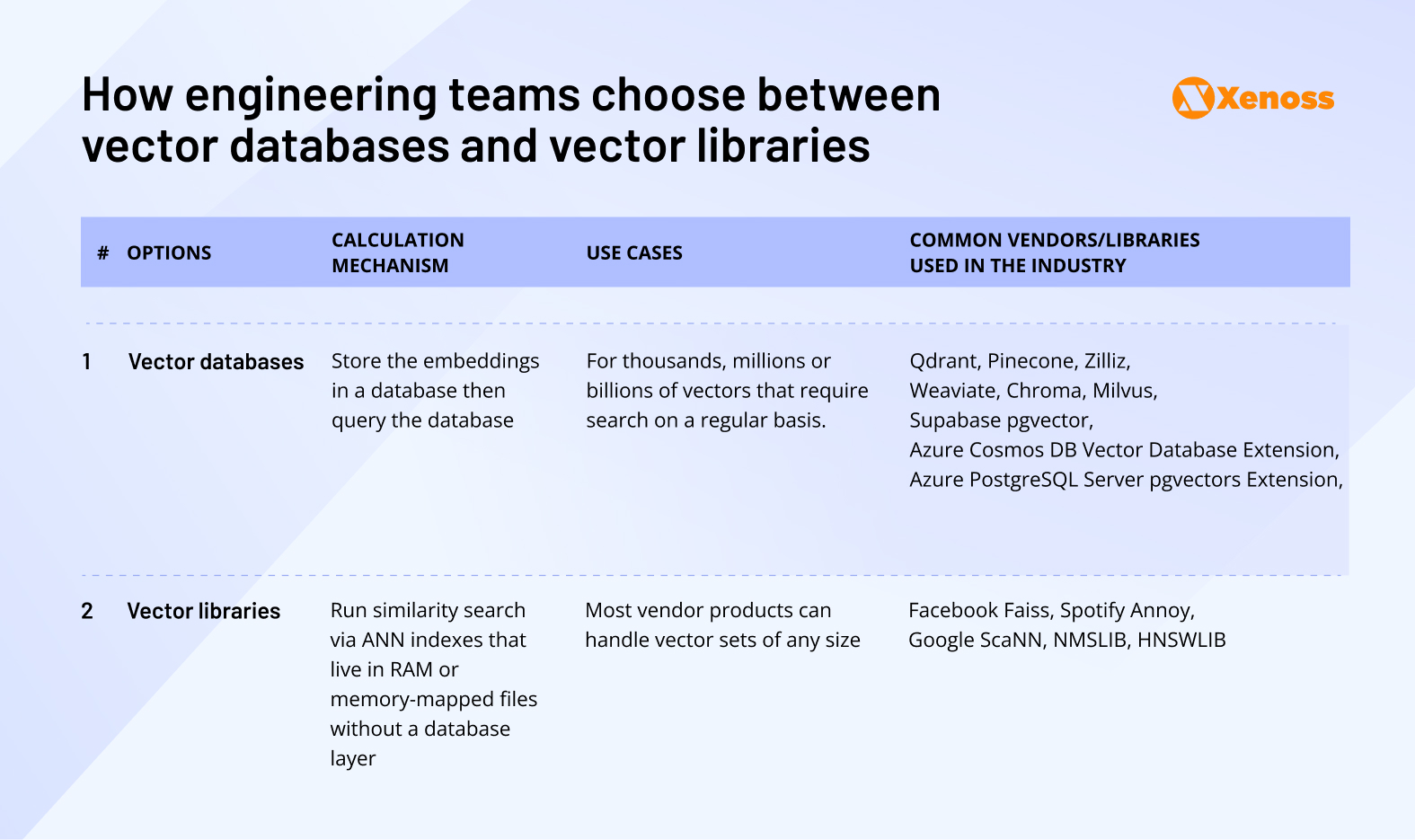

Vector libraries vs. dedicated databases

Vector libraries like Facebook’s FAISS (35.9k GitHub stars), Google’s ScaNN (35.9k stars; ScaNN ships from the google-research monorepo, so that count is shared with many other projects), and Spotify’s ANNOY (14k stars) are widely adopted for specific use cases.

While vector databases provide comprehensive vector management, libraries focus primarily on similarity search using approximate nearest neighbor (ANN) algorithms.

They stand out from the rest of the market for their ease of setup and integration, but they have limitations that hinder their adoption for enterprise-grade use cases.

Limited mutability. In most vector-search libraries (e.g., Annoy or ScaNN), an index becomes effectively read-only after it is built. Adding or deleting vectors requires rebuilding the index from scratch. Some libraries (e.g., Faiss) do offer add/remove operations, with some trade-offs.

Real-time search, but not real-time updates. These libraries return millisecond-level results once an index exists, yet they lack streaming ingestion; fresh data must be batched and the index rebuilt, which complicates maintenance at million- or billion-scale.
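The build-then-query pattern can be illustrated with a toy index. Faiss, ScaNN, and ANNOY each use their own, more elaborate structures (ANNOY, for example, builds random-projection trees), so this sketch swaps in random-hyperplane locality-sensitive hashing (LSH), one of the simplest ANN techniques. The `RandomHyperplaneLSH` class and the `rebuild_with` helper are hypothetical: the index buckets vectors once at construction, scores only the candidates in the query’s bucket (which is what makes it approximate), and has no insert method, so new data means a full rebuild.

```python
import random


class RandomHyperplaneLSH:
    """Hypothetical toy ANN index for illustration.

    Vectors are bucketed once, at build time, by which side of each random
    hyperplane they fall on. There is deliberately no insert or delete method.
    """

    def __init__(self, vectors: dict[str, list[float]], num_planes: int = 8, seed: int = 0):
        dim = len(next(iter(vectors.values())))
        rng = random.Random(seed)
        self.seed = seed
        # Each hyperplane is defined by a random Gaussian normal vector.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
        self.vectors = dict(vectors)
        self.buckets: dict[tuple[bool, ...], list[str]] = {}
        for item_id, vec in self.vectors.items():
            self.buckets.setdefault(self._signature(vec), []).append(item_id)

    def _signature(self, vec: list[float]) -> tuple[bool, ...]:
        # One bit per hyperplane: is the vector on the positive side?
        return tuple(sum(p * v for p, v in zip(plane, vec)) >= 0 for plane in self.planes)

    def query(self, vec: list[float], k: int = 3) -> list[str]:
        # Only vectors sharing the query's bucket are scored, so true neighbours
        # in other buckets can be missed: this is the "approximate" part.
        candidates = self.buckets.get(self._signature(vec), [])

        def squared_distance(item_id: str) -> float:
            return sum((a - b) ** 2 for a, b in zip(self.vectors[item_id], vec))

        return sorted(candidates, key=squared_distance)[:k]


def rebuild_with(index: RandomHyperplaneLSH, new_vectors: dict[str, list[float]]) -> RandomHyperplaneLSH:
    """Hypothetical helper: adding data means building a brand-new index."""
    merged = {**index.vectors, **new_vectors}
    return RandomHyperplaneLSH(merged, num_planes=len(index.planes), seed=index.seed)


rng = random.Random(42)
data = {f"doc-{i}": [rng.uniform(-1, 1) for _ in range(16)] for i in range(1000)}
index = RandomHyperplaneLSH(data)
print(index.query(data["doc-7"]))  # a stored vector always lands in its own bucket

index = rebuild_with(index, {"doc-new": [0.5] * 16})
print(index.query([0.5] * 16))
```

Rebuilding is instant for 1,000 toy vectors, but at millions or billions of vectors it becomes exactly the maintenance burden described above.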

Here is the recap of key differences between vector databases and libraries.

Vector-capable traditional databases

Seeing the success of dedicated vector databases (Pinecone closed a $100 million Series B round in 2023, and Qdrant raised a $28 million Series A in 2024), traditional database vendors jumped on the bandwagon and added vectors to their supported data types.

Vector-capable databases are now emerging both in the SQL and NoSQL infrastructure layers.

- NoSQL databases: MongoDB, Cassandra, Redis, Neo4j

- SQL databases: Timescale, SingleStore, Kinetica, ClickHouse

While vendors promote “one-size-fits-all” approaches, the vector search capabilities of these systems remain limited in ranking, relevance tuning, and text matching.

Jo Kristian Bergum, Chief Scientist at Vespa.AI, pointed out in a blog post, “The rise and fall of the vector database infrastructure category”:

“Just as nobody who cares about search quality would use a regular database to power their e-commerce search, adding vector capabilities doesn’t suddenly transform an SQL database into a complete retrieval engine.”

That’s why many enterprise engineering teams still choose specialized vector databases over traditional solutions augmented with vector capabilities.

Text-based databases

Vector search and traditional text search are rapidly converging. Vector databases like Weaviate now position themselves as “AI-native databases” with full search capabilities.

Meanwhile, Elasticsearch, a long-time leader in the search engine market, incorporated vector search capabilities built on top of Apache Lucene in 2023.

Now, the platform is marketed as a “search engine with a fully integrated vector database.”

Which type of vector search tool is the best fit for enterprise-grade applications?

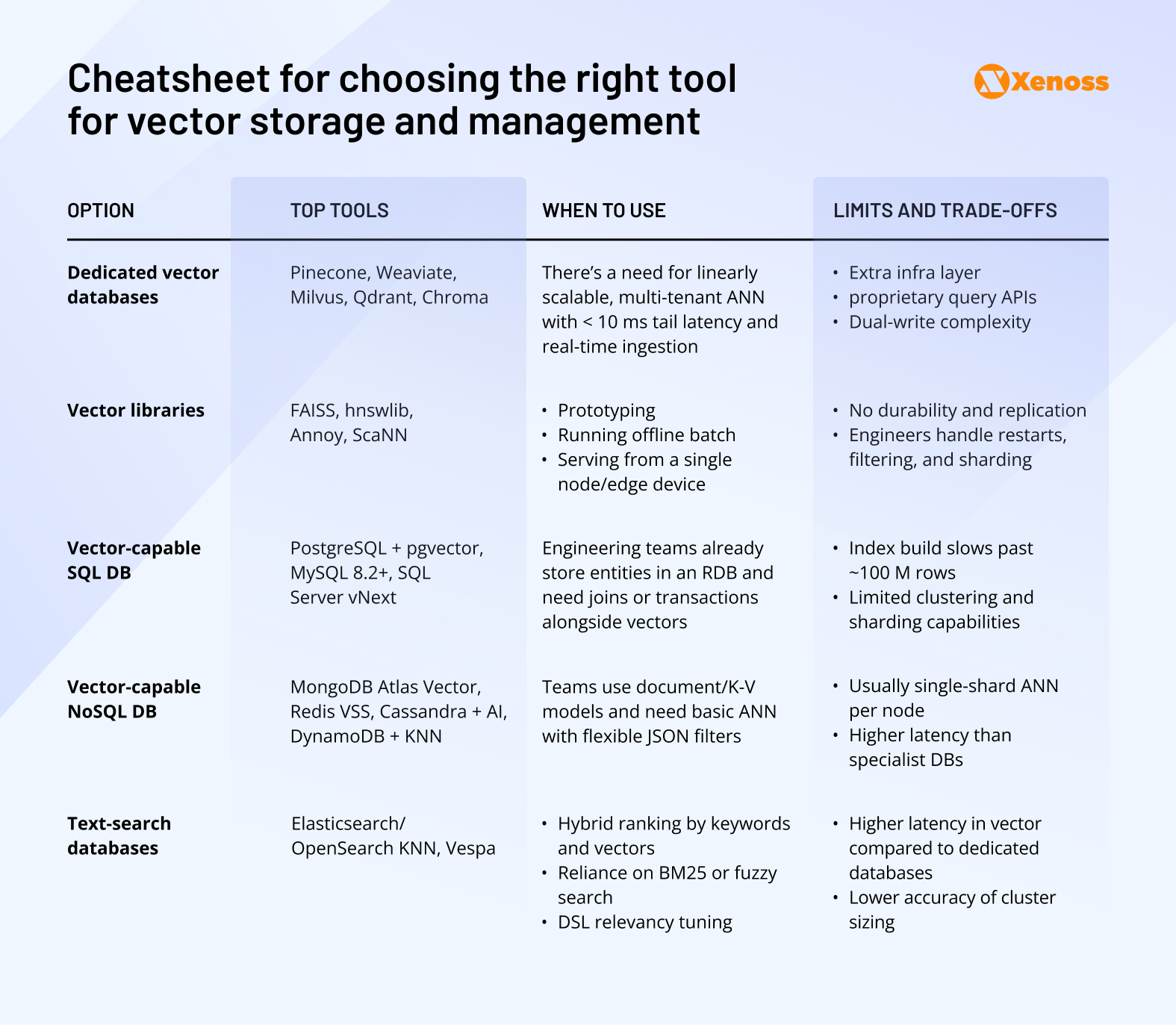

Before comparing individual vendors, engineers need to choose the right category of tools for their vector search use case.

Here is a short recap of when engineering teams prefer using tools in each of the categories listed above.

Key consideration: Vector integrations in traditional databases and search engines remain relatively new. While these will likely mature over the next 3-5 years, dedicated vector databases are currently the safest choice for enterprise applications.

Vector-first database market leaders

There are dozens of vector databases on the market. To simplify the selection process, we are zooming in on three rapidly growing vector-only DBs: Pinecone, Qdrant, and Weaviate.

Pinecone

Pinecone is a high-performance, fully managed vector database that scales to tens of billions of embeddings with sub-10 ms latency.

It is compliant with SOC 2, HIPAA, ISO 27001, and GDPR and is a popular choice among enterprise companies. Pinecone partnered with both Amazon Web Services and Microsoft Azure and is used by rapidly scaling companies like Notion.

Qdrant

Qdrant offers high-performance, open-source vector search with single-digit millisecond latency. It is available as a managed cloud, hybrid, or private deployment, with enterprise features such as SSO, RBAC, TLS, Prometheus/Grafana integration, SOC 2 compliance, and HIPAA readiness.

Notable users: Tripadvisor, HubSpot, Deutsche Telekom, Bayer, and Dailymotion.

Weaviate

Weaviate combines a cloud-native architecture with an optimized HNSW engine for sub-50 ms ANN query responses. Like Pinecone and Qdrant, it supports multi-tenancy and enterprise compliance requirements.

Notable users: Morningstar, a global financial services and investment research firm, used Weaviate to power internal document search, and Neople, one of the leading game publishers in South Korea, built an agentic customer service platform on top of the database.

Criteria for choosing a vector database

Each of these databases has been battle-tested at enterprise scale, so choosing between them is not straightforward.

To break the tie, let’s compare Pinecone, Qdrant, and Weaviate side by side across the criteria that are critical for a successful deployment.

Key features comparison

Let’s examine how the three leading vector DBs compare on hosting, cost, performance, and ease of use (the quality of their documentation and the breadth of their API ecosystems).

Hosting models:

- Pinecone: Fully managed

- Qdrant & Weaviate: Open source, with both self-hosted and managed cloud options

Pricing:

- Pinecone: Free Starter plan → $50/month Standard → $500/month Enterprise (full compliance, private networking, encryption keys)

- Qdrant: Free 1GB cluster → $0.014/hour hybrid cloud → Custom private cloud pricing

- Weaviate: $25/month Serverless → $2.64/AI unit Enterprise → Custom private cloud
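Because the Qdrant hybrid cloud rate above is quoted per hour while the other tiers are quoted per month, a quick conversion helps put them side by side. This sketch assumes one billable unit running around the clock for an average 730-hour month; the listing does not say what the hourly unit covers, so treat the result as a rough order of magnitude rather than a quote.

```python
# Average month: 24 hours * 365 days / 12 months = 730 hours (assumption).
HOURS_PER_MONTH = 24 * 365 / 12

# Hourly Qdrant hybrid cloud rate as listed above (USD/hour).
qdrant_hybrid_hourly = 0.014

# Monthly cost if one billable unit runs continuously.
qdrant_hybrid_monthly = round(qdrant_hybrid_hourly * HOURS_PER_MONTH, 2)
print(f"${qdrant_hybrid_monthly}/month")
```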

Performance (queries per second; treat these figures as indicative, since QPS varies with dataset, recall target, and hardware):

- Weaviate: 791 QPS

- Qdrant: 326 QPS

- Pinecone: 150 QPS (p2 pods)

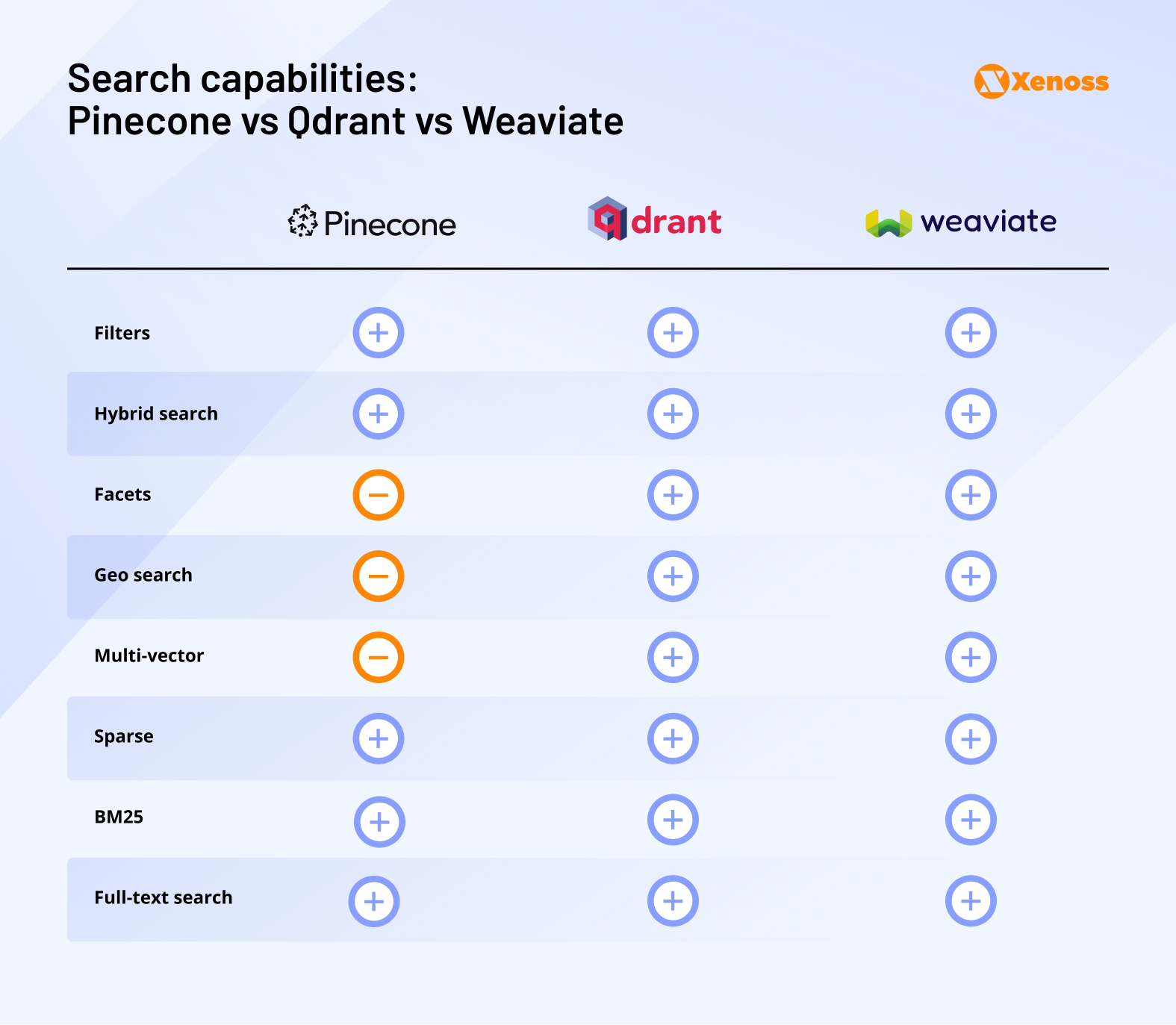

Search capabilities

Essential vector search features to evaluate across Pinecone, Weaviate, and Qdrant:

Core search features:

- Filters narrow vector-search results according to metadata conditions (e.g., brand=“Nike” AND price < 100). Vector DBs use different filtering strategies. Pre-filtered search applies filters before running the search. Many engineers advise against the naive form of this approach, because excluding non-matching nodes can break the HNSW graph into disconnected components. Post-filtered search applies the filter to the results after the vector search has run. If the filter is restrictive, few or none of the top-k hits may match, so the search can return fewer results than requested, or none at all. Custom filtering, used by Weaviate and Qdrant, lets engineers fine-tune their filter settings to avoid both missing relevant results and fragmenting the HNSW graph.

- Hybrid search blends vector similarity scores with lexical or rule-based scores in a single ranking. This feature gives product, legal, discovery, and support teams a list of results that satisfy both “fuzzy” and exact-match relevance requirements.

- Facets return count buckets (color, category, etc.) for drill-down navigation.

- Geo search restricts or orders hits by distance from a latitude/longitude point or within a region. Enterprise teams can use this feature to surface the “nearest store/asset/technician” within the same vector query and to meet location-based requirements such as SLAs or radius-restricted content.
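The difference between pre- and post-filtering can be sketched with a brute-force search over a tiny, hypothetical product catalog, reusing the brand=“Nike” AND price < 100 filter from above. Because this sketch scans every item, pre-filtering here is exact; it does not model an HNSW graph, where skipping non-matching nodes during traversal is what causes the connectivity problems described above.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    brand: str
    price: float
    vector: list[float]  # hypothetical 2-D embedding


def similarity(query: list[float], item: Product) -> float:
    return sum(q * v for q, v in zip(query, item.vector))


def post_filter_search(query, items, predicate, k):
    # 1) Vector search first (top-k by similarity), 2) then drop non-matching hits.
    hits = sorted(items, key=lambda it: similarity(query, it), reverse=True)[:k]
    return [it.name for it in hits if predicate(it)]


def pre_filter_search(query, items, predicate, k):
    # 1) Apply the metadata filter first, 2) then search only the survivors.
    allowed = [it for it in items if predicate(it)]
    hits = sorted(allowed, key=lambda it: similarity(query, it), reverse=True)[:k]
    return [it.name for it in hits]


def nike_under_100(item: Product) -> bool:
    return item.brand == "Nike" and item.price < 100


catalog = [
    Product("x-racer", "BrandX", 180.0, [0.95, 0.05]),
    Product("x-trainer", "BrandX", 140.0, [0.90, 0.10]),
    Product("nike-a", "Nike", 70.0, [0.60, 0.40]),
    Product("nike-b", "Nike", 65.0, [0.55, 0.45]),
]
query = [1.0, 0.0]

print(post_filter_search(query, catalog, nike_under_100, k=2))  # []
print(pre_filter_search(query, catalog, nike_under_100, k=2))   # ['nike-a', 'nike-b']
```

Post-filtering returns nothing because the two most similar products are not Nike shoes under $100, even though two matching products exist.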

Advanced capabilities:

- Multi-vector support stores or queries several embeddings per item or query to capture different semantic aspects. If a vector database supports multi-vector search, engineering teams can store multilingual documents, multimodal data, or complex queries (title + body + tags) without duplicating rows.

- Sparse vector support handles high-dimensional vectors in which most weights are zero (e.g., SPLADE), improving recall on jargon-heavy content like technical documentation or medical records.

- BM25 provides classic TF-IDF-style relevance scoring for keyword matches.

- Full-text search tokenizes and indexes raw text, allowing engineering teams to run phrase, wildcard, or boolean queries.
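BM25 scoring and hybrid search can be combined in a few lines. The sketch below scores documents with Okapi BM25 (using the non-negative IDF variant found in Lucene), min-max normalizes the keyword and vector scores, and blends them with a weight `alpha`. This weighted blend is only one fusion method (reciprocal rank fusion is another common one), the whitespace tokenizer is deliberately naive, and the cosine similarities are hypothetical values standing in for an embedding model.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Okapi BM25 with the non-negative IDF variant used by Lucene."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(tokens) for tokens in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def min_max(xs: list[float]) -> list[float]:
    lo, hi = min(xs), max(xs)
    return [0.0 if hi == lo else (x - lo) / (hi - lo) for x in xs]


def hybrid_scores(keyword: list[float], vector: list[float], alpha: float = 0.5) -> list[float]:
    """alpha=1.0 -> pure vector ranking; alpha=0.0 -> pure keyword ranking."""
    kw, vec = min_max(keyword), min_max(vector)
    return [alpha * v + (1 - alpha) * k for k, v in zip(kw, vec)]


docs = [
    "error code E1234 when syncing invoices",
    "invoices fail to sync after update",
    "how to export reports to pdf",
]
kw = bm25_scores("E1234", docs)
vec = [0.62, 0.80, 0.10]  # hypothetical cosine similarities from an embedding model

print([round(s, 3) for s in hybrid_scores(kw, vec, alpha=0.5)])  # [0.871, 0.5, 0.0]
```

Vector similarity alone ranks the second document first, but the exact error-code match lifts the first document to the top of the hybrid ranking.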

Content type support

All three databases can perform multimodal vector search across text, image, and other non-text embeddings; Weaviate and Qdrant ship ready-made multimodal modules, while Pinecone offers the same capability via its general-purpose vector API once you generate and upload the image or video embeddings yourself.

Choosing an optimal vector DB for enterprise use cases

Colin Harman, CTO at Nesh, emphasizes that venture capital rounds or tutorial popularity shouldn’t drive vector database selection. With no clear “market leader,” the right choice is entirely case-by-case.

Compliance

Pinecone delivers a fully managed, SOC 2 Type II, ISO 27001, GDPR-aligned service and has completed an external HIPAA attestation, making it attractive to regulated pharma, banking, and public-sector teams.

Weaviate Enterprise Cloud gained HIPAA compliance on AWS in 2025 and lists SOC 2 Type II for its managed offering.

Qdrant Cloud is SOC 2 Type II certified and markets HIPAA-readiness for bespoke enterprise deployments, although a formal HIPAA report has not yet been published.

Choosing a managed service lets enterprises offload day-to-day operations, audits, and much of their side of the shared-responsibility burden to the vendor.

Multi-tenant deployment

Pinecone offers project-scoped API keys, per-index RBAC, and logical isolation through namespaces. In BYOC mode (GA 2024), clusters run inside the customer’s own AWS, Azure, or GCP account, giving hard isolation when required.

Qdrant provides first-class multitenancy through named collections/tenants with quota controls, sharding, and tenant-level lifecycle APIs.

Weaviate implements tenant-aware classes with lifecycle endpoints, ACLs, and optional dedicated shards per tenant.

Organizations with global business units should compare each platform’s isolation depth, operational tooling, and total cost before deciding.

Best for integrated RAG workflows: Weaviate & Pinecone

Choosing a vector database that can handle not just retrieval but the whole Retrieval-Augmented Generation (RAG) loop can shave days off prototyping and weeks off hardening a production stack. As of mid-2025, two vendors expose fully managed, server-side RAG pipelines:

Weaviate

Weaviate (as of version 1.30) offers a native generative module. You register an LLM provider (OpenAI, Cohere, Databricks, xAI, etc.) at collection-creation time, and a single API call then performs vector retrieval, forwards the results to the model, and returns the generated answer. The orchestration runs server-side inside Weaviate, which removes the need to stitch together a second microservice. Because Weaviate passes the retrieved objects straight to the model provider, the application skips a round trip, and top-k, temperature, or model choice can be tweaked at runtime without redeploying the app.

Pinecone

Pinecone Assistant (GA January 2025) wraps chunking, embedding, vector search, reranking and answer generation behind one endpoint. It allows users to upload docs, pick a region (US or EU), and stream back grounded answers with citations. Users retain direct access to the underlying index and can run raw vector queries alongside the chat workflow.

What this integration buys you

Unified component stack: The vectorizer, retriever, and generator are configured in one place. Schema migrations, monitoring, and incident response all live in a single dashboard instead of three.

Lower latency and cost: Sending retrieved context from the database straight to the LLM, instead of returning it to the application and re-sending it (database to app, then app to LLM), removes a round trip. Vendors report double-digit-millisecond savings per query and a noticeable drop in egress fees, and even a 30 ms gain can keep interactive chat UIs feeling snappy.

Provider flexibility: Weaviate allows swapping among OpenAI, Cohere, or Databricks via a one-line config change, while Pinecone Assistant exposes an OpenAI-compatible interface and its own model catalog to reduce vendor lock-in.
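For comparison, this is roughly the application-side loop that Weaviate’s generative module and Pinecone Assistant fold into a single server-side call. `search` and `generate` are hypothetical placeholders for a vector-database client and an LLM client; they are not either vendor’s API.

```python
from typing import Callable


def answer_with_rag(
    question: str,
    search: Callable[[str, int], list[str]],
    generate: Callable[[str], str],
    top_k: int = 3,
) -> str:
    """App-side RAG loop: retrieve, build a grounded prompt, generate."""
    # Round trip 1: application -> vector database (retrieval).
    passages = search(question, top_k)
    # Number the passages so the model can cite them.
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    prompt = (
        "Answer using only the numbered sources below and cite them.\n"
        f"{context}\n\nQuestion: {question}"
    )
    # Round trip 2: application -> LLM (generation).
    return generate(prompt)


# Hypothetical stand-ins for real vector-DB and LLM clients.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days.",
    "Support is available 24/7.",
    "Invoices are emailed on the first of each month.",
]


def fake_search(query: str, k: int) -> list[str]:
    return KNOWLEDGE_BASE[:k]  # a real client would run a vector query here


def echo_generate(prompt: str) -> str:
    return prompt  # a real client would call an LLM; echoing exposes the prompt


print(answer_with_rag("How long do refunds take?", fake_search, echo_generate, top_k=2))
```

With an integrated pipeline, the application makes one call and both steps run on the vendor’s side.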

Qdrant

Qdrant’s new Cloud Inference service brings embedding generation into the cluster, unifying that half of the pipeline and cutting a network hop. However, answer-generation still runs in the application layer or an external LLM, so it’s one step short of a fully in-database RAG loop.

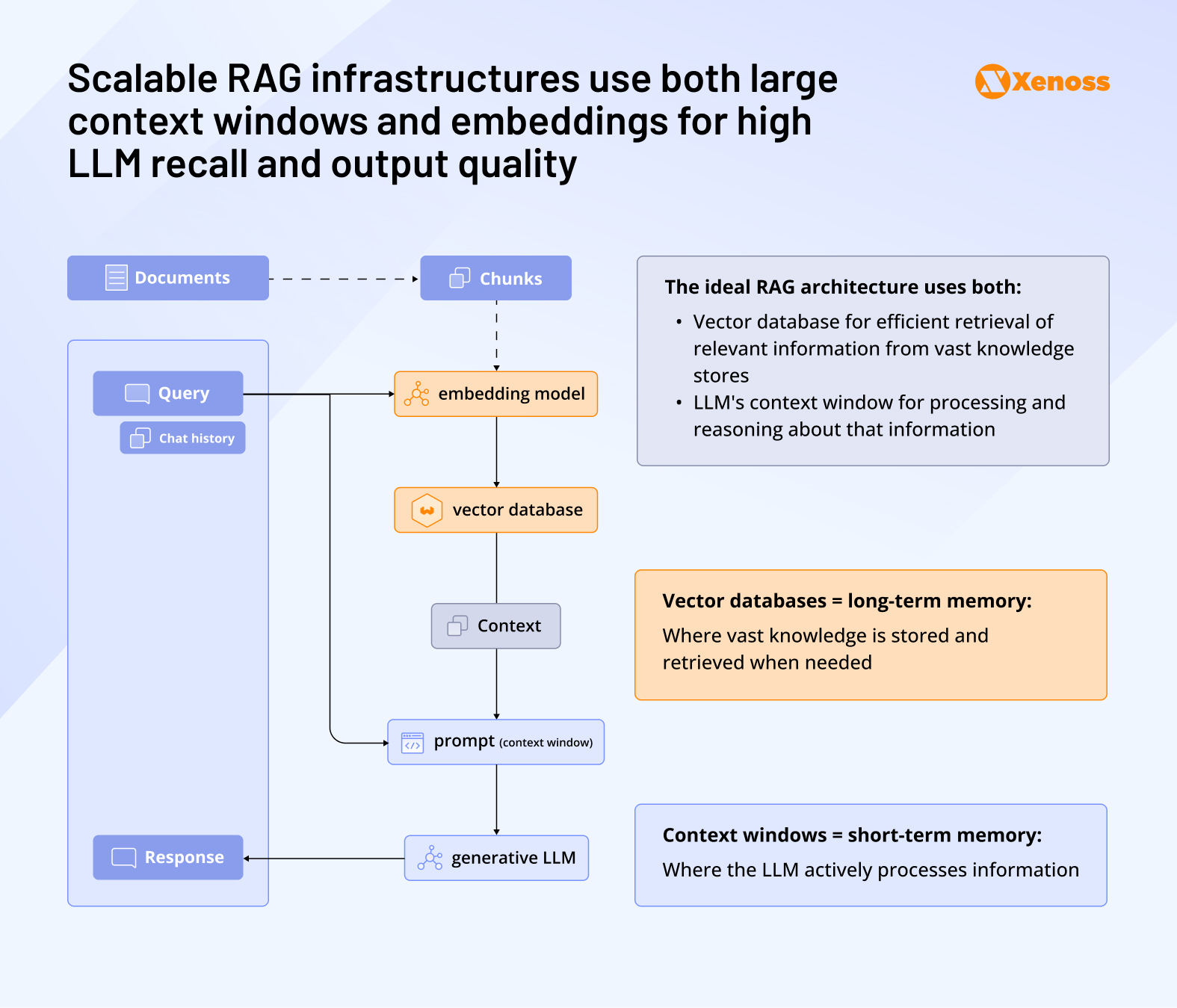

Vector databases are here to stay despite larger context windows

Machine learning engineers have questioned whether expanding LLM context windows will eliminate the need for vector databases, assuming users could share all data directly with GPT, Claude, or Gemini.

However, Victoria Slocum, ML engineer at Weaviate, explains why larger context windows won’t replace vector databases: the concepts serve fundamentally different purposes. Context windows function like human short-term memory—immediately accessible but limited in scope. Vector databases operate more like long-term memory, where retrieval takes time but offers reliable, persistent storage for vast amounts of information.

Even with billion-token context windows, processing massive datasets would remain computationally inefficient and make it harder for models to focus on relevant prompt sections. This computational reality means RAG architectures combining both technologies are likely to remain the optimal approach for enterprise AI applications.
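A back-of-the-envelope calculation shows the scale of the gap. The corpus size, average document length, and top-k below are hypothetical; plug in your own numbers.

```python
# Hypothetical corpus; adjust to your own data.
NUM_DOCS = 1_000_000
TOKENS_PER_DOC = 500
TOP_K = 5

long_context_tokens = NUM_DOCS * TOKENS_PER_DOC  # whole corpus in every prompt
rag_tokens = TOP_K * TOKENS_PER_DOC              # only the retrieved passages

print(f"{long_context_tokens:,} vs {rag_tokens:,} tokens per query")
print(f"{long_context_tokens // rag_tokens:,}x fewer tokens with retrieval")
```

The long-context cost is paid on every query, whereas the vector index is built once and reused across queries.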

Within the limits of modern AI infrastructure, RAG is not going anywhere, and vector databases remain essential components of scalable AI systems. For organizations building enterprise AI solutions, vector databases provide the foundation for efficient data retrieval while large context windows handle immediate processing needs.

Rather than competing technologies, they work together to create more effective and economical AI solutions. This makes choosing a vector database that matches your team’s use case, skillset, and budget a critical priority for CTOs and CIOs planning their AI infrastructure investments.