“We’ve seen dozens of [AI product] demos this year. Maybe one or two are genuinely useful. The rest are wrappers or science projects.” As one CIO put it in the MIT study, many AI vendors still sell smoke and mirrors. Businesses aren’t looking for someone to add AI frosting to an already working product or to stage marathon math contests that show off engineering muscle. They’re looking for partners who roll up their sleeves and fix real business problems.

However, finding a capable AI vendor doesn’t guarantee a project’s success. As a company leader, your role in shaping the process and setting the right success criteria is just as important as the vendor’s technical expertise.

Vendor evaluations often follow a similar flawed pattern: impressive demos, technical feature comparisons, and contract negotiations that overlook the critical elements determining long-term success. Organizations need a systematic approach that links AI investments to tangible business value.

This guide outlines a 7-step framework for evaluating and partnering with AI and data engineering vendors. You’ll learn how to align project objectives, validate technical depth, calculate TCO, and build sustainable partnerships that drive measurable ROI.

Understanding AI vendor management fundamentals

AI software development vendors offer comprehensive AI and machine learning development services, encompassing custom generative AI and conversational AI solutions, computer vision software, predictive modeling systems, deep learning, and neural network solutions.

Experienced AI service providers know how to select the right off-the-shelf models from Anthropic, OpenAI, Mistral, and DeepSeek. They also offer custom and domain-specific model training, development, and inference services.

Besides developing AI solutions, AI vendors help businesses prepare their infrastructure for seamless AI use by:

- ingesting company data from disparate systems

- enabling automated data cleansing

- integrating AI with internal software via custom APIs

- ensuring continuous model improvement with new data

- establishing machine learning operations (MLOps) for seamless model deployment and maintenance

Trusted vendors also monitor AI legislation across countries and domains, including model clauses in the EU AI Act, as well as the data protection requirements that apply to AI software development under GDPR, HIPAA, and PCI DSS. Unlike traditional software procurement, AI project procurement requires organizations to incorporate risk management, data governance, human oversight, transparency, and accountability as core principles from the outset.

The vendor relationship management process should follow a structured, step-by-step path: prepare internally, define business outcomes, research and screen vendors, validate technical depth, test vendors through an RFP and proof of concept, negotiate pricing and contracts, and assess long-term partnership viability.

Step 1. Prepare internally before engaging vendors

Before diving into an AI vendor search, assemble an internal team of AI advocates who will secure financial support for AI development, explain technical nuances, and uphold security and compliance standards.

You can always opt for artificial intelligence consulting services if you lack certain specialists in-house.

| Role | Primary responsibilities | Why it matters |
|---|---|---|
| Executive sponsor (C-suite leader) | Secures funding, ensures alignment with company strategy, and collaborates with the vendor to oversee project direction. | Strong executive support ensures that AI initiatives remain strategic, well-funded, and aligned with business priorities. |
| Technical lead/Data architect | Evaluates architecture and integration feasibility and oversees technical execution. | Ensures the vendor’s solution fits your infrastructure and scalability needs. |
| Business stakeholder/Product owner | Defines KPIs and translates business goals into measurable outcomes. | Provides vendors with clarity on the business value they’re expected to deliver. |
| Procurement representative | Manages vendor selection, contracting, and compliance. | Balances innovation with governance and risk control. |
| Security & compliance specialist | Reviews data handling, access control, and regulatory adherence. | Protects sensitive data and ensures compliance across all systems. |

With support from your internal team, assess your AI maturity level to set realistic expectations for collaboration with an AI vendor.

A thorough data infrastructure audit will give you most of the answers. Examine where your data is stored, who has access to it, how it’s used, your data analytics approaches, core data challenges, data silos, and which tools your data engineers employ.

With a general understanding of your data management practices, you’ll know which data engineering services you’ll need before AI strategy development and can plan your budget efficiently.

Step 2. Define business outcomes

If you jump on the AI bandwagon driven only by model development hype without connecting technical benefits to business objectives, your AI initiatives risk stalling halfway through or even stopping at the ideation phase.

People, organizational structures, and processes account for 70% of all challenges with AI workflows, while technology and algorithms are responsible for only 30% of implementation difficulties.

Map AI and data initiatives to revenue and efficiency metrics

Tools, model selection, architecture patterns, and data pipeline configurations become relevant only after you establish clear objectives. Begin by setting a goal that follows the SMART formula: specific, measurable, achievable, relevant, and time-bound.

Think about a specific business problem to solve with AI, then attach measurable KPIs that will help you monitor the project’s success. Ensure that your AI initiatives are achievable and relevant to your current business needs. And finally, make your goals time-bound to maintain focus, and give your team and the vendor a clear accountability window for delivering measurable results.

Here are some examples of SMART goals:

- Increased revenue: “Increase average order value by 15% through personalized recommendation engines in six to eight months.”

- Improved employee productivity: “Reduce invoice processing time from 5 days to 2 hours via automated reconciliation in the first month post-rollout.”

- Customer retention: “Improve customer satisfaction scores from 7.2 to 8.5 within a year through predictive support routing.”

For instance, an e-commerce company might set an objective to “reduce website load time by 30% through predictive server allocation algorithms”. This specificity helps vendors understand exactly what business problem they’re solving and work toward achieving the 30% improvement target.
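To keep goals like these measurable during the project, you can track each KPI as the share of the gap between its baseline and target that has been closed so far. The sketch below is a minimal illustration, not a prescribed tool: the `SmartKpi` class and its fields are hypothetical, and the "on track" check assumes progress should be roughly linear up to the deadline, which real initiatives rarely follow exactly.

```python
from dataclasses import dataclass


@dataclass
class SmartKpi:
    """A measurable, time-bound KPI target (hypothetical structure for illustration)."""

    name: str
    baseline: float
    target: float
    deadline_months: int

    def progress(self, current: float) -> float:
        """Share of the baseline-to-target gap closed so far, clamped to [0, 1].

        Works for "increase" goals (target > baseline) and
        "decrease" goals (target < baseline) alike.
        """
        gap = self.target - self.baseline
        if gap == 0:
            raise ValueError("target must differ from baseline")
        return max(0.0, min(1.0, (current - self.baseline) / gap))

    def on_track(self, current: float, months_elapsed: int) -> bool:
        """Compare actual progress with a straight-line plan (a simplifying assumption)."""
        expected = min(1.0, months_elapsed / self.deadline_months)
        return self.progress(current) >= expected


# Example from the list above: invoice processing time, 5 days (120 h) -> 2 h.
invoice_time = SmartKpi("Invoice processing time (hours)", baseline=120, target=2, deadline_months=1)
print(invoice_time.progress(61))  # 0.5: half of the 118-hour gap has been closed
```

Expressing every goal as "share of gap closed" lets you report increase and decrease targets on the same 0 to 1 scale in vendor status reviews.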

Pro tip from Xenoss CEO:

Dmitry Sverdlik, a technical expert himself with more than 15 years of experience, also highlights the importance of aligning AI capabilities with business needs:

Document constraints and non-negotiables early

Apart from setting clear, attainable goals, provide your AI and data engineering services partner with well-documented project constraints and non-negotiable requirements, such as:

- Technical requirements: Legacy system integration capabilities, data architecture compatibility.

- Regulatory compliance: GDPR, SOC 2, HIPAA requirements that cannot be compromised.

- Timeline expectations: Proof of concept to production deployment windows.

- Budget boundaries: Total cost of ownership beyond initial licensing fees.

This step proves essential for any software initiative and becomes especially crucial for AI projects. While traditional development follows predictable patterns with identifiable bugs, AI projects operate in a more fluid and uncertain space.

Model behavior isn’t always deterministic. Resolving issues like persistent hallucinations can take longer than expected. That’s why anything that can be clarified or structured upfront should be carefully documented. It brings stability to what is otherwise an inherently experimental process.

For instance, a global insurer implementing AI-driven claims processing could specify that its 15-year-old policy system must remain operational, that all AI services must connect only through existing APIs, and that no mainframe code can be altered due to compliance rules. Constraints like these help the vendor design around reality instead of proposing disruptive upgrades that would delay ROI and deliver little value.

Here’s what Eric Helmer, CTO at Rimini Street, said on the matter:

Companies who have a lot of legacy applications may find themselves on an AI journey they didn’t ask for. You may go through the evolution of these very disruptive upgrades, only to find out the functionality you got will never be of use.

The more clearly you define your constraints, the more effectively your AI partner can focus on solving the right problems without over-engineering the solution.

Step 3. Vendor research and screening

Look beyond vendor websites and polished case studies. Explore independent analyst reports (Gartner, Forrester, IDC), cloud partner directories (AWS, GCP, Azure), trusted websites like Clutch or G2, and open-source communities where experienced engineering teams often share project insights.

When reading reviews, check vendors’ years in operation and project completion rates to gauge maturity. Also confirm that vendors were working on AI and data projects before the ChatGPT boom that began in late 2022. A track record that predates the hype indicates long-established AI and data expertise and the capability to handle projects of varying complexity.

Next, screen mature vendors with proven expertise against these factors:

Financial health. Review the funding history or revenue growth of your potential vendors to ensure they have the financial capacity to provide a modern tech stack and experienced AI engineers.

Delivery model. Understand how the vendor collaborates. Some operate as external consultants who hand off deliverables, while others embed directly into your teams and co-own outcomes.

Reference quality. Ask for contacts from implementations of a similar scale, not just published success stories, so you can speak directly with clients and verify vendor reliability. Reference interview questions can include:

- “How did the vendor handle challenges or scope changes during the project? Were they transparent and solution-oriented?”

- “How responsive were they to issues or optimization requests after deployment?”

- “Would you work with this vendor again, and if not, what would you do differently next time?”

Exit strategy. Understand data portability, contract termination terms, and transition support to secure your business in case you need to halt cooperation.

Red flags include vendors who can’t provide recent customer references, refuse to discuss their financial backing, or have significant gaps in their project history.

Evaluation matrix: A structured approach to comparing options

Use an evaluation matrix to objectively score vendors. Assign weights to key categories, such as technical expertise (40%), business alignment (25%), delivery track record (20%), and partnership model (15%). This structured approach helps create a clear, data-driven vendor shortlist for deeper evaluation.
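The weighting itself is simple arithmetic: each vendor’s category score is multiplied by the category weight, and the results are summed. A minimal sketch, assuming scores on a 1-5 scale (an assumption; any consistent scale works) and the example weights above; the vendor names and scores in the usage example are made up.

```python
# Example weights from the text; adjust them per project.
WEIGHTS = {
    "technical_expertise": 0.40,
    "business_alignment": 0.25,
    "delivery_track_record": 0.20,
    "partnership_model": 0.15,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-category scores (e.g., on a 1-5 scale) into one weighted score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[category] * scores[category] for category in weights)


def rank_vendors(vendors: dict[str, dict[str, float]], weights: dict[str, float] = WEIGHTS) -> list[tuple[str, float]]:
    """Return (vendor, score) pairs sorted from best to worst."""
    ranked = [(name, round(weighted_score(s, weights), 2)) for name, s in vendors.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical shortlist with illustrative scores.
shortlist = {
    "Vendor A": {"technical_expertise": 4, "business_alignment": 3, "delivery_track_record": 5, "partnership_model": 4},
    "Vendor B": {"technical_expertise": 5, "business_alignment": 2, "delivery_track_record": 3, "partnership_model": 3},
}
print(rank_vendors(shortlist))  # [('Vendor A', 3.95), ('Vendor B', 3.55)]
```

Note that Vendor B scores higher on technical expertise alone, but the weighted view favors Vendor A; changing the weights, as in the industry examples below, can change the ranking.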

For example, a manufacturing company deploying predictive maintenance across multiple plants might assign greater weight to data infrastructure and integration expertise, as sensor data from legacy SCADA systems must be ingested and analyzed in real time.

A factory automation project using computer vision for quality control may prioritize model accuracy and edge deployment capabilities, as performance directly impacts production throughput.

Meanwhile, a supply chain optimization initiative might emphasize business alignment and delivery track record, ensuring the vendor understands logistics workflows and can deliver measurable ROI within tight operational windows.

Basic screening helps filter out immature candidates lacking AI experience, while the evaluation matrix enables you to sift through potential vendors with even greater scrutiny.

Step 4. Evaluate technical depth over marketing claims

Marketing materials often reflect only surface-level engineering capabilities and may be helpful in the initial shortlisting of potential partners. However, they rarely reveal the deep technical expertise needed for complex AI implementations.

To assess it, you’ll need to interview vendors and ask targeted questions. Here’s a list of typical questions that our engineers answer during initial consultations with clients.

Questions to ask AI vendors to evaluate technical depth

| Area | Key questions to ask | Superficial answers | Strong answers |
|---|---|---|---|
| Data infrastructure | How do you design data pipelines for scalability and fault tolerance? | “We use cloud tools like AWS or GCP for everything.” | Discusses architecture patterns (e.g., Lambda/Kappa), event streaming platforms (Kafka, Pulsar), vector databases (Pinecone, Qdrant, Weaviate), checkpointing, and schema evolution handling. |
| Data quality and governance | How do you ensure data accuracy and lineage across sources? | “We validate data before loading.” | Mentions validation frameworks (e.g., Great Expectations), automated lineage tracking (e.g., OpenMetadata), and observability practices. |
| Model development | What’s your process for feature engineering and model retraining? | “We use standard ML workflows with retraining scripts.” | Explains data drift monitoring, CI/CD for ML, and retraining triggers using MLOps pipelines (e.g., MLflow, Vertex AI, Kubeflow). |
| LLM and GenAI expertise | How do you approach fine-tuning or retrieval-augmented generation (RAG)? | “We fine-tune models depending on the dataset.” | Details token limits, vector database design, chunking strategies, and latency optimization for RAG pipelines. |
| Architecture and integration | How do you integrate new systems with existing legacy or on-prem infrastructure? | “We just use APIs.” | Outlines integration adapters, data contracts, hybrid-cloud approaches, and incremental migration strategies. |
| Performance and cost optimization | How do you balance compute costs with model performance? | “We use autoscaling.” | Describes cost-aware design: model quantization, caching, distributed training, FinOps monitoring, and workload scheduling. |
| Security and compliance | How do you ensure compliance with GDPR/HIPAA in data pipelines? | “We encrypt everything.” | Explains access control, anonymization, audit trails, and secure multi-tenant data handling. |
| Monitoring and maintenance | What does your observability stack look like for data and model performance? | “We monitor logs and metrics.” | Mentions distributed tracing, data drift dashboards, alert thresholds, retraining KPIs, and root-cause analysis workflows. |
| Domain experience | What similar problems have you solved in our industry? | “We’ve done projects in many verticals.” | Cites specific use cases (fraud detection, personalization, predictive maintenance) and quantifiable outcomes. |
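Strong answers in the model development row mention data drift monitoring and retraining triggers. To show what such a trigger can look like, here is a minimal sketch based on the population stability index (PSI), one common drift metric. The equal-width binning, the small floor for empty bins, and the 0.2 threshold (a widely cited rule of thumb) are all assumptions; vendors may reasonably use other metrics and thresholds.

```python
import math


def population_stability_index(reference: list[float], recent: list[float], bins: int = 10) -> float:
    """PSI between a reference sample (e.g., training data) and a recent production sample.

    Bin edges are equal-width over the reference range (a simplification);
    recent values outside that range are clamped into the edge bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share so the logarithm below stays defined.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares, new_shares = shares(reference), shares(recent)
    return sum((n - r) * math.log(n / r) for r, n in zip(ref_shares, new_shares))


PSI_RETRAIN_THRESHOLD = 0.2  # assumed rule of thumb, not a universal standard


def needs_retraining(reference: list[float], recent: list[float]) -> bool:
    """Flag a feature for retraining review when its distribution has drifted."""
    return population_stability_index(reference, recent) > PSI_RETRAIN_THRESHOLD
```

In a real MLOps pipeline, a check like this would typically run per feature on a schedule, and a breach would open a review or start a retraining job rather than retrain blindly.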

Verify AI and data infrastructure expertise

The success of your AI project depends on the vendor’s data engineering expertise. 79% of organizations cite a lack of expertise in managing unstructured data as the biggest challenge to getting value from AI.

Many enterprises still rely on legacy software and data warehousing solutions, whereas modern AI workflows often require access to unstructured data via vector databases or a data lakehouse.

Ask potential vendors about their hands-on experience with:

- Overall AI infrastructure optimization strategies

- Batch processing for periodic high-volume data ingestion using AWS Batch or Apache Airflow

- Real-time data streaming using Apache Kafka, Apache Flink, or Apache Spark

- Multi-cloud engineering across AWS, Azure, and GCP for hosting AI solutions, including self-hosted LLMs managed with orchestration frameworks

- Retrieval-augmented generation (RAG) techniques to build enterprise knowledge bases
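For the RAG item above, and the chunking strategies listed among the strong answers in the interview table, it helps to know what the simplest baseline looks like so you can judge how far a vendor goes beyond it. A minimal sketch of fixed-size chunking with overlap, assuming documents are already split into tokens; here whitespace splitting stands in for a real tokenizer, and the chunk size and overlap values are illustrative, not recommendations.

```python
def chunk_tokens(tokens: list[str], chunk_size: int = 200, overlap: int = 40) -> list[list[str]]:
    """Split a token sequence into fixed-size windows that share `overlap` tokens.

    Overlap reduces the chance that text spanning a boundary is cut off from its context.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the document
    return chunks


# Whitespace splitting is a stand-in for the embedding model's tokenizer.
words = "one two three four five six seven eight nine ten".split()
for chunk in chunk_tokens(words, chunk_size=4, overlap=1):
    print(" ".join(chunk))
# one two three four
# four five six seven
# seven eight nine ten
```

Experienced vendors usually go further, for example by counting tokens with the embedding model’s own tokenizer or splitting on headings and sentences, and should be able to explain why their choice fits your documents.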

The critical consideration is less a vendor’s experience with specific data and AI infrastructure tools than its ability to compose a flexible AI architecture with optimization in mind. The ideal approach doesn’t require an immediate shift to a data lakehouse or heavy investment in on-premises data centers and GPUs.

Instead, your AI ecosystem should extend your current data infrastructure through modular integrations and API-driven interoperability, ensuring scalability without unnecessary complexity.

Assess GenAI and ML integration capabilities

Experienced vendors should be able to manage the full AI/ML lifecycle, from data ingestion and model training to validation, deployment, and inference, within a single, unified environment. Such vendors show expertise in:

- Seamless ingestion of structured and unstructured data, e.g., via integration platform as a service (iPaaS) tools and natural language processing (NLP) engines for unstructured content

- Domain-specific LLM fine-tuning that adapts models to company data while minimizing GPU memory requirements and training costs

- Production-grade model serving and deployment capabilities with AI observability, audit trails, and fallback logic

- Model-agnostic architecture preventing vendor lock-in
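Fallback logic and a model-agnostic architecture usually come down to coding against a provider-neutral interface with a thin adapter per model provider. A minimal sketch under that assumption: `TextModel`, `ModelRouter`, and the two stub models are hypothetical names, and the stubs stand in for adapters around real provider SDKs, whose APIs are not shown here.

```python
from typing import Optional, Protocol


class TextModel(Protocol):
    """Provider-neutral interface (hypothetical); each provider SDK gets a thin adapter."""

    name: str

    def generate(self, prompt: str) -> str: ...


class ModelRouter:
    """Tries models in priority order, falls back on failure, and keeps an audit trail."""

    def __init__(self, models: list[TextModel]):
        if not models:
            raise ValueError("at least one model is required")
        self.models = models
        self.audit_log: list[tuple[str, str]] = []  # (model name, outcome)

    def generate(self, prompt: str) -> str:
        last_error: Optional[Exception] = None
        for model in self.models:
            try:
                result = model.generate(prompt)
            except Exception as exc:  # real code would catch provider-specific errors
                self.audit_log.append((model.name, f"error: {exc}"))
                last_error = exc
                continue
            self.audit_log.append((model.name, "ok"))
            return result
        raise RuntimeError("all models failed") from last_error


# Hypothetical stubs standing in for real provider adapters.
class TimingOutModel:
    name = "primary"

    def generate(self, prompt: str) -> str:
        raise TimeoutError("request timed out")


class EchoModel:
    name = "fallback"

    def generate(self, prompt: str) -> str:
        return f"[fallback] {prompt}"


router = ModelRouter([TimingOutModel(), EchoModel()])
print(router.generate("Summarize claim #123"))  # [fallback] Summarize claim #123
print(router.audit_log)  # [('primary', 'error: request timed out'), ('fallback', 'ok')]
```

Because callers depend only on `TextModel`, switching or reordering providers changes the list passed to `ModelRouter`, not the calling code, which is the practical meaning of avoiding lock-in.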

AI solutions operate as continuous pipelines where data flows in, outputs serve production needs, and feedback loops enable model retraining. Each stage requires automation, observability, and scalability. Vendors should clearly explain their approach to this process and provide monitoring tools to facilitate continuous improvement of the AI model.

Step 5. AI vendor evaluation in the field: RFP and PoC development

To avoid misunderstandings down the road, write a comprehensive request for proposal (RFP) with detailed specifications.

For initial RFPs, focus on the first four sections below and address the others during a consultation call.

Core RFP components

| Section | Purpose | What to include |
|---|---|---|
| 1. Executive summary | Provide background and business motivation. | Describe your company, core objectives, and the business problem AI is expected to solve. Outline strategic relevance (e.g., customer retention, operational efficiency, compliance). |
| 2. Project scope and objectives | Define measurable outcomes. | List primary use cases, success metrics (KPIs), and expected timeframes (e.g., proof of concept in 3 months, production in 9). |
| 3. Functional requirements | Clarify what the system must do. | Detail AI features (e.g., predictive analytics, NLP, RAG search), data inputs, and user interactions. Prioritize requirements as must-have, nice-to-have, and optional. |
| 4. Technical environment | Ensure architectural compatibility. | Specify current data architecture, existing tools (data warehouse, ETL, monitoring systems), APIs, and integration points. |
| 5. Constraints and compliance | Communicate non-negotiables. | Include data residency, security standards (GDPR, HIPAA, SOC 2), performance expectations, and cost boundaries. |
| 6. Vendor response format | Standardize submissions. | Ask vendors to describe: solution architecture, model training and deployment approach, data handling methodologies, and observability frameworks. |
| 7. Project management and communication | Set collaboration expectations. | Define preferred reporting format, decision-making process, and key stakeholder roles. |
| 8. Post-deployment support | Plan for sustainability. | Request details on knowledge transfer, documentation standards, retraining cycles, and ongoing maintenance terms. |

Proof of concept testing

Another way to validate vendor trustworthiness without incurring significant risks is through proof of concept (PoC) development. A PoC typically takes four to six weeks. Besides validating the vendor’s capabilities, it also helps measure initial AI ROI and convince executives to increase investments following successful results.

Provide vendors with actual business data (anonymized if necessary) rather than synthetic datasets and create user stories that represent your most critical workflows and edge cases. Document current pain points and their impact on the business, and test whether the vendor’s solution effectively addresses these specific issues.

A good PoC should reveal:

- How quickly the vendor can adapt to your environment.

- Whether the proposed architecture performs as expected.

- How well they document and explain results.

- The level of collaboration and transparency under real project pressure.

Pro tip: Treat the PoC as a rehearsal for partnership. The vendor who listens, experiments responsibly, and iterates fast is often the one who’ll perform best in full-scale implementation.

Step 6. Navigate pricing and contractual terms strategically

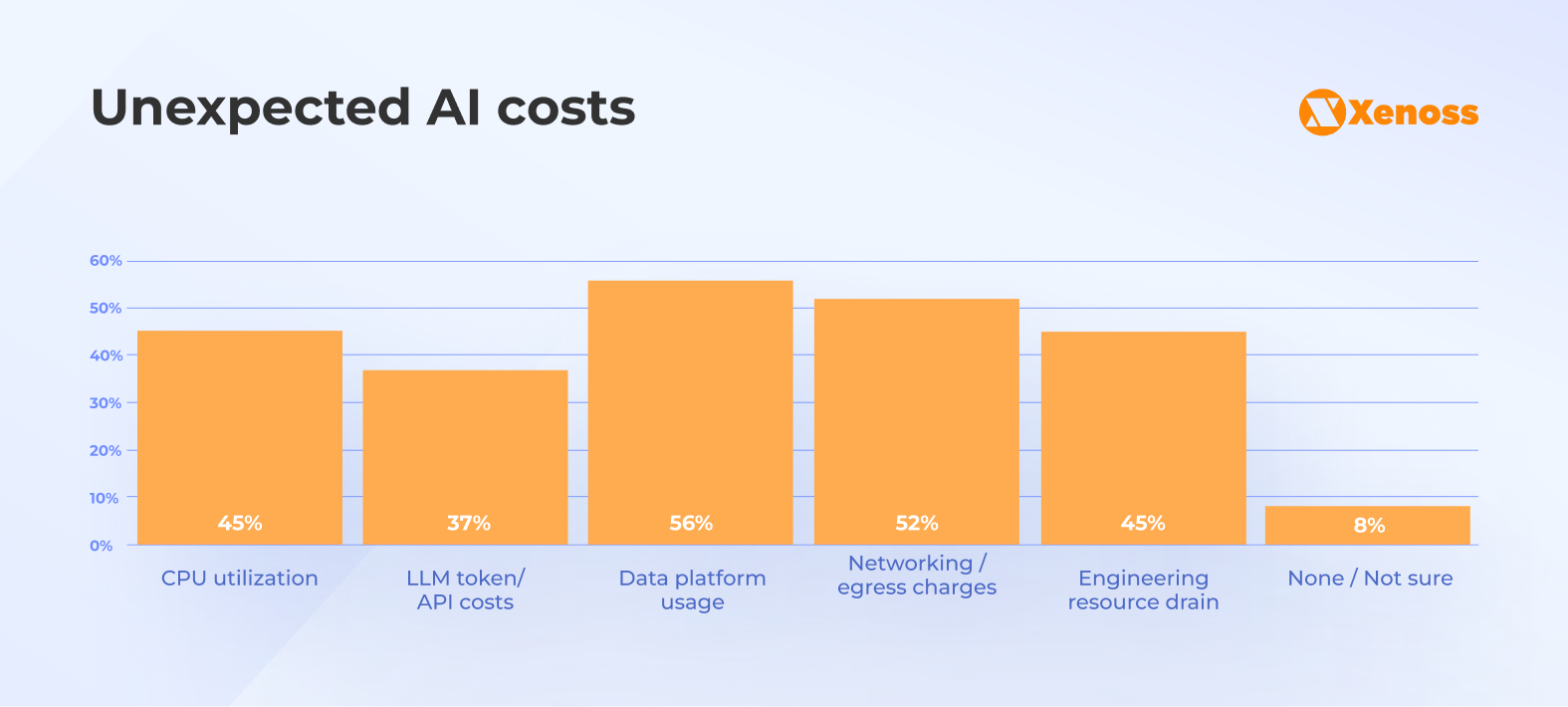

85% of organizations exceed their AI cost forecasts by 10%. Data platforms and networking charges are the top sources of unexpected AI costs.

When estimating AI project costs, organizations should look beyond LLM token costs and CPU/GPU expenses.

Calculate the total cost of ownership of AI systems

Hidden expenses accumulate quickly across multiple categories that vendors rarely disclose upfront. A comprehensive TCO framework should account for:

- Software licensing and subscriptions for AI models and tools

- On-premises infrastructure vs. cloud costs (difference in capital and operational expenses)

- Ongoing maintenance and support

- Data preparation and integration processes

- Legacy system integration costs

- Knowledge transfer and team training investments

- Scaling costs as data volumes grow
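Adding these categories up over several years, rather than looking at year-one fees, is what turns the list into a TCO estimate. A minimal sketch, assuming each category has a one-time cost plus a recurring annual cost that may grow with data volumes; the `CostLine` structure, the category names, and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostLine:
    category: str
    upfront: float  # one-time cost, e.g., legacy integration or initial team training
    annual: float  # recurring cost in the first year
    annual_growth: float = 0.0  # e.g., 0.5 if the cost grows 50% a year with data volume


def total_cost_of_ownership(lines: list[CostLine], years: int) -> dict[str, float]:
    """Sum one-time and recurring costs per category over the planning horizon."""
    totals: dict[str, float] = {}
    for line in lines:
        recurring = sum(line.annual * (1 + line.annual_growth) ** year for year in range(years))
        totals[line.category] = line.upfront + recurring
    totals["TOTAL"] = sum(totals.values())
    return totals


# Illustrative three-year estimate; every figure is made up.
estimate = total_cost_of_ownership(
    [
        CostLine("Model licensing", upfront=0, annual=50_000),
        CostLine("Data preparation and integration", upfront=80_000, annual=10_000),
        CostLine("Cloud compute", upfront=0, annual=100_000, annual_growth=0.5),
    ],
    years=3,
)
for category, cost in estimate.items():
    print(f"{category}: ${cost:,.0f}")
# Model licensing: $150,000
# Data preparation and integration: $110,000
# Cloud compute: $475,000
# TOTAL: $735,000
```

In this made-up example, the compute line that grows with data volume ends up at more than three times its year-one cost over the horizon, which is exactly the kind of scaling cost a year-one quote hides.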

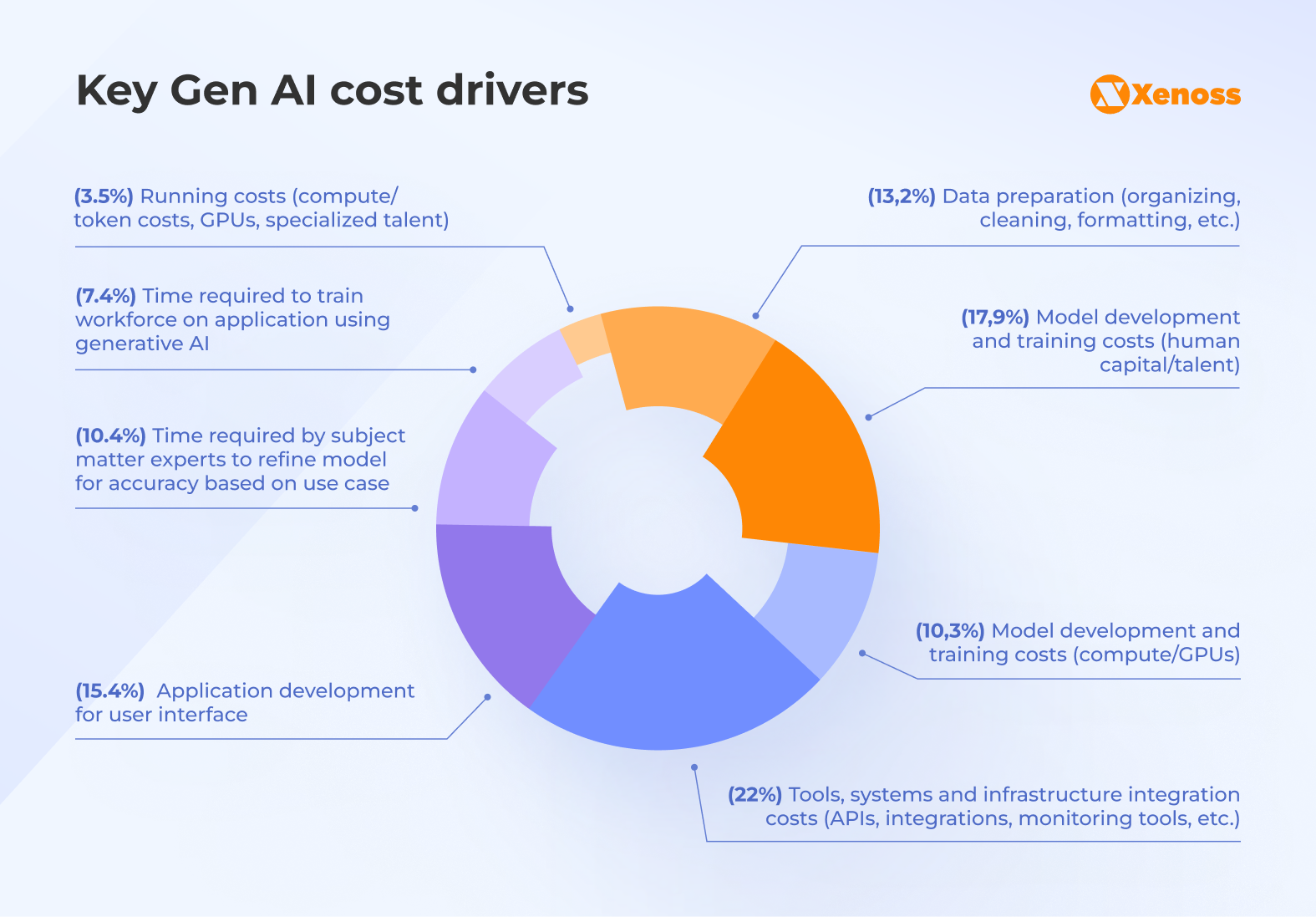

A report on hidden AI costs reveals that model development and training, in terms of human capital and computing resources, account for the largest share of the AI bill (28.2%). However, less obvious aspects, such as data preparation, workforce training, and model refinement and fine-tuning, should also be considered at the outset of your collaboration with the AI vendor.

Skilled vendors have experience building comprehensive TCO models for AI project development, deployment, and cross-company implementation. They realize that planning for implementation complexity upfront prevents expensive surprises later.

Negotiate service-level agreements (SLAs) that protect business continuity

Traditional SLAs typically focus on uptime guarantees and application response times. With AI’s non-deterministic nature added to the mix, however, these agreements require closer attention from both the client and the vendor.

When an AI chatbot maintains 100% availability but provides biased answers in 35% of cases, the solution fails to deliver business value.

Beyond basic system response and availability requirements, AI SLAs should include output quality metrics, such as the percentage of accurate outputs and user satisfaction scores, and model performance metrics, including precision, recall, and F1 scores.
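Model performance metrics only work as SLA terms if both parties compute them the same way on an agreed evaluation set. A minimal sketch, assuming a binary classification task (for example, flagging fraudulent claims) where reviewers supply the ground-truth labels; the threshold values in `SLA_THRESHOLDS` are hypothetical and belong in the contract, not in code defaults.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Precision, recall, and F1 for a binary task where 1 marks the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # flagged but wrong
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical contractual floors for illustration only.
SLA_THRESHOLDS = {"precision": 0.90, "recall": 0.85, "f1": 0.87}


def sla_breaches(metrics: dict[str, float], thresholds: dict[str, float] = SLA_THRESHOLDS) -> list[str]:
    """Names of metrics that fall below their agreed floor."""
    return [name for name, floor in thresholds.items() if metrics[name] < floor]


labels = [1, 1, 1, 1, 0, 0, 0, 0, 1, 1]
predictions = [1, 1, 1, 0, 0, 0, 1, 0, 1, 1]
metrics = classification_metrics(labels, predictions)
print({name: round(value, 3) for name, value in metrics.items()})  # {'precision': 0.833, 'recall': 0.833, 'f1': 0.833}
print(sla_breaches(metrics))  # ['precision', 'recall', 'f1']
```

Agreeing on the evaluation set, the labeling process, and the review cadence matters as much as the thresholds themselves, since the same model can pass or fail depending on which sample it is scored on.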

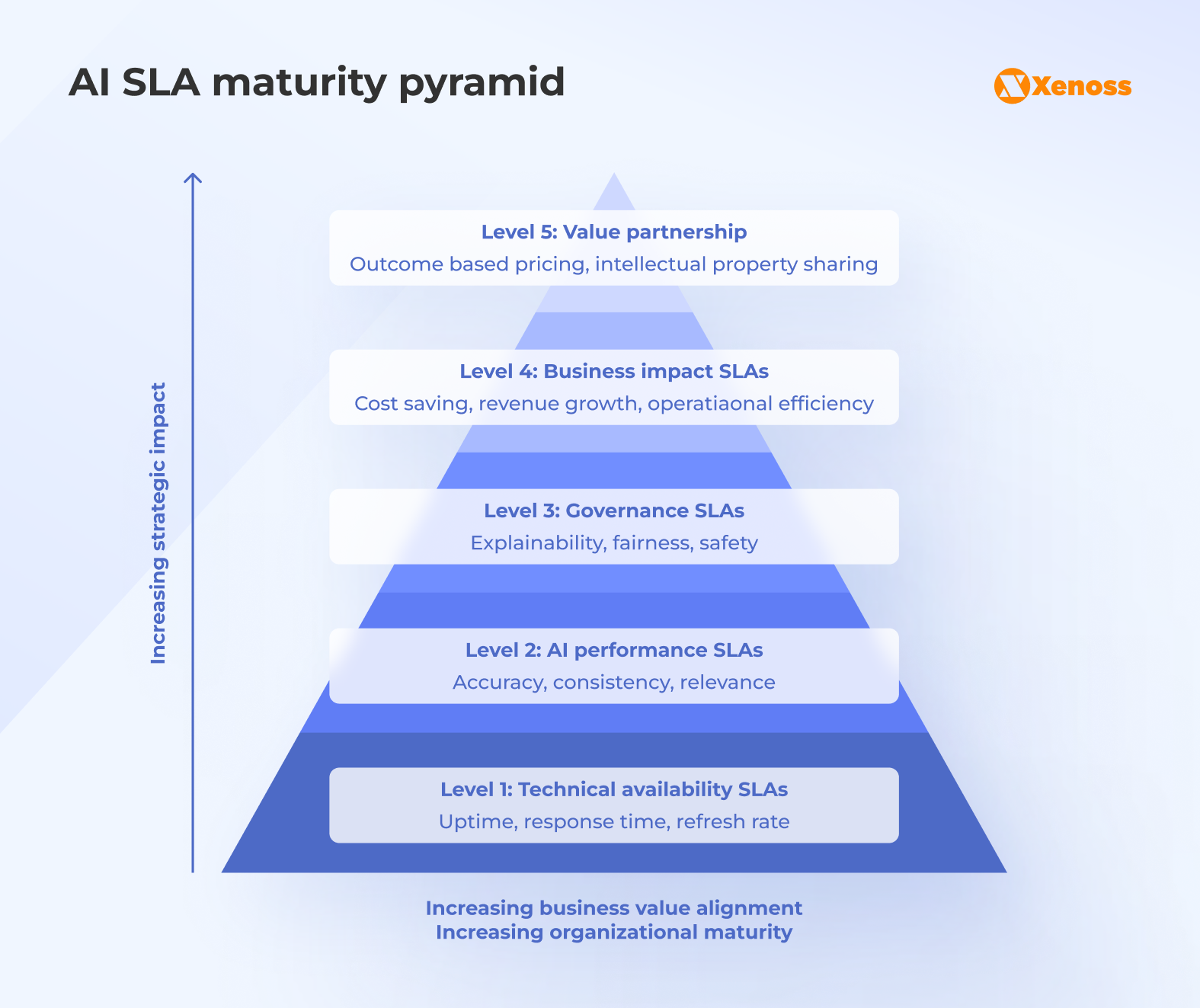

The SLA maturity pyramid above illustrates that technical availability and performance sit at levels 1 and 2 of AI SLAs, forming the foundation for AI projects.

AI governance, business impact, and value partnership are at the top of the pyramid, indicating that greater AI maturity shifts the focus from uptime metrics to shared business outcomes, ethical standards, and innovation partnerships.

The more mature the business and the more strategically important the AI solution, the more complex its SLAs become, and the more thoroughly they should be documented in vendor contracts.

Step 7. Partnership viability beyond technical capabilities

Organizations can waste millions on vendors who deliver solid code but fail at collaboration, communication, and long-term partnership requirements.

Like traditional software development projects, collaboration with an AI and data engineering company requires well-established communication strategies and post-implementation support services.

Cultural fit and communication effectiveness

Poor cultural alignment and communication barriers lead to disengagement and project delays. Evaluate these partnership indicators during your AI vendor selection process:

- Collaboration approach. Observe how vendors handle cross-functional meetings. Do they ask clarifying questions about your business context or immediately jump to technical solutions?

- Communication strategy. Track response times during the evaluation phase to gauge how promptly vendors communicate. Once development is underway, seasoned AI vendors should also respect enterprise clients’ time: many clients skip daily check-ins but expect detailed monthly reports that validate results against the initial objectives.

- Problem-solving methodology. Present vendors with a realistic challenge scenario. Watch whether they seek to understand root causes or propose generic solutions.

Knowledge transfer and ongoing support

Effective knowledge transfer determines whether your team can maintain and evolve AI systems after implementation. Without proper transition planning, organizations risk creating vendor dependencies that limit future flexibility. Consider the following:

- Documentation standards. Request examples of technical documentation from previous projects. Look for comprehensive architecture guides, troubleshooting procedures, and operational runbooks.

- Training methodology. Verify that vendors can provide hands-on training for your in-house team beyond document sharing. If an AI partner also offers training materials for AI software end-users, it’s a huge plus and a time-saver for your change management team.

- Support structure. Understand their ongoing support model, response time commitments, and escalation procedures for different severity levels.

The investment in proper knowledge transition pays off in reduced vendor dependency and improved AI solution maintainability.

Xenoss, for instance, integrates seamlessly into your in-house team from day one, facilitating knowledge and skill transfer as smoothly as possible. Additionally, our team prepares thorough project documentation and user manuals to help AI users quickly get started with the system.

Common pitfalls in AI vendor relationships and how to avoid them

For first-time AI buyers, the biggest challenge is realistically assessing infrastructure readiness for AI adoption. Organizations often overestimate their data quality and system integration capabilities, making them vulnerable to vendors who oversell unnecessary services.

If you’re just stepping into the AI market, request AI consulting services and look for vendors who are honest with you rather than overpromising. Also, be aware of these common pitfalls that can arise in AI vendor relationships.

- Expectation gap. When investing in emerging technologies like AI, businesses often set expectations too high. But AI is only as transformative as your current infrastructure and data readiness allow. To minimize issues and conflicts, communicate your concerns, constraints, and expectations openly early on, and schedule regular mid-project check-ins to stay on the same page with your vendor.

- Underestimating data readiness. Organizations consistently overestimate their data quality and accessibility. They often discover, months into projects, that significant data cleansing and integration work is required. Conduct a thorough data audit before vendor selection, be transparent about data limitations during the RFP process, and budget up to 20% of resources for data preparation work.

- Unclear data ownership and compliance. Contracts that do not define who controls data, trained models, or derived insights can lead to serious disputes later. You should clarify intellectual property and data governance from the outset and pay close attention to contract and SLA development.

Every partnership, even the unsuccessful ones, moves you forward. Each project gives you a clearer picture of your AI goals, your team’s strengths, and what it takes to build a more effective collaboration next time.

Final thoughts

How you approach AI vendor selection and management determines whether your AI investment delivers business value or becomes expensive operational overhead.

First, look for teams with domain-specific data infrastructure experience, proven production-scale implementations, and a solid presence in the AI and data engineering market. Vendors who understand data foundations and AI workflows deliver solutions that integrate well across your organization and scale effectively over time.

Once you find the right partner, align their technical expertise with your business goals, discuss budgets and TCO early on, and develop comprehensive SLAs aligned with long-term business strategy.

Xenoss guarantees all of the above and stays with you through it all: from promising proofs of concept to demanding production rollouts that require persistence and methodical execution.