Disclaimer: The information provided in the article is accurate as of September 2025 and may change as AI technology continues to advance.

Let’s start with a thought experiment. Imagine your enterprise is facing a chess match against the future. The pieces aren’t pawns and knights; they’re language models: large, powerful, and capable of transforming how business gets done.

But which piece do you advance first? OpenAI’s GPT, Anthropic’s Claude, or Google’s Gemini? Or do you at all?

Choosing the right Large Language Model (LLM) platform is a technology decision that turns strategic, likely to modify your productivity and operational efficiency.

This guide evaluates implementation, TCO, integration, and security benchmarks to help you select the platform that aligns with your operational priorities and risk tolerance.

The enterprise AI decision matrix: Why the right LLM platform matters

Today’s enterprise LLMs have already graduated from chatbots to business cognitive infrastructure. The right models automate complex, time-consuming tasks, surface empirical evidence from enterprise knowledge bases for decisions, and speed up innovation cycles across customer service, content creation, trend analysis, internal operations, and strategic reasoning.

The business case for enterprise LLMs in numbers

Speed: AI‑enabled processes can slash cycle times by 40‑60%, turning days of document processing into hours and freeing teams to focus on higher‑value work.

Scale: AI agents now resolve 80% of customer-support queries, speeding up service by 52% and improving service quality without a proportional increase in headcount.

Scope: LLMs automate 70–90% of manual operations across industries, powering compliance, market research, legal reviews, and predictive analytics for high-value decision support.

Strategy: As of 2025, 37% of enterprises deploy five or more specialized AI models to match specific workflows, maximizing ROI and minimizing vendor lock-in through multi-model strategies.

With AI everywhere, and the landscape often hard to parse, let’s start with a quick fact-check of the three main players.

OpenAI: The Microsoft marriage

Launched by Sam Altman, Elon Musk (he left the board in 2018), and others as a nonprofit in 2015, OpenAI pivoted to a capped-profit model in 2019. The company’s valuation skyrocketed from $157 billion to $500 billion between October 2024 and August 2025, driven by its exclusive Microsoft Azure partnership.

Musk tried to buy back control with a $97.4 billion hostile bid in February 2025, but the board rejected him, calling it “an attempt to disrupt his competition.”

Anthropic: The safety-first upstart

Founded by ex-OpenAI siblings Dario and Daniela Amodei, Anthropic’s valuation tripled in just 6 months, jumping from $61.5B in March to $183B in September 2025. The company secured backing from both Amazon and Google, engaging with both cloud giants while maintaining flexibility.

Despite its safety-first branding, Anthropic accepted up to $200 million in defense contracts from the Pentagon and is seeking investments from Middle Eastern sovereign wealth funds.

Google Gemini: The context window giant

Rooted in DeepMind’s research, Google introduced Gemini in 2023 as Google’s AI counterattack to OpenAI’s dominance. Gemini’s 1 million token context window can process up to 1,500 pages of text or 30,000 lines of code simultaneously, analyzing vast datasets in a single conversation. The system is deeply integrated across Google’s product stack, creating what could be considered the largest AI deployment in history.

With all its technical advantages, Gemini is trailing behind in enterprise adoption due to late market entry.

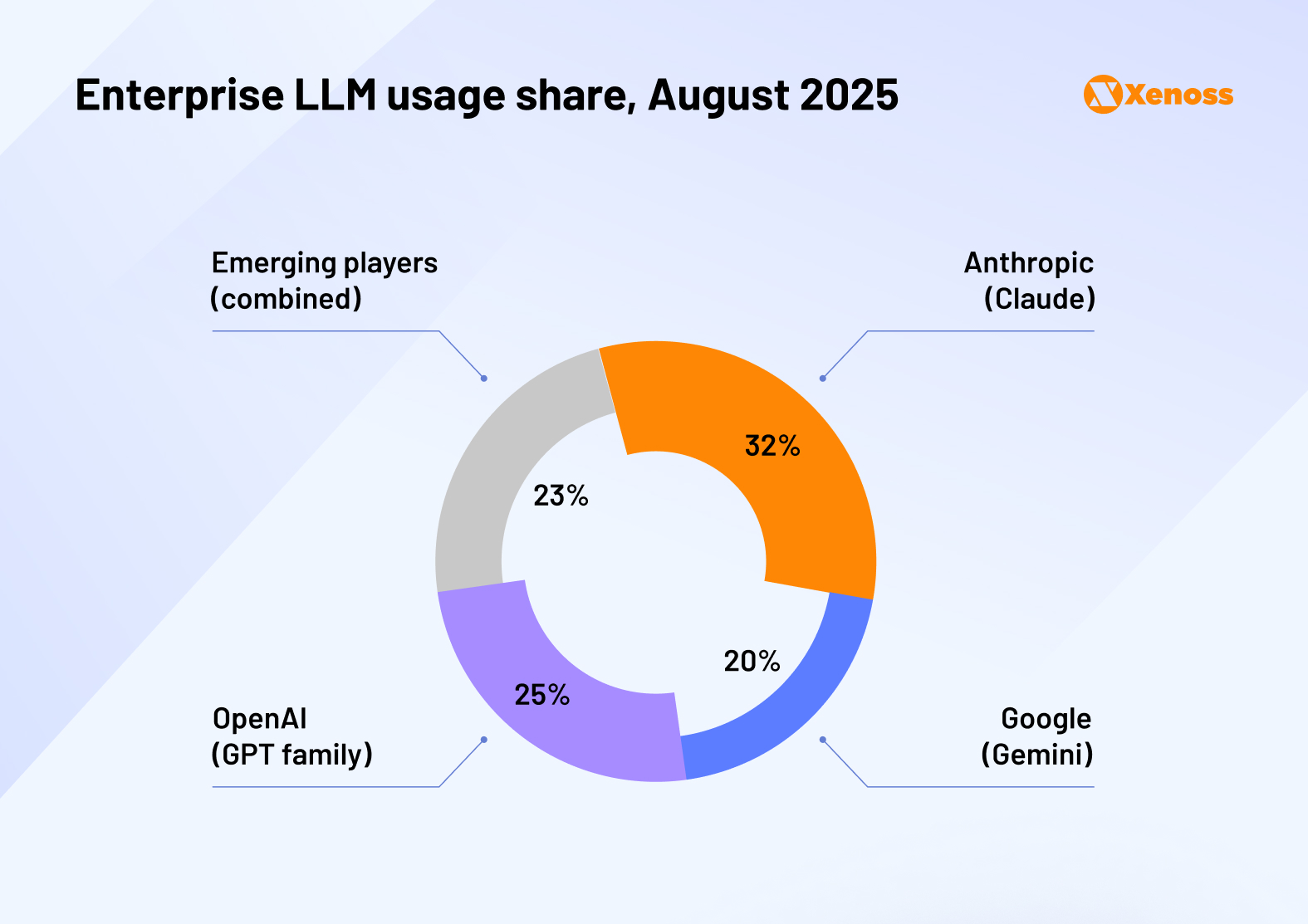

Originally with distinct strengths, each major enterprise LLM platform now serves a different purpose, surging the market adoption. Enterprise LLM spending rose to $8.4 billion by mid-2025 (up from $3.5 billion in late 2024) as more businesses moved models into full production.

As the usage breakdown stands, Anthropic has overtaken OpenAI’s early lead through its focus on safety, while Google is rising through ecosystem integration.

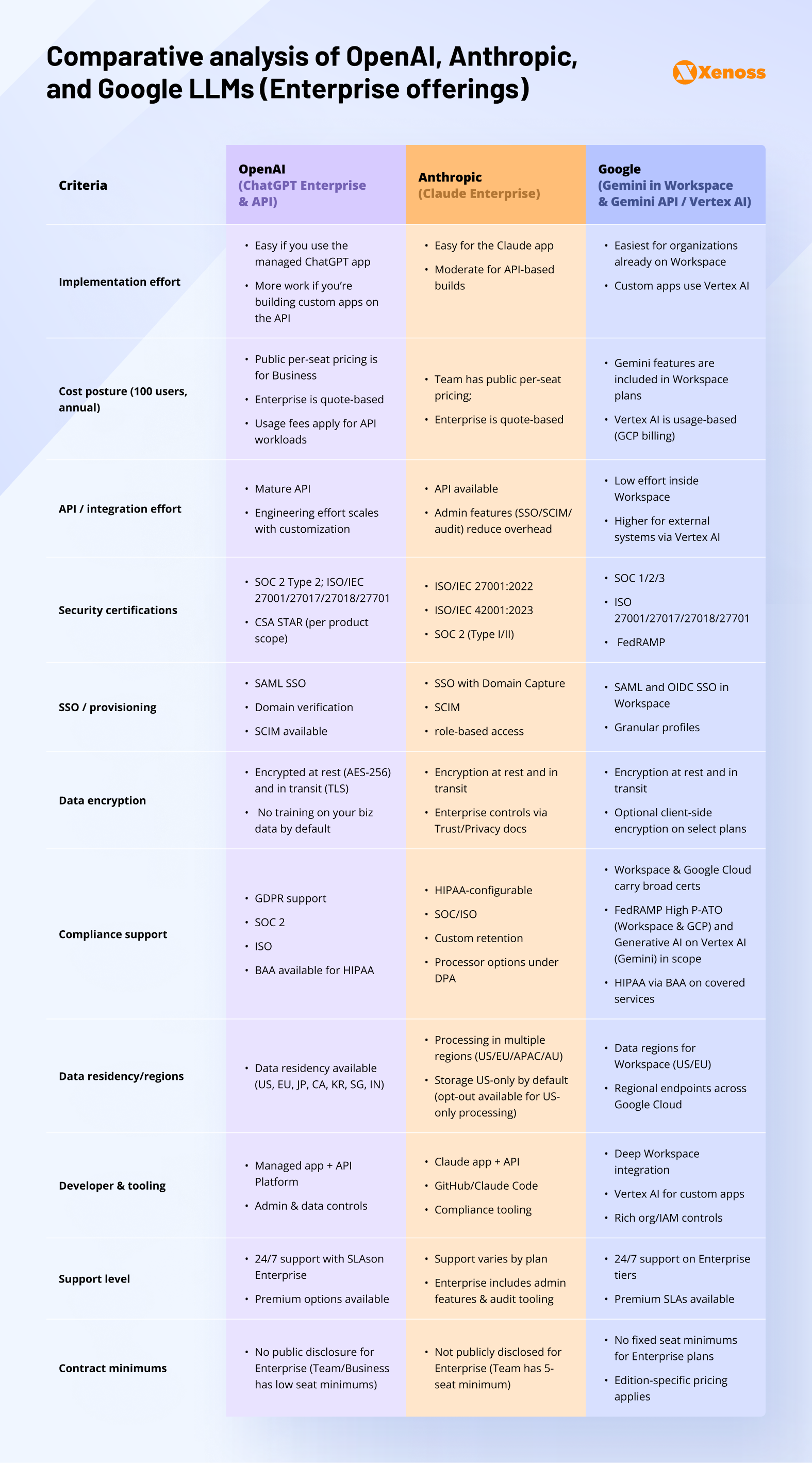

To minimize budget and security risks, market trends are not enough, though. When selecting an LLM platform, evaluate these four practical factors:

- Implementation complexity: How easily does the model integrate into existing workflows without disrupting operations?

- Total Cost of Ownership: What are the ongoing subscription, API usage, customization, and scaling expenses?

- Integration requirements: Does the platform align with existing cloud service ecosystems and enterprise software stacks?

- Security and compliance: Does the vendor meet industry-specific standards for data privacy and regulatory governance?

Implementation complexity analysis

The implementation speed and quality of LLMs for enterprises depend on data integration, model customization, infrastructure scalability, security, compliance, and ongoing maintenance.

OpenAI Enterprise Platform

OpenAI’s enterprise platform became more accessible to businesses in 2025, offering AI capabilities with the latest GPT-5 models in Azure AI Foundry, along with trusted enterprise-grade security, compliance, and privacy protections that enterprise IT teams require.

The platform is designed for most standard business applications. Companies can implement OpenAI’s platform within weeks. The setup process integrates with existing company systems and requires minimal technical expertise for basic use cases.

Key implementation challenges:

- Usage planning: High-volume applications need careful capacity planning

- Custom models: Training custom AI models requires extra technical resources

- System integration: Connecting with existing business software may require additional enterprise application modernization services

- Multi-environment setup: Managing development and production systems adds complexity.

Business considerations:

The platform works best for enterprises with clear use cases and realistic expectations. Organizations already using the Microsoft suite may find easier implementation paths, while others should factor in additional integration time and costs.

Success depends on having appropriate technical support, whether internal development teams or external consultants, and allowing enough time for staff training and system integration.

Anthropic Claude Enterprise

Anthropic’s Claude Enterprise, backed by the latest Claude Opus 4.1 and proprietary reinforcement learning, offers enterprise-grade security and supports stepwise, tool-integrated AI agents. It aligns with standard enterprise workflows, offering a relatively short implementation timeline. Setup typically includes single sign-on, audit logging, and role-based access that align with existing systems.

Even though Claude Code smoothes out developer onboarding, complex agent workflows, or specialized tool integrations can add time and require deeper technical expertise.

Main implementation issues:

- Frequent updates: Ongoing feature development requires regular training programs for staff

- Identity management: SCIM integration may demand advanced expertise in identity systems

- Compliance setup: Custom data retention policies need legal review in regulated industries

- Directory configuration: Multi-directory support can be complex for large organizations

Business considerations:

Claude Enterprise is well-suited for sophisticated AI-agent workflows via the Model Context Protocol (MCP), which simplifies integrations with external tools and services. Its reinforcement-learning approach performs well in iterative, multi-step problem-solving.

The application’s success depends on clearly defined use cases and allocating time for teams to adapt to an evolving feature set.

Google Gemini Enterprise

Google’s enterprise platform builds on existing Workspace infrastructure, pairing Gemini 2.5 capabilities with enterprise-grade data protection and tight workflow integration.

For current Business, Enterprise, and Frontline customers, implementation is typically seamless thanks to built-in compliance features and industry-specific validation.

Some implementation concerns:

- Non-Workspace complexity: Organizations outside Google’s ecosystem face steep learning curves

- Cloud expertise: Advanced security configurations require Google Cloud Platform knowledge

- Network redesign: Zero-egress deployment models demand significant infrastructure changes

- Cross-platform integration: Connecting with non-Google systems requires custom development work.

Business considerations:

Gemini Enterprise is strongest where companies are already invested in Google’s stack, integrating naturally with Workspace tools and processes. Advanced options, such as AI-agent orchestration and private network deployments via Vertex AI, are powerful but depend on solid GCP infrastructure skills.

Success hinges on existing Workspace adoption and teams familiar with Google Cloud services, or a budget for specialized AI consulting support.

Total Cost of Ownership (TCO) factors

All three vendors price their APIs by the number of tokens used (you pay for the model’s input and output) and offer separate per-seat plans for chat apps. TCO varies most by model tier choice (frontier vs. lighter models), output volume, and data stack integration complexity.

Each provider publicly lists token pricing, but the enterprise seat pricing is often negotiated. Across the market, list prices continue to fluctuate, but the pattern remains stable: lightweight models are generally cheaper; frontier models, on the other hand, cost more and are best reserved for higher-stakes reasoning.

OpenAI Enterprise TCO

OpenAI’s API pricing spans GPT-5 (frontier) through lower-cost mini tiers. OpenAI tends to be the most expensive per million tokens processed, justified by its model power and maturity.

For example (USD per 1M tokens), GPT-5 is $1.25 input / $10 output, and GPT-4o mini is $0.60 input / $2.40 output. Enterprise add-ons like reserved capacity and priority processing exist but are optional. API usage is billed separately from ChatGPT subscriptions.

Hidden costs include:

- API overage charges can be substantial

- Custom ML model fine-tuning requires separate pricing discussions

- Azure integration fees for organizations using GPT-5 through Microsoft

- Professional services for complex integrations typically go up

- Ongoing training and change management costs as new models are released

Anthropic Claude Enterprise TCO

Anthropic’s API pricing is tiered by model. Anthropic’s Claude is positioned as slightly cheaper than GPT-4 API on token costs, with Claude Instant variants for lightweight tasks optimizing expense.

Current headline rates (USD per 1M tokens) for Claude Sonnet 4 are $3 input / $15 output, and Claude Opus 4.1 is $15 input / $75 output. The prices include support for features like long context and caching, where applicable.

Additional costs to consider:

- Premium seat upgrades for power users add 30-50% to base costs

- Advanced analytics and audit features require higher-tier subscriptions

- Integration services typically cost more for complex deployments

Google Gemini Enterprise TCO

Google Gemini pricing is most attractive for organizations already on Workspace and Google Cloud because many AI features are now bundled into existing subscriptions, and API token prices are aggressive on the lighter model tiers.

Starting in 2025, Gemini capabilities are included in Workspace Business and Enterprise plans, ranging from $14.40/user/month to $23.40/user/month accordingly. Exact per-edition pricing varies by plan, region, and contract, so it’s best to refer to Google’s live pricing page rather than relying on fixed dollar figures.

Indirect costs to watch:

- For non-Workspace stacks, integration and service fees can dominate first-year costs (specifically identity, network, and data protection setups)

- The Optional AI Security features might be an add-on

- Advanced setups often use additional Google Cloud (e.g., Vertex AI, networking), billed separately

- Usage of grounding with Google Search and image/video generation is metered separately, affecting overall pricing

Integration requirements evaluation

All three major AI vendors support enterprise integration, but they differ in terms of ecosystem fit and technical demands, which influence the total effort and cost.

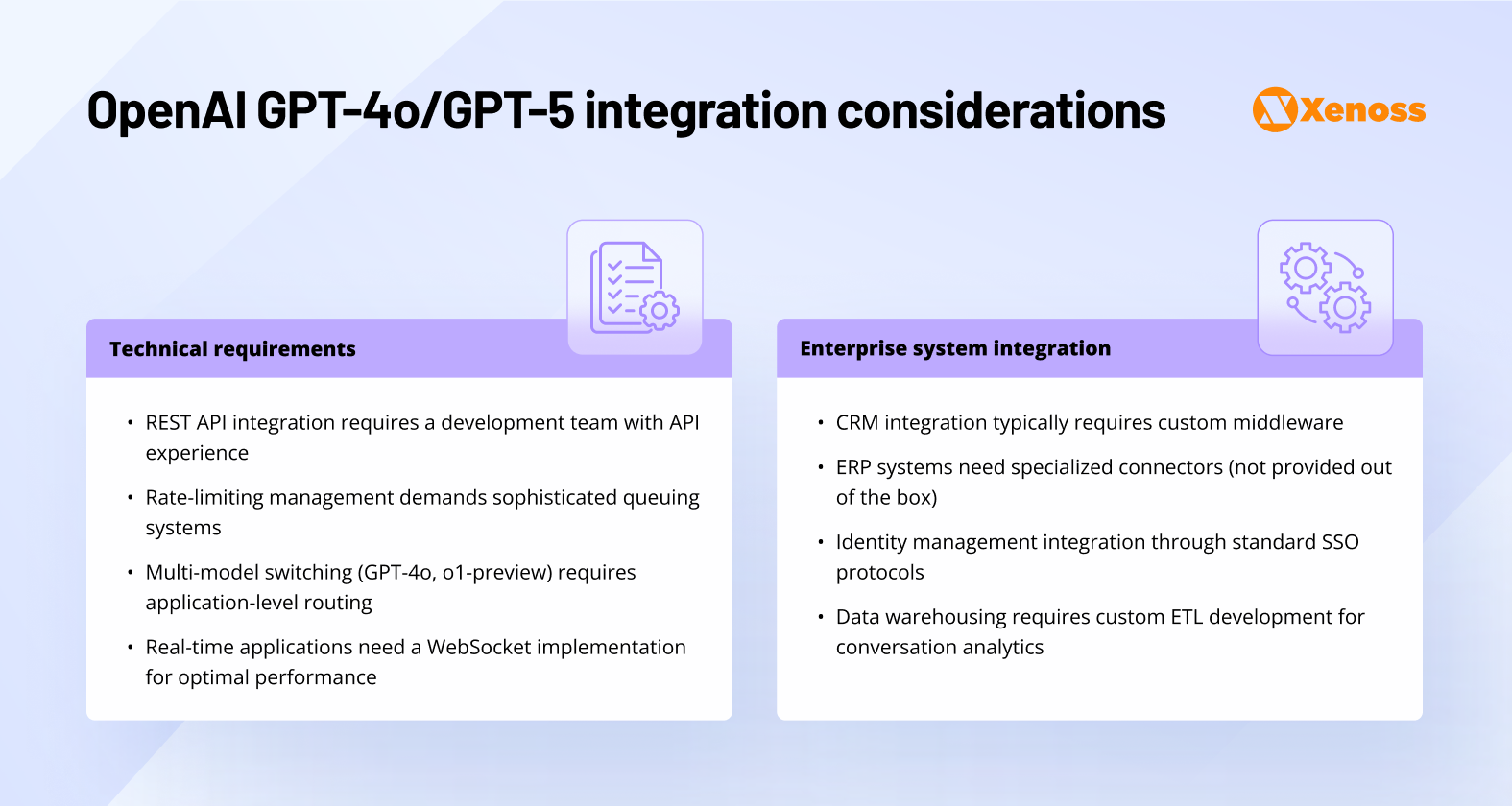

OpenAI GPT models integration architecture

OpenAI supports broad platform compatibility with mature SDKs and support for multiple third-party tools, enabling flexible integration across diverse environments. Its API-first approach offers maximum customization, although complex multi-agent or extended workflows often require external vendors or third-party services.

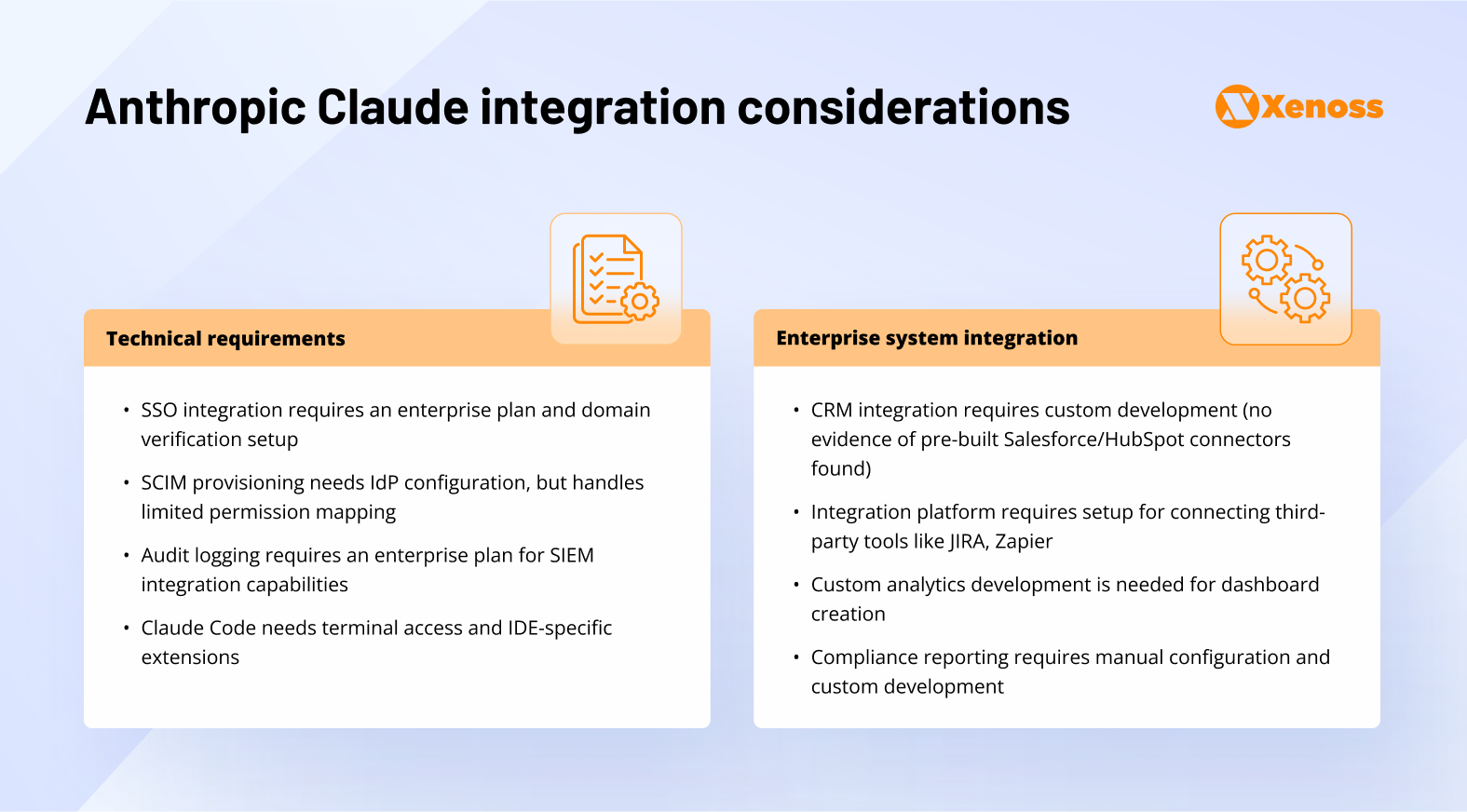

Anthropic Claude integration ecosystem

Anthropic’s open-standard Model Context Protocol (MCP) supports smooth modular integration with external tools, like search engines, coding environments, and calculators. It speeds up application development without heavy engineering while providing a secure foundation optimized for AI agent workflows.

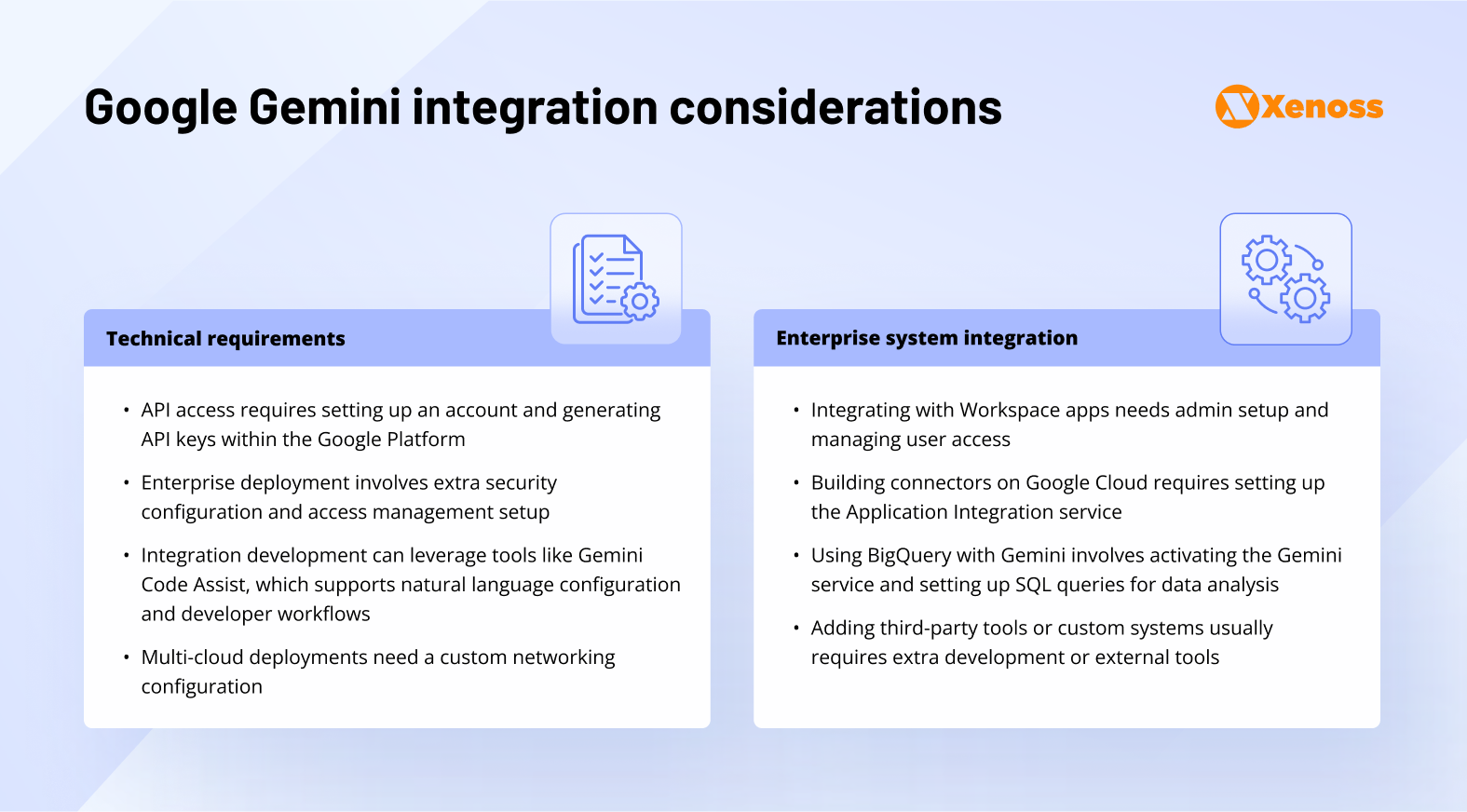

Google Gemini integration framework

Gemini is deeply integrated into the Google Cloud ecosystem and Google Workspace, providing seamless workflows within existing enterprise stacks. It supports Oracle ERP, HR, and CX systems through Vertex AI Agent Engine, improving automation for enterprises using Google infrastructure.

Gemini’s strength lies in built-in control and compliance features, though optimal deployment requires familiarity with Google Cloud architecture.

Security and compliance features: Enterprise LLM platform comparison

Enterprise AI deployment hinges on security, as enterprises feeding sensitive data into AI systems need bulletproof protection. All three platforms meet basic enterprise requirements through SOC 2 certifications and encryption standards, but differentiation emerges in specialized compliance frameworks.

Google Gemini leads with FedRAMP High authorization (the first generative AI platform to achieve this federal certification) alongside HIPAA compliance for healthcare deployments.

OpenAI provides Business Associate Agreements for limited HIPAA scenarios.

Anthropic offers SOC 2-aligned frameworks with zero-data-retention options.

Beyond standard certifications, each platform addresses AI-specific security challenges through distinct operational architectures.

Access control

Claude Enterprise provides SSO integration and Domain Capture functionality, connecting with existing identity providers. This reduces IT friction while maintaining security standards.

OpenAI goes further with Compliance API integrations, SCIM provisioning, and granular GPT controls that support enterprise-scale user management. Workspace owners control connector access through role-based permissions, enabling least-privilege implementation across AI tools.

Google leverages its enterprise heritage. Workspace Business, Enterprise, and Frontline customers get enterprise-grade data protection built into Gemini access, inheriting Google’s mature identity management infrastructure.

Data handling

Data retention policies determine enterprise viability.

Google’s approach reflects its cloud-first architecture. Commercial and public-sector Workspace customers receive enterprise-grade protections, though organizations must evaluate Google’s broader data ecosystem alignment with their requirements.

Anthropic offers zero-data-retention options, addressing the core concern of organizations hesitant to share proprietary information with AI systems. This proves essential for financial services and legal firms where data exposure creates liability.

OpenAI states that organization data remains confidential and customer-owned across Enterprise, Team, and API platforms. However, implementation specifics matter more than policies.

AI-specific threat protection

Traditional security frameworks don’t address AI-native attacks.

Google Gemini incorporates layered defense strategies specifically for prompt injection mitigation, recognizing that AI systems face unique attack vectors requiring specialized protections.

Anthropic deployed automated security reviews for Claude Code as AI-generated vulnerabilities increase. This capability addresses growing concerns about AI-generated code security, providing automated vulnerability scanning before deployment.

OpenAI has added IP allowlisting controls for enterprise security, enabling network-based access restrictions, which is critical for industries with strict network segmentation.

Operational security and ethical governance

The bar for AI in the enterprise is safety by design, combining operations within the current security architecture with responsible-AI compliance as standards shift.

Integration ecosystems. OpenAI’s ChatGPT Enterprise Compliance API integrates with third-party governance tools like Concentric AI, extending built-in data loss prevention beyond platform boundaries. This ecosystem approach recognizes that enterprise security spans multiple tools and vendors.

Anthropic takes a different path with Claude Code’s expanded enterprise features: administrative dashboards for oversight, native Windows support for secure deployment, and multi-directory capabilities for complex organizational structures. These operational tools directly impact security management at scale.

Google leverages its Workspace ecosystem advantage. Gemini maintains compliance with COPPA, FERPA, and HIPAA regulations while inheriting the same technical support infrastructure as core Workspace services. This unified approach reduces compliance complexity across collaborative tools.

Ethical frameworks as differentiators. With responsible AI transforming into a regulatory requirement, each platform’s ethical approach creates distinct compliance advantages.

Anthropic leads with Constitutional AI, training Claude on explicit ethical principles derived from sources including the UN Declaration of Human Rights. The provider achieved ISO/IEC 42001:2023 certification — the first international standard for AI governance. This systematic approach provides auditable ethical frameworks that satisfy regulatory scrutiny.

OpenAI focuses on output safety through content filtering and harm reduction, teaching AI systems to identify and avoid harmful responses. While effective for content safety, this approach emphasizes reactive measures over systematic ethical governance.

Google integrates responsible AI principles throughout development, updating its Frontier Safety Framework for EU AI Act compliance preparation. Gemini’s enterprise protections ensure customer content isn’t used for other customers or model training, addressing data contamination concerns.

The compliance angle. For heavily regulated sectors, like healthcare, finance and banking, government, these frameworks translate directly into procurement requirements. Anthropic’s Constitutional AI and ISO 42001 certification create the strongest foundation for organizations needing demonstrable ethical AI governance. OpenAI’s ecosystem integrations appeal to enterprises with complex existing security stacks. Google’s unified compliance posture simplifies governance for organizations already committed to its ecosystem.

A side note: The local AI and LLMs paradox

While analyzing the most powerful, globally recognized LLMs, we couldn’t overlook the concept of local AI models. This development reveals more about geopolitical tensions than technical limitations across multiple regions.

The performance data challenge conventional wisdom about AI dominance. Alibaba Cloud’s latest proprietary LLM Qwen-max achieved 86.4% accuracy on domain-specific tasks like Traditional Chinese Medicine. South Korea’s 32B model scored 81.8% on MMLU-Pro, ahead of Microsoft’s Phi-4 Reasoning+ (76%) and Mistral’s Magistral Small-2506 (73.4%).

By February 2025, the gap between top U.S. and Chinese models had narrowed to just 1.70% from 9.26% in January 2024, indicating lightning-fast convergence in capabilities.

European initiatives are also gaining traction. Switzerland’s public LLM, Apertus, offers an alternative to the extractive, opaque, and legally questionable practices of many commercial AI developers. While Korea’s A.X-4.0 and A.X-3.1 have shown performance comparable to OpenAI’s GPT-4o, demonstrating world-class ability in understanding Korean-language context.

This global proliferation reflects practical needs rather than nationalist posturing. Local models are optimized for specific linguistic, cultural, and regulatory contexts, giving them a clear technical advantage in those areas. The race for sovereign AI stems from countries seeking to build their own large language models to secure technological independence, reduce reliance on foreign providers, and ensure compliance with local regulations.

Studies document politically sensitive refusals and self-censorship behaviors in Chinese LLMs, partly reflecting training data filtering and policy alignment, but this represents compliance with local governance frameworks, not technical inadequacy.

The transparency argument cuts multiple ways. While Chinese AI development faces criticism for opacity, Western models embed equally strong cultural assumptions under the guise of universal “ethical alignment.”

The fundamental question shifts from local AI risks to whether the global community can accept a multipolar technical reality where no single geography controls model development standards.

Decision framework: Matching platform to risk profile

Choosing among OpenAI, Anthropic, and Google is an advantageous allocation exercise.

Begin with a platform that matches your primary constraints, architect for portability, and maintain evaluation capacity as model capabilities converge and pricing pressure intensifies across all vendors.

Tie your decision to existing cloud and security posture, use-case fit, and TCO controls. Pilot two vendors, measure like an operator, and scale what wins.

The operational test: Infrastructure compatibility

OpenAI is your first choice if your organization operates complex, multi-vendor security stacks requiring granular API control. The top-level GPT-5 API costs $1.25 per 1 million tokens of input and $10 per 1 million tokens for output, positioning it as a premium-priced option but offering the deepest ecosystem integration through Azure AI Foundry.

It fits enterprises with existing Microsoft commitments and sophisticated compliance tooling requiring custom middleware development.

Anthropic is your go-to solution if data minimization and AI-specific security controls are non-negotiable. Claude Opus 4.1 improves software engineering accuracy to 74.5%, while Constitutional AI provides auditable ethical frameworks meeting emerging regulatory standards. The zero-data-retention options address existential risk concerns for financial services and legal firms.

It’s a match for the needs of highly regulated industries where data exposure creates liability exceeding productivity gains.

Google checks if your organization has committed to Workspace infrastructure and needs operational simplicity over customization depth. Gemini’s bundled pricing within existing Google subscriptions dramatically reduces TCO for current Workspace customers while providing enterprise-grade compliance inheritance.

It’s the best-fitted choice for enterprises prioritizing fast deployment over custom integrations.

Implementation velocity versus long-term flexibility

Proof-of-concept phase. Google Gemini delivers the fastest time-to-first-value for Workspace customers through inherited compliance and integrated tooling. OpenAI provides a mature ecosystem support but requires more engineering setup. Anthropic’s evolving feature set demands ongoing training but speeds up developer workflows through Claude Code.

Production scaling. OpenAI’s mature ecosystem supports complex multi-agent workflows through extensive third-party integrations. Anthropic’s Model Context Protocol simplifies modular development with fewer external dependencies. Google’s integrated approach reduces operational overhead but limits vendor diversification.

Strategic flexibility. The tension between speed-to-value and strategic optionality determines long-term platform viability. API-first architectures enable multi-vendor strategies, integrated platforms optimize single-vendor efficiency, but reduce switching flexibility.

The decision algorithm

Security posture assessment. If existing security infrastructure requires custom API integration, choose OpenAI. If data minimization is existential, choose Anthropic. If unified compliance simplifies governance, choose Google.

Integration complexity tolerance. High customization needs favor OpenAI’s ecosystem depth. Modular AI agent workflows align with Anthropic’s MCP architecture. Operational simplicity prioritizes Google’s integrated approach.

Economic model alignment. Variable workload enterprises benefit from OpenAI’s caching economics. Regulated industries justify Anthropic’s premium for compliance-first architecture. Google’s bundled pricing optimizes for Workspace-committed organizations.

Implementation timeline constraints. Google delivers fast deployment for existing customers. OpenAI requires moderate engineering investment for maximum flexibility. Anthropic balances capability with evolving operational overhead.