Agentic AI has emerged as enterprise organizations’ most promising path to measurable AI ROI.

Capgemini data shows that one in five organizations already uses multi-agent systems, 16% plan to build one next year, and over 30% plan to start piloting agents in the next two to three years.

But even though teams are optimistic about agentic workflows, performance is still a bottleneck to wrestle with.

41% of machine learning teams name performance quality as the number-one challenge they face when building production-ready AI agents.

To improve the clarity and completeness of prompts and the accuracy of large language model (LLM) answers, engineers are experimenting with orchestrator frameworks. LangChain, LangGraph, LlamaIndex, PydanticAI, AutoGen, CrewAI, and many other tools promise to help build ‘reliable agents’ (LangChain’s home page) using ‘any LLM or cloud platform’ (CrewAI’s website).

Do LLM orchestrators deliver on these promises?

This article looks closely at the rise of multi-agent systems, the role orchestrators play in streamlining them, and the intrinsic limitations of orchestrators that lead some engineers to build custom tools instead.

No ‘agent to rule them all’: the rise of multi-agent systems

In January 2025, OpenAI released Operator, and AI agents went from a hyped concept to a working product. Watching Operator navigate tabs, create documents, and edit spreadsheets demonstrated autonomous AI’s potential.

But the initial excitement wore off when users realized how inconvenient a general-purpose agent was in practice.

A Reddit user gave it a relatively simple, automation-ready task (compiling a list of finance influencers) and was not impressed by Operator’s performance.

Operator is quite simply too slow, expensive, and error-prone. While it was very fun watching it open a browser and search the web, the reality is that I could’ve done what it did in 15 minutes, with fewer mistakes, and a better list of influencers.

At the time of writing (August 2025), OpenAI has rolled out ChatGPT agent to Plus users, but it suffers from similar cost, speed, and efficiency pitfalls.

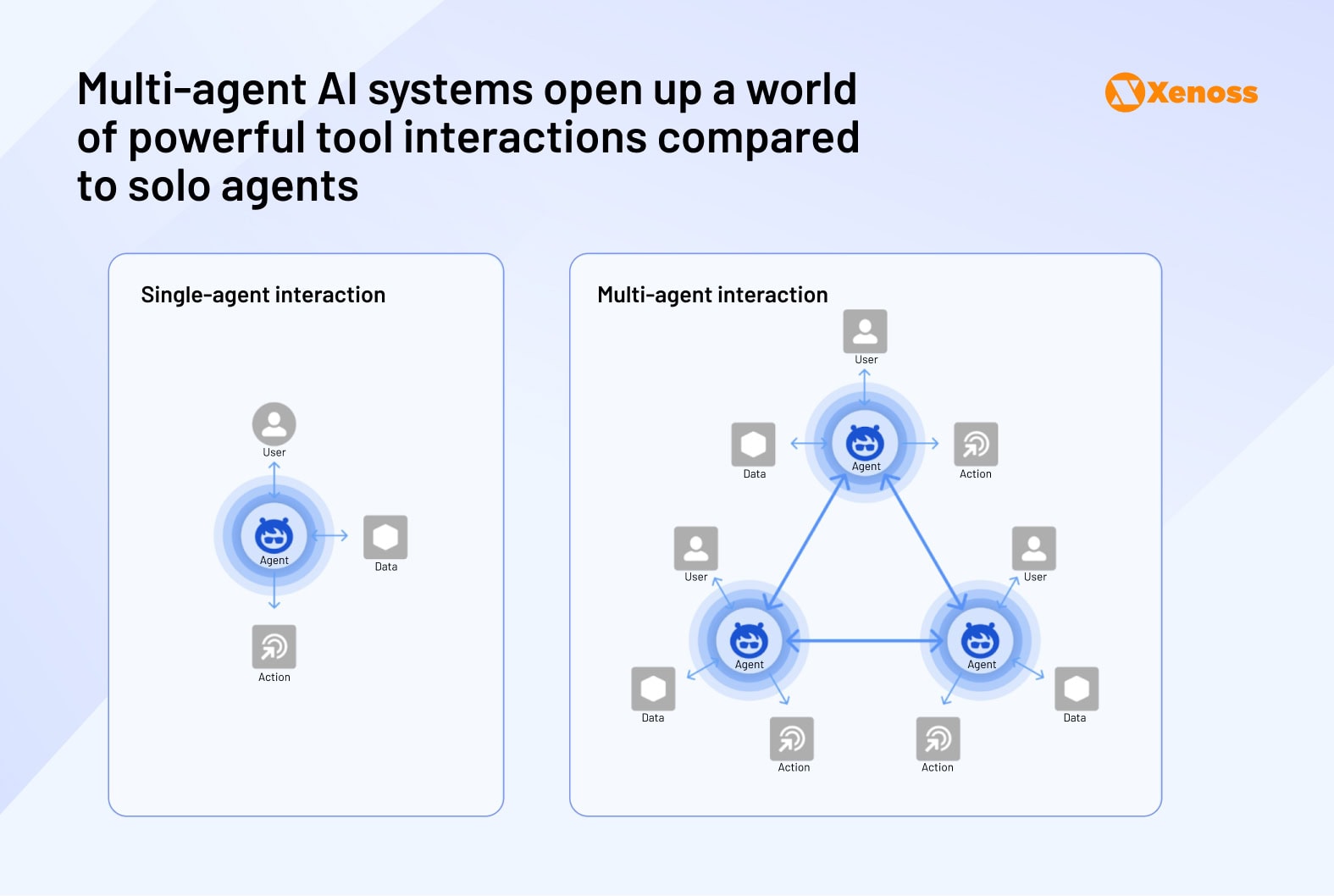

As general-purpose agents underperformed, teams at Anthropic, Google, and Microsoft pivoted to multi-agent systems.

They believed that, instead of trying to build one agent that can ‘do it all’, creating several narrow-purpose agents that share data and operate in unison is the way to go.

Operator’s issues highlight the current limits of general-purpose agents, but that doesn’t suggest that agents are useless. It appears that economically valuable narrow agents that focus on specific tasks are already possible.

Ethan Mollick

In June 2025, Anthropic published an engineering blog post titled ‘How we built our multi-agent research system’, in which the team laid out an architecture that uses multiple Claude agents to explore niche topics in more depth.

Their example sold many AI skeptics, both in research and business, on the practical benefits of multi-agent systems.

I’ve been pretty skeptical of these until recently: why make your life more complicated by running multiple different prompts in parallel when you can usually get something useful done with a single, carefully-crafted prompt against a frontier model? This detailed description from Anthropic about how they engineered their “Claude Research” tool has cured me of that skepticism.

Simon Willison’s blog (June 2025)

Here is the case Anthropic makes for building multi-agent systems (MASs).

- Multi-agent systems are flexible enough to tackle complex problems. The Anthropic team argues that linear, one-shot pipelines handle complex challenges poorly, because such problems require constant pivoting, multiple ways of thinking, and updating next steps as new information emerges. In a multi-agent system, each sub-agent can examine a problem from a different perspective while staying independent, which preserves separation of concerns.

- Multi-agent systems effectively compress research data. The lead agent does not have to plow through troves of information to reach a conclusion (which often leaves LLMs confused). In a multi-agent system, the decision-maker agent gets compressed insights from each sub-agent and uses these takeaways to find precise answers.

- Collective intelligence is key to scaling performance. For humans, collective contributions historically have had a more lasting impact than the fruits of individual work. Anthropic researchers apply this logic to AI agents, believing that a multi-agent system beats even the most intelligent general-purpose agents by leveraging collective intelligence and the power of coordination.

Aside from one major caveat (multi-agent systems burn through tokens much faster than single agents), the case for MASs looks convincing, especially next to general-purpose agents’ failure to reliably support real-life use cases.

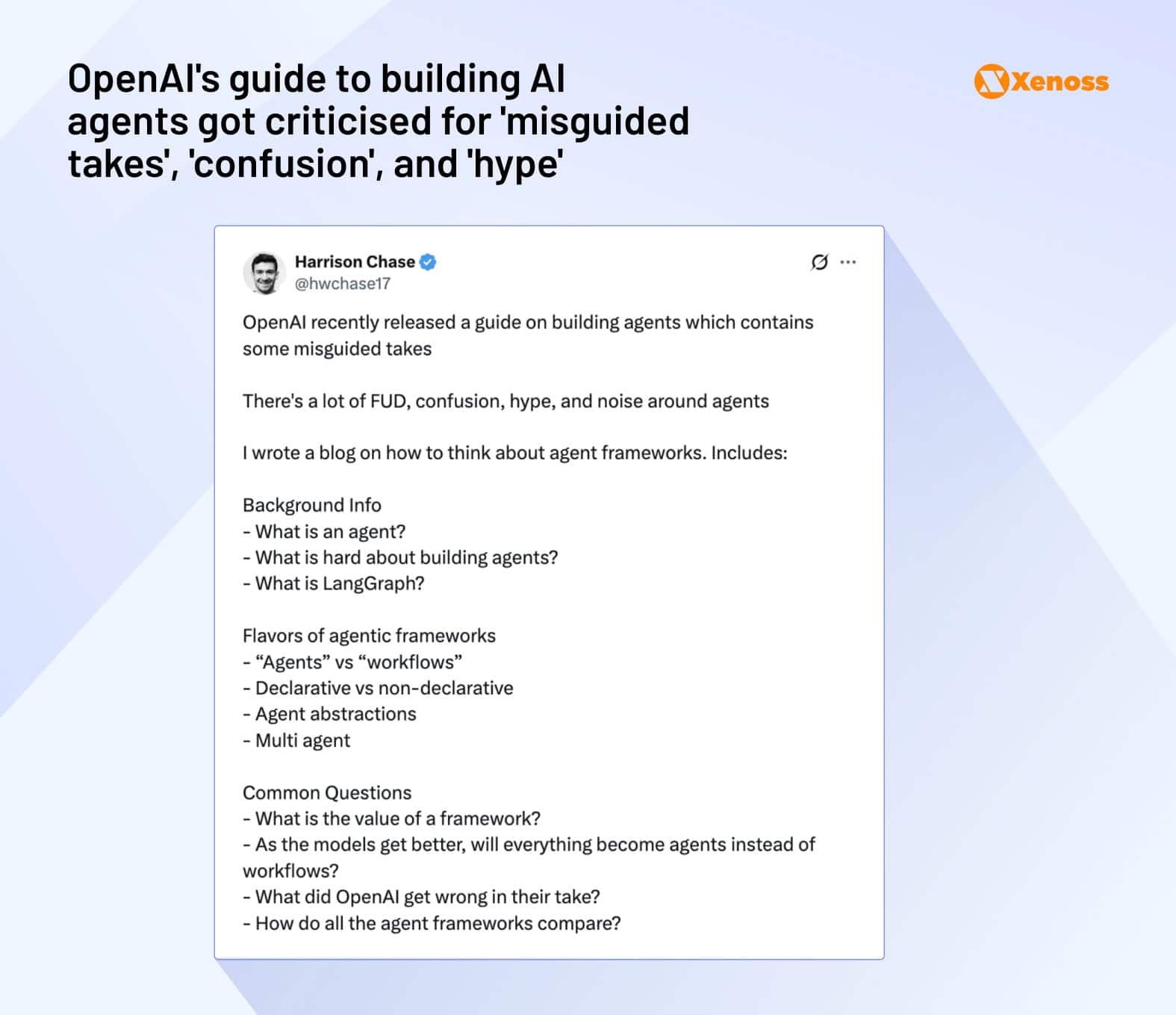

OpenAI’s ‘A practical guide to building agents’ received a mixed reception, including criticism from Harrison Chase, co-founder and CEO of LangChain.

Both Anthropic and OpenAI implementations use single-vendor workflows (Claude or GPT) without LLM switching at the sub-agent level. While straightforward to implement, this approach creates performance limitations and vendor lock-in risks.

To gain full control over switching between LLMs, enriching prompts with extra context via RAG, and implementing data quality guardrails, engineering teams at Microsoft, Uber, and other enterprises are using plug-and-play orchestrator frameworks.

How LLM orchestrators became the cornerstone of multi-agent systems

As multi-agent systems and agentic workflows become ubiquitous, machine learning engineers have started building orchestrator frameworks that help manage and monitor these systems.

These frameworks help break tasks into steps, manage state between them, call other models or APIs, handle errors, and maintain traceability, so that engineering teams don’t have to write all of that logic from scratch.
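To make that list concrete, here is a minimal sketch of the core loop an orchestrator runs, written in plain Python rather than any real framework’s API. The `Orchestrator` class, the step names, and the simulated timeout are all hypothetical; the point is only to show steps, shared state, retries on error, and a trace living in one place.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A step reads the shared state and returns a dict of updates to merge into it.
Step = Callable[[dict[str, Any]], dict[str, Any]]


@dataclass
class Orchestrator:
    steps: list[tuple[str, Step]]
    max_attempts: int = 2
    trace: list[dict[str, Any]] = field(default_factory=list)

    def run(self, state: dict[str, Any]) -> dict[str, Any]:
        for name, step in self.steps:
            for attempt in range(1, self.max_attempts + 1):
                try:
                    updates = step(state)
                except Exception as exc:
                    # Error handling: record the failure, retry, give up after max_attempts.
                    self.trace.append({"step": name, "attempt": attempt, "error": str(exc)})
                    if attempt == self.max_attempts:
                        raise
                else:
                    state = {**state, **updates}  # state carried between steps
                    self.trace.append({"step": name, "attempt": attempt, "updated": sorted(updates)})
                    break
        return state


# Hypothetical steps; call_model stands in for a real LLM or API call.
calls = {"count": 0}


def plan(state):
    return {"subtasks": state["task"].split(" and ")}


def call_model(state):
    calls["count"] += 1
    if calls["count"] == 1:
        raise TimeoutError("simulated transient API failure")
    return {"answers": [f"answer to: {t}" for t in state["subtasks"]]}


def summarize(state):
    return {"summary": " | ".join(state["answers"])}


orchestrator = Orchestrator([("plan", plan), ("call_model", call_model), ("summarize", summarize)])
result = orchestrator.run({"task": "find vendors and compare prices"})
print(result["summary"])  # answer to: find vendors | answer to: compare prices
```

The trace records the failed first attempt at `call_model` as well as the successful retry, which is the kind of record a framework would surface in its tracing UI.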

How orchestrators work

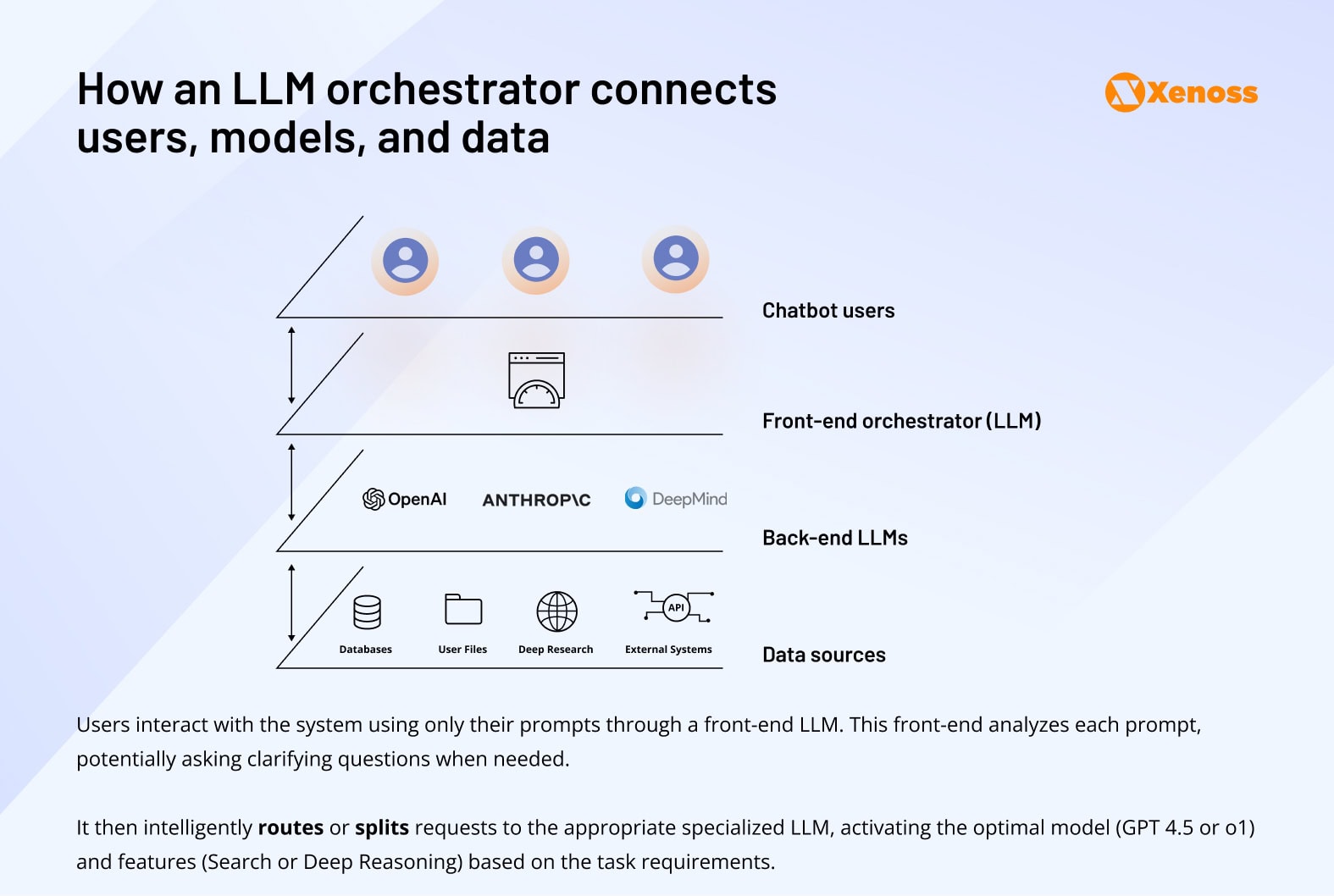

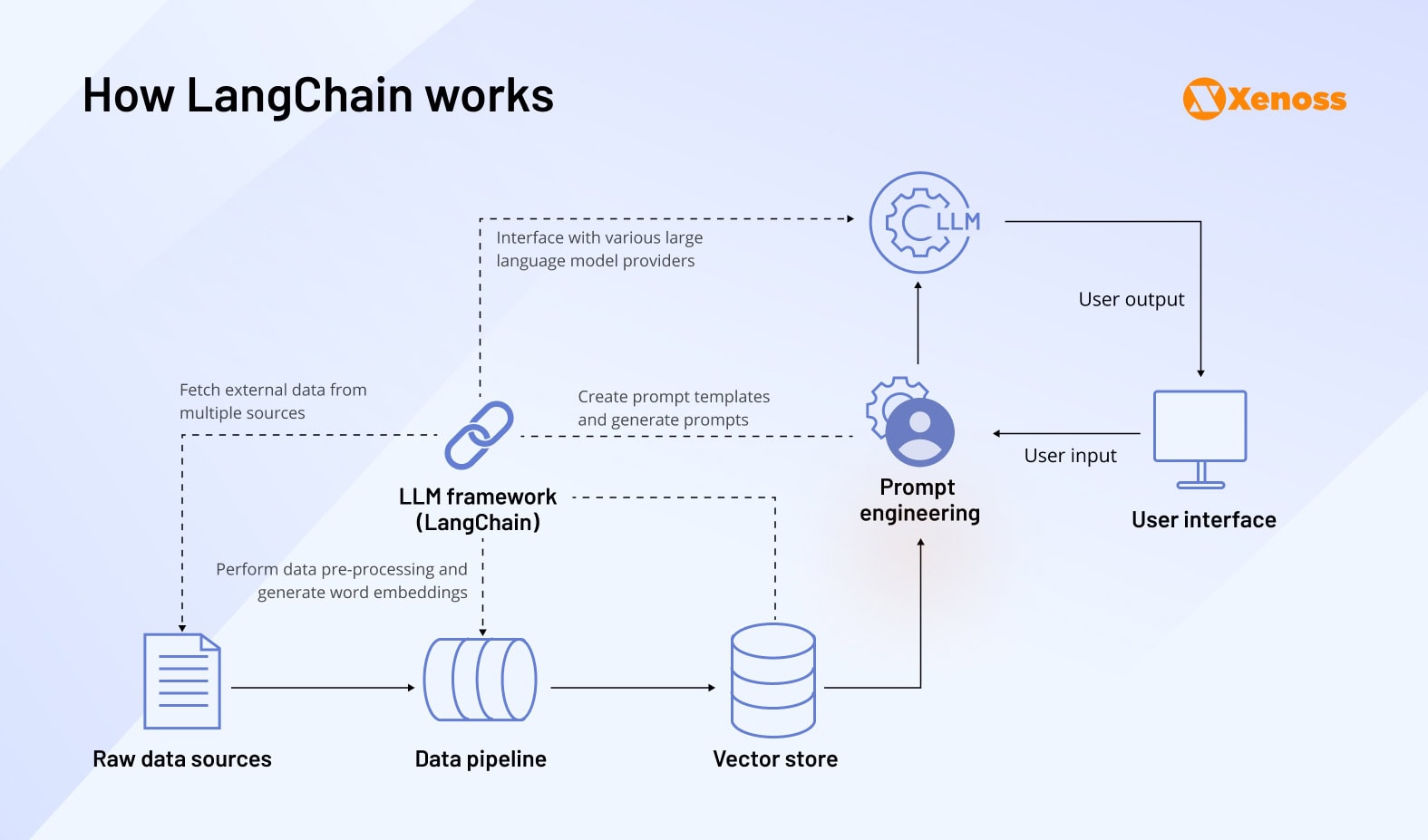

To understand how LLM orchestration frameworks support complex workflows, it is helpful to follow the journey of a user prompt through the orchestration layer.

- The orchestrator evaluates the quality, completeness, and complexity of each new prompt.

- Based on this analysis, the orchestrator routes the prompt to an appropriate model, or first enriches it with project knowledge retrieved from a vector database (a standard RAG pipeline).

- Once the improved prompt reaches the best-fit model, the user gets a final answer faster and more cost-efficiently than if every prompt went to the same large model.
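The three steps above can be sketched in a few dozen lines. This is a toy under loud assumptions: the complexity heuristic, the model names, the 0.3 similarity threshold, and the bag-of-words “vector database” are all stand-ins. A production orchestrator would more likely use a classifier or an LLM for the assessment and a real embedding model and vector store for retrieval.

```python
import math
import re
from collections import Counter

# Toy "vector database": bag-of-words vectors compared by cosine similarity.
DOCS = [
    "Refunds are processed within 14 days of the return request.",
    "Enterprise plans include SSO and a dedicated support manager.",
    "The API rate limit is 100 requests per minute per key.",
]


def vectorize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, threshold: float = 0.3) -> list[str]:
    # Return the best-matching document only if it is similar enough to help.
    q = vectorize(query)
    scored = sorted(((cosine(q, vectorize(d)), d) for d in DOCS), reverse=True)
    return [d for score, d in scored[:1] if score >= threshold]


def assess(prompt: str) -> str:
    # Hypothetical heuristic: long or multi-question prompts count as complex.
    return "complex" if len(prompt.split()) > 30 or prompt.count("?") > 1 else "simple"


def route(prompt: str) -> tuple[str, str]:
    # Placeholder model names, not real model identifiers.
    model = "large-reasoning-model" if assess(prompt) == "complex" else "small-fast-model"
    context = retrieve(prompt)
    if context:  # RAG step: enrich the prompt with retrieved project knowledge
        prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + prompt
    return model, prompt


print(route("What is the API rate limit?")[0])  # small-fast-model
```

A prompt with no relevant documents (for example, a request for a haiku) passes through unchanged, so the retrieval step only adds context when it is likely to help.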

Besides improving response accuracy, automating tool calling, and streamlining LLM communication, orchestrators solve two core issues that limit the performance of multi-agent systems: intrinsic LLM limitations and poor context.

1. No model is good at everything

It is becoming an industry axiom that there’s no single best large language model. Nathan Lambert called out this trend back in 2024 when assessing open models.

The last few weeks of releases have marked a meaningful shift in the community of people using open models. We’ve known for a while that no one model will serve everyone equally, but it has realistically not been the case.

His words ring even truer in 2025, as open-weight models like Qwen3, gpt-oss, and Kimi K2 compete head-to-head on benchmarks.

Eugene Yan wrote something similar on his blog.

‘We probably won’t have one model to rule them all. Instead, each product will likely have several models supporting it. Maybe a bigger model orchestrating several smaller models. This way, each smaller model can give their undivided attention to their task.’

The days of GPT-4 being ‘the model’ and everyone else an ambitious challenger are history.

GPT-5’s release wasn’t a benchmark sweep; it beat competitors by small margins while losing to Claude, Grok, and Gemini in specific areas.

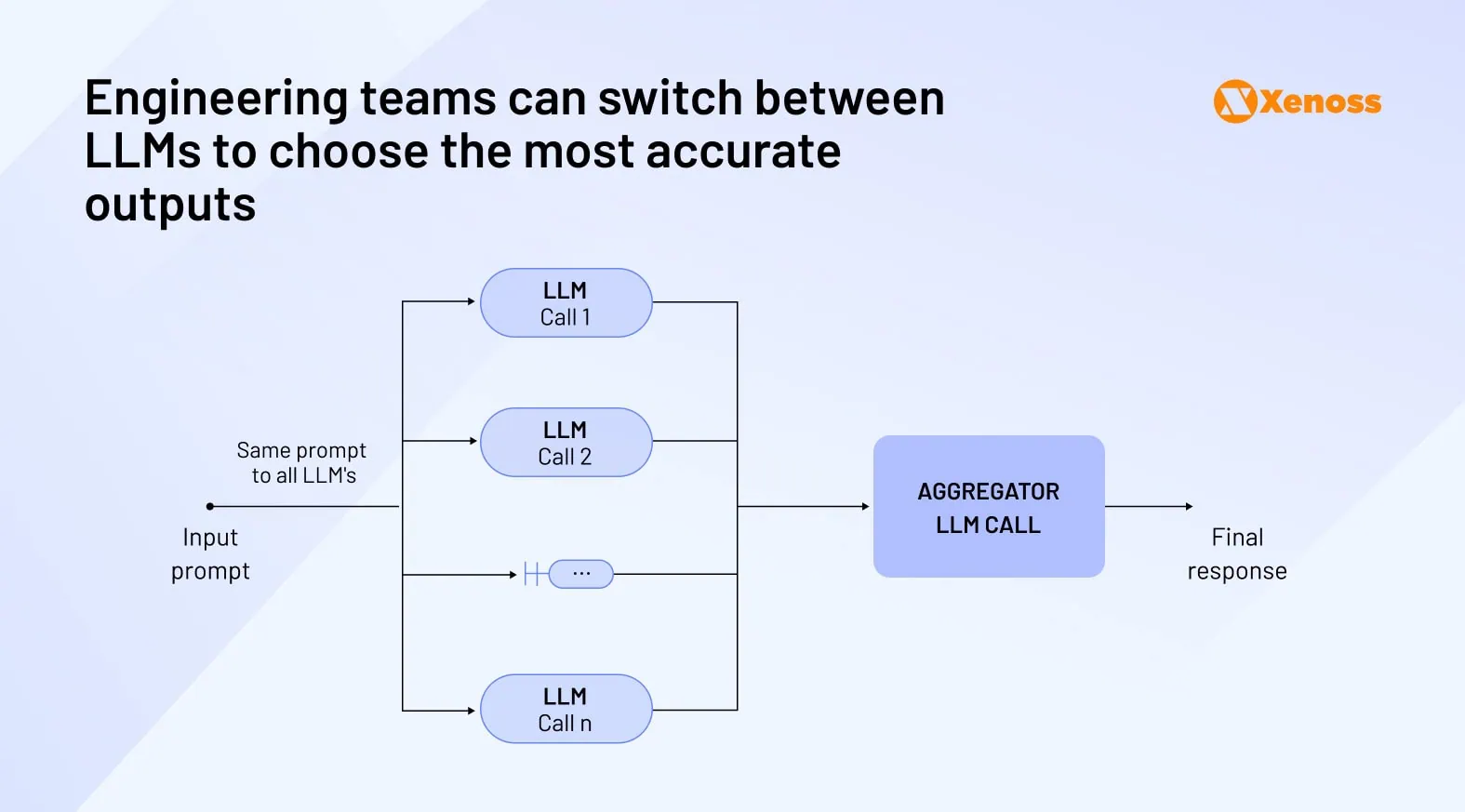

So, instead of hoping for a single LLM that aces every benchmark, developers use orchestrators to get ‘the best of all worlds’: a flow where each sub-agent uses the LLM best suited to its task.

Most state-of-the-art frameworks support fairly straightforward multi-LLM integration.

- LangChain’s init_chat_model() and configurable_alternatives help integrate and manage different models in a single agentic workflow.

- LangGraph’s documentation also explicitly states users can “swap out one model for another”.

- LlamaIndex exposes a global Settings.llm setting for selecting which supported model the application uses, including state-of-the-art ones.

This ability to swap LLMs without revamping the entire workflow gives engineering teams more control and freedom to build with the models that best serve their current objectives.
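The pattern behind these features is a shared model interface that every agent depends on, so swapping a model never touches agent logic. The sketch below shows that idea with hypothetical classes (`ChatModel`, `EchoModel`, `Agent`) and placeholder model names; it is not LangChain’s or LlamaIndex’s API, and `EchoModel` merely echoes its input where a real adapter would call a vendor SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for a hosted model; a real adapter would call a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class Agent:
    def __init__(self, role: str, model: ChatModel) -> None:
        self.role = role
        self.model = model  # the agent depends only on the interface

    def run(self, task: str) -> str:
        return self.model.complete(f"As the {self.role}: {task}")


# Each sub-agent gets whichever model suits its task (placeholder names).
MODELS = {"coding": EchoModel("model-a"), "writing": EchoModel("model-b")}
coder = Agent("coder", MODELS["coding"])
writer = Agent("writer", MODELS["writing"])
writer.model = EchoModel("model-c")  # swap the model without changing the agent's logic

print(coder.run("fix bug"))  # [model-a] As the coder: fix bug
```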

2. Poor context

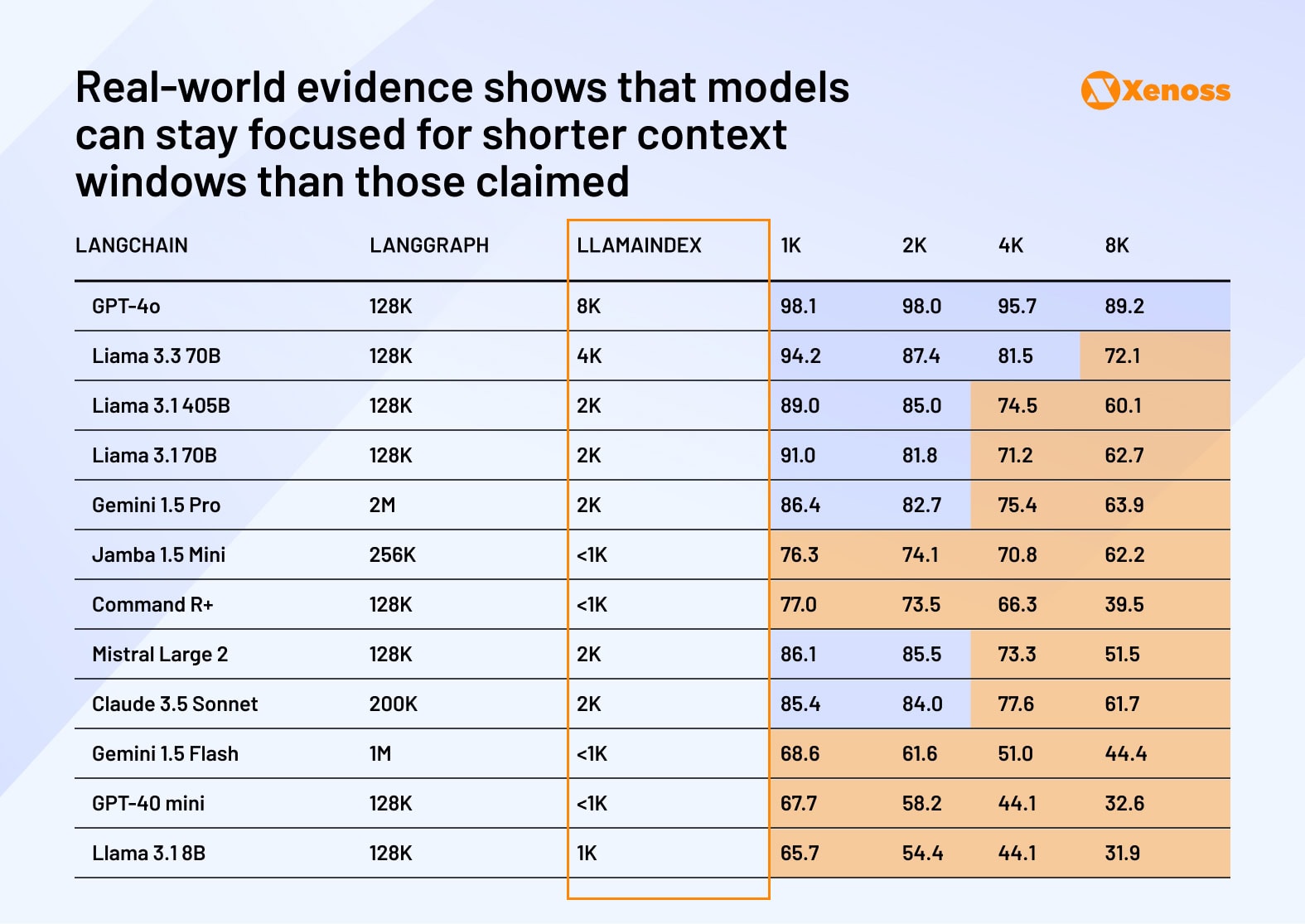

Context window limitations constrain enterprise applications even as frontier labs keep increasing window sizes. Empirical testing shows that models lose focus on long-context prompts, making effective context windows significantly narrower than official specifications.

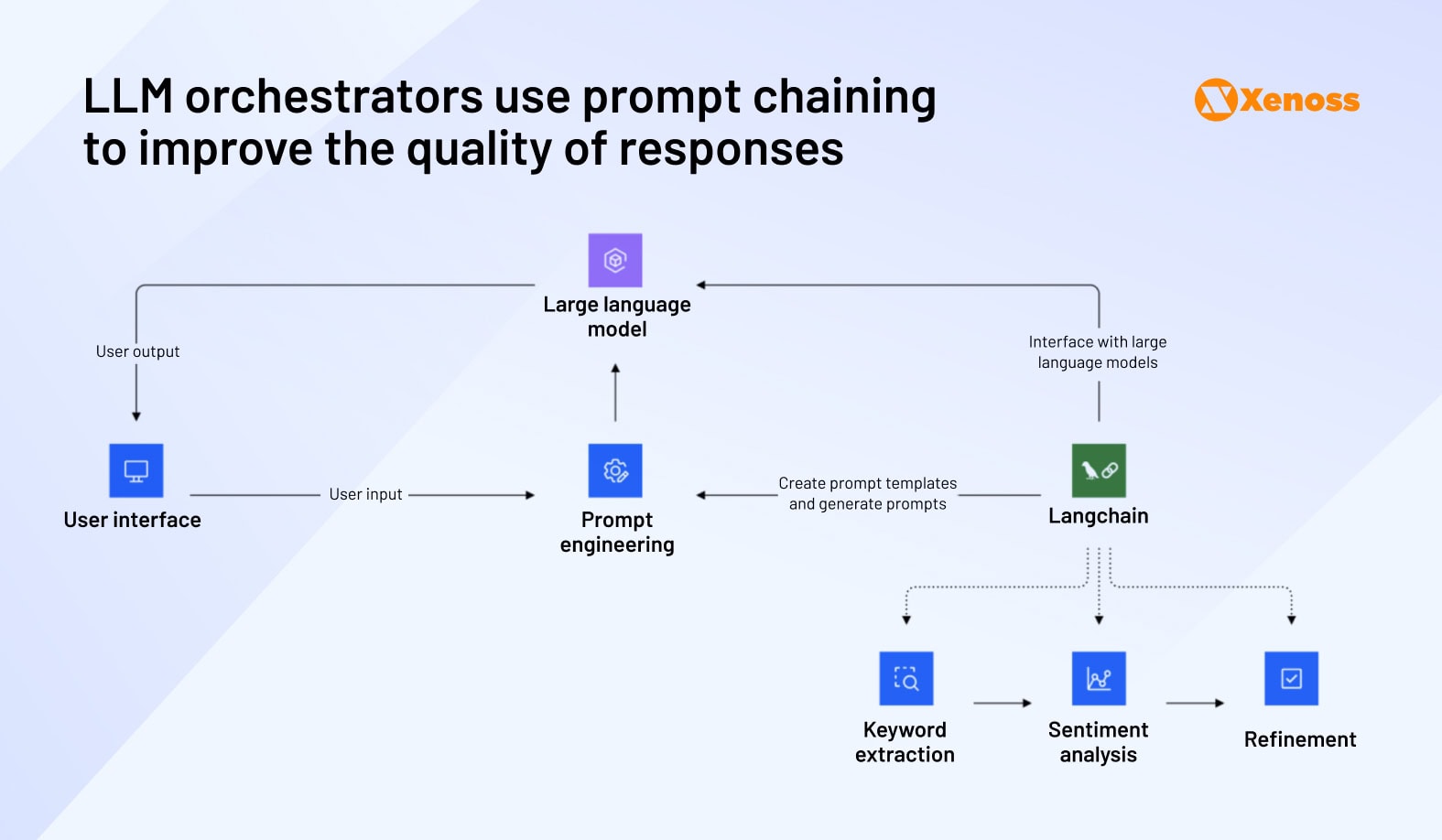

Orchestrators use multiple strategies to solve the context problem in multi-agent systems.

- Prompt templates. An orchestrator adds instructions, examples, and questions that help the LLM reason more deeply about a user query.

- Prompt chaining and ensembling. Orchestrators can break a query into a chain of prompts, each feeding its output into the next, or send the same prompt to multiple LLMs and then combine and compare the outputs to produce a more accurate ‘master answer’. Orchestrators can also store past user prompts in a library, which improves the agent’s memory and lets it combine several prompts to get better answers.

- Fact-checking. Even though state-of-the-art (SOTA) model developers tend to improve accuracy between releases, workflows supporting high-stakes use cases require a near-zero hallucination rate. Orchestrator frameworks give engineering teams more confidence in LLM outputs by checking whether outputs meet custom guidelines, flagging ambiguous responses for human review, or providing alternative suggestions to give users more complete answers.
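Here is a minimal sketch of these strategies, with stub functions standing in for real LLM calls. The template text, the majority-vote rule, the 0.6 agreement threshold, and the banned-word check are illustrative assumptions, not any framework’s defaults. Sending the same prompt to several models and combining the outputs is sometimes called ensembling or fan-out; majority voting is just one simple way to combine them, and real systems may use a judge model or a scoring function instead.

```python
from collections import Counter
from typing import Callable

TEMPLATE = (
    "You are a careful analyst.\n"
    "Example: Q: What is 2 + 2? A: 4\n"
    "Think step by step, then give a short answer.\n"
    "Q: {question}\nA:"
)


def apply_template(question: str) -> str:
    # Prompt template: wrap the user query in instructions and an example.
    return TEMPLATE.format(question=question)


def ensemble(prompt: str, models: list[Callable[[str], str]]) -> tuple[str, float]:
    # Send the same prompt to several models; keep the majority answer and its vote share.
    answers = [model(prompt) for model in models]
    best, votes = Counter(answers).most_common(1)[0]
    return best, votes / len(answers)


def check(answer: str, agreement: float, banned: set[str], min_agreement: float = 0.6) -> str:
    # Guideline check: route low-agreement or policy-violating answers to a human.
    if agreement < min_agreement or any(word in answer.lower() for word in banned):
        return "needs_human_review"
    return "approved"


# Stub "models" standing in for three different LLMs.
models = [lambda p: "Paris", lambda p: "Paris", lambda p: "Lyon"]
answer, agreement = ensemble(apply_template("What is the capital of France?"), models)
print(answer, round(agreement, 2), check(answer, agreement, {"guaranteed"}))  # Paris 0.67 approved
```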

The ability to reliably address the blind spots of single LLMs and boost the performance of multi-agent systems led to the rapid development of the orchestrator ecosystem.

A quick tour of orchestrator frameworks

At the time of writing, there are dozens of acclaimed tools on the market and many more indie projects that aim to streamline agentic workflows.

Here is a brief overview of the market frontrunners.

1. LangChain

LangChain provides open-source building blocks for LLM applications, offering reusable components for prompts, models, tools, and data with extensive integration support.

LangChain anchors a comprehensive ecosystem: LangSmith for tracing and evaluations, LangServe for deployment, and LangGraph for stateful workflows.

The platform maintains market leadership, with enterprise adoption at organizations including Uber and Vodafone.

Key features:

- LCEL (LangChain Expression Language): Compose reliable, testable chains with declarative syntax.

- Large integration catalogue: Hundreds of models, vector stores, and tools make it easy to swap vendors.

- Production tooling: Built-in tracing, evals, and API serving via LangSmith/LangServe.

- Python and JavaScript parity: Similar APIs in both stacks.

- Active community and docs: Robust documentation, community examples, and rapid iteration.

Best use case: LangChain excels for orchestration-heavy RAG and agent systems requiring multi-step flows, retrieval, tool integration, guardrails, and enterprise-scale observability.
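LCEL composes components with the `|` operator, as in `prompt | model | parser`, and runs the result with `.invoke()`. To show what that composition means without depending on LangChain itself, here is a tiny imitation. The `Runnable` class below is hypothetical and omits everything real LCEL adds, such as streaming, batching, and async execution; the “model” is a stub.

```python
from typing import Any, Callable


class Runnable:
    """Tiny imitation of pipe-style composition; not LangChain's implementation."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Runnable") -> "Runnable":
        # a | b returns a new Runnable that feeds a's output into b.
        return Runnable(lambda x: other.fn(self.fn(x)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


prompt = Runnable(lambda topic: f"Write one sentence about {topic}.")
model = Runnable(lambda text: f"  MODEL OUTPUT for: {text}  ")  # stub standing in for an LLM
parser = Runnable(str.strip)  # output parser: clean up the raw model text

chain = prompt | model | parser
print(chain.invoke("vector databases"))  # MODEL OUTPUT for: Write one sentence about vector databases.
```

Because each stage is a self-contained unit, each can be tested on its own, which is much of what “testable chains” means in practice.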

2. LlamaIndex

LlamaIndex is a data-centric framework that connects proprietary information to LLMs. It focuses on ingestion, parsing, indexing, and retrieval to transform internal documents, databases, and APIs into a clean context for prompt generation.

The platform extends beyond core functionality with specialized tools: LlamaParse for high-fidelity document parsing, LlamaHub for data connectors, and LlamaCloud for managed deployment and monitoring.

Key features:

- Data-first RAG building blocks: ingestion pipelines, chunking/node abstractions, metadata, and routing built in.

- Rich indexing & retrieval: vector, keyword, and graph indexes; hybrid retrieval, reranking, and query engines.

- High-quality parsing: LlamaParse handles PDFs, slides, tables, and complex layouts with minimal cleanup.

- Composability: plug-and-play “indices” and “query engines” you can compose, chain, and A/B easily.

- Broad connectors & tooling: LlamaHub loaders for common data sources; Python and JavaScript support.

Best use case: LlamaIndex shines when solving data-heavy RAG problems. It supports diverse document types, tricky parsing, and retrieval pipelines that need hybrid search, reranking, and graph enrichment.
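Hybrid retrieval merges rankings from keyword search and vector search. One common, simple way to merge them is reciprocal rank fusion (RRF); the sketch below uses it as an illustration, not as LlamaIndex’s implementation. The keyword scorer is a crude term-overlap stand-in for BM25, the vector ranking is hard-coded as if returned by an embedding index, and k = 60 is a conventional RRF constant rather than a tuned value.

```python
import re

DOCS = {
    "d1": "Quarterly revenue grew in the EMEA region",
    "d2": "Revenue recognition policy for multi-year contracts",
    "d3": "EMEA headcount and hiring plan",
}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_rank(query: str) -> list[str]:
    # Crude stand-in for BM25: rank documents by the number of shared terms.
    q = tokens(query)
    return sorted(DOCS, key=lambda doc_id: len(q & tokens(DOCS[doc_id])), reverse=True)


def rrf(rankings: list[list[str]], k: int = 60) -> list[str]:
    # Reciprocal rank fusion: score(d) = sum over rankings of 1 / (k + rank of d).
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


query = "EMEA revenue growth"
keyword = keyword_rank(query)  # ["d1", "d2", "d3"]
vector = ["d3", "d1", "d2"]    # hypothetical result from an embedding index
print(rrf([keyword, vector]))  # ['d1', 'd3', 'd2']
```

Here vector search promotes d3 above d2; a reranker (often a cross-encoder model) would typically reorder the fused top results once more before they reach the prompt.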

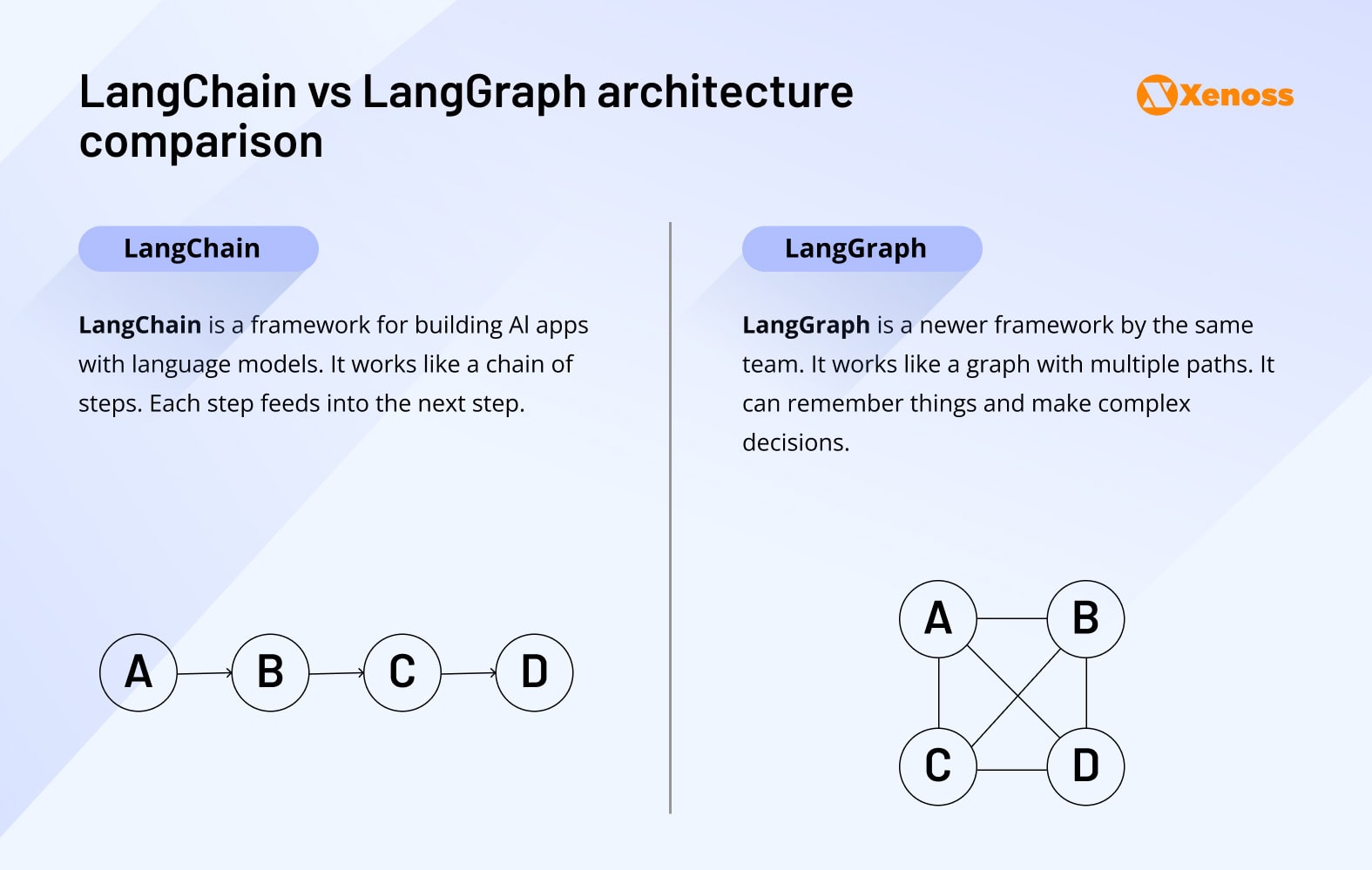

3. LangGraph

LangGraph enables building stateful, controllable agent workflows as directed graphs, rather than linear chains. Engineers model applications as nodes and edges with explicit state management, retries, and guardrails.

Key features:

- Graph-first orchestration: DAG/state-machine model enabling multi-step, branching workflows with complex decision paths

- Stateful persistence: Built-in checkpointers, resumability, thread-like sessions, and persistent memory across interactions

- Multi-agent patterns: Pre-built supervisor-worker, tool routing, and role-specialized agent architectures

- Fine-grained control: Step-level guards, human-in-the-loop interrupts, configurable timeouts, and retry mechanisms

- Enterprise tooling: Live tracing via LangSmith, deployment through LangGraph Platform

- Cross-platform support: Feature parity and similar APIs across Python and JavaScript ecosystems

Best use case: Engineering teams choose LangGraph for reliable, auditable, long-running agentic systems, including tool-using assistants, workflow automations, research pipelines, and approval-driven processes requiring state persistence and human oversight.
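The graph-first model is easiest to see in miniature. The sketch below is a hypothetical state-machine runner in plain Python, not LangGraph’s API: nodes return state updates, routers pick the next node (which allows branching and loops), every step is checkpointed, and execution pauses before a node that needs human approval and resumes from it later. The node names and the “two drafts” quality gate are made up for illustration.

```python
from typing import Any, Callable

State = dict[str, Any]


class Graph:
    """Minimal state-machine runner for illustration; not LangGraph's API."""

    def __init__(self) -> None:
        self.nodes: dict[str, Callable[[State], State]] = {}
        self.routers: dict[str, Callable[[State], str]] = {}
        self.checkpoints: list[tuple[str, State]] = []

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn  # fn returns a dict of state updates

    def add_edge(self, src: str, router: Callable[[State], str]) -> None:
        self.routers[src] = router  # router returns the next node name or "END"

    def run(self, node: str, state: State, interrupt_before: tuple[str, ...] = ()) -> tuple[str, State]:
        while node != "END":
            if node in interrupt_before and not state.get("approved"):
                return node, state  # pause for human approval; resume later from this node
            state = {**state, **self.nodes[node](state)}
            self.checkpoints.append((node, dict(state)))  # persist a snapshot after each step
            node = self.routers[node](state)
        return "END", state


g = Graph()
g.add_node("draft", lambda s: {"drafts": s.get("drafts", 0) + 1})
g.add_node("review", lambda s: {"ok": s["drafts"] >= 2})  # hypothetical quality gate
g.add_node("publish", lambda s: {"published": True})
g.add_edge("draft", lambda s: "review")
g.add_edge("review", lambda s: "publish" if s["ok"] else "draft")  # branch or loop back
g.add_edge("publish", lambda s: "END")

paused_at, state = g.run("draft", {}, interrupt_before=("publish",))
# A human approves, and execution resumes from the paused node.
final_node, final = g.run(paused_at, {**state, "approved": True}, interrupt_before=("publish",))
print(paused_at, final)
```

The checkpoint list is what makes long-running runs auditable and resumable: in a real system it would be written to durable storage rather than kept in memory.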

4. CrewAI

CrewAI provides an open-source Python framework specifically designed for multi-agent system development. The platform combines Crews (autonomous, role-based collaborative agents) with Flows (structured, event-driven control) to balance agent autonomy with precise orchestration.

CrewAI has gained significant community traction, with 35k+ GitHub stars and enterprise adopters including AWS, PwC, and IBM.

Key features:

- Dual architecture approach: Crews enable agent collaboration while Flows provide conditional logic, loops, and state management for production control

- Flexible process models: Sequential and hierarchical execution modes with manager agents for delegation and validation workflows

- Human oversight integration: Built-in human-in-the-loop capabilities for quality control and approval processes

- Extensive connectivity: MCP server support and provider-agnostic LLM integration via LiteLLM for maximum flexibility

Best use cases: Enterprise teams leverage CrewAI for reliable, auditable agentic automation combining autonomous collaboration with structured workflow controls, including code modernization pipelines, back-office process automation, contact center analytics, and compliance-driven eligibility determinations.
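The two process models differ mainly in who decides what runs next. This plain-Python sketch contrasts them under stated assumptions: workers are stub functions rather than LLM-backed agents, and the manager’s `plan` and `validate` callables and the escalation string are hypothetical. It does not use CrewAI’s API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Worker:
    role: str
    do: Callable[[str], str]  # stand-in for an LLM-backed agent


def sequential(task: str, workers: list[Worker]) -> str:
    # Sequential process: each worker receives the previous worker's output.
    result = task
    for worker in workers:
        result = worker.do(result)
    return result


def hierarchical(task: str, workers: dict[str, Worker], plan: Callable[[str], list[str]],
                 validate: Callable[[str], bool], max_rounds: int = 2) -> list[str]:
    # Hierarchical process: a manager delegates to roles and re-delegates failed outputs.
    outputs = []
    for role in plan(task):
        for _ in range(max_rounds):
            output = workers[role].do(task)
            if validate(output):
                outputs.append(output)
                break
        else:  # no valid output within max_rounds: escalate (e.g., to a human)
            outputs.append(f"ESCALATE: {role}")
    return outputs


researcher = Worker("researcher", lambda t: f"notes on {t}")
writer = Worker("writer", lambda t: f"report from {t}")
analyst = Worker("analyst", lambda t: "")  # always returns an empty (invalid) answer

print(sequential("churn", [researcher, writer]))  # report from notes on churn
print(hierarchical("churn", {"researcher": researcher, "analyst": analyst},
                   plan=lambda t: ["researcher", "analyst"], validate=bool))
```

The escalation branch is where a human-in-the-loop step would naturally slot in.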

5. PydanticAI

PydanticAI delivers FastAPI-like ergonomics for building production LLM applications. The Python-first framework emphasizes type-safe validated outputs, clean dependency injection, and provider-agnostic model support with integrated Logfire tracing.

The platform demonstrates strong developer adoption with 11.5k+ GitHub stars and nearly 3,000 public dependents. Recent updates include Hugging Face Inference Providers integration for streamlined open-source LLM access.

Key features:

- Dependency injection: Pass data and services into prompts, tools, and validators with full static typing support for reliable development workflows

- Provider-agnostic architecture: Native integration with OpenAI, Anthropic, Gemini, DeepSeek, Ollama, Groq, Cohere, Mistral, and Hugging Face Inference Providers

- Comprehensive tooling: Built-in evaluations, reporting capabilities, Logfire debugging, and optional typed graph modules for complex workflow orchestration

Best use case: Engineering teams select PydanticAI for production-grade agent workflows where strictly structured outputs, comprehensive type safety, and enterprise reliability requirements take priority over rapid prototyping.
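PydanticAI builds on Pydantic models for validated outputs and on its own dependency mechanism. To show the underlying idea without assuming its exact API, here is a stdlib-only sketch: a reply from a stub model is parsed into a typed dataclass, an injected dependency drives a business rule, and validation errors are fed back into a retry. The `Invoice` schema, the `Deps` contents, and the retry prompt wording are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Invoice:
    vendor: str
    total: float
    currency: str


@dataclass(frozen=True)
class Deps:
    allowed_currencies: frozenset[str]  # injected at run time, e.g. from config


def parse_invoice(raw: str, deps: Deps) -> Invoice:
    """Validate a model's JSON reply against the Invoice schema; raise ValueError on mismatch."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    expected = {"vendor": str, "total": (int, float), "currency": str}
    for key, types in expected.items():
        if not isinstance(data.get(key), types):
            raise ValueError(f"field {key!r} is missing or has the wrong type")
    if data["currency"] not in deps.allowed_currencies:
        raise ValueError(f"unsupported currency {data['currency']!r}")
    return Invoice(data["vendor"], float(data["total"]), data["currency"])


def run_with_retries(model: Callable[[str], str], prompt: str, deps: Deps, attempts: int = 2) -> Invoice:
    error = None
    for _ in range(attempts):
        # Feed the previous validation error back so the model can correct itself.
        message = prompt if error is None else f"{prompt}\nPrevious reply was invalid: {error}"
        try:
            return parse_invoice(model(message), deps)
        except ValueError as exc:
            error = str(exc)
    raise ValueError(f"no valid output after {attempts} attempts: {error}")


# Stub model: the first reply is malformed, the second is valid.
replies = iter(['{"vendor": "Acme", "total": "12"}',
                '{"vendor": "Acme", "total": 12.5, "currency": "EUR"}'])
invoice = run_with_retries(lambda msg: next(replies), "Extract the invoice.", Deps(frozenset({"EUR", "USD"})))
print(invoice)  # Invoice(vendor='Acme', total=12.5, currency='EUR')
```

Downstream code then receives an `Invoice` or an exception, never an unchecked string, which is the practical payoff of structured outputs.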

Should you buy or build your orchestrator framework?

Orchestrators like LangChain, LlamaIndex, and LangGraph emerged alongside the proliferation of LLMs; they’re new tools with inherent limitations. Understanding these constraints helps teams make informed build-versus-buy decisions.

Why some engineers are done with orchestrators

On the major orchestrator subreddits, there’s no shortage of grievances, and they seem to bother LangChain, LangGraph, and LlamaIndex users alike.

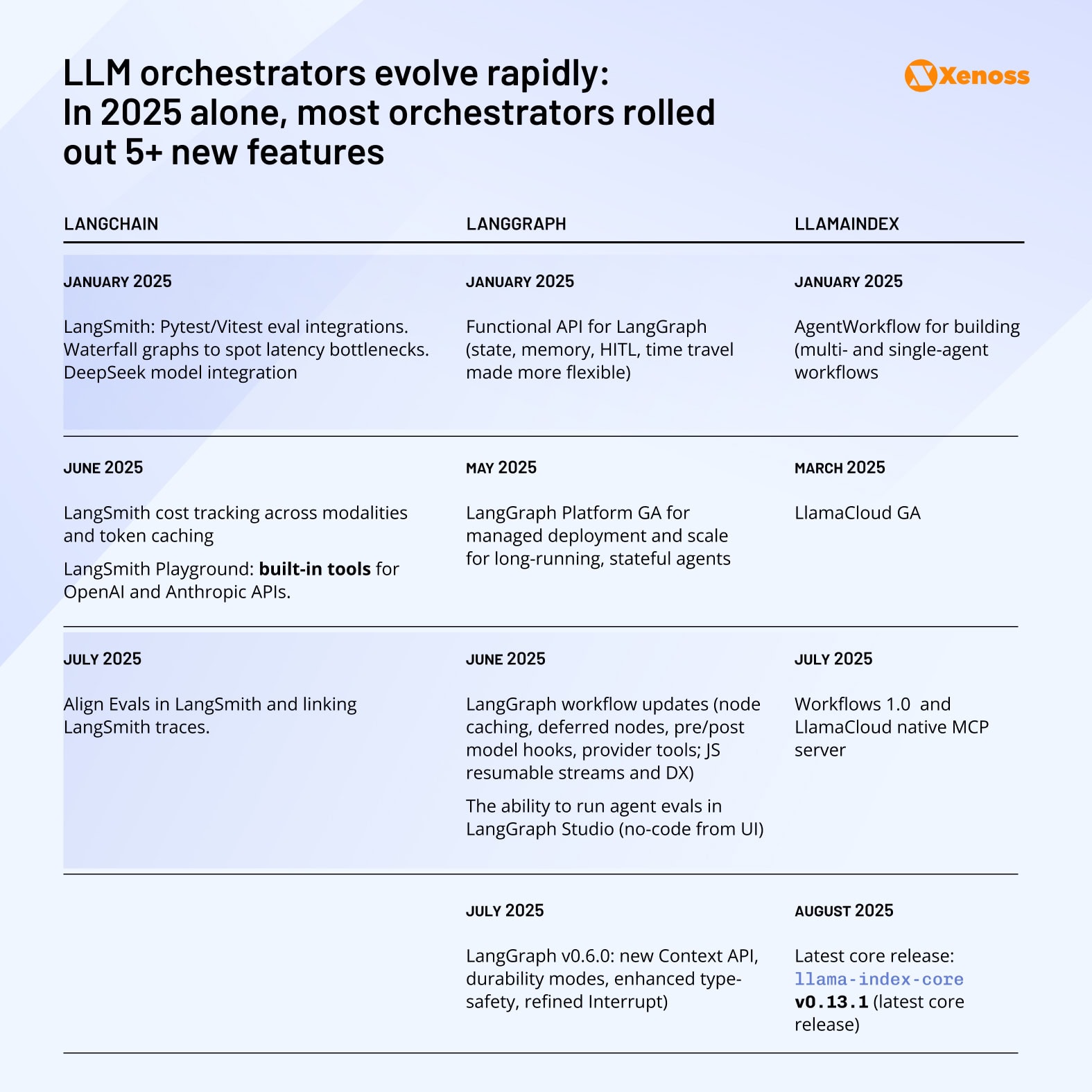

Rapid pace of change

Orchestrators evolve without standardization as vendors experiment based on community feedback. Without established best practices, providers make educated guesses about the essential building blocks, which leads to frequent feature changes.

Feature deprecation cycles create enterprise risk. One engineer reported failing a job interview for using deprecated LangChain methods, which shows how quickly framework knowledge goes stale.

For enterprise workflows, the impact of these changes can be significantly more devastating.

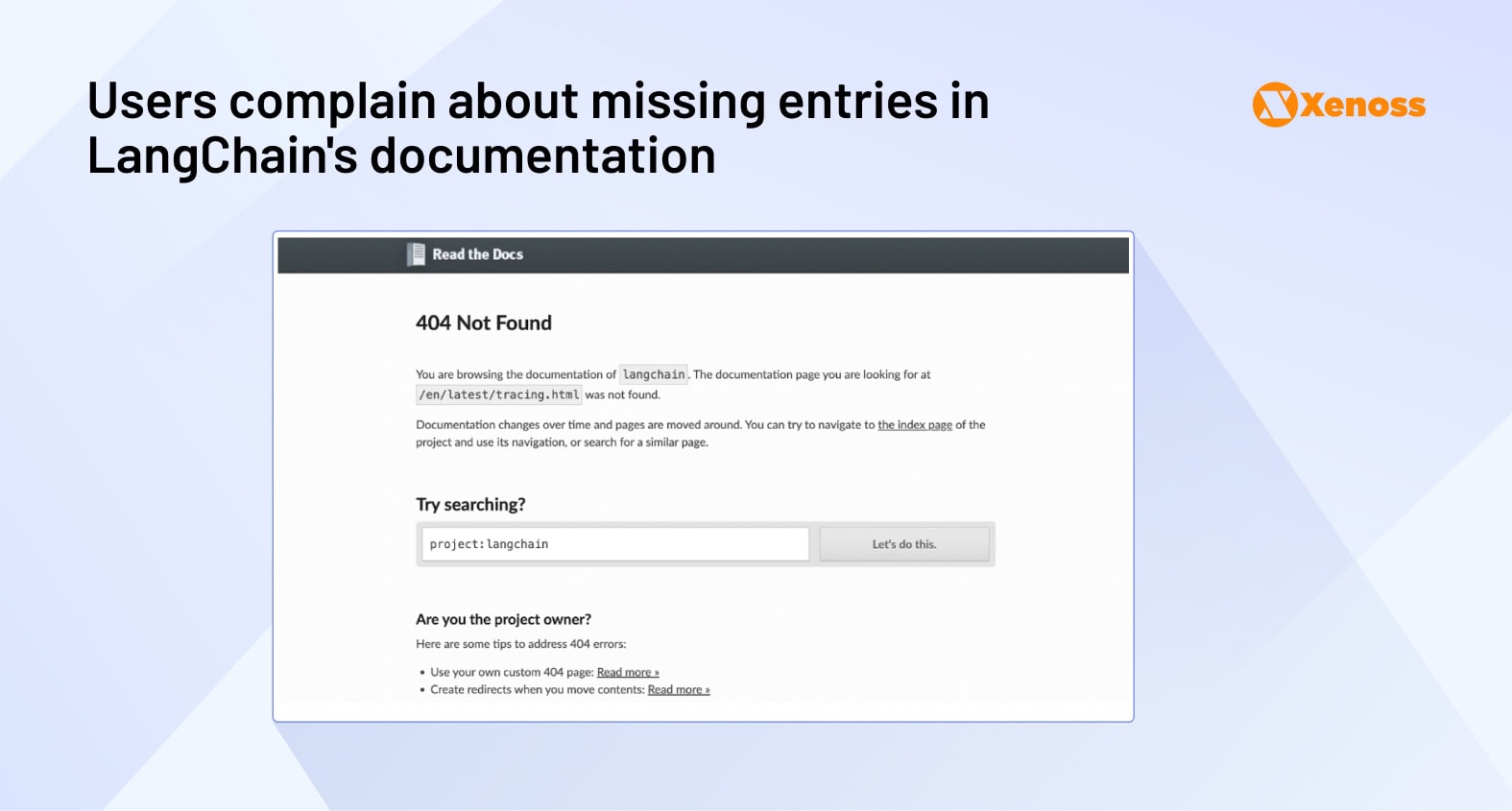

Documentation gaps

Weak documentation is another problem shared by all orchestrators.

On r/langchain, hardly a day goes by without a new documentation-related rant from a fed-up engineer.

Their documentation is out of date as soon as it’s written, and ironically, they haven’t put together any sort of flow to help them keep it up to date.

LlamaIndex users are also vocal about this.

The documentation is less than clear on many aspects. I had to look at the source code many times to understand what is available and how it works…

Because orchestrators often lack reliable docs and API references, the ‘let’s go back to vanilla Python’ sentiment, summed up by the Reddit comment below, is common in the machine learning community.

I mean, if I have the energy to understand langchain’s documentation, that energy might be better spent on trying to read concept papers and master the native and core modules.

For some use cases, this viewpoint is reasonable.

When you should build an LLM orchestrator

Most orchestrators on the market face the ‘accidental complexity’ challenge. LangChain, as the market leader, draws the most criticism for its bloated feature set, messy codebase, and complex documentation, but no solution is entirely immune to these problems.

LangChain provides a reasonable starting point for prototyping, but enterprise teams often reach scenarios where the best framework is no framework.

Your use case does not have complex workflows or switching between LLMs

Designing complex multi-LLM systems for the sake of complexity alone might make sense for engineers who deliberately want to practice rerouting between models, but much less so for teams who want to manage business-critical tasks.

For the latter, the best approach is the simplest one (both OpenAI and Anthropic highlight this point in their guides to building agents).

When building applications with LLMs, we recommend finding the simplest solution possible and only increasing complexity when needed. This might mean not building agentic systems at all. Agentic systems often trade latency and cost for better task performance, and you should consider when this tradeoff makes sense.

So, if an engineering team has a use case where connecting a few agents powered by a single LLM solves the problem, using the vendor’s API directly is a reasonable alternative to LangChain, LangGraph, and the like.

The APIs of the latest generation of SOTA models are very powerful: OpenAI’s Responses API, for example, supports memory-like state management, tool calling, and multi-step execution.

Vendor lock-in will be the trade-off here, but, on the other hand, removing the framework reduces the risk of abstracting away too much of the system logic and ultimately leaving the engineering team confused.

To avoid being more confused by the abstraction than by the very thing you are trying to abstract, experienced machine learning practitioners generally recommend avoiding abstractions until your pipeline is complex enough to truly require them.
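For the single-vendor case described above, the loop a team writes around a vendor API can stay small. The sketch below keeps conversation state, executes tool calls, and stops once the model returns a final message. Because it must run without network access, `scripted_model` is a hypothetical stand-in with a made-up reply format; a real implementation would call the vendor’s SDK and map its tool-call format instead, and APIs like OpenAI’s Responses API can manage parts of this state server-side.

```python
import json
from typing import Any, Callable

# Tool registry: plain Python functions the model may call (hypothetical tool).
TOOLS: dict[str, Callable[..., str]] = {
    "get_weather": lambda city: f"18C and cloudy in {city}",
}


def scripted_model(history: list[dict[str, Any]]) -> dict[str, Any]:
    """Stand-in for a vendor API call: requests one tool call, then answers."""
    if not any(message["role"] == "tool" for message in history):
        return {"type": "tool_call", "name": "get_weather", "arguments": json.dumps({"city": "Oslo"})}
    return {"type": "message", "content": f"Forecast: {history[-1]['content']}"}


def run_agent(user_input: str, model: Callable[[list[dict[str, Any]]], dict[str, Any]] = scripted_model,
              max_steps: int = 5) -> str:
    history: list[dict[str, Any]] = [{"role": "user", "content": user_input}]  # conversation state
    for _ in range(max_steps):
        reply = model(history)
        if reply["type"] == "message":  # final answer: stop the loop
            return reply["content"]
        arguments = json.loads(reply["arguments"])
        result = TOOLS[reply["name"]](**arguments)  # execute the requested tool
        history.append({"role": "tool", "name": reply["name"], "content": result})
    raise RuntimeError("no final answer within max_steps")


print(run_agent("What's the weather in Oslo?"))  # Forecast: 18C and cloudy in Oslo
```

Every line of control flow is visible here, which is the argument for skipping the framework when the workflow is this simple.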

You need specific features without framework bloat

After experimenting with LangChain, teams that move past the prototype stage often find their actual requirements are much narrower. Octomind, an AI-powered QA testing platform, distilled its needs to four components:

- LLM communication client

- Tool calling functions

- Vector databases for RAG

- Observability platform for governance

After identifying these core pillars of a custom system, the development team used simple code and handpicked external packages to build a LangChain-like framework, excluding features that their team did not need.
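Octomind’s own code isn’t reproduced here, but a framework-free setup built from those four pillars might look something like the sketch below. Everything in it is hypothetical: the LLM client, vector search, and tool are stubs, and the `Tracer` class stands in for an observability platform by recording a span (name, success flag, latency) for every component call.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tracer:
    """Observability pillar: records a span for every component call."""
    spans: list[dict[str, Any]] = field(default_factory=list)

    def wrap(self, name: str, fn: Callable[..., Any]) -> Callable[..., Any]:
        def traced(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            ok = False
            try:
                result = fn(*args, **kwargs)
                ok = True
                return result
            finally:  # runs on success and on failure
                elapsed_ms = (time.perf_counter() - start) * 1000
                self.spans.append({"name": name, "ok": ok, "ms": elapsed_ms})
        return traced


tracer = Tracer()
# The other three pillars as small, swappable stubs (all hypothetical).
llm = tracer.wrap("llm", lambda prompt: f"summary of: {prompt}")
search_docs = tracer.wrap("vector_db", lambda query: [f"Doc about {query}"])
tools = {"word_count": tracer.wrap("tool:word_count", lambda text: len(text.split()))}


def answer(question: str) -> str:
    context = " ".join(search_docs(question))
    n_words = tools["word_count"](context)
    return llm(f"{context} ({n_words} words) Q: {question}")


print(answer("refund policy"))  # summary of: Doc about refund policy (4 words) Q: refund policy
print([span["name"] for span in tracer.spans])  # ['vector_db', 'tool:word_count', 'llm']
```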

Your team prioritizes software engineering best practices

Because LLM frameworks evolve at breakneck speed, their codebases are what one Reddit user accurately described as a “big monolithic cluster of code”. There is little separation between configuration and execution code, data transformations, and database connections.

This laissez-faire codebase is messy and error-prone, which has led to anecdotes about LangChain functions that unexpectedly return empty values.

If your engineering team is all about microservices and modular architecture, introducing a monolithic framework may create a project management nightmare, and a DIY alternative may be the better choice.

When using an LLM framework still makes sense

Given that there are no ‘best practices’ in the LLM framework community yet (because things change too fast for any to successfully stand the test of time), there’s a general ‘your framework is as good as mine’ attitude.

Dozens of open-source projects get shipped to GitHub every day, and even more engineering teams are building simple internal tooling to enable their agentic flows.

In such a Wild West landscape, why do LangChain, LangGraph, LlamaIndex, and other orchestrators still hold such a large market share?

In some scenarios, they are still the most convenient option. Let’s examine these use cases one by one.

Case #1. Your team needs to build fast

By far, speed of deployment is the biggest selling point of plug-and-play agent orchestrators.

Instead of having to carefully choose the algorithms, APIs, and external tools to power their custom multi-agent system, with LLM frameworks, machine learning teams get access to:

- Easily swappable integrations with all state-of-the-art LLMs

- Ready-to-deploy modules for external providers (e.g., vector databases)

- A large community bursting with tutorials, implementations, code examples, and answers to all common questions

LLM frameworks are a popular go-to solution for prototyping. Once the system is up and running, engineering teams tend to swap the orchestrator for internal tooling.

Case #2. You are building complex multi-step pipelines

The problem with most anti-framework takes is that they are written by solo engineers or the leaders of small teams. For the small-scale use cases they implement, frameworks like LangChain or LlamaIndex are indeed redundant, and custom-coded solutions are fairly trivial.

However, as the pipeline complexity increases, so does the time and effort needed to build the entire boilerplate from scratch.

Enterprise-grade multi-agent systems require substantial infrastructure capabilities:

- External API orchestration and error handling

- Persistent state management across long-running processes

- Comprehensive fallback and retry mechanisms

- End-to-end traceability and audit logging

- Production metrics and observability tooling

All of these can be coded internally, but doing so risks diverting too much effort to infrastructure management instead of product features.

Frameworks offer these features out of the box, which can save engineering teams months of development time, lower maintenance overhead, and reduce boilerplate.
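Retry and fallback logic is a good example of the boilerplate frameworks save. A hand-rolled version might look like the sketch below, which retries transient errors with exponential backoff and then falls back to the next model. Which exceptions count as transient, the delay schedule, and the model names are assumptions; the `sleep` parameter is injectable so the behavior can be tested without actually waiting.

```python
import time
from typing import Callable, Sequence


def call_with_fallback(
    prompt: str,
    models: Sequence[tuple[str, Callable[[str], str]]],
    retries: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, str]:
    """Try each model in order; retry transient failures with exponential backoff."""
    errors = []
    for name, model in models:
        for attempt in range(retries + 1):
            try:
                return name, model(prompt)
            except (TimeoutError, ConnectionError) as exc:  # assumed to be transient
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < retries:
                    sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
    raise RuntimeError("all models failed: " + "; ".join(errors))


def primary(prompt: str) -> str:
    raise TimeoutError("upstream timeout")  # simulate an outage


def backup(prompt: str) -> str:
    return f"backup answer to {prompt!r}"


delays: list[float] = []
used, text = call_with_fallback("hi", [("primary", primary), ("backup", backup)], sleep=delays.append)
print(used, delays)  # backup [0.5, 1.0]
```

Even this small version leaves out jitter, per-error policies, and metrics, which is where the maintenance cost of a custom stack starts to add up.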

Case #3. You want to build a flow that transfers between projects

Another benefit frameworks have going for them is the tendency to enforce uniformity.

Since there’s no single best LLM framework on the market, it’s easy to imagine building a more efficient custom system. However, even if your team builds one, that codebase will likely be use-case-specific and not transfer well to other projects.

LLM frameworks provide that portability and help standardize multi-agent system design across the entire organization.

A useful rule of thumb: if your system needs three or more of the following (persistent state, branching, parallelism, multiple tools or LLMs, strict observability), use an orchestrator. Otherwise, keep it simple.
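The rule of thumb is simple enough to write down as a checklist function, which some teams may find handy in design reviews. The criterion names and the threshold of three mirror the rule above; the function itself is only an illustration, not a formal decision procedure.

```python
CRITERIA = frozenset({"state", "branching", "parallelism", "multiple_tools_or_llms", "strict_observability"})


def should_use_orchestrator(needs: set[str], threshold: int = 3) -> bool:
    """Rule of thumb: three or more of these needs favor an orchestrator framework."""
    unknown = needs - CRITERIA
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    return len(needs) >= threshold


print(should_use_orchestrator({"state", "branching"}))  # False
print(should_use_orchestrator({"state", "branching", "parallelism"}))  # True
```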

Bottom line

In 2025, teams can accomplish a lot by building narrow multi-agent workflows. But LLM vendors’ APIs have limited multi-agent support and, even within those constraints, hosting all your workflows in one ecosystem increases the risk of vendor lock-in.

Orchestrator frameworks like LangChain, LangGraph, LlamaIndex, PydanticAI, and many others help circumvent these challenges by managing all architecture layers in a single interface: vector databases, third-party APIs, and interactions with different models.

The caveat to using orchestrators is that they tend to increase “accidental complexity” by adding redundant features on top of the baseline LLM -> API -> tools layer.

Before choosing an orchestrator for your project, take the time to understand the use case carefully and ask yourself whether a framework helps at all. Sometimes it will save your team time; other times it will just add ‘fluff’ on top of business-critical features. It’s up to you to decide which applies to your project.