In January 2024, an employee at a Hong Kong-based firm wired $25 million to fraudsters after joining what appeared to be a video call with their company’s CFO. The executive was never in that meeting—it was an AI-generated deepfake.

As synthetic voice and video capabilities improve, fraudsters gain new tools for elaborate schemes. Deloitte’s Center for Financial Services estimates that banks will suffer $40 billion in losses from genAI-enabled fraud by 2027, up from $12.3 billion in 2023.

For banking leaders, countering fraud means staying ahead of attackers. AI-generated schemes call for stronger AI detection algorithms that can identify and stop fraud in real time, before damage occurs.

The finance industry is mapping out approaches for real-time fraud detection powered by transformers, retrieval-augmented generation, federated learning, and other machine learning tools.

This post reviews four approaches to countering voice fraud, multi-channel attacks, money laundering, and credit card fraud, each backed by real-world deployments. It also covers key considerations banking leaders should evaluate before building AI-based fraud detection systems.

The rising cost of financial fraud

Financial fraud strains the banking industry significantly. McKinsey projects banks will lose $400 billion to fraudulent activity by 2030, with authorized push payment (APP) fraud growing at 11% annually.

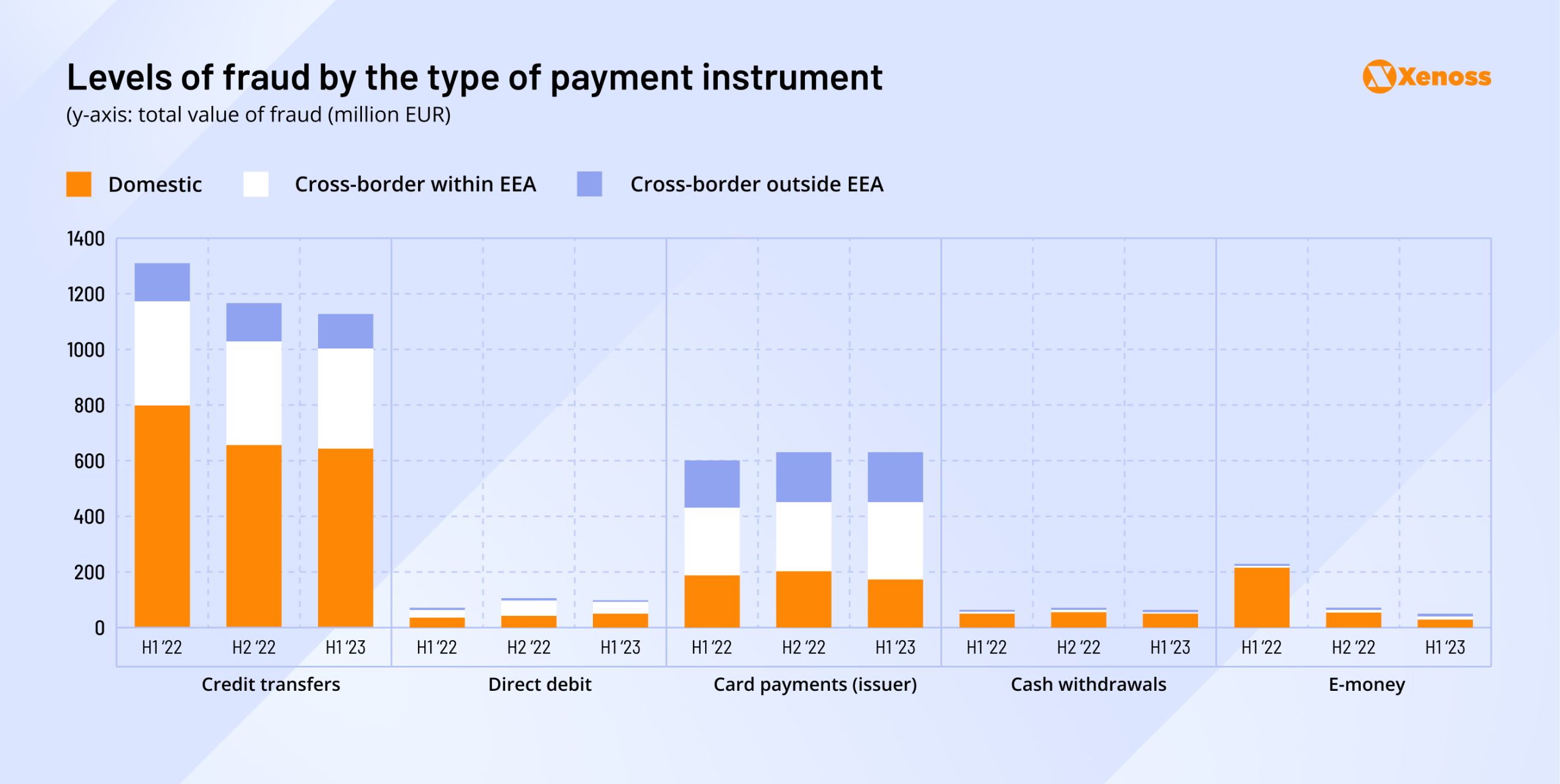

The European Banking Authority reports similar trends: €2 billion in losses occurred in the first half of 2023 alone. Domestic credit payments represented the highest fraud risk, followed by cross-border credit card payments within the EEA.

Despite the $6 billion in AML-related fraud fines issued globally in 2023, fraud losses keep rising, and financial institutions must strengthen their protection efforts.

Real-time fraud detection helps identify unauthorized transactions before completion. Traditional methods include enhanced user authentication and GPS/biometric integration in identity verification workflows.

Real-time detection algorithms based on machine learning have gained significant traction over the past three years. Banks are developing proprietary algorithms trained on customer transactions to detect anomalous behavior.

Compared to traditional statistical methods, AI algorithms offer superior flexibility and fewer false positives through self-learning capabilities. Large language models and agent-based AI systems now scan for anomalies, impersonation attempts, and laundering patterns in real time.

#1: Real-time credit card fraud detection with advanced transformer models

Credit card fraud detection presents a classic machine learning challenge: massive class imbalance. Fraudulent transactions represent less than 0.2% of all credit card activity, meaning training data is dominated by normal behavior. This imbalance causes models to misclassify fraudulent events as legitimate, leading to multimillion-dollar losses.
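A quick arithmetic sketch shows why this imbalance is misleading. The volume below (1,000,000 transactions at a 0.2% fraud rate) is an illustrative assumption, not a figure from the study: a model that labels every transaction as legitimate looks highly accurate while catching no fraud at all.

```python
# Illustrative numbers only: 1,000,000 transactions, 0.2% of them fraudulent.
total = 1_000_000
fraud = total * 2 // 1000       # 2,000 fraudulent transactions
legit = total - fraud           # 998,000 legitimate transactions

# A "model" that labels every transaction as legitimate:
true_negatives = legit          # every legitimate transaction is labeled correctly
false_negatives = fraud         # every fraudulent transaction is missed
true_positives = 0

accuracy = (true_positives + true_negatives) / total
recall = true_positives / (true_positives + false_negatives)

print(f"accuracy = {accuracy:.1%}")   # accuracy = 99.8%
print(f"recall   = {recall:.1%}")     # recall   = 0.0%
```

This is why the benchmarks discussed in this section rely on F1 scores rather than raw accuracy.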

U.S.-based researchers recently tackled this issue with a transformer-based architecture designed for real-time fraud detection. Their approach outperformed traditional methods like XGBoost, TabNet, and shallow neural networks across multiple benchmarks.

How advanced transformer models improve prediction accuracy

A five-step framework helps train a more accurate engine for detecting credit card fraud.

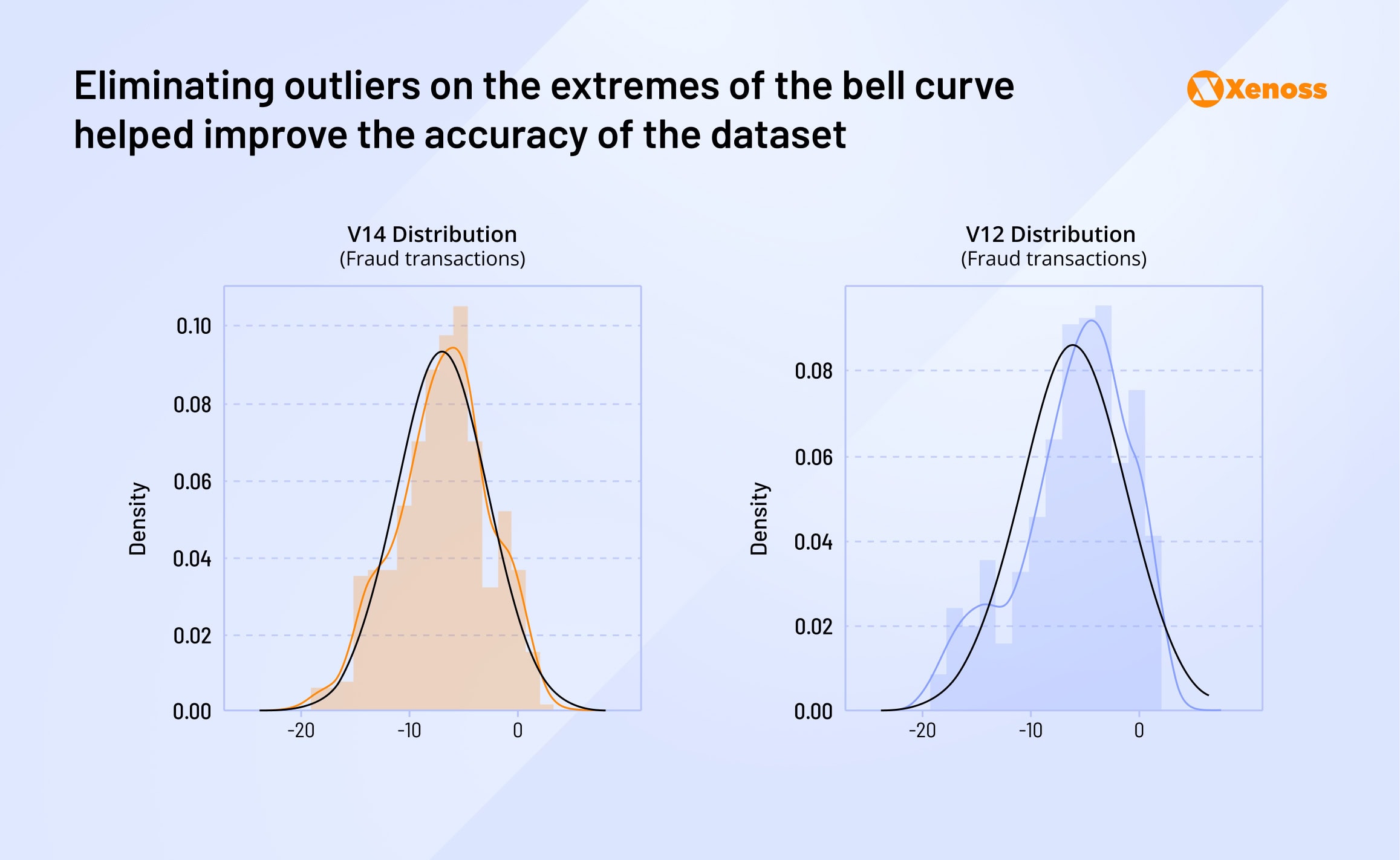

- Balance training data: Remove safe transactions until fraudulent and normal data points reach a 1:1 ratio

- Eliminate outliers: Apply the “box-plot rule” to remove values beyond normal spread and reduce model noise

- Validate dataset: Remove incorrect or duplicate data points

- Train transformer model: Use balanced data to identify and apply patterns to transaction history in real time

- Benchmark performance: Compare against other ML techniques using F1 scores
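The data-preparation and benchmarking steps above can be sketched in plain Python. This is a minimal illustration, not the researchers' code: the record layout (`amount`, `is_fraud`), the function names, and the 1.5 × IQR fences (the usual reading of the box-plot rule) are assumptions, and the transformer training step itself is out of scope here.

```python
import random
from statistics import quantiles

def undersample(rows, label_key="is_fraud", seed=0):
    """Randomly drop legitimate rows until both classes are the same size (1:1)."""
    fraud = [r for r in rows if r[label_key]]
    legit = [r for r in rows if not r[label_key]]
    rng = random.Random(seed)
    return fraud + rng.sample(legit, k=min(len(fraud), len(legit)))

def box_plot_filter(rows, feature):
    """Keep rows whose feature lies within [Q1 - 1.5*IQR, Q3 + 1.5*IQR]."""
    q1, _, q3 = quantiles([r[feature] for r in rows], n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [r for r in rows if low <= r[feature] <= high]

def deduplicate(rows):
    """Drop exact duplicate records, keeping the first occurrence."""
    seen, unique = set(), []
    for r in rows:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique

def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for the positive (fraud) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Usage on synthetic data (values are made up for illustration).
rng = random.Random(42)
rows = [{"id": i, "amount": round(rng.uniform(5, 200), 2), "is_fraud": False}
        for i in range(995)]
rows += [{"id": 995 + i, "amount": round(rng.uniform(50, 400), 2), "is_fraud": True}
         for i in range(5)]
balanced = undersample(rows)
cleaned = deduplicate(box_plot_filter(balanced, "amount"))
print(len(rows), len(balanced), len(cleaned))
```

One trade-off worth noting: undersampling to 1:1 discards most legitimate transactions, so the filtered set is small, and the box-plot rule can also remove genuine fraud rows if their amounts are extreme.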

Benefits and use cases of this approach

Transformers excel in fraud detection because their attention layers weigh all available features against each other at once, whereas decision trees and gradient-boosted trees split on one feature at a time. This enables detection of sophisticated patterns, such as transactions at atypical locations during unusual times.

Transformers also require minimal maintenance. Models update through minutes to hours of training on new data, while traditional approaches require redesigning decision trees from scratch.

In practice: How Stripe applies this approach

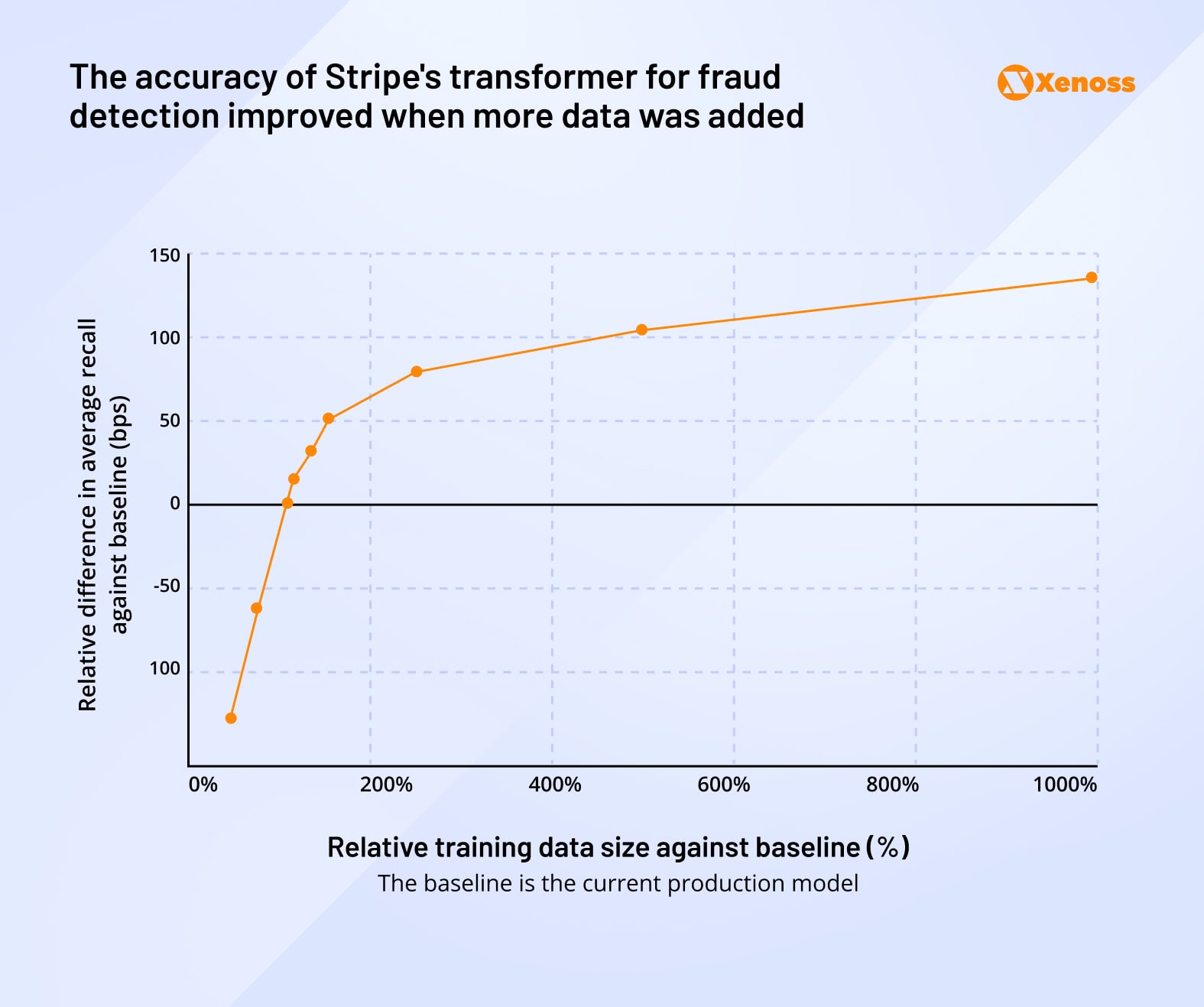

Stripe’s fraud detection engine, Radar, uses a hybrid of XGBoost and deep neural networks to scan over 1,000 characteristics per transaction. The system achieves 100ms response time and a 0.1% false-positive rate.

The approach suits omnichannel fraud detection, assessing up to 100 events simultaneously. Banking teams can build transformers that scan for credit card fraud 24/7 across web, mobile, and ATM channels.

Stripe’s engineers note that expanding the dataset further improves accuracy, demonstrating the compounding returns of data-driven model tuning in fraud prevention.

#2: Stopping AI voice fraud with a RAG-based detection system

Phone fraud costs banks about $11.8 billion per year, and rapidly improving voice AI models make new schemes harder to detect. In 2024 and 2025, large-scale AI-voice scams went unchecked in the UK, the US, and Italy.

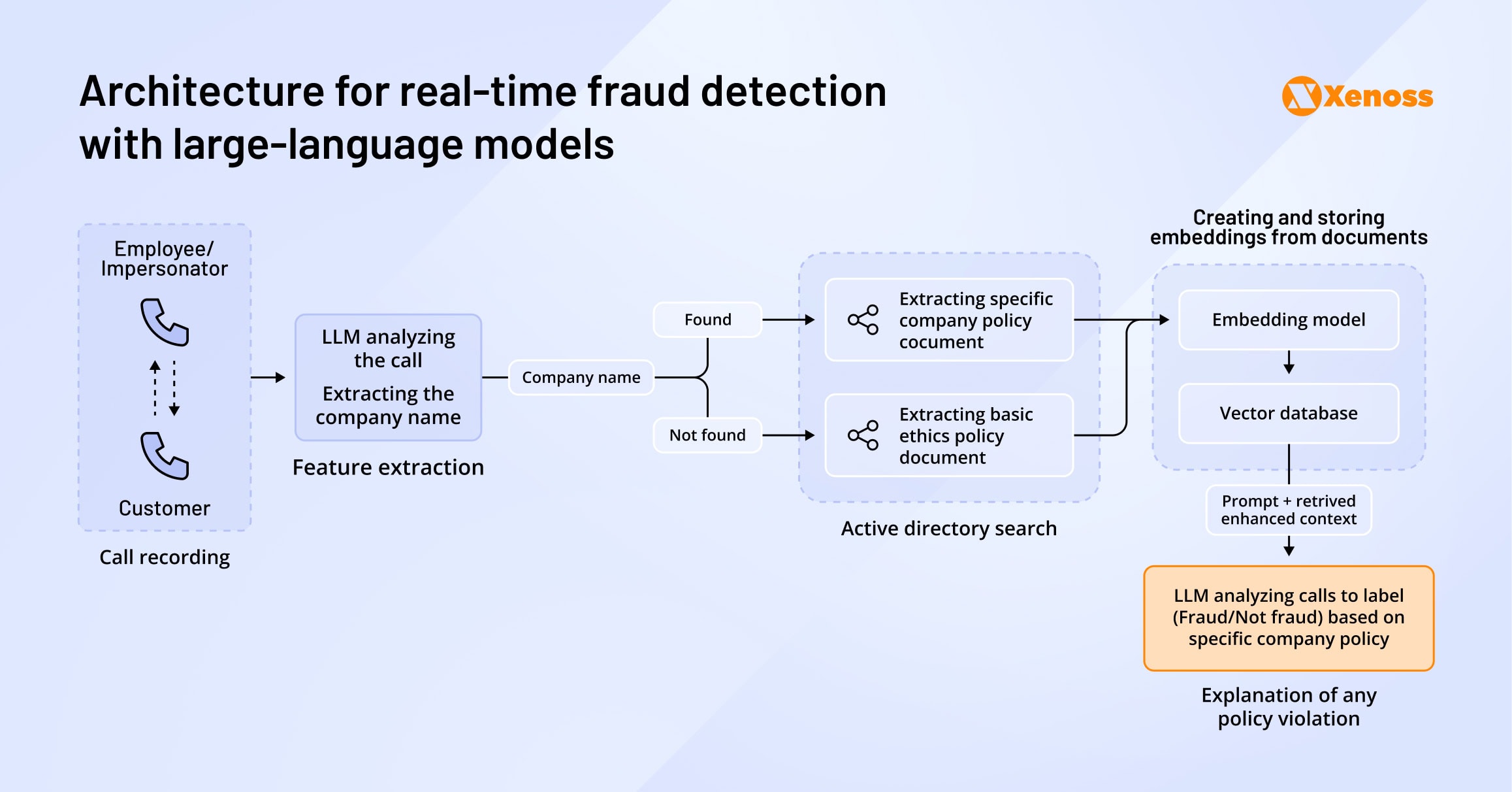

To counter this, researchers from the University of Waterloo introduced a real-time detection system powered by Retrieval-Augmented Generation (RAG). The architecture combines audio transcription, identity validation, and live policy retrieval to stop deepfake voice attacks before any sensitive information is shared.

The deployment pipeline includes four key steps:

Step 1: Audio capture and encryption

The bank’s system records the call audio, encrypts it with AES to protect sensitive data, and passes the encrypted audio to a speech-to-text model such as Whisper. Keep in mind that users need to opt in to the recording of each call.

Step 2: Transcription and analysis

Speech-to-text models convert audio to text, and a proprietary LLM extracts relevant data, such as company and employee names, from the transcript.

The paper suggests using RAG to retrieve the company’s current policy and cross-check whether the conversation’s flow is normal or potentially fraudulent.

Step 3: Parallel identity verification

While the RAG-backed LLM analyzes the transcript, an imposter module runs a parallel identity check. It validates whether the caller’s name is listed in the employee directory of the bank they claim to work for.

Step 4: Real-time response

If the name is valid, the employee receives an OTP to confirm their identity.

Because every stage is stream-based and lightweight, the whole loop is completed fast enough to warn the victim or hang up the call before sensitive information is leaked.

Adding RAG to the workflow allows swapping and updating documents in real time, without having to pause the system.
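The control flow of steps 2 to 4 can be sketched as follows. This is a simplified illustration, not the paper's implementation: the policy list, the employee directory, and all function names are hypothetical, and simple phrase matching stands in for the retrieval and LLM judgment that a real system would perform on the transcript.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical policy snippets; a real deployment would retrieve these from a
# document store that can be updated while the system is running.
POLICIES = [
    {"rule": "Staff never ask customers to read out a one-time passcode.",
     "red_flags": ("read me the code", "passcode")},
    {"rule": "Staff never ask customers to move funds to a safe account.",
     "red_flags": ("safe account",)},
]

# Hypothetical employee directory of the bank the caller claims to represent.
EMPLOYEE_DIRECTORY = {"alice nguyen", "bob martins"}

def policy_check(transcript):
    """Stand-in for retrieval + LLM: flag phrases that conflict with a policy."""
    text = transcript.lower()
    for policy in POLICIES:
        hits = [p for p in policy["red_flags"] if p in text]
        if hits:
            # Returning the matched policy gives reviewers a justification.
            return True, f"Matched {hits} against policy: {policy['rule']}"
    return False, "No policy conflict found."

def identity_check(claimed_name):
    """Check whether the claimed name appears in the employee directory."""
    return claimed_name.strip().lower() in EMPLOYEE_DIRECTORY

def screen_call(transcript, claimed_name):
    """Run both checks in parallel and pick a response."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        policy_future = pool.submit(policy_check, transcript)
        identity_future = pool.submit(identity_check, claimed_name)
        suspicious, reason = policy_future.result()
        known = identity_future.result()
    if not known:
        return "warn_and_hang_up", "Caller is not in the employee directory."
    if suspicious:
        return "warn_and_hang_up", reason
    return "send_otp", "Name found in directory; confirm identity with an OTP."

print(screen_call("Please move your money to a safe account today", "Alice Nguyen"))
print(screen_call("Calling to confirm your appointment", "Bob Martins"))
```

Because `POLICIES` is plain data consulted on every call, replacing an entry changes the next verdict without restarting anything, which mirrors the live-update property described above.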

Benefits and real-world applications

The model reported in the paper has three notable benefits.

First, RAG allows the knowledge base to be updated in real time, so the model adheres to the bank’s up-to-date policies.

Second, the architecture has explainability built in. In the paper, the engineers prompted the LLM to support its output with a justification, which helps reviewers trace the reasoning behind each verdict.

Finally, OTP identity checks help finance teams catch calls where the wording is legitimate but the caller’s identity is not.

In practice: Mastercard’s 300% boost in detection

Mastercard deployed a RAG-enabled voice scam detection system in 2024, achieving a 300% boost in fraud detection rates. This demonstrates RAG’s practical effectiveness in current FinTech applications.

#3: Using generative AI to prevent multi-channel banking fraud

Financial fraud is no longer confined to a single point of access. Attackers probe multiple channels (ATM, mobile app, web banking, even call centers) before executing a scam. That complicates fraud detection: signals are fragmented, formats vary, and latency constraints challenge real-time analysis.

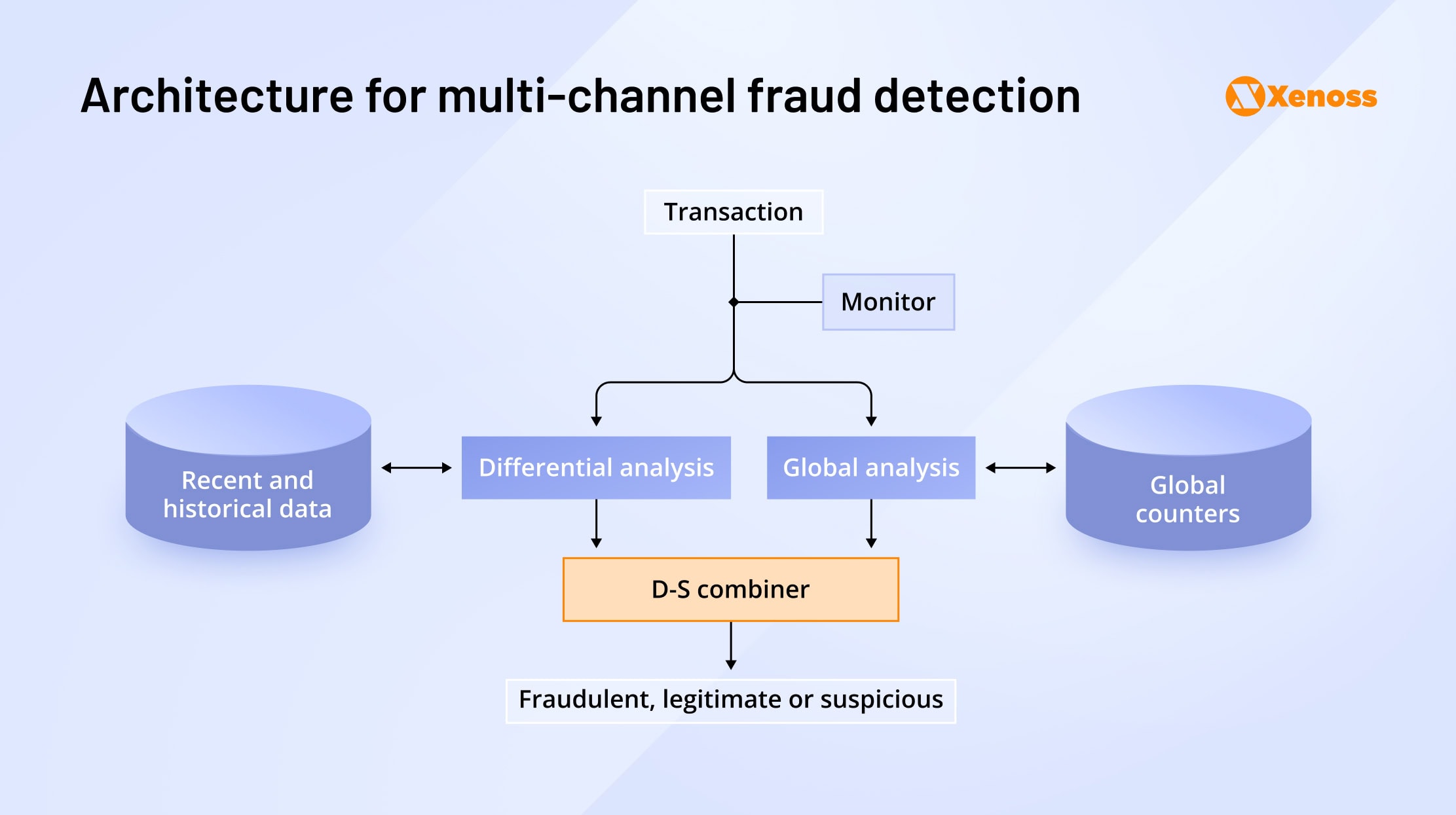

The International Journal of Computer Engineering and Technology outlines a five-step framework for generative AI-enabled omnichannel fraud prevention, designed to unify detection logic across all customer touchpoints.

Step #1: Channel-specific ingestion. Using a timestamped, format-specific protocol, the system logs every customer interaction, whether it’s a tap at an ATM, a browser session, or a mobile transfer. A monitor lock ensures that only complete and validated data enters the model.

Step #2: Behavioral profiling. Using historical data, the system builds a customer-specific “normal behavior” profile. It includes transaction velocity, average value, geo-coordinates, and device identifiers.

Step #3: Generative augmentation with GANs. Since fraud events are rare and it’s difficult to collect a statistically significant number of data points, a generative AI model (usually a GAN) generates synthetic scenarios with a few anomalous variables. This exposes the model to commonly used fraud tactics.

Step #4: Unified decision layer (gut scoring). Outputs from each channel aggregate into a single risk score, enabling detection of multi-step fraud such as credential stuffing via call centers combined with mobile transfers.

Step #5: Real-time actioning. Upon detecting risk, the system makes split-second decisions: block the transaction, trigger biometric re-authentication, or escalate to manual review.
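A minimal sketch of steps 1, 2, 4, and 5 is shown below (the GAN augmentation step is omitted). Everything specific here is an assumption made for illustration: the field names, the amount and device signals, the noisy-OR combination rule, and the action thresholds are not taken from the published framework.

```python
from statistics import mean, pstdev

REQUIRED_FIELDS = {"channel", "amount", "device"}

def build_profile(history):
    """Step 2: summarize a customer's usual amounts and known devices."""
    amounts = [t["amount"] for t in history]
    return {
        "mean": mean(amounts),
        "std": pstdev(amounts) or 1.0,   # avoid division by zero for flat histories
        "devices": {t["device"] for t in history},
    }

def noisy_or(risks):
    """Combine risk signals as 1 - product of (1 - r)."""
    remaining = 1.0
    for r in risks:
        remaining *= 1.0 - r
    return 1.0 - remaining

def event_risk(event, profile):
    """Score one event in [0, 1] from amount deviation and device novelty."""
    z = abs(event["amount"] - profile["mean"]) / profile["std"]
    amount_risk = min(z / 4.0, 1.0)      # assumed: 4+ standard deviations saturates
    device_risk = 0.0 if event["device"] in profile["devices"] else 0.5
    return noisy_or([amount_risk, device_risk])

def unified_score(events, profile):
    """Step 4: keep the riskiest event per channel, then merge channels."""
    per_channel = {}
    for e in events:
        if not REQUIRED_FIELDS <= e.keys():   # Step 1: skip incomplete records
            continue
        risk = event_risk(e, profile)
        per_channel[e["channel"]] = max(per_channel.get(e["channel"], 0.0), risk)
    return noisy_or(per_channel.values())

def decide(score):
    """Step 5: map the score to an action (thresholds are illustrative)."""
    if score >= 0.8:
        return "block"
    if score >= 0.5:
        return "reauthenticate"
    if score >= 0.3:
        return "manual_review"
    return "allow"

# A call-center contact from an unknown line followed by a large mobile transfer.
history = [{"channel": "mobile", "amount": a, "device": "phone-1"}
           for a in (40, 50, 60, 50)]
profile = build_profile(history)
session = [
    {"channel": "call_center", "amount": 50, "device": "unknown-line"},
    {"channel": "mobile", "amount": 900, "device": "phone-9"},
]
print(decide(unified_score(session, profile)))   # block
```

The point of the unified layer is visible in the example: the call-center event alone would only trigger re-authentication under these thresholds, while the combined session is blocked.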

The engine achieved 96% accuracy over six months with only 0.8% false positives, though researchers recommend human oversight for edge cases.

Benefits and real-world applications

Cross-channel fraud detection gives banks a big-picture view of user activity across multiple touchpoints. Analyzing all inputs together yields more accurate predictions than having each channel make an independent judgment.

Xenoss engineers applied a similar cross-channel architecture to help a global bank modernize its credit scoring systems. By integrating multi-touchpoint behavior data into one model, the client achieved a statistically significant lift in prediction accuracy and greater confidence in transaction-level risk scoring.

In practice: Commonwealth Bank’s genAI system

Commonwealth Bank of Australia built a genAI-enabled system for detecting suspicious payments across its mobile app, online banking, branches, call centers, BPAY, and instant payment rails.

The model flags out-of-pattern transactions, drives app push messages, and integrates with NameCheck/CallerCheck to catch scams that start in one channel and execute in another. The bank reports a 30% fraud reduction following model adoption and sends approximately 20,000 daily alerts.

#4: Real-time money laundering detection with federated learning

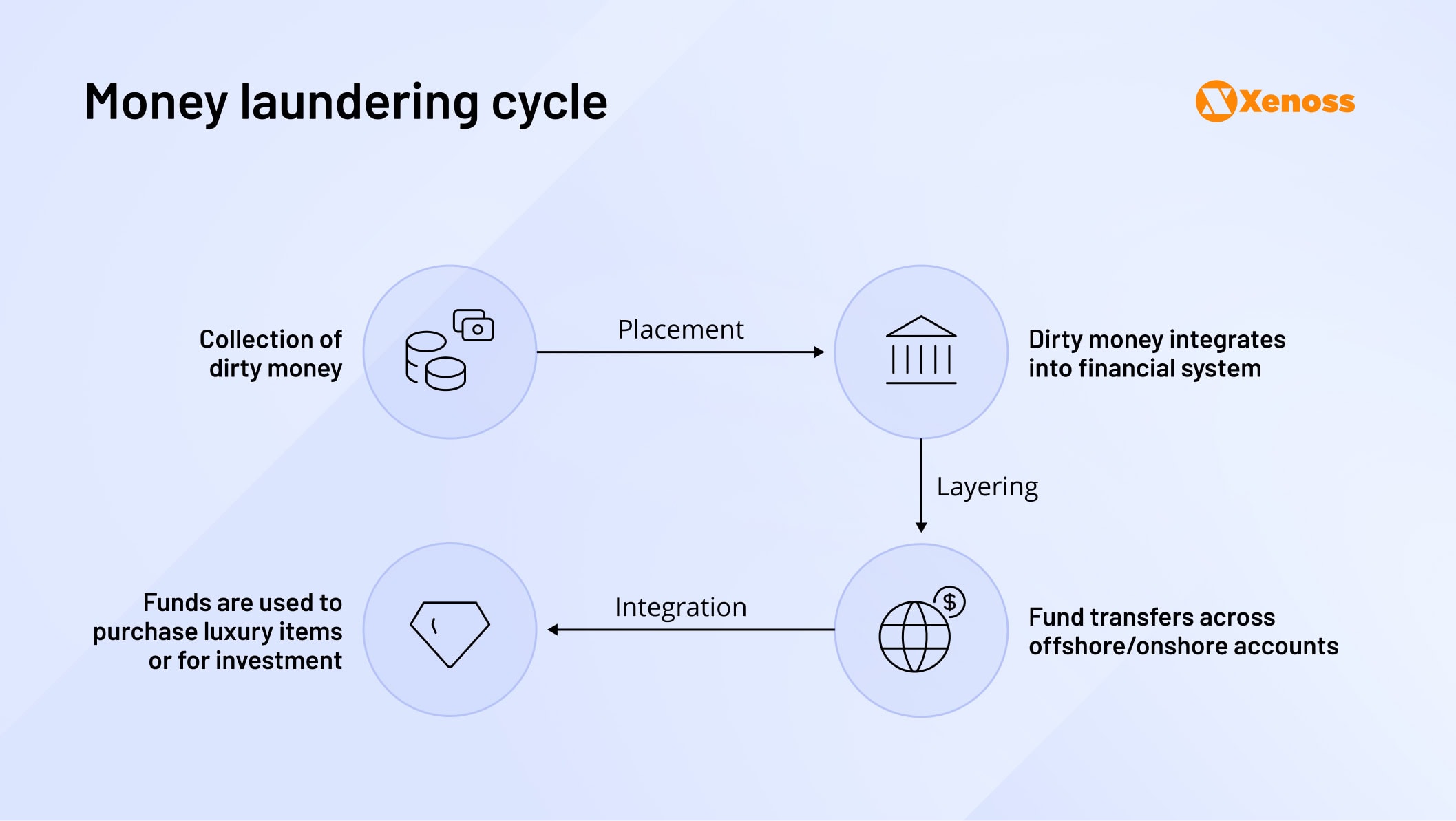

Money laundering drains up to $200 billion annually from the global economy and remains one of the costliest and most elusive financial crimes. It is hard to detect because of its operational scale and because the data needed to spot it is fragmented across institutions.

Banks, clearing houses, payment processors, and messaging networks each hold pieces of the puzzle, but privacy laws like GDPR, CCPA, and strict AML regulations prevent free data sharing between entities.

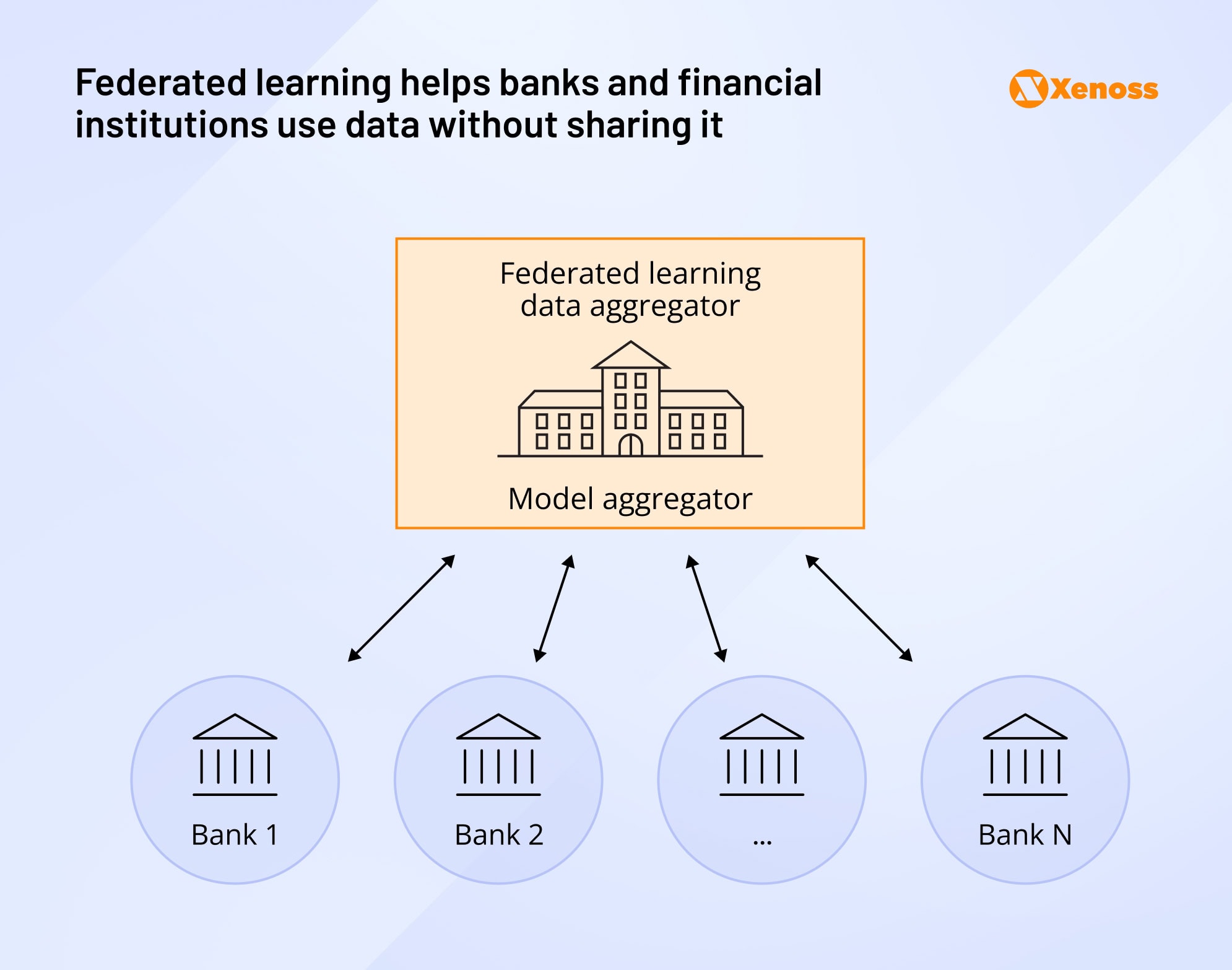

Researchers from Rensselaer Polytechnic Institute proposed federated learning for relational data (Fed-RD), a privacy-preserving architecture that allows banks to train shared fraud models without centralizing sensitive inputs.

How the federated detection model works

The framework relies on multiple financial actors contributing different slices of the transaction pipeline:

- Payment or messaging network operators (SWIFT, Visa/Mastercard, crypto-exchange matching engines, and others) supply the model with the transaction fingerprint, a record of communications between institutions.

- Sender banks hold the sender’s account and keep KYC files, internal risk scores, and activity reports. They contribute the sender’s fingerprint, a record of a customer’s habits and “red flags”.

- Beneficiary banks (usually with entities abroad) supply the receiver account fingerprint and help detect when deposited money enters high-risk sectors (a common pattern in the “integration” phase of money laundering).

- Intermediaries are not part of all money laundering workflows but occasionally get involved in cross-border transfers. The data they store locally (nostro/vostro ledgers, SAR history) helps create a money trail.

- Regulators are typically outside the data flow. They provide a system of checks and balances, making sure the transaction meets privacy controls and satisfies legal requirements, usually without accessing raw inputs.

Privacy-preserving collaboration: Sharing data in real time

Federated learning allows each actor to keep their data on local servers. Instead of shipping data to a centralized server, institutions share the mathematical scores they derived based on the data. This way, the model can get input from the entire transaction pipeline without having to access raw data.

Here is the pipeline machine learning engineers built to implement this model.

- Local crunching. The payment network turns a transaction record into a short numeric fingerprint. Each bank does the same for its sender or beneficiary account data.

- Privacy-preserving sharing. A small amount of random noise is added to the numeric fingerprints, a practice ML engineers refer to as noisification. The fingerprints are then summed through a secure computation that third parties cannot access.

- Joint verdict. A fusion program, holding all fingerprints, produces a real-time score that estimates the probability of money laundering.

- Iterative learning. Each actor in the model gets anonymized feedback on the transaction, which they can use to improve their fraud detection algorithms. This approach allows everyone involved to use the contribution of other institutions’ data without ever directly accessing it.
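The sharing and fusion steps can be illustrated with a toy example. This is a sketch under stated assumptions rather than the Fed-RD implementation: the fingerprints are hard-coded vectors, Gaussian noise stands in for noisification, pairwise additive masking stands in for the secure summation, and the fusion weights are hypothetical. Production secure aggregation works over finite fields with key agreement, not floating-point masks.

```python
import math
import random

def add_noise(vec, sigma, rng):
    """Add Gaussian noise to each coordinate of a local fingerprint."""
    return [v + rng.gauss(0.0, sigma) for v in vec]

def mask_for_secure_sum(vectors, rng):
    """Pairwise additive masking: party i adds a random mask that party j
    subtracts, so masks cancel in the total but hide each individual vector."""
    masked = [list(v) for v in vectors]
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            for k in range(len(vectors[0])):
                m = rng.uniform(-100.0, 100.0)
                masked[i][k] += m
                masked[j][k] -= m
    return masked

def fuse(summed, fusion_weights, bias=0.0):
    """Joint verdict: logistic score computed from the summed fingerprint."""
    z = bias + sum(w * s for w, s in zip(fusion_weights, summed))
    return 1.0 / (1.0 + math.exp(-z))

rng = random.Random(7)

# Hypothetical fingerprints, each computed locally from data that never leaves its owner.
fingerprints = {
    "payment_network": [0.9, 0.1, 0.4, 0.0],
    "sender_bank": [0.2, 0.8, 0.1, 0.3],
    "beneficiary_bank": [0.5, 0.5, 0.7, 0.6],
}

noisy = [add_noise(v, sigma=0.01, rng=rng) for v in fingerprints.values()]
masked = mask_for_secure_sum(noisy, rng)           # what each party actually sends
summed = [sum(column) for column in zip(*masked)]  # masks cancel in the sum
score = fuse(summed, fusion_weights=[0.5, 0.5, 0.5, 0.5], bias=-2.0)
print(f"laundering risk score: {score:.3f}")
```

The aggregator only ever sees the masked vectors, each of which looks random on its own, yet their sum matches the sum of the noisy fingerprints up to floating-point error.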

Benefits and real-world applications

The engineering team behind the model reported a 25% increase in money laundering attempt detection compared to gold-standard single-bank models. The algorithm also reduced data traffic between banks, allowing institutions to minimize exposure to security breaches.

In practice: Swift and Banking Circle’s federated learning deployments

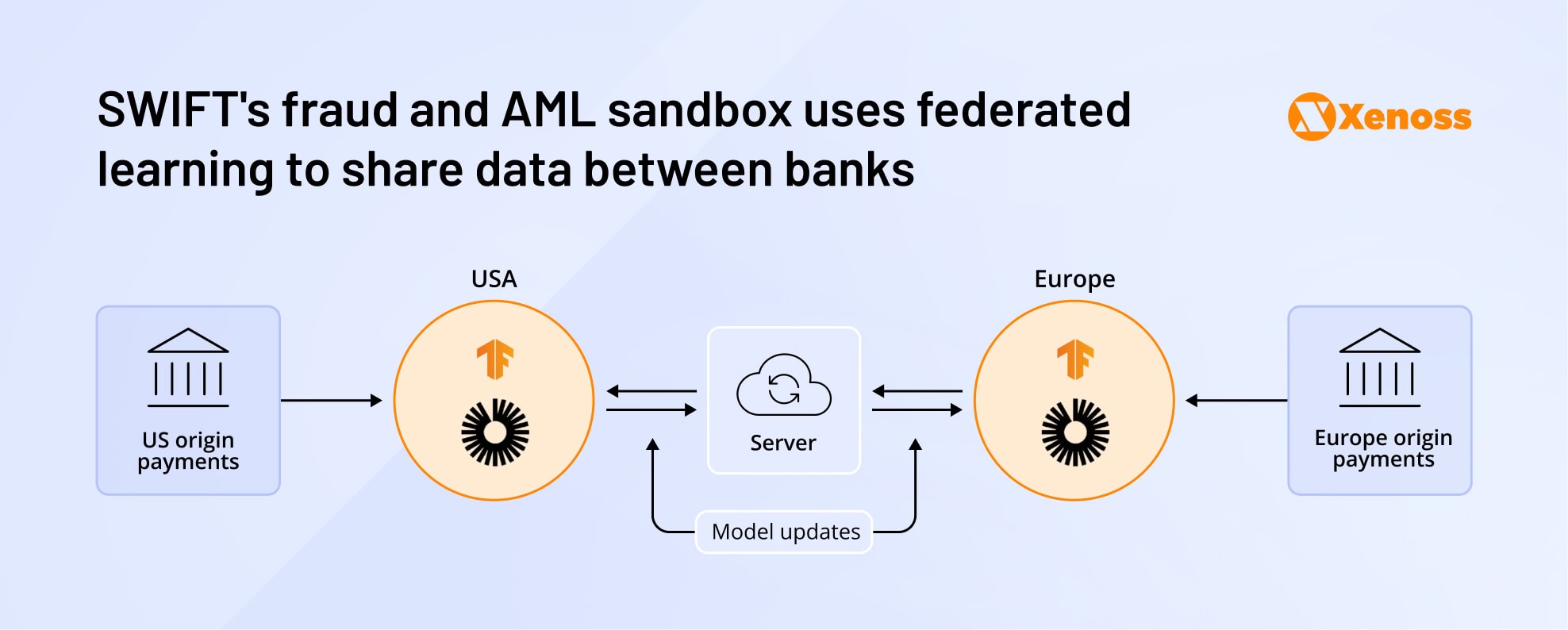

Swift has built a fraud-and-AML sandbox that uses federated learning and confidential computing on Google Cloud. Each of the 12 participating banks retrains Swift’s anomaly-detection model locally and returns gradient updates to a trusted execution server, allowing them to collectively flag mule networks (criminal groups orchestrating money laundering activities).

The Luxembourg-based bank Banking Circle built an internal federated learning system dubbed FLAME. It allows EU and US business units to collaborate within a single anti-money-laundering model.

Technical considerations for AI adoption in fraud detection

Generative AI has opened new frontiers in real-time fraud detection, but adoption carries inherent risks. Leaders in high-stakes banking environments must weigh performance gains against regulatory exposure, model explainability, and operational readiness.

How can organizations maximize AI-powered fraud prevention impact without jeopardizing compliance or customer trust?

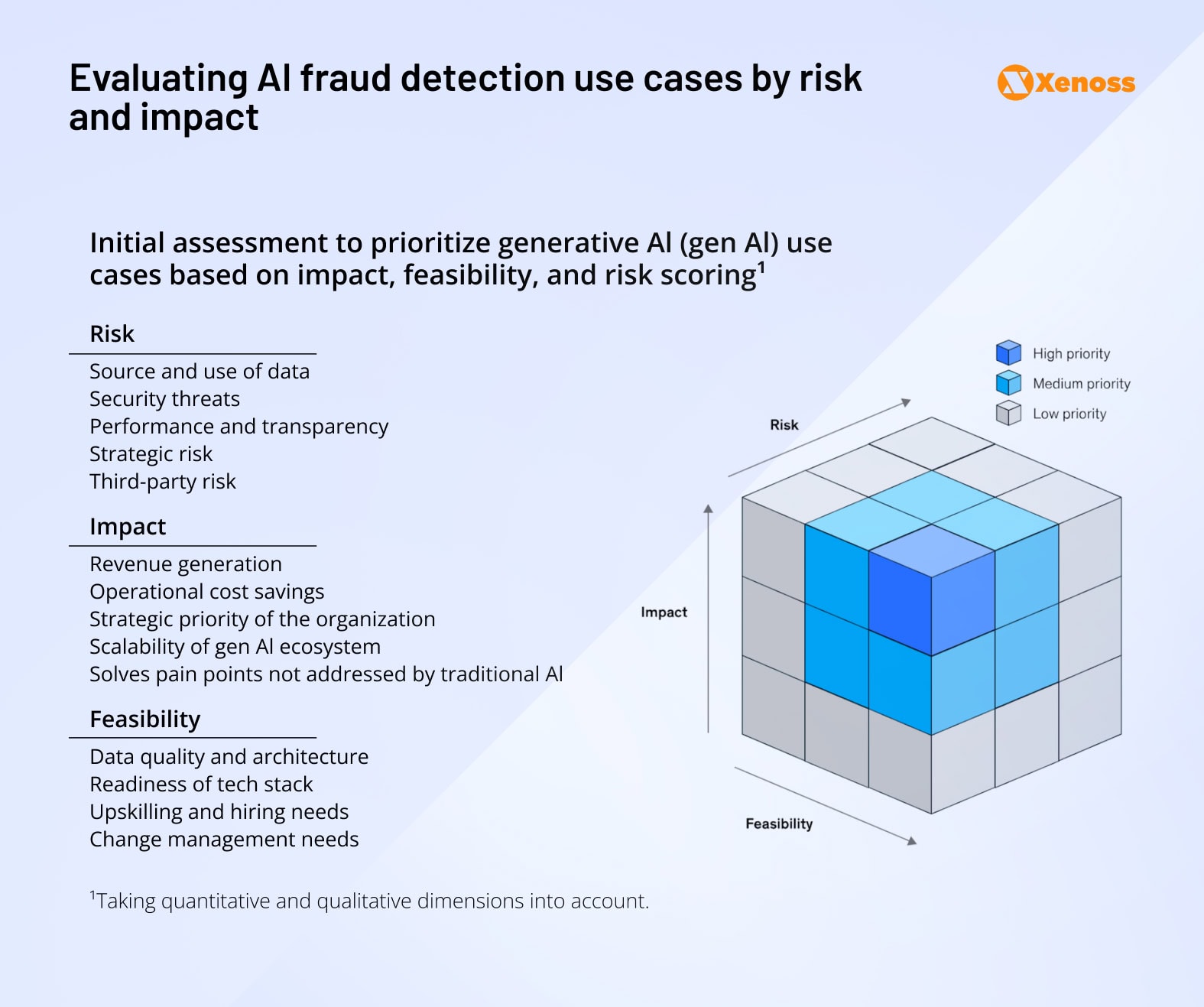

McKinsey offers a practical three-layer model for evaluating machine learning use cases in financial crime detection, serving as both a risk assessment framework and an MLOps strategy guide.

Layer 1: Risk and compliance alignment. Evaluate exposure to privacy violations, security breaches, and audit failures. Ensure that any AI-based fraud engine aligns with industry-specific regulations like AML, broader AI governance and privacy rules (e.g., EU AI Act, CCPA, GDPR), and internal policies on model explainability and human-in-the-loop oversight.

Layer 2: Business case and scalability. Measure the use case’s potential to improve the bottom line and save operational costs, readiness to scale across the organization, and superiority to non-AI ways of addressing the same concerns.

Layer 3: Technical readiness. Examine organizational readiness for introducing AI-based fraud detection: the state of data and the tech stack, as well as the availability of skilled engineering talent.

Applying this model to AI fraud detection use cases helps banks prioritize pilots and choose those with the highest impact-to-risk ratio.

Bottom line

While banks and attackers have an even playing field regarding AI advancements (both parties can use LLMs, deep learning, and multi-modal generation to detect and perpetrate fraud, respectively), finance leaders can gain the upper hand through cross-institutional collaboration.

Banks should develop strategies to collaborate with partners inside the industry (other institutions and FinTech companies) and outside it (AI tech vendors and talent sources) to stay one step ahead of fraudsters.

Increasing customer awareness of emerging fraud schemes through in-app push notifications or targeted email marketing campaigns will fortify the bank’s defenses and pressure attackers to look for new schemes. Training and upskilling the workforce to detect and prevent AI fraud will be another guardrail for reducing attack vulnerability.

Combining these strategies (new technologies, impactful collaborations within and beyond the industry, customer education, and workforce upskilling) helps ward off fraud threats and keep banks impenetrable to financial crime.