LLM frameworks are still pretty new to the AI stack, but they’ve made a big splash. LangChain kicked things off in late 2022, with LlamaIndex (originally GPT-Index) following around the same time, and LangGraph joining the party in 2024. The engineering community embraced them quickly.

Now we’re looking at a very dynamic and competitive landscape with no single best solution. Each framework has carved out its own niche, and picking the right one can feel overwhelming.

Working out which orchestrator best fits your use case would normally require your team to research each tool independently.

A high-level comparison can’t say with certainty which framework your engineers should settle on. Still, it helps to understand how the market leaders compare with one another and what their strengths and weaknesses are.

This article will review three widely used LLM frameworks: LangChain, LangGraph, and LlamaIndex, and determine which one is best suited for multi-agent systems (and which use cases others are designed for).

If you need a refresher on multi-agent systems, the basic components of an orchestrator, and scenarios where teams achieve better results with custom frameworks compared to off-the-shelf tools, check out our comprehensive guide to orchestrator frameworks.

This article assumes a basic understanding of LLM frameworks and that your engineering team has already ruled out a tailor-made solution in favor of an off-the-shelf tool.

Framework overview: LangChain, LangGraph, and LlamaIndex

LangChain

LangChain is a composable toolkit for building LLM applications that uses an open-source LangChain Expression Language (LCEL) to create complex workflows and ‘chains’.

The framework integrates with most major LLM providers, widely used back-end tools, and data sources.

Main components for building LangChain applications:

- Chains: Steps that run sequentially, in parallel, or branch based on conditions

- Tools: Schema-backed functions that bind to LLMs for API calls, code execution, and external system integration

- Prompts: Templates and structures that the orchestrator helps optimize and enrich
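The chain idea can be illustrated without any framework. The sketch below is plain Python, not LangChain’s API: the `Step` class, the `parallel` and `branch` helpers, and the `fake_llm` stand-in are all hypothetical names, and the `|` operator only mimics the piping style that LCEL uses.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


class Step:
    """Hypothetical composable unit: `a | b` feeds a's output into b."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __call__(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # Sequential composition: run self first, then other.
        return Step(lambda value: other(self(value)))


def parallel(**branches: Step) -> Step:
    """Run every branch on the same input and collect results by name."""
    def run(value: Any) -> dict:
        with ThreadPoolExecutor() as pool:
            futures = {name: pool.submit(step, value) for name, step in branches.items()}
            return {name: future.result() for name, future in futures.items()}
    return Step(run)


def branch(condition: Callable[[Any], bool], if_true: Step, if_false: Step) -> Step:
    """Pick one of two steps based on a condition on the input."""
    return Step(lambda value: if_true(value) if condition(value) else if_false(value))


# Stand-in for a model call so the example runs offline.
fake_llm = Step(lambda prompt: f"ANSWER({prompt})")
make_prompt = Step(lambda question: f"Q: {question}")
keep = Step(lambda text: text)

chain = (
    make_prompt
    | fake_llm
    | parallel(length=Step(len), text=keep)
    | branch(lambda out: out["length"] > 20, Step(lambda out: "long"), Step(lambda out: "short"))
)

result = chain("hi")
```

Each stage only sees the previous stage’s output, which is what makes linear pipelines easy to reason about and why loops and shared state call for a different model.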

Note: Agents used to be part of LangChain’s core library, but those abstractions have been deprecated, and agent development now lives in LangGraph.

LangGraph

LangGraph is a stateful framework for building multi-agent systems as graphs, created by the LangChain team and compatible with it.

Engineers model workflows using nodes (tools, functions, LLMs, subgraphs) and edges (loops, conditional routes) to create sophisticated agent interactions.
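To make the node-and-edge model concrete, here is a minimal, framework-free sketch of a graph with one fixed edge and one conditional edge that loops. `MiniGraph`, its method names, and the `draft`/`review` nodes are hypothetical and much simpler than LangGraph’s actual API; the point is only to show how a router function decides the next node from the current state.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"


class MiniGraph:
    """Hypothetical, stripped-down graph runner (not LangGraph's API)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, source: str, target: str) -> None:
        # A fixed edge is a router that always returns the same target.
        self.routers[source] = lambda state: target

    def add_conditional_edge(self, source: str, router: Callable[[State], str]) -> None:
        self.routers[source] = router

    def run(self, entry: str, state: State, max_steps: int = 20) -> State:
        current, steps = entry, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached; possible infinite loop")
            # Each node returns a partial update that is merged into the state.
            state = {**state, **self.nodes[current](state)}
            current = self.routers[current](state)
            steps += 1
        return state


def draft(state: State) -> State:
    attempt = state["attempts"] + 1
    return {"attempts": attempt, "text": f"draft v{attempt}"}


def review(state: State) -> State:
    # Stand-in for an LLM or human reviewer: approve the third attempt.
    return {"approved": state["attempts"] >= 3}


graph = MiniGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")
graph.add_conditional_edge("review", lambda s: END if s["approved"] else "draft")

final_state = graph.run("draft", {"attempts": 0})
```

The step limit is a small example of the kind of guard a graph runtime needs once cycles are allowed.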

Key capabilities for multi-agent systems:

- State management: Persistent checkpointing, ‘time-travel’ debugging, pause/resume controls

- Human oversight: Built-in human-in-the-loop integration with safe agent restarts

- Production controls: Guards, timeouts, concurrency management, and per-node reviews

LlamaIndex

LlamaIndex is a data-centric LLM framework specifically designed for advanced RAG and agentic apps that use organizations’ internal data.

It has a strong suite of ingestion capabilities, with dozens of out-of-the-box data connectors, PDF-to-HTML parsing, metadata, and chunking.

LlamaIndex’s Workflow module enables multi-agent system design and powers simple multi-step patterns.

To understand important differences between these frameworks, we will compare them across four dimensions:

- Ease of use

- Multi-agent support

- Observability, debugging, and evaluation

- State management

Ease of use

LLM frameworks strive to find the middle ground between flexibility and robustness. Those that succeed give developers the building blocks for the target use case (e.g., pre-built agents for multi-agent apps in LangGraph) along with enough room to customize these components.

Our evaluation focuses on API design, programming language support, documentation quality, and community resources. Here’s how the three frameworks compare for developer experience.

LangChain: 8/10

For linear, beginner-level projects, LangChain offers the smoothest developer experience. The framework handles common pain points through built-in async support, streaming capabilities, and parallelism without requiring additional boilerplate code.

LCEL’s native integrations with LangSmith and LangServe streamline the development-to-deployment pipeline, reducing glue code and manual optimization work.

LangChain’s tool calling is also one of the most straightforward out there. The framework uses a single .bind_tools() method to attach tools to chat models across providers and a simple @tool decorator for creating new tools.
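The idea behind a schema-backed tool can be sketched in plain Python. This is an illustration under stated assumptions, not LangChain’s implementation: the `tool` decorator and `ToolSpec` class below are hypothetical, the type mapping is deliberately tiny, and the model’s tool call is hard-coded so the example runs offline.

```python
import inspect
from dataclasses import dataclass
from typing import Any, Callable, get_type_hints

# Minimal mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


@dataclass
class ToolSpec:
    """Hypothetical container pairing a function with its schema."""
    name: str
    description: str
    parameters: dict
    fn: Callable[..., Any]

    def invoke(self, arguments: dict) -> Any:
        # Reject calls that omit required arguments before running the function.
        missing = [p for p in self.parameters["required"] if p not in arguments]
        if missing:
            raise ValueError(f"missing arguments: {missing}")
        return self.fn(**arguments)


def tool(fn: Callable[..., Any]) -> ToolSpec:
    """Build a ToolSpec from a function's signature and docstring."""
    hints = get_type_hints(fn)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    schema = {"type": "object", "properties": properties, "required": required}
    return ToolSpec(fn.__name__, inspect.getdoc(fn) or "", schema, fn)


@tool
def convert_temperature(celsius: float, round_result: bool = True) -> float:
    """Convert a temperature from Celsius to Fahrenheit."""
    fahrenheit = celsius * 9 / 5 + 32
    return round(fahrenheit, 1) if round_result else fahrenheit


# A model would return a call like this; here it is hard-coded.
model_tool_call = {"name": "convert_temperature", "arguments": {"celsius": 100.0}}
result = convert_temperature.invoke(model_tool_call["arguments"])
```

The schema is what gets sent to the model, which is why deriving it from the function signature removes a whole class of drift between documentation and code.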

The major developer friction: rapid change and deprecation cycles. New versions ship every 2-3 months with documented breaking changes and feature removals. Teams need to actively monitor the deprecation list to prevent codebase issues.

LangGraph: 7/10

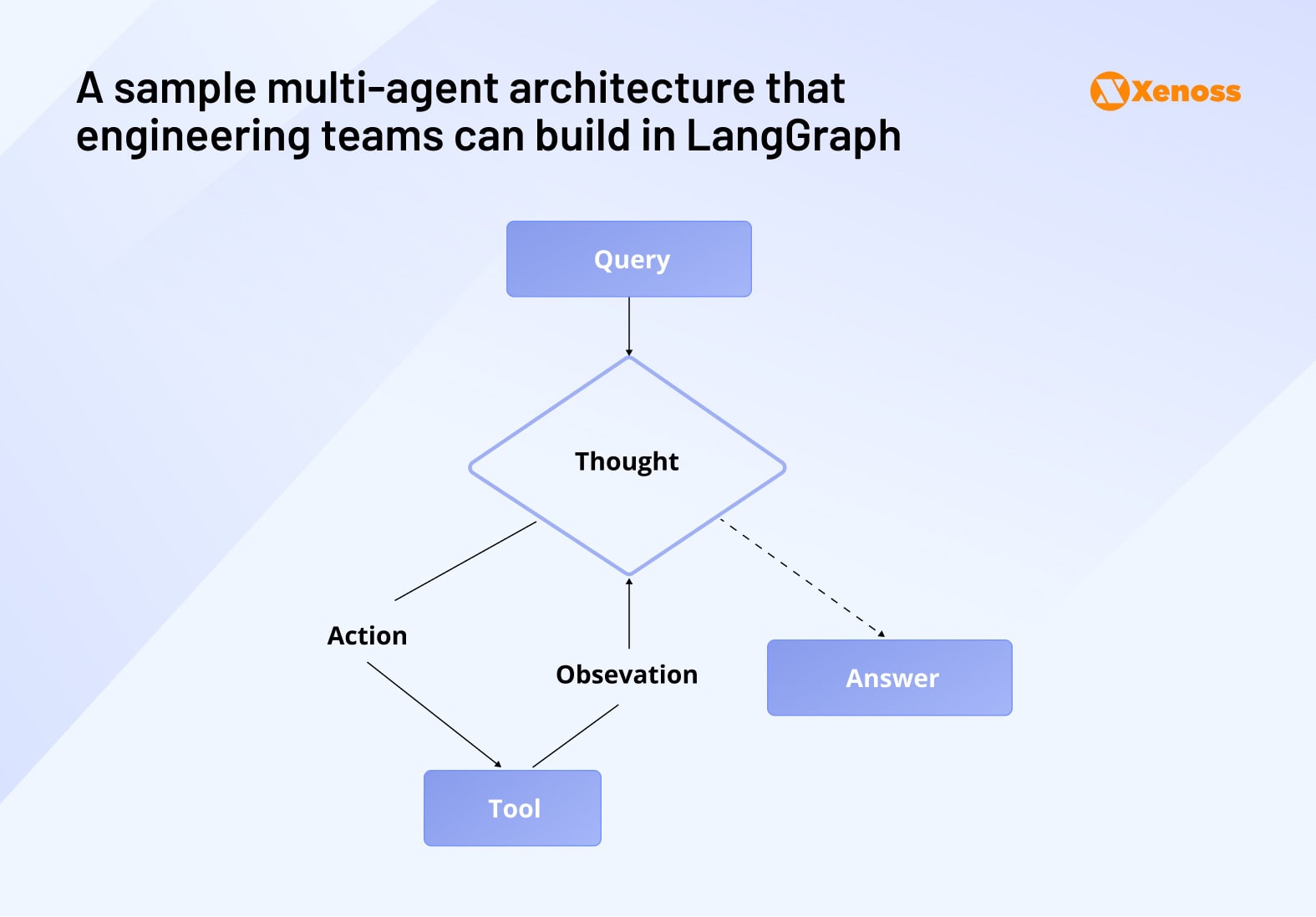

LangGraph’s stateful, multi-agent focus makes it inherently more complex than LangChain. However, building multi-agent systems in LangGraph is significantly easier than attempting to cobble them together in LangChain. Even LangChain’s documentation recommends LangGraph for agent workflows.

The framework provides all essential multi-agent building blocks: state management, persistent memory, time-travel debugging, and human-in-the-loop validation out of the box.

To get the hang of the framework, engineers can build agentic workflows with a pre-built ReAct agent and the ToolNode for tool calling and customize them to meet project-specific needs.

One minor inconvenience: most developers come to LangGraph after building in LangChain, and the switch from chains to graphs adds to the learning curve. That said, LangGraph’s community is excellent, and there’s no shortage of video tutorials, starter packs, and other community resources that help newcomers learn the basics quickly.

Technical constraint: Async functions in LangGraph’s Functional API require Python 3.11+, which may limit adoption in enterprise environments with older Python versions.

LlamaIndex: 6/10

Since LlamaIndex was designed with RAG-heavy workflows in mind, it has a best-in-class data ingestion toolset. The framework helps engineering teams clean and structure messy data before it hits the retriever, set up no-code pipelines in LlamaCloud, and sync them programmatically.

All of the above is a huge time-saver for RAG ops.

Another advantage for LlamaIndex is the support for multi-agent workflows and agentic apps in both Python and TypeScript.

Documentation is one of LlamaIndex’s weaker points, as it is for the other frameworks, but the product team is now creating step-by-step Agentic Document Workflows that are essentially tutorials and blueprints for engineering teams.

LlamaIndex’s 0.13.0 API created a bit of extra friction in the community. The new API deprecated several legacy agent classes (FunctionCallingAgent, the older ReActAgent implementation, AgentRunner), so engineering teams using the framework had to do extra refactoring.

Multi-agent support

When choosing a framework specifically for multi-agent systems, look for tools with pre-built agents and ready-to-deploy presets for common patterns. These help engineering teams deploy complex applications with minimal friction.

LangChain: 5/10

LangChain’s guidelines clearly state that it was created for ‘simple orchestration’ and openly recommend that teams ‘use LangGraph when the application requires complex state management, branching, cycles, or multiple agents.’

Therefore, for teams planning to build production-ready multi-agent workflows, LangGraph is a superior option.

LangChain’s role in multi-agent systems is limited to mapping out individual workflows for each agent or designing small multi-tool workflows and simple production chains.

LangGraph: 9/10

LangGraph is purpose-built for multi-agent orchestration. Its toolset for this use case is far superior to both LangChain and LlamaIndex.

Teams building multi-agent systems get comprehensive tooling:

- Persistence layer enabling agent recovery after failures or interruptions

- Advanced memory management across multiple agents and workflow steps

- Time-travel debugging for troubleshooting complex agent interactions

- Dual API approach: Graph API for full control vs. Functional API following standard Python patterns

- Pre-built components: ReAct agents, ToolNode for tool calling, and multi-agent coordination patterns
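The time-travel idea in the list above comes down to keeping a snapshot of the state after every step, then forking from an earlier snapshot with edited values. The sketch below shows that mechanism in plain Python; `run_from` and the three ticket-handling steps are hypothetical and are not LangGraph’s checkpointing API.

```python
import copy
from typing import Callable, List

Step = Callable[[dict], dict]


def run_from(steps: List[Step], state: dict, start: int = 0) -> List[dict]:
    """Run steps[start:] and return a snapshot of the state after each one."""
    snapshots = []
    for step in steps[start:]:
        state = step(copy.deepcopy(state))
        snapshots.append(copy.deepcopy(state))
    return snapshots


def classify(state: dict) -> dict:
    label = "billing" if "invoice" in state["ticket"] else "general"
    return {**state, "label": label}


def route(state: dict) -> dict:
    return {**state, "queue": f"{state['label']}-team"}


def reply(state: dict) -> dict:
    return {**state, "reply": f"Forwarded to {state['queue']}"}


steps = [classify, route, reply]
original = run_from(steps, {"ticket": "my invoice is wrong"})

# Time travel: take the snapshot after step 0, edit it, and replay the rest.
fork = {**original[0], "label": "legal"}
replayed = run_from(steps, fork, start=1)
```

Because snapshots are deep copies, the original run stays intact, so the two timelines can be compared side by side when debugging.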

LangGraph’s low-level approach gives teams tight control over schemas, reducers, and threads, but it also adds conceptual load to orchestration. For that reason, it is not the best choice for programmers new to LLM frameworks.

LlamaIndex: 7/10

Though not as robust as LangGraph, LlamaIndex is a reliable choice for multi-agent orchestration. Like LangGraph, it comes with pre-built agents (FunctionAgent, ReActAgent, CodeActAgent) that teams can combine into a coordinated system.

The framework also has a library of multi-document agent patterns. Engineering teams can use these as blueprints to reduce time-to-first-system.

Deploying agents into production is fairly straightforward with LlamaDeploy, an async-first framework designed for moving multi-service systems into production.

While Workflows supports pause-resume and human-in-the-loop patterns, teams needing fine-grained checkpointing, built-in replay, and sophisticated interrupt semantics will find LangGraph more capable.

LlamaIndex works best for document-heavy multi-agent applications where data processing and retrieval coordination are primary concerns.

Observability, debugging, and evaluation toolset

Before choosing a framework, check how robust its toolset is for instant feedback. Developers should be able to tell which tools agents are calling, how they are communicating with each other, and how many tokens the system consumes.
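As a rough picture of what such feedback looks like at the code level, the sketch below records which agent called which tool, how long the call took, and an approximate token count. Everything here is hypothetical: the `Tracer` and `Span` classes are not part of any framework, and counting whitespace-separated words is only a crude stand-in for real tokenization.

```python
import time
from dataclasses import dataclass, field
from functools import wraps
from typing import Callable, Dict, List


@dataclass
class Span:
    agent: str
    tool: str
    tokens: int
    seconds: float


@dataclass
class Tracer:
    """Hypothetical in-process tracer; real setups export spans to a backend."""
    spans: List[Span] = field(default_factory=list)

    def traced(self, agent: str) -> Callable:
        def decorator(fn: Callable[..., str]) -> Callable[..., str]:
            @wraps(fn)
            def wrapper(*args, **kwargs) -> str:
                start = time.perf_counter()
                output = fn(*args, **kwargs)
                # Crude token estimate: whitespace-separated words in the output.
                tokens = len(output.split())
                elapsed = time.perf_counter() - start
                self.spans.append(Span(agent, fn.__name__, tokens, elapsed))
                return output
            return wrapper
        return decorator

    def tokens_by_agent(self) -> Dict[str, int]:
        totals: Dict[str, int] = {}
        for span in self.spans:
            totals[span.agent] = totals.get(span.agent, 0) + span.tokens
        return totals


tracer = Tracer()


@tracer.traced(agent="researcher")
def search(query: str) -> str:
    return f"three results for {query}"


@tracer.traced(agent="writer")
def summarize(text: str) -> str:
    return "short summary"


summarize(search("llm frameworks"))
usage = tracer.tokens_by_agent()
```

Hosted tracing products add storage, dashboards, and alerting on top of this kind of span data, which is where the frameworks below differ most.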

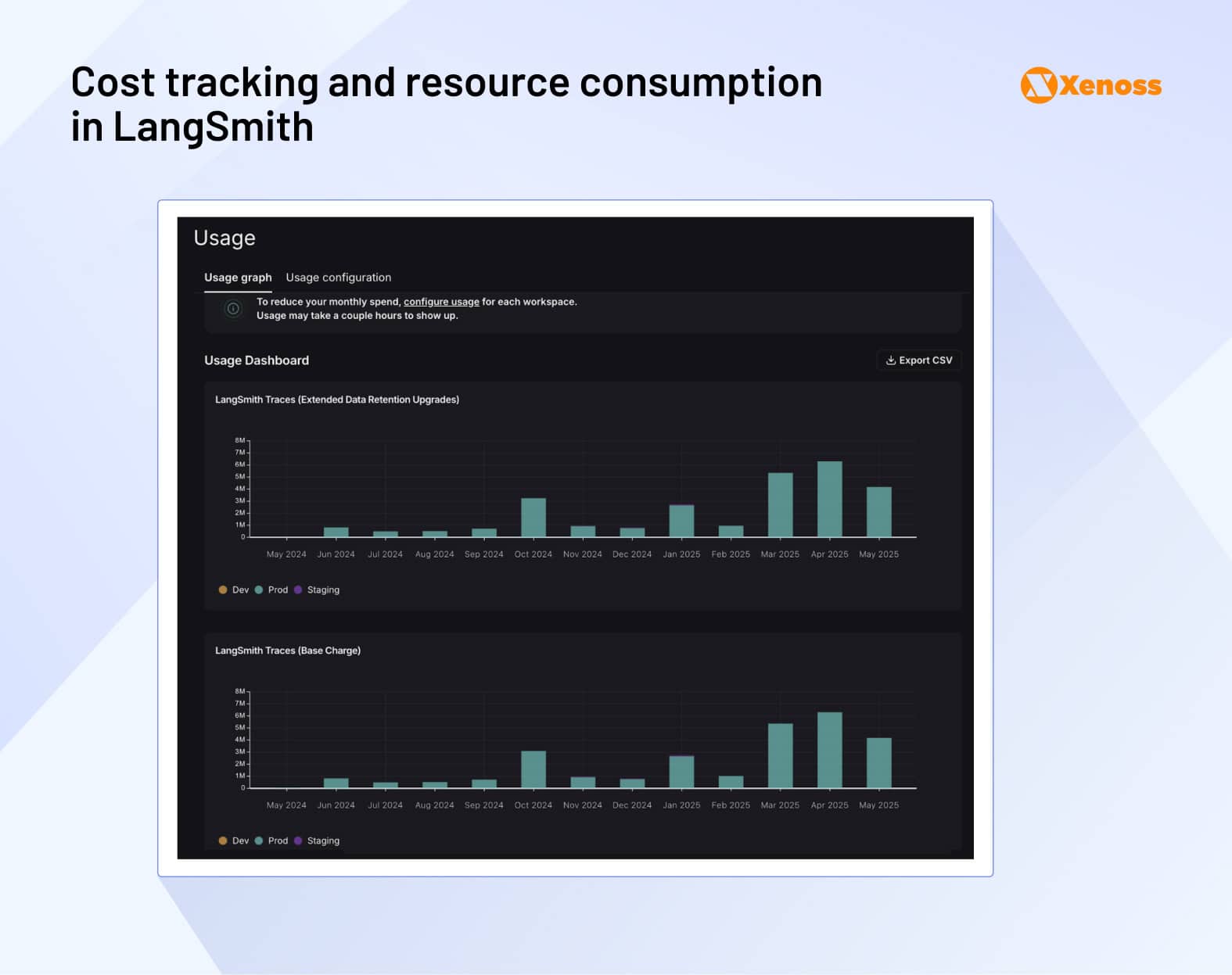

LangChain: 9/10

LangChain is seamlessly integrated with LangSmith, the tool for tracing and observability built by the same team.

LangChain allows teams to set up observability for prototyping, beta-testing, and production.

Recent updates enable sophisticated monitoring capabilities:

- Direct evaluator execution within the LangSmith interface

- Real-time alerts for latency spikes and production failures

- OpenTelemetry integration for full-stack tracing

- Multi-modal token consumption and caching monitoring for cost control

LangSmith is available as a hosted service or can be self-hosted, which is helpful for enterprise use cases with strict data residency requirements.

It’s worth pointing out that LangSmith itself is not immune to security vulnerabilities. In June 2025, a now-fixed LangSmith issue could expose API keys via malicious agents. Although the product team has fixed this issue, teams should consider mitigating such risks via self-hosting and tighter key controls.

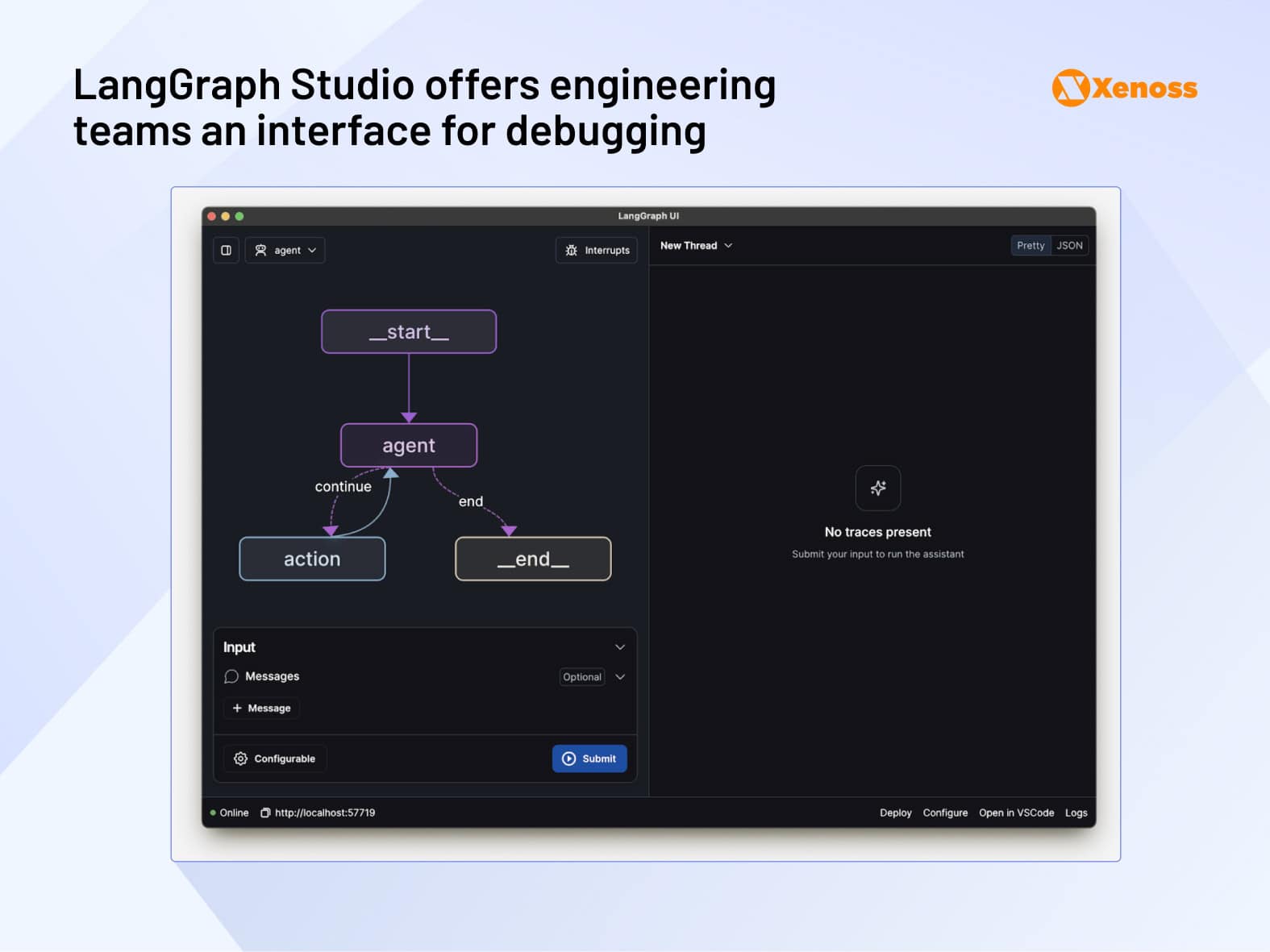

LangGraph: 9/10

LangGraph provides purpose-built debugging through Studio and Platform environments. LangGraph Studio offers advanced debugging capabilities, including time-travel debugging, visual graph inspection, and comprehensive state/thread management.

Studio v2 (released May 2025) enhances the debugging experience with LangSmith integration, in-place configuration editing, and tools for downloading production traces to run locally.

LangGraph makes it easy to reinforce HITL practices for agent reviews, thanks to interrupts paired with a persistence model that lets agents restart without friction after they go past a human-in-the-loop checkpoint.
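The interplay between interrupts and persistence can be sketched as follows. This is a simplified illustration, not LangGraph’s interrupt API: the `Interrupt` exception, the `checkpoints` dictionary, and the refund workflow are hypothetical, and checkpoints are stored as JSON strings only to mimic what a database-backed store would hold.

```python
import json
from typing import Callable, Dict, List, Optional


class Interrupt(Exception):
    """Raised by a step that needs a human decision before continuing."""

    def __init__(self, question: str) -> None:
        super().__init__(question)
        self.question = question


# Hypothetical in-memory checkpoint store keyed by thread id.
checkpoints: Dict[str, str] = {}


def plan(state: dict) -> dict:
    return {**state, "plan": f"refund order {state['order_id']}"}


def approval_gate(state: dict) -> dict:
    # Pause until a human reply has been attached to the state.
    if "human_reply" not in state:
        raise Interrupt(f"Approve '{state['plan']}'?")
    return {**state, "approved": state["human_reply"] == "yes"}


def execute(state: dict) -> dict:
    status = "refunded" if state["approved"] else "cancelled"
    return {**state, "status": status}


STEPS: List[Callable[[dict], dict]] = [plan, approval_gate, execute]


def run(thread_id: str, state: Optional[dict] = None,
        human_reply: Optional[str] = None) -> dict:
    """Start a thread, or resume it from its last checkpoint."""
    if thread_id in checkpoints:
        saved = json.loads(checkpoints[thread_id])
        index, state = saved["next_step"], saved["state"]
    else:
        index, state = 0, dict(state or {})
    if human_reply is not None:
        state["human_reply"] = human_reply
    while index < len(STEPS):
        try:
            state = STEPS[index](state)
        except Interrupt as pause:
            # Persist progress so the thread can restart at this exact step.
            checkpoints[thread_id] = json.dumps({"next_step": index, "state": state})
            return {"status": "waiting_for_human", "question": pause.question}
        index += 1
    checkpoints.pop(thread_id, None)
    return state


first = run("thread-1", {"order_id": 42})
second = run("thread-1", human_reply="yes")
```

The first call stops at the approval gate and persists its progress; the second call reloads the checkpoint and continues from the gate rather than re-running the planning step.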

The caveat for these debugging capabilities is that most of them live on the LangGraph Server, not in the open-source library. Enterprise teams work around this by self-hosting the server, which also limits vendor lock-in, while smaller projects typically use Platform instead.

LlamaIndex: 7/10

Unlike LangChain ecosystem products, LlamaIndex does not have a LangSmith-like one-stop shop for evaluation. To have a unified view of datasets, costs, and alerts, teams have to pair the framework with third-party tools like Arize, WhyLabs, TruEra, or EvidentlyAI.

HITL reviews in LlamaIndex rely on application-level wiring or third-party observability tools for review interfaces and alerts rather than a first-party Studio/Platform experience. To address these out-of-the-box observability shortcomings, LlamaIndex has built-in integrations with Langfuse and OpenTelemetry.

Prometheus metrics are baked directly into the server to monitor the performance of multi-service systems.

On top of that, LlamaIndex features in-framework debugging, on-demand graph visualization, and event streaming.

For evals, LlamaIndex offers LLM-based evaluators and datasets, as well as integrations with third-party platforms, including Cleanlab, Ragas, and DeepEval.

State management

The ability to pause tasks and restart them, maintain context across all workflow steps, and scale agent resources on demand are all part of state management. Ideally, you want to build a multi-agent system with a framework that accommodates all of the above.

Here’s how each framework approaches state management for multi-agent applications.

LangChain: 6/10

LangChain’s state management capabilities are quite rudimentary since most tools for complex management are now part of LangGraph. As such, core LangChain has no thread timelines or ‘time-travel’ debugging and offers only limited memory implementations.

LangGraph is a terrible state machine, though, if you have any kind of complicated logic that requires persistence, subgraphs, and humans-in-the-loop interactions.

A r/langchain user on LangChain’s state management capabilities

Available capabilities focus on simple session management: Developers can wrap chains with RunnableWithMessageHistory and connect to storage backends for basic persistence.
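The pattern behind this kind of session management is small enough to sketch in plain Python. The code below is not RunnableWithMessageHistory itself: `with_history`, the `store` dictionary, and `echo_model` are hypothetical stand-ins that show how a wrapper can load a session’s messages, call the chain, and write the new turn back.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)

# Hypothetical storage backend: session id -> ordered message list.
store: Dict[str, List[Message]] = defaultdict(list)


def with_history(chain: Callable[[List[Message]], str]) -> Callable[[str, str], str]:
    """Wrap a chain so each call sees, and extends, its session's history."""
    def invoke(session_id: str, user_input: str) -> str:
        history = store[session_id]
        history.append(("user", user_input))
        # Pass a copy so the chain cannot mutate the stored history.
        reply = chain(list(history))
        history.append(("assistant", reply))
        return reply
    return invoke


def echo_model(messages: List[Message]) -> str:
    """Stand-in for a model call: reports how many user turns it has seen."""
    user_turns = [content for role, content in messages if role == "user"]
    return f"turn {len(user_turns)}: {user_turns[-1]}"


chat = with_history(echo_model)
chat("alice", "hello")
second_reply = chat("alice", "what did I say?")
other_reply = chat("bob", "hi")
```

Swapping the dictionary for a database-backed store changes where history lives but not the shape of the wrapper, which is why this approach works well for chat context and little else.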

For simpler states like chat context or key-value data, a common strategy is to skip a separate orchestration environment altogether and keep the state in memory or in a memory store.

LangGraph: 9/10

In LangGraph, on the other hand, state is a fundamental building block for agentic systems; therefore, the state management toolset is among the best on the market.

Advanced state capabilities include:

- Complex, typed schemas supporting arbitrary data structures and relationships

- Comprehensive persistence via LangGraph Server storing checkpoints, memories, thread metadata, and assistant configurations

- Flexible storage options supporting local disk or third-party backends based on deployment needs

The most impressive part is that state management keeps evolving with new versions of LangGraph. One of the major Context API updates in v0.6 was type-safe context injection.

Complexity may be the only significant hurdle to mastering state management in LangGraph.

For each state, engineers have to define a schema, reducers, and checkpoints, which is a more advanced configuration compared to LangChain’s simple ‘on-chain’ orchestration.
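To show what a schema with reducers involves, here is a small stdlib-only sketch. The annotation style loosely follows the `Annotated[type, reducer]` convention, but `AgentState` and `apply_update` are hypothetical and far simpler than what LangGraph does internally: keys with a reducer are combined with the existing value, and keys without one are overwritten.

```python
import operator
from typing import Annotated, TypedDict, get_type_hints


class AgentState(TypedDict):
    # Annotated metadata names the reducer used to merge updates into a key.
    messages: Annotated[list, operator.add]   # append new messages
    tool_calls: Annotated[int, operator.add]  # accumulate a counter
    summary: str                              # no reducer: last write wins


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state, key by key."""
    hints = get_type_hints(AgentState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        if key not in hints:
            raise KeyError(f"'{key}' is not part of the state schema")
        reducers = getattr(hints[key], "__metadata__", ())
        if reducers and key in merged:
            merged[key] = reducers[0](merged[key], value)
        else:
            merged[key] = value
    return merged


state = {"messages": ["user: hi"], "tool_calls": 0, "summary": ""}
state = apply_update(state, {"messages": ["agent: hello"], "tool_calls": 1})
state = apply_update(state, {"messages": ["agent: done"], "summary": "greeting"})
```

Reducers matter most when several nodes write to the same key, since they define how concurrent updates combine instead of silently overwriting each other.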

LlamaIndex: 7/10

LlamaIndex’s Workflow module supports engineers with a powerful state management toolset. Developers can manage context and share data between the steps of agentic workflows, keep it stable across runs, and restore it if it is lost.

The framework supports both structured (Pydantic-like) and unstructured (dictionary-like) approaches to state management.

Unlike LangGraph, which is stateful by design, LlamaIndex workflows are stateless by default. State is explicit via the provided Context store rather than implied by a global graph state.
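The difference is easier to see in code. In the sketch below, steps hold no state of their own and read or write shared data only through a context object that is passed in explicitly. The `Context` class here is a hypothetical stand-in written for this example, not LlamaIndex’s own Context class, and the two steps are placeholders for retrieval and generation.

```python
import asyncio
from typing import Any, Dict, Tuple


class Context:
    """Hypothetical async key-value store shared between workflow steps."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}
        self._lock = asyncio.Lock()

    async def get(self, key: str, default: Any = None) -> Any:
        async with self._lock:
            return self._data.get(key, default)

    async def set(self, key: str, value: Any) -> None:
        async with self._lock:
            self._data[key] = value

    def to_dict(self) -> Dict[str, Any]:
        # Snapshot that could be serialized and restored in a later run.
        return dict(self._data)


async def retrieve(ctx: Context, query: str) -> str:
    # Steps receive the context explicitly; nothing is stored on the step itself.
    docs = [f"doc about {query}"]
    await ctx.set("docs", docs)
    return query


async def answer(ctx: Context, query: str) -> str:
    docs = await ctx.get("docs", [])
    return f"{query}: answered from {len(docs)} document(s)"


async def run_workflow(query: str) -> Tuple[str, Dict[str, Any]]:
    ctx = Context()  # created per run, so separate runs share nothing by default
    result = await answer(ctx, await retrieve(ctx, query))
    return result, ctx.to_dict()


result, snapshot = asyncio.run(run_workflow("invoices"))
```

Keeping state opt-in keeps simple workflows light, at the cost of having to decide explicitly what is persisted and when.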

Similarly, the framework views checkpointing as a development accelerator rather than a prescriptive production runtime with HITL reviews and ‘time-travel’ semantics built into the engine.

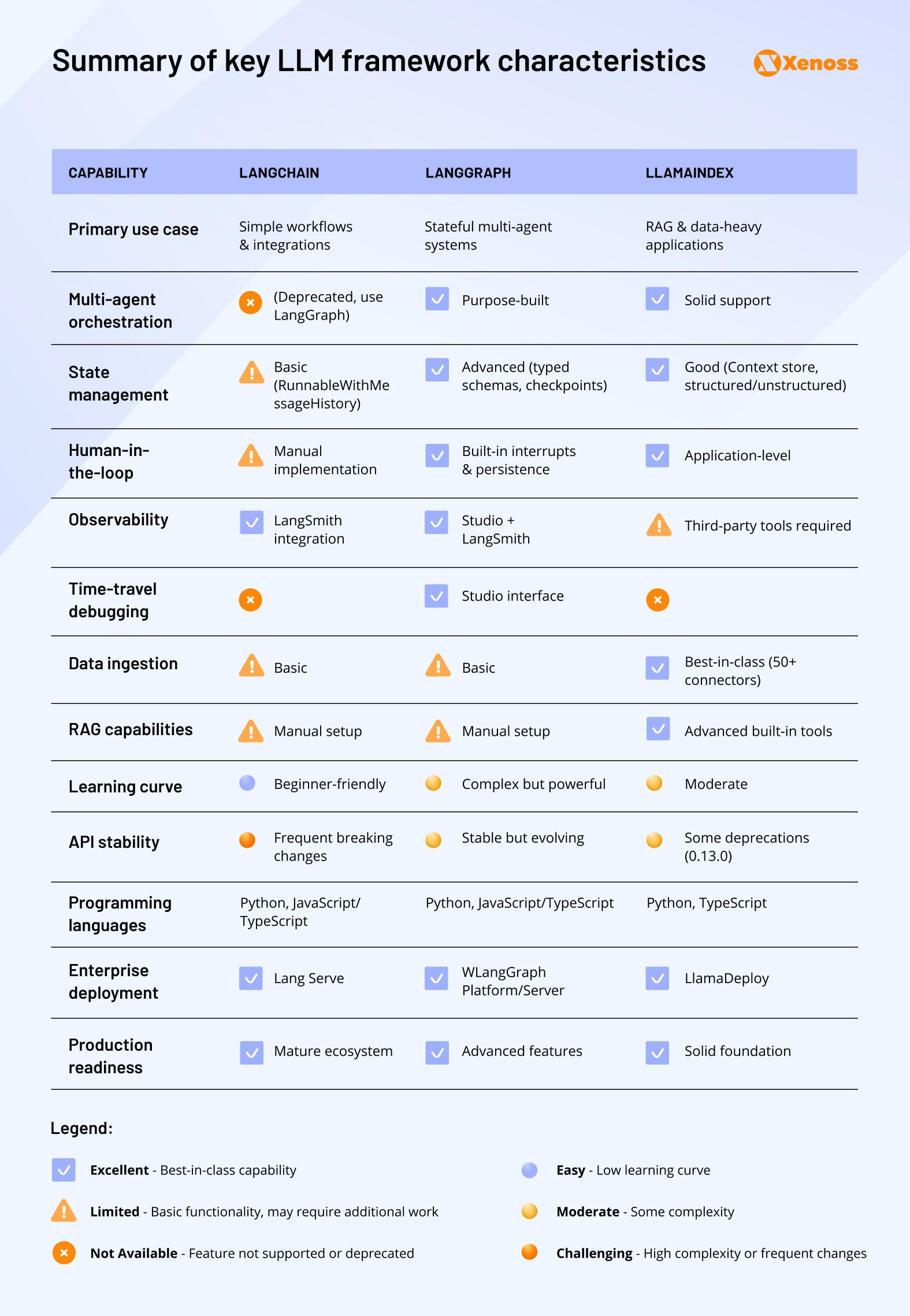

Here is the summary of the high-level comparison of leading LLM frameworks across critical dimensions for building and managing multi-agent applications.

LangChain vs LangGraph vs LlamaIndex: Full-feature comparison

In April 2025, Harrison Chase, the founder of LangChain and LangGraph, published a feature-by-feature breakdown for top LLM frameworks.

He examined how flexible orchestration was in each framework, whether it was declarative, and which notable low-level features each framework came with, aside from agent abstraction.

Note that, since LangChain is not an agent orchestrator by default, Chase did not include it in the spreadsheet. Also, since April, all three frameworks have released major updates, so we saw fit to review and update this table, sticking with the author’s original criteria.

Here is the updated feature-by-feature comparison for LangChain, LangGraph, and LlamaIndex as of August 2025.

Bottom line: When to use LangChain, LangGraph, and LlamaIndex?

Based on our assessment, LangGraph delivers the most comprehensive toolset for building complex multi-agent systems. The framework provides stateful abstractions with time-travel debugging, human-in-the-loop interrupts, and robust fault tolerance capabilities.

LangGraph’s integration with LangSmith creates a powerful observability layer, enabling teams to track agent performance, resource consumption, and system behavior across complex workflows. This combination makes LangGraph the strongest choice for production multi-agent applications.

However, LangChain and LlamaIndex excel in specific scenarios where their focused capabilities outweigh LangGraph’s complexity.

Choose LangChain when speed and simplicity matter. As the most straightforward framework in this comparison, LangChain enables rapid prototyping and quick wins for teams building linear workflows or simple agent interactions. Its extensive integration ecosystem and beginner-friendly API make it ideal for teams new to LLM frameworks or projects with tight development timelines.

Choose LlamaIndex for data-intensive applications. The framework excels at building expert “knowledge workers”: agents that process PDFs, query SQL databases, and analyze BI data with a solid grasp of the underlying content. While teams need third-party tools for advanced observability and state management, LlamaIndex’s data processing capabilities are unmatched for document-heavy workflows.

The decision ultimately depends on your project’s complexity, team expertise, and specific requirements. Start simple with LangChain for prototypes, graduate to LangGraph for complex production systems, or choose LlamaIndex when data processing dominates your use case.