Executives are increasingly driving AI initiatives, with 81% now leading adoption compared to 53% last year. However, their enthusiasm faces practical challenges, as 44% of companies identify infrastructure limitations as their primary obstacle. LLM self-hosting can be alluring, but only until a business has to plough large sums into expensive on-premises infrastructure.

To reduce infrastructure maintenance costs, optimize resources, and run efficient AI workflows, nearly 74% of organizations opt for managed cloud services.

Major cloud providers, such as AWS, Microsoft, and Google, offer cloud-based generative AI platforms: Amazon Bedrock, Azure AI, and Google Vertex AI. They provide end-to-end AI management services, from proprietary data integration to model deployment.

In this comparison, we’ll examine how each platform approaches enterprise LLM hosting. We’ll explore their architectural differences and cost structures, evaluate their governance capabilities, and provide a decision-making framework for selecting the platform best aligned with your business requirements.

The enterprise AI inflection point: Why 2025 changes everything

Enterprise AI deployment is approaching a critical milestone in 2025 as organizations accelerate from AI exploration to production. Production AI use cases have doubled to 31% compared to 2024, reflecting a fundamental shift in how businesses approach AI infrastructure decisions.

Business leaders began to see tangible financial results from early adopters. Competitors were cutting costs, improving decision speed, and launching entirely new products. And enterprises, as well as startups, moved to production because the risk of not using AI finally became greater than the risk of using it.

Startups report that 74% of their compute workloads are now inference-based, up from 48% last year. Large enterprises follow a similar pattern, reaching 49%, up from 29%. This transition has driven substantial investment growth, with model API spending more than doubling from $3.5 billion to $8.4 billion annually.

As companies move their AI projects out of the lab and into daily operations, they now have to decide which cloud-based platform can handle the workload.

Amazon Bedrock: Multi-vendor model marketplace via a single API

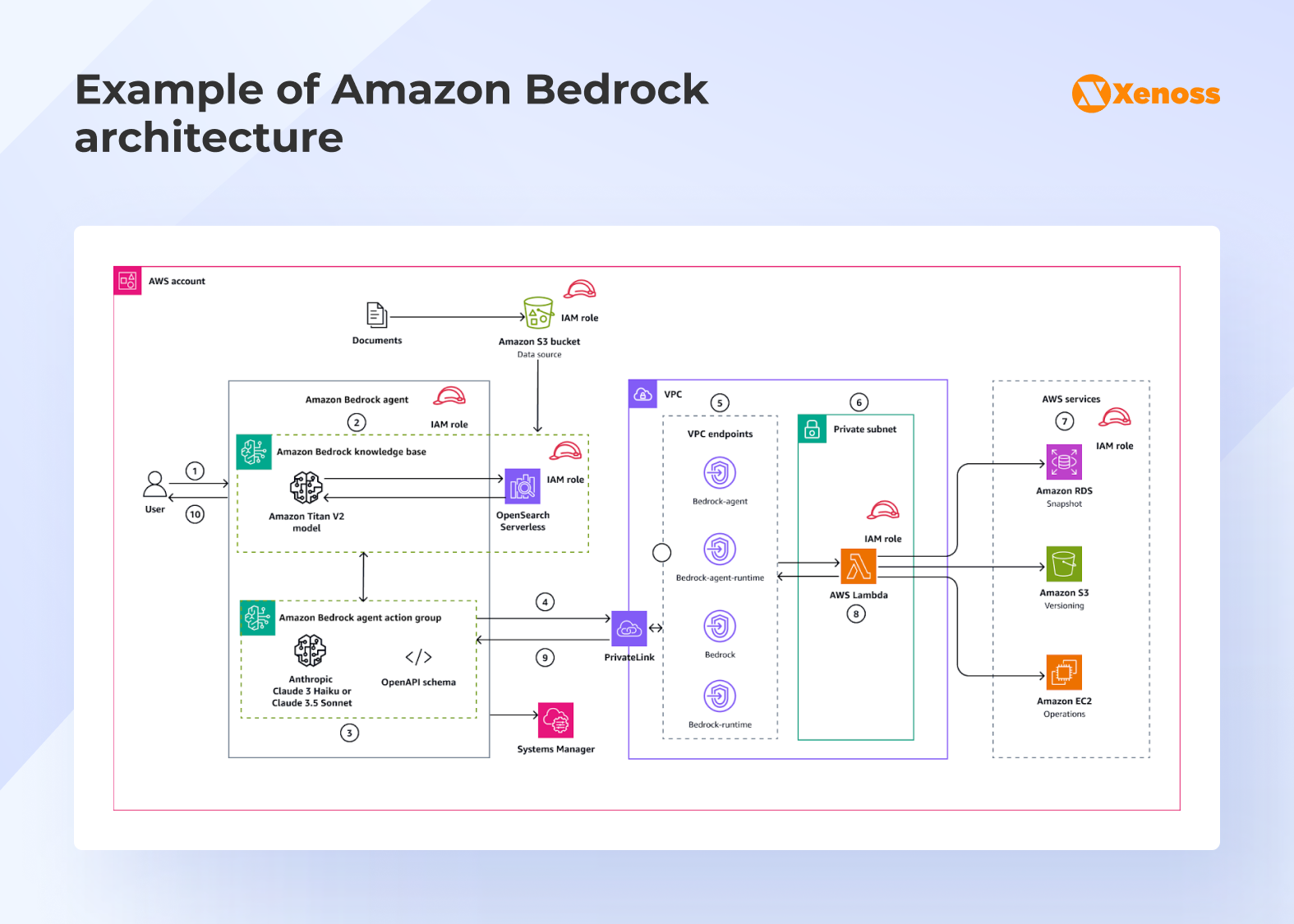

Amazon Bedrock is a fully managed service offering a choice of over 100 high-performing foundation models (FMs) from leading AI companies, such as Anthropic Claude, AI21 Labs, Cohere, Meta Llama, Stability AI, and Amazon Titan.

Amazon Bedrock provides access to every model through a single API. Businesses that want to use multiple models don’t need to adapt their infrastructure to each provider’s API; an AWS account is enough.
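To make the single-API idea concrete, here is a minimal sketch of how one request shape can target different vendors' models through the Bedrock Runtime Converse operation. The helper name `build_converse_request`, the prompt, and the two model IDs are illustrative assumptions; model availability depends on your account and region. The block only builds the payloads, so it runs without AWS credentials.

```python
from typing import Any


def build_converse_request(model_id: str, prompt: str, max_tokens: int = 256) -> dict[str, Any]:
    """Build keyword arguments for the Bedrock Runtime Converse operation.

    The request shape is identical for every model; only modelId changes.
    """
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


# Illustrative model IDs (assumption): verify which ones are enabled for you.
MODEL_IDS = [
    "anthropic.claude-3-haiku-20240307-v1:0",
    "meta.llama3-8b-instruct-v1:0",
]

payloads = [
    build_converse_request(model_id, "Summarize our refund policy in two sentences.")
    for model_id in MODEL_IDS
]

# With credentials configured, each payload could be sent the same way:
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   response = client.converse(**payloads[0])
#   print(response["output"]["message"]["content"][0]["text"])
```

Swapping models then becomes a configuration change rather than a new integration.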

Another advantage of Bedrock lies in its seamless integration with the broader AWS ecosystem. It natively connects to Lambda for event-driven automation, S3 for data storage, and CloudWatch for monitoring and observability.

Latest enterprise-grade capabilities

Bedrock’s service offering for enterprises revolves around performance, cost efficiency, and security. With features like Bedrock Guardrails (now available for text and image outputs) and Automated Reasoning Checks, businesses can validate the content that FMs produce and block up to 88% of harmful outputs and hallucinations.

Amazon Bedrock also features Intelligent Prompt Routing, which automatically reroutes prompts between models to enhance performance and reduce costs. For instance, Bedrock can switch requests between Claude 3.5 Sonnet and Claude 3 Haiku to ensure a better output.
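The routing idea can be illustrated with a toy heuristic. This is not how Bedrock decides internally (the service handles routing for you); the function `route_prompt`, the cue list, the word threshold, and the short model labels are all hypothetical and only show the cost/quality trade-off a router makes.

```python
SMALL_MODEL = "claude-3-haiku"      # cheaper, faster tier (illustrative label)
LARGE_MODEL = "claude-3-5-sonnet"   # more capable, pricier tier (illustrative label)

# Hypothetical phrases that hint at a reasoning-heavy request.
REASONING_CUES = ("explain why", "step by step", "compare", "analyze")


def route_prompt(prompt: str, word_threshold: int = 40) -> str:
    """Pick a model tier from a crude complexity heuristic."""
    text = prompt.lower()
    is_long = len(text.split()) > word_threshold
    needs_reasoning = any(cue in text for cue in REASONING_CUES)
    return LARGE_MODEL if (is_long or needs_reasoning) else SMALL_MODEL


print(route_prompt("What is our return window?"))
print(route_prompt("Compare the two contracts and explain why clause 4 differs."))
```

Short factual questions go to the smaller model, while longer or reasoning-heavy prompts go to the larger one.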

In October 2025, Bedrock officially launched their full-scale agent builder, AgentCore. With its help, companies can build enterprise-grade agent systems that feature robust access management, observability capabilities, and security controls. For streamlined agent development, Bedrock provides a Model Context Protocol (MCP) server that integrates with tools such as Kiro and Cursor AI.

Here’s a customer review from G2, which highlights Bedrock’s benefits for building agents:

As a developer of AI agents, Bedrock can provide:

– Rapid prototyping: test concepts in hours rather than months.

– Neighborhood access to basic models without specific infrastructure.

– Scalability: AWS can handle the demand regardless of the number of users, 10 or 10,000.

– Automatic connectivity to other AWS services in memory management, orchestration, and data processing.

Concisely, Bedrock removes the emphasis on infrastructure management and puts you on the path of building successful agents.

Real-life implementation for DoorDash

DoorDash reduced the time required for generative AI application development by 50% by implementing Anthropic’s Claude model via Amazon Bedrock. The company needed to develop a contact center solution that enables generative AI for self-service in Amazon Connect. This solution had to unburden their contact center team.

DoorDash chose Amazon Bedrock because it needed a quick rollout at scale with access to high-performance models while maintaining a high level of security. Within eight weeks, DoorDash launched a solution ready for production A/B testing.

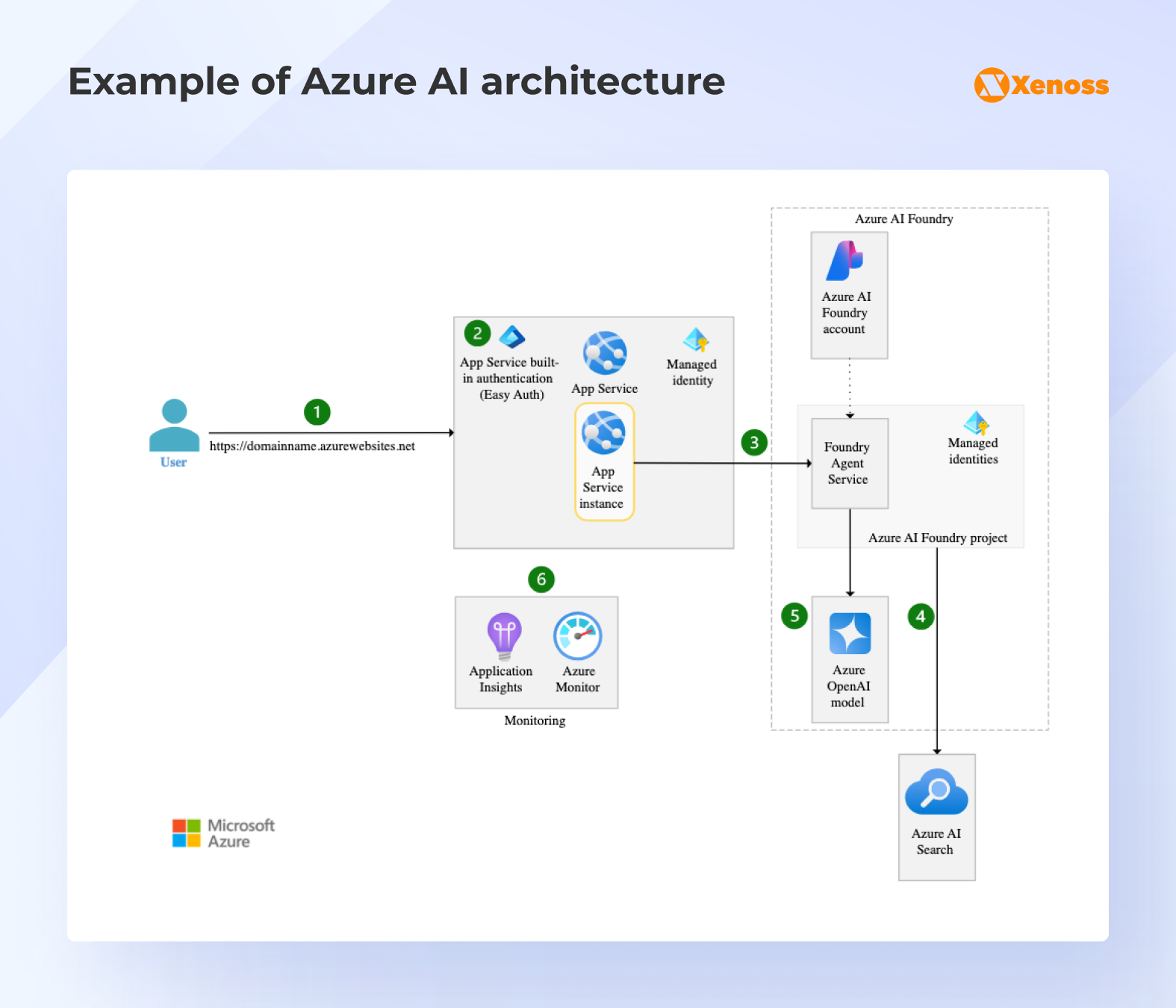

Azure AI: OpenAI exclusivity with Microsoft ecosystem integration

Azure AI is considered an AI developer’s hub for building AI solutions, offering the most flexibility with a variety of the best generative AI tools:

- Azure OpenAI, exclusive access to the most recent OpenAI models

- Azure AI Foundry, a generative AI development platform

- Azure AI Foundry Models with access to more than 1,700 models

- Azure AI Search for building centralized solutions that enable retrieval-augmented generation (RAG)

- Phi open models with access to diverse small language models (SLMs)

- Azure AI Content Safety for advanced AI guardrails to protect content in GenAI applications

Such a breadth of AI services enables enterprises to customize their generative AI solutions to different needs. Plus, Azure AI’s architecture prioritizes integration with Microsoft’s enterprise tooling, making it compelling for organizations heavily invested in Microsoft’s cloud environment.

Exclusive access to OpenAI (which is still the most widely used model provider, with 63% of companies using it), the availability of SLMs, and a wide range of other models via the Foundry Models service make Azure AI a strong choice for companies that value autonomy, flexibility, and deep integration with their existing Microsoft ecosystem.

Latest enterprise-grade capabilities

Unlike other cloud providers, which combine various AI features within a single AI development platform, Azure AI offers many standalone services. Within each service, however, it provides a specific set of capabilities that enable enterprises to develop production-ready generative AI solutions.

For instance, Azure AI Content Safety now includes Spotlighting to strengthen Prompt Shields, which is responsible for detecting and preventing prompt injections and other adversarial attacks in real time.

A Deep Research feature in Azure AI Foundry enables enterprises to build contextually rich agents and generative AI solutions that can be integrated with internal systems, powering enterprise-wide research capabilities. For example, users can trigger deep research with questions like: “What are the recent regulatory changes in EU data privacy, and how might they impact our business in Q4 2025?”.

Similar to Bedrock, in October 2025, Azure also launched the Microsoft Agent Framework, an open-source SDK for developing multi-agent systems via the MCP server and Agent2Agent (A2A) protocol.

Real-life implementation for Acentra Health

Acentra Health leveraged the Azure OpenAI service to develop MedScribe, a tool that responds to appeals for healthcare services and increases the productivity of nurses. With the help of this generative AI solution, the company saved almost 11,000 nursing hours and $800,000.

They opted for Microsoft due to its HIPAA compliance and native integration with Microsoft Power BI, which enables the visualization and tracking of MedScribe’s performance and quality.

Google Vertex AI: Open ecosystem with native data analytics integration

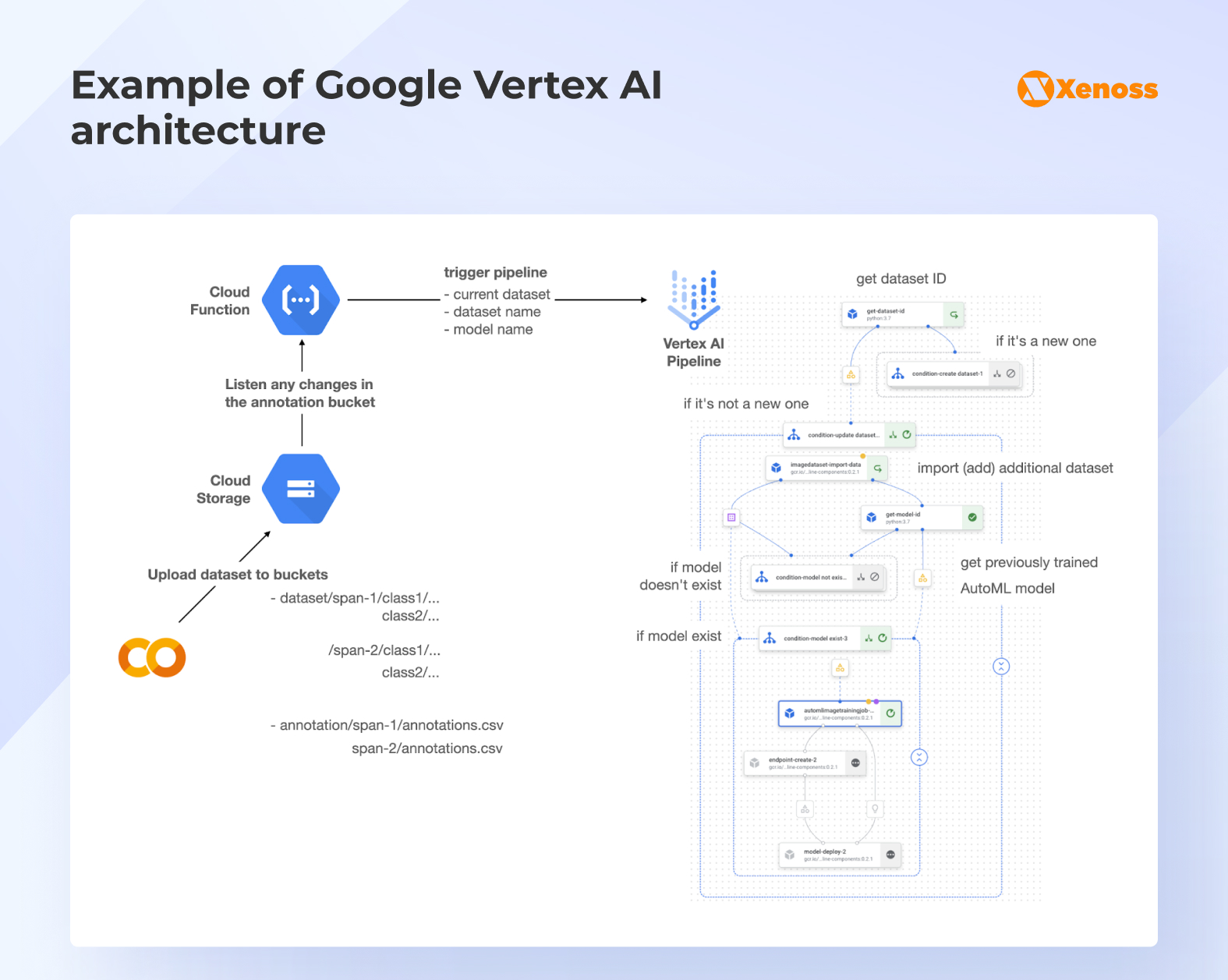

Google Vertex AI is a machine learning platform that offers various ML tools and services to simplify model training and deployment. Vertex AI’s popularity is primarily attributed to Google’s extensive adoption and recognition. In 2024, Forrester named Google a leader in AI foundation models for language, and Gartner named it a leader in cloud AI developer services.

Vertex AI architecture is built on powerful GPUs (NVIDIA Tesla) and Google’s custom-designed tensor processing units (TPUs) that accelerate large-scale AI workloads.

This ML platform offers access to a flourishing Model Garden, featuring over 200 pre-built foundation models, including Google’s renowned Gemini models. The Google team thoroughly curates this list to include only the best-in-class solutions from major AI providers, such as Anthropic, Meta, Mistral AI, and AI21 Labs.

The Vertex Managed Datasets feature enables companies to continuously update their models with new datasets and seamlessly deploy them via Vertex Pipelines. This helps keep models up-to-date and relevant for the business.

Latest enterprise-grade capabilities

The Vertex AI Search and Conversation modules now natively support RAG and customizable chat interfaces, allowing organizations to build domain-specific assistants grounded in their internal data.

For advanced automation, the Vertex AI Agent Builder enables enterprises to deploy reasoning agents at scale, powered by Gemini models and Google’s orchestration APIs. These agents can execute multi-step workflows, connect to business data, and collaborate across different applications, making Vertex AI suitable for operational intelligence use cases in sectors like finance, logistics, and manufacturing.

Security and compliance remain deeply embedded across the Google stack. Vertex AI incorporates VPC Service Controls (VPC-SC) to protect data boundaries, Customer-Managed Encryption Keys (CMEK) for encryption control, and Vertex AI Governance for granular access management, auditing, and model oversight, ensuring enterprises can innovate confidently within strict regulatory environments.

A customer’s review on G2 expands on the Vertex AI offering:

The seamless integration among services such as BigQuery, Vertex AI, and Cloud Functions makes this platform particularly well-suited for data-driven and AI-powered applications. It is very developer-friendly, offering excellent documentation and SDKs that make development smoother. GCP’s strong focus on innovation, especially in the areas of AI and machine learning, really distinguishes it from other cloud providers.

Real-life implementation for General Motors

General Motors (GM) collaborated with Google Cloud and used Google’s AI platform to integrate the conversational AI solution, Dialogflow, into their vehicles. GM is a trusted partner of Google, as their cars have included Google Assistant, Google Maps, and Google Play over the years.

This technology enables GM to process over 1 million customer queries per month in the US and Canada, helping to increase customer satisfaction by continuously answering questions such as “Tell me more about GM’s 2024 EV lineup.”

Here’s a comprehensive comparison table that recaps key differentiating features of each cloud-based generative AI platform.

| Feature | Amazon Bedrock | Azure AI | Google Vertex AI |
|---|---|---|---|
| Platform architecture | Multi-vendor marketplace with serverless execution | OpenAI exclusivity with Microsoft's ecosystem integration | Open ecosystem with native data analytics integration |
| Model access | 100+ foundation models through unified API | Exclusive access to OpenAI models and 1,700+ models available via Azure AI Foundry Models | 200+ foundation models (first-party, third-party, open-source) |
| Model coverage | Text, image | Text, image, audio | Text, image, audio, video |
| Security | - Guardrails that block harmful inputs and outputs - Private cloud environment - Data stays unused for model training | - Microsoft Defender integration - Azure AI Content Safety with Spotlighting feature - Live security posture monitoring | - Content moderation systems - Transparency features - Output explanation capabilities |
| Integration capabilities | - Knowledge Bases for RAG - Multi-LLM orchestration - Built-in testing | - Smooth Microsoft ecosystem integration - Azure AI Search for RAG - Semantic Kernel framework | - Multi-modal embeddings - Data analytics services integration - Agent Development Kit |
| Key strengths | - AWS ecosystem integration - Model diversity and flexibility - Model evaluation capabilities - Unified API access | - Microsoft's ecosystem integration - Exclusive OpenAI partnership - Enterprise-grade tools | - Strong data analytics integration - IP protection guarantees - Data sovereignty controls |

Cost structure: Pricing models, hidden expenses, and optimization strategies

Cloud services are more cost-efficient than LLM self-hosting, but deploying AI solutions in the cloud can also carry hidden costs, as you will often need to pay for extra services.

Multiple customer reviews on G2 about Bedrock and Vertex AI claim that they have complicated pricing systems, as using different services and features in gen AI platforms incurs different charges. And with a heavy AI workload, a monthly bill can be a big surprise.

To avoid such unexpected expenses, collaborate with hands-on AI engineers who have experience with all major cloud platforms and can help you alleviate the pricing complexity.

AWS Bedrock pricing

Amazon Bedrock employs a pay-as-you-go model based on input and output tokens, complemented by a Provisioned Throughput mode (charged by the hour and most suitable for large inference workloads) for customers who require consistent capacity. This makes it flexible for both experimentation and production workloads.

With Provisioned Throughput, companies can reserve dedicated throughput in exchange for predictable latency and stable costs, which is particularly useful for enterprises running customer-facing systems. For example, one throughput unit for a foundation model is billed at approximately $39.60 per hour, which translates to about $28,500 per month of continuous use ($39.60 × 24 hours × 30 days).
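The hourly-to-monthly conversion is simple arithmetic and worth checking before committing to reserved capacity. The sketch below assumes continuous use over a 30-day month at the $39.60 hourly rate quoted above; the function name and the `units` and `days` parameters are illustrative.

```python
HOURS_PER_DAY = 24


def monthly_provisioned_cost(hourly_rate: float, units: int = 1, days: int = 30) -> float:
    """Cost of running provisioned units around the clock for `days` days."""
    return round(hourly_rate * HOURS_PER_DAY * days * units, 2)


print(monthly_provisioned_cost(39.60))           # 28512.0
print(monthly_provisioned_cost(39.60, units=2))  # 57024.0
```

Because the charge accrues whether or not traffic arrives, this mode pays off only when utilization stays consistently high.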

Bedrock also offers Bedrock Flows billing, charging $0.035 per 1,000 workflow node transitions for orchestrated agent operations. With its help, enterprises can run multi-step AI workflows (linking different models, prompts, agents, and guardrails) and incur charges only when a step (or node) is executed (e.g., switch between models or agents). This feature opens customization capabilities and enables organizations to control costs.
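As a rough illustration of per-transition billing, the sketch below applies the $0.035 per 1,000 node transitions rate quoted above. The workload figures (50,000 runs per month, six nodes per run) are hypothetical.

```python
PRICE_PER_1K_TRANSITIONS = 0.035  # USD, rate quoted in the text above


def flows_cost(node_transitions: int) -> float:
    """Estimated charge for a given number of workflow node transitions."""
    return round(node_transitions / 1000 * PRICE_PER_1K_TRANSITIONS, 2)


# Hypothetical workload: 50,000 workflow runs per month, 6 nodes executed per run.
monthly_transitions = 50_000 * 6
print(flows_cost(monthly_transitions))  # 10.5
```

The orchestration charge itself is small in this example; the model invocations triggered inside each node are billed separately and usually dominate the total.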

However, the total cost of ownership of the cloud AI infrastructure often extends beyond token usage. Expenses for data storage (Amazon S3), monitoring (CloudWatch), API integrations (Lambda), and compliance management add up, particularly when applications scale globally.

To offset that, Bedrock’s Model Distillation and Prompt Routing features can cut inference costs by 30–75% in favorable cases, making it a cost-efficient option for long-term production workloads.

Azure AI and Azure OpenAI service costs

Azure’s pricing follows a token-based consumption model similar to OpenAI’s API, where enterprises pay separately for input and output tokens across GPT-4, GPT-4 Turbo, and other variants. Rates vary per model. For instance, GPT-4 with an 8K context costs $0.03 per 1,000 prompt tokens, half the $0.06 rate of GPT-4-32k with its 32K context, which allows for optimization through selective deployment.
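To see what selective deployment can mean in practice, the sketch below compares prompt-side cost for the two rates quoted above. Output-token rates are not given in the text, so they are left out; the dictionary keys, the function name, and the 5-million-token workload are assumptions for illustration.

```python
# Prompt (input) rates in USD per 1,000 tokens, as quoted in the text above.
PROMPT_RATE_PER_1K = {"gpt-4": 0.03, "gpt-4-32k": 0.06}


def prompt_cost(model: str, prompt_tokens: int) -> float:
    """Prompt-side cost only; completion tokens are billed at a separate rate."""
    return round(prompt_tokens / 1000 * PROMPT_RATE_PER_1K[model], 2)


monthly_prompt_tokens = 5_000_000  # hypothetical workload
for model in PROMPT_RATE_PER_1K:
    print(model, prompt_cost(model, monthly_prompt_tokens))
```

Routing only the requests that genuinely need the larger context window to the 32K variant keeps the rest of the traffic at the lower rate.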

Azure also offers Provisioned Throughput Units (PTUs) that provide guaranteed capacity and predictable latency for production-critical workloads. On top of that, the Reservations option lets companies pre-purchase a set of PTUs, which can reduce costs by up to 70% on predictable AI workloads.

Beyond token pricing, organizations should budget for Azure AI Search (formerly Azure Cognitive Search), Data Zones, Fabric storage, and AI Foundry orchestration, which contribute to total spend. Azure’s advantage lies in its enterprise licensing structure. Many organizations that are already consuming Microsoft 365 or Azure credits can apply those toward AI workloads.

Combined with caching, model routing, and built-in compliance tools, Azure’s cost profile benefits enterprises seeking predictability, governance, and integration within existing Microsoft infrastructure.

Google Vertex AI pricing

Vertex AI employs a character-based billing model, charging per 1,000 input and output characters, rather than tokens. It also includes separate compute charges for model deployment, fine-tuning, and prediction jobs.
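A character-based bill can be estimated directly from string lengths. In the sketch below the per-1,000-character rates are placeholders rather than published prices, and Python's `len` counts Unicode code points, which may not match exactly how the provider counts characters.

```python
def character_billing_cost(
    prompt: str,
    completion: str,
    input_rate_per_1k: float,
    output_rate_per_1k: float,
) -> float:
    """Estimate cost when input and output are billed per 1,000 characters."""
    input_cost = len(prompt) / 1000 * input_rate_per_1k
    output_cost = len(completion) / 1000 * output_rate_per_1k
    return input_cost + output_cost


# Placeholder rates (assumption): look up current prices for the model you use.
estimate = character_billing_cost(
    prompt="a" * 2000,
    completion="b" * 1000,
    input_rate_per_1k=0.0005,
    output_rate_per_1k=0.0015,
)
print(f"${estimate:.4f}")
```

Since character and token counts diverge by language and content type, comparing platforms fairly means running the same sample traffic through both formulas.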

Vertex AI’s Generative AI Studio and Model Garden allow companies to use Gemini, PaLM, or third-party models under a single billing umbrella.

A major advantage is that Google offers a $300 free trial and additional credits through enterprise onboarding, lowering entry barriers for testing.

Hidden costs often stem from data operations, such as moving or processing large datasets via BigQuery, Dataflow, or Cloud Storage, as well as the compute hours associated with Vertex Pipelines.

Still, Vertex AI’s tight integration with Google’s data analytics stack enables cost optimization by running models directly within BigQuery, minimizing data movement. For enterprises running continuous training and analytics workflows, this can significantly reduce infrastructure overhead.

Cost structure comparison

| Aspect | AWS Bedrock | Azure AI & Azure OpenAI Service | Google Vertex AI |
|---|---|---|---|
| Primary pricing model | Pay-as-you-go based on input/output tokens | Token-based model, similar to OpenAI API | Character-based pricing per 1,000 input/output characters |
| Reserved / Provisioned options | Provisioned Throughput mode billed hourly (≈ $39.60/hr ≈ $28.5K per month per unit) for consistent inference capacity | Provisioned Throughput Units (PTUs) and Reservations for predictable capacity (up to 70% cost reduction on steady workloads) | No hourly provisioning; compute billed separately for model deployment, tuning, and prediction jobs |
| Included / Free credits | None; pay-per-use | Credits may be applied from existing Microsoft 365 or Azure commitments | $300 free trial and additional enterprise onboarding credits |
| Hidden / Add-on costs | Data storage (S3), monitoring (CloudWatch), API integrations (Lambda), and compliance management | Azure AI Search, Data Zones, Fabric storage, Foundry orchestration services | Data operations (BigQuery, Dataflow, Cloud Storage) and Vertex Pipelines compute hours |
| Optimization features | Model Distillation & Prompt Routing → 30–75% inference cost reduction | Caching, model routing, and PTU reservations for predictable cost optimization | Run models within BigQuery to reduce data movement and infrastructure overhead |

Decision framework: Mapping platform strengths to enterprise requirements

Selecting the optimal enterprise LLM platform requires aligning technical capabilities with specific business objectives, as the long-term aim of managed AI services is to benefit the business by increasing revenue, enhancing employee productivity, and optimizing workflows.

When Amazon Bedrock makes strategic sense

Bedrock makes the most sense for companies deeply invested in the AWS ecosystem (Lambda, S3, SageMaker, Redshift), as this way they don’t need to come up with workarounds for data ingestion and infrastructure setup.

Amazon also offers a wide range of compliance standards, including ISO, SOC, CSA STAR Level 2, GDPR, HIPAA, and FedRAMP High, making this AI solution a safe option for regulated industries such as finance, healthcare, and government institutions. In enterprise compliance and reliability, Amazon surpasses Google and Azure.

Organizations that need multi-vendor model flexibility without managing separate integrations can also benefit from Amazon Bedrock.

When Azure AI delivers maximum value

Organizations heavily invested in Microsoft 365 and the Azure ecosystem can benefit even more from the broad service offerings available with Azure AI.

Apart from exclusive and early access to the latest OpenAI models, Azure AI also integrates with AI development tools such as GitHub Copilot (created by GitHub and OpenAI). This streamlines the development process and saves developers’ time. In this respect, Azure wins the Bedrock vs Azure OpenAI comparison, as it combines the flexibility of a reliable cloud provider with modern AI tooling.

For organizations handling sensitive or regulated data, Azure offers OpenAI on Your Data, which grounds LLMs in private datasets without model retraining, ensuring full compliance with data residency, security, and privacy requirements.

Where AWS Bedrock emphasizes infrastructure simplicity, and Google emphasizes data-first integration, Azure AI’s strength lies in aligning generative AI with existing enterprise workflows, compliance guardrails, and a vast, flexible model catalog, making it the ideal platform for organizations that want AI deeply embedded in business.

When Google Vertex AI offers competitive advantages

Google Vertex AI stands out for data-intensive, analytics-driven enterprises that need to blend AI with large-scale data pipelines.

With native integrations into BigQuery, Dataflow, and Looker, Vertex AI enables organizations to train, deploy, and query generative AI models without transferring data across systems, providing a significant cost and latency advantage.

For manufacturers, logistics providers, or any enterprise optimizing operations through predictive analytics, Vertex AI offers the best fusion of AI and data intelligence, turning complex data ecosystems into competitive business assets.

Decision-making cheatsheet from Xenoss engineers

Based on our experience delivering enterprise-grade LLM solutions of varying complexity, we’ve compiled a list of questions distributed by categories to simplify the selection process for you.

| Category | Key enterprise questions | Decision guidance |
|---|---|---|
| Data environment and infrastructure | • Where does your core enterprise data live, AWS S3, Azure Blob, or Google BigQuery? • Do you need regional data processing boundaries (e.g., EU/US segregation)? | Stay close to your existing data gravity to minimize latency, security overhead, and integration costs. Azure AI Data Zones and AWS Bedrock’s region-limited deployment may be a better fit than Google’s global-first model. |
| Use case and deployment | • Are your use cases multi-model or single-model dependent? • Do you need to fine-tune, train, or integrate custom models rather than only consuming APIs? • How critical is latency and SLA consistency for your workload (e.g., trading systems, chat interfaces)? | If you need to experiment across multiple FMs (Claude, Llama, Titan), AWS Bedrock is an ideal choice. If you rely on OpenAI reasoning models, Azure is the way to go. Vertex AI offers the most mature custom model-training ecosystem, while Bedrock and Azure focus on inference and orchestration. |
| Compliance and governance | • What are your regulatory obligations (HIPAA, FedRAMP, GDPR)? • Do you require auditability and access control integration with existing IAM / Active Directory? | Both Bedrock and Azure offer mature certifications for the healthcare and public sector. Google is expanding coverage. Google’s IAM is robust but more data-platform oriented. Azure has the edge for Active Directory integration, followed by AWS. |
| Cost and flexibility | • Do you prioritize pay-per-use simplicity or predictable reserved pricing? • Will you benefit from multi-cloud redundancy or deep single-vendor optimization? | AWS Bedrock’s pay-as-you-go is flexible for experimentation. Azure’s Provisioned pricing offers predictability for large deployments. Vertex AI charges per compute hour, making it suitable for steady pipelines. If resilience matters more than vendor lock-in, Bedrock’s multi-model openness and Vertex’s open-source stance are safer. |
| Strategic AI maturity | • Are you primarily integrating AI into business workflows or building AI-driven products? • Do you have internal ML/AI engineering expertise? | Vertex AI and Azure offer the most technical control but require stronger in-house data science teams. Bedrock abstracts most infrastructure complexity. |

Why strategic alignment beats platform loyalty

Every platform has strengths: AWS offers unmatched model diversity and enterprise compliance, Azure delivers deep productivity and governance integration, and Google drives analytical scale and open innovation. However, enterprises that succeed in production-scale AI start with strategic alignment rather than focusing only on the platform’s strengths.

Strategic alignment means selecting tools and platforms that support the company’s long-term operating model. Instead of locking into a single ecosystem, forward-thinking enterprises design their AI systems with flexibility from the outset by investing in migration-ready architectures.

This often involves introducing abstraction layers to switch between providers without requiring code rewriting, containerizing workloads for portability, and maintaining centralized observability to monitor costs, latency, and performance across multiple clouds.
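A provider abstraction layer of this kind can be sketched in a few lines. Everything here is hypothetical: `ChatProvider`, `StubProvider`, and `ProviderRouter` are illustrative names, and the stubs stand in for real SDK clients so the example runs without any cloud account.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatProvider(Protocol):
    """Minimal interface that application code depends on."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubProvider:
    """Stand-in for a real client (Bedrock, Azure OpenAI, Vertex AI)."""

    name: str
    healthy: bool = True

    def complete(self, prompt: str) -> str:
        if not self.healthy:
            raise RuntimeError(f"{self.name} is unavailable")
        return f"[{self.name}] response to: {prompt}"


class ProviderRouter:
    """Try providers in priority order and fall back on failure."""

    def __init__(self, providers: list[ChatProvider]) -> None:
        self.providers = providers

    def complete(self, prompt: str) -> str:
        errors = []
        for provider in self.providers:
            try:
                return provider.complete(prompt)
            except RuntimeError as exc:
                errors.append(str(exc))
        raise RuntimeError("all providers failed: " + "; ".join(errors))


router = ProviderRouter([StubProvider("bedrock", healthy=False), StubProvider("vertex")])
print(router.complete("Classify this support ticket."))
# [vertex] response to: Classify this support ticket.
```

Application code calls only `router.complete`, so changing the provider order or adding a new cloud is a configuration change rather than a rewrite.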

Such an approach transforms cloud AI from a dependency into a competitive advantage. When costs spike, regulations shift, or new model capabilities emerge, these companies can adapt instantly, rerouting inference, retraining models, or scaling workloads where it makes the most sense. They measure success not by the number of models deployed, but by how efficiently and securely those models drive business outcomes and ROI.

In a market that evolves every quarter, strategic alignment supported by migration flexibility is what future-proofs enterprise AI investments.