Optimizing training data via data curation often delivers greater performance gains than model tweaks alone.

A 2025 study found that multiple dimensions of data quality strongly influence performance across 19 ML algorithms, suggesting that fixes to inconsistency, noise, and imbalance translate into real gains.

Large language model work shows the same pattern. Meta, for one, attributes major quality jumps in Llama 3 to aggressive data curation and multiple rounds of quality assurance.

Where should engineering teams source data for curation?

Peer-reviewed scientific content brings rigor, provenance, and easier compliance, but narrower coverage of real-world language patterns.

User-generated content (UGC) captures diverse linguistic variations and edge cases at scale, but the data can get messy and biased.

In this article, we’ll map the benefits, trade-offs, concrete patterns, and real-life success stories for combining the two to build accurate, compliant enterprise models.

How data curation frameworks improve AI model performance

Data curation turns raw data into a carefully reviewed dataset. Implementing a data curation framework helps engineering teams enhance AI performance and reduce compute usage.

DBS Bank demonstrated this by replacing bulk data collection with a systematic six-step curation framework. Its engineering team now cleans, contextualizes, and enriches every input before training, turning the data pipeline into a competitive advantage.

Outcome: the new approach slashed data preparation time by over 50%, accelerated model development cycles, and ensured regulatory compliance with GDPR and industry mandates.

What makes data curation different from raw data collection

Raw data collection aims to get as much data as possible.

Data curation helps boost the value of raw data through organized preparation. Here are the steps AI engineering teams take to transform raw data into a dataset fit for training algorithms.

- Deduplication and validation: Getting rid of duplicate records and dealing with missing values

- Format standardization: Fixing inconsistencies in data formats and units

- Normalization: Making numerical data uniform across sources

- Variable encoding: Converting categorical variables into a form models can use (e.g., one-hot or ordinal encoding)

- Noise reduction: Removing outliers where they reflect errors rather than genuine rare cases
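To make these steps concrete, here is a minimal Python sketch that runs them over a handful of hypothetical records from two sources. The field names, the pound-to-kilogram conversion, the 20 to 300 kg plausibility range, and the choice of min-max scaling and one-hot encoding are all illustrative assumptions, not a prescribed pipeline.

```python
# Minimal curation sketch over hypothetical records from two sources.
LB_TO_KG = 0.45359237

RAW = [
    {"id": "a1", "country": "us", "weight": "70 kg", "plan": "basic"},
    {"id": "a1", "country": "us", "weight": "70 kg", "plan": "basic"},   # exact duplicate
    {"id": "b2", "country": "US", "weight": "154 lb", "plan": "pro"},    # different unit
    {"id": "c3", "country": "DE", "weight": None, "plan": "basic"},      # missing value
    {"id": "d4", "country": "de", "weight": "68 kg", "plan": "pro"},
    {"id": "e5", "country": "US", "weight": "900 kg", "plan": "basic"},  # implausible outlier
]


def deduplicate(records):
    """Drop exact duplicate records, keeping the first occurrence."""
    seen, out = set(), []
    for record in records:
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            out.append(record)
    return out


def validate(records, required=("id", "weight")):
    """Drop records missing required fields (imputation is the alternative)."""
    return [r for r in records if all(r.get(f) is not None for f in required)]


def standardize(record):
    """Unify country codes to upper case and convert weights to kilograms."""
    value, unit = record["weight"].split()
    kg = float(value) * (LB_TO_KG if unit == "lb" else 1.0)
    return {**record, "country": record["country"].upper(), "weight_kg": kg}


def remove_outliers(records, low=20.0, high=300.0):
    """Domain-range rule with assumed bounds: drop physically implausible weights."""
    return [r for r in records if low <= r["weight_kg"] <= high]


def normalize(records):
    """Min-max scale weight_kg to [0, 1] so all sources share one scale."""
    values = [r["weight_kg"] for r in records]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values match
    return [{**r, "weight_norm": (r["weight_kg"] - lo) / span} for r in records]


def one_hot(records, field="plan"):
    """Encode a categorical field as one 0/1 indicator column per category."""
    categories = sorted({r[field] for r in records})
    return [{**r, **{f"{field}_{c}": int(r[field] == c) for c in categories}} for r in records]


def curate(records):
    # Outliers are removed before normalization so they cannot stretch the scale.
    cleaned = [standardize(r) for r in validate(deduplicate(records))]
    return one_hot(normalize(remove_outliers(cleaned)))


for row in curate(RAW):
    print(row["id"], row["country"], round(row["weight_norm"], 3), row["plan_pro"])
```

Note the ordering: outliers are removed before normalization, because a single implausible value would otherwise squeeze every other record into a narrow band of the scale. Dropping rows with missing values is the simplest option; imputation is often preferable when data is scarce.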

Curated datasets trade size for traceability and balance across domains. That’s why engineering teams enrich datasets by combining them and bringing in outside context.

In 2023, a CleverX study revealed that 78% of AI project failures were directly linked to inadequate labeling practices. Even sophisticated machine learning systems used in the research depended heavily on accurately annotated datasets to build relationships between data points.

On the other hand, organizations that implemented robust labeling workflows, like schema-first approaches, pilot testing, and inter-annotator agreement protocols, achieved 64% higher model performance scores across diverse applications from healthcare diagnostics to natural language processing.
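Inter-annotator agreement is usually quantified with a chance-corrected statistic such as Cohen's kappa. The sketch below computes it for two annotators; the labels are made up, and any pass/fail threshold you apply to the score during pilot testing is a team-specific assumption.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if each annotator labeled at random with their own label frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1.0:
        return 1.0  # both annotators used the same single label everywhere
    return (observed - expected) / (1 - expected)


# Hypothetical pilot batch labeled independently by two annotators.
ANNOTATOR_A = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "pos", "neg", "pos"]
ANNOTATOR_B = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos", "pos", "pos"]

print(f"kappa = {cohens_kappa(ANNOTATOR_A, ANNOTATOR_B):.3f}")
```

Here the annotators agree on 80% of items, but because 52% agreement would be expected by chance, kappa is only about 0.58. A low score on a pilot batch often signals an ambiguous labeling schema, which is one reason schema-first design and pilot testing tend to go together.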

Data quality failures: Why volume without curation degrades AI systems

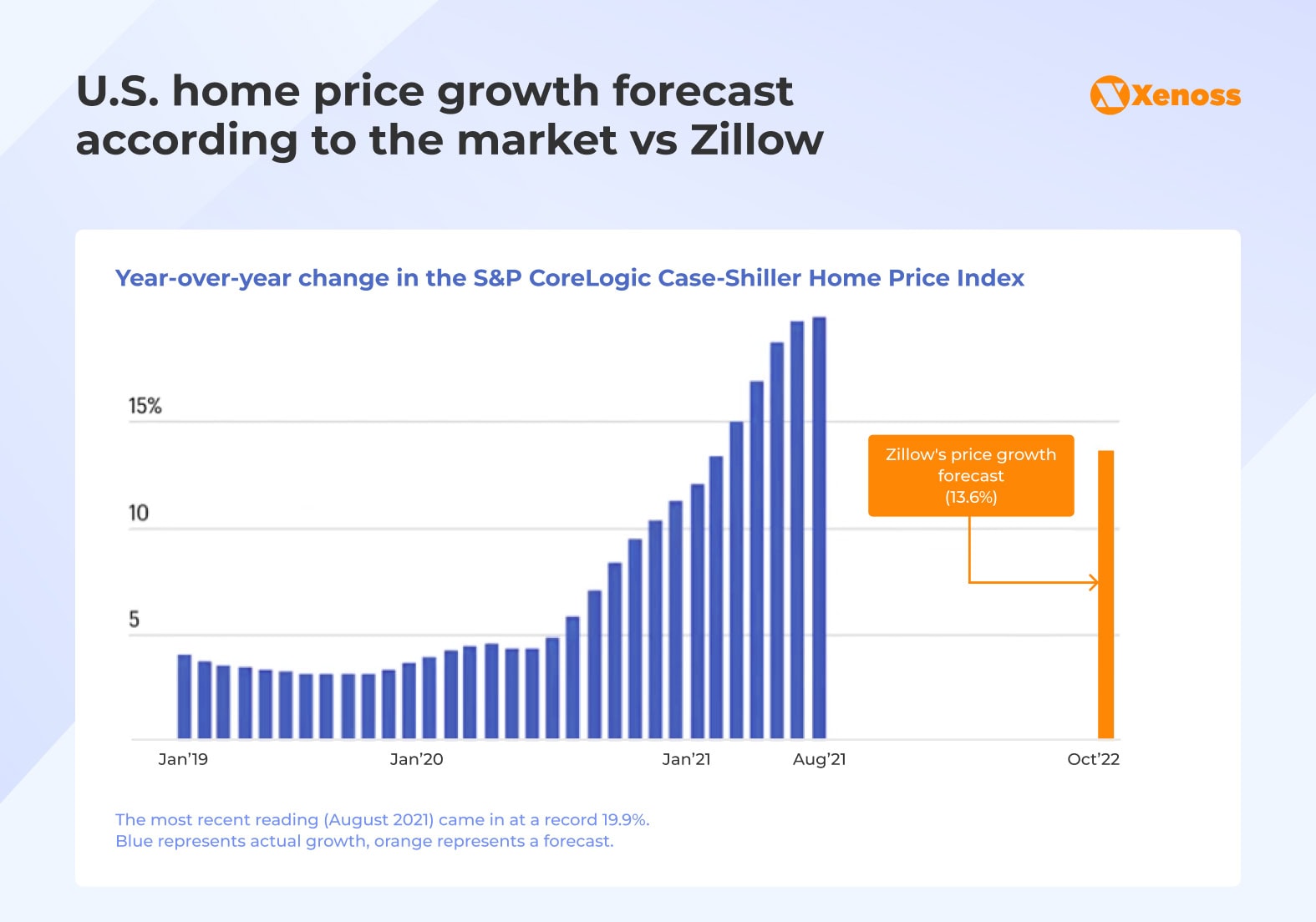

Zillow’s iBuying division is a textbook example of what poor data quality can cost. The division relied on machine learning models to predict resale prices and streamline home purchases across several U.S. markets.

Lagging, inconsistent data feeds, ambiguous labels, and unstable features caused the training and serving distributions to diverge.

The faulty model forced Zillow to take hundreds of millions of dollars in write-downs and cut about 25% of its workforce as it wound down the unit. The failure demonstrates that sophisticated algorithms cannot compensate for fundamental deficiencies in data quality.
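One common way to catch the kind of training/serving divergence described above is to compare feature distributions with the Population Stability Index (PSI). This is a minimal sketch with hypothetical home-price data (in thousands of dollars) and assumed bin edges; it is not a description of Zillow's systems. Treating a PSI above roughly 0.25 as an alert is a widely used rule of thumb, not a formal standard.

```python
import bisect
import math


def psi(expected, actual, edges):
    """Population Stability Index between a training sample and a serving sample.

    `edges` are ascending bin boundaries; values beyond them land in the end bins.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical sale prices (in $1,000s) seen at training time vs. in production.
TRAIN_PRICES = [200, 220, 250, 270, 300, 320, 350, 380, 400, 450]
SERVING_PRICES = [240, 300, 340, 380, 420, 450, 480, 500, 520, 560]
EDGES = [250, 350, 450]

score = psi(TRAIN_PRICES, SERVING_PRICES, EDGES)
print(f"PSI = {score:.3f}", "-> investigate drift" if score > 0.25 else "-> stable")
```

Running a check like this on every feature before each retraining and on a schedule in production turns silent drift into an explicit alert.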

Costs go up

Uncurated datasets need more computing power and take longer to train. Data cleaning and proper curation reduce both training time and infrastructure costs.

Gartner estimates that enterprise organizations lose nearly $13 million per year on average due to poor data quality.

Accuracy hits a wall

Mislabeled examples, duplicate records, and irrelevant samples increase gradient variance.

They slow training and cause models to fit random noise instead of useful patterns. Teams often respond by adding more data and compute, but the result is longer training runs and rising infrastructure costs while validation metrics plateau.

Clean datasets, free from duplicates, balanced to cover a range of cases, and verified for accurate labels, bring back efficiency. They help models generalize well without needing massive amounts of data.

Bias gets worse

Biased sampling and labeling lead to higher false-positive rates for protected groups, unstable calibration across segments, and fragile performance when data distributions change.

This creates real risks, like exposure to adverse actions, problems found in audits, and expensive human interventions when models don’t work well for minority groups.
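Auditing for this starts with measuring error rates per group rather than in aggregate. Below is a minimal sketch that computes the false-positive rate for each group and the gap between the best- and worst-served groups; the labels, predictions, and group names are hypothetical.

```python
from collections import defaultdict


def false_positive_rates(y_true, y_pred, groups):
    """Per-group false-positive rate: FP / (FP + TN), computed over true negatives only."""
    false_pos, negatives = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] += 1
            if pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}


# Hypothetical labels and predictions for two groups.
Y_TRUE = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
Y_PRED = [0, 1, 0, 0, 1, 1, 1, 0, 1, 1]
GROUPS = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = false_positive_rates(Y_TRUE, Y_PRED, GROUPS)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap = {gap:.2f}")
```

The aggregate false-positive rate here is 50% (4 of 8 negatives), which hides the fact that group B is wrongly flagged three times as often as group A.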

Curating data through stratified sampling, deduplication, proxies for sensitive attributes, and counterfactual examples stabilizes model behavior across groups. The SlimPajama-DC study showed that after low-quality and duplicate text was removed, a corpus roughly half the size of the original (627B vs. 1.2T tokens) outperformed the larger, noisier dataset.
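Near-duplicate removal at corpus scale is commonly done with MinHash, which estimates the Jaccard similarity between documents' n-gram sets from compact signatures. The sketch below is a simplified version under stated assumptions: 3-word shingles, 64 seeded BLAKE2b hashes, a 0.8 similarity threshold, and a pairwise comparison loop. These parameters are illustrative, not SlimPajama's, and production pipelines add locality-sensitive hashing (LSH) banding so they never compare every pair.

```python
import hashlib


def shingles(text, n=3):
    """Lower-cased word n-grams used as the document's feature set."""
    words = text.lower().split()
    return {" ".join(words[i:i + n]) for i in range(max(len(words) - n + 1, 1))}


def minhash_signature(features, num_perm=64):
    """For each seeded hash function, keep the minimum hash value over all features."""
    return [
        min(
            int.from_bytes(
                hashlib.blake2b(f.encode(), digest_size=8, salt=seed.to_bytes(8, "little")).digest(),
                "big",
            )
            for f in features
        )
        for seed in range(num_perm)
    ]


def estimated_jaccard(sig_a, sig_b):
    """The share of positions where two signatures agree estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def near_dedup(docs, threshold=0.8, num_perm=64):
    """Keep a document only if it is not a near-duplicate of one already kept.

    Pairwise comparison is O(n^2); large corpora use LSH banding instead.
    """
    kept, kept_sigs = [], []
    for doc in docs:
        sig = minhash_signature(shingles(doc), num_perm)
        if all(estimated_jaccard(sig, s) < threshold for s in kept_sigs):
            kept.append(doc)
            kept_sigs.append(sig)
    return kept


DOCS = [
    "The quick brown fox jumps over the lazy dog",
    "the quick brown fox jumps over the lazy dog",  # same text, different casing
    "Curated corpora remove low-quality and duplicate text before training",
]
print(near_dedup(DOCS))
```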

Improvements slow down

Beyond a certain dataset size, piling on more of the same messy or repetitive data doesn’t help much: gradient noise goes up and test loss stops improving. Careful data selection (finding edge cases, choosing what to learn from, and removing duplicates) makes each example count.

This focuses training on what matters. In practice, curated batches can still lift validation scores after simply adding more data has stopped helping, making training more efficient and improving generalization to new situations.
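One widely used way to decide "what to learn from" is uncertainty sampling from active learning: score each candidate example by how unsure the current model is, and spend the labeling or training budget on the most uncertain ones. This sketch assumes you already have per-class probabilities from the current model; the pool and budget are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_informative(pool, budget):
    """Uncertainty sampling: return the `budget` example IDs with the highest entropy.

    `pool` maps example IDs to the current model's predicted class probabilities.
    """
    return sorted(pool, key=lambda ex_id: entropy(pool[ex_id]), reverse=True)[:budget]


# Hypothetical model predictions for four candidate examples.
POOL = {
    "ex1": [0.98, 0.02],  # confident: little left to learn
    "ex2": [0.55, 0.45],
    "ex3": [0.70, 0.30],
    "ex4": [0.50, 0.50],  # maximally uncertain
}
print(select_most_informative(POOL, budget=2))
```

In practice, teams usually combine this with deduplication and diversity constraints, since the most uncertain examples can also be the noisiest or most mislabeled ones.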

Scientific content curation: Strengths and limitations

Scientific content builds trust through careful validation. Understanding its structural limitations helps design effective data integration strategies that compensate for inherent gaps in coverage and linguistic diversity.

Why is scientific data dependable?

Peer review lies at the core of scientific data dependability. Researchers scrutinize each other’s methods and findings for accuracy and value before publication.

This review process serves as a gatekeeper, allowing credible studies to be included in the research archives while excluding flawed ones.

Advantages of using peer-reviewed content in AI training

Using peer-reviewed material offers three significant benefits for AI training.

| Advantage | Impact |
|---|---|
| Reliability | Every claim is traceable to a credible source, reducing baseless outputs. |
| Rigorous validation | Multi-level verification minimizes errors and intentional distortions. |
| Domain-specific accuracy | Models trained on domain-specific scientific data (e.g., finance, healthcare) achieve higher accuracy compared to general-purpose LLMs. |

Language and coverage limits bring big challenges

Curating scientific data comes with several hurdles that shape how AI training is designed.

Approximately 98% of scientific publications appear in English, even though only about 18% of the world’s population speaks English. This language divide leaves large knowledge gaps for AI systems serving non-English speakers.

Stanford researchers found that large language models work well for English speakers but struggle with less widely used, lower-resource languages. The main issue is a lack of high-quality data in those languages.

Even databases like Dimensions, which include 98 million publications, are small when compared to the wide range of data found online.

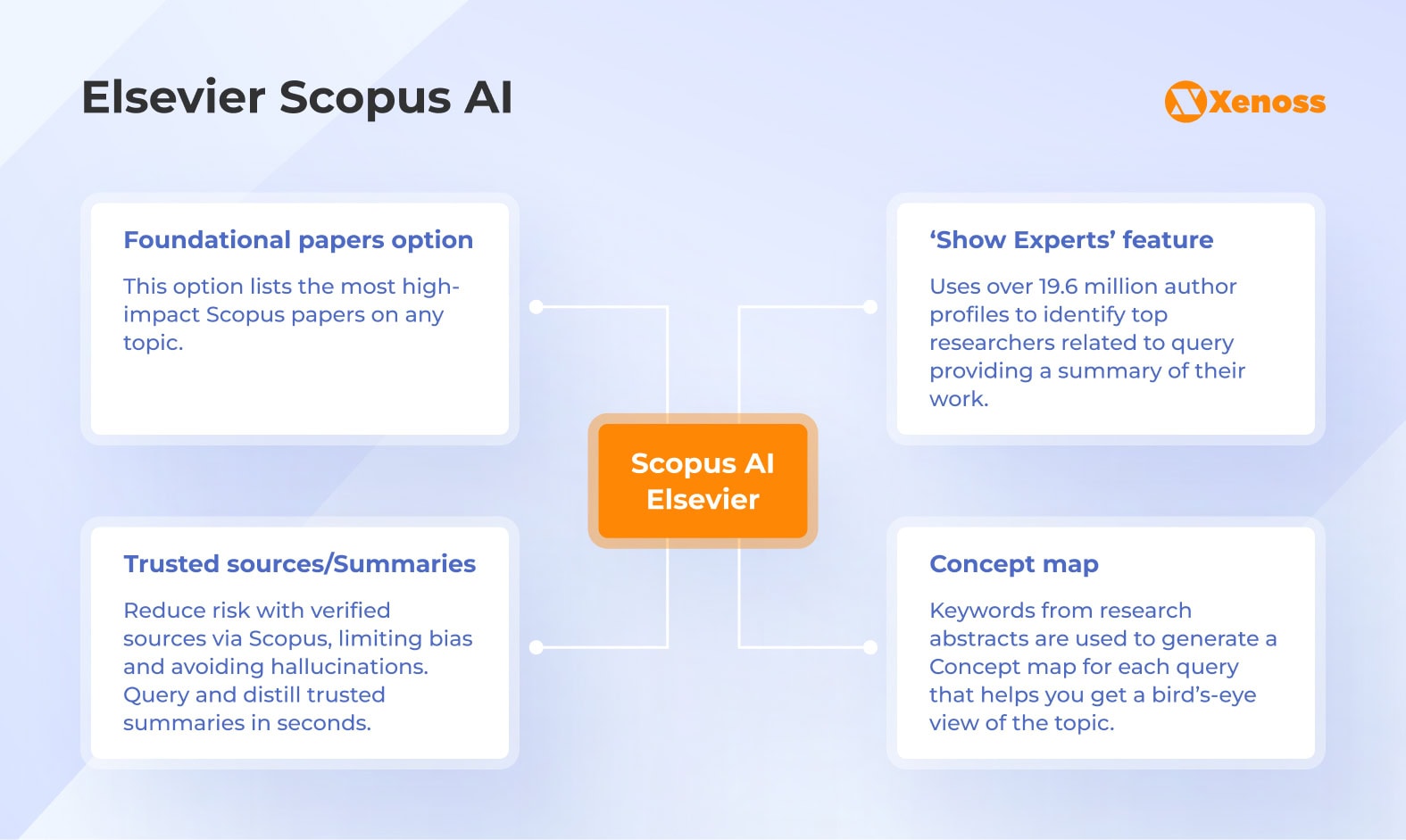

Case study: Elsevier Scopus AI

Scopus AI is an example of an AI research tool built on scientific content. It understands natural language queries and generates evidence-based summaries from peer-reviewed content indexed in Scopus. Each summary includes references to specific sources and shows how confident the system is in its answers.

The “Deep Research” tool on the platform relies on agentic AI to create research plans, search the literature, and adjust its strategy as new insights emerge.

If it cannot locate enough evidence, the system flags the gap instead of fabricating plausible but false answers, reducing the risk of hallucinations.

User-generated content (UGC): Value and risks

User-generated content is one of the fastest-growing data sources for AI training, capturing language patterns that scientific literature largely misses.

However, UGC deployment requires careful risk management to prevent model degradation and regulatory exposure.

What enterprises gain from UGC integration

UGC encompasses social media posts, forum discussions, reviews, videos, blogs, and community-generated materials that reflect how users actually interact with technology.

Unlike scientific content, UGC captures emerging slang, regional dialects, and problem-solving approaches that users employ in real conversations.

This linguistic authenticity translates into measurable business advantages. AI systems trained on UGC respond more naturally to customer queries, reducing support escalation rates and improving user satisfaction scores.

UGC provides real-time signals about market trends, product issues, and customer sentiment. These signals typically precede formal documentation by months or years, enabling organizations to detect emerging patterns and respond to user needs before competitors identify the same opportunities.

How UGC improves AI model performance

Scientific content supports AI models with accurate facts and explainable ideas, but it underrepresents edge cases, human error, and real-world changes to the target domain.

That’s where user-generated content helps: it provides a testing ground that challenges models with the scenarios they’ll face once deployed.

Integrating user-generated content into model training yields three important benefits.

| Category | Description | Benefits |
|---|---|---|
| Long-tail coverage and robustness | User-generated content surfaces edge cases, messy inputs, and real-world failure scenarios. | Training on realistic distributions makes models more robust to out-of-distribution data and more resistant to prompt drift. |
| Implicit labels to improve objectives | User engagement signals (clicks, time spent, problem resolutions, thumbs-up/down) guide the model in learning preferences and supply data for pairwise ranking losses. | Engineers can fine-tune models for retrieval, summarization, and recommendations without expensive manual labels, boosting P@k/NDCG where it counts. |
| Context for retrieval and tool use | User-generated content carries rich metadata (a poster’s location and device, posting time, etc.) that helps the model make better choices and ground its responses. | This metadata helps select better positive and negative examples and improves the accuracy of generated answers. |
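The implicit-label row can be sketched in a few lines: map engagement events to graded relevance, derive preference pairs for a pairwise ranking loss, and evaluate the ranking with NDCG@k. The mapping in `implicit_relevance` (a click worth 1, a resolution worth 2, a thumbs-down zeroing the score) is a hypothetical weighting for illustration; real systems calibrate these signals and correct for position bias.

```python
import math


def implicit_relevance(event):
    """Hypothetical mapping from engagement signals to a graded relevance label."""
    if event.get("thumbs") == "down":
        return 0
    return (1 if event.get("clicked") else 0) + (2 if event.get("resolved") else 0)


def preference_pairs(doc_ids, relevances):
    """(preferred, less-preferred) pairs that a pairwise ranking loss can train on."""
    scored = list(zip(doc_ids, relevances))
    return [(a, b) for a, ra in scored for b, rb in scored if ra > rb]


def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the top-k positions (rank 0 is the top)."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(relevances, k):
    """DCG divided by the DCG of the ideal ordering of the same items."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0


# Hypothetical engagement logs for four answers, in the order the model ranked them.
DOC_IDS = ["d1", "d2", "d3", "d4"]
EVENTS = [
    {"clicked": True},
    {"clicked": True, "resolved": True},
    {"clicked": True, "thumbs": "down"},
    {},
]
rels = [implicit_relevance(e) for e in EVENTS]
print(rels, preference_pairs(DOC_IDS, rels), round(ndcg_at_k(rels, k=3), 3))
```

Here the most useful answer (d2) was ranked second, so NDCG@3 comes out below 1.0, and the pair ("d2", "d1") is exactly the kind of example a pairwise loss would use to correct the order.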

Case study: OpenAI tapped into Stack Overflow’s UGC to improve the quality of AI coding

OpenAI needed high-quality, human-authored programming data with governance guarantees to fine-tune and ground coding assistants. Stack Overflow, on the other hand, needed a sustainable, attribution-preserving way to license its community knowledge.

Solution: The companies signed an API licensing deal that allowed OpenAI to consume validated Q&A via OverflowAPI and surface attribution and links in ChatGPT.

Stack Overflow, in turn, enforced quality through reputation-weighted moderation and formal terms that cover provenance and permitted use. This structure couples community validation with contractual compliance and technical integration paths (RAG and fine-tuning).

Technical risks and mitigation strategies for UGC integration

Despite its utility in expanding data coverage and offering a real-life picture of the problem, UGC integration introduces operational risks that can damage model performance and expose organizations to regulatory scrutiny.

Synthetic drift (model collapse)

Training on AI-generated content that passes as UGC shrinks the support of the data distribution (rare patterns disappear) and skews token frequencies, lowering entropy and hurting out-of-distribution (OOD) generalization.

Solution: Anchor the data mixture on human-authored corpora through mixture weighting, and combine it with synthetic-content filters, KL-divergence regularization toward a reference model, and strict deduplication and freshness gates.
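As an illustration of the KL-regularization piece, the sketch below adds a penalty proportional to KL(model || reference) to the task loss, which discourages the fine-tuned model's next-token distribution from drifting far from a frozen reference model. The three-token distributions and the `beta=0.1` coefficient are assumptions; real training computes this term per token position over the full vocabulary and averages it.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same tokens."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def regularized_loss(task_loss, model_probs, reference_probs, beta=0.1):
    """Task loss plus a penalty for drifting away from a frozen reference model."""
    return task_loss + beta * kl_divergence(model_probs, reference_probs)


# Hypothetical next-token distributions over a three-token vocabulary.
MODEL = [0.70, 0.20, 0.10]      # fine-tuned model, sharpening toward its top token
REFERENCE = [0.50, 0.30, 0.20]  # frozen reference trained on human-authored data

print(round(kl_divergence(MODEL, REFERENCE), 4), round(regularized_loss(2.0, MODEL, REFERENCE), 4))
```

A larger `beta` keeps the model closer to the reference's broader distribution at the cost of slower adaptation to the new data.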

Bias amplification at training time

UGC often carries label and representation imbalances, plus toxicity, that drive calibration errors across data slices.

Solution: Use stratified sampling, group-aware reweighting, counterfactual augmentation, and constraint-based objectives (e.g., equalized odds, group calibration). Validate with slice-level equity evaluations and per-segment robustness tests.
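Group-aware reweighting can be sketched with the classic "reweighing" preprocessing scheme: weight each (group, label) cell by P(group) x P(label) / P(group, label), so that group membership and label become statistically independent in the weighted training set. The groups and labels below are hypothetical, and this is one reweighting option among several.

```python
from collections import Counter


def reweighing(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y).

    After weighting, group membership and label are statistically independent.
    """
    n = len(labels)
    n_group, n_label = Counter(groups), Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [
        (n_group[g] / n) * (n_label[y] / n) / (n_joint[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return positive / total


# Hypothetical UGC sample: group A is mostly positive, group B mostly negative.
GROUPS = ["A"] * 6 + ["B"] * 4
LABELS = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing(GROUPS, LABELS)
for grp in ("A", "B"):
    print(grp, round(weighted_positive_rate(weights, GROUPS, LABELS, grp), 3))
```

Unweighted, the positive rate is 67% for group A and 25% for group B; after reweighing, both sit at 50%. The weights can then be passed as per-example sample weights to the training loss.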

Provenance, consent, and enforceability

Beyond policy, you need technical guarantees: content-addressable storage with licenses/consent as immutable metadata, lineage tracking, DLP/PII redaction, license-aware data loaders that block at batch-build time, and periodic re-scans.

Solution: Add differential privacy where required, and version datasets so you can audit exactly which data each model was trained on.
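Here is a minimal sketch of the license-aware loading and dataset versioning described above, assuming each record carries its license and consent flag as metadata. The allowlist, field names, and opt-out set are hypothetical, and the sketch covers only the blocking and audit-trail pieces; PII redaction, differential privacy, and periodic re-scans would sit alongside it.

```python
import hashlib
from dataclasses import dataclass, field

# Hypothetical license policy; real allowlists come from legal review.
ALLOWED_LICENSES = frozenset({"CC-BY-4.0", "CC-BY-SA-4.0", "MIT"})


@dataclass(frozen=True)
class Record:
    text: str
    license: str
    consent: bool
    content_id: str = field(init=False)

    def __post_init__(self):
        # Content-addressable ID: identical text always maps to the same key.
        object.__setattr__(self, "content_id", hashlib.sha256(self.text.encode()).hexdigest())


def build_batch(records, allowed=ALLOWED_LICENSES, opted_out=frozenset()):
    """Block disallowed records at batch-build time and log every decision."""
    batch, manifest = [], []
    for r in records:
        ok = r.consent and r.license in allowed and r.content_id not in opted_out
        manifest.append((r.content_id, r.license, "included" if ok else "blocked"))
        if ok:
            batch.append(r.text)
    return batch, manifest


def dataset_version(manifest):
    """Stable fingerprint of exactly which records a training run consumed."""
    included = sorted(cid for cid, _, status in manifest if status == "included")
    return hashlib.sha256("\n".join(included).encode()).hexdigest()[:16]


RECORDS = [
    Record("How do I reverse a list in Python?", "CC-BY-SA-4.0", consent=True),
    Record("Snippet copied from a proprietary SDK", "proprietary", consent=True),
    Record("Old forum post without AI-training consent", "CC-BY-4.0", consent=False),
    Record("Post whose author later opted out", "MIT", consent=True),
]
OPTED_OUT = {RECORDS[3].content_id}

batch, manifest = build_batch(RECORDS, opted_out=OPTED_OUT)
print(batch, dataset_version(manifest))
```

Because the version string is derived from the included content IDs, storing it alongside each trained model answers the audit question "which data did this model see?" without keeping a second copy of the corpus.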

Case study: Reddit’s licensing agreement with Google triggered an FTC investigation into UGC commercialization

Challenge: Reddit’s $60M licensing agreement with Google caught the FTC’s attention, prompting an investigation into how platforms make money from user-generated content to train AI models.

Takeaway: Regulators are watching more closely whether users are clearly informed about AI training uses, what they receive in return, and how their data is handled end to end. Be ready to show evidence: license agreements, consent language, lineage records showing how opt-outs are honored, and enforcement mechanisms.

Combining scientific and UGC data: What works best

Scientific sources and user-generated content solve different problems.

Scientific data offers reliability but fails to capture everyday language patterns. UGC reflects real-world usage but adds noise and bias.

Well-designed integration tactics let you reap the advantages of both sources while offsetting their individual weaknesses.

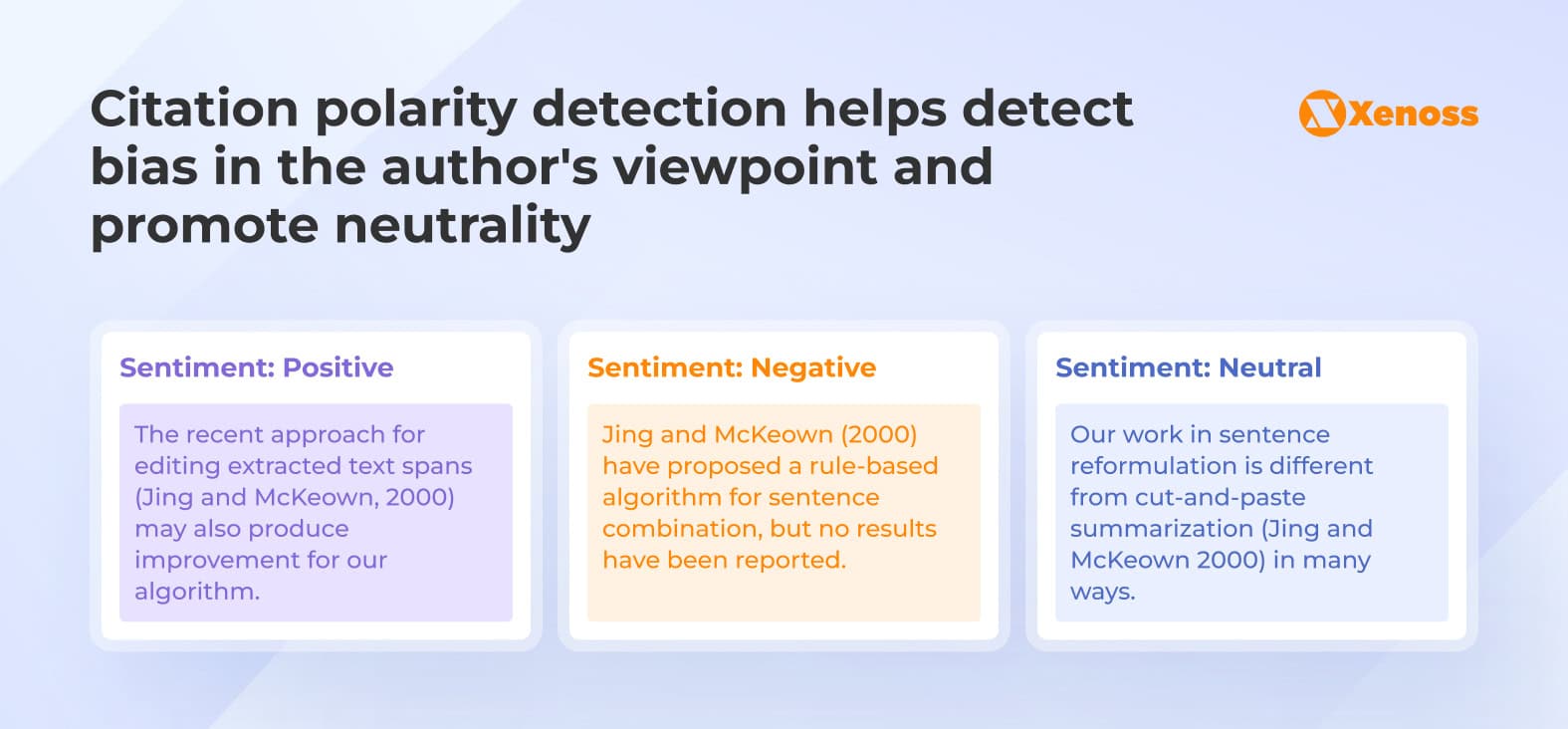

Citation handling based on polarity

Citation polarity analysis examines whether citing sources support, dispute, or merely mention specific claims. This emerging NLP research area helps assess a paper’s true impact beyond basic citation counts.

AI systems benefit from understanding these patterns. Positive citations acknowledge strengths, negative ones point out limitations, and neutral citations simply mention or compare findings.

Giving AI systems polarity-aware citation handling helps them represent scientific debates accurately and keep a neutral stance instead of presenting contested claims as settled.

This becomes essential when training models for fields where scientific knowledge keeps changing, like climate science or medical research.
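A polarity-aware pipeline needs a way to turn per-citation labels into a stance on the claim before the model summarizes it. The sketch below assumes citations have already been classified as supporting, contrasting, or mentioning (for example, by a citation-classification service or model); the 25% contested threshold and the three-citation minimum are illustrative assumptions.

```python
from collections import Counter


def claim_status(polarities, contested_ratio=0.25, min_citations=3):
    """Summarize how the literature cites a claim; thresholds are illustrative assumptions."""
    counts = Counter(polarities)
    support, contrast = counts["supporting"], counts["contrasting"]
    if support + contrast < min_citations:
        return "insufficient evidence"  # mentions alone don't establish a stance
    contrast_share = contrast / (support + contrast)
    if contrast_share < contested_ratio:
        return "supported"
    if contrast_share > 1 - contested_ratio:
        return "disputed"
    return "contested"


print(claim_status(["supporting"] * 6 + ["contrasting"] + ["mentioning"] * 10))
print(claim_status(["supporting"] * 4 + ["contrasting"] * 3))
print(claim_status(["mentioning"] * 20))
```

A generator can then be instructed to present both sides whenever a claim comes back as contested, rather than stating it as settled because most citations happen to be neutral mentions.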

Case study: Karger Publishers

In 2024, Karger Publishers teamed up with Scite to add “Smart Citations” to all its journals. The integration added badges showing how each reference is used (supporting, contrasting, or mentioning), covering over 1.3 billion cited statements.

This gives readers context about claims at the point of reading and makes relevant information easier to find.

Evidence-first generation with an abstain policy

Policy-makers face an “evidence dilemma”: act with limited proof or wait for stronger evidence while risking harm. AI systems run into similar issues when they create responses based on incomplete information.

Evidence-first approaches generate a response only when there is enough supporting evidence and abstain when information is lacking. This reduces the risk of fabrication while keeping the system trustworthy, which is key for business uses where being right matters more than giving a complete answer.
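The abstain policy can be expressed as a simple gate in front of the generator. This sketch assumes a retriever that returns passages with relevance scores in [0, 1] and a `generate` callable supplied by your stack (both hypothetical here); the 0.6 score threshold and two-source minimum are placeholders to tune on your own evaluation data, and the sketch does not describe any specific product's implementation.

```python
from dataclasses import dataclass

ABSTAIN_MESSAGE = "Not enough supporting evidence was found to answer this reliably."


@dataclass
class Passage:
    source_id: str
    text: str
    score: float  # retriever relevance score, assumed to lie in [0, 1]


def answer_or_abstain(question, passages, generate, min_score=0.6, min_sources=2):
    """Generate only when enough distinct, sufficiently relevant sources support an answer."""
    evidence = [p for p in passages if p.score >= min_score]
    sources = sorted({p.source_id for p in evidence})
    if len(sources) < min_sources:
        return {"answer": ABSTAIN_MESSAGE, "citations": [], "abstained": True}
    return {"answer": generate(question, evidence), "citations": sources, "abstained": False}


def fake_generate(question, evidence):
    # Stand-in for a real LLM call that is instructed to answer only from `evidence`.
    return f"Answer to {question!r} grounded in {len(evidence)} passages."


strong = [Passage("case-101", "...", 0.91), Passage("statute-7", "...", 0.74), Passage("blog-3", "...", 0.32)]
weak = [Passage("case-101", "...", 0.88), Passage("case-101", "...", 0.81)]
print(answer_or_abstain("Is clause 4 enforceable?", strong, fake_generate))
print(answer_or_abstain("Is clause 4 enforceable?", weak, fake_generate))
```

Counting distinct sources rather than passages keeps two chunks of the same document from masquerading as independent corroboration.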

Case study: Lexis+ AI

Lexis+ AI, a legal assistant, launched with an evidence-first design that offered linked citations to underlying authorities and citation-validation checks that ground responses in vetted sources.

The tool offered auditable, source-backed outputs. A study by Stanford’s HAI Center benchmarked legal RAG tools like Lexis+ AI against general-purpose models and found that they had lower hallucination rates, although they were not hallucination-free.

Expert validation workflows for user-generated content quality control

Professional moderation controls the quality of user-generated content before it enters training datasets. Expert reviewers catch mistakes, remove harmful material, and verify accuracy while preserving the real-world language patterns that make UGC valuable.

This human-in-the-loop approach maintains data quality standards while capturing emerging topics and contemporary usage patterns not yet documented in peer-reviewed literature.

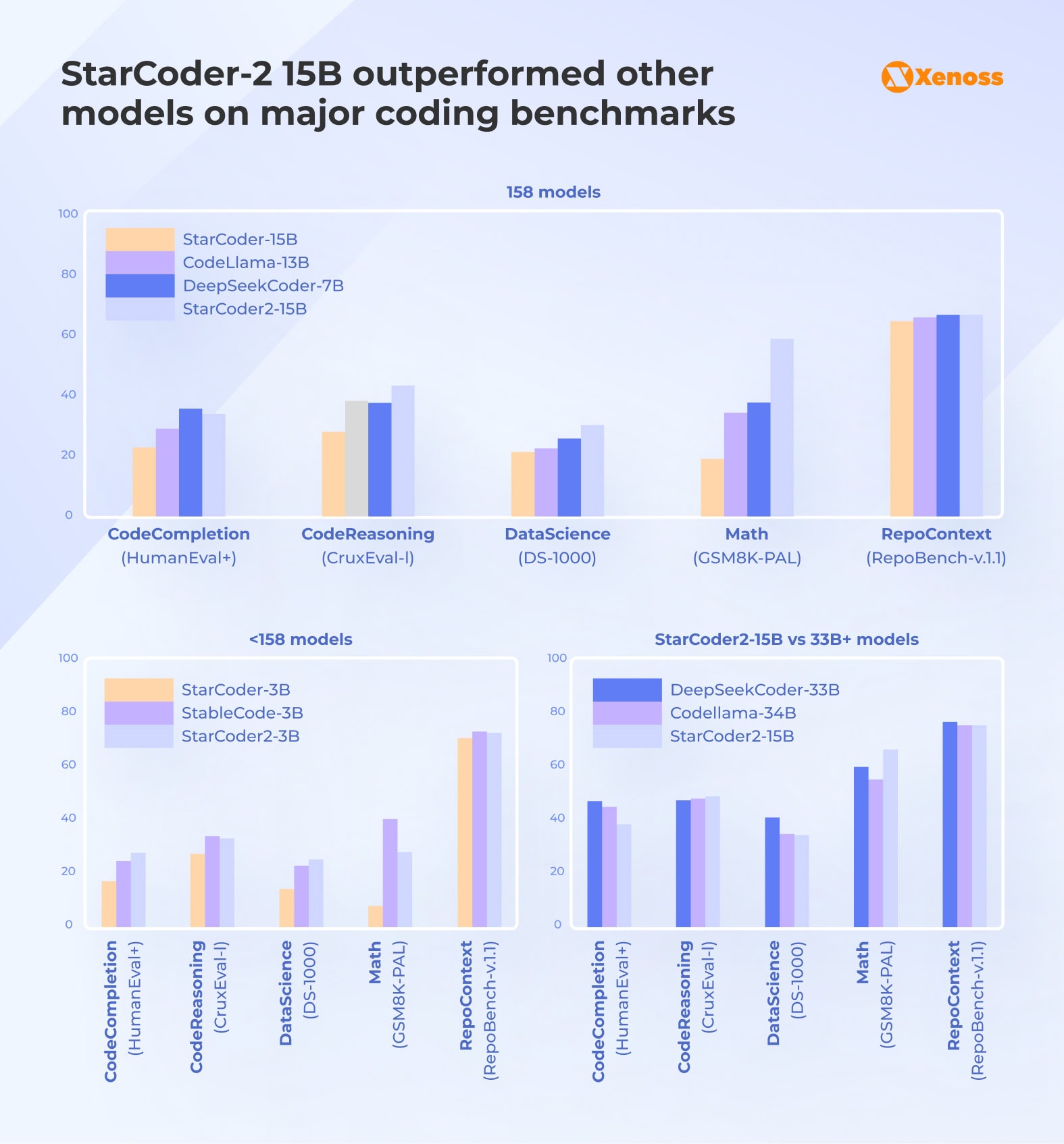

Case study: StarCoder2 improved the accuracy of AI coding with expert-reviewed UGC

Coding LLMs trained on raw GitHub user-generated content risk leaking secrets, reproducing license-restricted code, and learning from poor-quality snippets. This can harm both model safety and performance in the long run.

To address this problem, the BigCode program (a collaboration between ServiceNow and Hugging Face) set up community review sessions, checked licenses and code provenance, removed duplicates, and stripped out personal information and secrets.

The resulting dataset, The Stack v2, was used to train a higher-performing model, StarCoder2. StarCoder2-15B matched or outperformed some larger models on benchmarks such as MBPP and HumanEval.
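The secret- and PII-scrubbing step can be illustrated with a minimal regex-based redactor. This is a simplified illustration, not BigCode's actual pipeline; the three patterns below (email addresses, AWS-style access key IDs, IPv4 addresses) are examples only and would miss many real cases.

```python
import re

# Illustrative patterns only; real scanners combine many more rules with trained detectors.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def redact(source):
    """Replace detected PII and secrets with typed placeholders; return text and hit counts."""
    hits = {}
    for label, pattern in PATTERNS.items():
        source, count = pattern.subn(f"<{label}>", source)
        if count:
            hits[label] = count
    return source, hits


SNIPPET = 'aws_key = "AKIAABCDEFGHIJKLMNOP"\n# maintainer: jane.doe@example.com\nHOST = "10.0.0.12"\n'
clean, found = redact(SNIPPET)
print(clean)
print(found)
```

Typed placeholders such as `<EMAIL>` keep the code syntactically plausible for training while removing the sensitive value, and the hit counts give reviewers a quick signal about which files need a closer look.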

Real-world data enrichment

Targeted data enrichment tackles skewed training distributions by adding samples from underrepresented areas. This semi-supervised learning approach fills gaps with useful content instead of simply gathering more data.

Amazon’s study reports that this method cuts error rates by up to 4.6% across domains. It works by identifying where the training data falls short and filling those gaps with targeted examples rather than random additional data.
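Identifying where the data falls short can start with simple slice accounting. The sketch below computes how many new examples each slice needs so that every slice reaches a minimum share of the final dataset while all existing data is kept. The slice names, counts, and 10% floor are hypothetical, and the method is a simple illustration, not the one used in Amazon's study.

```python
import math


def enrichment_quota(counts, min_share):
    """New examples each slice needs so every slice reaches `min_share` of the final dataset.

    Existing data is kept and only additions are made, so the final total grows.
    """
    if min_share * len(counts) > 1:
        raise ValueError("min_share is infeasible for this many slices")
    boosted = set()
    while True:
        # Slices not yet boosted keep their current counts; boosted slices sit at min_share.
        fixed = sum(c for s, c in counts.items() if s not in boosted)
        final_total = fixed / (1 - min_share * len(boosted))
        newly_low = {s for s, c in counts.items() if s not in boosted and c < min_share * final_total}
        if not newly_low:
            break
        boosted |= newly_low
    # Rounding before ceil guards against floating-point noise such as 118.75000000000001.
    target = math.ceil(round(min_share * final_total, 9))
    return {s: max(0, target - c) if s in boosted else 0 for s, c in counts.items()}


# Hypothetical per-language counts in a support-ticket corpus.
COUNTS = {"en": 800, "es": 150, "sw": 30, "yo": 20}
print(enrichment_quota(COUNTS, min_share=0.10))
```

The additions should be genuinely new, real-world samples for each slice; duplicating existing examples to hit the quota would reintroduce the repetition problems described earlier.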

Case study: Meta’s Massively Multilingual Speech (MMS)

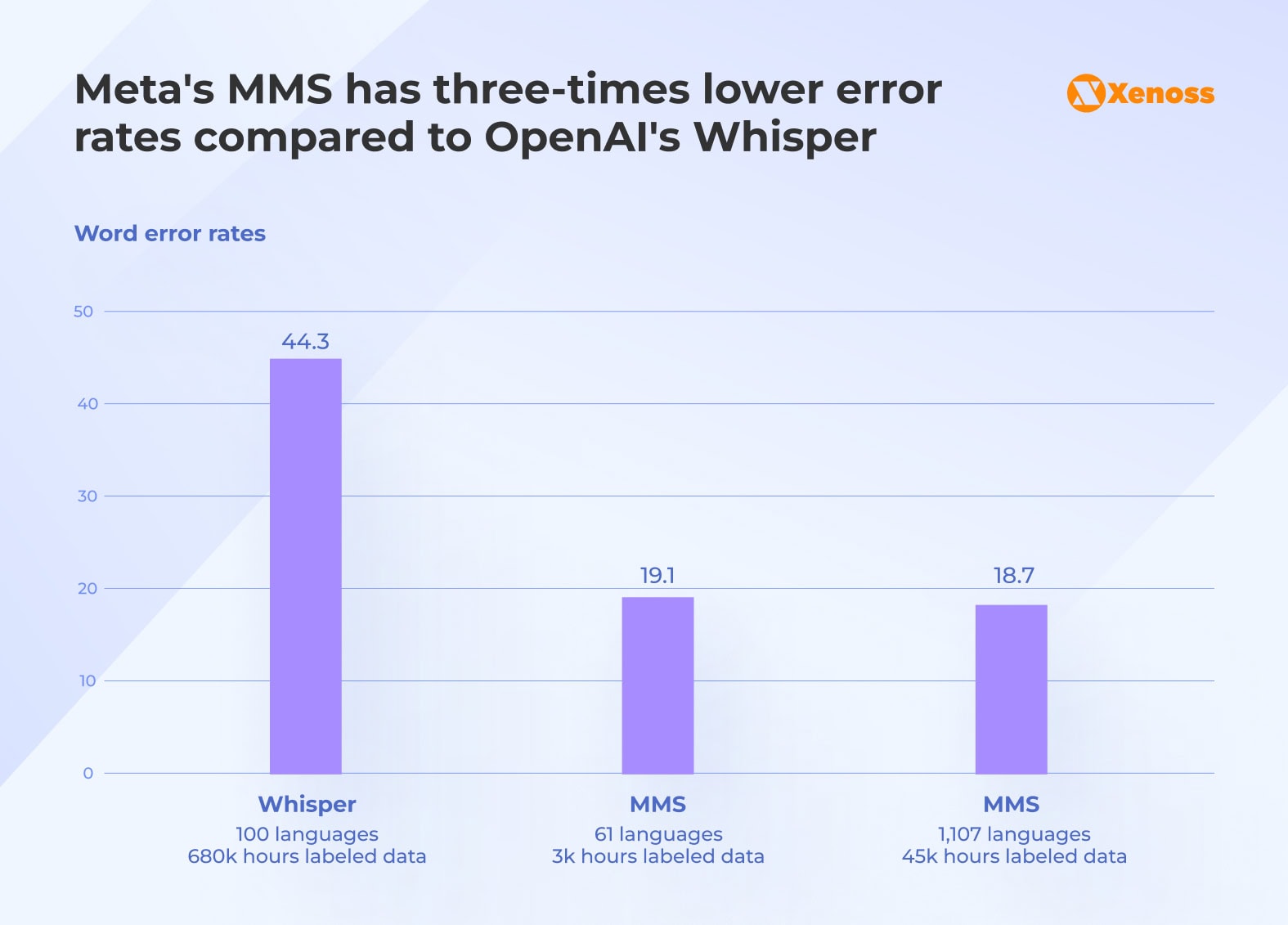

Many speech models underperform on low-resource languages because the training data tends to skew toward a few well-represented languages. Meta’s Massively Multilingual Speech (MMS) program targeted those gaps by mining real-world speech–text for 1,100+ underserved languages and leveraging self-supervised pretraining to enrich precisely the underrepresented slices.

Outcome: The resulting models expanded language coverage roughly 10× and more than halved the word error rate compared with Whisper on 54 FLEURS languages.

Split roles for copilots to give feedback and manage oversight

Splitting AI system oversight between different roles boosts both performance and accountability. Technical teams own data sources, data quality, and system performance, while domain experts check whether outputs are relevant and accurate in their fields.

This setup establishes clear lines of responsibility while promoting ongoing improvement through well-organized feedback loops.

Case study: Morgan Stanley’s wealth management assistant

To provide personalized wealth management to its clients and maintain compliance in a regulated market, Morgan Stanley needed trustworthy, auditable AI while steering clear of hallucinations in advisor workflows.

Solution: Morgan Stanley’s machine learning team divided data oversight. Technical teams took charge of evaluation datasets and ran daily regression tests, while financial advisors reviewed outputs and provided domain insights before launch, creating an evaluation-driven process with humans in the loop.

Outcome: The assistant gained firmwide acceptance (98% of advisor teams used it), improved document access from 20% to 80%, and shortened turnaround for client follow-ups from days to hours.

For Morgan Stanley, joint responsibility between ML engineers and domain experts helped boost both copilot performance and confidence.

Strategic integration of scientific and user-generated training data

The choice between scientific content and user-generated content isn’t binary. Scientific sources provide the reliability enterprises need for specialized domains, but miss the language patterns users actually employ. UGC captures real-world diversity but requires expert filtering to prevent bias and noise from degrading model performance.

Successful AI training programs combine both approaches strategically.

Polarity-aware citation handling prevents false consensus when scientific debates exist. Evidence-first generation policies reduce hallucinations by requiring sufficient supporting data before generating responses. Expert-moderated UGC loops maintain quality while preserving authentic language patterns.

Companies implementing these hybrid strategies gain competitive advantages that extend beyond accuracy metrics. Their AI systems cost less to train, consume fewer computational resources, and earn user trust through consistent performance.