A Med-Gemini model once invented a brain structure, “basilar ganglia”, by conflating two real ones: the “basal ganglia” (involved in motor control) and the “basilar artery” (which supplies blood to the brain). It even diagnosed a patient with a non-existent condition: “basilar ganglia infarct”. If missed, this seemingly minor error could mislead a radiologist and lead to dangerous treatment, or to no treatment at all.

When AI supports decision-making in customer service, legal, healthcare, or financial settings, frequent hallucinations can undermine its value and discourage further investment in the technology. In the legal space, for instance, one study found hallucination rates ranging from 69% to 88% on specific legal queries, and that was for state-of-the-art models.

A recent OpenAI study reveals that AI models hallucinate because they guess instead of admitting they don’t know something, much like students guessing on a test. However, unlike students’ errors, AI hallucinations in business can lead to severe consequences, including compliance violations, brand damage, lawsuits, loss of customer trust, or risks to human health.

In 2022, Douglas Hofstadter, an American cognitive scientist, said that “GPT has no idea that it has no idea about what it is saying.” ChatGPT hallucinations are a case of double ignorance, but they can be controlled.

Our in-depth analysis examines what AI hallucinations look like in production, what they mean for the business, and which mitigation strategies help. While eliminating hallucinations entirely may be impossible, rigorous validation across pre-training, training, and post-training can bring AI outputs close to perfect.

Understanding AI hallucinations in enterprise systems

Broadly, AI hallucinations can be divided into two categories: factuality and faithfulness hallucinations. Factuality hallucinations occur when the output differs from verifiable real-world facts, such as claiming that the USA has 52 states instead of 50.

Faithfulness hallucinations occur when the AI model fails to consider the prompt context and deviates from the instructions, such as when an AI assistant, instead of fetching requested data from the CRM, pulls it from an Excel spreadsheet, thereby frustrating the sales team.

The concern is widespread: 47% of employees are worried about the decisions their companies make based on AI outputs. In the Med-Gemini case, clinicians pointed out that human review can’t be relied on to catch every AI error, because validation takes experience and time that some medical workers may lack.

On a more granular level, enterprise teams can encounter the following hallucinations:

AI hallucination examples and types

| Type | Description | Enterprise relevance |
|---|---|---|
| Extrinsic vs. intrinsic hallucination | Extrinsic: output claims facts not present in the input or knowledge base. Intrinsic: output contradicts itself or its internal logic | Particularly dangerous when the model contradicts known policies or data |
| Contextual or domain hallucination | The model misinterprets domain-specific jargon or context and “hallucinates” domain-specific facts (e.g., inventing a regulation name) | High in regulated industries (finance, healthcare, legal) |
| Overconfident misstatements | The model expresses certainty about a statement that is incorrect | Users may not question it and may propagate errors across the enterprise |
| Citation or reference hallucination | The model fabricates references, DOIs, court cases, whitepapers, or internal document identifiers that don’t exist | Misleads audits, research, and compliance |
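Among these types, citation hallucinations are the easiest to catch mechanically, because a fabricated identifier won’t resolve. The sketch below assumes that internal documents use IDs like `DOC-1042` and that a registry of valid IDs is available. `KNOWN_DOCS`, the ID format, and `find_unverified_citations` are all hypothetical.

```python
import re

# Hypothetical registry of document IDs that actually exist in the knowledge base.
KNOWN_DOCS = {"DOC-1042", "DOC-2210", "DOC-3307"}

# Assumed citation format: "DOC-" followed by digits.
CITATION_PATTERN = re.compile(r"\bDOC-\d+\b")


def find_unverified_citations(answer: str, known_docs: set[str]) -> list[str]:
    """Return cited IDs that are missing from the registry (possible fabrications)."""
    cited = CITATION_PATTERN.findall(answer)
    # Drop duplicates while keeping the order in which IDs were cited.
    unique = list(dict.fromkeys(cited))
    return [doc_id for doc_id in unique if doc_id not in known_docs]


answer = "Refunds are covered in DOC-1042 and the escalation policy in DOC-9999."
print(find_unverified_citations(answer, KNOWN_DOCS))  # ['DOC-9999']
```

A flagged ID does not prove the whole answer is wrong. It does mean the answer should not reach an auditor or customer until someone checks it.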

How hallucinations differ from traditional software bugs

AI hallucinations differ from traditional software bugs in three ways:

Origin. Traditional software failures follow predictable patterns. A database query either returns correct results or fails with an error message. AI hallucinations, by contrast, come from probabilistic generation: an LLM estimates the most statistically likely next word in a sentence based on patterns learned from its training data. That’s why an AI system can confidently present incorrect information that looks completely legitimate. The output is statistically plausible, but nothing in the generation process checks whether it is true.

Behavior. Traditional systems work and fail predictably, but an AI model is largely a black box. Data science teams can influence AI models during pre-training, training, and post-training, but how a model arrives at a specific answer at query time remains largely opaque.

Detection. System administrators can debug traditional software using logs, stack traces, and reproducible error conditions. Hallucinations require domain expertise to identify and often slip past technical reviewers who lack subject matter knowledge.

What are the possible business consequences of frequently dealing with AI hallucinations?

Business risks from AI hallucinations

Beyond direct financial losses, AI hallucinations can also lead to regulatory penalties, damage customer relationships, and expose organizations to litigation.

Brand value destruction through hallucination incidents

Market reactions to AI-generated hallucinations demonstrate how quickly fabricated information can destroy enterprise value. Google lost $100 billion in market capitalization within 24 hours after Bard provided incorrect information during a product demonstration.

Customer perception matters more than technical accuracy alone. Users don’t distinguish between “The AI made an error” and “Your company published false information.” They hold the brand accountable for every piece of content delivered through official channels.

Consider the well-known Air Canada incident. The company tried to avoid responsibility for false information its chatbot gave a customer by arguing that the chatbot was a “separate legal entity.” The British Columbia Civil Resolution Tribunal disagreed. It found Air Canada liable for the misinformation and ordered the airline to pay the customer damages.

Recovery from hallucination-driven reputation damage often requires months of remediation efforts, customer communications, and process changes, which can cost significantly more than the original incident.

Compliance exposure in regulated industries

Healthcare and financial services face amplified risks because AI hallucinations can trigger regulatory violations with severe penalties.

For instance, 77% of US healthcare non-profit organizations identify unreliable AI outputs as their biggest obstacle to deployment.

Medical AI hallucinations can lead to incorrect treatment recommendations, diagnostic errors, and patient safety violations.

Financial services companies face similar compliance challenges when AI systems generate incorrect regulatory reports, miscalculate risk exposures, or provide false customer information that violates consumer protection laws and regulations.

The regulatory environment continues to tighten as agencies recognize AI-specific risks and develop enforcement frameworks that hold enterprises accountable for automated decision-making systems.

To address these risks effectively, organizations should treat AI hallucinations seriously and examine the root causes driving unreliable outputs within large language models: from limitations in training data to architectural and operational design choices.

| Risk area | Warning signs | Quick fixes |
|---|---|---|
| Brand trust | Customer complaints about AI errors | Add HITL reviews + disclaimers |
| Compliance | AI generates regulated content | Implement RAG + automated fact-checking |
| Financial/Legal | AI used for contracts/advice | Human validation for all outputs |
| Operational | AI drives workflows (e.g., CRM) | CoT prompting + flagging uncertain outputs |

Root causes behind hallucinations in enterprise LLMs

When deploying enterprise LLMs, organizations need to understand why hallucinations occur to build effective safeguards.

Training data limitations and noise

AI models reproduce and propagate every flaw present in their training data. If you train models on datasets containing biases, errors, inconsistencies, or incomplete information, you’ll see those same problems amplified in production AI outputs.

Static training data creates another business challenge, as models lack up-to-date knowledge after their training cutoff and can produce inaccurate outputs. AI systems show higher reliability and prove more effective when trained on extensive, relevant, and high-quality data.

When asked what exciting things Dell is doing with AI, CEO Michael Dell emphasized the importance of data:

The fun thing about your question is that almost anything interesting and exciting that you want to do in the world revolves around data. If you want to make an autonomous vehicle or advance drug discovery with mRNA vaccines, or you want to create a new kind of company in the financial sector, everything interesting in the world revolves around data. All of the unsolved problems of the world require more compute power and more data, and this is why I love what we do.

To feed custom AI models with high-quality data, enterprises should implement robust data governance frameworks that include regular auditing for biases and continuous quality monitoring throughout the AI lifecycle. It’s better to identify and address data issues before they manifest as model hallucinations.

Additionally, by implementing real-time data integration pipelines, you can keep models current with the most up-to-date information, particularly in specialized or rapidly changing domains.
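The following is a minimal sketch of the checks a routine data audit might run before records reach a model or a retrieval index. It flags missing fields, near-verbatim duplicates, and records older than a freshness window. The record schema (`id`, `text`, `updated_at`) and the 90-day threshold are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical schema for knowledge-base records.
REQUIRED_FIELDS = ("id", "text", "updated_at")


def audit_records(records, now, max_age=timedelta(days=90)):
    """Flag records with missing fields, duplicate content, or stale timestamps."""
    issues = {"missing_fields": [], "duplicates": [], "stale": []}
    seen_texts = set()
    for rec in records:
        if any(rec.get(field) in (None, "") for field in REQUIRED_FIELDS):
            issues["missing_fields"].append(rec.get("id", "<no id>"))
            continue  # skip further checks on incomplete records
        # Normalize case and whitespace so trivial variants count as duplicates.
        normalized = " ".join(rec["text"].lower().split())
        if normalized in seen_texts:
            issues["duplicates"].append(rec["id"])
        else:
            seen_texts.add(normalized)
        if now - rec["updated_at"] > max_age:
            issues["stale"].append(rec["id"])
    return issues


records = [
    {"id": "a", "text": "Plan A covers dental.", "updated_at": datetime(2024, 12, 1)},
    {"id": "b", "text": "plan a  covers dental.", "updated_at": datetime(2024, 12, 5)},
    {"id": "c", "text": "Plan B covers vision.", "updated_at": datetime(2023, 6, 1)},
    {"id": "d", "text": "", "updated_at": datetime(2024, 12, 1)},
]
print(audit_records(records, now=datetime(2025, 1, 1)))
# {'missing_fields': ['d'], 'duplicates': ['b'], 'stale': ['c']}
```

In practice, checks like these would run on a schedule and inside ingestion pipelines, so that stale or duplicated content is caught before it shapes model outputs.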

Stochastic generation and next-token prediction

LLMs are stochastic by nature. They operate with controlled randomness: at each generation step, they select the next token (a word or word fragment) from many possible candidates. That’s their strength and their weakness at the same time. On the one hand, randomness helps them produce creative, uncommon, and personalized responses. On the other hand, it increases the probability of hallucinations. That’s also why the more sophisticated and verbose AI models get, the higher the chances of hallucination: every additional generated token is another opportunity to drift from the facts.
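The toy sketch below contrasts greedy decoding, which always picks the most probable token, with sampling, which draws tokens in proportion to their probability. The three-token distribution is made up. A real model scores tens of thousands of tokens at every step.

```python
import random

# Toy next-token distribution; tokens and probabilities are made up.
next_token_probs = {"ganglia": 0.55, "artery": 0.30, "nuclei": 0.15}


def greedy_pick(probs):
    """Deterministic decoding: always take the most probable token."""
    return max(probs, key=probs.get)


def sample_pick(probs, rng):
    """Stochastic decoding: draw a token in proportion to its probability."""
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


rng = random.Random(0)  # seeded only so this demo is repeatable
greedy_runs = {greedy_pick(next_token_probs) for _ in range(10)}
sampled_runs = [sample_pick(next_token_probs, rng) for _ in range(10)]
print(greedy_runs)   # {'ganglia'}
print(sampled_runs)  # may include less likely tokens, not only 'ganglia'
```

Greedy decoding returns the same token on every run. Sampling can return any of the three, so the same prompt can produce different continuations.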

The best approach here is to stop treating LLM outputs as deterministic software responses. Heeki Park, a Solutions Architect with more than 20 years of experience, suggests first deciding whether a problem is better solved by prompting a model or by writing code:

When considering whether to write code or to prompt a model within agents, let’s first define the problem space as it pertains to hallucinations, then discuss scenarios when one or the other is appropriate. When leveraging models for reasoning and task execution, remember that the output is non-deterministic. Agent developers could certainly lower certain parameters, like temperature, to reduce how stochastic the response is, but it still has some degree of randomness in the response.

In scenarios where your use case requires absolute determinism, i.e., the same exact output every time with mathematical precision, then it’s likely appropriate to write code for the task or tool, as code execution is deterministic. For example, if you have a dataset on which you want to perform statistical analysis, you should write code with standard analytical packages to do that work. That said, you could certainly use an AI assistant to help you write that code.

On the other hand, if you are conducting work that is fuzzier in its output, e.g., summarizing an academic paper, extracting insights from a financial analysis paper, then this is a scenario where models excel and could be a great tool for knowledge extraction.

Thus, depending on the level of determinism your problem requires, choose either a coded solution (possibly written with AI assistance) or a prompting one.
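Park’s statistics example illustrates the deterministic route. The sketch below uses Python’s standard `statistics` module, which computes the same summary for the same data on every run. A sampled model output cannot guarantee that. The order values are hypothetical.

```python
import statistics


def describe(values):
    """Deterministic summary statistics: identical input always yields identical output."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
    }


# Hypothetical monthly order values.
orders = [120.0, 95.5, 130.25, 110.0, 99.75]
first = describe(orders)
second = describe(orders)
assert first == second  # same data, same numbers, every run
print(first["mean"], first["median"])  # 111.1 110.0
```

An AI assistant can still help write code like this. The difference is that once written, the code runs the same way every time.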

Temperature settings and prompt ambiguity

Model configuration, such as setting the temperature, can also affect hallucination frequency. The temperature hyperparameter controls randomness in token selection: lower settings (0.2-0.5) produce more predictable outputs, while higher values (1.2-2.0) increase creativity but simultaneously raise hallucination risks.
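Most sampling implementations apply temperature by dividing the model’s raw scores (logits) by the temperature before a softmax turns them into probabilities. The sketch below uses made-up logits for three candidate tokens. Exact temperature ranges and scaling vary between providers.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw model scores (logits) into probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for three candidate tokens.
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these logits, a temperature of 0.2 gives the top token more than 99% of the probability mass. At 2.0, its share drops below 50%, which leaves far more room for unlikely, and potentially wrong, tokens.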

Ambiguous prompts with unclear terms or missing context also often trigger inconsistent or incorrect responses. The problem compounds when prompts contain negative instructions that introduce “shadow information” and confuse the model. Prompt quality matters more than the temperature setting: even lowering the temperature yields only minor improvements on ambiguous queries.

This presents a dilemma for enterprises: the same creativity settings that make AI outputs engaging also increase the likelihood of producing false information.

It’s essential to strike a balance between temperature settings and prompt details, so as not to overwhelm the model with too much information or deprive it of its creative capabilities.

To achieve this, work with an expert data science team that can thoroughly test and validate the model before deployment and define the parameter values that work for your business and data.

Lack of grounding in external knowledge sources

LLMs manipulate symbols statistically, without a genuine understanding of the physical world. This fundamental limitation produces outputs that appear coherent but may be entirely disconnected from reality. Without external verification mechanisms, models cannot check their generated content against trusted sources.

Knowledge Graph-based Retrofitting (KGR) presents a promising approach, enabling models to ground their responses in external knowledge repositories and reduce factual hallucinations.
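In the KGR approach, the pipeline extracts factual claims from a draft response, checks them against a knowledge graph, and revises the claims that conflict with it. The toy sketch below covers only the verify-and-retrofit step. It assumes the claims have already been extracted as (subject, relation, object) triples. The `KG` contents and the `retrofit` function are hypothetical, and a real system would typically use an LLM for claim extraction and rewriting.

```python
# Hypothetical knowledge graph: (subject, relation) -> verified object.
KG = {
    ("USA", "number_of_states"): "50",
    ("basal ganglia", "function"): "motor control",
}


def retrofit(claims, kg):
    """Verify (subject, relation, object) claims against the KG and fix conflicts.

    Returns the revised claims and a log of corrections and unverifiable claims.
    """
    revised, log = [], []
    for subject, relation, obj in claims:
        known = kg.get((subject, relation))
        if known is None:
            # Not in the KG: the claim can't be confirmed, so flag it for review.
            log.append(f"unverified: {subject} {relation}")
            revised.append((subject, relation, obj))
        elif known != obj:
            # Conflicts with the KG: replace the object with the verified value.
            log.append(f"fixed: {subject} {relation} {obj!r} -> {known!r}")
            revised.append((subject, relation, known))
        else:
            revised.append((subject, relation, obj))
    return revised, log


draft_claims = [
    ("USA", "number_of_states", "52"),
    ("basal ganglia", "function", "motor control"),
    ("basilar ganglia", "function", "motor control"),
]
revised, log = retrofit(draft_claims, KG)
print(log)
# ["fixed: USA number_of_states '52' -> '50'", 'unverified: basilar ganglia function']
```

Claims the graph can’t confirm, such as the invented “basilar ganglia”, are flagged instead of being silently accepted. At that point, a human reviewer or a retrieval step would take over.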

Mitigation strategies for reducing generative AI hallucinations

AI hallucinations may not be fully avoidable, but they are manageable. With the right safeguards, enterprises can reduce errors by 70% or more. Here are the most effective approaches.

Retrieval-Augmented Generation (RAG) integration

Besides KGR, retrieval-augmented generation (RAG) gives LLMs real-time access to verified knowledge sources, such as internal or external documentation.

RAG implementation involves connecting AI systems to enterprise knowledge bases, product catalogs, or regulatory databases. When a query arrives, the RAG system retrieves relevant documents first, then uses that context to generate responses. There are three distinct types of RAG-based LLM architectures: Vanilla RAG, GraphRAG, and Agentic RAG.
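Below is a minimal sketch of the retrieve-then-generate flow, assuming a small in-memory document set. Word overlap stands in for the embedding similarity a vector database would compute. The function builds the grounded prompt rather than calling any particular LLM API. Document IDs and contents are hypothetical.

```python
import re

# Hypothetical knowledge base; in production this would live in a vector database.
DOCUMENTS = {
    "plan-benefits": "The Gold insurance plan covers dental, vision, and annual checkups.",
    "claims-process": "Claims must be submitted within 30 days through the member portal.",
    "office-hours": "Customer support is available Monday to Friday, 9am to 5pm.",
}
STOPWORDS = {"a", "an", "the", "is", "are", "what", "does", "do", "to", "of", "and", "for", "our"}


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (a stand-in for vector similarity)."""
    q = tokenize(query)
    scored = sorted(documents.items(), key=lambda item: len(q & tokenize(item[1])), reverse=True)
    # Keep at most k documents, and only those that share at least one word with the query.
    return [(doc_id, text) for doc_id, text in scored[:k] if q & tokenize(text)]


def build_grounded_prompt(query, documents):
    """Retrieve first, then instruct the model to answer only from that context."""
    hits = retrieve(query, documents)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using ONLY the sources below and cite their IDs. "
        "If the answer is not in the sources, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


print(build_grounded_prompt("What does the Gold plan cover?", DOCUMENTS))
```

The key design choice is the instruction to answer only from the retrieved sources and to admit when the answer isn’t there. This counters the guessing behavior that drives many hallucinations.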

Vanilla RAG is effective for simple queries (e.g., “What are the key benefits of our insurance plan?”) with datasets stored in vector databases for simplified retrieval. However, this approach isn’t capable of differentiating between data types, such as sensitive, regulatory, or customer data.

GraphRAG connects disparate data in a unified graph, with clear relationships between datasets, to enable more complex queries, such as multi-hop reasoning queries (e.g., “Which suppliers are linked to vendors involved in delayed shipments last quarter?”).
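The multi-hop query above can be illustrated with a small in-memory graph. This sketch shows only the traversal step. In a GraphRAG pipeline, the retrieved entities and relationships would then be passed to the LLM as context. The entities, relations, and delay data are hypothetical.

```python
from collections import defaultdict

# Hypothetical graph edges: (source, relation, target).
EDGES = [
    ("Supplier A", "supplies", "Vendor X"),
    ("Supplier B", "supplies", "Vendor Y"),
    ("Supplier C", "supplies", "Vendor Z"),
    ("Vendor X", "shipped", "Shipment 101"),
    ("Vendor Y", "shipped", "Shipment 102"),
    ("Vendor Z", "shipped", "Shipment 103"),
]
# Hypothetical set of shipments delayed last quarter.
DELAYED_LAST_QUARTER = {"Shipment 101", "Shipment 103"}


def build_incoming_index(edges):
    """Index edges by (relation, target) so the graph can be walked backwards."""
    incoming = defaultdict(set)
    for src, rel, dst in edges:
        incoming[(rel, dst)].add(src)
    return incoming


def suppliers_linked_to_delays(edges, delayed):
    incoming = build_incoming_index(edges)
    # Hop 1: delayed shipment -> vendor that shipped it.
    vendors = {v for s in delayed for v in incoming[("shipped", s)]}
    # Hop 2: vendor -> supplier that supplies it.
    return sorted({sup for v in vendors for sup in incoming[("supplies", v)]})


print(suppliers_linked_to_delays(EDGES, DELAYED_LAST_QUARTER))  # ['Supplier A', 'Supplier C']
```

A vector search over isolated text chunks would struggle with this question, because no single chunk links suppliers directly to delayed shipments.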

Agentic RAG is a multi-agent LLM architecture in which each agent is responsible for a particular set of data, such as regulations, marketing, or customer support. Each agent can therefore give more precise responses to specialized queries (e.g., “Does our latest marketing email comply with GDPR guidelines?”). These systems scale well: as queries become more difficult and domain-specific, an organization can add more agents.
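The following is a simplified sketch of the routing layer in an agentic setup, assuming keyword matching decides which domain agent handles a query. The agent functions are hypothetical placeholders for agents with their own retrievers. Production systems often let an LLM choose the agent and may consult several agents for a single query.

```python
import re


# Hypothetical domain agents; each would wrap its own retriever and knowledge base.
def compliance_agent(query: str) -> str:
    return f"[compliance] searching regulatory sources for: {query}"


def marketing_agent(query: str) -> str:
    return f"[marketing] searching campaign assets for: {query}"


def support_agent(query: str) -> str:
    return f"[support] searching help-center articles for: {query}"


# Checked in order, so compliance questions win even if they mention marketing terms.
ROUTES = [
    ({"gdpr", "regulation", "regulatory", "comply", "compliance"}, compliance_agent),
    ({"campaign", "email", "newsletter", "brand"}, marketing_agent),
]


def route(query: str) -> str:
    """Send the query to the first agent whose keywords appear in it."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    for keywords, agent in ROUTES:
        if words & keywords:
            return agent(query)
    return support_agent(query)  # default agent


print(route("Does our latest marketing email comply with GDPR guidelines?"))
```

Adding a new domain means adding one agent and one route, which is the scalability property described above.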

Depending on the complexity of your use cases, data quality, and budget constraints, Xenoss can help you select the most efficient RAG approach.

Chain-of-thought prompting

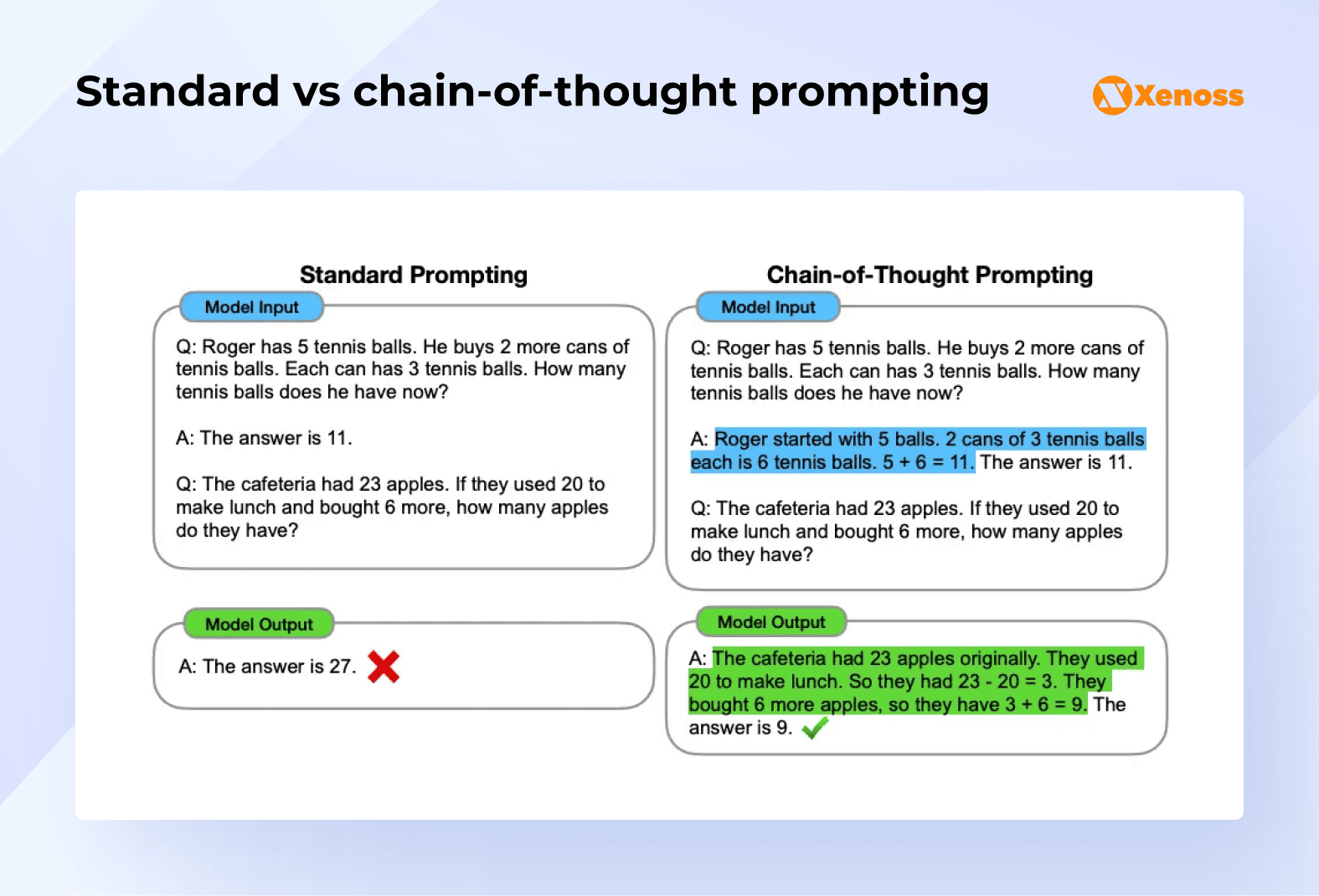

Step-by-step reasoning processes help models break complex problems into verifiable components and produce more accurate outputs. One example is chain-of-thought (CoT) prompting, which guides AI systems through logical sequences, making reasoning transparent and reducing errors in multi-step calculations. Below is an example of CoT with a simple math task.

For instance, in financial analysis or legal research applications, CoT prompting requires models to show their work step by step: “First, I’ll identify the relevant regulation. Second, I’ll analyze how it applies to this scenario. Third, I’ll determine the compliance requirements.” This approach helps models stay focused on the user’s instructions.
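Here is a sketch of how the three-step structure above might be turned into a reusable prompt template. The final answer goes on a marked line so it can be validated separately from the reasoning. The template wording and the `ANSWER:` convention are assumptions, not a standard.

```python
STEPS = (
    "Identify the relevant regulation.",
    "Analyze how it applies to this scenario.",
    "Determine the compliance requirements.",
)


def build_cot_prompt(question, steps=STEPS):
    """Ask the model to reason through explicit steps before giving a final answer."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Question: {question}\n\n"
        "Work through the following steps one at a time, showing your reasoning for each:\n"
        f"{numbered}\n\n"
        "Then give your conclusion on a final line starting with 'ANSWER:'."
    )


def extract_answer(model_output):
    """Pull out the final answer line so it can be checked independently of the reasoning."""
    for line in reversed(model_output.splitlines()):
        if line.startswith("ANSWER:"):
            return line[len("ANSWER:"):].strip()
    return None  # no final answer: treat the response as failed and flag it


print(build_cot_prompt("Does this contract clause require customer consent?"))
```

Separating the answer from the reasoning makes it easier to route only the conclusion to automated checks or human reviewers.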

However, even with CoT, organizations should validate outputs, particularly for high-stakes decisions.

Context engineering

Context engineering is an emerging discipline that extends beyond simple prompt engineering. As Andrej Karpathy notes, it’s the “art and science of filling the context window with just the right information for the next step.”

In practice, context engineering means curating every piece of data the model sees, from task instructions and few-shot examples to retrieved documents, historical state, and tool outputs.

For example, a clinician might prompt: “Summarize the patient’s record in under 100 words”, and include a few correctly formatted summaries for the model to imitate in style and structure. The clinician can also attach previous human-written summaries as historical records, so the model can build on the patient’s history and produce an up-to-date summary.
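Below is a minimal sketch of context assembly. It assumes each piece of context (task instructions, the patient record, example summaries, earlier summaries) has a priority and that the context window has a fixed budget. Word count is a crude stand-in for a real tokenizer, and the section labels and placeholder texts are hypothetical.

```python
def assemble_context(sections, max_words):
    """Add context sections in priority order until the word budget runs out.

    `sections` is a list of (priority, label, text); lower numbers matter more.
    Word count is a rough stand-in for a real tokenizer.
    """
    parts, used, dropped = [], 0, []
    for _, label, text in sorted(sections, key=lambda s: s[0]):
        cost = len(text.split())
        if used + cost > max_words:
            dropped.append(label)  # too big for the remaining budget
            continue
        parts.append(f"{label}:\n{text}")
        used += cost
    return "\n\n".join(parts), dropped


sections = [
    (0, "Task", "Summarize the patient record in under 100 words."),
    (1, "Patient record", "<full record text>"),
    (2, "Example summaries", "<two clinician-approved summaries>"),
    (3, "Previous summaries", "<summaries from earlier visits>"),
]
context, dropped = assemble_context(sections, max_words=2000)
```

Lower-priority material, such as older summaries, is dropped first. This ensures the task and the source record always make it into the window.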

By ensuring the model operates within a precisely framed, verified, and relevant context, organizations can drastically reduce hallucinations caused by missing, outdated, or noisy information.

Unlike generic prompting, which often leaves the model guessing, well-designed context engineering provides AI systems with the right evidence at the right time, thereby improving factual accuracy, model stability, and overall trustworthiness.

However, keep in mind that context engineering comes with its own challenges, as Heeki Park explains:

When building agentic applications, context is important for ensuring that agents have the ability to provide responses that are personalized and targeted. However, context engineering is emerging as an important skill to ensure that the agent has just the right amount of context.

There are issues that can arise with context, even in the presence of a memory system, e.g., context poisoning (a hallucination or other error makes it into the context), context distraction (context gets too long), context confusion (superfluous or irrelevant content is used), context clash (information or tools conflict). Memory doesn’t solve those context issues. Context engineering needs to be applied to prune and validate that the appropriate context is maintained for the lifecycle of a session or user interaction.

To avoid these issues and prevent hallucinations, both context and prompts should be thoroughly checked and evaluated.
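As a sketch of such checks, the function below prunes context items before they reach the model. It assumes each item carries a hypothetical `verified` flag (false for, say, earlier model output) and a `key` naming the fact it describes. It addresses three of the failure modes Park lists: poisoning, confusion, and clash.

```python
from collections import defaultdict


def validate_context(items, relevant_keys):
    """Prune context items before they reach the model.

    Each item is a dict with hypothetical fields: key, value, verified.
    """
    kept = [
        item for item in items
        # Poisoning: drop unverified items (e.g., earlier model output).
        # Confusion: drop items unrelated to the current task.
        if item["verified"] and item["key"] in relevant_keys
    ]
    # Clash: find keys that still carry more than one distinct value.
    values = defaultdict(set)
    for item in kept:
        values[item["key"]].add(item["value"])
    clashes = sorted(key for key, vals in values.items() if len(vals) > 1)
    return kept, clashes


items = [
    {"key": "refund_window", "value": "30 days", "verified": True},
    {"key": "refund_window", "value": "60 days", "verified": False},
    {"key": "shipping_fee", "value": "$5", "verified": True},
    {"key": "shipping_fee", "value": "$7", "verified": True},
    {"key": "office_party", "value": "Friday", "verified": True},
]
kept, clashes = validate_context(items, {"refund_window", "shipping_fee"})
print(len(kept), clashes)  # 3 ['shipping_fee']
```

Clashes are reported rather than resolved automatically, because deciding which value is correct usually requires a source of truth or a human.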

Human-in-the-loop review workflows

Human-in-the-loop (HITL) validation focuses on factual accuracy, contextual appropriateness, and potential bias issues before AI outputs reach end-users. HITL involves:

- Automated flagging of inappropriate or incorrect outputs

- A basic human review for edge cases and to catch errors that automated systems may miss

- Validation from subject matter experts (SMEs) for domain-specific queries

Financial institutions often require compliance officers to sift through AI-generated outputs, while healthcare organizations require clinical staff to approve AI-assisted diagnoses. The key is matching reviewer expertise to the domain where AI operates.

Combining all three HITL approaches provides the most comprehensive evaluation of AI outputs. You can set custom rules for when each pattern is triggered (e.g., automated flagging for factual inaccuracies, SME validation for business-critical decisions, and basic human review for ambiguous queries that require human resolution).
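Such rules can be expressed as a small routing function. In this sketch, the signal names and tier labels are hypothetical. The checks run from most to least stringent, so a business-critical output always reaches an SME even if it also failed a fact check.

```python
from dataclasses import dataclass


@dataclass
class ReviewSignals:
    """Hypothetical signals attached to each AI output by upstream checks."""
    failed_fact_check: bool
    business_critical: bool
    ambiguous_query: bool


def review_tier(signals: ReviewSignals) -> str:
    """Map an output to a review path, applying the most stringent rule first."""
    if signals.business_critical:
        return "sme_validation"
    if signals.failed_fact_check:
        return "flag_and_hold"  # automated flag; held until someone checks it
    if signals.ambiguous_query:
        return "human_review"
    return "auto_release"


print(review_tier(ReviewSignals(failed_fact_check=True, business_critical=True, ambiguous_query=False)))
# sme_validation
```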

Monitoring hallucinations with feedback loops

Continuous monitoring analyzes production conversations, comparing AI responses against known facts and flagging suspicious outputs for review and further investigation.

These feedback loops create learning opportunities: when reviewers correct AI mistakes, those corrections can be fed back into the system to improve its future performance.
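Here is a minimal sketch of such a loop, assuming verified answers are stored as simple question-to-answer pairs. Responses that contradict a known fact are flagged, and each reviewer correction becomes both a new known fact and a regression test case. Exact string matching is a deliberate simplification, since real systems typically compare claims semantically. The class and method names are hypothetical.

```python
class HallucinationMonitor:
    """Track flagged answers and turn reviewer corrections into regression tests."""

    def __init__(self, known_facts):
        self.known_facts = dict(known_facts)  # question -> verified answer
        self.total = 0
        self.flagged = 0
        self.regression_cases = []

    def check(self, question, answer):
        """Flag an answer that contradicts a known fact; return True if flagged."""
        self.total += 1
        expected = self.known_facts.get(question)
        if expected is not None and answer.strip().lower() != expected.lower():
            self.flagged += 1
            return True
        return False

    def record_correction(self, question, corrected_answer):
        """A reviewer's fix becomes a known fact and a future test case."""
        self.known_facts[question] = corrected_answer
        self.regression_cases.append((question, corrected_answer))

    def flag_rate(self):
        return self.flagged / self.total if self.total else 0.0


monitor = HallucinationMonitor({"How many US states are there?": "50"})
monitor.check("How many US states are there?", "52")     # True: contradicts a known fact
monitor.check("What is the refund window?", "45 days")  # False: nothing to compare against yet
monitor.record_correction("What is the refund window?", "30 days")
monitor.check("What is the refund window?", "45 days")  # True: now caught
print(round(monitor.flag_rate(), 2))  # 0.67
```

The regression cases can be replayed against new model versions or prompts, so a mistake corrected once stays corrected.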

All of these mitigation strategies are theoretically sound, but how do different companies apply them in practice to increase AI reliability?

Real-world success patterns

In successful AI deployments, business value far outweighs the cost of occasional errors or hallucinations. The following companies have developed methods to keep hallucination rates low enough that they don’t undermine the value of AI.

Truist Bank’s approach: Building trust in AI through human validation

Financial institutions process millions of transactions daily under strict regulatory requirements. To implement AI effectively without risking brand damage, they need rigorous safeguards in place.

Chandra Kapireddy, head of generative AI and analytics at Truist Bank, has shared his thoughts on how to keep AI hallucinations in check. In particular, the bank places a strong emphasis on human oversight for high-stakes decisions.

…whenever we build a GenAI solution, we have to ensure its reliability. We have to ensure there is a human in the loop who is absolutely [checking the] outputs, especially when it’s actually making decisions. We are not there yet. If you look at the financial services industry, I don’t think there is any use case that is actually customer-facing, affecting the decisions that we would make without a human in the loop.

Truist Bank has established rules governing how employees use AI, a company-wide AI policy, and a training program that helps AI users write accurate prompts and understand how to verify outputs.

The company holds its employees accountable if they make decisions based on AI without first verifying its output. When everyone in the company is on the same page and understands the consequences of misuse, it’s easier to control AI and prevent financial or reputational damage.

How Johns Hopkins improves AI reliability in critical care decision support

With the increasing volume of medical data and the need for rapid diagnosis and treatment, medical organizations see considerable promise in AI. But hallucinations can pose a risk for the healthcare setting and harm patients.

To avoid such scenarios, researchers at Johns Hopkins Medicine are exploring ways to use AI effectively in healthcare. For instance, to predict delirium in intensive care unit (ICU) patients, a pressing clinical issue, they developed two models: a static model and a dynamic model.

The static model makes predictions based on data collected when the patient is admitted to the hospital, while the dynamic model works with real-time patient data. The static model achieved 75% accuracy, while the dynamic model reached 90%. This demonstrates the value of feeding models real-time internal data to increase their reliability and accuracy.

Before launching models into production, the team thoroughly tested and validated their outputs across different datasets.

These implementations demonstrate that hallucination risks can be managed through systematic validation, human oversight, and feedback mechanisms that continuously improve system reliability.

Bottom line

AI hallucinations present enterprise leaders with a clear choice: address the risks proactively or discover them through costly business disruptions.

By addressing the root causes of hallucination with high-quality data ingestion, the right level of determinism, and optimal temperature settings, enterprises can lay the groundwork for near-perfect AI systems. RAG, prompt and context engineering, HITL, and continuous monitoring are effective strategies for reducing AI hallucinations in production and resolving issues after deployment.

When applied together, all of the above practices create a reliable AI lifecycle. Over time, organizations move from reactive error correction to proactive quality assurance, ensuring AI systems remain trustworthy as they scale.