“We have no moat, and neither does OpenAI”.

This was the title of a leaked post shared internally by a Google employee. The full essay is worth a read, but it sums up as “No one is winning the race for a better model”. For Google, the outlook itself is too pessimistic (many machine learning experts argue that “the big G” does have a powerful moat).

But, two years later, the bottom line holds.

Almost every month, a new powerful model or capability hits the market. At the time of writing, the Internet is discussing GPT Agent and GLM 4.5 – a Chinese model released by Z.ai that reportedly was cheaper to train than DeepSeek. Just a few weeks ago, the news was all about Grok 4 and Kimi K2. A little before that, the AI community was praising Gemini 2.5 as “the best large-language model”.

One can’t help but wonder – now that good models flood the market, does superior benchmark performance matter?

And, if it no longer does, what should teams building AI projects focus on to have a competitive edge?

In this article, we will look at things that used to be a competitive advantage but no longer make a tangible difference, as well as the real moats that engineering and product teams should zero in on to keep winning in a cutthroat market.

AI project types: wrappers, open-source, vertical, and foundational models

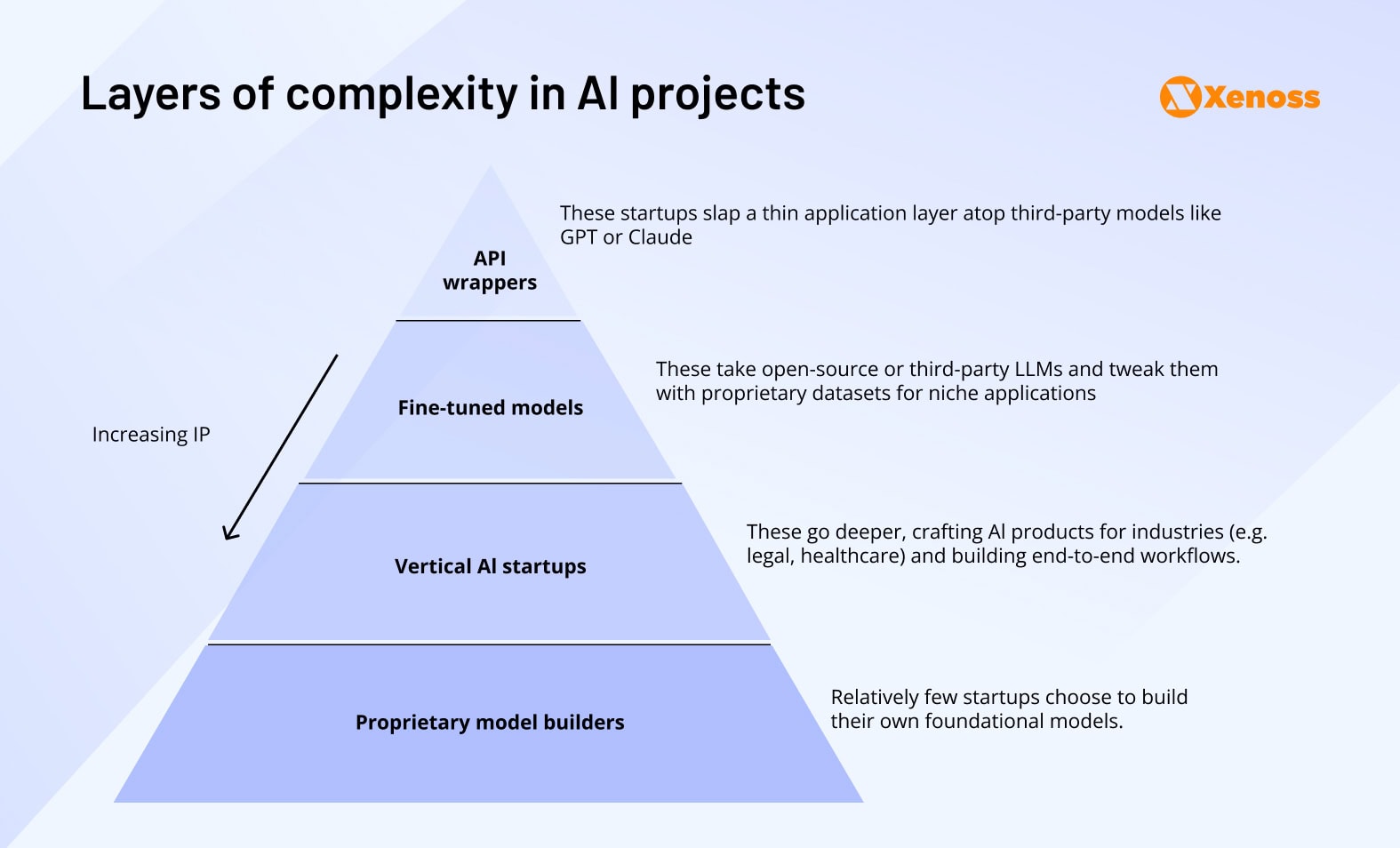

Before exploring what is and is not a moat for an AI project in 2025, it’s important to remember that “AI project” is an umbrella term covering four key types of solutions.

Wrappers are the most superficial level, as teams behind them don’t host the model or own the data, only the interface they built over it.

The growth of the open-source LLM space allowed teams to have more control over the model without having to train one from scratch. Mistral, Llama, and, most recently, DeepSeek empower hundreds of internal projects trained on company-specific data to assist employees, improve marketing and sales campaigns, or make winning decisions based on up-to-date BI.

The last two layers, vertical projects and foundational models, allow for much more involvement in both building the dataset and training the model. Claude Code is a poster case for a successful vertical AI use case (although it is built on a foundation model). The number of smaller teams building general-purpose foundation models like GPT or Gemini is also going up as training costs go down (the teams behind DeepSeek and Kimi K2 showed that the barrier to entry for general-purpose LLMs is no longer in the hundreds of millions of dollars).

Now, let’s examine how each of these applications stacks up against the modern AI market.

Wrappers: If your moat is an API call, then you have no moat

After the release of ChatGPT, many founders jumped at the opportunity to use OpenAI’s API, embedded into a custom interface, to create chatbots for all possible purposes – productivity, learning, financial management, you name it.

First-movers in this space, like Jasper.ai with a “ChatGPT for marketing”, gained massive traction. But, after the company raised $125 million in Series A in 2022, its growth slowed down (Crunchbase now rates it at 72 out of 100, with a -19 pts loss in the last quarter).

Three reasons make the defensibility of LLM wrappers practically non-existent.

Having to compete with the original. The first hurdle companies need to clear is the question “Why would anyone use the wrapper if they already pay for ChatGPT Plus?” In the early days of LLMs, offering better ways to manage prompts or integrate them into the client’s other tools would have solved this problem. However, as GPT, Claude, Gemini, Grok, and others become better products, they no longer require a separate interface.

Low barrier to entry. The only hope thin wrappers had for survival was to “raise fast and grab land” by capturing a share of the market before competitors did. These businesses have no proprietary IP, unique data, or specialized user loops to set them apart, so being copied is just a matter of time.

If you’ve proven a product-market fit for a new ‘ChatGPT for X,’ you may discover that you’ve essentially conducted free market research for a large incumbent company in that area, such as Salesforce, which can easily add a clone of your product to their portfolio.

No control over mission-critical infrastructure. There isn’t much fine-tuning a “wrapper” can put on top of its baseline technology. Going for open-source LLMs like Mistral, DeepSeek, and Llama gives teams more control, but without PhD-caliber talent, effectively fine-tuning these models is still difficult. The lock-in to the baseline model vendor slows down the ability to iterate and innovate, and gives competitors who have built proprietary models the upper hand.

The cases of Jasper.ai, Character.ai, and a few other successful wrappers are inspiring, but they are the exception to the rule: in the long run, “thin wrappers” lose to incumbents with broader user bases, companies with custom models trained on domain-specific proprietary datasets, or even the creators of the very models teams are embedding.

Over the years of helping build resilient and competitive machine learning projects, Xenoss engineers see a common trend: successful companies often start with plug-and-play models but bring development and training in-house as soon as they reach product-market fit.

A proprietary model is a moat, then? Well, not really

Models are by far the most important component in the ML pipeline, but they carry little to no competitive advantage.

Here is why.

Models are not difficult to replicate. In 2022, Stability and Runway both worked on Stable Diffusion. Stability beat Runway to the punch and shipped their model faster, but Runway released the next version of SD before Stability did. Both companies had legal drama to deal with in the aftermath, but they taught the industry a valuable lesson: you never fully “own” your models. Competitors are watching, taking notes, and building better algorithms.

The talent working on your “state-of-the-art” model can leave and compete against you. The modern AI ecosystem is practically shaped by OpenAI alumni. Dario Amodei founded Anthropic to build “safe AI” that he claims not to have seen at his previous company. OpenAI’s ex-CTO Mira Murati founded Thinking Machines Lab, which now stands at a $12-billion valuation. OpenAI’s former Chief Scientist, Ilya Sutskever, also raised billions for his superintelligence lab, Safe Superintelligence, Inc. The war for AI talent is raging, so the risk of your knowledge holders being poached by a competitor with deeper pockets should always be part of the equation.

Teams use “distillation” to train better and cheaper models based on leading frontier models. OpenAI’s Terms of Service prohibit using GPT outputs to train competing models, which is exactly what distilling from GPT involves. In practice, DeepSeek reportedly did just that, ended up with a successful reasoning model built at a fraction of GPT’s training costs, and faced few legal repercussions.

The bottom line is: You can’t keep a model under wraps for long.

There comes a point when the industry figures out the tech under the hood, and what used to be a competitive advantage becomes a commodity.

Data is the deepest moat

The idea that diverse and large datasets in and of themselves are a deep enough moat to carry AI startups is becoming a truism, but it wasn’t always obvious.

In 2019, Andreessen Horowitz analysts published an article on “The empty promise of data moats”, where they suggested that the benefits we attribute to data are simply the benefits of scale.

They even claimed that, over time, the cost of adding new data to the dataset increases, but the gains are no longer game-changing.

Instead of getting stronger, the data moat erodes as the corpus grows.

The Empty Promise of Data Moats (Andreessen Horowitz)

This view made sense in the pre-LLM world, but for generative AI and emerging technologies like agentic systems, it quickly falls apart.

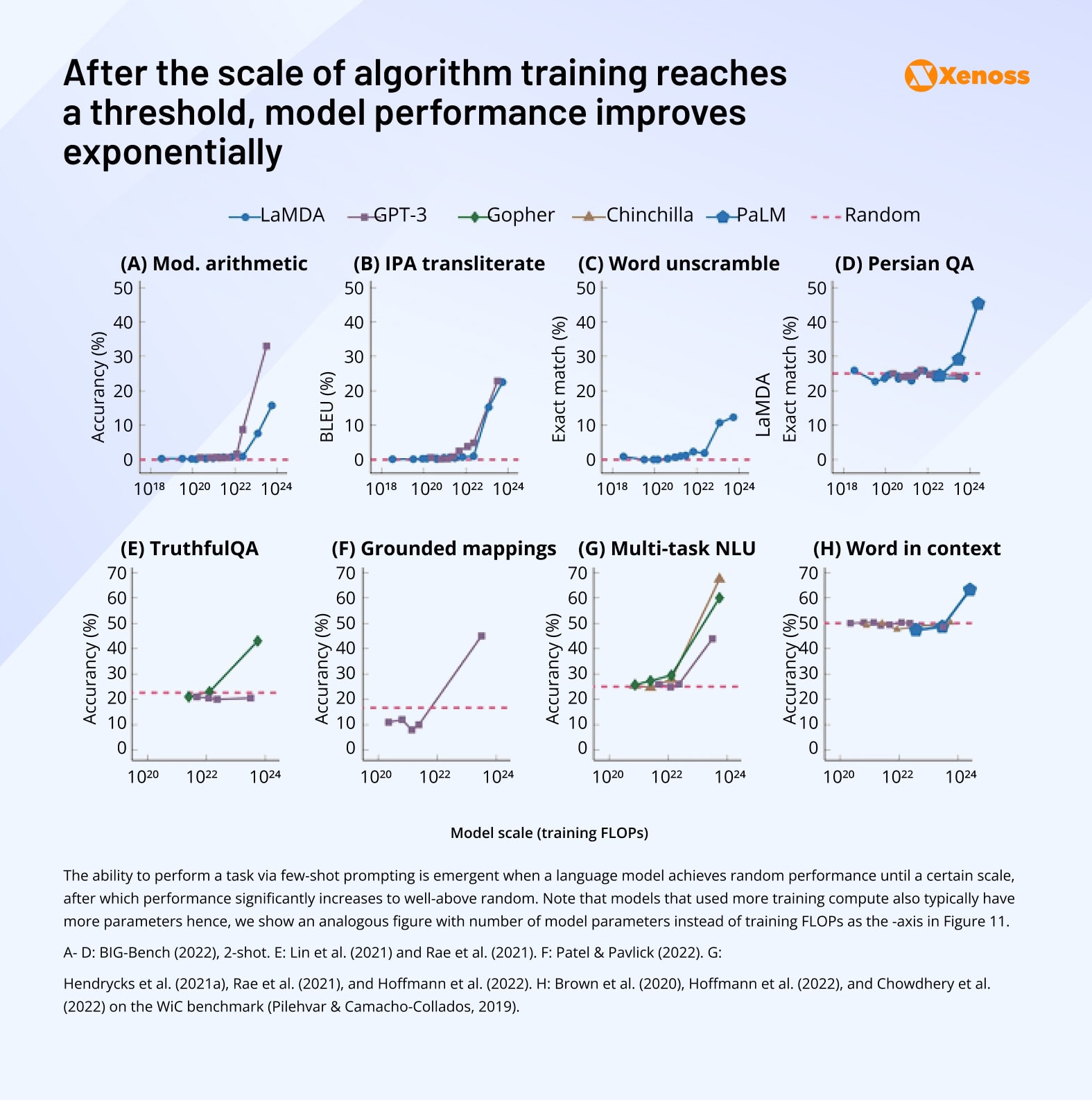

There is plenty of credible research showing that, once LLM datasets are past a certain volume threshold, the reasoning and problem-solving abilities of downstream models improve dramatically.

Researchers study this sudden performance leap under the name “Emergent Abilities of Large Language Models”. The industry has long known about the virtuous cycle that forms when users bring new data for the algorithm to train on, the model improves, and the improved model brings in new users (who, in turn, share new data). We call it a “data flywheel”.

Users create data, data brings in new users: How the data flywheel helps models improve

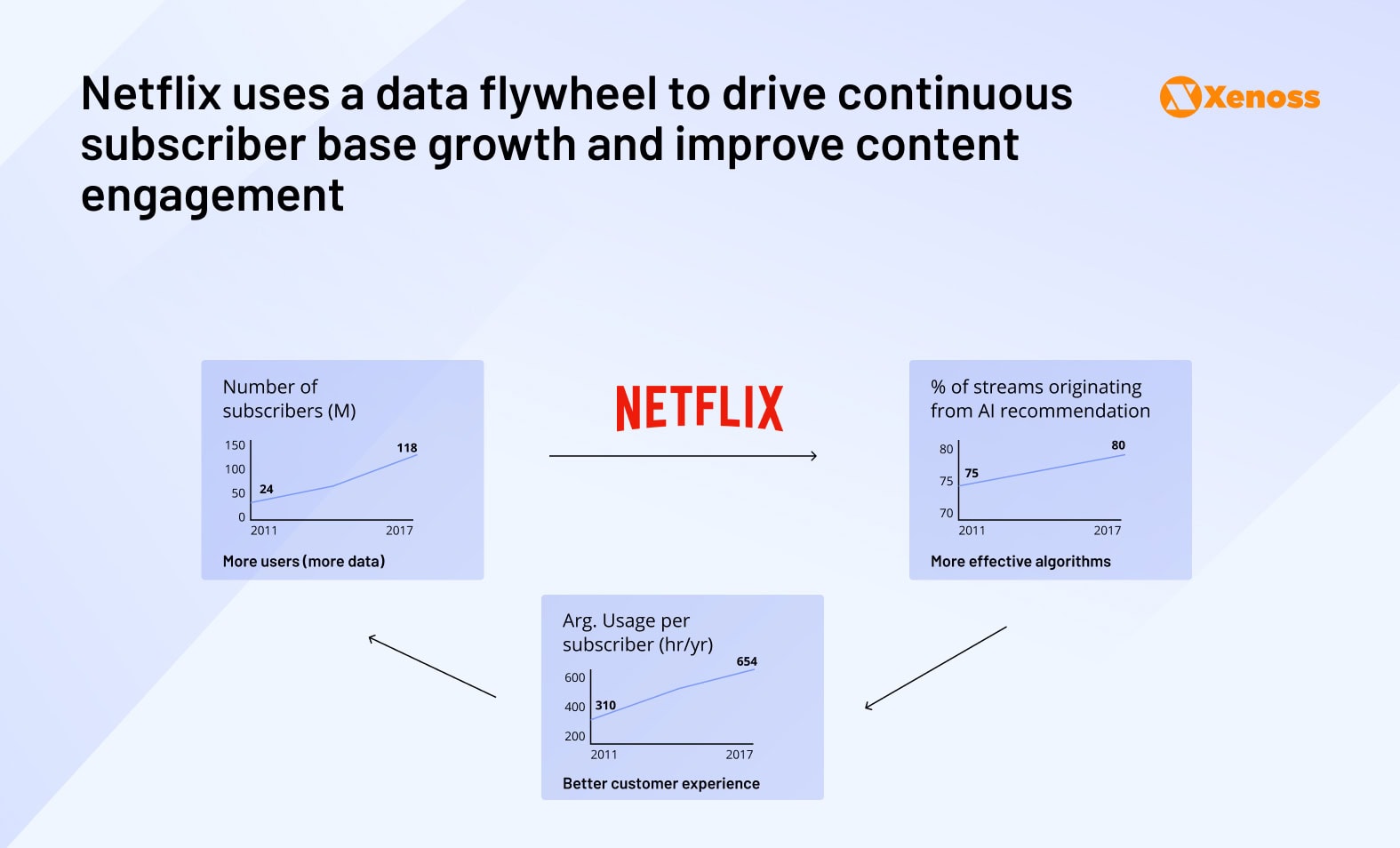

The benefits of a data flywheel are most obvious in recommendation systems. Netflix tracks a user’s on-site behavior – clicks, number of hours spent watching specific shows, ratings, previews, etc. – and feeds this data to the model to keep personalizing recommendations and accurately match a viewer’s tastes.

One look at the streaming company’s rate of growth shows how effective the “data flywheel” is in driving paid user acquisition and engagement.

Outside of recommendation engines, data flywheels propel the most powerful AI projects.

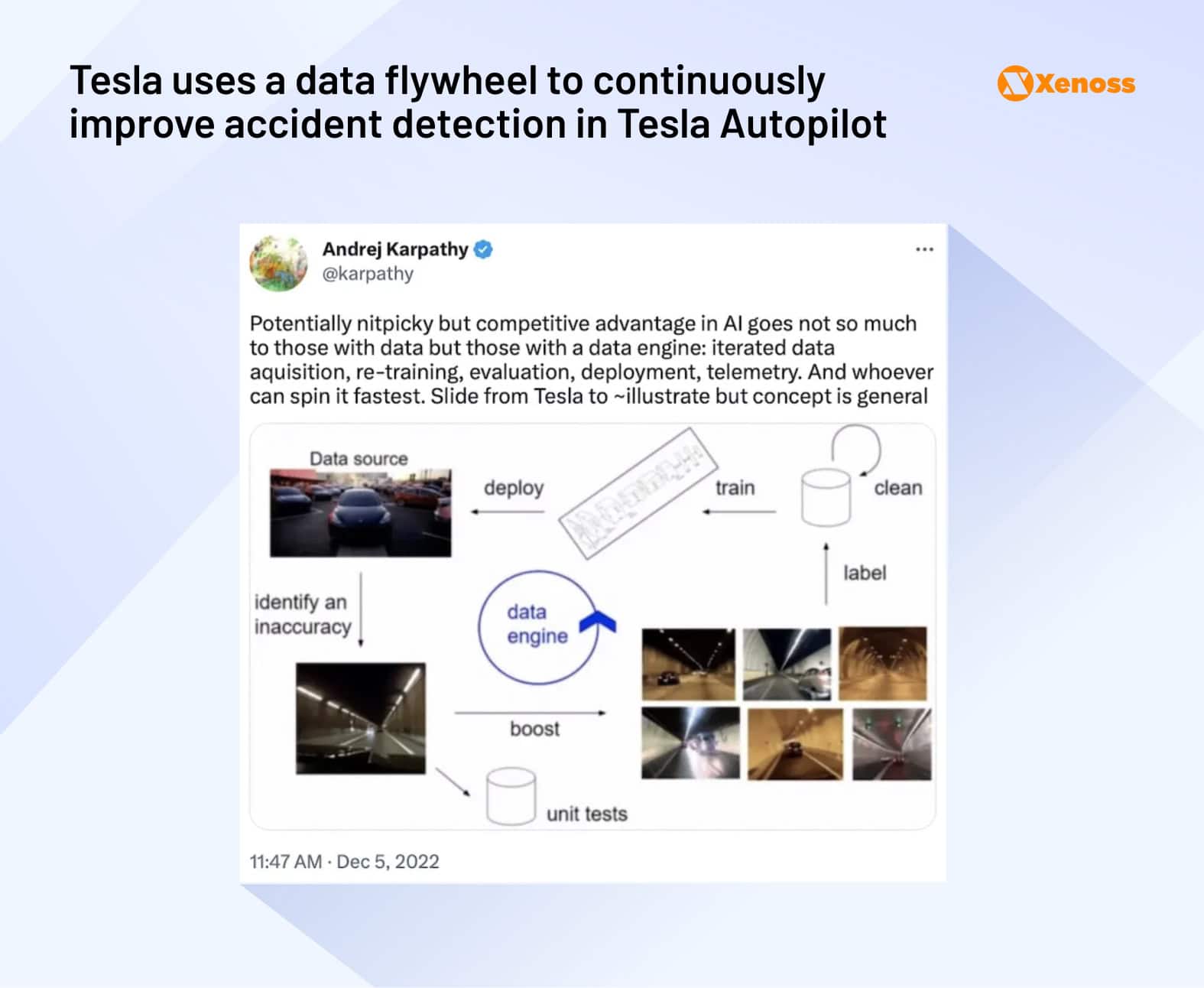

Andrej Karpathy shared how his team at Tesla got the “data flywheel” (he called it a “data engine”) spinning in Tesla Autopilot’s AI.

The Tesla data engineering team collected images and videos from vehicles, labeled and processed this data, and applied it to retrain models. Improved models were safer and more reliable than their predecessors, improving Tesla’s brand reputation and driving new buyers, who were also valuable data providers.

So, as important as it is to have an initial dataset that’s large and diverse enough to cover user needs and enable accurate model performance, the key is to build a positive feedback loop where “data begets data” and the model keeps improving continuously.
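The “data begets data” loop can be illustrated with a toy simulation. This is only a sketch under made-up assumptions: the function name `simulate_flywheel`, the saturating quality curve, and every constant are hypothetical and are not taken from Netflix, Tesla, or any other real system.

```python
def simulate_flywheel(users=1_000.0, data=0.0, rounds=5,
                      samples_per_user=10.0, growth_sensitivity=0.5,
                      half_quality_data=100_000.0):
    """Toy model of a data flywheel. All parameters are illustrative assumptions."""
    history = []
    for _ in range(rounds):
        # 1. Current users contribute new training samples.
        data += users * samples_per_user
        # 2. Model quality rises with data but saturates (stays below 1.0).
        quality = data / (data + half_quality_data)
        # 3. A better model attracts more users, who feed the next round.
        users *= 1.0 + growth_sensitivity * quality
        history.append({"users": users, "data": data, "quality": quality})
    return history


if __name__ == "__main__":
    for step, state in enumerate(simulate_flywheel(), start=1):
        print(step, round(state["users"]), round(state["data"]),
              round(state["quality"], 3))
```

In this toy model, each round's user growth is larger than the previous one because quality keeps climbing, which is the compounding effect the flywheel metaphor describes. Setting `users` close to zero shows the other side of the loop: with almost no contributors, quality barely moves.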

Getting the model to a performance level that encourages users to share data with it is a difficult engineering problem.

Simple models most teams use as PoC can make mistakes that a human would simply consider stupid: confusing the order of words in a sentence, failing to recognize simple object characteristics like shape and color, or producing poor predictions riddled with heuristics.

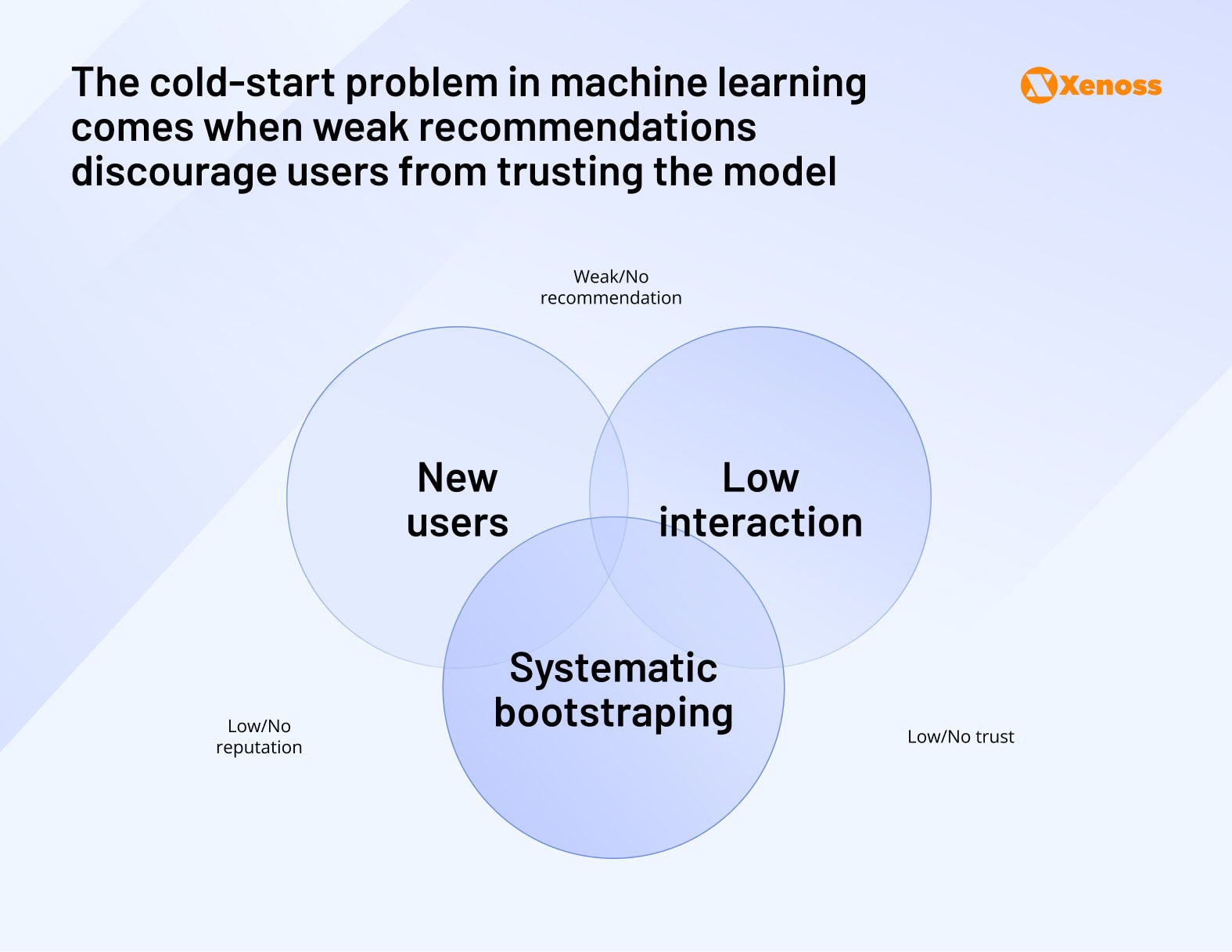

If these algorithms had more user data to train on, they would improve, but pilot projects are yet to develop the required authority. Hence, teams are stuck with a “cold start problem” – the need to get enough data to get the model to acceptable intelligence despite lacking a broad enough user base.

We wrote an article on tackling this challenge on many levels – at the level of a business model, product development, and engineering.

The tips we share help teams remove the data bottleneck and speed up their progress towards the data flywheel.

Data poisoning attacks: When data flywheels work against AI models

An important caveat to data flywheels is that they can be used against AI projects. Just as a model improves when trained on high-quality data, it can also regress after being trained on a low-quality dataset. This type of attack, data poisoning, has become a weapon of choice in artist communities to deter projects like Suno.ai or Midjourney, trained on copyrighted work.

Tools like Nightshade create a “reverse data flywheel” by feeding the model a slightly tweaked image that looks practically unchanged to the human eye but is significantly distorted from the algorithm’s point of view.

These “shaded images” downgrade the performance of AI models until they generate unrealistic and anatomically incorrect designs.

Setting up strict data quality gates and ensuring a mix of automated and human validation helps deter the harmful impact of data poisoning and protect model performance.
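A data quality gate of this kind could look roughly like the sketch below. Everything in it is an assumption made for illustration: the `Sample` fields, the thresholds, and the idea that an upstream detector has already assigned each sample an `anomaly_score`. Exact-hash deduplication alone would not catch perturbed images, so the anomaly score is where any real poisoning detection would have to live.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Sample:
    content: bytes
    source_trust: float   # 0..1, hypothetical reputation of the contributor
    anomaly_score: float  # 0..1, hypothetical output of an upstream outlier detector


def quality_gate(samples, reject_above=0.8, review_above=0.4, min_trust=0.2):
    """Split incoming samples into accepted, human-review, and rejected buckets."""
    seen = set()
    accepted, review, rejected = [], [], []
    for sample in samples:
        digest = hashlib.sha256(sample.content).hexdigest()
        if digest in seen:
            rejected.append(sample)   # automated check: exact duplicate
            continue
        seen.add(digest)
        if sample.anomaly_score >= reject_above or sample.source_trust < min_trust:
            rejected.append(sample)   # automated check: clearly suspicious
        elif sample.anomaly_score >= review_above:
            review.append(sample)     # borderline: route to a human validator
        else:
            accepted.append(sample)   # safe to add to the training set
    return accepted, review, rejected


if __name__ == "__main__":
    batch = [
        Sample(b"cat photo", 0.9, 0.1),
        Sample(b"cat photo", 0.9, 0.1),    # duplicate of the first sample
        Sample(b"odd image", 0.7, 0.5),    # borderline anomaly score
        Sample(b"shaded image", 0.6, 0.95),
    ]
    ok, needs_review, dropped = quality_gate(batch)
    print(len(ok), len(needs_review), len(dropped))
```

The middle bucket is the design choice worth noting: instead of forcing a binary decision, borderline samples go to human reviewers, which matches the mix of automated and human validation described above.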

All in all, an AI project team that created a flywheel, which supplies the model with a stream of domain-specific and diverse data, has ensured a long-term competitive advantage and a solid position in the target market.

The end game: Will horizontal models win?

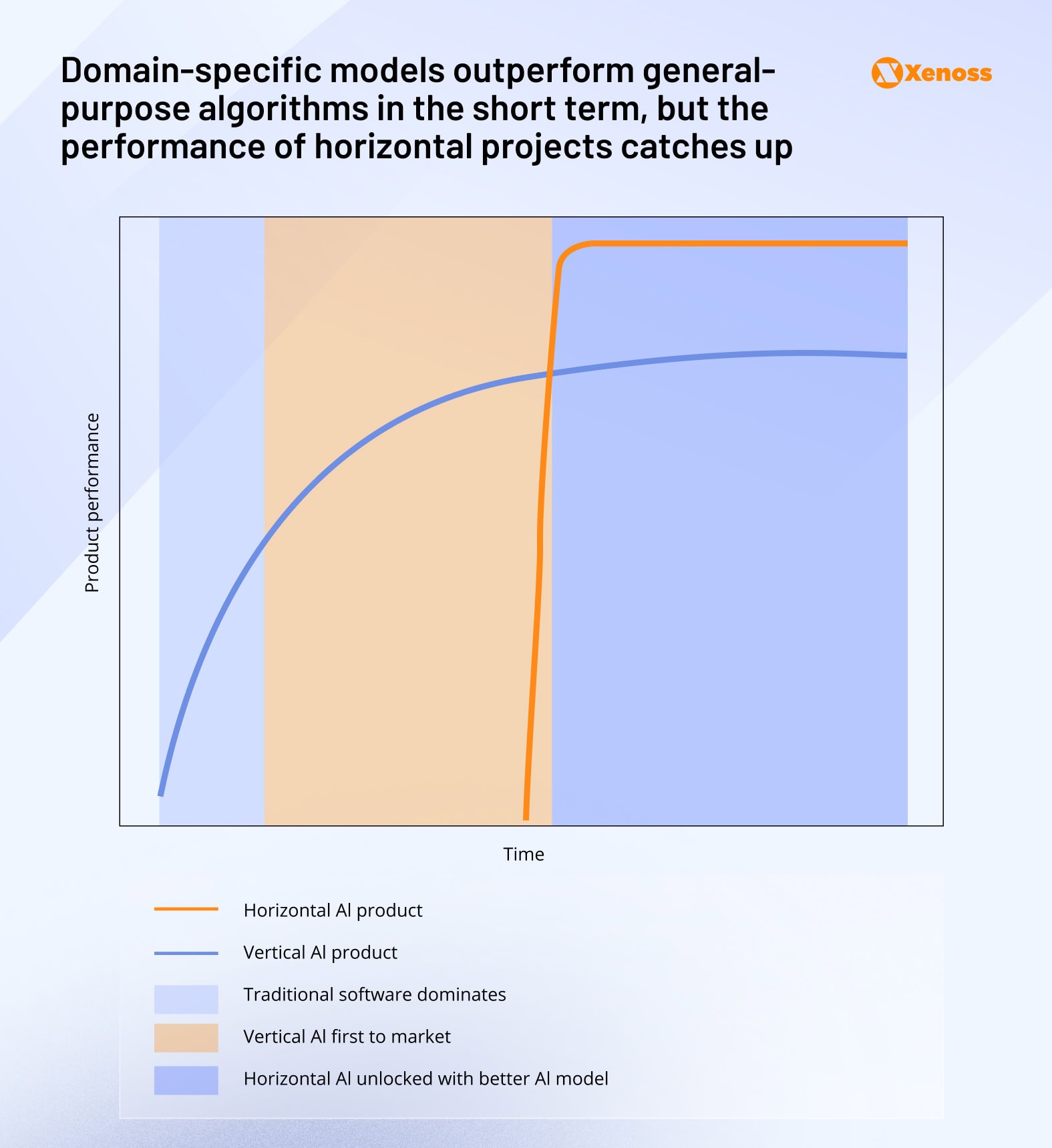

Proprietary models trained on domain-specific data are holding up incredibly well in today’s landscape.

- In healthcare, there’s OpenEvidence: a clinical LLM that answers point-of-care questions from peer-reviewed literature, guidelines, and evidence databases; built specifically for medical professionals.

- In legal, Luminance is the frontrunner with a similar positioning: a proprietary model trained on over 150 million verified legal documents.

- Tractable, a set of proprietary computer-vision models trained on millions of annotated auto-damage images to automate insurance estimating, pushes the frontier of industrial AI.

These models have the data moat and, with millions of active users, their flywheel will keep revolving and improving output accuracy.

But they are not immune to competition.

This year, a new trend has been emerging among AI projects: Foundational models are going after domain-specific applications. Anthropic is leading the pack here with Claude Code and the recent announcement of Claude for Finance. OpenAI just announced “Study Mode” for ChatGPT, to create an education-specific application layer.

What should vertical niche leaders like OpenEvidence or Luminance bet on to make sure their competitive advantage persists? What these companies need is a cornered resource: coveted data that a) is essential to support the project’s use case; b) is off the market so competitors cannot replicate it.

It is immediately obvious how little data fits into this category. OpenEvidence’s dataset is either publicly available (open datasets), obtainable via licensing agreements (science journals), or simply not unique even when users are the ones sourcing it (medical scans). Hence, it is not a cornered resource and cannot be an evergreen source of competitive advantage.

Eventually, a foundational model team with a much more powerful data flywheel may enter the healthcare industry with GPT, Claude, or Gemini for medical professionals, and OpenEvidence will lose its moat.

On the other hand, behavior data collected by Netflix is unique to this organization, vital to target verticals, and cannot be acquired in any other way. Netflix’s recommendation system will have a deep moat regardless of how big AI labs decide to strategize next year or in five years.

The success formula is “data obtained from privileged access multiplied by feedback loops”

After GPT-4, AI models have pretty much become commodities. Building a foundational model that would rival big AI labs is now cheaper and easier, as all the know-how and hardware are easily accessible.

Access to domain-specific data that is vital to the vertical and inaccessible to competitors is a powerful moat, especially if user behavior is built around sharing new, valuable data with the model.

A feedback loop created in the process – a data flywheel – gives AI projects a long-lasting competitive advantage.

At the moment, few companies in the market own both highly valuable unique data and the infrastructure that helps extract and sustain its value. That’s why up to 80% of AI projects are getting swept away by the next wave of change, even those currently riding it.

Only those who managed to become both a data source and a machine learning powerhouse will maintain long-term viability.