If you were to ask an ML engineer for a simple definition of “machine learning”, you’d likely get some variation of machine learning = model × data.

In the last decades, models were the more glamorous and glorified component.

Much more research was done on state-of-the-art algorithms than on data generation, annotation, and validation.

But, in recent years, the tables have been turning.

The progress in machine learning models has hit a plateau; most leading companies and research labs have access to leading techniques, and the hardware used to enable them is becoming ubiquitous.

Today, datasets are what sets a valuable model apart from a useless one. AI companies are racing to build a data flywheel – a self-propagating system where the model keeps improving based on the data users share with it, with little input from the engineering team.

OpenAI, Google, Amazon, Netflix, Tesla, and other large-scale companies have enough data to keep the flywheel going.

But early-stage machine learning projects struggle to get robust enough datasets to make a qualitative leap from stupid to intelligent models.

This challenge is famous in machine learning as the “cold start problem”.

This article explores product and engineering workarounds that machine learning teams have successfully used to solve the dilemma and create intelligent algorithms that keep users coming and bringing in new data.

Practical ways to solve the cold start problem

Instead of tackling the cold start problem as a purely engineering challenge, it’s better to approach it from product and business development perspectives as well.

For example, suppose there’s no data to power a complex machine learning algorithm. In that case, it’s up to the product team to accept taking AI features off the table and settling for a statistics-based solution instead.

Here is why this is often the most reasonable approach for early-stage machine learning pilots.

It’s easier for early-stage teams to create value without AI

In the rulebook that Martin Zinkevich, Research Scientist at Google, wrote for machine learning engineers, the first rule states, “Don’t be afraid to launch a product without machine learning”.

Hamel Husain, former Staff ML engineer at GitHub, also recommends solving the problem with statistical methods before attacking it with novel technology.

I think it’s important to do it without ML first. Solve the problem manually, or with heuristics. This way, it will force you to become intimately familiar with the problem and the data, which is the most important first step. Furthermore, arriving at a non-ML baseline is important in keeping yourself honest.

Hamel Husain, former Staff ML engineer at GitHub

The reason why these and many other engineers take the heuristics-first approach is that frontier AI products are powerful only when there’s a large dataset to support them. Without enough data to train them, machine learning models are highly inaccurate, but, most importantly, they are unpredictable.

Heuristics, on the other hand, won’t give you a complex predictive model, but they can be accurate in tracking one baseline metric and deliver a correct result every time.

In recommendation engines, heuristics help track a simple baseline metric like “most popular product for a given user segment over a period of time”.
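A baseline like this fits in a few lines of code. The sketch below is a minimal illustration, assuming purchases are logged as (segment, product, date) tuples; the `PURCHASES` data and the `most_popular_by_segment` function are hypothetical names invented for this example.

```python
from collections import Counter, defaultdict
from datetime import date

# Hypothetical purchase log: (user_segment, product_id, purchase_date).
PURCHASES = [
    ("students", "laptop-sleeve", date(2024, 5, 2)),
    ("students", "laptop-sleeve", date(2024, 5, 9)),
    ("students", "desk-lamp", date(2024, 5, 11)),
    ("students", "desk-lamp", date(2024, 1, 3)),  # outside the window used below
    ("students", "desk-lamp", date(2024, 1, 4)),  # outside the window used below
    ("parents", "stroller", date(2024, 5, 20)),
]


def most_popular_by_segment(purchases, start, end, top_n=1):
    """Return the top_n most purchased products per segment within [start, end]."""
    counts = defaultdict(Counter)
    for segment, product, day in purchases:
        if start <= day <= end:
            counts[segment][product] += 1
    return {
        segment: [product for product, _ in counter.most_common(top_n)]
        for segment, counter in counts.items()
    }


recommendations = most_popular_by_segment(
    PURCHASES, start=date(2024, 5, 1), end=date(2024, 5, 31)
)
print(recommendations)
```

The time window matters here: over all time the desk lamp is the best seller among students, but within May the laptop sleeve wins, so the heuristic stays predictable while still tracking recent behavior.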

Such a basic approach won’t yield the feeling of “magic” users are accustomed to getting with modern foundational models, but at least your predictions will not be wildly wrong. Besides, heuristics are a great tool for testing machine learning models because, as Monica Rogati, former Senior Data Scientist at LinkedIn, wrote in her blog “The AI hierarchy of needs”, “simple heuristics are surprisingly hard to beat, and they will allow you to debug the system end-to-end.”

Once a product drives minimal value with heuristics alone, and users are satisfied with it enough to keep sharing data, it’s time to switch to simple machine learning models and gradually add layers as your dataset gets new signals.

The problem with heuristics is that they do not have the same “ring” in marketing materials and investor pitches. That’s why companies are forced to act like their products are running best-in-class AI under the hood – even when the “AI” itself is a meticulously planned trick.

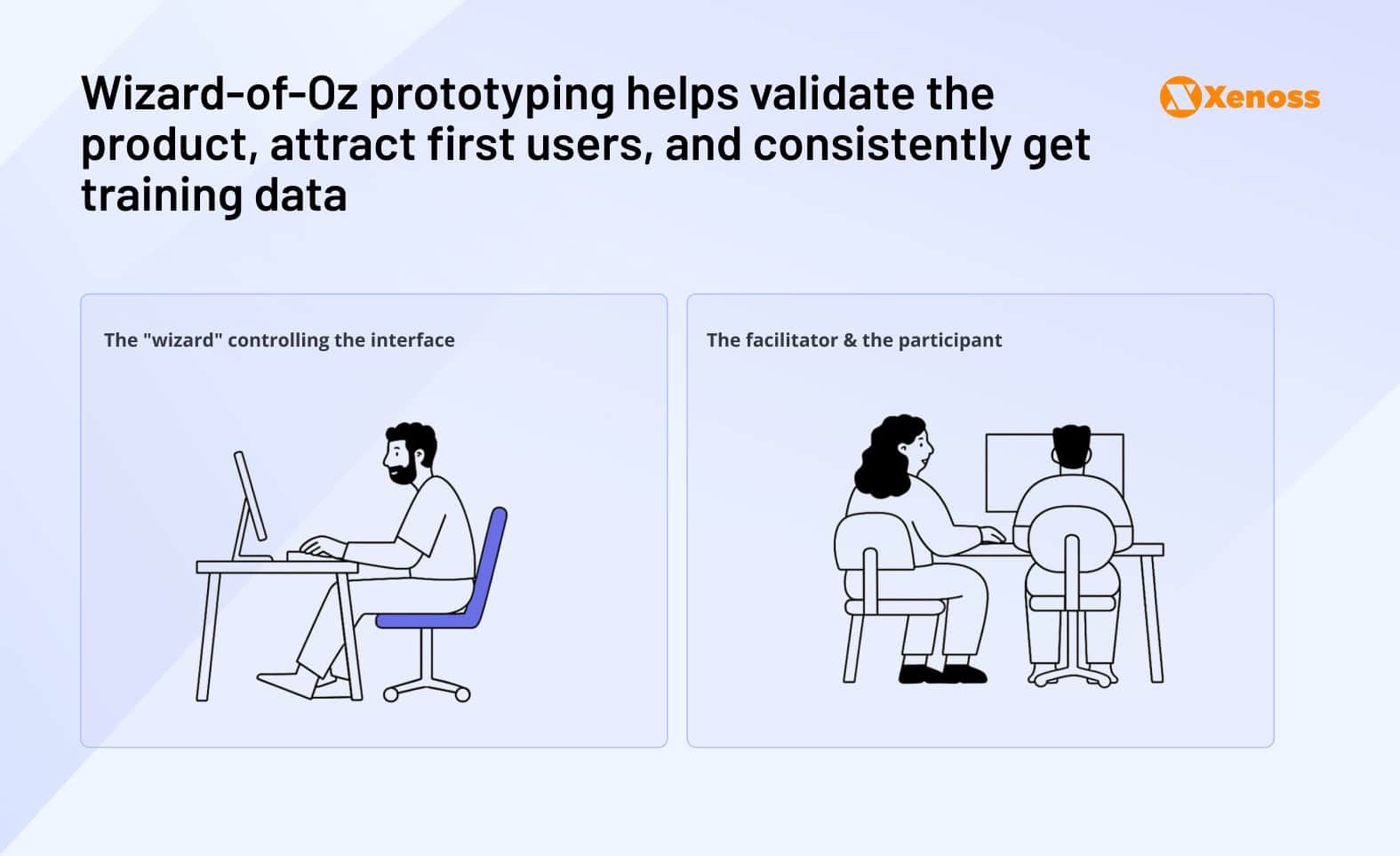

The trend of Wizard-of-Oz-ing AI applications

‘Fake it till you make it’ is Silicon Valley’s favorite motto, which, when taken too far, gets founders in jail, but, if implemented correctly, is a smart way to solve the “cold start problem”.

In the pre-AI age, Nick Swinmurn, the founder of Zappos, was a poster child for ‘Wizard-of-Oz’ prototyping. To validate his risky idea of selling shoes online without investing in warehouses and a supply chain, he would go to shoe stores, take pictures of shoes, and post them on the website.

When a customer placed an order on Zappos, Swinmurn bought the ordered pair and manually shipped it to the customer. It was an ad-hoc, manual, and entirely non-scalable fulfillment strategy, but as far as shoppers were concerned, they got a streamlined end-to-end customer journey.

Today, ‘Wizard of Oz’ is an established prototyping approach that lets product teams validate a feature without coding it end-to-end.

In machine learning, rolling out pseudo-AI is so common it feels more like an industry’s open secret than a hidden trick.

Back in 2016, Bloomberg turned the spotlight on human teams who worked 12-hour shifts pretending to be calendar scheduling chatbots.

Similar cases have been reported multiple times since: humans were scanning receipts, transcribing voice calls, and running therapy sessions, and end-users had no idea.

Gregory Koberger, the founder of ReadMe, called this practice out in a ‘two-step guide’ to building an AI startup.

Even though the idea of “pretending to be AI” has Orwell written all over it, it’s incredibly effective in addressing the cold start problem.

Having humans in the loop helps attract users to a product that wouldn’t be reliable enough without model output validation. In high-stakes verticals like healthcare or finance, this added layer of verification can be mission-critical.

That’s why, when marketing MedLM, a set of foundation models specialized in medical tasks, Google emphasizes that all document drafts created by the system are reviewed by a clinician.

Just Walk Out, Amazon’s automated checkout, also employs humans to validate edge cases where computer vision algorithms fail to read the context precisely.
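One common way to put humans in the loop is a confidence gate: confident predictions pass through automatically, and everything else lands in a review queue. The sketch below is a generic illustration, not a description of how Google or Amazon implement their review processes. It assumes the model exposes a confidence score, and `Prediction`, `route`, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, between 0 and 1


def route(predictions, threshold=0.9):
    """Split predictions into auto-accepted ones and ones queued for human review."""
    accepted, review_queue = [], []
    for prediction in predictions:
        if prediction.confidence >= threshold:
            accepted.append(prediction)
        else:
            review_queue.append(prediction)
    return accepted, review_queue


batch = [
    Prediction("scan-001", "benign", 0.98),
    Prediction("scan-002", "malignant", 0.61),
    Prediction("scan-003", "benign", 0.90),
]
accepted, review_queue = route(batch)
print([p.item_id for p in review_queue])
```

Labels confirmed or corrected by reviewers can be stored next to the original inputs, so the manual work doubles as training data for the next model version.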

In a world where VCs strive to fund scalable and fully automated AI systems, engineering teams often have to keep their reliance on humans away from the public eye, but practically everyone in the industry is either still doing it or has done it during the early stages of their pilots.

Get started with public datasets (but remember, they won’t get you far)

For a long time, machine learning models have been standing “on the shoulders of giants” – namely, open datasets that helped train and compare models. MNIST and CIFAR-10 are so commonly used by engineers, they are now household names in the AI community.

Without open data repositories, like Google Open Data, Hugging Face datasets, or Kaggle, machine learning research would lag far behind its current milestones.

Open data was a reliable starting point for a handful of commercially successful projects.

- Aperture, a Stanford-born agriculture intelligence platform, was trained on publicly available Earth observation data combined with household survey statistics. The platform was adopted by Fortune 500 logistics teams to predict supply-chain and food security hotspots.

- Casetext was an early GPT-4 wrapper customized for law professionals and trained on U.S. case law, which is part of the public domain. Casetext was acquired by Thomson Reuters for $650 million and is now part of the company’s in-house tool CoCounsel.

- Google used a public dataset with 5.2 million synthetic 3D molecular structures to train SandboxAQ, a model that helps predict small-molecule drug affinity to target receptors.

These products achieved both wide user adoption and market recognition despite being trained entirely on public data.

But they are outliers among tens of thousands of similar projects that failed to take off due to the limitations of open datasets. Incorporating public data into an engineering team’s proprietary dataset is one such challenge, especially in industries that heavily rely on research data (e.g., biotech).

Acquiring labeled datasets for a healthcare-facing model is difficult because labeling scans or anatomical pathology slides requires domain expertise that machine learning teams do not have at their disposal (or, at least, not at the scale required to build a highly accurate reasoning model).

That’s where public datasets seem like an excellent workaround: they are organized, classified, and labeled. Discrepancies appear only when that data enters production alongside the team’s proprietary lab records.

The binary nature of public datasets is hard to reconcile with continuous measurements taken in a lab. More often than not, public datasets are an amalgamation of multiple datasets, each with its own definitions (at times poorly documented) and classification practices.

The transparency of an open dataset leaves a lot of room for improvement. Open data does not offer engineering teams full context about experimental conditions (for biotech, temperature, pH, molecular composition, or others), which makes it unreliable for training.

The quality of open data itself is also debatable.

An inspection of ImageNet, the go-to image dataset on the web with over 50,000 citations, revealed many errors:

- Wrong or misplaced labels: The dataset labeled a wire-haired fox terrier as a Lakeland terrier and vice versa.

- Noisy samples: Blurry or irrelevant images that fall outside the scope of the dataset

- Poor F1 score (a metric that balances precision and recall): 71% for the dataset chosen by the machine learning team

Most importantly, open datasets are static.

They freeze as soon as they enter the public domain and stop accurately representing the reality they are supposed to model over time. When a model trained on “inert” datasets faces real-world user data, the accuracy of its predictions drops. That is a textbook example of “data drift”.
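A simple way to notice this kind of drift is to compare the distribution of a feature in the training set with the same feature in production. The sketch below uses the Population Stability Index (PSI), one of several possible drift statistics and not one the examples above are known to rely on. The function name `psi`, the bin count, and the sample data are assumptions made for illustration.

```python
import math


def psi(reference, production, bins=4):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, prod = shares(reference), shares(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


training_ages = list(range(20, 60))              # feature as seen in the static dataset
production_ages = [a + 15 for a in training_ages]  # the user base has shifted older

print(psi(training_ages, training_ages))    # identical distributions
print(psi(training_ages, production_ages))  # shifted distribution
```

A PSI of zero means the two samples fall into the bins in the same proportions. A widely quoted rule of thumb treats values above roughly 0.2 as a shift worth investigating, although the right threshold depends on the feature and the model.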

Because of their limitations in production, Eric Ma, Principal Data Scientist at Moderna Therapeutics, recommends “reaching for public datasets only as a testbed to prototype a model” and rarely as a “part of the final solution to scientific problems”.

If there is no publicly available data that precisely matches the project’s purpose, and the product team has no users who would share such data, generating test data using other models is becoming increasingly viable as AI capabilities improve.

Ways to tap into synthetic data

Artificially generating data for training machine learning models was a theoretical concept introduced in the 90s to solve two key challenges in data gathering: scarcity and privacy concerns.

Creating artificial patient or financial data helps biotech and banking teams build AI algorithms without exposing sensitive data and navigating the messy compliance landscape.

In the 2020s, the AI community saw a lot of success with “early scaling laws”, empirical observations that the larger your dataset is, the more powerful the model will be. Even though newer foundational models, particularly Llama and DeepSeek, challenged this idea, today, “the bigger, the better” still holds true.

Now that datasets reach seven orders of magnitude and frontier labs are scrambling for more data, synthetic generation is relevant even outside of intrinsically data-scarce domains like finance and healthcare.

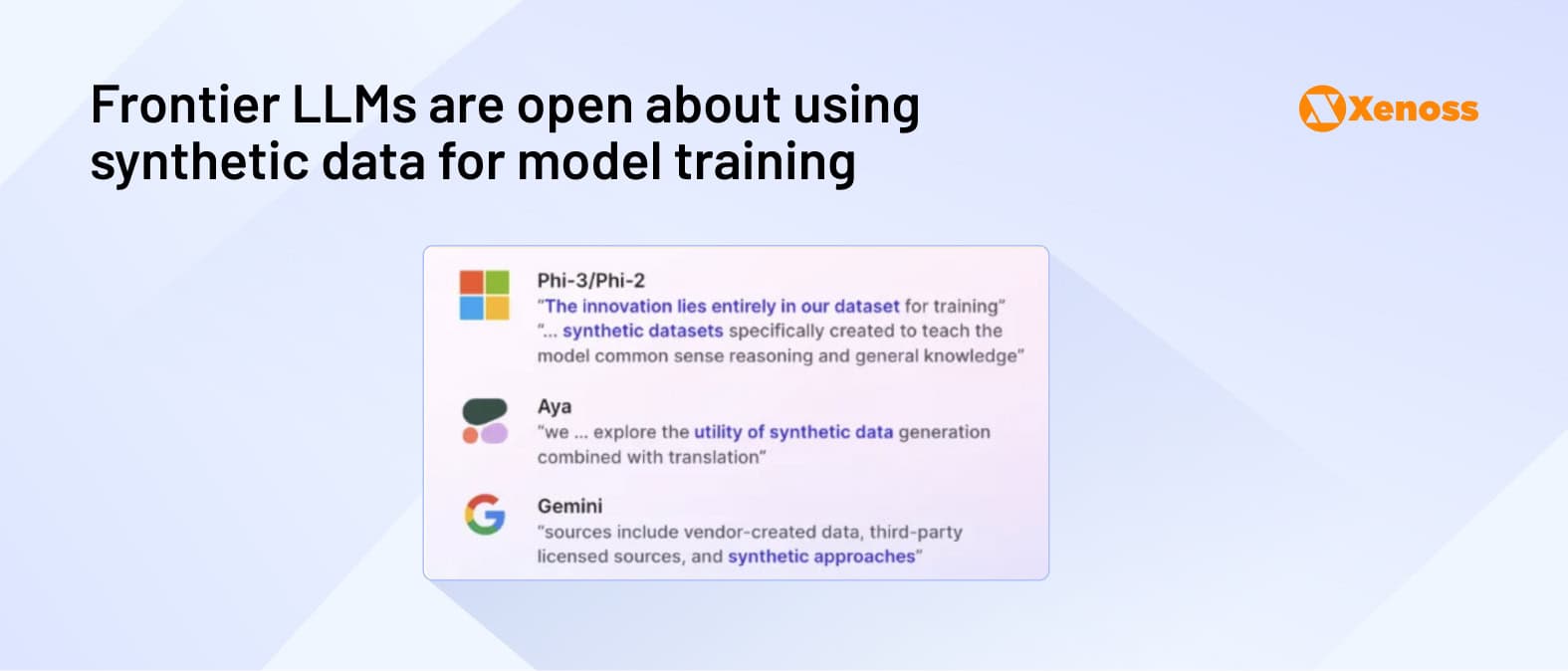

The teams behind virtually every frontrunner LLM admit to using artificially generated data to train their algorithms.

There are multiple ways to generate synthetic data (with new approaches emerging by the day), but they can broadly be categorized into self-improvement and distillation.

Self-improvement generates new data from the model’s output while distillation learns from the output of a stronger model.

Out of the two, distillation is both more powerful and computationally efficient, but it carries legal risks for violating the Terms of Use of the API provider (for LLMs, the most commonly distilled model is ChatGPT).

Some AI labs, like High-Flyer, the creators of DeepSeek, reportedly got away with distilling ChatGPT outputs to build a computationally efficient model.

Others, like ByteDance, got their OpenAI accounts suspended for distillation.

Jacob Devlin, an AI researcher and the lead author of BERT, left Google after suspecting that its engineers were distilling ChatGPT to build Bard.

But, even with risks involved, distillation is gaining traction among synthetic data generation techniques.

Here are some of the approaches that helped engineering teams use foundational models to train new algorithms.

- Alpaca fine-tuned Llama-7b on instruction-response samples generated by GPT 3.5, with data generation costing under $500.

- Microsoft’s WizardLM team had GPT rewrite seed instructions in two ways – in-depth and in-breadth – and fine-tuned its model on the results. To create more complex instructions, the engineering team used several types of prompts: deepening, adding constraints, adding extra reasoning steps, and others.

- Magicoder applied few-shot learning to a small dataset of coding problems. Based on these samples, the engineering team generated new GPT outputs offering 1 to 15 random coding lines as an input prompt. The new dataset then helped fine-tune code-generating models.
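The projects above share a common skeleton: send seed instructions to a stronger "teacher" model, filter the responses, and save the pairs as fine-tuning data. The sketch below shows only that skeleton and is not the pipeline of any project listed here. `build_distillation_set` and `fake_teacher` are hypothetical names, and the teacher is a stand-in function rather than a real API client (check the provider's terms of use before replacing it with one).

```python
import json


def build_distillation_set(seed_instructions, teacher, min_chars=20):
    """Collect instruction-response pairs from a teacher model, skipping weak ones."""
    seen, records = set(), []
    for instruction in seed_instructions:
        key = instruction.strip().lower()
        if key in seen:  # drop duplicate instructions
            continue
        seen.add(key)
        response = teacher(instruction)
        if len(response) < min_chars:  # drop empty or trivially short answers
            continue
        records.append({"instruction": instruction.strip(), "response": response})
    return records


def fake_teacher(instruction):
    """Stand-in for a call to a stronger model; replace with a real client."""
    return f"Step-by-step answer to: {instruction.strip()}"


seeds = ["Explain data drift.", "explain data drift. ", "Define model collapse."]
dataset = build_distillation_set(seeds, fake_teacher)
jsonl = "\n".join(json.dumps(record) for record in dataset)  # one training sample per line
print(jsonl)
```

Deduplication and length filtering are the bare minimum here. In practice, teams usually add stricter quality checks before fine-tuning on generated samples.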

Self-improvement is a self-sufficient approach to synthetic data. Instead of improving upon a third-party model, teams use the baseline model to generate training data via generation, self-evaluation, and fine-tuning.

Anthropic’s self-improvement method, Constitutional AI, combines supervised learning and reinforcement learning.

During the supervised training phase, the model evaluates and critiques its outputs and fine-tunes itself based on the revisions.

In the reinforcement learning phase, the fine-tuned model generates pairs of samples, a model judges which sample in each pair is better, and these AI-generated preferences train a “preference model” that serves as the reward signal for further training.

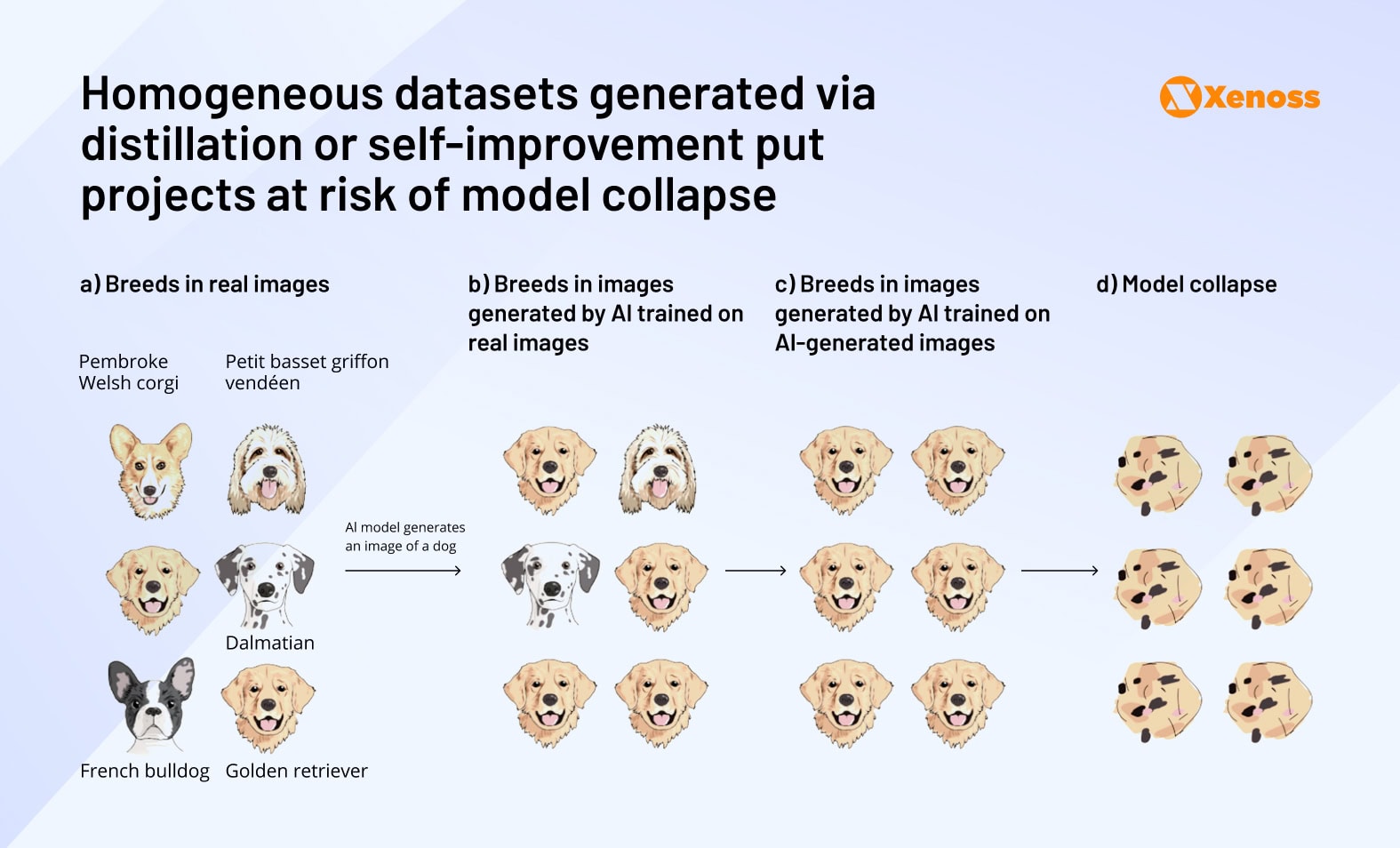

Generating synthetic data using an LLM as a judge helps enrich the dataset, but this approach also has logical weaknesses.

Recursive training methods like self-improvement tend to progressively degrade model performance – machine learning teams call this “model collapse”.

- In the early stages of collapse, the model starts losing track of the original data source and starts underperforming on less common data sources. The drop in accuracy shows up when a model encounters dataset outliers – that is why the start of collapse is difficult to detect, and it rarely shows up on performance benchmarks.

- As collapse progresses, the accuracy of model outputs drops across the board until the algorithm starts confusing tasks and failing on edge cases.
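The mechanism can be reproduced with a toy experiment: fit a Gaussian to a small sample, draw the next "generation" of data from the fitted model, and repeat. This is a deliberately simplified illustration, assuming a one-dimensional Gaussian instead of a language model. `recursive_fit` and its parameters are hypothetical.

```python
import random
import statistics


def recursive_fit(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples and track the fitted spread."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" data distribution
    spreads = [sigma]
    for _ in range(generations):
        # Each generation sees only data produced by the previous model.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood estimate of the spread
        spreads.append(sigma)
    return spreads


spreads = recursive_fit()
print(f"spread at generation 0: {spreads[0]:.3f}, at generation 200: {spreads[-1]:.3g}")
```

Because every refit is based on a finite sample, rare values in the tails are the first to go missing, and the fitted spread shrinks over the generations. That mirrors the early stage of collapse described above, where the model first loses its grip on less common data.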

The risk of model collapse is another argument in favor of using synthetic data purely for prototyping and continuing to look for high-value user data in the long run.

A Nature paper, ‘AI models collapse when trained on recursively generated data,’ backs this idea.

[Model collapse] must be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web. Indeed, the value of data collected about genuine human interactions with systems will be increasingly valuable in the presence of LLM-generated content in data crawled from the Internet.

A way to keep generating data if there are no users to contribute while mitigating the risk of model collapse is to build simulated environments.

Physical simulation helps build accurate machine learning models

AI applications that are deeply rooted in physical reality need to be trained on as much real-world data as possible to accurately detect objects and make reliable predictions.

Depending on the scope and complexity of the project, simulations can be as simple as creating basic 3D models of training objects or as complex as building mathematical models to predict the behavior of complex systems.

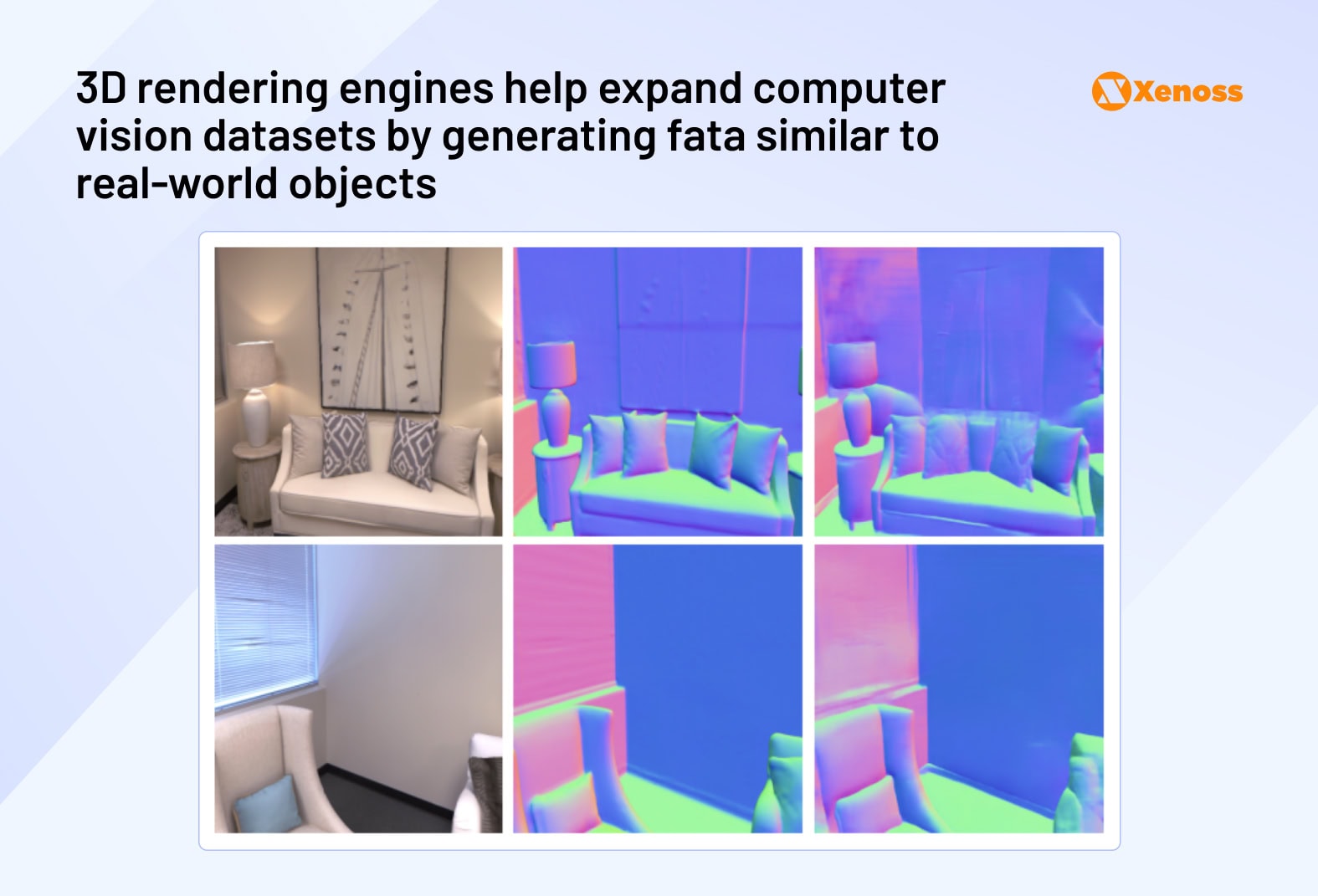

3D image generation

To add one more layer of dataset diversity beyond what’s accessible via self-improvement and distilling other generative models, researchers are tapping into 3D engines like Unreal, Unity, or Blender to build simulated training environments.

This process is fairly straightforward: CGI teams create 3D models of objects and environments and render these images for training. 3D rendering engines also have built-in annotation capabilities, giving machine learning teams a thoroughly labeled dataset.

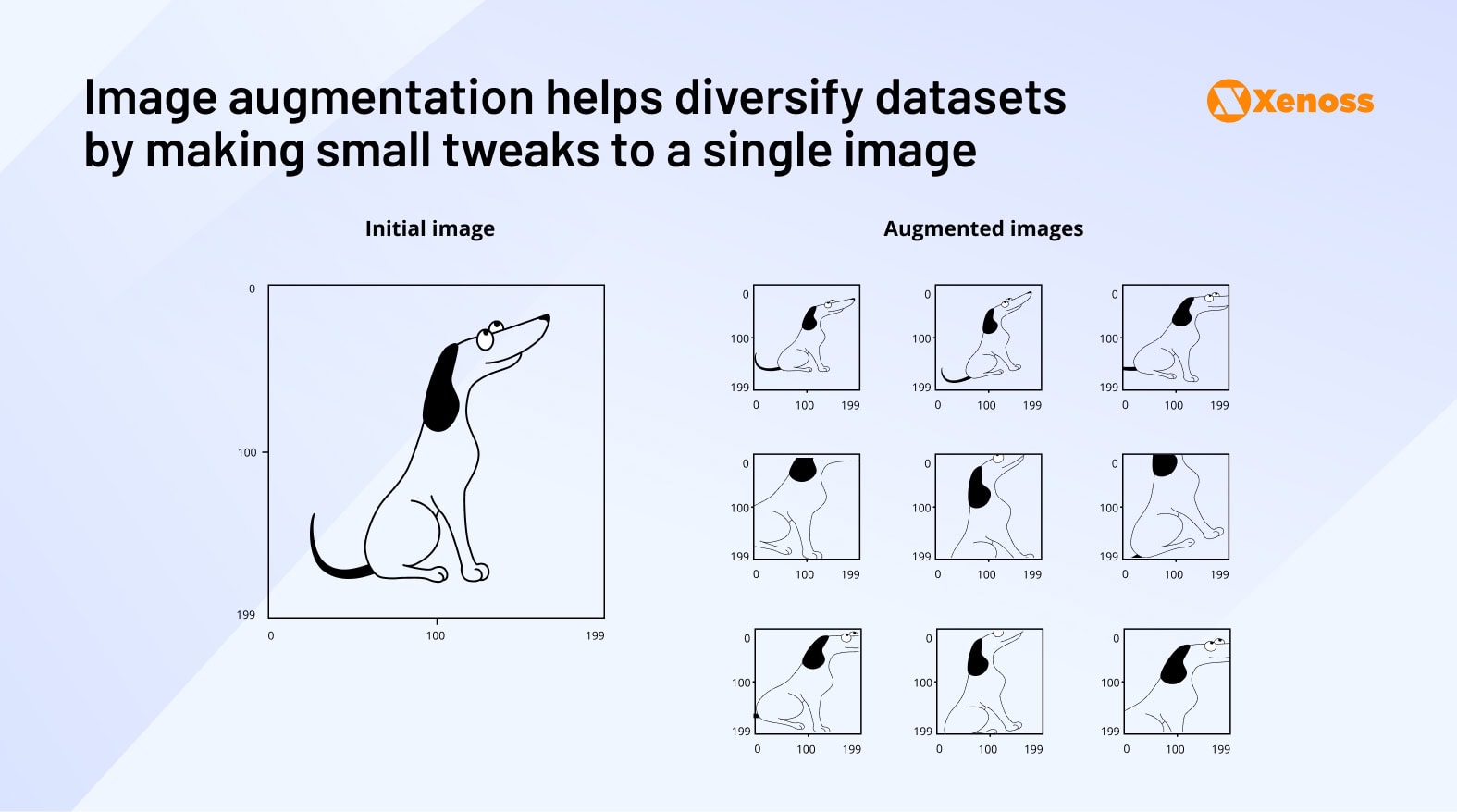

There’s a lot that experienced data teams can do with images rendered in 3D, like slightly changing their properties to create more variation of the same image. This technique, data augmentation, is useful to add diversity to the dataset. Rotating the image, zooming in on it, or flipping it helps create variety within a dataset and creates more samples of labeled data.
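The important detail in augmentation is that labels have to be transformed together with the pixels. The sketch below is a dependency-free illustration, assuming images are stored as nested lists and bounding boxes use inclusive pixel coordinates; the function names are hypothetical, and a real pipeline would normally rely on an image library.

```python
def flip_horizontal(image):
    """Mirror a row-major image (list of rows) left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate a row-major image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def flip_box_horizontal(box, width):
    """Mirror a bounding box (x_min, y_min, x_max, y_max) so the label stays aligned."""
    x_min, y_min, x_max, y_max = box
    return (width - 1 - x_max, y_min, width - 1 - x_min, y_max)


image = [
    [0, 0, 9],
    [0, 0, 9],
]
box = (2, 0, 2, 1)  # the column of 9s, in inclusive pixel coordinates

# One rendered image becomes two labeled training samples.
augmented = [
    (image, box),
    (flip_horizontal(image), flip_box_horizontal(box, width=3)),
]
print(augmented[1])
```

After the flip, the object moves from the right edge to the left edge, and the bounding box moves with it, so the new sample stays correctly labeled at no extra annotation cost.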

Creating simulated environments to model complex physical behaviors

There are subsets of machine learning projects where getting real-world data is too difficult and expensive, so engineers are replicating the behavior of real-world systems with models rooted in natural sciences.

Xenoss engineers faced this problem when building a virtual flow meter for a global oil & gas company. Getting enough data to train an accurate flow rate prediction model would require installing mechanical flow meters and a vast network of sensors that would track the flow of oil in the pipe.

This process is too slow and costly to be deployed at the scale engineers need to build a robust dataset.

The Xenoss team addressed the challenge by creating a simulated environment based on the Navier-Stokes equations. This model helped simulate the behavior of liquid in a pipe and generate data used to train a virtual flow meter.

Now, operators can track pipe performance and schedule maintenance without having to install new mechanical flow meters.
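To give a sense of how physics-based data generation works, the sketch below uses the Hagen-Poiseuille equation, a closed-form solution of the Navier-Stokes equations for steady laminar flow in a circular pipe. It is a heavily simplified stand-in and not the Xenoss simulation: real pipelines are often turbulent or multiphase and need numerical solvers. All parameter values and function names are assumptions chosen for illustration.

```python
import math
import random


def poiseuille_flow_rate(pressure_drop, radius, viscosity, length):
    """Volumetric flow rate (m^3/s) for steady laminar flow in a circular pipe."""
    return math.pi * pressure_drop * radius**4 / (8 * viscosity * length)


def simulate_readings(n, radius=0.05, viscosity=0.1, length=100.0, noise=0.02, seed=0):
    """Generate (pressure_drop, noisy_flow_rate) pairs for training a regressor."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        pressure_drop = rng.uniform(1_000.0, 50_000.0)  # Pa, assumed operating range
        flow = poiseuille_flow_rate(pressure_drop, radius, viscosity, length)
        flow *= 1.0 + rng.gauss(0.0, noise)  # multiplicative sensor noise
        rows.append((pressure_drop, flow))
    return rows


training_rows = simulate_readings(1_000)
print(training_rows[0])
```

Each row pairs an input that is cheap to measure (pressure drop) with the quantity a physical flow meter would report, which is the kind of labeled data a virtual flow meter needs for training.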

All methods explored above help get an AI project off the ground if it does not have a solid data foundation to build upon.

But even successfully overcoming the “cold-start problem” and building a model users enjoy interacting with does not automatically guarantee a steady influx of high-quality data.

Long-term viability of AI projects comes from feedback loops

A good feedback loop should be integral to the system rather than an added burden placed on a user.

To understand why native feedback loops make a difference, let’s compare Google Search and ChatGPT.

All engagement Google gets from a user (clickstream data, time away, conversion rate) can be used to power the data flywheel and improve search algorithms. Over time, Google uses this data to put the most helpful result at the top of search rankings.

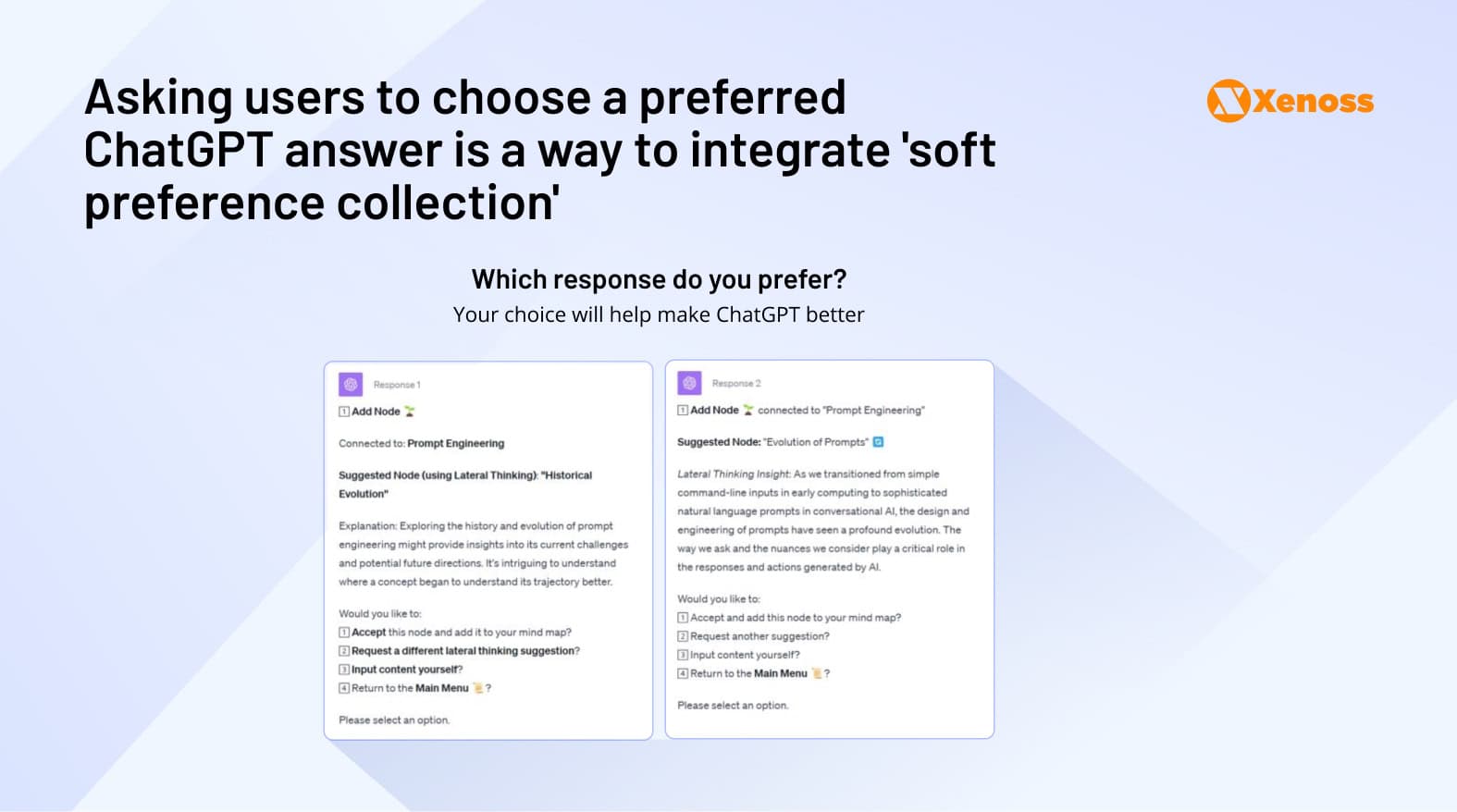

ChatGPT does not have a similar advantage because users do not implicitly rate the model every time they interact with it. Sometimes ChatGPT asks a user to choose between two outputs, but the process is not as seamless as Google’s.

In the long run, Google’s native feedback makes it easier to keep improving the model powering search.

“It’s like having the home-field advantage, while OpenAI is playing an away game in a foreign land. It will be exciting to see what happens when Google does show it can dance. Their home field advantage means they get to choose the music”.

Sarah Tavel, partner at Benchmark and former Product Manager at Pinterest

The most intuitive way to build native feedback loops is to always have users choose between model outputs (e.g., Google Search results or Netflix show titles).

But even if your AI project is not a recommendation engine, there are ways to build model evaluation into the user journey.

- Micro-confirmations are baked into the decision UI. Teams building classification models in high-risk areas like radiology or fraud detection can label edge case data by having a human confirm or reject uncertain model output with a ✓/? overlay. Asking users for an extra click will complicate the flow, so this technique is best applied only for “grey areas” where the accuracy of the model is uncertain.

- “Edit-diff” learning for NLP applications. If a model’s task is to auto-complete an email or a line of code, logging the delta between the output of the model and the final version is a source of high-quality RLHF signals. This feedback loop does not interrupt the user journey, and it is unbiased, accurate, and system-native.

- Soft preference collection. This is the method OpenAI uses to improve ChatGPT by requiring a user to choose between two different responses. This practice is seamlessly embedded into the chatting experience and is not easy to ignore, unlike ChatGPT’s “thumbs-up/thumbs-down” feedback loop.
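Of the three techniques, edit-diff logging is the easiest to prototype with the standard library. The sketch below is a minimal illustration, assuming the application can capture both the model's suggestion and the text the user finally kept; `edit_feedback` and the shape of the logged record are hypothetical.

```python
import difflib


def edit_feedback(model_output, final_text):
    """Summarize how much of a suggestion the user kept, plus the word-level edits."""
    suggested, final = model_output.split(), final_text.split()
    matcher = difflib.SequenceMatcher(a=suggested, b=final, autojunk=False)
    edits = [
        {"op": op, "suggested": " ".join(suggested[i1:i2]), "final": " ".join(final[j1:j2])}
        for op, i1, i2, j1, j2 in matcher.get_opcodes()
        if op != "equal"  # keep only the parts the user changed
    ]
    return {"similarity": round(matcher.ratio(), 3), "edits": edits}


feedback = edit_feedback(
    "Thanks for your email, I will reply soon.",
    "Thanks for your email, I will reply by Friday.",
)
print(feedback)
```

A similarity close to 1 suggests the user accepted the suggestion almost as written, while the list of edits shows exactly what they rewrote. Both can be logged without adding a single click to the user journey.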

A machine learning project that successfully carved out a product-market fit and built feedback loops that keep users sharing data with the model and rating its outputs gets a competitive edge in building diverse and fit-for-purpose datasets and has little to fear from competitors trained exclusively on static datasets.

Bottom line

All successful AI projects, from recommendation algorithms built by Netflix and TikTok, to chat interfaces or agents like Cursor, Vercel, or Lovable, have a shared ability to offer users an experience that feels almost “magical”. At the core of this experience is the model’s ability to understand user expectations and transform them into satisfying web pages or accurate content recommendations.

Nearly 100% accuracy is the final destination of every successful AI project because, as users keep coming and share new data with the model, the algorithm will keep improving. This “data flywheel” makes machine learning so impressive compared to heuristic-based algorithms.

To get to that point, engineering teams need to get past the “cold start problem”, find enough data for a model to train on, and reach the level of accuracy that can consistently attract new users and encourage them to share more data with the model.

The practices outlined in this post have all been adopted by successful projects, but they are just the first stepping stone towards competitive advantage.

Once these approaches give AI projects the momentum to fuel user acquisition, teams should focus on building feedback loops that promote data sharing and can, over time, lead the model to a data flywheel.