An executive benchmark survey found that 99% of companies now treat investments in data and AI as a top organizational priority, and 92.7% say interest in AI has led to a greater focus on data.

But knowing data matters doesn’t tell you where to start. Leaders keep asking: Should we fix data management issues first, or focus on AI-ready data to avoid losing competitive momentum? Should we hire an internal data team or outsource our data engineering? The answers vary from company to company.

In this guide, we examine data engineering services from a business standpoint to help you choose the right path. You will:

- Learn what to focus on when you’re just starting your data improvement journey

- Get a clear decision framework for selecting the right delivery model

- Understand how to select a suitable data engineering partner based on their service offering

What to focus on in the general data management strategy

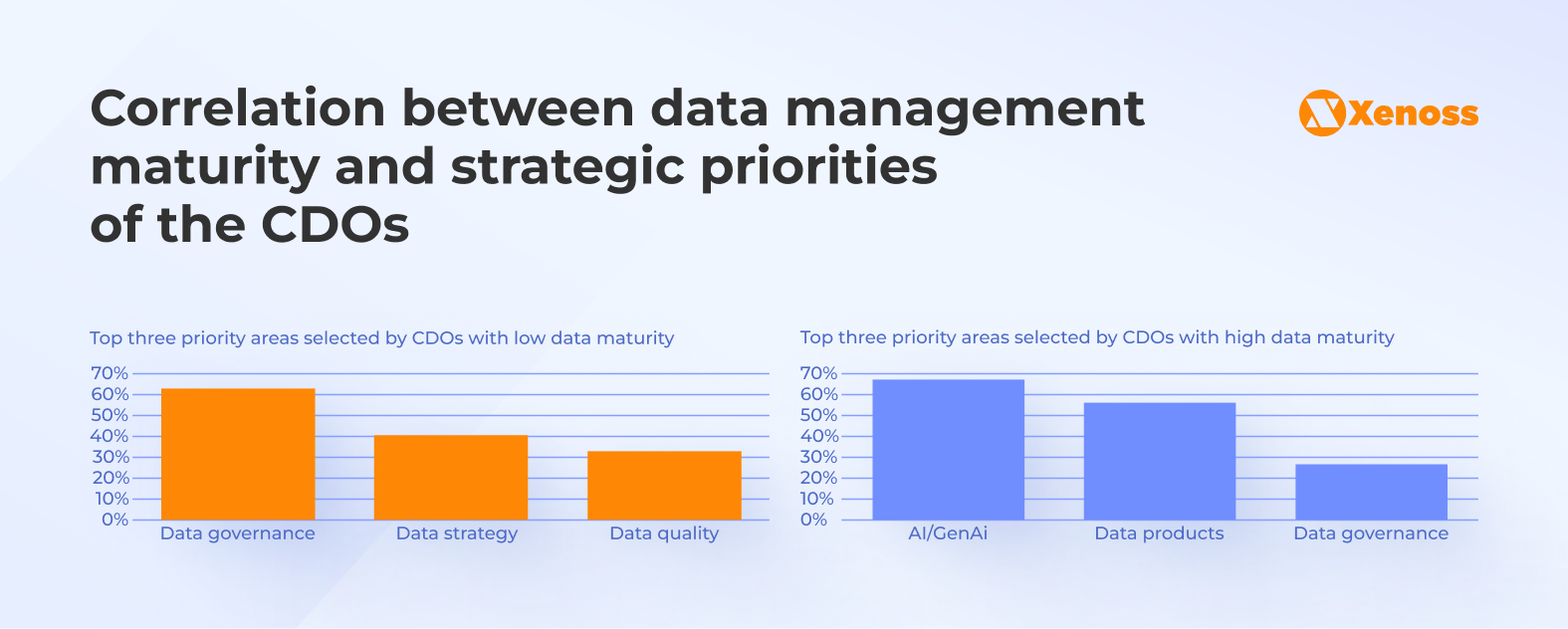

A Deloitte survey shows that Chief Data Officers (CDOs) set different priorities for their businesses depending on their data management maturity level. The graph shows that starting with AI/GenAI initiatives is only worthwhile if you have a high level of data maturity, whereas companies with less streamlined data management should prioritize data governance, strategy, and quality.

That’s why a “best practice” data strategy is rarely universal. To succeed in the coming years, you need to anchor your approach in two factors:

- Domain requirements (what data matters in your industry and why)

- Maturity level (how reliably your organization can manage, access, and operationalize that data)

The era of blindly following competitors and offering the same services with the same technologies is over. Now, companies plan to use data as fuel for their market differentiation.

Joe Reis, a data engineer and architect and co-author of the book Fundamentals of Data Engineering, notes in his post:

Many organizations say that AI-ready data is their top priority, yet they still struggle with basic data management and data literacy. That tension is catching up to CDOs and data leaders. Turns out, data matters more than ever.

More than that, a comprehensive study of 228 cases across sectors found that companies that align data initiatives with strategic business goals outperform those that adopt technology without a strategic context.

The problem is that many companies still treat data as “infrastructure work,” separate from commercial priorities. In fact, 42% of business leaders admit their data strategies are not aligned with business goals. The result is predictable: teams invest in platforms, pipelines, and dashboards, but struggle to translate them into revenue growth, improved customer experiences, operational efficiency, or risk reduction.

Once you define the right top-level priorities based on your maturity and domain needs, you can move from strategy to execution and select the data engineering services that will address the most urgent constraints first, one step at a time.

How to choose a fitting data engineering service depending on a business problem

Data engineering services often sound interchangeable on paper, but in practice, the right choice depends on what problem you’re solving and how urgently the business needs results. Some teams need a foundation (architecture, governance, standardization). Others need stabilization (pipelines, reliability, observability). And in many cases, the biggest lever is a targeted service that removes the constraint blocking analytics, AI, or cost control.

Use the table below as a decision map: start with your current business scenario, then match it to the service type that delivers the fastest and most sustainable improvement.

| Business need/scenario | Recommended data engineering service | What this service includes | Best for |
|---|---|---|---|
| Fragmented data across systems with no single source of truth | Data architecture & platform design | Target data architecture, data models, platform selection (data lake, warehouse, lakehouse), governance foundations | Companies early in data maturity or post-M&A |
| Data pipelines are unstable, slow, or frequently break | Data pipeline engineering & modernization | Ingestion, transformation, orchestration, monitoring, failure handling | Teams struggling with unreliable reporting or analytics delays |
| Growing data volumes are driving cloud costs out of control | Data platform optimization & FinOps | Cost audits, storage tiering, query optimization, and compute scaling strategies | Cloud-native organizations with rising data spend |
| Analytics exists, but business teams don’t trust the data | Data quality & observability services | Data validation rules, anomaly detection, lineage, and SLA monitoring | Regulated industries or KPI-driven organizations |
| AI/ML initiatives stall due to poor data readiness | Data engineering for AI & ML enablement | Feature pipelines, training data preparation, and real-time data access | Companies moving from BI to predictive, generative, or agentic AI |
| Legacy systems block modernization efforts | Legacy data migration & modernization | Data extraction, schema redesign, phased migration, parallel runs | Enterprises with mainframes or on-prem data stacks |
| Multiple teams build duplicate pipelines and dashboards | Enterprise data platform consolidation | Tool rationalization, shared pipelines, centralized governance | Large organizations with decentralized data teams |
| Need fast results to validate a business hypothesis | Data engineering PoC/MVP | Narrow-scope pipelines, rapid prototyping, measurable KPIs | Leaders testing ROI before scaling investment |
| Compliance, security, and audits are becoming risky | Data governance & compliance engineering | Access controls, audit trails, retention policies, and compliance mapping | Finance, healthcare, enterprise SaaS |
| Internal team lacks capacity or niche expertise | Dedicated data engineering team/augmentation | Embedded engineers, architects, and long-term delivery ownership | Scaling organizations with aggressive timelines |

Once your data issues are clear and you understand how core data engineering services work, your next step is to define the delivery model you’ll use to start improving your current data infrastructure.

Decision framework: Build vs. buy vs. outsource your data stack

When choosing among the three paths (build, buy, or outsource your data stack), base every decision on common sense and your current team’s capacity and skills. Tool- or hype-driven data strategies won’t work. Aim to avoid situations like the one Benjamin Rogojan, a fractional Head of Data, describes in his post:

You know your data team is going to have a rough 18 months when a VP returns from a conference and tells you that the company needs to switch all its data workflows to “INSERT HYPE TOOL NAME HERE.” They’ve been swindled, and now your data team is going to pay for it.

To determine which option is best for your business, consider the aspects below.

| Decision factor (what leaders should evaluate) | BUILD in-house (own the stack) | BUY platforms/tools (managed stack) | OUTSOURCE/PARTNER delivery (partner-led execution) |
|---|---|---|---|
| Primary business goal | Create a durable competitive moat through proprietary data products and workflows | Accelerate time-to-value with proven, scalable capabilities | Ship outcomes fast when internal bandwidth or expertise is limited |
| Best fit maturity level | High maturity (clear ownership, strong data standards, platform mindset) | Low-to-mid maturity (need stable foundations quickly) or high maturity (optimize commoditized layers) | Low-to-mid maturity (needs structure) or high maturity (needs specialized execution) |
| Time-to-value expectation | Slowest initially (platform investment before payback) | Fastest path to usable analytics/AI workloads | Fast, especially when paired with bought platforms |
| Upfront cost profile | High (engineering time and platform build effort) | Medium (licenses/consumption and enablement) | Medium-to-high (delivery fees, but predictable milestones) |
| Long-term TCO profile | It can be the lowest if you have scale and strong operations; it can become the highest if maintenance is underestimated | Often predictable, but consumption can spike without FinOps | Predictable during engagement; it depends on the handover model afterward |
| Operational overhead (on-call, upgrades, reliability) | Highest (you own everything) | Lowest (vendor absorbs much of the ops burden) | Shared (partner builds/operates; you decide who runs it long-term) |
| Customization/control | Maximum control and custom logic | Moderate (configurable, but bounded by platform constraints) | High in delivery, moderate in tooling (depends on what’s selected) |
| Risk profile | Execution risk is high; success depends on talent and operating model | Vendor dependency risk; lock-in considerations | Delivery dependency risk; mitigated with knowledge transfer and documentation |
| Security & compliance needs | Best if you require deep customization and strict controls | Strong if the software provider supports the required certifications and controls | Strong if the partner implements governance and audit-ready data processes correctly |
| What leaders get wrong most often | Underestimate maintenance, incident load, and long-term ownership cost | Assume tools fix process/ownership issues automatically | Treat it as staff augmentation instead of outcome-based delivery |
| When NOT to choose it | If you need results in <90 days or lack platform engineering maturity | If you need extreme customization and can’t accept vendor constraints | If you can’t allocate an internal owner or want “set-and-forget” delivery |
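The “When NOT to choose it” row can be read as a quick rule-out filter. The snippet below is a minimal sketch of that idea in Python, not a complete decision tool: the `Situation` fields and the `viable_models` function are hypothetical names introduced for illustration, and only the conditions from that single row are encoded (the “set-and-forget” expectation is folded into the internal-owner flag).

```python
from dataclasses import dataclass


@dataclass
class Situation:
    """Hypothetical inputs; the field names are illustrative, not a standard."""
    needs_results_within_days: int     # how soon the business expects results
    has_platform_engineering: bool     # mature platform engineering in-house
    needs_extreme_customization: bool  # cannot accept vendor constraints
    has_internal_owner: bool           # someone accountable on the client side


def viable_models(s: Situation) -> list[str]:
    """Drop every delivery model that the 'When NOT to choose it' row rules out."""
    options = ["build", "buy", "outsource"]
    if s.needs_results_within_days < 90 or not s.has_platform_engineering:
        options.remove("build")
    if s.needs_extreme_customization:
        options.remove("buy")
    if not s.has_internal_owner:
        options.remove("outsource")
    return options


# A team that needs results in 60 days and has no platform engineers yet.
print(viable_models(Situation(60, False, False, True)))  # ['buy', 'outsource']
```

A filter like this only narrows the options. The remaining rows of the table (cost, risk, operational overhead) still need to be weighed to choose among whatever is left.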

Real-life case studies with measurable ROI

Let’s see what results teams achieve by following different delivery models.

Partner: Accenture helps the Bank of England upgrade a system supporting $1 trillion in settlements a day

A data management improvement story can also begin with an upgrade to a core processing system, as happened at the Bank of England, which partnered with Accenture to improve its Real-Time Gross Settlement (RTGS) service. The most important task was consolidating financial data in a centralized cloud storage system, with APIs connecting it to external financial entities across the globe.

In just the first two months after launch, the new platform successfully processed 9.4 million transactions valued at $48 trillion, including a peak of 295,000 transactions in a single day, demonstrating immediate performance at national-system scale.

For the Bank of England, the need to launch a system of national importance quickly and without disruption justified the choice of a partnership.

Build: Airbnb created Airflow to scale data workflows internally

Airbnb’s data team chose the “build” path when off-the-shelf workflow tools couldn’t keep up with the growing complexity of their analytics and ML pipelines. At the time, the company relied on a mix of practices, which made workflows expensive to maintain as the number of dependencies increased. To solve this, Airbnb engineers built Airflow, an internal workflow management platform that introduced a clear structure for pipeline orchestration.

As a result, teams could define workflows as code, reuse components, track execution state in one place, and reduce manual firefighting caused by broken jobs and invisible failures.

The strategic payoff of the “build” approach was that Airflow didn’t just stabilize Airbnb’s internal data operations; it became an industry-standard orchestration layer that Airbnb later open-sourced, turning a costly internal investment into a widely adopted data tool.

Buy: Snowflake AI Data Cloud in the Forrester study

Forrester conducted a Total Economic Impact study of Snowflake by interviewing four companies that use the product. Before purchasing Snowflake, the companies used fragmented on-premises data solutions, which created data silos, operational overhead, and technical complexity.

The study highlights 10%–35% productivity improvements across data engineers, data scientists, and data analysts, translating into nearly $7.7 million in savings from faster time-to-value and streamlined workflows. It also reports more than $5.6 million in savings from infrastructure and database management.

However, the “buy” option required these companies to invest in internal labor costs to migrate data, set up data pipelines, and customize the platform to each company’s needs.

Our research revealed that only a few companies choose to build internally. They realize that upfront investments are high and the payback period is longer, a luxury few can afford when AI is reshaping markets so quickly.

The decision to “build” should be well justified and tied to a clear competitive edge, as was the case with Airbnb.

Selecting a data engineering partner based on their service offerings

A reputable data engineering services partner offers a comprehensive suite of end-to-end data capabilities, including building, optimizing, and maintaining your organization’s data lifecycle and infrastructure.

Data pipeline development and orchestration

This service involves designing, developing, and implementing data pipelines that ingest data from various data sources, transform it, and load it into target systems such as data warehouses or data lakes. This is typically done through ETL (extract, transform, load) or ELT (extract, load, transform) processes.

Partners should demonstrate hands-on expertise in widely adopted orchestration and data integration tools and frameworks, such as Apache Airflow, Dagster, Prefect, Argo Workflows, and cloud-native options like AWS Step Functions, Google Cloud Composer, and Azure Data Factory. These tools automate complex workflows end-to-end, from data ingestion and transformation to monitoring and recovery.
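To make the extract, transform, and load steps concrete, here is a minimal sketch that uses only the Python standard library. The sample CSV, the `orders` table, and the function names are assumptions made for illustration; an in-memory SQLite database stands in for a real warehouse, and a production pipeline would add orchestration, retries, and monitoring on top.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,amount,currency
1,19.50,usd
2,,usd
3,5.00,eur
"""


def extract(text: str) -> list[dict]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows without an amount, cast types, normalize currency codes."""
    return [
        (int(r["order_id"]), float(r["amount"]), r["currency"].upper())
        for r in rows
        if r["amount"]
    ]


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())  # (2, 24.5)
```

In an ELT variant, the raw rows would be loaded first and the cleanup would run inside the target system, for example as SQL.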

Data storage, management, and architecture strategy

Effective data storage and management are crucial for data accessibility and performance. Data engineering partners help design and implement optimal data architectures, whether that involves a traditional cloud data warehouse for structured data analytics (e.g., Amazon Redshift), a data lake for raw, unstructured data, or hybrid data lakehouse architectures.

Comprehensive data storage services include strategies for data partitioning, indexing, and schema design to ensure efficient querying and cost management. Partners will guide you in selecting and configuring scalable data storage solutions or on-premises infrastructure, so that performance aligns with your company’s business and data platform strategy (e.g., the choice between Snowflake and Google BigQuery).
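As a rough illustration of why partitioning helps with query efficiency and cost, the sketch below groups records under Hive-style partition keys (such as `event_date=2024-01-01`) and then answers a single-day query by reading only the matching partition. The sample events and function names are hypothetical, and plain Python dictionaries stand in for files in object storage.

```python
from collections import defaultdict

# Hypothetical event records; field names are illustrative.
events = [
    {"event_date": "2024-01-01", "user": "a", "amount": 10},
    {"event_date": "2024-01-01", "user": "b", "amount": 5},
    {"event_date": "2024-01-02", "user": "a", "amount": 7},
]


def partition_by(records, key):
    """Group records under a Hive-style partition path such as 'event_date=2024-01-01'."""
    partitions = defaultdict(list)
    for record in records:
        partitions[f"{key}={record[key]}"].append(record)
    return dict(partitions)


def total_for_date(partitions, date):
    """Read only the matching partition instead of scanning every record."""
    rows = partitions.get(f"event_date={date}", [])
    return sum(r["amount"] for r in rows), len(rows)


partitions = partition_by(events, "event_date")
print(sorted(partitions))  # ['event_date=2024-01-01', 'event_date=2024-01-02']
print(total_for_date(partitions, "2024-01-02"))  # (7, 1)
```

The same idea applies at warehouse scale: when queries filter on the partition key, the engine can skip data it does not need, which usually means less scanning and lower consumption-based cost.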

Data quality, validation, and observability

A professional data engineering services company also provides robust data quality checks, profiling, cleansing, and standardization. Data specialists establish automated data validation rules and processes to identify and rectify data anomalies early in the pipeline.
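Automated validation rules can be as simple as a set of per-field checks applied before data moves downstream. The following is a minimal sketch in plain Python. The rules, field names, and sample records are assumptions for illustration; real rule sets would be agreed with data owners and often implemented in a dedicated data quality tool.

```python
# Hypothetical rules; each maps a field name to a check that returns True when valid.
RULES = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and "@" in v,
    "age": lambda v: v is None or (isinstance(v, int) and 0 <= v <= 120),
}


def validate(record: dict) -> list[str]:
    """Return the names of the fields that break a rule (empty list means valid)."""
    return [field for field, rule in RULES.items() if not rule(record.get(field))]


def split_valid_invalid(records):
    """Route clean rows onward and quarantine the rest together with the reasons."""
    valid, quarantined = [], []
    for record in records:
        failures = validate(record)
        if failures:
            quarantined.append((record, failures))
        else:
            valid.append(record)
    return valid, quarantined


records = [
    {"customer_id": 1, "email": "ann@example.com", "age": 34},
    {"customer_id": -5, "email": "bob@example.com", "age": None},
    {"customer_id": 3, "email": "not-an-email", "age": 400},
]
valid, quarantined = split_valid_invalid(records)
print(len(valid))                                    # 1
print([failures for _, failures in quarantined])     # [['customer_id'], ['email', 'age']]
```

Quarantining invalid rows with their failure reasons, rather than silently dropping them, keeps the anomalies visible so they can be fixed at the source.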

Another key aspect is data observability: the ability to understand the health and performance of your data systems through monitoring, logging, and alerting. These procedures help engineers detect data issues and resolve them proactively, building trust in the business and customer data.
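One common observability signal is load volume: a sudden drop or spike in row counts often points to a broken upstream job. The sketch below flags a daily row count that deviates strongly from recent history. The `volume_alert` function, the threshold of three standard deviations, and the sample counts are assumptions chosen for illustration, not a recommended standard.

```python
from statistics import mean, stdev


def volume_alert(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's row count if it is more than `threshold` standard
    deviations away from the mean of recent loads (needs >= 2 history points)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # Perfectly stable history: any change at all is worth a look.
        return today != mu
    return abs(today - mu) / sigma > threshold


daily_row_counts = [1000, 1020, 980, 1010, 990]  # hypothetical recent loads
print(volume_alert(daily_row_counts, 1005))  # False
print(volume_alert(daily_row_counts, 200))   # True
```

In practice, a check like this would run after each load and feed an alerting channel, alongside freshness and schema checks.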

Data governance, security, and compliance

Qualified data engineering partners provide expertise in establishing frameworks for data ownership, data cataloging, metadata management, data lineage tracking, and access control policies.

However, true experts also realize that the concept of data ownership has evolved. Malcolm Hawker, CDO at Profisee, argues that modern data ownership is more flexible than it used to be.

Data doesn’t behave like an asset you can lock in a vault. It behaves more like a shared language, where its meaning, value, and risk profile shift based on context. That means effective governance isn’t about controlling data. It’s about orchestrating accountability across contexts.

Apart from data accountability, experienced partners ensure that data-handling practices comply with relevant regulations (e.g., GDPR, CCPA, HIPAA), safeguard sensitive information, and maintain data privacy. Plus, they implement strong security measures for data at rest and in transit to protect against unauthorized access and breaches.
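Access control policies for sensitive fields are often enforced per column and per role. The following is a minimal sketch of that pattern. The policy table, role names, and helper functions are hypothetical, and the unsalted hash used for masking is only a placeholder: it is not sufficient protection for real personal data, where salted or keyed hashing, tokenization, or encryption would be expected.

```python
import hashlib

# Hypothetical policy: which roles may see which sensitive columns in clear text.
CLEAR_TEXT_ACCESS = {
    "email": {"support", "compliance"},
    "ssn": {"compliance"},
}


def mask(value: str) -> str:
    """Replace a value with a short, stable hash (placeholder masking only)."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def apply_policy(row: dict, role: str) -> dict:
    """Return a copy of the row, masking sensitive columns the role may not read."""
    return {
        column: (
            value
            if column not in CLEAR_TEXT_ACCESS or role in CLEAR_TEXT_ACCESS[column]
            else mask(str(value))
        )
        for column, value in row.items()
    }


row = {"name": "Ann", "email": "ann@example.com", "ssn": "123-45-6789"}
print(apply_policy(row, "compliance") == row)        # True
print(apply_policy(row, "analyst")["name"])          # Ann
print(apply_policy(row, "analyst")["email"] == row["email"])  # False
```

Keeping the policy in one declarative structure makes it easier to review and audit than access checks scattered across individual queries.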

Advanced analytics and AI/ML enablement

Beyond foundational cloud infrastructure, comprehensive data engineering services are critical for enabling advanced analytics and AI/ML initiatives. Data science and engineering specialists prepare and curate datasets, engineer features, and build the necessary data pipelines to feed machine learning models.

They ensure that data is accessible, well-structured, and performant for model training and inference. This includes integrating with AI/ML platforms, establishing MLOps pipelines, and ensuring data readiness for complex analytical workloads, thereby bridging the gap between raw data and actionable intelligence for data scientists and business users alike.

Future-proofing your data infrastructure

If you remember one thing from this guide, let it be this: your data infrastructure doesn’t need to be “perfect.” It needs to be reliable enough to run the business and structured enough to scale, without turning every new initiative into a fire drill. The fastest way to get there is to choose one priority bottleneck (trust, speed, cost, or governance), fix it with the right service, and ensure the solution is production-ready: monitored, documented, owned, and measurable.

As part of our end-to-end data engineering consulting services, Xenoss can help you assess your data maturity, design a realistic data improvement roadmap, and build the data foundation that supports large-scale analytics and AI.