Annually, downtime costs Global 2000 companies almost $400 billion. The reasons vary: 56% of incidents are due to cybersecurity attacks, and 44% are due to application or infrastructure issues.

For systems processing millions of transactions or managing real-time logistics, even a few minutes of interruption can result in millions of dollars in lost revenue, regulatory fines, and irreparable damage to customer trust. For instance, the UK fashion retailer ASOS lost more than $25 million in 2019 due to a glitch in their warehouse management system (WMS).

Legacy systems sit at the center of this risk. As they age, tightly coupled architectures, old codebases on languages like COBOL, undocumented dependencies, and brittle integrations make failures harder to isolate and recovery slower.

This is where zero-downtime app modernization services enter the picture, as an architectural and operational discipline focused on resilience, reversibility, and controlled change. Modernization without shutdown means isolating risk, deploying changes incrementally, and ensuring that failures remain contained rather than cascading across the organization.

In this guide, we outline how enterprises can rebuild and modernize critical systems while maintaining business continuity. We cover the architectural patterns, governance controls, and execution strategies that help reduce modernization risk without slowing delivery.

How to plan enterprise application modernization without downtime

Application modernization is less about never experiencing downtime and more about becoming resilient and flexible. When an incident does occur, you should be able to resume work quickly, with minimal impact on your customers.

That is the core aim of modernization: using modern architectures and technologies to make the business resilient enough to withstand disruption and recover stronger.

Here are three concepts that make this possible:

- Complete visibility into the current legacy systems, which means mapping every legacy component, including data flows, dependencies, SLAs, and risk thresholds.

- Certainty about how changes will affect operations. It comes from designing architecture that can coexist with legacy systems to enable safe and incremental modernization.

- Control over how new components are introduced. You can achieve this by implementing gateways, observability layers, and structured migration paths that ensure transitions are incremental and reversible.

These principles shift modernization from a risky “big move” to a sequence of measurable, low-risk steps. They also clarify why zero-downtime modernization is as much an operational discipline as it is an engineering one. Architecture, governance, and execution models must work together to ensure that progress never compromises availability.

Legacy app modernization patterns that reduce downtime

A zero-downtime application modernization strategy depends less on specific technologies and more on the architectural patterns used to introduce change. The wrong pattern can amplify risk, while the right one allows teams to modernize incrementally without disrupting core operations.

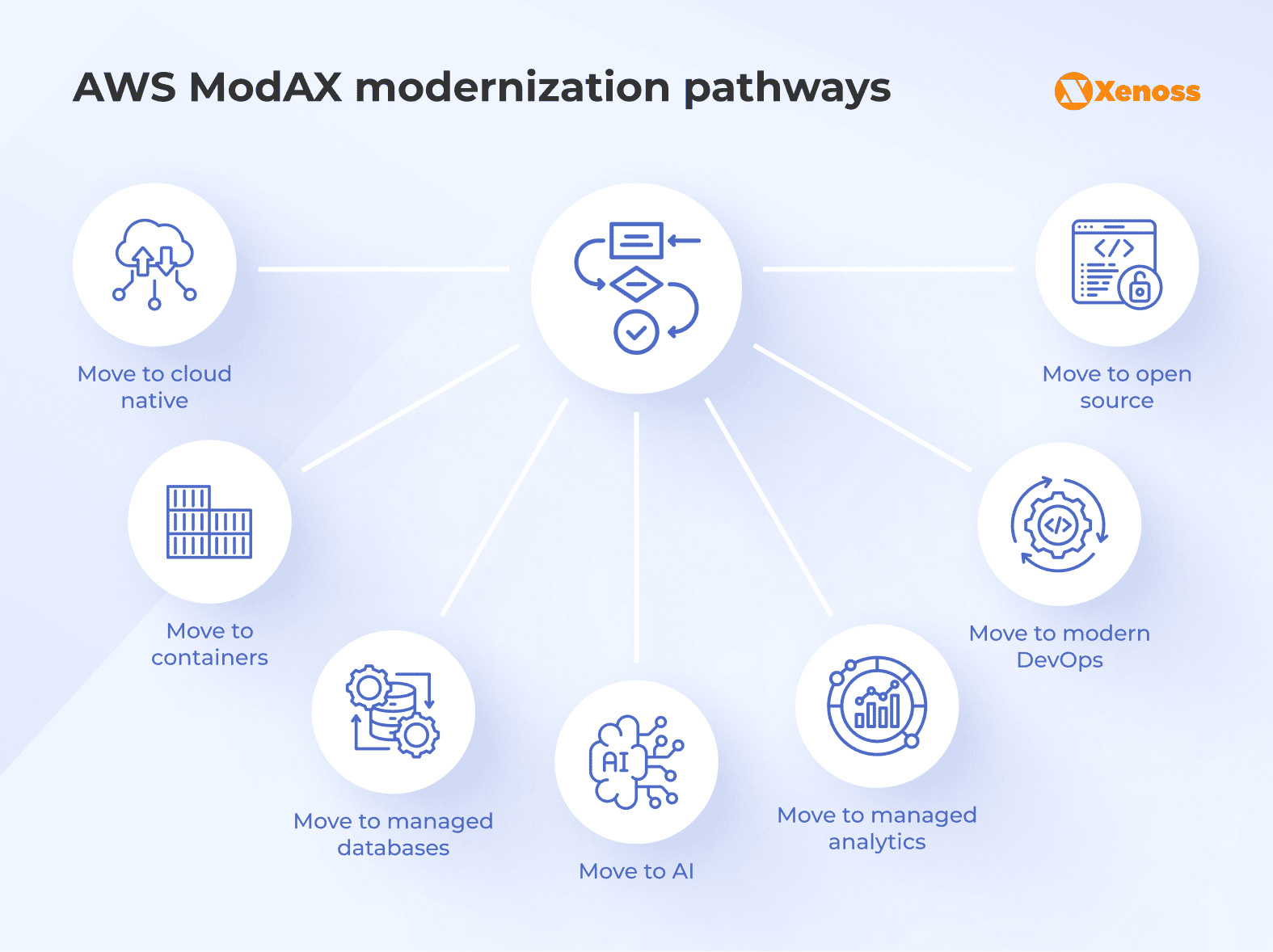

The AWS modernization experience-based (ModAX) framework offers seven modernization pathways that help an organization overhaul its applications and achieve lasting business benefits.

But companies don’t have to undergo all of them at once. It’s an incremental process that allows each business to choose the most suitable order of innovation. These pathways include moving to:

- A cloud-native environment, which means designing a new application architecture, such as microservices, event-based architectures, or serverless computing.

- Containers, which means packaging applications into containers and adopting an orchestration platform, such as Kubernetes, for rapid deployment.

- Managed databases, which means offloading database administration to the cloud provider with services such as Amazon RDS or Amazon Aurora.

- Managed analytics, which means integrating advanced analytics solutions to extract relevant insights and use them in data-driven decision-making.

- Modern DevOps, which requires automated CI/CD pipelines, test-driven development, and AI/ML-enhanced tools.

- Open source, which means reducing licensing costs by transitioning from commercial to open-source technologies, such as moving from Oracle to PostgreSQL.

- AI, which means adding AI capabilities to future-proof applications.

Taken together, these approaches make legacy application modernization strategies safer and help businesses increase operational efficiency.

Companies modernizing their applications with ModAX report 42% faster IT resource provisioning, 86% more weekly feature deployments, and 25% faster resolution of downtime incidents.

But to enable all of the above modernization strategies, you would need to make clear architectural decisions, and one of the most important ones is migration from a monolithic legacy architecture to agile microservices.

Decoupling monoliths to microservices

The core principle of zero-downtime modernization is architecture decoupling. The monolithic nature of legacy applications is their primary weakness, creating tight dependencies that make change risky and slow. The new design must break down the system into loosely coupled, independently deployable microservices.

Each domain service should own its data and expose its functionality through a well-defined API, typically published behind an API gateway.

To ensure safe decoupling from a monolith to microservices, engineers typically:

- Apply domain-driven design (DDD) to align business domains with technical boundaries, ensuring services reflect real business capabilities rather than technical convenience. DDD helps engineers avoid overly granular microservices that increase operational overhead and maintenance complexity.

- Adopt asynchronous communication where appropriate, using message-driven patterns for task-oriented workflows and event-driven architectures for real-time data propagation. This reduces tight coupling and prevents synchronous dependencies from becoming single points of failure.

- Introduce a service mesh to manage service-to-service communication, providing built-in observability, traffic shaping, retries, and fault isolation without embedding this logic into application code.

- Add an API gateway to handle client-to-server communication, routing, authentication, and rate limiting. Importantly, the gateway must remain infrastructure-focused and stateless, without embedding business logic, so it does not become a new dependency or bottleneck in a loosely coupled architecture.

- Enforce secure service-to-service communication, using mutual TLS (mTLS), strong authorization policies, centralized secrets management, and comprehensive audit logging to maintain security and compliance as the number of services grows.

Decoupling a monolithic architecture into microservices is a foundational step in legacy application modernization services. Once this separation is established, organizations can control the pace and scope of modernization, choosing which application services to modernize first, which to leave untouched temporarily, and how long old and new systems must coexist. Each of these choices maps to one of the modernization patterns described next.

Strangler fig pattern

The strangler fig pattern gets its name from a natural phenomenon in which the roots of a strangler fig gradually surround and replace a host tree. This approach allows you to modernize incrementally without the risks of complete system replacement.

Here’s how to implement it effectively:

Start with a facade layer. Create an interface layer that intercepts all requests to your legacy system. At first, it simply passes every request through to the legacy code, but it gives you control over routing decisions as you build new microservice components.

Identify logical boundaries. Look for areas in a monolith where functionality can be extracted without breaking dependencies. Payment processing, user authentication, and reporting often make good candidates for early extraction.

Replace components gradually. Build new microservices to handle specific functions, then redirect traffic from the facade to these services once they’ve been proven to work correctly.

Run systems in parallel. Test new components alongside legacy code to ensure they produce identical results before cutting over completely.

Retire replaced components. Only after new functionality has been validated and is stable should you decommission the corresponding legacy code.

An incremental microservices implementation approach reduces risk while delivering immediate value. Each modernized component improves system performance and reduces technical debt.
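To make the facade step more concrete, here is a minimal sketch of a routing layer that sends a configurable share of traffic for one path to a new service and everything else to the legacy handler. The class name `StranglerFacade`, the `client_id` request field, and the handler signatures are assumptions made for illustration; a production facade would usually be an API gateway or reverse proxy rather than application code.

```python
import hashlib
from typing import Callable, Dict, Tuple

Handler = Callable[[dict], dict]


class StranglerFacade:
    """Routes each request to the legacy handler or to a new service (hypothetical names)."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        # path -> (new service handler, percentage of traffic it should receive)
        self._routes: Dict[str, Tuple[Handler, int]] = {}

    def migrate(self, path: str, new_service: Handler, percent: int) -> None:
        """Send `percent` (0-100) of the traffic for `path` to the new service."""
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self._routes[path] = (new_service, percent)

    def handle(self, path: str, request: dict) -> dict:
        route = self._routes.get(path)
        if route is None:
            # Paths that have not been extracted yet always go to the monolith.
            return self._legacy(request)
        new_service, percent = route
        # Stable bucketing: the same client always falls into the same bucket,
        # so a user does not bounce between old and new behavior.
        digest = hashlib.sha256(request["client_id"].encode()).hexdigest()
        bucket = int(digest, 16) % 100
        if bucket < percent:
            return new_service(request)
        return self._legacy(request)
```

Setting the percentage back to 0 is the rollback path: the new service stays deployed, but the facade stops sending it traffic.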

Leave-and-layer pattern

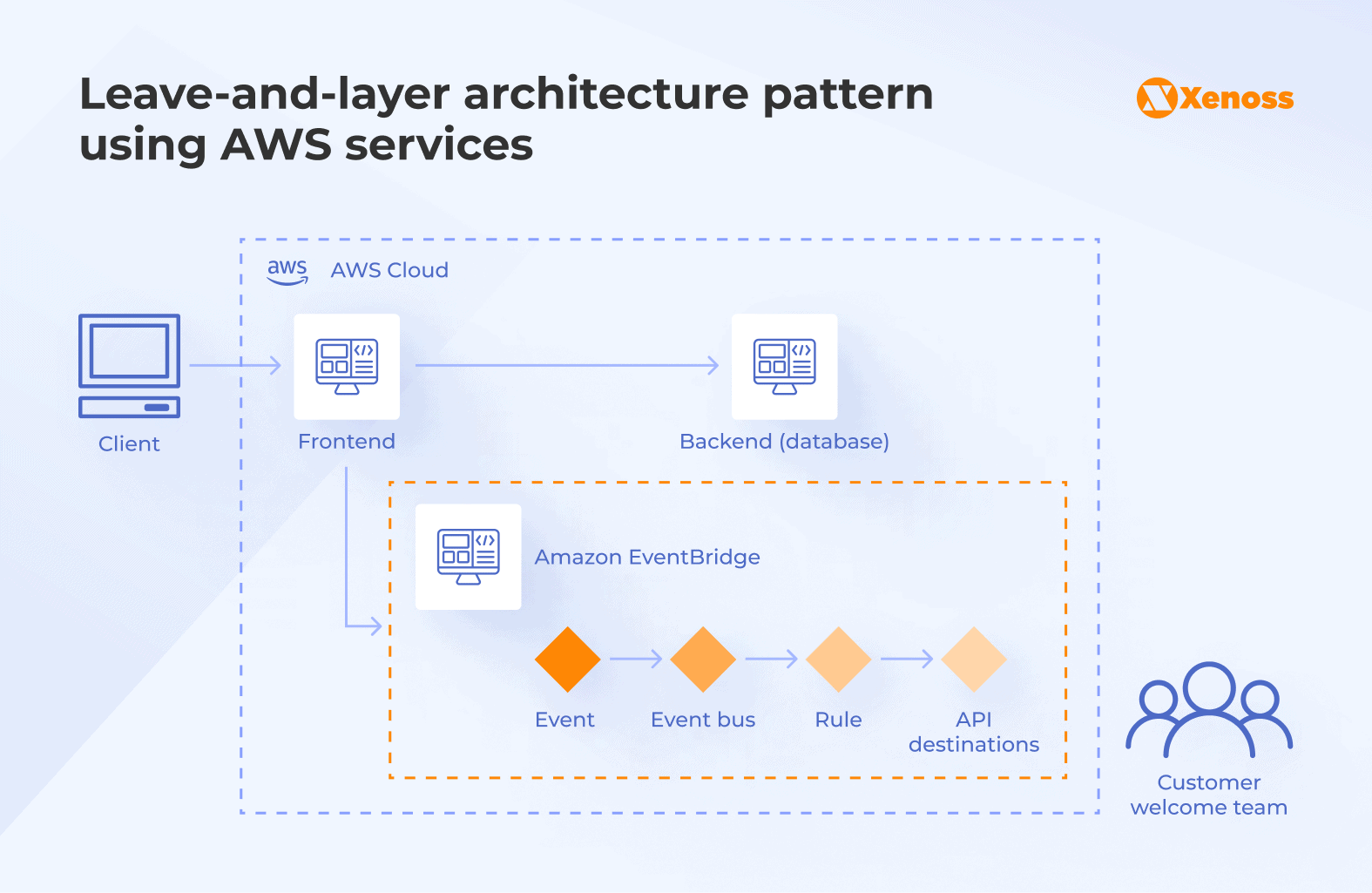

Leave-and-layer focuses on stabilizing the legacy core while introducing a modern layer on top. Instead of replacing existing systems, organizations expose legacy functionality via APIs and build new experiences, workflows, or analytics around it.

To enable this pattern, you need to build an event-driven architecture with an event bus service (e.g., Amazon EventBridge). The legacy application serves as the event producer; internal, external, or customer systems serve as event consumers; and EventBridge serves as the intermediary layer.

The first step in setting up a leave-and-layer pattern is to transform a legacy application into an event producer by integrating an abstraction layer that switches between event buses for asynchronous and synchronous event publishing. The possibility to switch allows enterprises to balance correctness, resilience, and availability without increasing the risk of downtime.

Asynchronous event publishing prevents downstream failures from cascading back into the legacy system. If a consumer fails or slows down, the core application continues to run. By contrast, synchronous publishing ensures the core application waits for an immediate response when a decision must be confirmed before proceeding, such as validating a payment or creating an order.
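The difference between the two publishing modes can be sketched with a small in-process abstraction layer. This is an illustration only: `EventPublisher`, its method names, and the event shape are hypothetical, and the in-memory queue stands in for a real event bus such as EventBridge, whose API is not shown here.

```python
import queue
import threading
from typing import Callable, Dict, List

Event = Dict[str, object]
Consumer = Callable[[Event], object]


class EventPublisher:
    """Abstraction layer the legacy app calls instead of calling consumers directly."""

    def __init__(self) -> None:
        self._consumers: Dict[str, List[Consumer]] = {}
        self._queue: "queue.Queue[Event]" = queue.Queue()
        self.failures: List[str] = []
        # Background worker that delivers asynchronous events.
        threading.Thread(target=self._drain, daemon=True).start()

    def subscribe(self, event_type: str, consumer: Consumer) -> None:
        self._consumers.setdefault(event_type, []).append(consumer)

    def publish_async(self, event: Event) -> None:
        """Fire-and-forget: the caller never waits on, or fails because of, a consumer."""
        self._queue.put(event)

    def publish_sync(self, event: Event) -> List[object]:
        """Blocking: the caller receives every consumer's answer before proceeding."""
        return [consume(event) for consume in self._consumers.get(str(event["type"]), [])]

    def wait_until_delivered(self) -> None:
        """Block until all queued asynchronous events have been processed."""
        self._queue.join()

    def _drain(self) -> None:
        while True:
            event = self._queue.get()
            for consume in self._consumers.get(str(event["type"]), []):
                try:
                    consume(event)
                except Exception as exc:
                    # A failing consumer is recorded, not propagated to the producer.
                    self.failures.append(f"{event['type']}: {exc}")
            self._queue.task_done()
```

In this sketch, a broken consumer of an asynchronous event only adds an entry to `failures`, while a synchronous publish returns the consumers' answers (or raises) so the caller can decide whether to continue.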

This pattern is particularly beneficial when you need to extend your application’s capabilities to get immediate, measurable business value without replacing the system entirely. You ensure zero-downtime application improvements and can release new features quickly. This approach could be particularly useful for extending your legacy application with AI/ML capabilities.

Anti-corruption layer (ACL) pattern

The ACL approach helps businesses enable gradual application modernization by maintaining communication between new microservices and the core system via an anti-corruption layer, so that the potential quality issues in legacy systems (e.g., convoluted data schemas) can’t affect or “corrupt” the newly created services. When the application is fully migrated to the new environment, an ACL can be retired.

The ACL sits between the legacy core and the new microservices and translates calls and data between their models, so each side can call the other without adopting its semantics. For instance, suppose the User service is extracted from the monolithic application as a standalone microservice. If other monolithic services, such as a Cart service, called the new User service directly, the Cart service would have to be changed to match the new interface and data model. To avoid this and enable safe data exchange between new and monolithic services, the ACL is introduced.

This pattern is useful when you need to keep your monolithic application functioning for as long as possible. But ACL is rarely a standalone solution. Instead, it is an additional layer of safety when modernizing legacy solutions.
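As a rough illustration of what the translation inside an ACL can look like, the sketch below maps a legacy user record to a clean domain model and back. The legacy column names (`USR_ID`, `FNAME`, `STAT_CD`, `REG_DT`), their encodings, and the `User` fields are all invented for this example and do not describe any particular system.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class User:
    """Clean model used by the new services (hypothetical fields)."""
    user_id: int
    full_name: str
    is_active: bool
    registered_on: date


class LegacyUserTranslator:
    """Anti-corruption layer: the only place that knows legacy column names and codes."""

    def to_domain(self, row: dict) -> User:
        # Legacy quirks (padded IDs, status codes, string dates) stop here.
        return User(
            user_id=int(row["USR_ID"]),
            full_name=f"{row['FNAME'].strip()} {row['LNAME'].strip()}".title(),
            is_active=row["STAT_CD"] == "A",
            registered_on=datetime.strptime(row["REG_DT"], "%Y%m%d").date(),
        )

    def to_legacy(self, user: User) -> dict:
        # Reverse mapping for calls that flow from new services back to the monolith.
        first, _, last = user.full_name.partition(" ")
        return {
            "USR_ID": str(user.user_id).zfill(8),
            "FNAME": first.upper(),
            "LNAME": last.upper(),
            "STAT_CD": "A" if user.is_active else "I",
            "REG_DT": user.registered_on.strftime("%Y%m%d"),
        }
```

Because all knowledge of the legacy schema lives in one class, retiring the ACL after the migration means deleting the translator rather than untangling legacy conventions from every new service.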

How to choose the right modernization pattern

The table below helps you to quickly grasp the essence of the core application modernization patterns that prevent business downtime.

| Dimension | Strangler fig pattern | Leave-and-layer pattern | Anti-corruption layer (ACL) pattern |
|---|---|---|---|
| Primary goal | Incrementally replace legacy functionality with modern services | Add new capabilities without touching the legacy core | Protect new systems from legacy complexity and data models |
| Core idea | Gradually “strangle” the monolith by routing functionality to new services | Keep the legacy system intact and layer new services around it via events | Insert a translation layer between legacy and modern systems |
| Impact on the legacy system | Legacy components are progressively removed | Legacy system remains largely unchanged | Legacy system remains unchanged |
| Risk profile | Medium: requires careful dependency management | Low: minimal changes to legacy core | Low to medium: depends on integration complexity |
| Downtime reduction | High: parallel run and gradual traffic redirection | High: new features don’t interfere with legacy flows | High: isolates failures and behavior mismatches |
| Typical architecture changes | API gateway/facade, new microservices, gradual traffic routing | Event bus (e.g., EventBridge, Kafka), new consumers and services | Adapter or translation layer between systems |
| Data strategy | Often requires gradual data ownership migration | Legacy remains the system of record; data replicated via events | Data transformed and normalized at the boundary |
| Best starting domains | Payments, tracking, reporting, and authentication | Analytics, notifications, AI/ML features | Integration-heavy application services with complex legacy models |
| Time to first value | Medium: requires extraction and validation | Fast: new features can be added quickly | Fast to medium: depends on integration effort |
| Long-term outcome | Monolith shrinks and can eventually be retired | Monolith persists, but business evolves around it | Clean modern architecture insulated from legacy debt |
| Operational complexity | Moderate: parallel systems and routing logic | Lower: fewer changes to core operations | Moderate: translation logic must be maintained |
| When it works best | When the legacy system can be safely decomposed | When the legacy system is too risky or costly to modify | When legacy models are incompatible with modern design |
| When it struggles | Highly entangled monoliths with shared DB logic | When the legacy must eventually be retired | If ACL becomes overly complex or poorly governed |

The list of modernization patterns for efficiently decomposing the monolithic architecture is much longer, but we’ve covered the most common ones. Expert solution architects know which ones to choose depending on the business’s goals and the complexity of the legacy system.

Your modernization decisions should consider both immediate operational needs and long-term strategic goals. The patterns that work best maintain business continuity while building toward a more flexible, scalable architecture.

An engineering team can combine different modernization patterns to achieve an even more efficient application modernization. For instance, the strangler fig pattern works well in conjunction with the leave-and-layer or the ACL one, and this combination can help you accelerate monolith decomposition.

Phased modernization roadmap with a focus on business continuity

Zero-downtime modernization is rarely achieved through a single architectural decision. It is the result of a phased execution model that balances progress with control. A structured roadmap allows organizations to modernize critical systems while preserving availability, data integrity, and user experience.

Let’s walk through the modernization process with a fictional logistics company to see how to avoid downtime in practice. The company runs a monolithic application on an Oracle database that handles tracking, pricing, warehouse updates, and invoicing.

Disclaimer: The modernization path in this section is provided for illustrative purposes only and includes general points without a detailed explanation of every technical and architectural choice.

Phase 1: Comprehensive assessment

Our initial goal is to create a complete application map that highlights data flows, integration points, and hidden complexities that have accumulated over years of patches and modifications in the legacy system.

This in-depth analysis is essential for planning a safe, incremental legacy application migration. The company uses recovery time objective (RTO) as its metric: the maximum acceptable time a function can be unavailable before it causes significant organizational damage. The aim is, of course, to avoid downtime altogether, but if an incident does occur, the team needs to know how much time it has to restore the system.
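As a simple illustration of how RTO targets can be checked against what recovery drills actually show, consider the sketch below. The business functions and the target values are invented for this example; real targets come out of the assessment itself.

```python
from datetime import timedelta

# Hypothetical RTO targets per business function.
RTO = {
    "tracking": timedelta(minutes=15),
    "pricing": timedelta(minutes=5),
    "invoicing": timedelta(hours=4),
}


def rto_breaches(measured_recovery: dict) -> dict:
    """Return the functions whose measured recovery time exceeds the RTO, with the overshoot."""
    return {
        name: measured - RTO[name]
        for name, measured in measured_recovery.items()
        if measured > RTO[name]
    }
```

A drill in which pricing took 12 minutes to recover would be reported as a 7-minute breach, which flags pricing as a function that needs a faster recovery path before any migration work touches it.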

Example outcomes:

- 26 cron jobs (scheduled automated commands) are silently orchestrating pricing, warehouse updates, and invoice preparation.

- The Oracle database holds business logic in the form of triggers, stored procedures, and views that the monolith relies on.

- A “simple” tracking API turns out to involve five external carriers, each integrated differently through XML files exchanged over SFTP (a manual, inflexible approach).

Phase 2: Architectural design for resilience and incremental transition

The company decides to decouple a monolith into microservices using the strangler fig pattern. A microservices architecture will allow the company to rebuild the legacy system piece by piece, deploying new services independently without affecting the rest of the application.

Example decisions:

- Introducing an API Gateway as the single point of entry to route traffic between old and new components without disrupting users.

- Selecting Tracking as the first application service to extract from the monolithic architecture because it has fewer dependencies and a clean boundary.

- Postponing modernization of Invoicing because it’s deeply intertwined with the old data schema and poses a high business risk.
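One way to support decisions like these is to rank modules by how coupled they are, using the dependency map produced during the assessment. The sketch below is a simplified heuristic under assumed data: the module names and the dependency map are hypothetical, and a real ranking would also weigh business risk and data ownership.

```python
from collections import defaultdict

# Hypothetical dependency map: module -> modules it calls or whose tables it reads.
DEPENDS_ON = {
    "tracking": {"carriers"},
    "pricing": {"catalog", "warehouse"},
    "invoicing": {"pricing", "orders", "customers", "warehouse"},
    "notifications": {"orders", "customers"},
}


def rank_extraction_candidates(depends_on):
    """Order modules from least to most coupled (outgoing plus incoming dependencies)."""
    incoming = defaultdict(set)
    for module, targets in depends_on.items():
        for target in targets:
            incoming[target].add(module)
    coupling = {
        module: len(targets) + len(incoming[module])
        for module, targets in depends_on.items()
    }
    # Sort by coupling score, then by name so the order is deterministic.
    return sorted(coupling.items(), key=lambda item: (item[1], item[0]))
```

With this sample data, `tracking` ranks first and `invoicing` last, which mirrors the choice to extract Tracking early and postpone Invoicing.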

Phase 3: Incremental rebuild and parallel operation strategies

Instead of shutting down the monolith or migrating everything at once, the organization rebuilds individual domains in a parallel operating model. New services are constructed beside the existing system, initially hidden behind facades until fully validated.

Each component processes real production inputs in “shadow mode,” allowing engineers to compare outputs from old and new logic without impacting users. This approach exposes behavioral inconsistencies early, whether due to undocumented legacy rules or complex integration flows, and allows teams to correct them before any user traffic is redirected.

Parallel operation becomes the backbone of the modernization strategy: a way to introduce new functionality safely, test thoroughly, and transition gradually.
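A shadow-mode comparison can be sketched as a wrapper that always returns the legacy result while running the new implementation on the side. The function names and the pricing rules below are hypothetical, and the candidate is called inline for brevity; in practice it would usually run on mirrored traffic or in a background worker so it cannot add latency.

```python
from typing import Callable, List


def shadow_call(request, legacy: Callable, candidate: Callable, mismatches: List[dict]):
    """Serve the legacy result; run the candidate on the side and record differences."""
    legacy_result = legacy(request)
    try:
        candidate_result = candidate(request)
        if candidate_result != legacy_result:
            mismatches.append(
                {"request": request, "legacy": legacy_result, "candidate": candidate_result}
            )
    except Exception as exc:
        # A failing candidate must never affect what the user receives.
        mismatches.append({"request": request, "legacy": legacy_result, "error": repr(exc)})
    return legacy_result


def legacy_price(req):
    """Hypothetical legacy rule: 10% surcharge on weekends (weekday 5 or 6)."""
    base = req["base_cents"]
    return base * 110 // 100 if req["weekday"] >= 5 else base


def new_price(req):
    """Hypothetical rewrite that missed the undocumented weekend rule."""
    return req["base_cents"]
```

Running weekday and weekend requests through `shadow_call` leaves users on the legacy price in both cases, while the weekend request lands in `mismatches` and points engineers at the missing rule.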

Example outcomes:

- The new Tracking service processed mirrored production traffic, exposing edge cases like timezone offsets buried in legacy helper classes.

- Engineers rebuilt the pricing logic in a standalone service and validated it by comparing 100,000 pricing computations against those from the monolith.

- Legacy warehouse update scripts were replaced with event-driven components that ran in parallel until their output matched exactly.

Over time, more and more functionality is migrated until the legacy system is fully encapsulated and can be safely decommissioned.

Phase 4: Data migration

A zero-downtime rebuild hinges on the ability to migrate data from the legacy database to the new data stores without interrupting data access or compromising integrity. The new microservices architecture typically calls for a decentralized data model, with each service managing its own database. The challenge is to move data from the old, centralized model to the new, distributed one while the application is live and actively writing new data.

Example transitions:

- Tables that only served reporting workloads were moved first, freeing the monolith from heavy analytical queries.

- A change data capture (CDC) pipeline began streaming updates from Oracle to Postgres, enabling real-time synchronization during migrations.

- Batch jobs that imported warehouse files were replaced with a Kafka-based data pipeline that immediately processed updates.

- The new cloud-based data stores were configured with robust access controls, encryption at rest, and comprehensive auditing capabilities.
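The core of keeping a target store in sync through CDC is applying change events in order and making replays harmless. The sketch below assumes a simplified event shape with a monotonically increasing `seq` field, an `op`, a `key`, and a `row`; this shape is invented for illustration and is not the format of any specific CDC tool.

```python
from typing import Dict, Iterable


def apply_changes(target: Dict[int, dict], events: Iterable[dict], last_applied: int) -> int:
    """Apply ordered change events to `target` and return the new position."""
    for event in events:
        if event["seq"] <= last_applied:
            continue  # replayed event: applying it again must be a no-op
        if event["op"] in ("insert", "update"):
            target[event["key"]] = event["row"]
        elif event["op"] == "delete":
            target.pop(event["key"], None)
        else:
            raise ValueError(f"unknown operation: {event['op']}")
        last_applied = event["seq"]
    return last_applied
```

Persisting the returned position together with the applied rows is what lets the pipeline resume after a restart without losing or double-applying changes.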

The Oracle DB didn’t disappear, but it became smaller, simpler, and less risky, turning into a legacy shell rather than the single source of truth.

Phase 5: Rigorous testing

The cost of application modernization failure is high, as 72% of senior IT decision-makers report significant downtime due to resilience issues with the IT infrastructure. That’s why building a comprehensive testing environment is crucial to validate the new system’s functionality, performance, and resilience without disrupting the live production environment.

For instance, contract testing ensures that the APIs of the new microservices are compatible with their consumers. End-to-end tests must be carefully designed to run against the live, hybrid environment, validating user journeys that may span both the legacy monolith and new services.
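In its simplest form, a contract check compares what a provider returns with the fields and types a consumer relies on. The sketch below is hand-rolled for illustration; the contract, the field names, and the `contract_violations` helper are hypothetical, and dedicated contract-testing tools cover far more (versioning, provider verification, and so on).

```python
# Hypothetical contract: the fields a consumer of the Tracking API relies on.
TRACKING_CONTRACT = {"shipment_id": str, "status": str, "eta_minutes": int}


def contract_violations(response: dict, contract: dict) -> list:
    """Compare a provider response with the fields and types a consumer expects."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    # Extra fields in the response are allowed: consumers ignore what they do not use.
    return problems
```

Running the same check against both the legacy endpoint and the new service before redirecting traffic catches cases where the rewrite silently changes a type or drops a field.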

Example test insights:

- Side-by-side testing revealed undocumented pricing exceptions applied only on weekends.

- Performance tests identified a bottleneck in the new API caused by a legacy XML parsing dependency.

- Integration tests exposed that one warehouse partner returned malformed XML files incompatible with the new parser.

Phase 6: Orchestrated cutover and seamless transition

The cutover is the moment of truth, where production traffic is fully and finally directed to the new system. The company decides on a blue-green deployment, which involves running two production environments side by side: “blue” (the existing system) and “green” (the new system). Once the green environment is thoroughly tested and validated, the router is switched to direct all traffic to it. If any issues are detected, the switch can be flipped back to blue instantly.

Every step of the cutover must be reversible. A rollback strategy is as important as the deployment plan itself. It means having automated procedures to instantly switch traffic back to the legacy system if a critical issue is discovered post-cutover.
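The reversibility described above can be sketched as a router that holds a pointer to the live environment, switches it, runs a health check, and flips back if the check fails. `BlueGreenRouter`, the environment handlers, and the health check are hypothetical; in a real setup the switch would typically happen at the load balancer or DNS level.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class BlueGreenRouter:
    """Keeps a pointer to the live environment and can flip it back on failure."""

    def __init__(self, environments: Dict[str, Handler], live: str) -> None:
        self._environments = environments
        self.live = live

    def handle(self, request: dict) -> dict:
        return self._environments[self.live](request)

    def cut_over(self, target: str, health_check: Callable[[Handler], bool]) -> bool:
        """Switch to `target`, verify it, and switch back if the check fails."""
        previous = self.live
        self.live = target
        try:
            healthy = health_check(self._environments[target])
        except Exception:
            healthy = False
        if not healthy:
            # Rollback is just flipping the pointer back to the old environment.
            self.live = previous
        return healthy
```

Because the previous environment keeps running untouched, the rollback in this sketch does not redeploy anything; it only changes where requests are sent.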

Example cutover actions:

- 5% of tracking traffic was routed to the new service, then 20%, then 100%.

- Partners were gradually moved from SFTP-based XML feeds to a modern REST API.

- Legacy modules were kept running for weeks after cutover to validate output and ensure no silent failures were introduced.

Phase 7: Post-rebuild optimization

The journey of application modernization is never truly over. But agile microservices architecture allows different teams to work on different parts of the system independently, accelerating innovation and helping the company maintain system responsiveness.

Example retirements:

- Cron scripts replaced by event-driven flows were disabled one by one, each after a 30-day observation window.

- Tables no longer used by any service were archived and removed from Oracle, reducing maintenance complexity.

- The monolith lost responsibility for tracking, pricing, notifications, and reporting, shrinking by roughly 70%.

Engineers now ship weekly releases because the new services deploy independently.

Observability dashboards made issues visible in minutes instead of days. New features, such as real-time shipment visibility, were delivered on infrastructure that won’t break under load.

The logistics company went from “don’t touch anything, it might break” to “we can ship new features safely any time.”

Checklist for choosing an external application modernization partner

Even a gradual application modernization framework can still be complex, and you might need an external partner to help you prepare and manage the process. A partner can make or break your modernization initiative. To make sure you select the right one, use our detailed table below.

| Evaluation area | What to assess | Why it matters | Questions to ask | Red flags | What “good” looks like |
|---|---|---|---|---|---|
| Legacy system understanding | Ability to analyze complex, undocumented legacy environments | Most failures come from hidden dependencies and assumptions | How do you map business logic, data flows, and integrations before starting? | “We’ll figure it out as we go.” | Structured discovery, dependency mapping, and system forensics |
| Zero-downtime experience | Proven track record of modernizing live, mission-critical systems | Downtime equals revenue loss, SLA breaches, and reputational damage | Can you show examples of live systems modernized without outages? | Case studies focus only on greenfield builds | Concrete examples with parallel runs and controlled cutovers |
| Incremental migration approach | Use of patterns like strangler fig, leave-and-layer, phased rollouts | Big-bang rewrites dramatically increase risk | How do you migrate functionality while the system stays live? | One-time cutover plans | Clear, step-by-step migration roadmap with rollback paths |
| Data migration & integrity | Strategy for real-time data sync, CDC, and consistency guarantees | Data issues are the hardest and most expensive failures | How do you prevent data loss or divergence during migration? | Data migration is treated as an afterthought | Dual writes, CDC pipelines, validation, and reconciliation plans |
| Operational maturity | CI/CD, observability, SRE, and incident response practices | Modern systems fail without strong operations | How do you monitor, test, and roll back changes in production? | Manual deployments, weak monitoring | Automated pipelines, SLOs, and real-time observability |
| Business & governance alignment | Ability to align tech decisions with business priorities and constraints | Modernization is a business-oriented program | How do you work with CIOs, architects, and procurement? | Purely technical delivery mindset | Clear governance, transparent planning, business-aligned milestones |

A strong modernization partner can explain each technological decision in business terms and develop modern architectures, but in a way that aligns with your domain needs rather than simply applying the same cookie-cutter modernization logic to every client.

They should treat your legacy software with respect, as one of the most critical components of your IT infrastructure, and aim for the least disruptive way to modernize it. Avoid vendors who overly criticize your current architecture or systems and suggest a complete overhaul within 6 months. This is disrespectful and unrealistic, the worst combination in an engineering partner.

Key takeaways

Once you find a balance between visibility into your legacy stack and its integration with your business operations, certainty in technological and architectural decisions, and control over gradual system updates, legacy modernization becomes manageable, measurable, and far less likely to stall halfway through.

The key takeaways are as follows:

- Plan meticulously. Success begins with a clear, business-aligned modernization roadmap.

- Architect for transition. Design a system that can coexist with the legacy one, enabling an incremental migration.

- Embrace automation. DevOps, CI/CD, and automated testing are essential for managing risk and complexity.

- Data is the backbone. A real-time data synchronization strategy is the linchpin of a seamless, no-data-loss transition.

- Cutover with control. Use modern deployment strategies, such as blue-green, to make the final transition a low-risk, reversible event.

Our solution architects, business analysts, and data engineers work with CIOs and engineering leaders to provide software modernization services as a managed program, breaking down large-scale legacy system transformation into well-planned, measurable phases that deliver value without disrupting business operations.