A Reddit user stated that their large-scale financial data migration project had been ongoing for over five years and involved nearly 100 people. It’s hard to imagine a data migration taking this long, but many factors could have led to it: a lack of documentation and proper planning at the outset, an inefficient team (judging by the number of people involved), or scope creep.

The data migration project, which initially aimed to optimize costs and enhance decision-making through improved analytics, evolved into a rushed “firefighting” effort with lots of money and time lost in the void.

Companies overrun their allocated data migration budgets by $0.3 million per dataset. When numerous datasets are migrated simultaneously, costs can quickly spiral out of control, while stakeholders fail to see the promised value of moving to a new system or environment.

To avoid wondering mid-process why data migration is taking so long and costing so much, start with a thorough estimation stage and a risk mitigation plan that shows how to resolve issues before they escalate. This is how to conduct data migration with minimal disruption.

In this article, we’ll analyze the entire data migration lifecycle to identify potential risks that can increase budgets and duration, and provide you with clear strategies and best practices to predict and mitigate them.

Why data migration projects fail

Businesses plant the seed of data migration success or failure before the project even begins, often by following one or more of the three mindset patterns below.

Tech-first approach

Data migration isn’t a purely technical job. In reality, it’s an enterprise-wide transformation that requires alignment, governance, and continuous validation at every stage. Treating it as a purely technical project can lead to misalignment with business objectives.

For instance, a global retailer decided to migrate its legacy ERP data from on-premises to the cloud. In the process, the team overlooked the impact of the move on existing analytics workflows, resulting in significant disruption. Beyond simply transferring data, they should have accounted for all ERP integrations and ensured they were configured correctly in the cloud.

This would have required gathering requirements from every team relying on ERP data, as well as preparing training materials to help staff adapt to the new environment more seamlessly.

Underestimating data migration planning and ownership

Even on a small scale, data migration can be complex and requires thorough preparation, starting with an inventory of every dataset to be migrated. Assuming that it’s simply a matter of moving data from the source to the target system, and that any data will fit seamlessly into the target, can disrupt not only the migration itself but also the business workflows that depend on the data.

In practice, legacy logic, proprietary formats, and integrations make data migration far more complex. That’s why, to migrate data between systems and environments successfully, organizations need to invest time in a data migration plan and assign a data migration owner to control the process and prevent unexpected issues.

Overreliance on cloud and migration tools

Businesses may assume that choosing a modern cloud platform or an ETL tool automatically solves data migration challenges. But tools are only enablers; they won’t replace planning, governance, and skilled execution. For instance, tools alone won’t identify and resolve data quality and compatibility issues. That requires competent data specialists with domain expertise to audit and profile the data, validate the results, and set up ongoing data quality monitoring.

By prioritizing technology over planning and ownership, teams will struggle to control the progress of data migration and ensure it stays within the estimated budget, scope, and timeline.

Data migration demystified: Types, strategies, risks, and tools

Before planning data migration, businesses should define the type and strategy of the migration. It’s like choosing the path towards the desired destination.

Data migration types and strategies

There are five types of data migration:

- Storage migration. Companies upgrade hardware with more on-premises servers or move to cloud storage to set up a centralized data repository.

- Database migration. This type involves shifting between database vendors (e.g., migrating from Oracle database to PostgreSQL or MongoDB).

- Application migration. Businesses move entire applications, along with their data, to a new environment (between on-premises data centers or into the cloud).

- Cloud migration. For this type, organizations typically choose between public, private, or hybrid cloud solutions for their data migration.

- Business process migration. A complex type of migration that requires reengineering workflows along with data.

Often, multiple types of data migration co-occur, such as migrating a few applications along with their databases from on-premises to the cloud or to a hybrid environment.

Depending on the use case at hand and the complexity of migration, the following data migration strategies exist:

- Big bang. All data is moved in a single, large-scale transfer, offering speed but carrying a high risk if something goes wrong. This data migration strategy leaves no room for proper incremental testing and can lead to a cascade of issues in the post-migration environment.

- Phased. Data is migrated in stages (by department, function, or dataset), which reduces risk but extends the overall timeline. Choosing a phased migration means accepting that tradeoff: longer timelines in exchange for fewer risks.

- Parallel. During this migration, both old and new systems run simultaneously, ensuring business continuity. However, this approach can potentially increase operational costs, as you may need larger or more skilled teams to support parallel processes.

- Lift and shift. Data and applications are moved “as is” to a new environment, delivering quick results but with minimal modernization benefits. It’s a cost-effective and fast approach, but it may not be suitable for legacy software.

- Strangler fig. This pattern enables the gradual replacement of legacy components with modern ones while both systems run in parallel.

- Rearchitecting. When existing data flows can’t support cloud-native or modern architectures, redesigning entire systems may be unavoidable.

A thorough cost-benefit analysis, combined with a technological assessment, can help you make an informed decision regarding your data migration strategy.

For instance, you may determine early on that your software requires rearchitecting and then opt for a phased migration to verify that everything runs smoothly. Redesigning the system at the start of the data migration can prove more cost-efficient than realizing mid-process that your legacy system isn’t suitable for migration.

Risks at different data migration stages and how to overcome them

The more unresolved risks accumulate before and during the data migration process, the longer the migration will take and the harder it’ll be to resolve those risks in the post-migration environment.

Being aware of the risks at each data migration stage, and avoiding them, is the first item on any successful data migration checklist.

#1. Assessment and planning

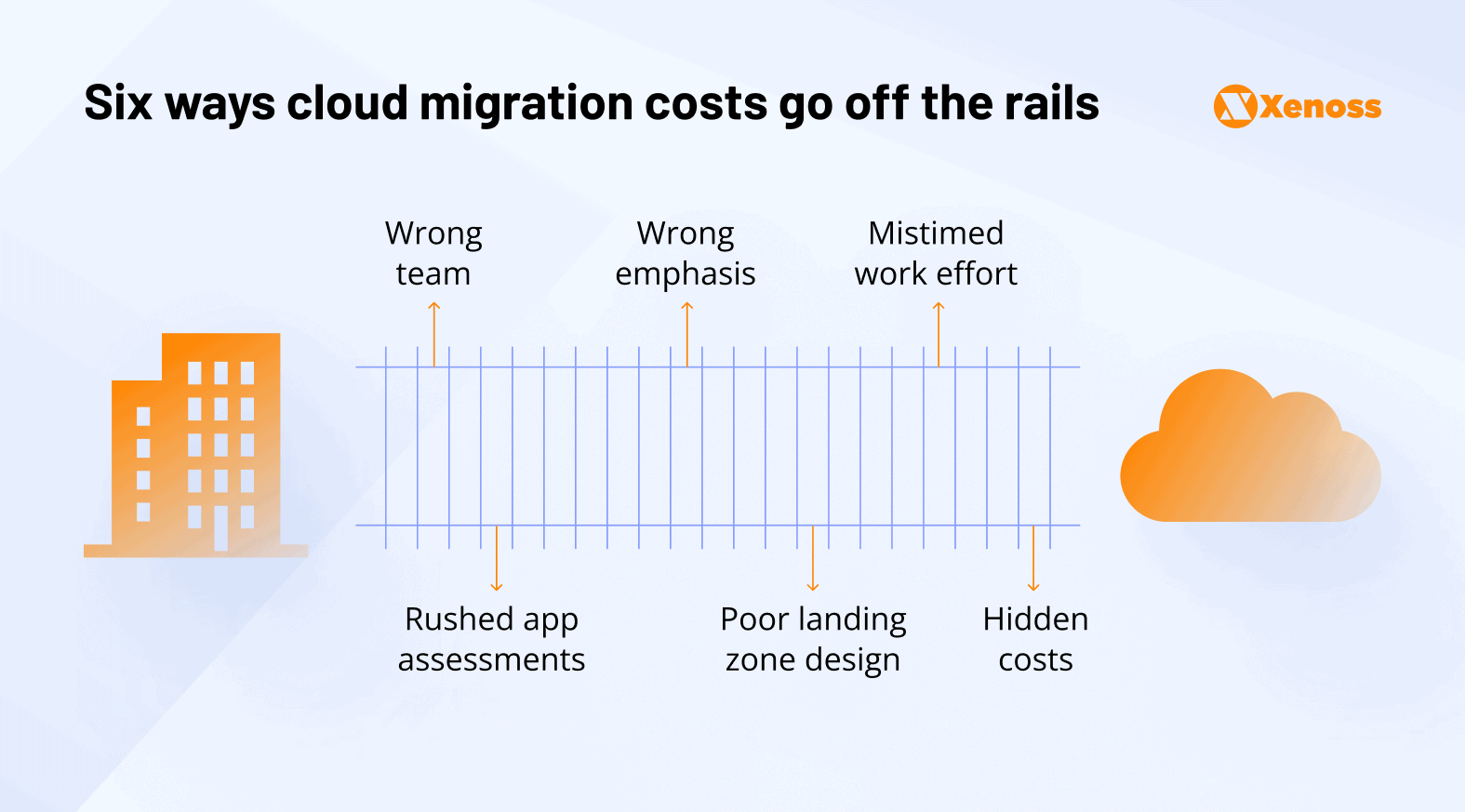

At this stage, it’s important not to rush and to consider every component of the future migration with due caution. Otherwise, you risk running into issues such as a lack of stakeholder buy-in or understanding, as well as unrealistic budget and time constraints. Gartner has identified six ways in which businesses overrun their cloud migration costs, and all of them can be mitigated at the pre-migration stage.

The right data migration team includes:

- Data engineers to handle ETL/ELT pipelines.

- Business analysts to map workflow impacts.

- Compliance experts for GDPR/HIPAA audits.

- A project owner to enforce deadlines and budgets.

The next step would be to choose a clear goal, in collaboration with both IT and business leaders, that will guide the entire data migration process. Avoid generic, vague goals, such as “improve analytics”. Instead, set specific, measurable, achievable, relevant, and time-bound (SMART) goals, such as “reduce on-premises storage spend by 40% within 3 to 6 months”, “cut report generation time from 24 hours to 2 within 1 month after migration go-live”, or “achieve zero downtime during cutover”.

Once goals and metrics are defined, your team is ready to perform an assessment: a careful audit of the source system to determine which datasets are ready for migration, which need to be modified, which can’t be migrated due to compliance constraints, and which should be deleted entirely. Assessment results feed the final data migration scope.
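The four-way split above can be sketched as a simple triage over a dataset inventory. The `DatasetProfile` fields, the thresholds, and the rule order below are hypothetical illustrations, not a standard classification scheme; real rules come out of the audit itself.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    MIGRATE_AS_IS = "migrate as is"
    MODIFY = "modify before migrating"
    BLOCKED_BY_COMPLIANCE = "cannot migrate (compliance)"
    DELETE = "delete"


@dataclass
class DatasetProfile:
    # Hypothetical audit attributes collected for each source dataset.
    name: str
    last_accessed_days: int     # days since anyone last queried the dataset
    error_rate: float           # share of rows failing quality checks (0..1)
    residency_restricted: bool  # e.g., data that must stay in its current jurisdiction
    schema_compatible: bool     # fits the target schema without changes


def triage(ds: DatasetProfile, stale_after_days: int = 730, max_error_rate: float = 0.01) -> Decision:
    """Assign a dataset to one of the four assessment buckets (illustrative rules only)."""
    if ds.residency_restricted:
        return Decision.BLOCKED_BY_COMPLIANCE  # compliance is checked first
    if ds.last_accessed_days > stale_after_days:
        return Decision.DELETE
    if not ds.schema_compatible or ds.error_rate > max_error_rate:
        return Decision.MODIFY
    return Decision.MIGRATE_AS_IS


# Hypothetical inventory entries.
inventory = [
    DatasetProfile("customers", 1, 0.002, False, True),
    DatasetProfile("orders_2009_archive", 1500, 0.0, False, True),
    DatasetProfile("eu_patient_records", 3, 0.0, True, True),
    DatasetProfile("legacy_invoices", 20, 0.05, False, False),
]

for ds in inventory:
    print(f"{ds.name}: {triage(ds).value}")
```

A stale dataset may still fall under retention rules, so in practice a “delete” decision should be confirmed with the compliance experts on the team before anything is removed.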

Finally, to minimize cost-related surprises during the migration, identify compliance needs and budgets, and establish contingency buffers for both time and budget (10–25%, depending on project complexity, according to the Project Management Institute) to cover unexpected expenses. Even the most careful planning cannot account for every possibility.
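As a rough sketch of the arithmetic, the snippet below applies a buffer within the 10–25% range to a base estimate. The linear mapping from a 0–1 complexity score to a percentage, and the sample budget and timeline, are hypothetical assumptions for illustration only.

```python
from decimal import Decimal, ROUND_HALF_UP

MIN_BUFFER = Decimal("0.10")  # lower end of the 10-25% range
MAX_BUFFER = Decimal("0.25")  # upper end of the range


def contingency_rate(complexity: Decimal) -> Decimal:
    """Map a complexity score in [0, 1] linearly onto the 10-25% range (hypothetical convention)."""
    if not Decimal("0") <= complexity <= Decimal("1"):
        raise ValueError("complexity must be between 0 and 1")
    return MIN_BUFFER + (MAX_BUFFER - MIN_BUFFER) * complexity


def with_contingency(base_budget: Decimal, base_weeks: Decimal, complexity: Decimal):
    """Return (rate, buffered budget rounded to whole dollars, buffered weeks to one decimal)."""
    rate = contingency_rate(complexity)
    budget = (base_budget * (1 + rate)).quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    weeks = (base_weeks * (1 + rate)).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)
    return rate, budget, weeks


# Hypothetical estimate: $400,000 over 20 weeks, moderately complex (score 0.6).
rate, budget, weeks = with_contingency(Decimal("400000"), Decimal("20"), Decimal("0.6"))
print(rate, budget, weeks)  # 0.190 476000 23.8
```

Decimal arithmetic keeps budget figures exact; the buffer itself should still be revisited as the assessment uncovers new risks.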

The assessment and planning stage involves assembling a team and defining the scope, budget, timeline, and contingency plan. Once that’s done, you can proceed with data preparation, much like a surgeon preparing the operating room for a patient with a specific health issue.

#2. Data preparation

Poor data quality will make data migration more challenging, as nothing is more frustrating than discovering that your customer data is incomplete or duplicated after migration is complete.

For example, Sun Microsystems undertook a massive data migration project to consolidate over 800 legacy systems into a single, centralized hub. From a technical standpoint, data deduplication was one of their biggest challenges. The data quality team established ongoing deduplication processes that continued even after the migration was complete. This approach enabled the company to execute a successful five-phase migration without data quality issues reaching the production environment.

Dalton Cervo, a Customer Data Quality Lead at Sun Microsystems, said:

Data migration was a huge challenge, where we had to adapt all legacy systems idiosyncrasies into a common structure. We had to perform a large data cleansing effort to avoid the data from falling out, and not being properly converted.

To avoid costly rework after migration, use data profiling to find inconsistencies, duplicates, incomplete records, and outdated or irrelevant data, and apply data cleansing techniques such as parsing, matching, enrichment, and standardization to fix them.

For instance, data cleansing involves merging records with fuzzy matching (e.g., “Jon Smith” vs. “Jonathan Smith”). For profiling data, you can use tools like Monte Carlo, Great Expectations, or custom AI/ML-powered solutions to flag anomalies (e.g., null values, mismatched formats).
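Fuzzy matching can be sketched with Python’s standard `difflib`. This is a minimal sketch, assuming hypothetical customer records with `id`, `name`, and `postal_code` fields and a hypothetical similarity threshold of 0.75; dedicated matching tools use richer algorithms. Records are first grouped by a blocking key (here the postal code), because name similarity alone is too permissive.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def normalize(name: str) -> str:
    # Lowercase and collapse repeated whitespace so formatting noise doesn't lower the score.
    return " ".join(name.lower().split())


def similarity(a: str, b: str) -> float:
    # Character-based similarity ratio in [0, 1].
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def find_duplicate_pairs(records, threshold=0.75):
    """Return (id, id, score) for records in the same postal code whose names look alike."""
    blocks = defaultdict(list)
    for rec in records:
        # Blocking: only compare records that share a postal code.
        blocks[rec["postal_code"]].append(rec)

    pairs = []
    for block in blocks.values():
        for left, right in combinations(block, 2):
            score = similarity(left["name"], right["name"])
            if score >= threshold:
                pairs.append((left["id"], right["id"], round(score, 2)))
    return pairs


# Hypothetical sample records.
customers = [
    {"id": 1, "name": "Jon Smith", "postal_code": "10001"},
    {"id": 2, "name": "Jonathan  Smith", "postal_code": "10001"},
    {"id": 3, "name": "Jane Doe", "postal_code": "10001"},
    {"id": 4, "name": "Jonathan Smith", "postal_code": "94105"},
]

print(find_duplicate_pairs(customers))  # [(1, 2, 0.78)]
```

Without blocking, “John Smith” and “Jane Smith” would score about 0.8 and be flagged as duplicates, so candidate pairs like these are best reviewed by a person before records are merged.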

During the data preparation stage, you should also classify and tag sensitive data (e.g., social security numbers, personal health information, credit card details) to ensure regulatory compliance with standards such as GDPR, HIPAA, or PCI DSS.
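A first pass at classification can scan sample values per column and tag columns that consistently match a sensitive-data pattern. The regular expressions below are simplified, US-centric assumptions, not a complete PII catalogue; card numbers are additionally checked with the Luhn checksum to filter out random digit strings. Treat a match as a signal for review, not a guarantee.

```python
import re
from collections import Counter
from typing import Optional

# Simplified, illustrative patterns (assumptions, not a complete catalogue).
SSN_RE = re.compile(r"^\d{3}-\d{2}-\d{4}$")
CARD_RE = re.compile(r"^(?:\d[ -]?){12,18}\d$")  # 13-19 digits with optional space/hyphen separators
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")


def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def value_tag(value: str) -> Optional[str]:
    v = value.strip()
    if SSN_RE.match(v):
        return "SSN"
    if CARD_RE.match(v) and luhn_valid(v):
        return "PAYMENT_CARD"
    if EMAIL_RE.match(v):
        return "EMAIL"
    return None


def tag_columns(rows, min_share=0.8):
    """Tag a column when at least `min_share` of its non-empty sample values match one pattern."""
    tags = {}
    for col in (rows[0].keys() if rows else []):
        values = [str(r[col]) for r in rows if r.get(col) not in (None, "")]
        if not values:
            continue
        counts = Counter(value_tag(v) for v in values)
        counts.pop(None, None)  # ignore values that matched nothing
        if counts:
            tag, hits = counts.most_common(1)[0]
            if hits / len(values) >= min_share:
                tags[col] = tag
    return tags


# Hypothetical sample rows (the card numbers are well-known test numbers).
sample = [
    {"id": 1, "contact": "ann@example.com", "tax_id": "123-45-6789", "card": "4111 1111 1111 1111"},
    {"id": 2, "contact": "bob@example.org", "tax_id": "987-65-4321", "card": "5555-5555-5555-4444"},
]
print(tag_columns(sample))
```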

Combine both manual and automated efforts to monitor key data quality metrics, including error rate, volume of dark data, and duplicate record rate.
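The automated side of this monitoring can start small. The sketch below computes an error rate against validation rules, a duplicate record rate by business key, and per-column null rates for one batch. The rule set and the sample batch are hypothetical; dark-data volume is left out because it depends on an inventory of data nobody uses, not on the rows themselves.

```python
from collections import Counter


def quality_metrics(rows, key_fields, rules):
    """Compute batch-level data quality metrics.

    rows: list of dicts; key_fields: fields that identify a business entity;
    rules: mapping of rule name -> predicate(row) returning True when the row is valid.
    """
    total = len(rows)
    if total == 0:
        return {"error_rate": 0.0, "duplicate_rate": 0.0, "null_rate": {}}

    # A row counts as an error if it fails at least one rule.
    failing = sum(1 for row in rows if not all(check(row) for check in rules.values()))

    # Every occurrence of a key beyond the first counts as a duplicate.
    keys = Counter(tuple(row.get(f) for f in key_fields) for row in rows)
    duplicates = sum(count - 1 for count in keys.values())

    columns = {col for row in rows for col in row}
    null_rate = {
        col: sum(1 for row in rows if row.get(col) in (None, "")) / total
        for col in sorted(columns)
    }
    return {"error_rate": failing / total, "duplicate_rate": duplicates / total, "null_rate": null_rate}


# Hypothetical batch and rules.
batch = [
    {"customer_id": 1, "email": "ann@example.com", "country": "US"},
    {"customer_id": 2, "email": "", "country": "US"},
    {"customer_id": 2, "email": "bob@example.org", "country": "USA"},
    {"customer_id": 3, "email": "cy@example.net", "country": "DE"},
]
rules = {
    "email_present": lambda r: bool(r.get("email")),
    "iso_country_code": lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2,
}

metrics = quality_metrics(batch, ["customer_id"], rules)
print(metrics)
```

Tracking these numbers per batch, rather than once at the end, makes trends visible early; thresholds for acceptable values are a business decision.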

#3. Design and mapping

Data modeling, mapping, and schema design establish connections and relationships between data elements, facilitating the understanding of data and its context. What works in a source system might not work the same way in the target system. For instance, legacy systems can have data types, encoding formats, and structures that are incompatible with modern platforms.

Develop a high-level design of your data migration process with source-to-target mapping, which involves matching fields, table formats, and data formats. Mapping should also consider integrations and API dependencies of the source systems. The next step would be to apply data lineage tools, such as Collibra, to document which transformation logic (e.g., data conversion) is necessary to better match data in different storage environments.
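A source-to-target mapping can be expressed as data: each target field names its source field and the transformation applied on the way. The legacy field names (`CUST_NM`, `BIRTH_DT`, and so on) and the rules below are hypothetical examples, not taken from any specific system.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical mapping: target field -> (source field, transformation).
MAPPING = {
    "full_name": ("CUST_NM", lambda v: " ".join(v.split()).title()),
    "birth_date": ("BIRTH_DT", lambda v: datetime.strptime(v, "%Y%m%d").date().isoformat()),
    "balance": ("BAL_AMT_CENTS", lambda v: Decimal(int(v)) / 100),
    "status": ("STATUS_CD", lambda v: {"A": "active", "I": "inactive"}[v]),
}


def map_record(source: dict) -> dict:
    """Apply the mapping to one source record, failing loudly instead of loading bad values."""
    target = {}
    for target_field, (source_field, transform) in MAPPING.items():
        try:
            target[target_field] = transform(source[source_field])
        except (KeyError, ValueError) as exc:
            raise ValueError(f"{source_field} -> {target_field}: {exc!r}") from exc
    return target


legacy_row = {"CUST_NM": "  JON   SMITH ", "BIRTH_DT": "19850214", "BAL_AMT_CENTS": "125050", "STATUS_CD": "A"}
print(map_record(legacy_row))
```

Keeping the mapping in one declarative structure makes it easy to review with business analysts and to document alongside lineage records.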

Data mapping techniques can be manual (code-dependent and prone to errors), semi-automated, or fully automated, using tools such as Hevo Data and CloverDX. Incorrect manual-only data mapping can lead to data loss or corruption. Therefore, it’s better to opt for a semi-automated data design and mapping workflow, reserving complete automation for the maintenance and monitoring stage when minor fixes are required.
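One way to make mapping semi-automated is to generate a coverage report that people then review. This sketch, with hypothetical column names, flags source columns no one decided about (a common source of silent data loss), required target columns with no source, and mapping entries that point at columns that don’t exist.

```python
def check_mapping_coverage(source_columns, target_required, mapping, dropped):
    """Report gaps in a source-to-target mapping before any data moves.

    mapping: dict of target column -> source column.
    dropped: source columns intentionally excluded (each should be signed off by a data owner).
    """
    source_columns = set(source_columns)
    mapped_sources = set(mapping.values())
    return {
        # Source columns that are neither mapped nor explicitly dropped.
        "unaccounted_source": sorted(source_columns - mapped_sources - set(dropped)),
        # Required target columns with nothing feeding them.
        "unfilled_target": sorted(set(target_required) - set(mapping)),
        # Mapping entries referring to columns missing from the source (typos, renamed fields).
        "unknown_source": sorted(mapped_sources - source_columns),
    }


# Hypothetical mapping with a deliberate typo ("BAL_AMT_CENT").
report = check_mapping_coverage(
    source_columns=["CUST_NM", "BIRTH_DT", "BAL_AMT_CENTS", "STATUS_CD", "FAX_NO"],
    target_required=["full_name", "birth_date", "balance", "status", "country"],
    mapping={"full_name": "CUST_NM", "birth_date": "BIRTH_DT", "balance": "BAL_AMT_CENT", "status": "STATUS_CD"},
    dropped=[],
)
print(report)
```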

#4. Data conversion and execution

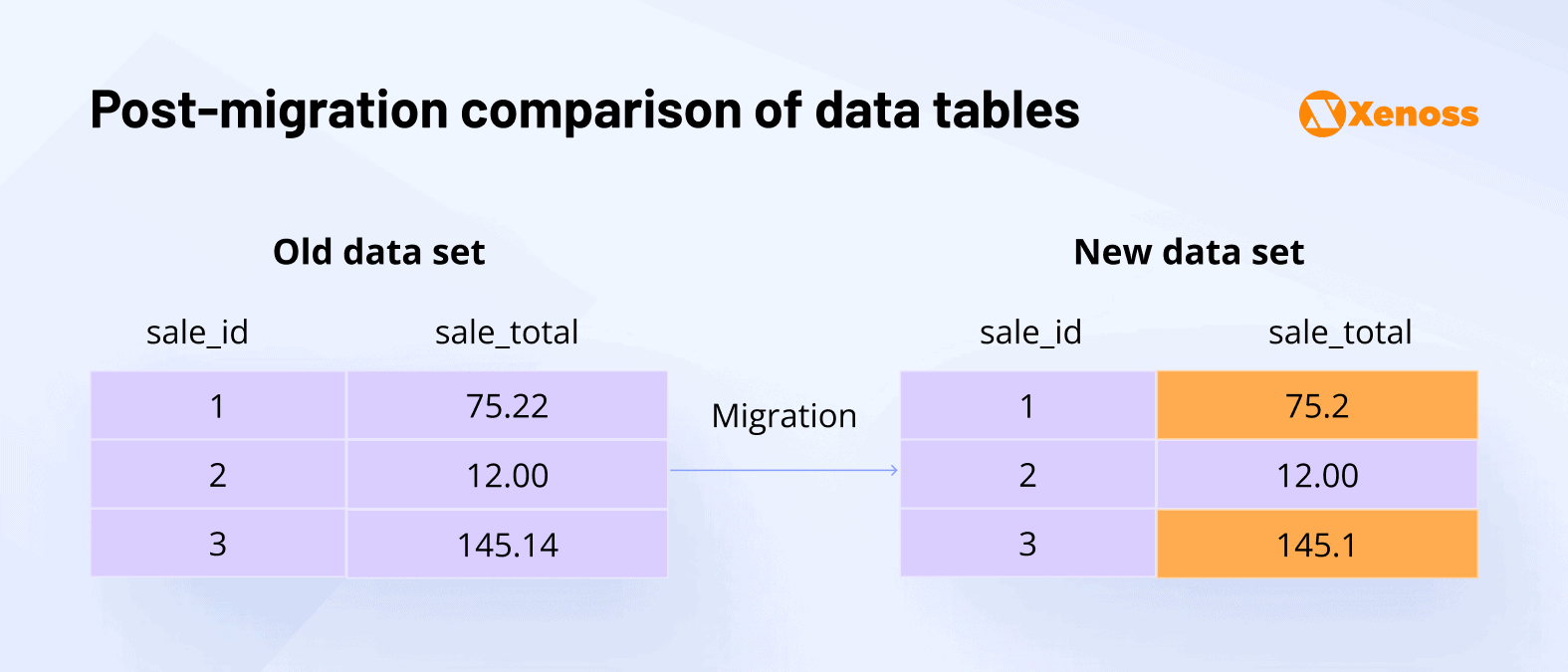

With the transformation logic established, you can proceed to data conversion, adapting your datasets to the new environment so they support fast querying and analytics. Accurate data conversion is essential, as even minor differences in decimal values, as illustrated in the table below, can distort analytics reports and lead to inaccurate revenue calculations.
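A small example shows how a decimal-scale mismatch changes totals. This is a minimal illustration, assuming a hypothetical source column with three decimal places loaded into a target column defined with two; the amounts are made up.

```python
from decimal import Decimal, ROUND_HALF_UP

CENTS = Decimal("0.01")


def load_then_sum(amounts):
    """Target column has 2 decimal places: each value is rounded on load, then summed."""
    return sum((a.quantize(CENTS, rounding=ROUND_HALF_UP) for a in amounts), Decimal("0"))


def sum_then_round(amounts):
    """Full precision is kept; only the final report figure is rounded."""
    return sum(amounts, Decimal("0")).quantize(CENTS, rounding=ROUND_HALF_UP)


# Hypothetical source: 10,000 line items stored with three decimal places.
line_items = [Decimal("19.995")] * 10_000

print(load_then_sum(line_items))   # 200000.00
print(sum_then_round(line_items))  # 199950.00
```

The $50 gap comes purely from where rounding happens, so the conversion spec should state the target precision and the rounding rule explicitly.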

Depending on the destination, you may need to convert your data to different formats. For example, when loading data into a data warehouse or data lakehouse, you’ll often need to convert it from CSV or JSON into formats such as Parquet, ORC, or Avro.
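CSV carries no types, while Parquet, ORC, and Avro need an explicit schema, so a conversion step has to decide each column’s type first. The sketch below infers types with the standard library only; the actual Parquet write would typically be done with a library such as pyarrow, which is not shown. The sample CSV and column names are hypothetical.

```python
import csv
import io
from datetime import date
from decimal import Decimal, InvalidOperation

# Candidate types from narrowest to widest; "string" is the fallback.
CANDIDATES = [("int", int), ("decimal", Decimal), ("date", date.fromisoformat)]


def infer_parser(values):
    """Return (type name, parser) for the first candidate that parses every non-empty value."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "string", str
    for name, parse in CANDIDATES:
        try:
            for v in non_empty:
                parse(v)
        except (ValueError, InvalidOperation):
            continue
        return name, parse
    return "string", str


def to_typed_columns(csv_text, force_text=()):
    """Turn CSV text into (schema, columns) with typed values and None for empty cells."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    schema, columns = {}, {}
    for name in (rows[0].keys() if rows else []):
        raw = [row[name] for row in rows]
        type_name, parse = ("string", str) if name in force_text else infer_parser(raw)
        schema[name] = type_name
        columns[name] = [parse(v) if v != "" else None for v in raw]
    return schema, columns


# Hypothetical extract.
orders_csv = (
    "order_id,amount,order_date,postal_code\n"
    "1,19.99,2024-01-05,02139\n"
    "2,5,2024-01-06,10001\n"
    "3,,2024-01-07,94105\n"
)
schema, cols = to_typed_columns(orders_csv, force_text={"postal_code"})
print(schema)
```

Inference alone would read `postal_code` as an integer and drop the leading zero in “02139”, which is why identifier-like columns are forced to text here; in a real migration the schema is usually declared rather than guessed.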

Apart from different formats, legacy systems can have poor indexing strategies, suboptimal partitioning, and query patterns that don’t fit the target platform. Legacy data itself often needs specialized handling: a skilled data engineering team can develop custom conversion engines that process proprietary formats, COBOL data types, and mainframe encoding schemes (e.g., EBCDIC), converting them into Unicode or ASCII.
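Two common building blocks of such a conversion engine are decoding EBCDIC text and unpacking COBOL packed-decimal (COMP-3) fields. This is a minimal sketch, assuming the US/Canada EBCDIC code page (Python’s built-in `cp037` codec) and the common sign-nibble convention; real copybooks may use other code pages and field layouts.

```python
from decimal import Decimal


def decode_ebcdic(raw: bytes) -> str:
    # cp037 is Python's codec for EBCDIC US/Canada; other code pages (e.g., cp500) also exist.
    return raw.decode("cp037")


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode a COBOL COMP-3 (packed decimal) field.

    Each byte holds two 4-bit digits; the last nibble is the sign
    (0xC or 0xF positive, 0xD negative). `scale` is the number of implied
    decimal places from the PIC clause, e.g. PIC S9(3)V99 -> scale=2.
    """
    if not raw:
        raise ValueError("empty COMP-3 field")
    nibbles = []
    for byte in raw:
        nibbles.extend((byte >> 4, byte & 0x0F))
    sign_nibble = nibbles.pop()
    if any(n > 9 for n in nibbles):
        raise ValueError(f"invalid digit nibble in {raw.hex()}")
    if sign_nibble == 0x0D:
        sign = -1
    elif sign_nibble in (0x0C, 0x0F):
        sign = 1
    else:
        raise ValueError(f"unknown sign nibble {sign_nibble:#x}")
    value = int("".join(map(str, nibbles)))
    return Decimal(sign * value).scaleb(-scale)


print(decode_ebcdic(bytes([0xC8, 0xC5, 0xD3, 0xD3, 0xD6])))  # HELLO
print(unpack_comp3(bytes([0x12, 0x34, 0x5C]), scale=2))      # 123.45
print(unpack_comp3(bytes([0x12, 0x34, 0x5D]), scale=2))      # -123.45
```

Returning `Decimal` rather than `float` keeps monetary values exact through the rest of the pipeline.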

Data cleansing, transformation logic, and conversion are all pre-migration steps that enable the execution of extract, transform, load (ETL) or extract, load, transform (ELT) data pipelines. With their help, data migration begins. You start incrementally loading data into the target systems while carefully validating and testing the whole process.
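Incremental loading can be sketched as batched copies driven by a stored watermark, so a failed run resumes where it stopped instead of starting over. SQLite in-memory databases stand in for the source and target here, and the `customers` and `load_state` tables are hypothetical; a production pipeline would use its ETL/ELT tool or the databases’ own drivers.

```python
import sqlite3


def incremental_load(src, dst, batch_size=2):
    """Copy customers from src to dst in primary-key batches, resuming from a stored watermark."""
    with dst:
        dst.execute(
            "CREATE TABLE IF NOT EXISTS load_state (id INTEGER PRIMARY KEY CHECK (id = 1), last_id INTEGER)"
        )
        dst.execute("INSERT OR IGNORE INTO load_state VALUES (1, 0)")
    (last_id,) = dst.execute("SELECT last_id FROM load_state").fetchone()

    while True:
        # Keyset pagination: "id > watermark" tolerates gaps in the id sequence.
        batch = src.execute(
            "SELECT id, name, balance FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not batch:
            return last_id
        with dst:  # the rows and the new watermark commit (or roll back) together
            dst.executemany("INSERT INTO customers (id, name, balance) VALUES (?, ?, ?)", batch)
            last_id = batch[-1][0]
            dst.execute("UPDATE load_state SET last_id = ?", (last_id,))
        # Per-batch check: the target must hold every source row up to the watermark.
        expected = src.execute("SELECT COUNT(*) FROM customers WHERE id <= ?", (last_id,)).fetchone()[0]
        loaded = dst.execute("SELECT COUNT(*) FROM customers WHERE id <= ?", (last_id,)).fetchone()[0]
        if expected != loaded:
            raise RuntimeError(f"row count mismatch up to id {last_id}: {expected} != {loaded}")


# Hypothetical source data (note the gap at id 4).
src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, balance TEXT)")
src.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ann", "10.00"), (2, "Bob", "5.50"), (3, "Cy", "0.00"), (5, "Di", "7.25")],
)
src.commit()

dst = sqlite3.connect(":memory:")
dst.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, balance TEXT)")
print(incremental_load(src, dst))  # 5
```

Running the function again returns immediately with the same watermark, because every batch up to id 5 is already recorded as loaded.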

#5. Validation and testing

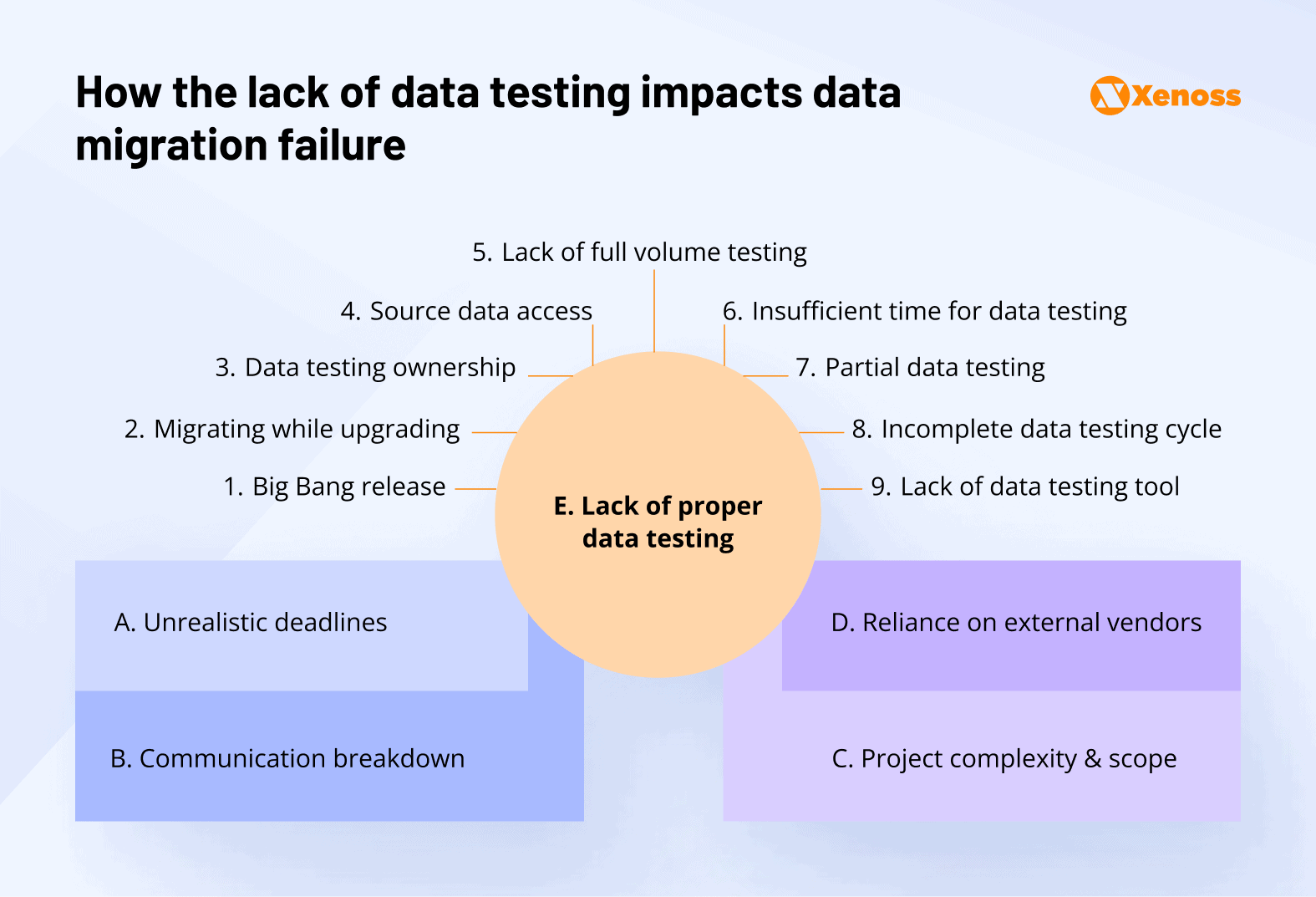

Thorough validation and testing can save you from numerous headaches, including hefty compliance fines and lost customer trust, as TSB Bank learned. In 2018, three years after being acquired by Banco Sabadell, TSB rushed to move the data of 5 million customers to a new platform in a single big bang release. As a result, customers lost access to their accounts, and ATM withdrawals and digital operations were suspended.

TSB’s migration team didn’t assign a data testing owner to enforce full-volume, end-to-end data testing. They manually tested only small sample datasets and skipped edge cases, so many real-world scenarios were never validated. When the system went live, previously unseen data combinations and corner cases caused errors, account login failures, and transaction mismatches.

To avoid following the TSB example, it’s recommended to develop a data testing framework that involves sampling, checksums, and reconciliation reports, with clear data testing ownership to control the process. Multiple trial data migration runs can also help the business validate the effectiveness of data migration pipelines before rolling out a full-blown migration.
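The core of a reconciliation report can be sketched in a few lines: compare row counts, key coverage, and a per-row checksum, and draw a reproducible sample for manual spot checks. The sample rows are hypothetical, and at production scale checksums are usually computed inside the databases rather than in Python.

```python
import hashlib
import json
import random


def row_digest(row: dict) -> str:
    """SHA-256 over a canonical JSON form of the row (sorted keys, so column order doesn't matter)."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key="id"):
    """Compare row counts, key coverage, and per-row checksums between source and target."""
    src = {r[key]: row_digest(r) for r in source_rows}
    dst = {r[key]: row_digest(r) for r in target_rows}
    return {
        "source_count": len(src),
        "target_count": len(dst),
        "missing_in_target": sorted(src.keys() - dst.keys()),
        "unexpected_in_target": sorted(dst.keys() - src.keys()),
        "checksum_mismatch": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }


def sample_keys(rows, k, key="id", seed=2024):
    """Reproducible random sample of keys for manual spot checks."""
    keys = sorted(r[key] for r in rows)
    return random.Random(seed).sample(keys, min(k, len(keys)))


# Hypothetical source and target extracts.
source = [
    {"id": 1, "name": "Ann", "balance": "10.00"},
    {"id": 2, "name": "Bob", "balance": "5.50"},
    {"id": 3, "name": "Cy", "balance": "0.00"},
]
target = [
    {"id": 1, "name": "Ann", "balance": "10.00"},
    {"id": 2, "name": "Bob", "balance": "5.05"},
    {"id": 4, "name": "Di", "balance": "7.25"},
]
print(reconcile(source, target))
```

Both sides report three rows, yet one row is missing, one is unexpected, and one was altered: matching counts alone would have hidden all three problems. Values should be normalized to the same types before hashing, or every row will look mismatched.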

As data migration can run continuously, engineering teams can set up 24/7 data checks with automated real-time validation and alerting systems to catch data issues before they impact business operations. But it’s also essential to combine manual supervision with automation tools, such as ETL Validator and QuerySurge, to save time while making sure no problems slip through.
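A basic alerting rule can be sketched as a rolling error-rate monitor. The `ErrorRateMonitor` class, window size, and threshold below are hypothetical; in practice the `alert` callback would notify an on-call channel, and tools such as ETL Validator or QuerySurge provide their own validation features that this sketch does not use.

```python
from collections import deque
from typing import Callable


class ErrorRateMonitor:
    """Track failed validations over the last `window` records and alert once per breach."""

    def __init__(self, window: int, threshold: float, alert: Callable[[float], None]):
        self.results = deque(maxlen=window)
        self.threshold = threshold
        self.alert = alert
        self.alerting = False

    def observe(self, passed: bool) -> None:
        self.results.append(passed)
        if len(self.results) < self.results.maxlen:
            return  # wait for a full window to avoid noisy early alerts
        rate = self.results.count(False) / len(self.results)
        if rate > self.threshold and not self.alerting:
            self.alerting = True
            self.alert(rate)
        elif rate <= self.threshold:
            self.alerting = False  # re-arm once the rate recovers


# Hypothetical stream of validation results: a short burst of failures.
alerts = []
monitor = ErrorRateMonitor(window=10, threshold=0.2, alert=alerts.append)
for ok in [True] * 10 + [False] * 3 + [True] * 10:
    monitor.observe(ok)
print(alerts)  # [0.3]
```

Alerting only on the transition into a breach keeps a sustained problem from flooding the channel, while re-arming lets a later incident raise a fresh alert.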

A comprehensive data validation and testing process can require some time, so it is essential to secure stakeholder buy-in for an extended timeline during this stage and support your argument with relevant examples, such as those from TSB.

#6. Cutover and monitoring

After a cautious, gradual execution phase, proceed to the final cutover, whether big bang, parallel, or phased. To secure the cutover phase, develop a custom backup and rollback strategy that lets you quickly restore previous system operations and limit the business impact of emergencies or unexpected system outages.
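The backup-sync-verify-rollback sequence can be sketched with SQLite’s online backup API, which Python exposes as `Connection.backup`. The `final_sync` and `verify` hooks and the `accounts` table are hypothetical; real cutovers would rely on the target platform’s own snapshot and restore mechanisms.

```python
import sqlite3


def cutover_with_rollback(target, final_sync, verify):
    """Snapshot the target, run the final sync, and restore the snapshot if anything fails.

    final_sync(conn) applies the last delta; verify(conn) returns True if the target is healthy.
    Both are hypothetical hooks supplied by the migration team.
    """
    snapshot = sqlite3.connect(":memory:")
    target.backup(snapshot)  # point-in-time copy taken before any cutover change
    try:
        with target:
            final_sync(target)
        if not verify(target):
            raise RuntimeError("post-cutover verification failed")
        return True
    except Exception:
        # A real job would log the error here before restoring.
        snapshot.backup(target)  # roll back by restoring the pre-cutover copy
        return False
    finally:
        snapshot.close()


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
db.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 250)])
db.commit()


def bad_sync(conn):
    conn.execute("UPDATE accounts SET balance = -1 WHERE id = 2")  # simulates a faulty final delta


def no_negative_balances(conn):
    return conn.execute("SELECT COUNT(*) FROM accounts WHERE balance < 0").fetchone()[0] == 0


ok = cutover_with_rollback(db, bad_sync, no_negative_balances)
print(ok, db.execute("SELECT balance FROM accounts WHERE id = 2").fetchone())  # False (250,)
```

Verification runs after the sync is committed, so the snapshot, not a transaction rollback, is what restores the previous state.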

AWS guidance highlights backup, final data synchronization, and rollback as key aspects of the cutover phase.

After the final cutover, monitor performance and user feedback, and continue to validate data quality in production. Xenoss also helps clients create comprehensive post-cutover data monitoring dashboards that track migration progress and results, immediately detecting data inconsistencies and verifying integrity.

Data migration stages: Duration, deliverables, and benefits

| Data migration stage | Duration (weeks) | Key deliverables | Business benefits |
|---|---|---|---|
| Assessment and planning | 2–6 | Migration strategy and roadmap; risk register; scope and success metrics; contingency plan | Clear scope; budget predictability; alignment across IT and business |
| Data preparation | 3–8 | Cleansed and standardized datasets; sensitive data classified and tagged; governance rules documented | Reliable and compliant data foundation; reduced downstream errors and rework |
| Design and mapping | 2–4 | Source-to-target mapping catalogue; documented transformation logic; data lineage records | Accurate schema blueprint; preserved business rules and relationships |
| Data conversion and execution | 4–12 | Conversion engines for legacy formats; automated ETL/ELT pipelines | Operational continuity during migration; improved performance |
| Validation and testing | 4–6 | Validation framework (sampling, checksums, reconciliation); automated alerting system | Verified data integrity; smooth and secure go-live |
| Cutover and monitoring | Ongoing (biweekly or monthly iterations) | Backup and rollback strategy; post-cutover monitoring dashboards; incident response plan | Stable operations; continuous validation; early detection and resolution of issues |
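If the five time-boxed stages in the table ran strictly one after another, their durations would add up as shown below, before ongoing cutover monitoring begins. In practice, stages often overlap, so treat this as a rough envelope under that sequential assumption, not a schedule.

$$
\begin{aligned}
T_{\min} &= 2 + 3 + 2 + 4 + 4 = 15 \text{ weeks} \\
T_{\max} &= 6 + 8 + 4 + 12 + 6 = 36 \text{ weeks}
\end{aligned}
$$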

Data migration isn’t a process that happens overnight. It requires patience at every stage. However, the reward for a well-planned and well-executed data migration is a competitive business workflow with systems that run with modern capabilities, enabling you to deliver improved customer service.

Data migration tools: Buy vs build

Choosing between out-of-the-box and custom-developed tools depends mainly on your business case. Ready-made migration solutions can be effective for more straightforward tasks, such as migrating structured datasets (e.g., customer records) from one well-supported relational database to another.

However, businesses may need to develop proprietary automation frameworks for data preparation, validation, monitoring, and governance to minimize manual effort and errors. For instance, this may involve building custom data enrichment pipelines that cleanse, transform, and augment data during the migration process.
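A custom enrichment pipeline can be sketched as a chain of generator stages that cleanse, transform, and augment records as they stream through. The stage names, field names, and the `regions` reference table are hypothetical examples.

```python
from typing import Callable, Dict, Iterable, Iterator

Record = Dict[str, str]
Stage = Callable[[Iterator[Record]], Iterator[Record]]


def cleanse(records: Iterator[Record]) -> Iterator[Record]:
    # Normalize formatting noise before anything else depends on the value.
    for r in records:
        yield {**r, "email": r["email"].strip().lower()}


def transform(records: Iterator[Record]) -> Iterator[Record]:
    # Split a single legacy name field into the target's first/last name columns.
    for r in records:
        first, _, last = r["name"].strip().partition(" ")
        yield {**r, "first_name": first, "last_name": last}


def augment(region_by_postal: Dict[str, str]) -> Stage:
    # Add attributes from a reference dataset; unknown codes are marked rather than dropped.
    def stage(records: Iterator[Record]) -> Iterator[Record]:
        for r in records:
            yield {**r, "region": region_by_postal.get(r["postal_code"], "UNKNOWN")}
    return stage


def run_pipeline(records: Iterable[Record], *stages: Stage) -> Iterator[Record]:
    # Generators chain lazily, so records flow through one at a time.
    stream: Iterator[Record] = iter(records)
    for stage in stages:
        stream = stage(stream)
    return stream


# Hypothetical legacy records and reference table.
legacy = [
    {"name": "Jon Smith", "email": "  Jon.Smith@Example.COM ", "postal_code": "10001"},
    {"name": "Ana Lopez", "email": "ana@example.org", "postal_code": "00000"},
]
regions = {"10001": "NY", "94105": "CA"}

for record in run_pipeline(legacy, cleanse, transform, augment(regions)):
    print(record)
```

Because each stage is a plain function, stages can be tested in isolation and reordered without touching the others, and large extracts never need to fit in memory at once.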

Xenoss can evaluate your data migration scope and complexity to determine which tools to purchase and which to develop in-house. Custom development also varies in complexity: it may be as simple as a single-purpose script, which can prove both more effective and more cost-efficient than a ready-made solution. Here are some examples of how ready-made and custom tools map to common data migration scenarios:

Data migration tools for different scenarios

| Scenario | Tool type | Example tools | When to avoid |
|---|---|---|---|
| Structured data (e.g., SQL to SQL) | Off-the-shelf ETL | Talend, Informatica | Complex transformations needed |
| Legacy mainframes | Custom connectors | Custom parsers; schema analysis tools to map legacy data | Budget < $50K |
| Real-time validation | Hybrid (build and buy) | Great Expectations; custom scripts | Static datasets |
| Performance-driven workloads | Optimization frameworks | Indexing, partitioning, and caching solutions | Low-query workloads |
| Compliance-heavy industries (finance, healthcare) | Security and compliance frameworks | Encryption pipelines, access controls, and audit logging | Non-sensitive datasets |

Each data migration case is unique, and tool selection should be well-aligned with core objectives and stakeholder expectations.

Data migration examples across industries

The data migration examples below demonstrate how a combination of thorough preparation for the data migration process and the selection of appropriate tools helped different companies perform successful data migrations.

From a costly healthcare mainframe to an agile cloud

An academic hospital relied on a legacy mainframe for its data and applications, with maintenance costs reaching approximately $1 million annually. That money could have been invested in improving patient care instead of supporting legacy infrastructure. Beyond the financial burden, another risk was the dwindling number of specialists capable of supporting decades-old infrastructure.

To reduce maintenance costs, future-proof its infrastructure, and ensure stable service delivery for patients, the hospital decided to undertake a data migration. It chose cloud migration because it promised a lower TCO than on-premises maintenance.

Together with Deloitte, the hospital team evaluated its current applications to define their business value and compiled a detailed inventory of all assets, which were then prioritized for migration. To comply with retention policies, the team enabled access to historical mainframe data through Tableau reports and a SQL Server database.

By migrating 54 core applications and 53 databases, as well as patient data, to the cloud, the hospital not only cut costs by 95% but also ensured long-term sustainability and relieved the burden on its specialists.

FinTech company achieved a near-identical data migration

A leading digital banking provider, 10x Banking, successfully migrated around 25 terabytes of financial data with near-identical precision. Thousands of databases had to be moved while preserving complete integrity.

The company achieved this by breaking the migration into carefully managed stages, applying continuous validation, and securing strong stakeholder alignment.

Due to the project’s complexity, off-the-shelf cloud data migration tools weren’t an option for the 10x team, so they built a custom streaming pipeline from several ready-made tools. To ensure the migration did not compromise data integrity, the company established three independent validation processes: the source and target systems generated checksums for comparison during extraction and after import, and a separate system then performed a row-by-row and field-by-field analysis of the source and target tables.
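The idea behind a field-by-field comparison can be illustrated with a short sketch. It is not 10x’s implementation, which isn’t described in detail; in-memory lists of dicts stand in for the source and target tables, and the sample rows are hypothetical.

```python
from decimal import Decimal


def field_diff(source_rows, target_rows, key="id"):
    """Return (key, field, source_value, target_value) for every difference between two tables."""
    src_by_key = {r[key]: r for r in source_rows}
    tgt_by_key = {r[key]: r for r in target_rows}
    diffs = []
    for k in sorted(src_by_key.keys() | tgt_by_key.keys()):
        src, tgt = src_by_key.get(k), tgt_by_key.get(k)
        if src is None or tgt is None:
            # Whole-row difference: the row exists on only one side.
            diffs.append((k, "<row>",
                          "present" if src is not None else "missing",
                          "present" if tgt is not None else "missing"))
            continue
        for field in sorted(src.keys() | tgt.keys()):
            if src.get(field) != tgt.get(field):
                diffs.append((k, field, src.get(field), tgt.get(field)))
    return diffs


# Hypothetical rows.
source = [
    {"id": 1, "account": "ACC-001", "amount": Decimal("10.00")},
    {"id": 2, "account": "ACC-002", "amount": Decimal("99.99")},
]
target = [
    {"id": 1, "account": "ACC-001", "amount": Decimal("10.0")},
    {"id": 2, "account": "ACC-002", "amount": Decimal("99.90")},
    {"id": 3, "account": "ACC-003", "amount": Decimal("5.00")},
]

for d in field_diff(source, target):
    print(d)
```

Unlike a checksum, this pinpoints which field changed. Note that it compares values, so `Decimal("10.00")` and `Decimal("10.0")` count as equal; if the stored representation must also match, compare string forms instead.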

The result was a seamless transition with zero data loss, showing that even the most complex financial systems can be migrated without sacrificing trust or compliance.

Xenoss’ best practices to balance risks, costs, and speed during data migration

Successful data migration is about striking a balance between technological fit, risk mitigation, and business needs. Moving too quickly can lead to errors or downtime. Over-engineering for risk can send costs spiraling out of control. Focusing solely on savings can cause the business to overlook the value the migration is meant to deliver.

As an experienced data migration services company, we design our best practices to manage these forces effectively by putting business outcomes at the center. This means defining success in terms of better decisions, stronger compliance, or improved customer experiences.

From there, we help organizations align IT, compliance, and business leaders on priorities, focus first on the datasets that carry the most value, and keep every stakeholder engaged through the process. This balance is what turns data migration from a painful necessity into a growth enabler.