Enterprise AI faces a critical challenge in 2026: fragmented technology and disconnected workflows.

Modern enterprises manage 250-500+ applications that create data silos, hindering effective automation. 95% of IT leaders see integration challenges as a primary barrier to AI value, and only 28% of enterprise applications are effectively connected.

This fragmentation makes traditional single-agent AI approaches insufficient because no single agent can effectively navigate hundreds of disconnected systems and data formats.

Multi-agent systems, in which specialized agents coordinate autonomously, are emerging as a more scalable and flexible alternative for complex, enterprise-grade operations.

The orchestration market is growing rapidly and is expected to surge from $8.5 billion in 2026 to $35 billion by 2030. Anthropic’s Model Context Protocol (MCP), released in late 2024, has spread rapidly among machine learning engineers.

In April 2025, Google joined the orchestration market by releasing Agent2Agent (A2A), an open-source protocol designed to foster seamless collaboration between agents.

This post explores how A2A is transforming enterprise AI by enabling coordinated, scalable automation across complex ecosystems.

What is Agent2Agent?

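A2A is an open protocol that gives AI agents built by different teams and vendors a common way to communicate. Agents advertise who they are and what they can do through Agent Cards, which other agents use to discover them.

Beyond this discovery mechanism, A2A supports sharing images and structured data for richer collaboration. For enterprise teams automating complex workflows, A2A offers a way to keep their entire AI estate connected.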

How A2A works

To connect agents, A2A uses a client-server model where agents exchange structured JSON messages over standard web protocols like HTTP.

A typical A2A interaction works as follows:

- Discovery: Agents find each other via Agent Cards and select a communication method.

- Secure connection: TLS encryption protects data transport; authentication credentials attach to each request.

- Task sharing: The caller sends a task message containing text instructions, file references, and structured data.

- Execution: Tasks run asynchronously, allowing agents to start long-running workflows while handling other work. Callers can poll for status or receive push notifications via webhooks.

- Input requests: If the server agent needs more data, it sets the task status to “input required” and waits for additional context.

- Completion: The server returns output as an artifact (document, structured data, or other format), marks the task “complete,” and logs the interaction for audits.

This approach enables agent-to-agent communication without compromising enterprise security standards or exposing proprietary algorithms.
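The lifecycle in the list above can be pictured as a small state machine. The following is a minimal sketch, not the A2A SDK: the `Task` class, its methods, the state names, and the transition table are hypothetical stand-ins chosen to mirror the steps described here.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskState(Enum):
    # Illustrative state names that mirror the steps above (assumption).
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"


# Which state changes this sketch allows (an assumption, not the spec).
TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING},
    TaskState.COMPLETED: set(),
}


@dataclass
class Task:
    task_id: str
    instructions: str
    state: TaskState = TaskState.SUBMITTED
    artifacts: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def move_to(self, new_state: TaskState) -> None:
        # Reject transitions the table does not list, and log the rest.
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"illegal transition {self.state.value} -> {new_state.value}"
            )
        self.audit_log.append((self.state.value, new_state.value))
        self.state = new_state

    def complete(self, artifact: dict) -> None:
        # Attach the output artifact, then mark the task finished.
        self.artifacts.append(artifact)
        self.move_to(TaskState.COMPLETED)


task = Task("t-1", "Summarize the Q3 invoice backlog")
task.move_to(TaskState.WORKING)
task.move_to(TaskState.INPUT_REQUIRED)  # server agent needs more context
task.move_to(TaskState.WORKING)         # caller supplied it
task.complete({"type": "text", "body": "3 invoices overdue"})
```

Keeping the transitions in one table makes it easy to see, and to audit, every path a task can take between submission and completion.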

How A2A is different from MCP

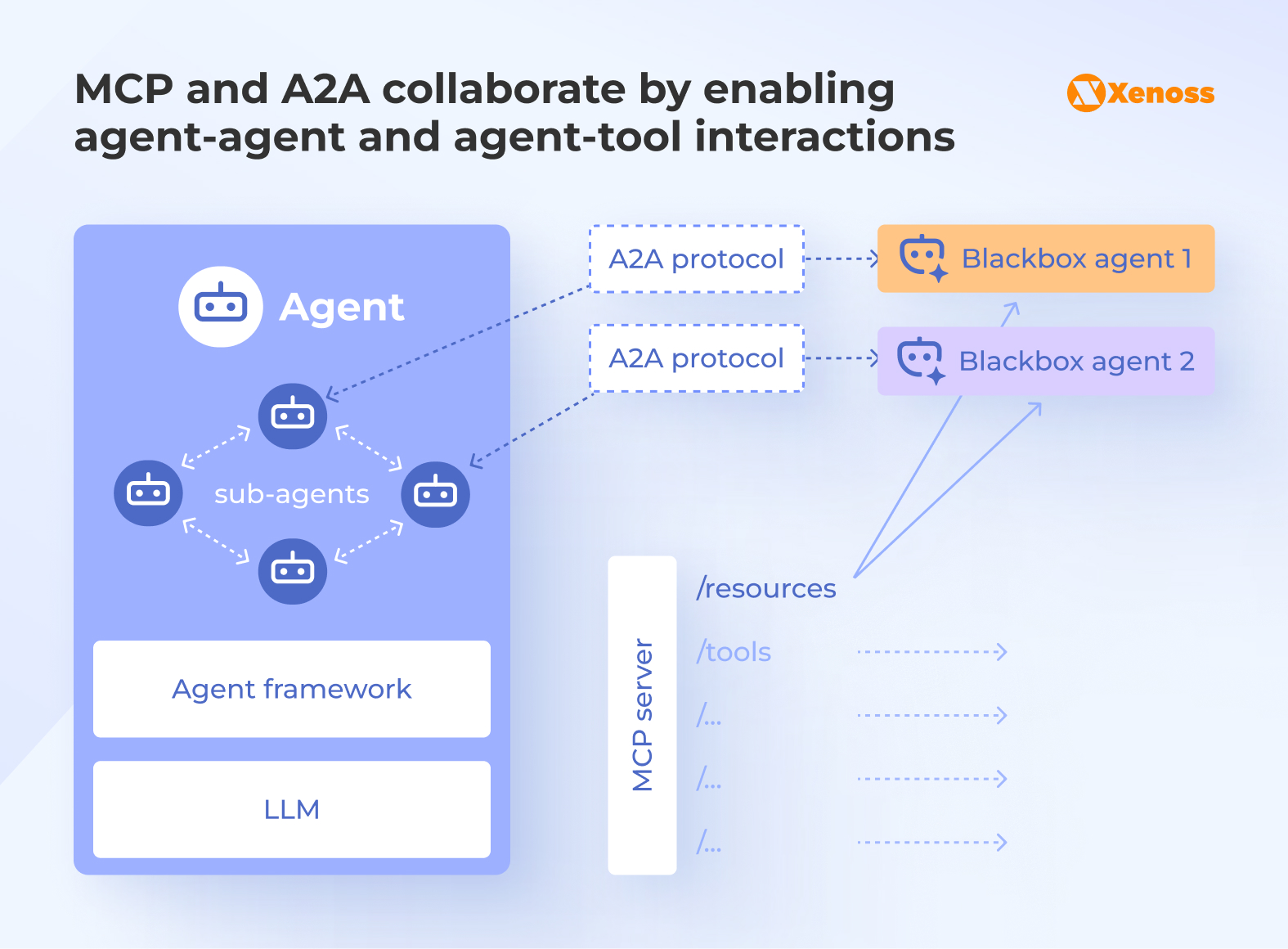

Google positions A2A as complementary to Anthropic’s Model Context Protocol (MCP), not a competitor, because they solve different problems.

MCP connects a single agent to third-party tools (Slack, Figma, coding IDEs) for real-time data and context.

A2A provides a shared language for multiple agents to communicate with each other.

It’s quite common for enterprise teams to combine both protocols to build orchestrated multi-agent workflows that ingest real-time data from external tools.

PayPal deployed this architecture in a merchant-facing agentic workflow connecting a sales agent to a checkout agent that creates and sends PayPal invoice links from natural-language conversations.

How A2A is used: The merchant’s agent contacts an A2A broker to discover a PayPal-provided agent and retrieve its Agent Card. The merchant agent then verifies authenticity, completes authorization, and delegates payment operations. Once delegation is complete, A2A steps out of the execution path.

How MCP is used: After the A2A handshake, the receiver agent uses an MCP client to call PayPal tools for invoice creation and delivery through a PayPal-hosted MCP server.

Together, A2A and MCP created a standardized, secure way for PayPal to orchestrate agents and provide them the context needed to complete tasks.

How Agent2Agent is transforming enterprise AI

By the time Google released A2A, the enterprise market was already sold on multi-agent systems. 79% of organizations were experimenting with AI agents, and over 60% of new pilots involved multiple agents working together.

But reliably connecting agents was still a problem. Without a standard protocol, agents deployed across teams and branches couldn’t easily communicate, leading to format inconsistencies and duplicated effort.

A2A’s standardized approach to agent orchestration is helping organizations reduce that friction and improve how agents work together in practice.

Shift from monolithic to modular architectures

How it worked before A2A: Connecting AI agents required custom point-to-point integration that risked falling apart when either system changed. Adding a new agent or swapping vendors meant rebuilding connections from scratch, slowing deployment, and increasing downtime risk.

What’s different with A2A: A2A enables a shift from monolithic AI builds to modular architectures where capabilities are assembled as interchangeable components rather than tightly coupled code.

In a multi-agent ecosystem, teams can introduce new capabilities, swap vendors, or upgrade parts of the system without costly rewrites or downstream disruptions.

This modularity shortens time-to-value, reduces delivery risk, and extends the life of AI investments by making systems easier to govern, scale, and maintain.
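The idea of interchangeable components can be illustrated in a few lines. This is a minimal sketch under stated assumptions: the `Agent` interface and the two vendor classes are made up for illustration and do not come from the A2A specification or any SDK.

```python
from typing import Protocol


class Agent(Protocol):
    # Hypothetical shared contract: every agent accepts a task dict
    # and returns a result dict.
    def handle(self, task: dict) -> dict: ...


class VendorAFormatter:
    def handle(self, task: dict) -> dict:
        return {"agent": "vendor-a", "result": task["text"].upper()}


class VendorBFormatter:
    def handle(self, task: dict) -> dict:
        return {"agent": "vendor-b", "result": task["text"].title()}


def run_workflow(agent: Agent, text: str) -> dict:
    # The workflow depends only on the contract, not on the vendor.
    return agent.handle({"text": text})


before_swap = run_workflow(VendorAFormatter(), "hello world")
after_swap = run_workflow(VendorBFormatter(), "hello world")
```

Because `run_workflow` only relies on the shared contract, swapping one vendor for another changes a single argument rather than the workflow code.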

Improved governance and traceability

Before A2A: Agent monitoring and governance relied on ad-hoc solutions, leaving organizations exposed to unwanted actions or data loss. The gap was palpable to enterprise leaders, 47% of whom reported negative consequences from genAI deployments.

What’s different with A2A: The protocol’s built-in capabilities weave governance and traceability into the foundation.

- Declared identity and capabilities. Each agent exposes an Agent Card stating who it is, what it can do, and its trust and policy constraints.

- Auditable delegation. Structured A2A messages make agent-to-agent requests, context sharing, and responsibility transfers fully loggable.

- Centralized enforcement. Orchestration layers can enforce routing, approvals, and policy checks at delegation time, creating a verifiable chain of custody across workflows.

For enterprise organizations, the ability to monitor the actions of AI agents with detailed logs and centralized control helps prevent unwanted actions or irreversible data loss.
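To make the three mechanisms above concrete, here is a minimal sketch of a policy check at delegation time. It assumes a heavily simplified, hypothetical `AgentCard` (the real Agent Card schema has more fields) and an in-memory `Orchestrator` invented for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentCard:
    # Hypothetical, simplified card: identity, capabilities, policy constraint.
    name: str
    skills: frozenset
    allowed_callers: frozenset


@dataclass
class Orchestrator:
    cards: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def register(self, card: AgentCard) -> None:
        self.cards[card.name] = card

    def delegate(self, caller: str, target: str, skill: str) -> bool:
        # Enforce policy before the request reaches the target agent.
        card = self.cards.get(target)
        allowed = (
            card is not None
            and skill in card.skills
            and caller in card.allowed_callers
        )
        # Every decision is logged, whether it was allowed or denied.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "caller": caller,
            "target": target,
            "skill": skill,
            "allowed": allowed,
        })
        return allowed


orch = Orchestrator()
orch.register(AgentCard(
    name="invoice-agent",
    skills=frozenset({"create_invoice"}),
    allowed_callers=frozenset({"sales-agent"}),
))
ok = orch.delegate("sales-agent", "invoice-agent", "create_invoice")
denied = orch.delegate("unknown-agent", "invoice-agent", "create_invoice")
```

The audit log records denied requests as well as approved ones, which is what turns the log into a usable chain of custody.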

A robust ecosystem fueling the growth of A2A

Before A2A: Organizations adopting multi-agent architectures faced a fragmented landscape with no standard way to package or deploy agents across the enterprise.

What’s different with A2A: A2A isn’t emerging in isolation. Google is building a partner ecosystem that standardizes how agents are packaged, discovered, and deployed.

The ecosystem rests on two advantages.

- Strong enterprise backers. At launch, A2A was supported by 50+ enterprise technology partners, and the list of backers is expanding as the protocol gains traction.

- Distribution infrastructure. Google has connected A2A to the AI Agent Marketplace and Agentspace, allowing enterprise organizations to deploy third-party agents through cloud buying with little to no procurement friction.

Google is also integrating A2A with purpose-built protocols.

With Agent Payments Protocol (AP2), A2A now has a common language for secure, compliant agent-to-merchant transactions, designed to prevent fragmented payment integrations.

Universal Commerce Protocol (UCP) standardizes the end-to-end commerce journey, helping agents move from “recommend” to “transact” across retailer systems.

Together, these integrations help enterprises move from pilots to monetizable, governed deployments.

Limitations of A2A in enterprise AI

A2A’s positioning as the “HTTP for agents” addresses the problem of AI silos that enterprises are already grappling with, and that will only intensify as teams manage thousands of specialized agents handling high-stakes decisions.

That said, despite strong technology and ecosystem support, the protocol still has functional and operational gaps that should give enterprise leaders pause before treating it as a default.

Slow traction in the enterprise

A2A is still early in enterprise rollout. The A2A Python SDK shows ~1.37M downloads per month, compared to 57.3M for Anthropic’s MCP SDK, and large-scale deployments remain sparse. One likely reason is that Google engineers themselves position the current version as a draft on the path to production readiness.

Committing to an evolving protocol also exposes enterprise teams to vendor lock-in, which is why many engineering teams default to simpler, framework-native agent wiring instead.

I think binding to a certain protocol could lead to being tied to a specific vendor. Especially since A2A isn’t as common as MCP. If you use an open-source framework, just sticking with that framework is enough for agents to work together, no need to add more complexity.

A Reddit comment that reflects a common view on A2A adoption

How to solve this challenge: Position A2A as an optional interoperability layer for specific needs, like cross-language services, independent scaling, and multi-model governance, rather than a default framework. This way, engineers will be encouraged to deploy the protocol only when operational gains justify the setup complexity.

Improving agent connectivity does not necessarily increase reliability

While A2A optimizes communication between agents, it doesn’t solve the harder problem of behavioral control under uncertainty.

Neither A2A nor MCP prevents agents from making inconsistent decisions, losing track of context, or acting outside their intended scope as workflows grow.

The black-box nature of LLMs means orchestrated AI systems remain fragile, hard to audit, and prone to failure when many agents are chained together.

How to solve this challenge: To scale multi-agent workflows safely, build hierarchical orchestration where AI models primarily route and coordinate work, while clear rules, enforced limits, and verifiable execution logs constrain decision-making.

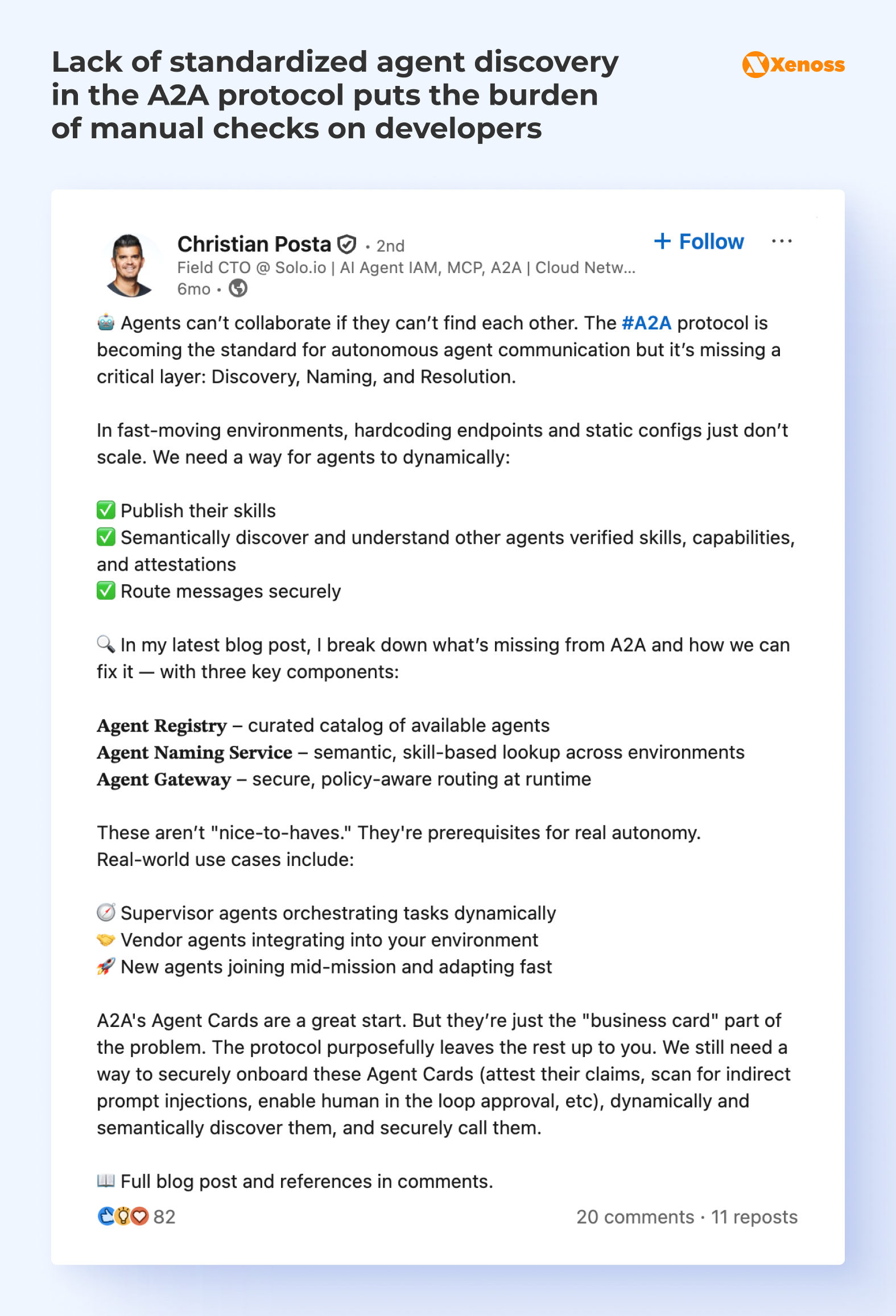
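The hierarchical pattern described above can be sketched as a router whose proposals are checked by deterministic rules. This is an illustration only: `propose_route` is a keyword-based stand-in for a model call, and the `Limits` fields and agent names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    # Hard constraints the model cannot override (illustrative fields).
    allowed_agents: frozenset
    max_hops: int


def propose_route(request: str) -> str:
    # Stand-in for an LLM routing decision; a real system would call a model.
    return "refund-agent" if "refund" in request.lower() else "faq-agent"


def route(request: str, limits: Limits, hops_so_far: int, log: list) -> tuple:
    target = propose_route(request)
    # Rules, not the model, have the final say on whether the hop happens.
    if hops_so_far >= limits.max_hops:
        decision = ("rejected", "hop limit reached")
    elif target not in limits.allowed_agents:
        decision = ("rejected", f"{target} not allowed")
    else:
        decision = ("routed", target)
    # Keep a verifiable record of what was proposed and what was decided.
    log.append({"request": request, "proposed": target, "decision": decision})
    return decision


limits = Limits(allowed_agents=frozenset({"faq-agent"}), max_hops=3)
log = []
first = route("Where is my order?", limits, hops_so_far=0, log=log)
second = route("I want a refund", limits, hops_so_far=0, log=log)
third = route("Where is my order?", limits, hops_so_far=3, log=log)
```

The model only suggests a destination; the allow-list and hop limit decide whether the suggestion is acted on, and the log captures both.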

No standardized discovery layer

A2A standardizes how agents introduce themselves through Agent Cards, but doesn’t define how agents find each other in real-world systems.

Christian Posta, CTO at Solo.io, points out that A2A is missing three foundational components for autonomous discovery:

- Agent registry: A curated catalogue of agents

- Agent naming service: Skill-based lookup across environments

- Agent gateway: Secure, policy-aware routing at runtime

Without a discovery plane, teams rely on fixed endpoints, manual configuration, or custom registries that only work within one organization or vendor stack. As agents are added, updated, or removed, these setups become hard to maintain, and A2A-based systems struggle to scale beyond tightly controlled deployments.

How to solve this challenge: Introduce a dedicated agent registry listing approved agents and verified capabilities instead of hardcoding connections.

Add a simple naming and routing layer that resolves requests like “an agent that can do X” to the right agent at runtime.

Until A2A defines this layer itself, treating discovery as a shared platform service is the most practical path to scalable adoption.
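A registry with skill-based lookup can start out very small. The sketch below is one possible shape, assuming an in-memory store; the `RegisteredAgent` and `AgentRegistry` names, the `approved` flag, and the example endpoints are all hypothetical and not part of A2A.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisteredAgent:
    name: str
    endpoint: str          # placeholder URL, not a real service
    skills: frozenset
    approved: bool = False


class AgentRegistry:
    def __init__(self) -> None:
        self._agents = {}

    def register(self, agent: RegisteredAgent) -> None:
        self._agents[agent.name] = agent

    def deregister(self, name: str) -> None:
        self._agents.pop(name, None)

    def resolve(self, skill: str) -> list:
        # "An agent that can do X": return approved agents with that skill,
        # sorted by name so results are stable.
        matches = [
            agent for agent in self._agents.values()
            if agent.approved and skill in agent.skills
        ]
        return sorted(matches, key=lambda agent: agent.name)


registry = AgentRegistry()
registry.register(RegisteredAgent(
    "translator-eu", "https://agents.example.internal/translator-eu",
    frozenset({"translate"}), approved=True,
))
registry.register(RegisteredAgent(
    "translator-beta", "https://agents.example.internal/translator-beta",
    frozenset({"translate"}), approved=False,
))
registry.register(RegisteredAgent(
    "summarizer", "https://agents.example.internal/summarizer",
    frozenset({"summarize"}), approved=True,
))
matches = registry.resolve("translate")
```

Callers ask for a skill rather than an endpoint, so agents can be added, updated, or removed in the registry without touching the code that uses them.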

Best practices for enterprise A2A adoption

While A2A standardizes how agents talk to each other, it deliberately leaves core responsibilities, such as security controls, trust boundaries, failure handling, and operational resilience, in the hands of adopters.

Until the protocol matures further, enterprise teams should consider A2A as a foundation instead of a safety net, and define clear best practices to avoid building poorly secured or unreliable agent ecosystems.

Best practice #1: Design agents as domain-specific services

Limiting an agent’s scope means defining it around a single decision or transformation step in a workflow, such as validating inputs, enriching data, or producing a specific recommendation, rather than an end-to-end task.

This discipline brings order by eliminating overlapping responsibilities between agents and making A2A message flows deterministic and easier to reason about.

Managing narrow-scope agents allows engineers to predict exactly which agent should be invoked for a given request.

As a result, teams spend less time tracing emergent behavior across agents and more time improving discrete components with measurable impact.

Implementation tips

- Define a single business capability per agent and enforce strict input/output contracts with versioning.

- Keep domain logic inside the agent and push shared concerns (auth, logging, rate limits, policy) into platform middleware.

- Treat agents as replaceable components: test them in isolation, deploy independently, and monitor with SLA-style metrics per agent.

Best practice #2: Prioritize structured inputs and outputs

Although A2A supports plain text, relying on it in production can create interpretation problems at task handoff.

When a caller agent sends a task as plain text, the server agent has to infer intent, extract fields, and separate what is required from what is optional.

Structured payloads formatted in JSON solve this problem because they make each exchange explicit, with clearly named fields, constrained types, and instantly detectable missing data.

Given a clearer task message, server agents behave more predictably instead of making best-effort attempts.

JSON prompting provides clarity, ensures consistency, reduces ambiguity, and scales across complex workflows. When normal prompts rely on interpretation, JSON prompts give explicit, predictable outputs.

Prem Natarajan, AI Transformation & GTM leader at Capco

For engineering teams, structured formatting enables faster debugging and safer change management thanks to schema versioning and reduced room for interpretation.

Implementation tips

- Treat every A2A message as an API call, with a defined schema, versioning, and documentation for required and optional fields.

- Implement strict validation at ingress/egress and return typed error objects so failures are actionable, not conversational.

- Keep text outputs as an auxiliary “explanation” field while all routing, tool calls, and state updates use structured fields only.
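The tips above can be sketched as a small validator that returns typed error objects. This is an illustrative example, not an A2A feature: the schema, field names, error codes, and version string are assumptions made for the sketch.

```python
from dataclasses import dataclass

SCHEMA_VERSION = "1.0"  # hypothetical contract version

# Hypothetical contract: field name -> expected Python type.
REQUIRED = {"schema_version": str, "customer_id": str, "amount_cents": int}
OPTIONAL = {"explanation": str}  # free text stays auxiliary


@dataclass(frozen=True)
class ValidationError:
    code: str
    field_name: str
    message: str


def validate(message: dict) -> list:
    """Return a list of typed errors; an empty list means the message is valid."""
    errors = []
    for name, expected in REQUIRED.items():
        if name not in message:
            errors.append(ValidationError(
                "missing_field", name, f"{name} is required"))
        elif not isinstance(message[name], expected):
            errors.append(ValidationError(
                "wrong_type", name, f"{name} must be {expected.__name__}"))
    for name, expected in OPTIONAL.items():
        if name in message and not isinstance(message[name], expected):
            errors.append(ValidationError(
                "wrong_type", name, f"{name} must be {expected.__name__}"))
    version = message.get("schema_version")
    if isinstance(version, str) and version != SCHEMA_VERSION:
        errors.append(ValidationError(
            "unsupported_version", "schema_version",
            f"expected {SCHEMA_VERSION}"))
    return errors


good = {"schema_version": "1.0", "customer_id": "c-42", "amount_cents": 1999}
bad = {"schema_version": "1.0", "amount_cents": "19.99"}
good_errors = validate(good)
bad_errors = validate(bad)
```

Because each error carries a code and a field name, the caller can branch on the failure programmatically instead of parsing a sentence.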

Best practice #3: Plan for robustness and scalability

To keep A2A systems robust, teams need multi-agent workflows that maintain business continuity and cost control without risking customer-facing incidents or runaway compute spend.

Although AI agents are intrinsically unpredictable, engineering teams can limit risks by setting guardrails.

- Timeouts: Kill requests that take too long before they cause a bottleneck.

- Retry limits: Cap how many times a failed request can retry to prevent infinite loops.

- Circuit breakers: Temporarily stop calling a failing service so it has time to recover.

- Concurrency caps: Limit how many requests run in parallel to avoid overloading resources.

With these checkpoints in place, teams can add new agents without a single slow or failing component cascading into system-wide downtime or retries draining the compute budget.
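Two of the guardrails listed above, retry limits and circuit breakers, can be sketched in plain Python. This is a simplified illustration with hypothetical names and thresholds; timeouts and concurrency caps are left out, and a production system would more likely rely on a tested resilience library.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after_s=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down elapsed: allow one trial call (half-open).
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def call_with_retries(breaker, fn, max_attempts=2):
    # Retry cap: give up after max_attempts instead of looping forever.
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for _ in range(max_attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise  # do not retry against an open circuit
        except Exception as exc:
            last_error = exc
    raise last_error


attempts = []


def flaky_agent():
    attempts.append(1)
    raise TimeoutError("agent did not answer in time")


breaker = CircuitBreaker(failure_threshold=2, reset_after_s=60.0)
try:
    call_with_retries(breaker, flaky_agent, max_attempts=2)
except TimeoutError:
    pass  # retries exhausted; the breaker is now open

try:
    breaker.call(flaky_agent)
    short_circuited = False
except CircuitOpenError:
    short_circuited = True  # the failing agent was not called again
```

After two failures the breaker opens, so the third request fails fast without reaching the struggling agent or consuming more compute.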

Agent guardrails need to be specific to the underlying use-case, and implemented in their respective platform components and layers.

Debmalya Biswas, AI CoE Lead at UBS

Implementation tips

- Set explicit limits per workflow (max latency, retries, cost per request) and enforce them at runtime.

- Use circuit breakers and fallbacks so a failing dependency returns a controlled partial response, not a system-wide stall.

- Use historical data to plan capacity for typical traffic patterns and throttle concurrency so growth doesn’t convert directly into higher incident rates and cloud bills.

Best practice #4: Train agents to give clear guidance when context is missing

A generic “can’t proceed” error message from a server agent forces callers to guess what went wrong. The resulting trial-and-error debugging compounds across multi-agent chains and often requires human intervention to unblock.

On the other hand, detailed error messages can help the caller agent to provide the server with the necessary data with minimal human intervention.

AI agents may need more rules. But they definitely need more verbose error messages. Your AI agents will get smarter. They’ll learn. They’ll do it right next time; they might not even break flow, just readjust and act accordingly. This is behavioral engineering at scale.

Kendall Miller, founder and CEO at Maybe Don’t AI

Implementation tips

- Return a structured InputRequired payload that lists missing fields, accepted formats, and an example request object, rather than free-text instructions.

- Include “how to fix” guidance with every validation error (cause + corrective action), and ensure it is consistent across channels and clients.

- Log missing-context patterns and promote them into pre-validation (or upstream UI checks) to prevent recurring failures and reduce rework.
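A structured response for missing context might look like the sketch below. It is an assumption-laden illustration: the `InputRequired` and `MissingField` classes, the refund contract, and the field names are hypothetical and are not defined by the A2A specification.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical contract for a refund task:
# field -> (accepted format, how to fix).
REFUND_FIELDS = {
    "order_id": ("string, e.g. 'A-1001'",
                 "Pass the order identifier from the order system."),
    "amount_cents": ("positive integer",
                     "Send the refund amount in cents, not as a decimal string."),
    "currency": ("ISO 4217 code, e.g. 'EUR'",
                 "Add the three-letter currency code."),
}
EXAMPLE_REQUEST = {"order_id": "A-1001", "amount_cents": 1999, "currency": "EUR"}


@dataclass
class MissingField:
    name: str
    accepted_format: str
    how_to_fix: str


@dataclass
class InputRequired:
    task_id: str
    missing: list
    example_request: dict
    status: str = "input-required"


def check_refund_request(task_id: str, payload: dict):
    """Return None if the payload is complete, else a structured InputRequired."""
    missing = [
        MissingField(name, accepted_format, how_to_fix)
        for name, (accepted_format, how_to_fix) in REFUND_FIELDS.items()
        if name not in payload
    ]
    if not missing:
        return None
    return InputRequired(task_id, missing, EXAMPLE_REQUEST)


response = check_refund_request("t-7", {"order_id": "A-1001"})
wire_format = json.dumps(asdict(response), indent=2)
```

The caller agent receives the list of missing fields, the accepted format for each, and a complete example object, which is usually enough to retry without a human in the loop.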

Bottom line

A2A offers enterprise teams a standardized way to connect AI agents across vendors, tools, and workflows, enabling modular and scalable architectures.

At the time of writing, the protocol is still maturing, with limited discovery infrastructure and some behavioral unpredictability. Even in this landscape, enterprise organizations can extract a lot of value from A2A’s vendor-agnostic approach to agent communication, but its adoption will require careful guardrails.

Organizations that treat A2A as an optional interoperability layer rather than a default framework, invest in structured payloads and robust error handling, and plan for graceful degradation will be best positioned to scale multi-agent systems safely.