For the last three years, AI teams have increasingly turned to synthetic data as a core training strategy. They have compelling reasons to do so.

In 2022, OpenAI could get away with scraping the open web to get access to all available data, but the rules have since changed.

Companies that were once major data sources (news media and, most notably, Reddit) now protect their IP and sue AI labs that do not comply.

Cloudflare introduced pay-per-crawl, demanding that AI companies pay for accessing any of the 37.4 million websites (as of August 2025) hosted by the provider.

In an increasingly stringent data landscape, AI engineers are under pressure to find other ways to solve the cold start problem, and synthetic data is an affordable and effective solution.

At the same time, GPT-5, heavily trained on synthetic data, was OpenAI’s most disappointing release yet. Multiple experiments confirm that synthetic datasets are less accurate than purely authentic ones.

How should engineering teams find the balance between synthetic and human data? This article explores the benefits and risks of artificial data generation and examines real-world examples of teams successfully combining authentic and synthetic inputs to build accurate and effective models.

Synthetic data explained: computer-generated training datasets for AI models

Synthetic data refers to information generated by algorithms rather than captured from real-world events or human interactions. While this data doesn’t originate from actual occurrences, it’s not randomly fabricated either.

Synthetic datasets are created using a smaller number of authentic items (‘seed data’) as a reference. Algorithms analyze patterns, relationships, and distributions in this real data, then generate new data points that maintain similar statistical properties while protecting privacy and expanding the dataset size.
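
To make the seed-data idea concrete, here is a minimal sketch in Python. It assumes the simplest possible generator: fit a normal distribution to a handful of real values and sample from it. The `seed_amounts` values and the `synthesize_gaussian` helper are hypothetical, and production generators model far richer structure than a single mean and standard deviation.

```python
import random
import statistics

def synthesize_gaussian(seed_values, n, rng):
    """Fit a normal distribution to seed data and draw n synthetic values."""
    mu = statistics.mean(seed_values)
    sigma = statistics.stdev(seed_values)
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(0)

# Hypothetical seed data: eight real transaction amounts in dollars.
seed_amounts = [12.5, 40.0, 18.2, 25.9, 33.1, 21.4, 29.8, 15.6]

# Expand eight real records into 10,000 synthetic ones.
synthetic = synthesize_gaussian(seed_amounts, 10_000, rng)

print("seed mean:", round(statistics.mean(seed_amounts), 2))
print("synthetic mean:", round(statistics.mean(synthetic), 2))
```

None of the synthetic values is copied from a real record, yet the mean and spread of the generated set stay close to those of the seed set.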

Initially, synthetic data served as a stand-in for sensitive data (e.g., phone and credit card numbers) to protect users’ privacy.

Over time, the capabilities of synthetic data generation evolved to a point where it is possible to artificially generate photorealistic images, videos, and sound. For example, Microsoft’s Digiface dataset is fully synthetic and boasts over 1 million unique AI-generated faces.

The sophistication of current synthetic data generation enables teams to create training datasets for virtually any domain: financial transactions for fraud detection, medical images for diagnostic algorithms, customer service conversations for chatbot training, and manufacturing sensor data for predictive maintenance models.

This technological maturity explains why synthetic data has transitioned from a privacy workaround to a strategic training approach that addresses data scarcity, reduces costs, and accelerates model development timelines.

Why synthetic data is trending: data scarcity and cost pressures

The easiest answer to this question is that we have to use synthetic data because the entirety of the Internet is no longer sufficient to train state-of-the-art foundational models.

Research estimates that, if AI keeps developing at its current rate, machine learning teams will run out of high-quality data to train models by the end of next year. Even low-quality language data is expected to run out between 2050 and 2060.

For AI labs pushed to produce bigger and more powerful models, using synthetic data is becoming non-negotiable.

But beyond the shortage of good data, researchers have other reasons to explore artificial data generation.

Cost and time savings

For engineers building open-source models with VC backing, synthetic data presents an opportunity to try out cutting-edge techniques without spending millions on authentic data acquisition and manual annotation.

Nathan Lambert estimates that leading AI labs are spending upwards of $10 million on inference compute needed to build datasets, while smaller teams can use data generation techniques like distillation to have a reliable instruction dataset at a total cost of $10.

In computer vision projects, tapping into synthetic image generation reportedly helps engineers reduce data acquisition costs by up to 9500%, compared to traditional photography and manual labeling approaches, making previously unaffordable projects economically viable.

As for leading labs, synthetic data gives them a chance to scale capabilities much faster, so that they hold on to their competitive advantage in the AI race.

Safety and guardrails

Teams building open-weight models run the risk of users fine-tuning the algorithm for nefarious purposes, ranging from creating cybersecurity exploits to social engineering at scale and brainstorming bio-terrorism applications.

To prevent these use cases, AI engineers need a powerful guardrail system that would make tricking a model into complying with a malicious request next to impossible.

Synthetic data partially enables this assurance by helping AI labs generate millions of high-risk scenarios and train models to recognize and appropriately respond to each of them.

There has been speculation that OpenAI trained its open-weight OSS models on a large amount of synthetic data for precisely this reason.

That would make sense given that synthetic data gives OpenAI an opportunity to implement stricter guardrails and avoid legal and reputational damage that would follow if a user-fine-tuned version of OSS went rogue.

Robustness and edge case handling

An ongoing challenge in training machine learning models is getting an algorithm to correctly detect and classify outliers. A model gets better at understanding context when it’s exposed to similar scenarios multiple times.

However, in a data distribution, outliers are rare by definition, which is why an algorithm in training may only come across them a handful of times.

Synthetic data addresses this by helping engineers create more samples of scenarios that don’t happen often naturally and add them to the distribution. Repeated exposure to rare facts, tasks, and data points helps models be more specialized and reliable.
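
A rough sketch of this kind of rare-case augmentation is shown below. It assumes a tabular fraud dataset and creates synthetic rare examples by adding small random noise to real ones. The `oversample_rare` helper, the field names, and the jitter size are all illustrative assumptions, not a description of any specific team's pipeline.

```python
import random

def oversample_rare(rows, label_key, rare_label, target_count, jitter, rng):
    """Add jittered copies of rare-label rows until target_count is reached."""
    rare = [r for r in rows if r[label_key] == rare_label]
    if not rare:
        raise ValueError("need at least one real rare example to copy from")
    augmented = list(rows)
    have = len(rare)
    while have < target_count:
        base = rng.choice(rare)  # always start from a real rare example
        new_row = {}
        for key, value in base.items():
            # Perturb numeric fields slightly; copy everything else as-is.
            if isinstance(value, float):
                new_row[key] = value + rng.uniform(-jitter, jitter)
            else:
                new_row[key] = value
        new_row["synthetic"] = True  # keep provenance for later audits
        augmented.append(new_row)
        have += 1
    return augmented

rng = random.Random(42)

# Hypothetical dataset: 50 normal transactions and only 2 fraudulent ones.
rows = [{"amount": 20.0 + i, "label": "normal"} for i in range(50)]
rows += [{"amount": 9800.0, "label": "fraud"}, {"amount": 12500.0, "label": "fraud"}]

augmented = oversample_rare(rows, "label", "fraud", 25, 50.0, rng)
fraud_count = sum(1 for r in augmented if r["label"] == "fraud")
print("fraud examples after augmentation:", fraud_count)
```

Tagging generated rows with a `synthetic` flag is a design choice that makes it easy to measure, and later cap, the share of artificial data in the final training set.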

The risks of training ML models only on synthetic data

Given how much synthetic data cuts training costs and timelines, and given its diversity and safety benefits, it might seem reasonable to train machine learning models on synthetic data alone.

That approach has been tested in practice: the Microsoft-backed Phi family of models is trained only on synthetic data. Real-world testing of these LLMs revealed a few caveats to using only synthetic data.

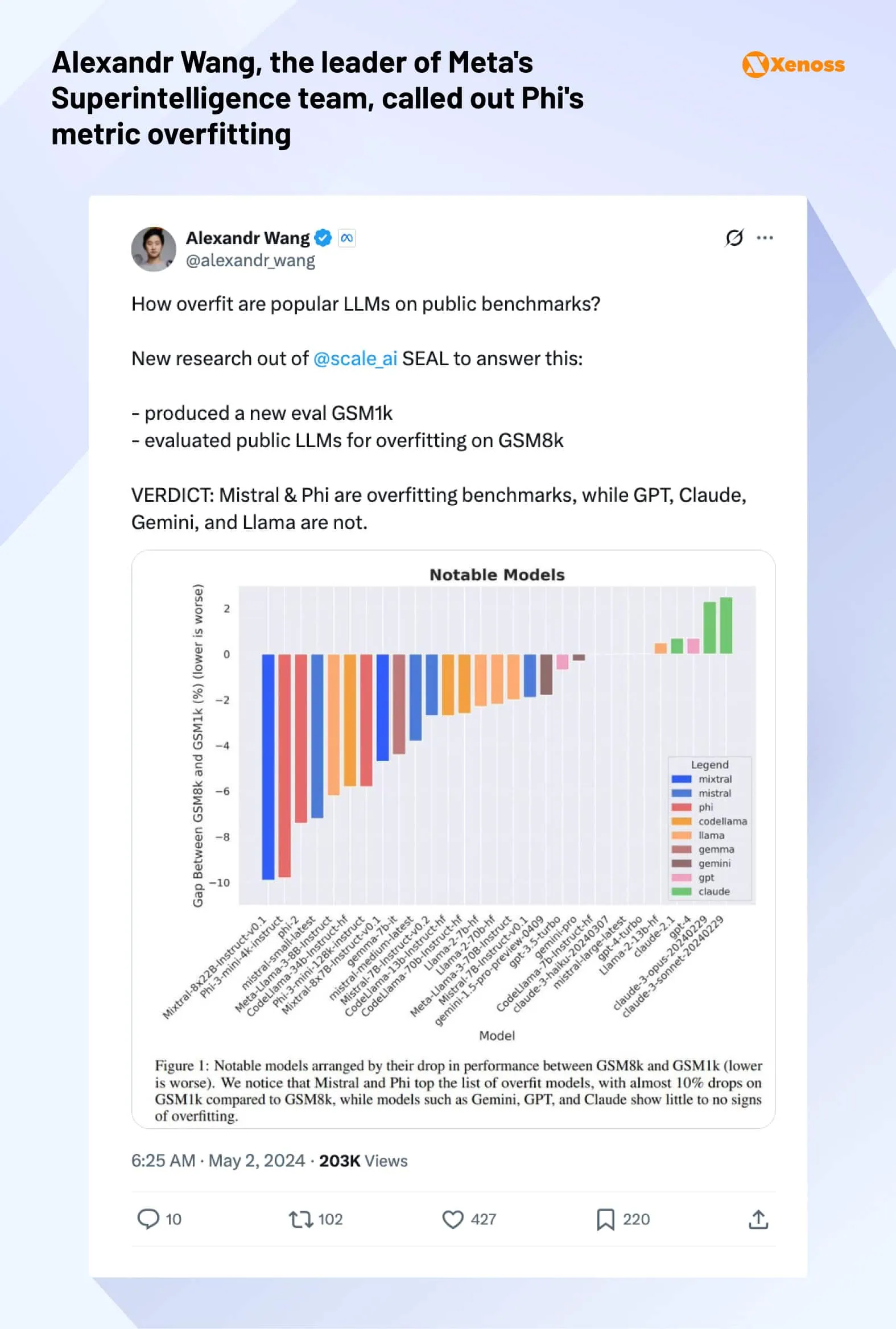

Perception gap between benchmarks and real-world performance

The number-one complaint about Phi models centers on a significant disconnect between benchmark performance and practical utility. Despite achieving impressive evaluation scores, these models consistently underperformed in real-world applications.

The phi model reminds me of that one smart kid in class who always nails the tests but struggles with anything outside of that structured environment. On paper, it’s brilliant. In reality, it just doesn’t measure up.

The reason models trained on synthetic data perform so well on evaluations and much more poorly in real-life contexts is what Drew Breunig, the founder of the Overture Maps Foundation and an AI blogger, calls a ‘perception gap’.

Top-level benchmarks mostly assess quantitative tasks, such as solving math or coding problems. Synthetic datasets effectively accommodate these use cases but fail to drive meaningful progress in the qualitative realm because they do not generate novel knowledge and ideas.

As AI labs increasingly shift their focus to synthetic data, we are likely to see lopsided performance improvements: noticeable intelligence spikes in technical domains and a slower rate of progress in scenarios such as writing, assisting with everyday tasks, or solving complex business problems.

Data distribution distortions and representation problems

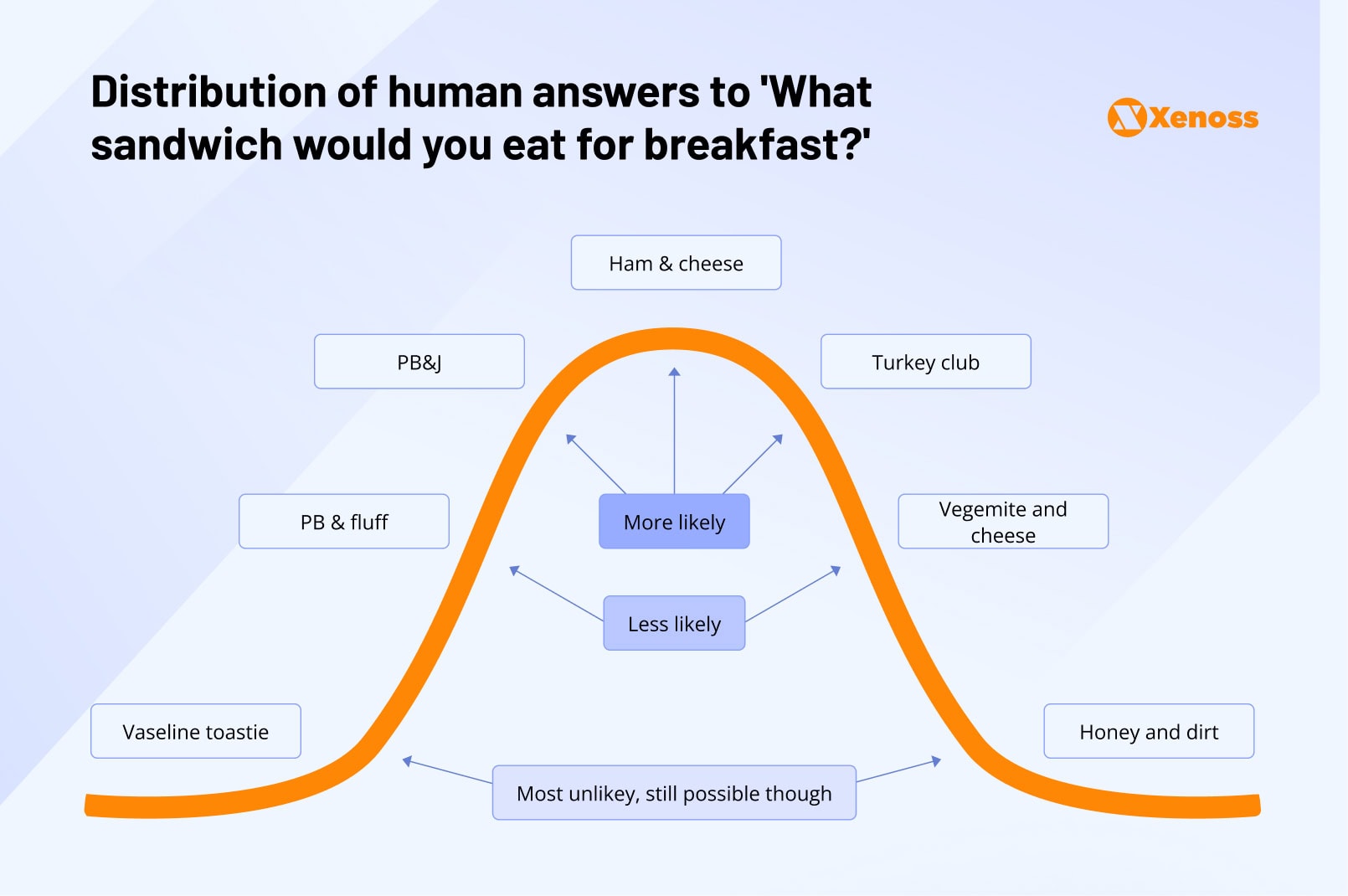

A growing concern among machine learning researchers is that training models purely on synthetic data will cut off rarer but still probable responses, and the resulting distribution bell curve will be different from the one you would see in the real world.

Alexandra Barr, who is leading Human Data at OpenAI, explains the concept with an analogy of a ‘What sandwich would you like to eat for breakfast?’ question.

In a real-world data distribution, there will be a few highest-probability answers (‘ham and cheese’, ‘turkey club’), but there will also be unexpected outliers (‘vaseline toastie’ or ‘honey and dirt’), which can be plotted as a bell curve.

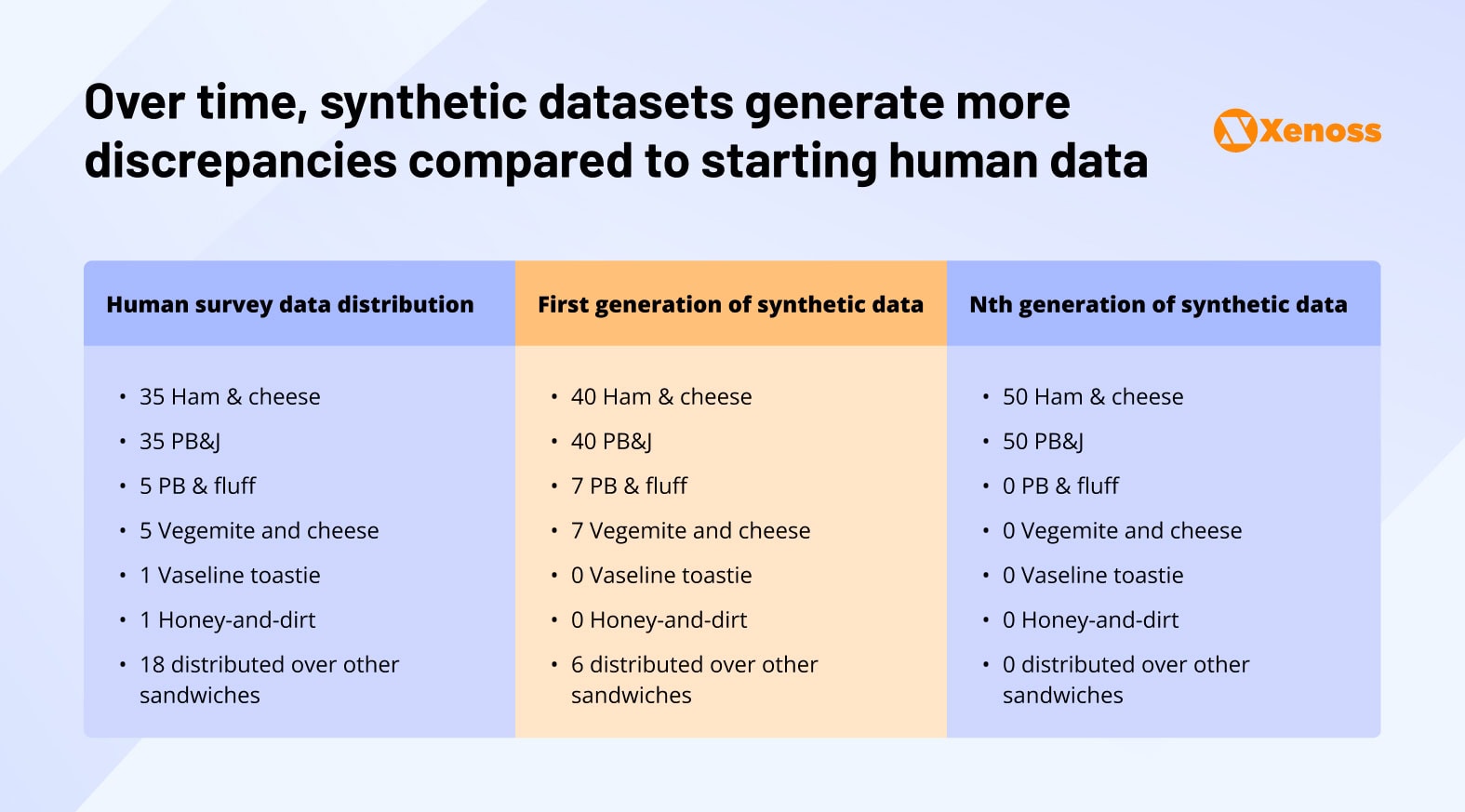

When a synthetic data generator creates a dataset that simulates the real-world answer distribution, it does so probabilistically.

The model tends to return higher-probability answers first, until eventually it stops returning low-probability outputs.

Over multiple iterations, synthetic datasets develop both over-representation (exaggerated common responses) and under-representation (eliminated rare but valid responses) problems. The resulting distribution becomes unrealistically narrow compared to authentic human behavior patterns.

Plotted as a Gaussian curve, a synthetic dataset will have shorter tails and a taller, narrower bell.

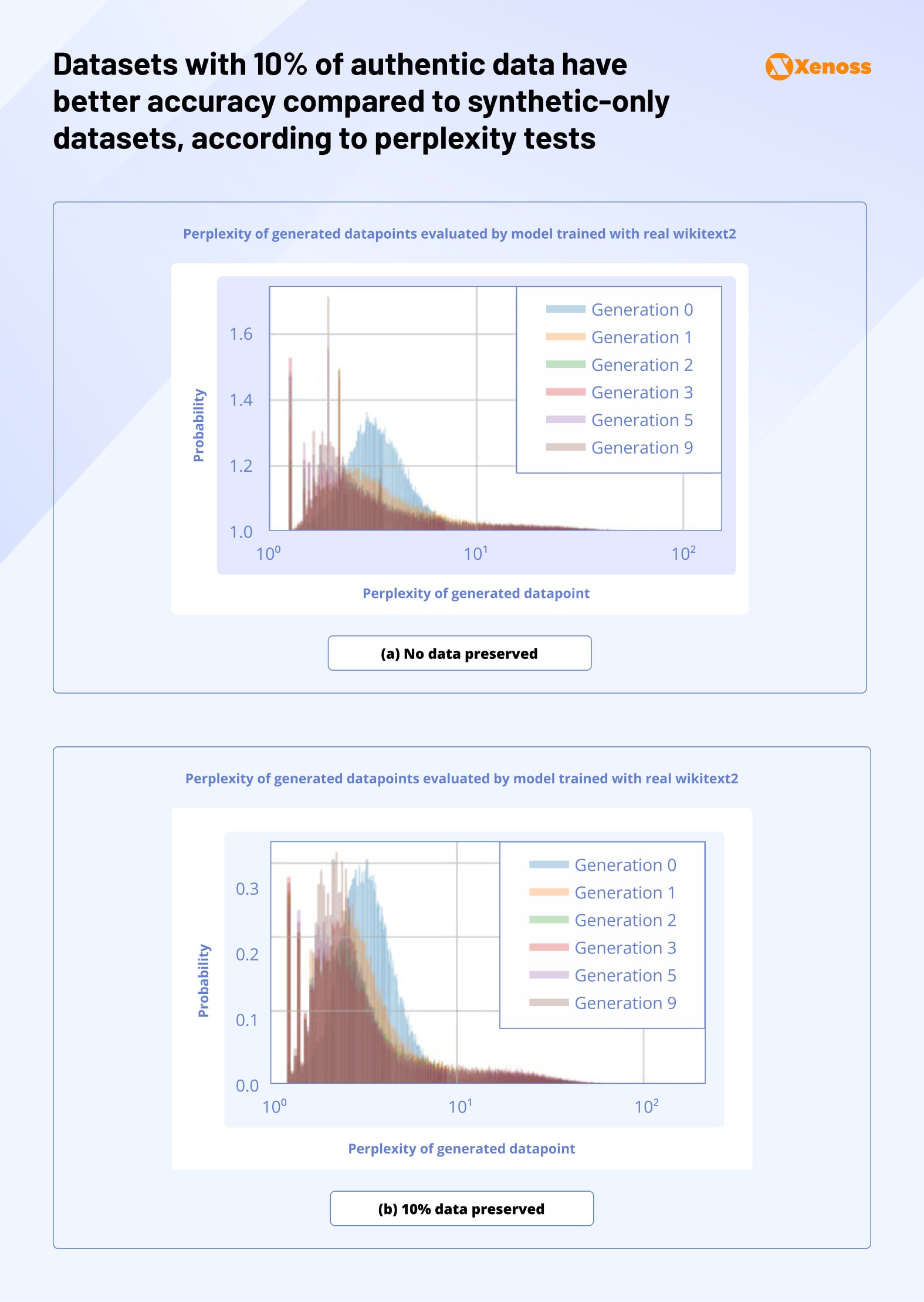
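
The narrowing effect can be reproduced with a toy simulation of the sandwich example. The sketch below assumes a generator that slightly favors likely answers (modeled with a temperature below 1) and never emits answers under a probability cutoff. The probabilities, `temperature`, and `cutoff` values are invented for illustration.

```python
def next_generation(dist, temperature=0.7, cutoff=0.01):
    """Sharpen a categorical distribution, then drop answers below cutoff."""
    # Raising probabilities to 1/temperature (> 1) favors likely answers.
    sharpened = {k: p ** (1.0 / temperature) for k, p in dist.items()}
    total = sum(sharpened.values())
    # Answers that fall under the cutoff are never generated again.
    kept = {k: p / total for k, p in sharpened.items() if p / total >= cutoff}
    kept_total = sum(kept.values())
    return {k: p / kept_total for k, p in kept.items()}

# Hypothetical real-world answer probabilities.
real = {
    "ham and cheese": 0.50,
    "turkey club": 0.30,
    "egg salad": 0.15,
    "honey and dirt": 0.04,
    "vaseline toastie": 0.01,
}

dist = real
for generation in range(1, 4):
    # Each generation is "trained" on the previous generation's outputs.
    dist = next_generation(dist)
    print(generation, {k: round(p, 3) for k, p in dist.items()})
```

With these settings, the rare answers disappear within a few generations while the most common answer takes up a growing share, which is the over- and under-representation pattern described above.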

At its extreme, over-reliance on synthetic data without rigorous data quality testing puts models at risk of collapse.

Model collapse: when synthetic training becomes self-defeating

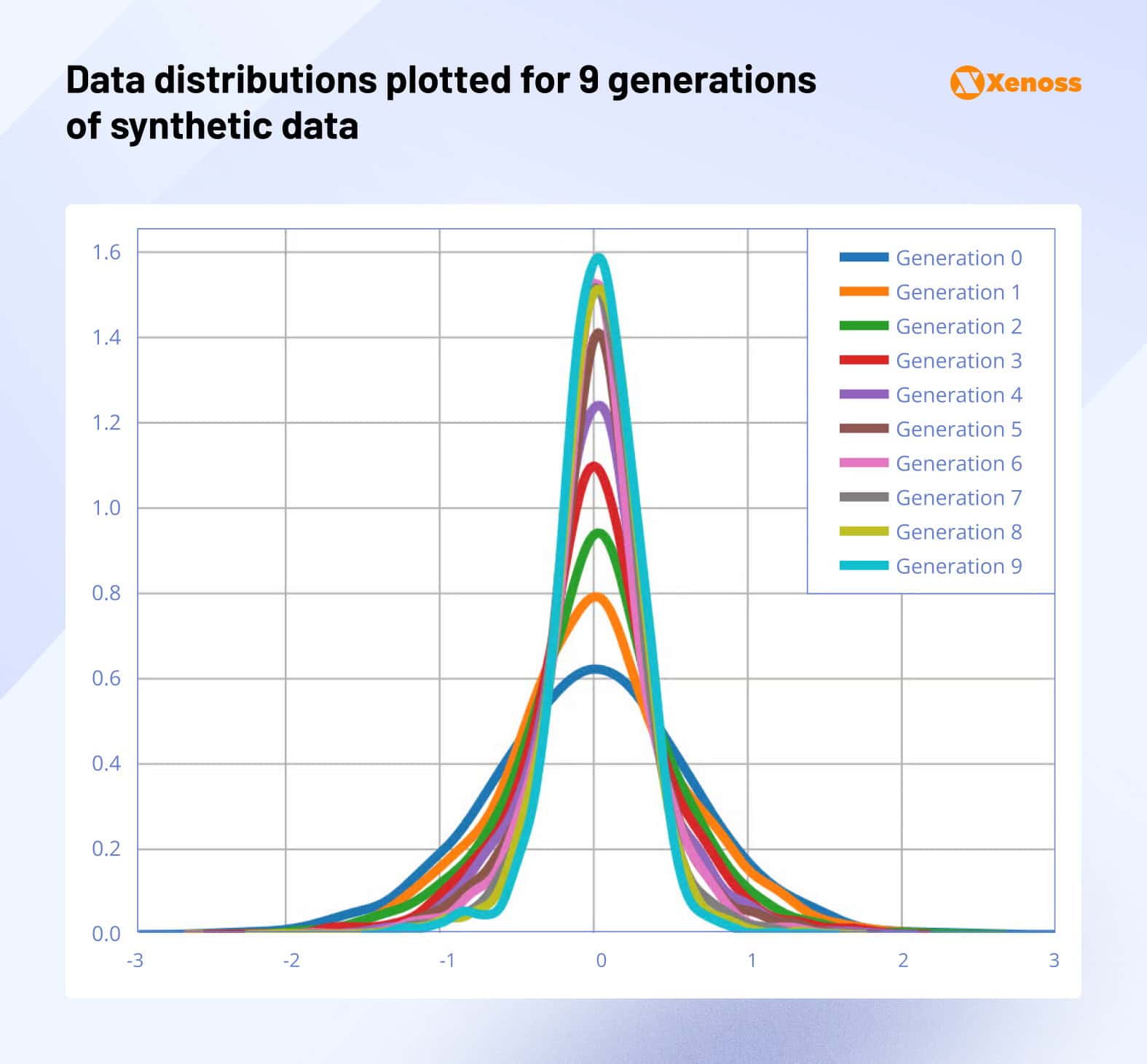

Model collapse happens when the discrepancies between synthetic and real-world datasets pile up over time, creating ‘polluted’ datasets for models to be trained on.

Approximation errors, such as rounding probabilities, are a common cause of model collapse. If models implement stricter cut-offs, they underrepresent a wider portion of real-world data, creating unrealistic and factually wrong distributions.

Functional approximations can also precipitate collapse. If a model has no awareness of how likely a specific input is to appear in the real-world data distribution, it may generate data points that do not respect physical constraints.

Here’s an analogy of what this looks like in practice.

A team of researchers is training a model on the boiling points of known elements. It learns that hydrogen boils at −253 °C, nitrogen at −196 °C, oxygen at −183 °C, etc.

At one point, it may start applying the same logic to a query: “What’s the boiling point of an element with atomic number 500?”. There is no such element, but the model will extrapolate anyway. If retrained on those outputs, it builds a periodic table of imaginary matter that doesn’t map to real chemistry anymore.

Model collapse has two dangerous consequences.

- Catastrophic forgetting: Models lose previously learned capabilities when exposed to new synthetic data

- Data poisoning: Models begin preferring synthetic approximations over accurate real-world examples

Interestingly, the outcomes of model collapse tests show that adding as little as 10% of human-generated data to an otherwise synthetic dataset significantly improves model confidence.
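
The effect of a small share of real data can be illustrated with a toy experiment. The sketch below is not a reproduction of the tests mentioned above. It assumes the "model" is just a normal distribution that is refit, generation after generation, to a small dataset sampled mostly from its own previous fit. `run_generations` and all parameter values are hypothetical.

```python
import random
import statistics

def run_generations(real_fraction, generations, n, rng):
    """Refit a normal distribution to its own samples, generation after generation.

    real_fraction of each generation's dataset is drawn fresh from the
    'real' distribution N(0, 1); the rest comes from the previous fit.
    Returns the fitted standard deviation of the last generation.
    """
    mu, sigma = 0.0, 1.0
    n_real = round(n * real_fraction)
    for _ in range(generations):
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_real)]
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        data = synthetic + real
        mu, sigma = statistics.mean(data), statistics.stdev(data)
    return sigma

# 100% synthetic data vs. 90% synthetic plus 10% real data.
pure_std = run_generations(0.0, 1000, 20, random.Random(1))
mixed_std = run_generations(0.1, 1000, 20, random.Random(1))

print("spread with synthetic data only:", pure_std)
print("spread with 10% real data:", mixed_std)
```

In the synthetic-only run, the fitted spread shrinks toward zero because every refit loses a little of the tails. In the mixed run, the fresh real samples keep pulling the fit back toward the true distribution.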

To harness the benefits of synthetic data and preserve output accuracy, researchers are building training pipelines that rely on hybrid data: a mix of real-world and generated datasets.

Hybrid data strategies: combining synthetic and real-world datasets for production AI

Three case studies from Netflix, Instacart, and American Express demonstrate practical implementations of hybrid data strategies for production AI systems.

How Netflix used hybrid data to build a robust pixel error detector

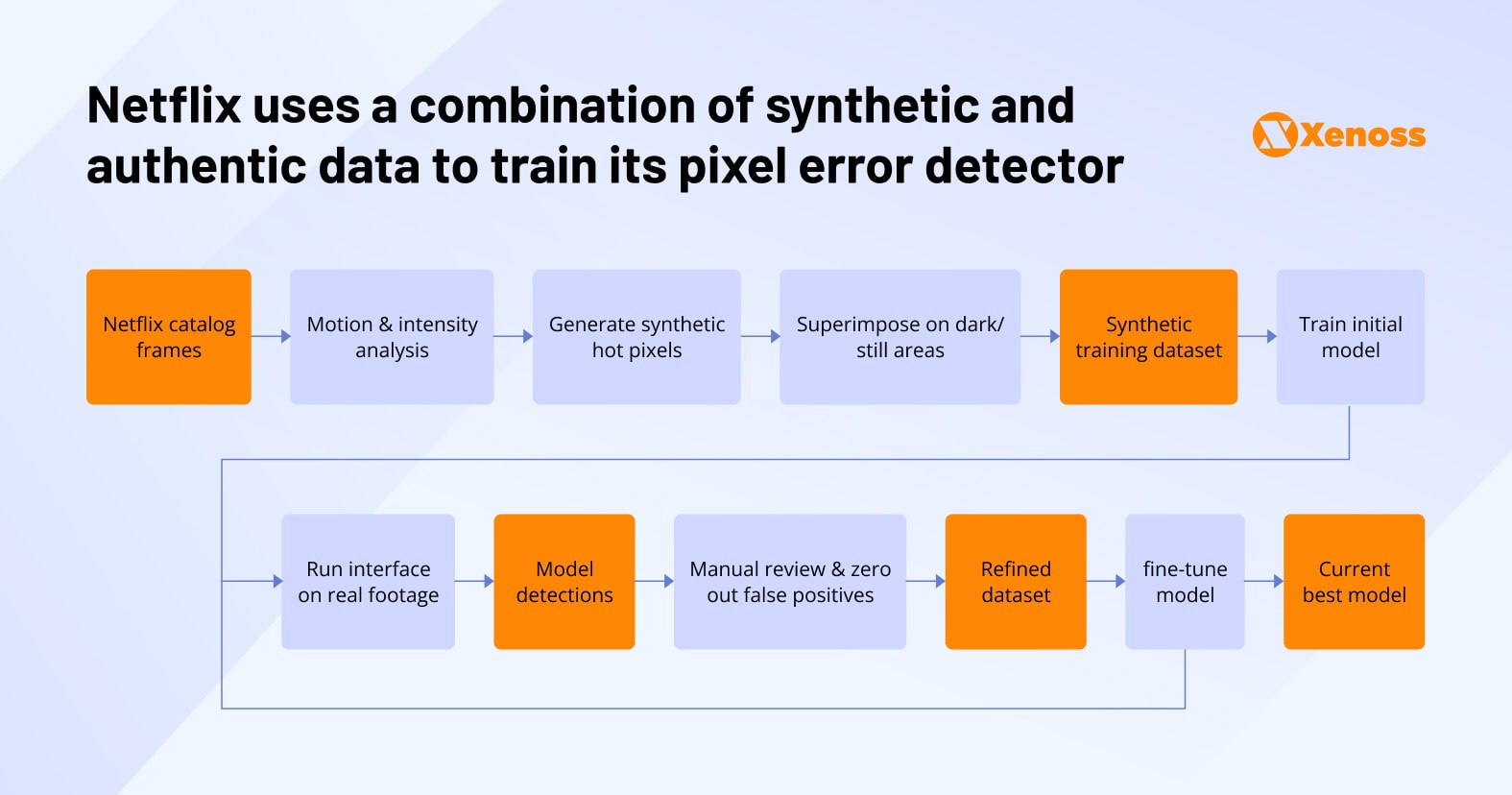

In a recent Netflix technology blog post, the company’s engineers share their experience of building a machine learning tool for automated quality control that detects pixel-level issues. The automated scanner helps reduce the amount of manual work needed to scan for these issues and maintain high-quality standards across the streamer’s portfolio.

In the process, Netflix engineers had to deal with a lack of training data because pixel errors are both rare and short-lasting. To amplify the dataset, the team developed a training pipeline that combined the use of simulated pixel error data for initial training with a refined real-footage dataset used for model fine-tuning.

Step 1. Building a synthetic pixel error generator

To expose the model to the two most common types of pixel errors (symmetrical and curvilinear), Netflix engineers built an ‘error generator’ that created pixel errors superimposed on real frames from the streamer’s catalog.

Engineers strategically placed these errors in dark, static scenes where they would be most visually apparent to human viewers.
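
Netflix has not published its generator, so the following is only a simplified sketch of the general idea: take a real frame, pick a dark pixel, and overwrite it with a bright value while recording its location as the label. Frames are modeled as plain grayscale grids, and `inject_pixel_error`, `dark_threshold`, and `value` are assumptions. The sketch ignores the "static scene" condition and the specific error shapes mentioned above.

```python
import random

def inject_pixel_error(frame, rng, dark_threshold=30, value=255):
    """Return (corrupted_frame, (row, col)) with one dark pixel set to `value`."""
    # Candidate locations: pixels dark enough for a bright defect to stand out.
    dark = [
        (r, c)
        for r, row in enumerate(frame)
        for c, px in enumerate(row)
        if px <= dark_threshold
    ]
    corrupted = [row[:] for row in frame]  # never modify the real frame
    if not dark:
        return corrupted, None
    r, c = rng.choice(dark)
    corrupted[r][c] = value
    return corrupted, (r, c)

rng = random.Random(7)

# Hypothetical 4x4 grayscale frame: dark left half, bright right half.
frame = [[10, 10, 200, 200] for _ in range(4)]

corrupted, location = inject_pixel_error(frame, rng)
print("synthetic error placed at:", location)
```

Because the generator knows exactly where it placed each defect, every synthetic frame comes with a free, perfectly accurate label.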

Step 2. Initial model training on synthetic data

The model learns to recognize common error patterns through extensive exposure to synthetic examples. This phase builds foundational detection capabilities across various error scenarios and content types.

However, synthetic data creates a “domain gap” compared to production footage, requiring additional training for real-world reliability.

Step 3. Fine-tuning the model on real-world datasets

Netflix built a three-step fine-tuning pipeline to improve the reliability of the model and prepare it for real-world scenarios.

- Inference: Engineers ran the model on new data that did not have synthetic pixel errors.

- Eliminating false positives: Netflix engineers manually reviewed and removed labels for false positives.

- Iteration: The model ran a few training cycles on refined real-world datasets until its accuracy matched that achieved with synthetic data only.
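
The first two steps of this loop can be sketched as a small review function. This is a hypothetical outline, not Netflix's code: `predict` stands in for the synthetically trained detector, and `is_false_positive` stands in for the manual review step.

```python
def review_detections(frames, predict, is_false_positive):
    """Run inference on real frames and drop detections a reviewer rejects."""
    labeled = {}
    for frame_id, frame in frames.items():
        detections = predict(frame)
        labeled[frame_id] = [
            d for d in detections if not is_false_positive(frame_id, d)
        ]
    return labeled

# Toy stand-ins: frames are rows of brightness values.
frames = {
    "shot_1": [10, 250, 12],  # bright spot is a star in the sky
    "shot_2": [8, 255, 9],    # bright spot is a genuine pixel error
}

def toy_predict(frame):
    # Flag every very bright pixel, as an over-eager detector might.
    return [i for i, px in enumerate(frame) if px > 200]

confirmed_errors = {("shot_2", 1)}  # what a human reviewer approved

def toy_is_false_positive(frame_id, detection):
    return (frame_id, detection) not in confirmed_errors

labeled = review_detections(frames, toy_predict, toy_is_false_positive)
print(labeled)
```

The cleaned labels then become the real-world fine-tuning set for the next training cycle, and the loop repeats.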

According to Netflix engineers, this synthetic-to-real fine-tuning is an ongoing process at the company. The machine learning team continues refining the model, but productivity gains are already noticeable.

The automated pixel error detector saves creative teams hours of manual footage review, letting them focus on more impactful storytelling challenges.

Instacart combines real-world data with synthetic datasets to improve the relevance of its search engine

For Instacart, one of the leaders in e-commerce with a large catalog, building an accurate product search engine is not a trivial task. The distribution of most popular searches is highly uneven: There are fewer than 1000 popular search terms and a long tail of less popular queries that nevertheless should not be disregarded.

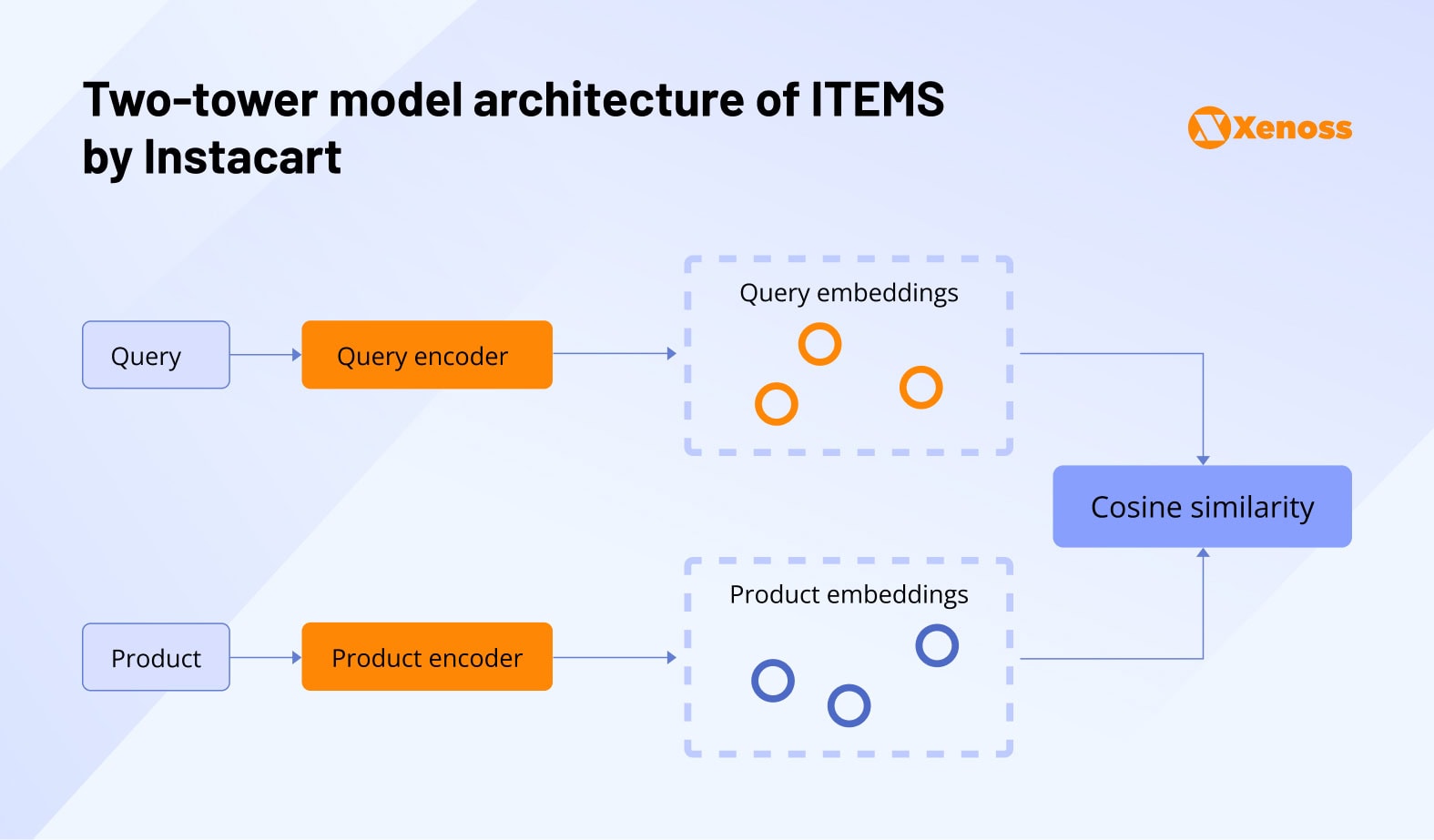

To help shoppers find the groceries they need with any type of search, Instacart engineers built ITEMS (the Instacart Transformer-based Embedding Model for Search), a deep learning model designed to create a dense representation of search terms and products.

The model architecture is designed as ‘two towers’, queries and products. Each is encoded, computed, stored, and retrieved independently.
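
A toy version of the two-tower layout is sketched below. It replaces the transformer encoders with a hashed bag-of-words embedding, which is purely an assumption made to keep the example self-contained. What carries over is the structure: product vectors are computed ahead of time and stored, while query vectors are computed at search time and compared by dot product.

```python
import math
import zlib

DIM = 1024  # assumed embedding size for this toy example

def embed(text):
    """Stand-in encoder: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        # crc32 gives a hash that is stable across Python processes.
        vec[zlib.crc32(token.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def search(query, product_index, k=1):
    """Embed the query, then rank stored product vectors by dot product."""
    q = embed(query)
    scored = sorted(
        product_index.items(),
        key=lambda item: -sum(a * b for a, b in zip(q, item[1])),
    )
    return [name for name, _ in scored[:k]]

# Product tower: embeddings are computed offline and stored.
catalog = ["Organic 2% Reduced Fat Milk", "Sourdough Bread Loaf", "Almond Butter"]
product_index = {name: embed(name) for name in catalog}

# Query tower: embeddings are computed when a shopper searches.
print(search("organic milk", product_index))
```

Keeping the towers independent means the catalog only needs to be re-embedded when products change, not on every search.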

To enrich the training dataset for query embeddings, Instacart added synthetic data even though the company’s engineers point out that the percentage of generated queries relative to real-world data is very low.

Artificially generated queries help Instacart models improve at recognizing rare product categories and attributes.

For example, a product data point “Organic 2% Reduced Fat Milk from GreenWise, 1 gallon” generates synthetic queries:

- “GreenWise”

- “Milk 1 gal”

- “GreenWise Organic Reduced Fat Milk”

- Additional variations based on attributes and brand names
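
A simple rule-based generator can produce queries like the ones above. The sketch below assumes the product is already split into structured fields. The field names and the `synthetic_queries` helper are hypothetical, and Instacart's actual generation logic may differ.

```python
def synthetic_queries(product):
    """Build search queries from a product's structured attributes."""
    brand, category = product["brand"], product["category"]
    attrs, size = product["attributes"], product["size"]
    queries = [
        brand,                                # brand only
        f"{category} {size}",                 # category plus size
        " ".join([brand, *attrs, category]),  # brand, attributes, category
    ]
    # Additional variations: each attribute paired with the category.
    queries += [f"{attr} {category}" for attr in attrs]
    return list(dict.fromkeys(queries))  # de-duplicate, keep order

product = {
    "brand": "GreenWise",
    "category": "Milk",
    "attributes": ["Organic", "Reduced Fat"],
    "size": "1 gal",
}

queries = synthetic_queries(product)
for q in queries:
    print(q)
```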

This data is then combined with human queries to cover a more diverse and complete range of queries. The share of synthetic data in the final dataset is kept ‘very low’ so that it does not bias the model.

American Express uses a hybrid data strategy to accurately predict credit scores

In a recent paper, American Express engineers describe how a mix of synthetic and authentic data helped build an accurate self-learning filter that monitors the outputs of the internal credit scoring model.

This filtering layer, referred to as EDAIN in the steps below, is designed to detect outliers, center values, and straighten skewed variables. It was largely trained on synthetic data using a process similar to the one Netflix used for pixel error detection.

Step 1. Training EDAIN to recognize common distortions with a heavily controlled synthetic dataset

American Express engineers artificially generated time-series datasets that included common errors: skewed variables, outliers, multimodality, heavy tails, and others.
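
As a rough illustration of what "planting" distortions looks like, the sketch below generates three kinds of irregular series with Python's standard library. The distribution choices and parameters are assumptions made for this example and are not taken from the paper.

```python
import random
import statistics

def make_series(n, kind, rng):
    """Generate n values with one known, deliberately planted distortion."""
    if kind == "skewed":
        # Log-normal values are always positive with a long right tail.
        return [rng.lognormvariate(0.0, 1.0) for _ in range(n)]
    if kind == "bimodal":
        # Two clusters centered at -3 and +3.
        return [rng.gauss(-3.0 if rng.random() < 0.5 else 3.0, 1.0) for _ in range(n)]
    if kind == "outliers":
        # Roughly 1% of points are scaled up by a factor of 20.
        return [rng.gauss(0.0, 1.0) * (20.0 if rng.random() < 0.01 else 1.0) for _ in range(n)]
    raise ValueError(f"unknown kind: {kind}")

rng = random.Random(3)
skewed = make_series(5000, "skewed", rng)
bimodal = make_series(5000, "bimodal", rng)
with_outliers = make_series(5000, "outliers", rng)

print("skewed mean vs median:", statistics.mean(skewed), statistics.median(skewed))
print("largest outlier magnitude:", max(abs(v) for v in with_outliers))
```

Because each series has exactly one known problem, any failure of the normalization layer can be traced back to a specific type of distortion.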

Having full control over the ‘challenges’ developers planted into the dataset made it easier to understand how accurately the neural network detects data distribution errors.

By the end of early training, EDAIN consistently beat previous adaptive methods and static normalizations.

Step 2. Challenging pre-trained EDAIN with real-world data

After it consistently showed reliable performance on a synthetic dataset, American Express engineers applied EDAIN to real default-prediction datasets. EDAIN then assessed 177 dataset features and outperformed (albeit slightly) the standard z-score scaling and other baselines on the Amex metric.
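
For context, the z-score baseline mentioned here is a static transformation: subtract the mean and divide by the standard deviation. The short sketch below, with made-up values, shows why such a fixed rule can struggle with irregular data: a single outlier squeezes all the ordinary values into a narrow band.

```python
import statistics

def z_score(values):
    """Standard z-score scaling: zero mean, unit standard deviation."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("cannot scale a constant series")
    return [(v - mu) / sigma for v in values]

# Hypothetical feature with one extreme outlier.
values = [1.0, 2.0, 3.0, 4.0, 100.0]
scaled = z_score(values)

print([round(z, 3) for z in scaled])
print("spread of the four ordinary values:", max(scaled[:4]) - min(scaled[:4]))
```

An adaptive layer can learn how to treat such values from the data instead of applying the same formula to every feature.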

Even though the accuracy improvement per prediction was small, its impact adds up when applied to millions of accounts.

Bottom line

AI teams have a data problem. Building better models requires more training data than what’s naturally available, and creating high-quality human content is both slow and expensive.

The good news is that machine learning models can still learn from imperfect data, and having more examples often matters more than each one being perfect. That’s why synthetic data has become so popular – it’s a practical way to get the volume you need without breaking the budget.

Most AI teams now use synthetic data in some form. Smaller open-source projects like DeepSeek rely on it to compete with well-funded labs, while companies like OpenAI and Anthropic use it alongside other techniques to train their models.

The benefits are pretty straightforward: you can train models faster, spend less money, avoid privacy issues with real user data, and create examples of rare situations that would be expensive to find in the real world.

But there’s a catch. If you rely too heavily on synthetic data, your models start making weird predictions, forget things they learned earlier, and gradually get worse as the artificial data compounds over time.

That’s why engineering teams at leading startups and enterprise companies use a mix of synthetic and authentic data to build datasets that are both representative and diverse. Exploring how Netflix, American Express, and other global teams set up their training pipelines can help engineers build a scalable playbook for using hybrid data in their own projects.