Claims adjusters are missing a single valuation report. Underwriters are working from outdated inspection photos. Onboarding teams are repeatedly attempting to verify the same ID.

In regulated industries, organizations process up to 250 million documents each year across claims, underwriting, onboarding, and invoicing workflows. Documentation gaps in any of these workflows become compliance risks that surface later as audit findings, denied claims, and abandoned applications.

Automation has helped with throughput, but regulators now expect evidence-grade data: proof that every decision ties back to the correct source, that nothing critical is missing, and that extracted data is accurate. That’s a higher bar than most document capture systems were built to clear.

As a result, investment is shifting from throughput-focused automation toward compliance-driven document processing, where document intelligence validates completeness, checks consistency, and flags problems before they propagate into core systems.

The sections below break down how this works across insurance claims, underwriting, banking onboarding, and manufacturing invoicing, with practical benchmarks for evaluating compliance-ready initiatives.

Document capture vs. intelligent document processing

Standard document capture focuses on field-level extraction. However, regulated workflows require traceability to the right source document.

A claim file missing an adjuster note or a valuation report with mismatched fields might pass through a capture pipeline without a “system error,” but fail an audit. That same mismatch can route a claim straight to adjudication, only to trigger a denial-and-appeal cycle that costs more to resolve than the original claim.

In health insurance alone, 19% of in-network and 37% of out-of-network claims were denied in 2023, with documentation gaps cited as a leading cause.

Document processing for regulated industries reduces these risks by:

- Validating packet completeness upfront

- Checking document consistency

- Flagging missing or outdated evidence before a case reaches a core system

Core components of document intelligence for regulatory compliance

Document intelligence relies on a defined set of components designed to meet regulatory expectations for accuracy, traceability, and control.

Data extraction with confidence scoring

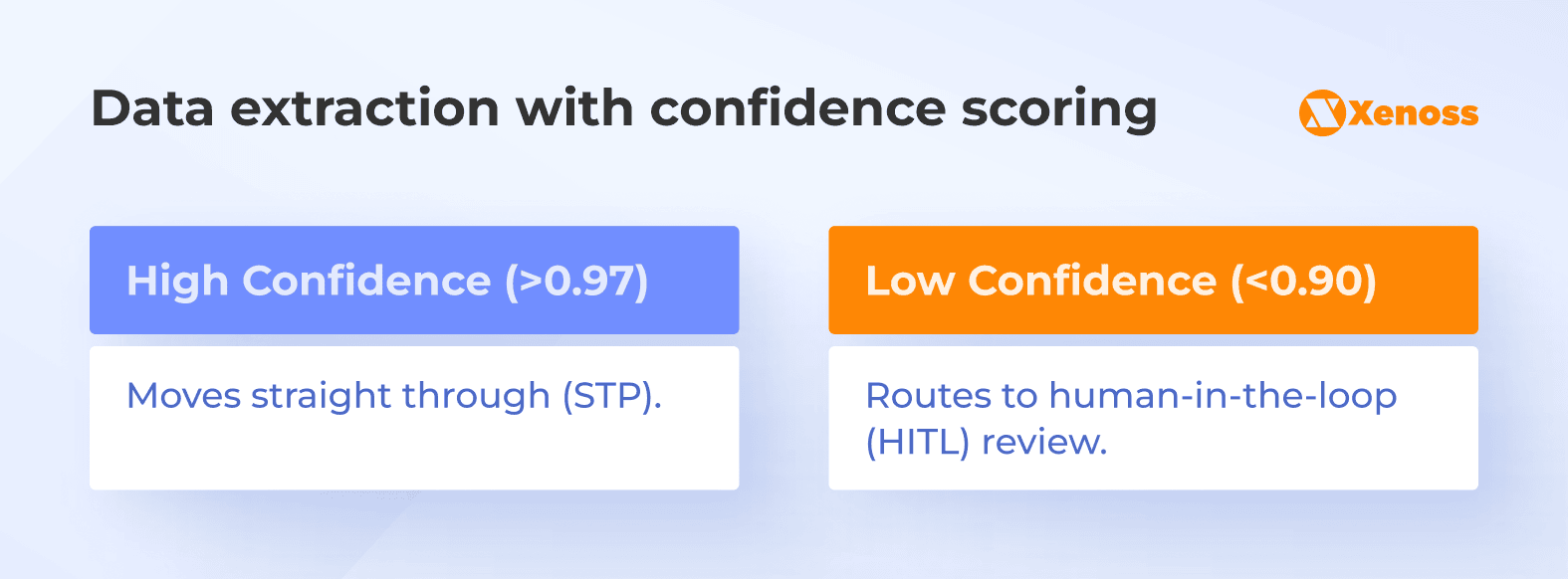

Every extracted field carries a confidence score, typically expressed as a probability between 0 and 1.

A service date pulled cleanly from a structured form might score 0.98; the same field handwritten on a faxed document might score 0.62. That score determines what happens next: high-confidence values move straight through, while low-confidence values route to human review.

Rossum’s automation framework, for example, uses a default threshold of 0.975, meaning documents are auto-exported only when the system is at least 97.5% confident in each extracted field.

Confidence also rolls up to the packet level. If three of twelve documents in a claim file have low extraction confidence, the system flags the entire submission for intake review before it enters adjudication.
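As a minimal sketch of this routing logic, the example below applies a field-level threshold and then rolls the result up to the packet. The 0.975 value mirrors the default quoted above; the `Field` type, the function names, and the 20% packet-level cutoff are assumptions made for illustration, not any vendor's actual API.

```python
from dataclasses import dataclass

# Assumed values for illustration; real thresholds are tuned per field and workflow.
FIELD_THRESHOLD = 0.975     # mirrors the default threshold quoted above
MAX_LOW_CONF_SHARE = 0.2    # hypothetical packet-level cutoff

@dataclass
class Field:
    name: str
    value: str
    confidence: float  # probability between 0 and 1

def route_document(fields: list[Field], threshold: float = FIELD_THRESHOLD) -> str:
    """Auto-export only if every field meets the threshold; otherwise send to review."""
    if all(f.confidence >= threshold for f in fields):
        return "auto_export"
    return "human_review"

def route_packet(documents: list[list[Field]], max_low_share: float = MAX_LOW_CONF_SHARE) -> str:
    """Flag the whole packet when too large a share of its documents needs review."""
    low = sum(1 for doc in documents if route_document(doc) == "human_review")
    share = low / len(documents) if documents else 0.0
    return "intake_review" if share > max_low_share else "adjudication"

clean = [Field("service_date", "2023-04-01", 0.98)]
faxed = [Field("service_date", "2023-04-01", 0.62)]
packet = [clean] * 9 + [faxed] * 3  # three of twelve documents are low confidence
print(route_document(clean), route_document(faxed), route_packet(packet))
```

With three of twelve documents below the threshold, the low-confidence share is 25%, which exceeds the assumed cutoff, so the packet goes to intake review.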

Document governance across ingestion, review, and release

Governance defines what happens before data reaches core systems.

Validated ingestion channels ensure documents enter through approved sources. File-type and format checks reject submissions that don’t meet requirements. Role-based review enforces segregation of duties, so the same person can’t submit and approve a case.

Override controls matter here, too. When a reviewer changes an extracted value, the system requires a rationale, logs the change, and locks the record against silent edits.
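The override rules described here can be sketched in a few lines. This is an illustrative model only: `CaseRecord`, `OverrideEntry`, and the ID formats are hypothetical names, and a production system would persist the log in write-once storage rather than in memory.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)  # frozen: a logged entry cannot be modified afterwards
class OverrideEntry:
    field_name: str
    original: str
    corrected: str
    rationale: str
    reviewer_id: str
    logged_at: str

class CaseRecord:
    """Hypothetical case record; field and role names are illustrative."""

    def __init__(self, submitter_id: str, fields: dict):
        self.submitter_id = submitter_id
        self.fields = dict(fields)
        self.audit_log = []

    def override(self, field_name: str, corrected: str,
                 rationale: str, reviewer_id: str) -> OverrideEntry:
        # Segregation of duties: the submitter may not change the case in review.
        if reviewer_id == self.submitter_id:
            raise PermissionError("submitter cannot review their own case")
        # No silent edits: every change must carry a reason.
        if not rationale.strip():
            raise ValueError("override requires a rationale")
        entry = OverrideEntry(field_name, self.fields[field_name], corrected, rationale,
                              reviewer_id, datetime.now(timezone.utc).isoformat())
        self.audit_log.append(entry)
        self.fields[field_name] = corrected
        return entry

case = CaseRecord("intake-01", {"claim_amount": "1,250.00"})
case.override("claim_amount", "1,520.00",
              "Digits transposed; checked against itemized bill", "reviewer-07")
```

Raising an exception on a missing rationale, instead of accepting an empty string, is the design choice that makes the rule enforceable rather than advisory.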

Accuracy metrics for regulated document workflows

Overall accuracy numbers can be misleading. A system might report 96% extraction accuracy, but if errors concentrate in high-impact fields like claim amounts or policy dates, the operational risk is much higher than that number suggests.

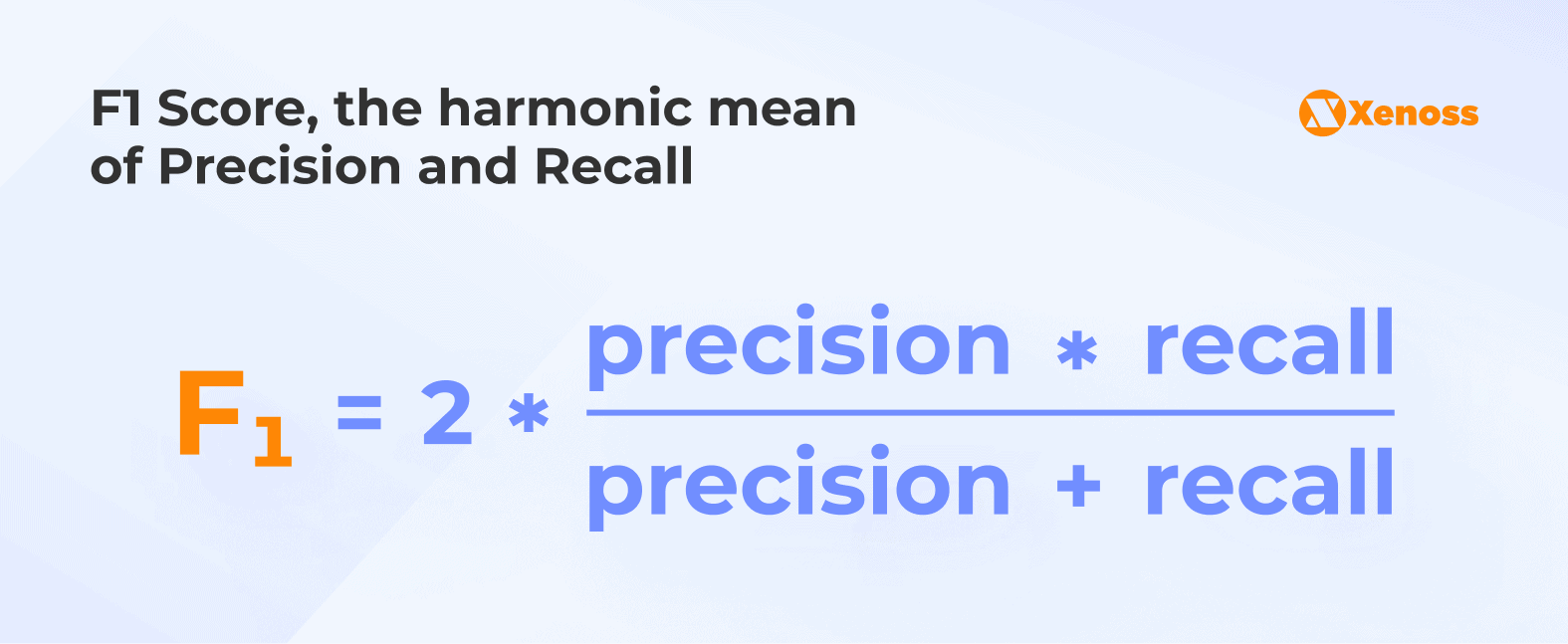

In ML terms, precision measures the proportion of positive predictions that are correct, while recall measures the proportion of actual positives the model identified. The F1 score, the harmonic mean of the two, balances them into a single number.

In document processing terms:

- Precision: Of the values the system extracted, how many are correct?

- Recall: Of the values that should have been extracted, how many did the system find?

Mature programs track these metrics specifically for critical fields, calibrating the trade-off between false acceptance (bad data that gets through) and false rejection (good data that gets flagged unnecessarily).
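To make the field-level view concrete, here is a small sketch that computes precision, recall, and F1 for a single field. It assumes one common scoring convention, which is a choice and not a standard: a wrong extracted value counts both as a false positive and as a false negative, and `None` means "no value". The function name and sample data are illustrative.

```python
def field_metrics(predictions, ground_truth):
    """Precision, recall, and F1 for one field across a set of documents."""
    tp = fp = fn = 0
    for pred, truth in zip(predictions, ground_truth):
        if pred is not None and pred == truth:
            tp += 1
        else:
            if pred is not None:
                fp += 1  # extracted a value that is wrong or should not exist
            if truth is not None:
                fn += 1  # a real value was missed or extracted incorrectly
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Claim amounts on four documents: one misread, one missed entirely.
extracted = ["100.00", "250.00", None, "75.00"]
verified = ["100.00", "205.00", "60.00", "75.00"]
precision, recall, f1 = field_metrics(extracted, verified)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Running the same function separately for claim amounts, policy dates, and identifiers shows where errors concentrate, which a single headline accuracy figure hides.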

End-to-end visibility across document packets

Regulated decisions rarely depend on a single document.

A claim file might include bills, clinical notes, adjuster reports, and policy documents. An underwriting submission might combine inspection reports, loss histories, and financial statements.

Packet-level visibility ensures completeness across all required documents, checks that identifiers align (same claimant, same policy, same dates), and surfaces inconsistencies before the case moves downstream.
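One way to picture the identifier-alignment check is to collect each identifier across the packet and report any that take more than one distinct value. The key names, the document structure, and the simple upper-case normalization below are assumptions for illustration.

```python
from collections import defaultdict

KEYS = ("claimant", "policy_number", "date_of_loss")  # hypothetical identifier names

def find_identifier_conflicts(packet):
    """Return {key: {value: [document names]}} for identifiers that disagree."""
    seen = defaultdict(lambda: defaultdict(list))
    for doc in packet:
        for key in KEYS:
            value = doc["fields"].get(key)
            if value is not None:
                # Light normalization so casing and stray spaces are not flagged.
                seen[key][value.strip().upper()].append(doc["name"])
    return {key: dict(values) for key, values in seen.items() if len(values) > 1}

packet = [
    {"name": "itemized_bill", "fields": {"claimant": "Jane Doe", "policy_number": "P-1001"}},
    {"name": "clinical_notes", "fields": {"claimant": "jane doe ", "policy_number": "P-1001"}},
    {"name": "adjuster_report", "fields": {"claimant": "Jane Doe", "policy_number": "P-1010"}},
]
conflicts = find_identifier_conflicts(packet)
print(conflicts)
```

Here the claimant names agree after normalization, while the adjuster report carries a different policy number and is surfaced along with the documents it disagrees with.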

Document intelligence for insurance claims

Insurance claims operations run on documentation: itemized bills, clinical notes, adjuster reports, policy records, and supporting evidence that arrives in dozens of formats. Small inconsistencies in any of these documents can determine whether a claim moves straight through to payment or falls into an exception queue that takes weeks to resolve.

Nearly 15% of all claims submitted to payers for reimbursement are initially denied, and 77% of those denials stem from paperwork or plan design rather than medical judgment. Administrative issues account for 18% of in-network claim denials.

Estimates show that hospitals and health systems spend $19.7 billion annually on fighting denied claims, at an average cost of $47.77 per claim. More than half of those denials (51.7%) are eventually overturned and paid, meaning billions go toward resolving claims that should have been approved in the first place.

Document intelligence addresses this by catching problems before they trigger denials.

Claim packet assembly and completeness validation

Each line of business carries its own documentation requirements. A workers’ compensation claim needs different evidence than a health insurance claim; an inpatient stay requires a discharge summary that an outpatient procedure wouldn’t.

Document intelligence models these requirements as a library of expected and conditional documents.

When a claim arrives, the system classifies each document, attaches it to the correct record, and evaluates the packet against completion rules. If an inpatient claim is missing a discharge summary, it flags immediately rather than waiting for a reviewer to notice.

This produces a packet-level completeness score. High-completeness claims flow straight into adjudication. Low-completeness claims route to intake review with specific prompts: “missing wage statement” or “adjuster note not attached.”
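The rule library and completeness score can be sketched as follows. The document type names, the two lines of business, and the way the score is computed (share of expected documents present) are illustrative assumptions; real rule sets are far larger and vary by carrier and jurisdiction.

```python
# Hypothetical rule library: documents always expected per line of business.
REQUIRED = {
    "health": {"itemized_bill", "clinical_notes"},
    "workers_comp": {"wage_statement", "adjuster_note"},
}
# Conditional rules: (predicate on the claim, document required when it holds).
CONDITIONAL = [
    (lambda claim: claim.get("setting") == "inpatient", "discharge_summary"),
]

def evaluate_completeness(claim, present_docs):
    """Return (score between 0 and 1, review prompts for missing documents)."""
    expected = set(REQUIRED[claim["line_of_business"]])
    expected |= {doc for applies, doc in CONDITIONAL if applies(claim)}
    missing = sorted(expected - set(present_docs))
    score = 1 - len(missing) / len(expected) if expected else 1.0
    prompts = [f"missing {doc.replace('_', ' ')}" for doc in missing]
    return score, prompts

claim = {"line_of_business": "health", "setting": "inpatient"}
score, prompts = evaluate_completeness(claim, {"itemized_bill", "clinical_notes"})
print(round(score, 2), prompts)
```

An inpatient health claim without a discharge summary scores two out of three expected documents and produces a specific prompt for intake review.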

Since 45% of providers cite missing or inaccurate data as their top cause of denials, upfront completeness checks are among the highest-leverage interventions available.

Data lineage and versioning for audit trails

Regulators expect every decision to be reproducible. Data lineage tracks three things:

- Origin: Where did the value come from?

- Transformation: How was the data cleaned or mapped?

- Human intervention: Who approved an override and why?

This makes outcomes auditable. When a reviewer modifies an extracted field, the system logs the original value, the correction, the rationale, and the reviewer ID.

Banks that have adopted modern data lineage tools report 57% faster audit preparation and roughly 40% gains in engineering productivity.

Extraction accuracy and claims adjudication alignment

Even minor extraction errors can alter outcomes. For example, a misread service date can shift a claim outside the coverage window or trigger the wrong prior-authorization rule.

Up to 49% of claims are affected by routine coding and documentation issues. Document intelligence reduces this by cross-checking extracted values against other evidence in the packet:

- Service dates validated across clinical notes and billing records

- Billed amounts reconciled against itemized line items

- Procedure codes checked against supporting clinical documentation

When values don’t match, the system routes the claim for review with a clear explanation rather than allowing it to proceed to adjudication, where it’s more likely to be denied.
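The three cross-checks listed above might look like this in simplified form. The dictionary layout and field names are assumptions for the sketch; amounts use `Decimal` so that totals are compared exactly and not as floating-point values.

```python
from decimal import Decimal

def cross_check_claim(billing, clinical_note):
    """Return human-readable explanations for every inconsistency found."""
    issues = []
    if billing["service_date"] != clinical_note["service_date"]:
        issues.append(
            f"service date mismatch: billing {billing['service_date']} "
            f"vs clinical note {clinical_note['service_date']}"
        )
    line_total = sum((Decimal(a) for a in billing["line_items"]), Decimal("0"))
    if line_total != Decimal(billing["billed_amount"]):
        issues.append(
            f"billed amount {billing['billed_amount']} does not equal line-item total {line_total}"
        )
    unsupported = sorted(set(billing["procedure_codes"]) - set(clinical_note["documented_procedures"]))
    if unsupported:
        issues.append(f"procedure codes lack clinical support: {', '.join(unsupported)}")
    return issues

billing = {
    "service_date": "2023-03-14",
    "billed_amount": "210.50",
    "line_items": ["120.00", "80.50"],
    "procedure_codes": ["99213", "93000"],
}
clinical_note = {"service_date": "2023-03-15", "documented_procedures": ["99213"]}
issues = cross_check_claim(billing, clinical_note)
for issue in issues:
    print(issue)
```

An empty list means the claim can proceed; any entry becomes the explanation attached to the review task.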

As a result, claim files remain fully reproducible during audits, with complete version histories and transformation logs.

Document intelligence for underwriting

Underwriting depends on interpreting evidence (inspection reports, valuations, financial statements, etc.) and turning that evidence into consistent, defensible risk assessments. Unlike claims, underwriting is not about adjudicating a past event, but about predicting future loss. That prediction is only as reliable as the documentation behind it.

Manual document review remains a bottleneck. Industry data shows that underwriters spend up to 40% of their time on administrative tasks, including gathering and verifying supporting documents. For commercial lines, turnaround times for standard policies have dropped from 3-5 days to as little as 12.4 minutes with AI-assisted processing, provided extraction and validation are tightly integrated.

Extracting structured insights from supporting evidence

Underwriters rely on diverse documents, such as loss histories or property photographs, that were never designed for automated processing. Underwriting document automation transforms these materials into structured inputs by classifying each document, extracting key attributes, and validating them against business rules. For example, inspection reports are parsed for building characteristics, and financial statements yield revenue, debt, and liquidity indicators.

Building reliable documentation chains

Underwriting files must show how a conclusion was reached. Document intelligence links extracted values to their source pages, maintains version histories, and records reviewer adjustments. During peer review or audit, underwriters can replay the file to see which evidence supported a pricing decision and why conflicting information was resolved a certain way.

Reducing decision variability across underwriters

Variation between underwriters is a well-known source of pricing inconsistency. By standardizing document classification, completeness checks, and extraction logic, document intelligence ensures that every submission enters review with the same normalized evidence set.

Document intelligence for banking onboarding and KYC automation

In the 2025 Fenergo global survey, 70% of institutions lost clients in the past year due to inefficient onboarding, with abandonment rates averaging around 10%.

Corporate client onboarding is particularly slow: full KYC reviews take an average of 95 days. Document intelligence helps reduce this drop-off by standardizing how evidence is captured, checked, and reconciled across the KYC process.

Structured validation of ID documents and supporting materials

Document intelligence classifies each document, extracts regulated fields, and validates them against jurisdiction-specific rules:

- ID expiration dates

- MRZ consistency

- Issuer authenticity

- Address-issuer validity

- Income-document coherence

- Ownership declarations that must match corporate registries or supporting evidence

These checks reveal issues that basic extraction misses: outdated IDs, incomplete address proofs, or income statements that contradict declared information.
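Two of these checks, MRZ consistency and ID expiration, are simple enough to sketch. The check-digit routine follows the scheme used in machine-readable travel documents (repeating weights 7, 3, 1, modulo 10); the `validate_id` wrapper, its parameters, and the reading of two-digit years as 20YY are assumptions for illustration.

```python
from datetime import date

def mrz_char_value(ch: str) -> int:
    """Map an MRZ character to its numeric value (0-9, A=10 ... Z=35, '<'=0)."""
    if "0" <= ch <= "9":
        return int(ch)
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10
    if ch == "<":
        return 0
    raise ValueError(f"invalid MRZ character: {ch!r}")

def mrz_check_digit(data: str) -> int:
    """Weighted sum with repeating weights 7, 3, 1, taken modulo 10."""
    weights = (7, 3, 1)
    return sum(mrz_char_value(c) * weights[i % 3] for i, c in enumerate(data)) % 10

def validate_id(doc_number, number_check, expiry_yymmdd, expiry_check, today):
    """Hypothetical wrapper: returns a list of issues, empty when the ID passes."""
    issues = []
    if mrz_check_digit(doc_number) != number_check:
        issues.append("document number fails MRZ check digit")
    if mrz_check_digit(expiry_yymmdd) != expiry_check:
        issues.append("expiry date fails MRZ check digit")
    # Assumption: two-digit years are read as 20YY.
    expiry = date(2000 + int(expiry_yymmdd[:2]), int(expiry_yymmdd[2:4]), int(expiry_yymmdd[4:6]))
    if expiry < today:
        issues.append("ID expired")
    return issues

print(validate_id("L898902C3", 6, "120415", 9, today=date(2025, 1, 1)))
```

The sample values pass both check digits, so the only issue raised is the expired date. Issuer authenticity and registry matching depend on external data sources and are outside this sketch.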

Mismatch detection and risk signaling

Extracted fields from IDs, proofs of address, income statements, and declarations are cross-checked against each other and against external records. When values diverge (name variations, addresses that don’t match public records, ownership that conflicts with company filings), the system raises a structured alert and routes the case for additional review.
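A structured alert for name divergence could be produced along these lines. The sketch uses `difflib.SequenceMatcher` from the Python standard library as a simple stand-in for whatever matching logic a production system applies; the 0.9 cutoff, the alert fields, and the source labels are hypothetical.

```python
from difflib import SequenceMatcher

VARIATION_THRESHOLD = 0.9  # hypothetical cutoff; tune against reviewed cases

def normalize_name(name: str) -> str:
    """Lower-case, drop periods and commas, and collapse whitespace."""
    return " ".join(name.lower().replace(".", " ").replace(",", " ").split())

def compare_field(field_name, source_a, value_a, source_b, value_b):
    """Return None when values agree, otherwise a structured alert."""
    a, b = normalize_name(value_a), normalize_name(value_b)
    if a == b:
        return None
    similarity = SequenceMatcher(None, a, b).ratio()
    severity = "variation" if similarity >= VARIATION_THRESHOLD else "mismatch"
    return {
        "field": field_name,
        "severity": severity,
        "similarity": round(similarity, 3),
        "values": {source_a: value_a, source_b: value_b},
        "action": "route_to_review",
    }

alert = compare_field("name", "id_card", "Jonathan Smith", "income_statement", "Jonathon Smith")
print(alert)
```

Separating "variation" from "mismatch" lets reviewers prioritize: a one-letter spelling difference is a different risk signal from two unrelated names.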

Audit-ready onboarding trails

What makes onboarding audits difficult is showing exactly which version was used when a decision was made, how discrepancies were resolved, and why a reviewer cleared a risk flag.

Document intelligence creates an audit-ready onboarding record by preserving every document and decision in a reproducible, time-indexed chain. Each upload becomes an immutable version with provenance metadata; extracted fields are stored alongside the model version and validation rules used to generate them; and every reviewer action is time-stamped and linked to an individual.
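One common way to make such a chain tamper-evident is to hash each entry together with the hash of the previous one. The sketch below illustrates that idea with Python's standard `hashlib` and `json` modules; the event fields (`model_version`, `ruleset`, `actor`) are assumed names, and an in-memory list stands in for the write-once storage a real system would use.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_event(chain: list, event: dict) -> dict:
    """Add a time-stamped entry whose hash covers its content and its predecessor."""
    body = {
        "prev_hash": chain[-1]["hash"] if chain else GENESIS,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        **event,
    }
    entry = {**body, "hash": _digest(body)}
    chain.append(entry)
    return entry

def verify_chain(chain: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True

chain = []
upload = hashlib.sha256(b"passport scan bytes").hexdigest()
append_event(chain, {"type": "upload", "content_sha256": upload, "actor": "client-portal"})
append_event(chain, {"type": "extraction", "model_version": "v3.2", "ruleset": "kyc-2025-01", "actor": "system"})
append_event(chain, {"type": "flag_cleared", "reason": "address confirmed by utility bill", "actor": "reviewer-12"})
print(verify_chain(chain))
```

Storing the model version and rule set alongside each extraction event is what allows an auditor to see which logic produced a value at the time of the decision.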

Document intelligence for invoice automation in manufacturing

Manufacturing invoicing looks structured on paper, but in practice, formats vary by vendor, plant, and region. Quantity and price discrepancies, missing receipts, and incorrect cost centers all create exceptions that finance and plant teams must resolve manually.

Across industries, accounts payable teams still spend about $9–10 to process a single invoice, with cycle times averaging 9.2 days and invoice exception rates around 22%.

Line-item validation and structured reconciliation

Document intelligence normalizes vendor-specific invoice layouts into a consistent schema, then applies PO-based and three-way matching rules at the line level.

Quantities, unit prices, tax amounts, and freight charges are checked against purchase orders and goods receipts within defined tolerances. This catches issues such as overbilled units, duplicate freight, or misapplied discounts before the invoice is posted.
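A line-level three-way match with tolerances can be sketched as below. The tolerance values and dictionary keys are illustrative assumptions; in practice tolerances are set per vendor, commodity, or plant, and tax and freight get their own rules.

```python
from decimal import Decimal

QTY_TOLERANCE = Decimal("0")           # hypothetical: no over-billing of units allowed
PRICE_TOLERANCE_PCT = Decimal("0.02")  # hypothetical: 2% of the PO unit price

def three_way_match(invoice_line, po_line, receipt_line):
    """Compare one invoice line with its PO line and goods receipt."""
    issues = []
    if invoice_line["qty"] - receipt_line["qty_received"] > QTY_TOLERANCE:
        issues.append("billed quantity exceeds quantity received")
    if invoice_line["qty"] - po_line["qty"] > QTY_TOLERANCE:
        issues.append("billed quantity exceeds quantity ordered")
    price_diff = abs(invoice_line["unit_price"] - po_line["unit_price"])
    if price_diff > po_line["unit_price"] * PRICE_TOLERANCE_PCT:
        issues.append("unit price outside tolerance")
    return issues

po_line = {"qty": Decimal("100"), "unit_price": Decimal("10.00")}
receipt_line = {"qty_received": Decimal("100")}
invoice_line = {"qty": Decimal("105"), "unit_price": Decimal("10.30")}
print(three_way_match(invoice_line, po_line, receipt_line))
```

Only lines that return an empty list would post without review; the rest carry a reason code that the exception handler can act on.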

Cross-system lineage for financial accuracy

Each line item that passes validation ultimately feeds ERP, AP, inventory, and forecasting systems. Document intelligence maintains lineage from invoice line to PO line, receipt, and GL posting, so controllers and auditors can see precisely how a billed amount flowed into COGS, accruals, or capital projects.

Discrepancy tracking and exception clustering

Not all issues are one-off errors. Some vendors systematically overinvoice freight, certain plants may miscode cost centers, and specific product lines may have recurring mismatches between shipping documents and invoices.

By aggregating and clustering exceptions, document intelligence highlights these patterns: which vendors generate the most mismatches, and which plants or buyers approve out-of-tolerance invoices.
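As a simple stand-in for clustering, the sketch below groups exceptions by vendor and exception type and keeps only the combinations that recur. The record fields, the vendor names, and the minimum count of three are assumptions; a fuller implementation might also group by plant, buyer, or product line.

```python
from collections import Counter

def recurring_patterns(exceptions, min_count=3):
    """Count exceptions per (vendor, type) and return those seen at least min_count times."""
    counts = Counter((e["vendor"], e["type"]) for e in exceptions)
    return [(key, n) for key, n in counts.most_common() if n >= min_count]

exceptions = [
    {"vendor": "Acme Freight", "type": "freight_overbilled", "plant": "P1"},
    {"vendor": "Acme Freight", "type": "freight_overbilled", "plant": "P2"},
    {"vendor": "Acme Freight", "type": "freight_overbilled", "plant": "P1"},
    {"vendor": "Bolt Supply", "type": "price_mismatch", "plant": "P1"},
    {"vendor": "Acme Freight", "type": "price_mismatch", "plant": "P3"},
]
patterns = recurring_patterns(exceptions)
print(patterns)
```

Isolated errors fall below the threshold, while the repeated freight overbilling from one vendor stands out as a pattern worth raising with procurement.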

Document intelligence benchmarks: accuracy and efficiency gains by workflow

The table below summarizes typical performance bands used in enterprise evaluations of document intelligence programs.

| Workflow | Extraction accuracy | Efficiency impact | Cycle-time improvement |
|---|---|---|---|
| Insurance claims | 95-99% (vendor-reported; varies by document type) | STP rates for low-complexity claims: 30-40% achievable, up to 95% potential for P&C | 25-40% faster for simple claims |
| Banking onboarding | 92-97% (document-dependent) | 40%+ of onboarding time consumed by KYC/account opening | Baseline: 49 days average (commercial); improvement varies by maturity |
| Manufacturing invoicing | Measured by exception rate and touchless processing | Touchless rate: 23.4% average → 49.2% Best-in-Class; Exception rate: 22% → 9% | 17.4 → 3.1 days (82% faster for Best-in-Class) |

Architectural requirements for audit-ready document intelligence

Architecture determines whether extracted data holds up under regulatory scrutiny months or years after a decision. Three layers matter most.

Controlled ingestion and extraction

Documents enter through validated channels and are checked for format and integrity; the system rejects or flags inputs that fail these prerequisites.

At the extraction layer, every transformation is logged and versioned. Each field ties back to a source page, extraction logic, model version, and timestamp. Reprocessing the same document under the same configuration must yield identical results.

Governance and lineage

The governance layer maintains end-to-end traceability from source document to decision input, records reviewer actions and overrides, and enforces segregation of duties. Overrides require justification, approval, and permanent audit trails.

Ongoing accuracy monitoring

Document formats change, vendors update templates, and rules evolve. Mature programs track discrepancy rates on high-impact fields (amounts, dates, identifiers) rather than headline accuracy alone. A rise in discrepancies signals degradation before overall metrics show it. Override patterns, such as frequent fixes to the same fields or document types, identify gaps in extraction logic. Model updates undergo formal retraining cycles, are tested on validation sets, and are versioned for auditability.
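A minimal sketch of this kind of monitoring compares per-field discrepancy rates from a recent review sample against a baseline. The field names, the shape of the review records, and the two-percentage-point alert margin are assumptions for illustration.

```python
HIGH_IMPACT_FIELDS = ("amount", "service_date", "policy_id")  # hypothetical field names

def discrepancy_rates(reviews):
    """reviews: list of {field: (extracted_value, verified_value)} from sampled audits."""
    rates = {}
    for field in HIGH_IMPACT_FIELDS:
        pairs = [r[field] for r in reviews if field in r]
        if pairs:
            rates[field] = sum(1 for extracted, verified in pairs if extracted != verified) / len(pairs)
    return rates

def degraded_fields(baseline, current, max_increase=0.02):
    """Fields whose discrepancy rate rose by more than max_increase over the baseline."""
    return sorted(f for f, rate in current.items() if rate - baseline.get(f, 0.0) > max_increase)

baseline = {"amount": 0.01, "service_date": 0.0}
this_week = [
    {"amount": ("100.00", "100.00"), "service_date": ("2024-05-01", "2024-05-01")},
    {"amount": ("250.00", "205.00"), "service_date": ("2024-05-02", "2024-05-02")},
    {"amount": ("75.00", "75.00"), "service_date": ("2024-05-03", "2024-05-03")},
    {"amount": ("60.00", "60.00"), "service_date": ("2024-05-04", "2024-05-04")},
]
current = discrepancy_rates(this_week)
print(degraded_fields(baseline, current))
```

Because the comparison is per field, a jump in amount errors raises an alert even when overall accuracy across all fields barely moves.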

Conclusion: Document intelligence as a compliance multiplier

In regulated industries, document processing is a foundation for defensible decision-making. Accuracy, completeness, and traceability now determine whether claims are paid correctly, risks are priced consistently, clients are onboarded compliantly, and invoices are approved without downstream disputes.

Document intelligence reframes automation around these requirements. By combining field-level accuracy metrics, document lineage, and embedded governance controls, organizations can shorten cycle times in document workflows while limiting downstream rework.

As regulatory scrutiny increases, this compliance-first approach turns document processing from a source of risk into a measurable, scalable advantage across claims, underwriting, onboarding, and invoicing.