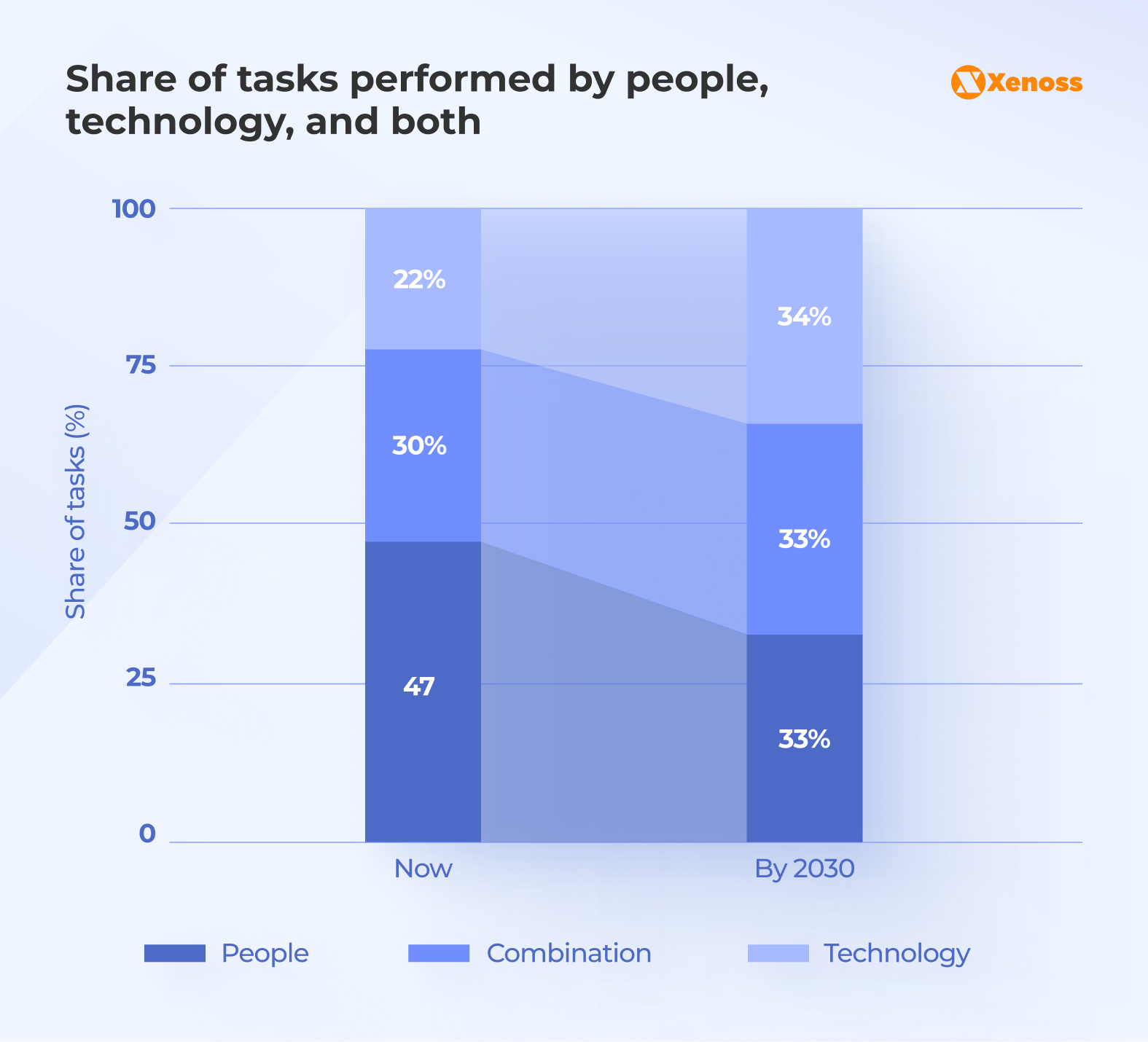

By 2030, the share of tasks performed by people, by technology, and by a combination of both is projected to be roughly equal. This shift doesn’t reduce the amount of work people do. Instead, it concentrates human effort on tasks that require judgment, creativity, analytical thinking, and communication.

That’s precisely the aim of hyperautomation. It combines multiple automation tools and AI systems into a unified operational engine that maximizes efficiency and enables end-to-end operational management.

This article gets into what hyperautomation entails, how to identify high-impact use cases, the technology stack required for success, and practical examples of hyperautomation implementation across the manufacturing, insurance, and banking industries.

Why implement hyperautomation in operations

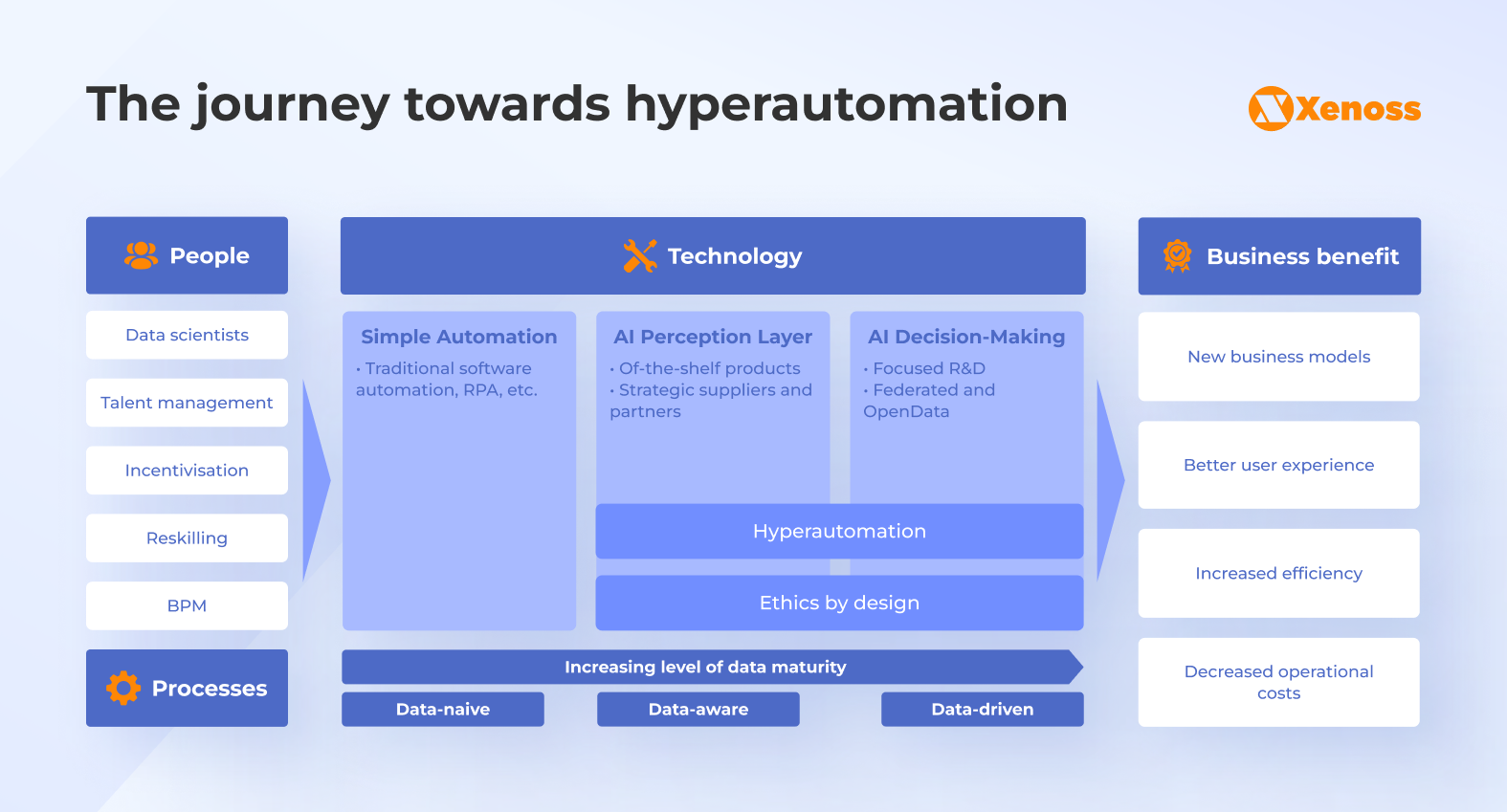

Traditional automation using RPA and BPM tools isn’t new to businesses. These tools offered operational relief for a time, but they address isolated tasks rather than transforming end-to-end business processes. They’re helpful if a company is still at the data-naive maturity level (i.e., reactive decision-making based on siloed data), as illustrated below.

Shifting towards hyperautomation means becoming a data-driven business. You not only know where your data is stored and who uses it (the data-aware maturity level) but can also use these datasets to your advantage. In other words, hyperautomation helps you turn data into revenue, decrease operational expenses, and gain a competitive advantage. But the dependency runs both ways: without a well-organized data infrastructure, hyperautomation won’t work, and without hyperautomation, your data won’t deliver its expected value.

As operations become more complex, companies increasingly use multiple tools simultaneously to reduce costs, effort, and risks. But this fragmented use of technology only adds to already overgrown operational complexity.

Modern companies deal with almost 50 endpoints in their automation processes, spanning legacy enterprise systems, RPA tools, AI chatbots, and APIs used in isolation. That’s why 85% of businesses say they need end-to-end hyperautomation solutions to orchestrate automation and achieve visible operational improvements.

Hyperautomation solutions amplify and organize existing automation technologies to reduce operational and tool management complexity.

Power of process mining: Uncovering hidden automation opportunities

Process mining is essential at the hyperautomation discovery phase. By analyzing event logs from your existing IT systems (e.g., ERPs, CRMs, and BPM systems), process mining creates a dynamic, visual representation of your business processes. It provides an objective, data-backed view of which processes are the most time-consuming, error-prone, or costly, highlighting them as candidates for automation.
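To make the idea concrete, here is a minimal Python sketch of one core process-mining calculation: measuring how long cases wait between consecutive activities. It assumes a hypothetical event log with three fields (case ID, activity name, timestamp); commercial process mining tools go much further, for example by discovering full process maps and variants.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical event log rows: (case_id, activity, ISO timestamp).
# A real log would be exported from an ERP, CRM, or BPM system.
EVENT_LOG = [
    ("INV-1", "Receive invoice", "2024-03-01T09:00"),
    ("INV-1", "Validate invoice", "2024-03-01T09:30"),
    ("INV-1", "Approve payment", "2024-03-03T10:00"),
    ("INV-2", "Receive invoice", "2024-03-01T11:00"),
    ("INV-2", "Validate invoice", "2024-03-01T13:00"),
    ("INV-2", "Approve payment", "2024-03-02T13:00"),
]


def transition_durations(log):
    """Return the mean hours spent on each activity-to-activity transition."""
    cases = defaultdict(list)
    for case_id, activity, ts in log:
        cases[case_id].append((datetime.fromisoformat(ts), activity))

    gaps = defaultdict(list)
    for events in cases.values():
        events.sort()  # order each case's events chronologically
        for (t1, a1), (t2, a2) in zip(events, events[1:]):
            gaps[(a1, a2)].append((t2 - t1).total_seconds() / 3600)

    return {transition: mean(hours) for transition, hours in gaps.items()}


if __name__ == "__main__":
    stats = transition_durations(EVENT_LOG)
    # Print the slowest transitions first: these are automation candidates.
    for (src, dst), hours in sorted(stats.items(), key=lambda kv: -kv[1]):
        print(f"{src} -> {dst}: {hours:.2f} h on average")
```

On this sample data, the validation-to-approval step averages about 36 hours versus about 1 hour for receipt-to-validation, which is the kind of signal that marks approval as a bottleneck worth automating.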

Where to start for maximum operational value

A simple and effective prioritization framework assesses the opportunities that process mining uncovers along two key axes: potential business impact and implementation complexity.

- High-value, low-complexity. These are the “quick wins.” They often involve rule-based, high-volume, and repetitive tasks that can be addressed with technologies like RPA. Examples include invoice processing or employee onboarding paperwork. Quick wins aren’t limited to RPA, though: hyperautomation can also deliver fast results when you need to extract insights from large-scale business processes.

- High-value, high-complexity. These are transformative projects that are well-suited to hyperautomation. They need a larger investment but offer the greatest long-term ROI. Examples include end-to-end supply chain optimization and dynamic fraud detection.

- Low-value, low-complexity. These can be automated, but should be lower on the priority list.

- Low-value, high-complexity. These should generally be avoided, as the effort outweighs the potential reward.
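The four quadrants above can be expressed as a small scoring rule. This is a minimal sketch, assuming each opportunity already has hypothetical 1-10 scores for value and complexity and that a score above 5 counts as "high"; in practice, the scores would come from process-mining metrics and effort estimates.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    value: int       # hypothetical 1-10 business-impact score
    complexity: int  # hypothetical 1-10 implementation-effort score


def quadrant(opp: Opportunity, threshold: int = 5) -> str:
    """Map an opportunity to one of the four quadrants (threshold is an assumption)."""
    high_value = opp.value > threshold
    high_complexity = opp.complexity > threshold
    if high_value and not high_complexity:
        return "quick win"
    if high_value and high_complexity:
        return "transformative"
    if not high_value and not high_complexity:
        return "low priority"
    return "avoid"


# Quick wins first, then transformative projects, as recommended in the text.
PRIORITY = {"quick win": 0, "transformative": 1, "low priority": 2, "avoid": 3}


def prioritize(opps):
    """Sort by quadrant rank, then by descending value within a quadrant."""
    return sorted(opps, key=lambda o: (PRIORITY[quadrant(o)], -o.value))


backlog = [
    Opportunity("Legacy report migration", value=3, complexity=8),
    Opportunity("Supply chain optimization", value=9, complexity=9),
    Opportunity("Meeting-room booking", value=2, complexity=2),
    Opportunity("Invoice processing", value=8, complexity=3),
]

if __name__ == "__main__":
    for opp in prioritize(backlog):
        print(f"{opp.name}: {quadrant(opp)}")
```

A fixed threshold keeps the rule transparent for business stakeholders; teams that need finer ranking could replace it with a weighted value-to-effort ratio.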

Focus first on high-impact, low-complexity tasks and processes, as they deliver the quickest ROI. Then proceed to high-impact and high-complexity tasks to scale hyperautomation initiatives and sustain long-term business value.

Hyperautomation technology stack

Each hyperautomation technology plays a distinct role in creating a cohesive, intelligent system. Understanding these roles will help you develop a custom hyperautomation architecture that fits into your IT infrastructure.

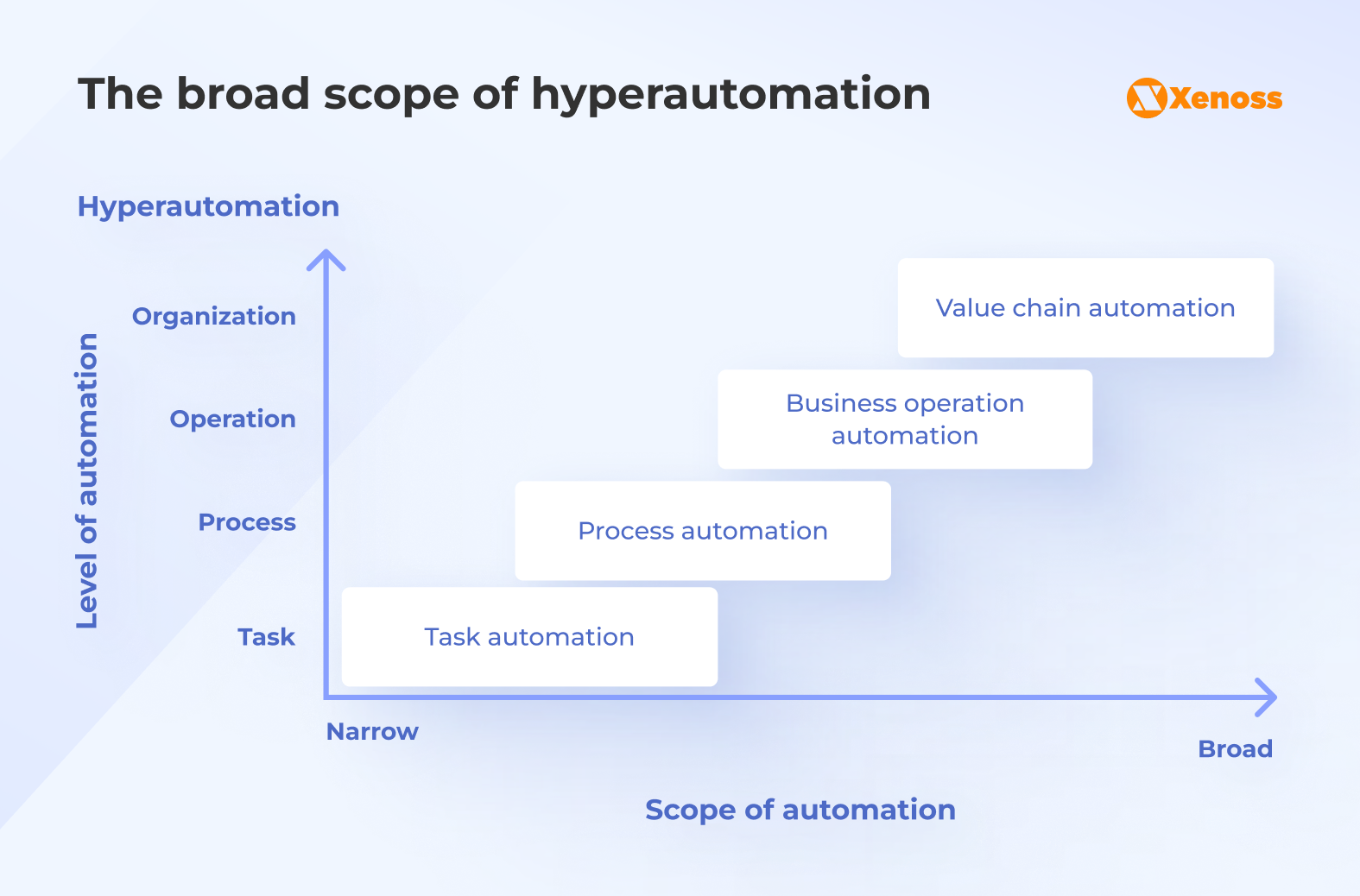

According to Gartner, a hyperautomation tech stack is defined by the scope and level of automation. It encompasses the whole spectrum: from automating simple tasks to automating entire value chains.

Task and process automation: RPA and workflow automation

RPA is ideal for automating repetitive, rule-based tasks by mimicking human interaction with digital systems. RPA bots can log into applications, copy-paste data, fill in forms, and extract information from documents.

Workflow automation, often managed through BPM platforms, goes a step further by orchestrating entire sequences of tasks that may involve multiple systems and human decision points.
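As an illustration of the difference, the following minimal sketch chains automated steps into a workflow that includes a human decision point. The invoice steps, the `APPROVAL_LIMIT` value, and the status names are all hypothetical; a BPM platform would add persistence, queues, and audit trails on top of this basic pattern.

```python
from __future__ import annotations

from typing import Callable

# Each step takes the case data and returns an updated copy.
Step = Callable[[dict], dict]

APPROVAL_LIMIT = 10_000  # hypothetical policy: larger invoices need human sign-off


def extract(case: dict) -> dict:
    # Stand-in for an RPA bot or document-processing service reading the invoice.
    return {**case, "amount": case["raw_amount"]}


def route(case: dict) -> dict:
    # Human decision point: large invoices go to a reviewer queue.
    needs_human = case["amount"] > APPROVAL_LIMIT
    return {**case, "status": "pending_review" if needs_human else "approved"}


def post_to_erp(case: dict) -> dict:
    # Only approved cases are posted; the rest wait for a person.
    return {**case, "posted": case["status"] == "approved"}


def run_workflow(case: dict, steps: list[Step]) -> dict:
    """Run the steps in order, passing each one's output to the next."""
    for step in steps:
        case = step(case)
    return case


INVOICE_FLOW = [extract, route, post_to_erp]

if __name__ == "__main__":
    print(run_workflow({"id": 1, "raw_amount": 500}, INVOICE_FLOW))
    print(run_workflow({"id": 2, "raw_amount": 25_000}, INVOICE_FLOW))
```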

Business operation and value chain automation: AI, ML, and agentic AI

This layer provides the “brains” of the hyperautomation stack, elevating it from simple task execution to intelligent decision-making across business operations and value chains. AI and ML algorithms analyze historical data to identify patterns, make predictions, and adapt processes over time. They bring human-like capabilities to automation, including:

- Natural language processing (NLP). Understanding and interpreting human language from emails, support tickets, and chat logs.

- Computer vision. Analyzing images and videos for tasks like quality control inspection in manufacturing or damage assessment from photos in insurance claims.

- Intelligent document processing (IDP). Moving beyond simple OCR to extract, classify, validate, analyze, and make recommendations based on unstructured data from complex documents like invoices, contracts, and medical records.

- AI agents and multi-agent solutions. These extend RPA by combining task execution with reasoning. AI agents can interpret instructions, decide which action to take next, verify outputs, escalate edge cases, and collaborate with other agents. A multi-agent system, in turn, is a whole “team” of agents responsible for executing diverse tasks. These systems have an orchestrator as their “manager,” which monitors the agents’ performance and reports back to humans.
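The orchestrator pattern described in the last item can be sketched in a few lines. This is a minimal illustration, not any vendor's agent framework: the toy agents, the self-reported confidence score, and the 0.8 escalation threshold are assumptions. It shows routing tasks to specialized agents, verifying results, escalating edge cases to a human, and keeping a report for people to review.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentResult:
    output: str
    confidence: float  # hypothetical self-reported score between 0.0 and 1.0


@dataclass
class Orchestrator:
    """Routes tasks to specialized agents, verifies results, escalates edge cases."""
    agents: dict[str, Callable[[str], AgentResult]]
    min_confidence: float = 0.8  # assumed escalation threshold
    report: list[tuple[str, str]] = field(default_factory=list)  # log for humans

    def handle(self, task_type: str, payload: str) -> str:
        agent = self.agents.get(task_type)
        if agent is None:
            return self._escalate(task_type, payload, "no agent for task")
        result = agent(payload)
        if result.confidence < self.min_confidence:  # verify before accepting
            return self._escalate(task_type, payload, "low confidence")
        self.report.append((task_type, "done"))
        return result.output

    def _escalate(self, task_type: str, payload: str, reason: str) -> str:
        self.report.append((task_type, f"escalated: {reason}"))
        return f"HUMAN_REVIEW({payload})"


# Toy agents standing in for LLM-backed or ML-backed services.
def classify_email(text: str) -> AgentResult:
    if "refund" in text.lower():
        return AgentResult("complaint", 0.95)
    return AgentResult("inquiry", 0.6)  # unsure: no clear signal in the text


def summarize(text: str) -> AgentResult:
    return AgentResult(text[:40], 0.9)


if __name__ == "__main__":
    hub = Orchestrator({"classify": classify_email, "summarize": summarize})
    print(hub.handle("classify", "Please process my refund"))  # complaint
    print(hub.handle("classify", "Hello there"))  # HUMAN_REVIEW(Hello there)
    print(hub.handle("translate", "Hola"))  # HUMAN_REVIEW(Hola)
    print(hub.report)
```

Keeping verification and escalation in the orchestrator, rather than in each agent, gives one place to enforce governance rules across the whole agent team.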

Connecting the dots: Data fabric, integration platforms as a service (iPaaS), and data analytics

For hyperautomation to work, data and systems must be connected. A modern data fabric architecture provides a unified data management layer, ensuring that all automation tools have consistent, secure, and governed access to the right data at the right time, regardless of where it resides.

iPaaS providers (e.g., Zapier, SnapLogic, Informatica) offer pre-built connectors and APIs that seamlessly connect disparate cloud and on-premises applications, breaking down critical data silos. In the hyperautomation context, these platforms ensure that RPA bots and AI agents or models can exchange data with each other and with enterprise systems.

iPaaS providers also often offer low-code/no-code tools to build customized drag-and-drop applications that enable business users and operations specialists to monitor hyperautomation workflows.

These applications include data analytics modules with dashboards that track operational KPIs and feed insights back into enterprise software for workflow optimization, or into AI/ML models for continuous improvement.

Emerging operational enablers: Internet of Things (IoT) and digital twins

In many industries, IoT and digital twin technologies are pushing the envelope in advanced hyperautomation. IoT provides a stream of real-time data from sensors installed on machinery, vehicles, and in facilities. Sensor data triggers automated workflows, such as scheduling predictive maintenance when a machine shows signs of wear.
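A sensor-triggered workflow like the predictive maintenance example can be sketched as follows. The vibration limit, the five-reading window, and the ticket text are hypothetical; averaging over a rolling window is one simple way to avoid firing on a single noisy spike, while real systems often use trained anomaly-detection models instead.

```python
from collections import deque
from statistics import mean

VIBRATION_LIMIT = 7.1  # assumed alert threshold in mm/s; tune per machine
WINDOW = 5  # number of most recent readings that are averaged


class MaintenanceTrigger:
    """Raises one maintenance request when the rolling mean of sensor
    readings exceeds a limit, so a single noisy spike does not fire it."""

    def __init__(self, machine_id: str, limit: float = VIBRATION_LIMIT, window: int = WINDOW):
        self.machine_id = machine_id
        self.limit = limit
        self.readings: deque = deque(maxlen=window)
        self.tickets: list = []

    def on_reading(self, value: float) -> bool:
        self.readings.append(value)  # the oldest reading drops out automatically
        window_full = len(self.readings) == self.readings.maxlen
        if window_full and not self.tickets and mean(self.readings) > self.limit:
            # Placeholder: a real system would call a maintenance or ERP API here.
            self.tickets.append(f"Schedule maintenance for {self.machine_id}")
        return bool(self.tickets)


if __name__ == "__main__":
    trigger = MaintenanceTrigger("press-7")
    for reading in [2.0, 2.1, 9.0, 2.0, 2.2, 8.0, 8.5, 9.0, 8.8]:
        trigger.on_reading(reading)
    print(trigger.tickets)
```

In this sample, the isolated 9.0 spike does not trigger anything; only the sustained rise at the end pushes the rolling mean above the limit.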

A digital twin, a virtual model of a physical process or asset, uses IoT data to simulate operations. This allows businesses to test process changes, predict outcomes, and optimize performance in a risk-free virtual environment before implementing changes in the real world.
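Real digital twins are detailed simulation models, but the "test before you change" idea can be shown with a deliberately tiny one. This sketch assumes a hypothetical serial production line whose station rates (units per hour) have been calibrated from IoT data, and uses the simple steady-state rule that a serial line produces no faster than its slowest station.

```python
def line_throughput(rates: dict) -> float:
    """Steady-state output of a serial line: limited by the slowest station."""
    return min(rates.values())


def bottleneck(rates: dict) -> str:
    """Name of the station that currently limits the line."""
    return min(rates, key=rates.get)


def simulate_change(rates: dict, station: str, new_rate: float) -> tuple:
    """Compare current and what-if throughput without touching the real line."""
    what_if = {**rates, station: new_rate}  # copy, so the baseline stays untouched
    return line_throughput(rates), line_throughput(what_if)


# Hypothetical station rates in units/hour, e.g. calibrated from IoT counters.
LINE = {"cutting": 120, "welding": 80, "painting": 100}

if __name__ == "__main__":
    print(bottleneck(LINE))  # welding
    print(simulate_change(LINE, "welding", 110))  # (80, 100): painting now limits
    print(simulate_change(LINE, "painting", 150))  # (80, 80): no gain
```

Even this toy model answers a practical question: upgrading the painting station alone would not raise output, while speeding up welding would, but only until painting becomes the new bottleneck.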

IoT data and digital twins can provide extra context for AI/ML solutions and close the loop in automating processes across the physical and digital worlds.

Real-life operational workflows using hyperautomation technologies

Discover how companies in manufacturing, banking, and insurance tied together different automation technologies with the help of AI.

Siemens automated industrial operations with AI agents

Siemens shifted from using AI assistants that answer simple queries to autonomous AI agents that execute entire industrial workflows without human intervention. The company developed an entire Industrial Copilot hub comprising separate copilots that perform different automated tasks, including design, planning, engineering, operations, and services. Their agentic AI architecture includes a central orchestrator that manages these specialized copilots.

The agents continuously learn, improve, and communicate with each other and with enterprise systems, forming a sophisticated multi-agent network for automating industrial operations.

As to results and future objectives, Rainer Brehm, CEO Factory Automation at Siemens Digital Industries, says:

By automating automation itself, we envision productivity increases of up to 50% for our customers – fundamentally changing what’s possible in industrial operations.

Bank of Montreal (BMO) implements AI as a strategic asset

82% of banks are integrating AI with additional technologies, such as RPA, to maximize impact, and 84% are adopting autonomous agentic AI solutions. As one of the largest banks in North America, BMO belongs to both groups.

The bank combines RPA, agentic AI, and ML to foster collaboration between digital and human workers. Its first RPA initiatives to automate financial processes have generated millions of dollars in value, and AI is an even more promising area.

For instance, the company uses ML-powered optical character recognition (OCR) in combination with RPA to extract insights from scanned financial documents. Such a workflow helps BMO improve document processing accuracy with ML while also automating data entry with RPA.

To ensure efficient AI adoption and use within the organization, BMO has established an AI center of excellence (CoE). The AI CoE also helps the company manage risks and maintain a continuous supply of high-quality data.

To measure AI performance, BMO focuses on return on employee (ROE), aiming to enhance human work with AI rather than replace people. Here’s how Kristin Milchanowski, Chief AI and Data Officer at the BMO Financial Group, puts it:

We’re thinking about the ROI that every agent we deploy will generate and tying it to the balance sheet up front. We believe that scaling technology with value is how we are going to win at this.

Thanks to this strategic approach to AI, the BMO group has already been ranked #1 globally in AI talent development.

Allianz uses AI agents for claims processing

Allianz has implemented an agentic AI system to automate claims processing for food spoilage. The “Nemo” project employs seven task-specific AI agents that reduce processing time from days to hours. The agents handle the preparatory work, such as validating coverage and detecting fraud, while human employees make the final payout decision based on the data the agents have gathered.

The company launched “Nemo” in 100 days and aims to roll it out globally to achieve seamless agent-human collaboration and deliver better, fairer insurance services.

Maria Janssen, Chief Transformation Officer at Allianz Services, highlights the results:

With ‘Project Nemo’ as our first integrated agentic AI solution, we’re achieving an impressive 80% reduction in claim processing and settlement time. This does not only boost productivity in our claims departments but also significantly enhances insurance customer satisfaction.

Janssen added:

This initiative not only demonstrates the power of hyperautomation but also sets the foundation for integrating AI across Allianz’s operations—enhancing both efficiency and decision-making processes.

This solution has helped the company focus on more complex claims and deliver much better service to customers, especially in high-stress situations such as natural disasters.

Each of these companies prioritizes its employees and customers when implementing hyperautomation solutions. In essence, they ask: “How can we deliver better, quicker, and more efficient services to our customers while ensuring that our workers aren’t swamped with routine tasks?”

This question sets the tone for responsible adoption. It reflects a shift from automation for cost-cutting to automation for capability-building, where technology amplifies human expertise, strengthens customer experience, and supports sustainable operational growth. This is precisely the mindset that successful hyperautomation adoption requires.

Operational hyperautomation roadmap: From pilot to company-wide scale

A phased approach to implementing hyperautomation enables you to measure ROI and scale with value rather than accumulate technical and process debt in a rushed rollout.

Phase 1: Proof of concept (PoC)

Begin with a small, focused project designed to prove the value and feasibility of hyperautomation within your organization.

- Select the right use case. Choose a high-impact, low-complexity process identified through your prioritization framework. It should have clear, measurable outcomes, such as reducing processing time by X% or cutting error rates by Y%.

- Define success metrics. Establish clear KPIs (e.g., process cycle time, throughput, first-time-right (FTR) rate, customer satisfaction, net promoter score) before you start. This is crucial for evaluating the PoC and building a business case for further investment.

- Assemble a cross-functional team. Include representatives from operations, IT, and the specific business units. This way, you ensure alignment and incorporate subject matter expertise to fine-tune the automation processes.

- Execute and measure. Implement the automation solution on a small scale. Meticulously track the “before” and “after” metrics to quantify the impact. The goal is to create a clear success story.
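For the operational KPIs listed in this phase, the "before" and "after" comparison comes down to simple arithmetic. This minimal sketch assumes hypothetical case records with a cycle time and a rework flag, and computes average cycle time, daily throughput, and FTR rate (the share of cases completed correctly on the first pass); satisfaction metrics such as NPS would come from surveys instead.

```python
from dataclasses import dataclass


@dataclass
class Case:
    cycle_minutes: float
    reworked: bool  # True if the case needed correction after the first pass


def kpis(cases, working_days):
    """Average cycle time, throughput per day, and first-time-right rate."""
    n = len(cases)
    return {
        "avg_cycle_min": sum(c.cycle_minutes for c in cases) / n,
        "throughput_per_day": n / working_days,
        "ftr_rate": sum(not c.reworked for c in cases) / n,
    }


def compare(before, after):
    """Percent change per KPI relative to the baseline (negative = decrease)."""
    return {k: round(100 * (after[k] - before[k]) / before[k], 1) for k in before}


# Hypothetical pilot data: two working days before and after automation.
BEFORE = [Case(30, True), Case(26, False), Case(28, False), Case(28, False)]
AFTER = [Case(6, False) for _ in range(6)]

if __name__ == "__main__":
    print(compare(kpis(BEFORE, 2), kpis(AFTER, 2)))
```

Recording the baseline with the same function you later use for the pilot keeps the comparison consistent, which matters when the results feed a business case.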

Phase 2: Building a hyperautomation CoE

The next step is to establish the governance, standards, and expertise needed to scale effectively. This is the role of an automation CoE. The CoE is a team responsible for:

- Governance and best practices. Defining the standards for developing, deploying, and managing automations to ensure quality, security, and consistency.

- Technology and vendor management. Evaluating and selecting the right hyperautomation tools for the enterprise technology stack.

- Opportunity pipeline management. Creating a systematic process for identifying, evaluating, and prioritizing new automation opportunities from across the business.

- Training and enablement. Providing training and support across business units to foster adoption and use.

Phase 3: Scaling hyperautomation across operations

Once your CoE is established and you have a repeatable model for success, you can begin scaling your hyperautomation initiatives across departments and business processes.

- Federated development model. While the CoE provides central governance, you should encourage individual business units to develop their own automations within the established guidelines.

- Focus on end-to-end processes. Move from automating individual tasks to re-engineering entire value streams, such as the complete procure-to-pay or order-to-cash cycles.

- Invest in reusable components. Develop a library of reusable automation components (e.g., a bot for logging into SAP) that can be easily deployed in multiple workflows, accelerating future development.
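One lightweight way to build such a library is a shared registry of named components that workflows assemble by name. In this sketch, the component names (`sap.login`, `email.notify`) and their bodies are hypothetical placeholders; no real SAP or email calls are made.

```python
from typing import Callable, Dict

# Shared library of automation components, maintained under CoE guidelines.
COMPONENTS: Dict[str, Callable[[dict], dict]] = {}


def component(name: str):
    """Decorator that registers a reusable automation step under a stable name."""
    def register(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        if name in COMPONENTS:
            raise ValueError(f"component {name!r} already registered")
        COMPONENTS[name] = fn
        return fn
    return register


@component("sap.login")
def sap_login(ctx: dict) -> dict:
    # Placeholder: a real component would wrap an RPA bot or an SAP API session.
    return {**ctx, "sap_session": f"session-for-{ctx['user']}"}


@component("email.notify")
def notify(ctx: dict) -> dict:
    # Placeholder for a notification step.
    return {**ctx, "notified": True}


def build_workflow(*names: str) -> Callable[[dict], dict]:
    """Assemble a workflow from registered components, in the given order."""
    steps = [COMPONENTS[n] for n in names]  # KeyError flags unknown components early

    def run(ctx: dict) -> dict:
        for step in steps:
            ctx = step(ctx)
        return ctx

    return run


# Two workflows reuse the same login component.
procure_to_pay = build_workflow("sap.login", "email.notify")
order_to_cash = build_workflow("sap.login")
```

Looking components up by stable names lets the CoE update a shared step, such as the login bot, once and have every workflow that uses it pick up the change.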

Phase 4: Continuous monitoring

Hyperautomation is an ongoing discipline with a continuous cycle of improvement fueled by data and analytics.

- Monitor performance. Use analytics dashboards to continuously evaluate your automated processes against defined KPIs.

- Identify optimization opportunities. Analyze performance data to identify new bottlenecks or areas for improvement. AI and ML models can learn from this data to automatically suggest process optimizations.

- Refine and redeploy. Regularly update and refine your automations to improve their efficiency, resilience, and business impact. This creates a virtuous cycle where your operational processes become progressively more intelligent and efficient over time.
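The monitoring step can start as a simple comparison of current KPI readings against targets. In this minimal sketch, the KPI names, target values, and the "higher is better" flags are hypothetical; missing readings are flagged too, because a silent data feed is itself an operational problem.

```python
# Hypothetical KPI targets: (target value, whether higher is better).
TARGETS = {
    "avg_cycle_min": (10.0, False),
    "ftr_rate": (0.95, True),
    "throughput_per_day": (4000, True),
}


def breaches(readings, targets=TARGETS):
    """Return the KPIs that miss their target, for review or re-optimization."""
    flagged = {}
    for kpi, (target, higher_is_better) in targets.items():
        value = readings.get(kpi)
        if value is None:
            flagged[kpi] = "missing data"
        elif (value < target) if higher_is_better else (value > target):
            flagged[kpi] = f"{value} vs target {target}"
    return flagged


if __name__ == "__main__":
    today = {"avg_cycle_min": 12.5, "ftr_rate": 0.97}
    print(breaches(today))
```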

These phases offer a generalized approach to hyperautomation deployment, but you can adapt them by skipping certain phases or substituting more applicable ones. For instance, if scaling won’t be a priority for the next six months to a year, you can skip phase 3 and move to phase 4 after establishing the CoE.

Also, if building a CoE doesn’t fit your company culture, consider passing responsibility for hyperautomation management to your AI and data governance team (if you have one) or to an experienced external vendor with an established governance department.

How to measure hyperautomation ROI in operations

One of the most efficient ways to measure hyperautomation ROI in constantly changing business operations is through real-time analytics dashboards. They provide a consolidated view of all key operational KPIs.

As illustrated in the example below, you can compare operational performance before and after hyperautomation. This way, you’ll gain visibility and will be able to justify further investment in scaling hyperautomation initiatives.

| Metric | Before hyperautomation | After hyperautomation | Impact / ROI |
|---|---|---|---|
| Average processing time per case | 28 minutes | 6 minutes | 79% shorter cycle time |
| Manual data entry workload | 72% of the workflow requires human input | 18% requires human input | 54-percentage-point reduction in manual effort |
| Error rate in submitted data | 3.8% | 0.6% | 84% fewer errors |
| Cost per transaction | $4.20 | $1.10 | 74% cost reduction |
| Backlog volume | 1,200 cases pending weekly | 180 cases pending weekly | 85% drop in backlog volume |
| Compliance exceptions | 62 exceptions/month | 11 exceptions/month | 82% fewer compliance issues |
| Employee time spent on repetitive tasks | 30 hours/employee/month | 7 hours/employee/month | 23 hours saved per employee |
| Customer response time | 12 hours | 2.5 hours | 4.8x faster |
| Operational throughput | 1,500 items/day | 4,600 items/day | 3x increase |
| FTE capacity released | n/a | Equivalent to 6 FTEs | Reallocated to higher-value tasks |
| Annual operational cost | $2.4M | $1.1M | $1.3M annual savings |
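The impact figures in a table like this are easy to recompute, which is worth doing before they go into a business case. This minimal sketch uses the illustrative before/after values from the table. Note one subtlety it surfaces: the manual-workload row goes from 72% to 18% of the workflow, a drop of 54 percentage points, which is a 75% relative reduction.

```python
def pct_reduction(before: float, after: float) -> int:
    """Relative reduction, in percent of the baseline, rounded to an integer."""
    return round(100 * (before - after) / before)


def speedup(before: float, after: float) -> float:
    """How many times larger the baseline value is than the new value."""
    return round(before / after, 1)


# Illustrative before/after pairs taken from the table.
ROWS = {
    "processing time (min)": (28, 6),
    "error rate (%)": (3.8, 0.6),
    "cost per transaction ($)": (4.20, 1.10),
    "weekly backlog": (1200, 180),
    "compliance exceptions/month": (62, 11),
}

if __name__ == "__main__":
    for metric, (before, after) in ROWS.items():
        print(f"{metric}: {pct_reduction(before, after)}% reduction")
    # Shares of work: percentage points vs. relative change.
    print(f"manual workload: {72 - 18} pp, {pct_reduction(72, 18)}% relative")
    print(f"response time: {speedup(12, 2.5)}x faster")
```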

Hyperautomation benefits compound over time, creating a stronger foundation for growth and innovation. Still, you don’t need to wait long to see results: consider evaluating the first benefits 6 to 8 weeks after implementation.

Takeaways

When traditional automation reaches its limits, hyperautomation becomes the architecture that connects data, systems, and intelligent agents into a unified operational engine. It enables the business to grow without the operational complexity and overhead that often accompany scale.

Key takeaways from this guide:

- Process mining is essential for identifying high-impact automation opportunities, ensuring teams focus on what delivers meaningful ROI.

- Success depends on clean data, strong governance, and the right mix of AI, RPA, workflow tools, and integration platforms.

- Hyperautomation is a continuous project that requires ongoing monitoring, optimization, and reinvestment to maintain long-term value.

- Identify early hyperautomation adopters and champions within the business. Publicly celebrate their successes and the positive impact of pilot projects to build momentum and inspire others.

- Governance is the invisible backbone of scalable hyperautomation. Without clear standards, automation grows faster than the organization can safely manage.

Xenoss can help you start a hyperautomation project with a well-organized data infrastructure, high-impact operational use cases, and established AI and data governance, delivering quick ROI and laying the groundwork for long-term business value.