In fields like automotive and consumer electronics, physical products include digital features.

Users have come to expect regular over-the-air updates and new features.

In automotive, 36% of auto owners want to get over-the-air updates at least once every three years.

With a strained supply chain, a shortage of blue-collar workers, and a turbulent economy, the pressure on manufacturers is compounding. Nine in ten manufacturing executives surveyed by McKinsey in 2024 reported facing visibility challenges and shortages with their supplier partners.

At the same time, technologies like machine learning (ML) and the Internet of Things (IoT) are becoming easier to implement. AutoML now automates large parts of the ML workflow, reducing manual effort and the need for deep ML expertise.

In IoT, the Matter 1.3 specification released last year expanded device coverage, making it possible to capture data from a wider range of devices.

Manufacturers are seeking ways to use new technologies to fix operational issues.

In this piece, we will explore how digital twins, platforms that blend AI, IoT, cloud, and advanced analytics, help manufacturers create better, cheaper products, plan operations effectively, and manage multiple facilities from one central hub.

What are digital twins?

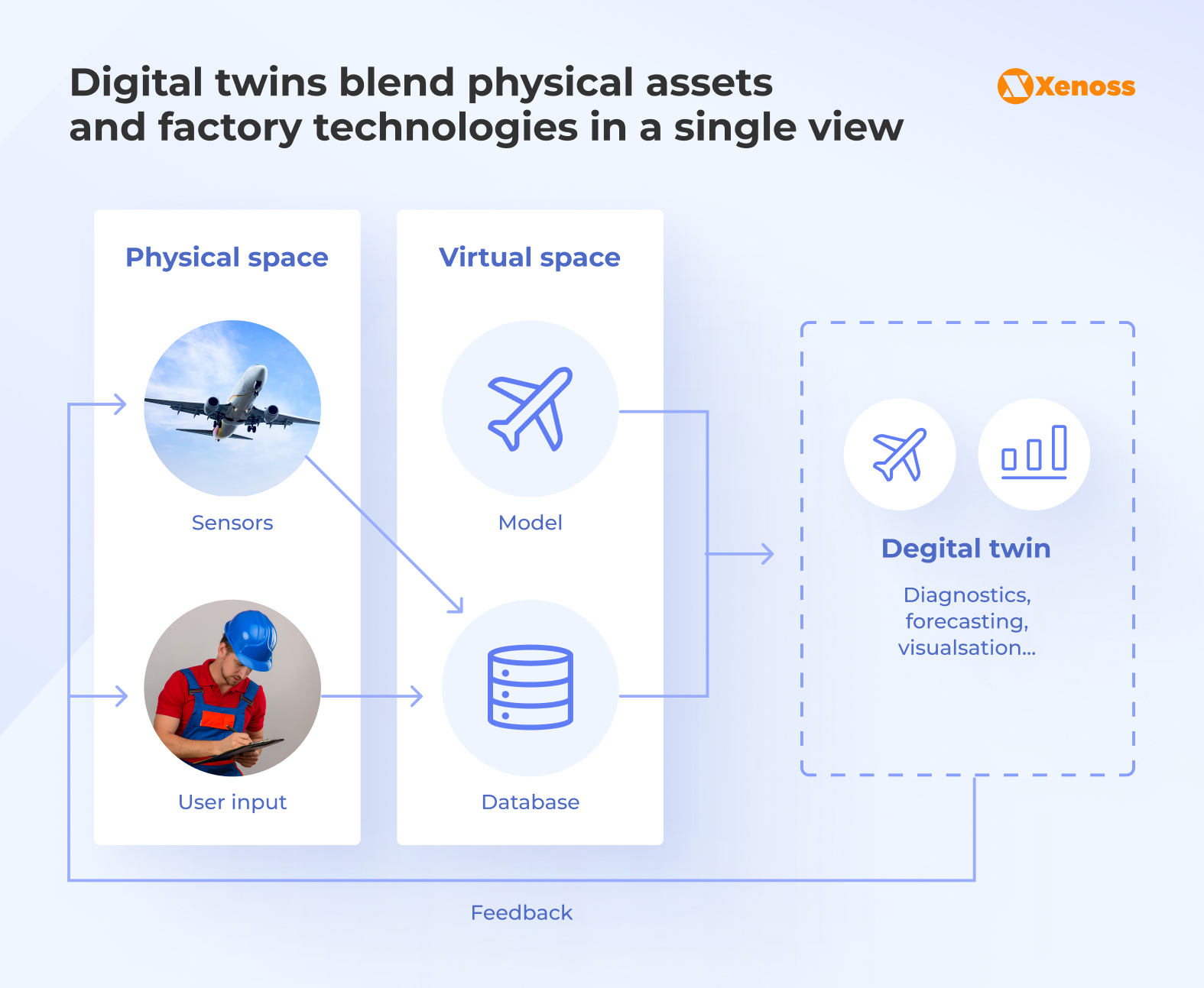

Digital twins are digital representations of physical entities: the factory itself, the final product, equipment, or the supply chain.

There’s no single rulebook on what a digital twin should look like. In fact, these platforms come in different shapes and sizes depending on the manufacturer’s needs.

Product twins

Product twins are detailed virtual replicas of the final product. They include every part of the physical design and behave according to the underlying mathematical and physical models.

Manufacturers create these systems to help engineers run simulations. These simulations are cheaper and less risky than real-world tests.

Asset twins

Asset twins are models of specific factory assets, like equipment. They connect to IoT sensors and gather data on energy use, device performance, and maintenance needs.

Manufacturers create asset twins to spot early signs of equipment failure. This helps factory operators act before critical machinery stops production.
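To make the asset-twin idea concrete, here is a minimal Python sketch. It assumes the twin tracks a single health metric, such as vibration RMS, and that this metric degrades roughly linearly. The `AssetTwin` class, the alarm threshold, and the readings are all hypothetical. Production systems typically rely on richer degradation models.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AssetTwin:
    """Hypothetical asset twin that tracks one health metric over operating hours."""

    asset_id: str
    failure_threshold: float  # assumed alarm level for the metric
    readings: list[tuple[float, float]] = field(default_factory=list)  # (hours, value)

    def ingest(self, hours: float, value: float) -> None:
        """Append a sensor reading taken at the given operating hour (chronological order assumed)."""
        self.readings.append((hours, value))

    def hours_to_threshold(self) -> float | None:
        """Fit a least-squares line and extrapolate to when the metric reaches the threshold.

        Returns the remaining hours after the latest reading, or None if there is
        not enough data or the metric is not trending upward.
        """
        n = len(self.readings)
        if n < 2:
            return None
        xs = [h for h, _ in self.readings]
        ys = [v for _, v in self.readings]
        x_mean, y_mean = sum(xs) / n, sum(ys) / n
        sxx = sum((x - x_mean) ** 2 for x in xs)
        if sxx == 0:
            return None
        slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sxx
        if slope <= 0:
            return None
        intercept = y_mean - slope * x_mean
        crossing = (self.failure_threshold - intercept) / slope
        return max(0.0, crossing - xs[-1])


pump = AssetTwin("pump-07", failure_threshold=7.0)
for hours, vibration in [(0, 2.0), (10, 2.5), (20, 3.0), (30, 3.5)]:
    pump.ingest(hours, vibration)
print(f"{pump.hours_to_threshold():.1f} hours until the alarm level")
```

The linear fit is the simplest way to show the principle: the twin turns a stream of readings into a lead time that operators can schedule maintenance around.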

Factory twins

Factory twins offer a complete view of the factory: its layout, IT systems, and external partnerships with suppliers and distributors.

Manufacturers create these systems to clearly visualize production, optimize planning, and simulate ‘what-if’ scenarios.

As digital twins are adopted in factories and other facilities, they gather more data, which helps streamline operations.

Digital twins are delivering clear ROI in manufacturing

According to McKinsey, three top-of-mind challenges manufacturers face in day-to-day operations are high material costs, labor constraints due to talent gaps, and a lack of end-to-end visibility into factory operations.

Digital twins, especially when augmented with ML and IoT, are growing in popularity as practical and financially feasible workarounds to these hurdles.

Among 100 manufacturing leaders surveyed by McKinsey, 86% believe digital twins can help streamline operations at their factories. 44% already use a digital twin, and 15% are considering implementing one in the near future.

Large language models (LLMs) and enterprise AI agents are now creating new ways for digital twins to improve processes and support business decisions.

Now manufacturers can use predictive analytics capabilities, design simulations powered by ML, or build AI agents that run complex end-to-end workflows with no human supervision.

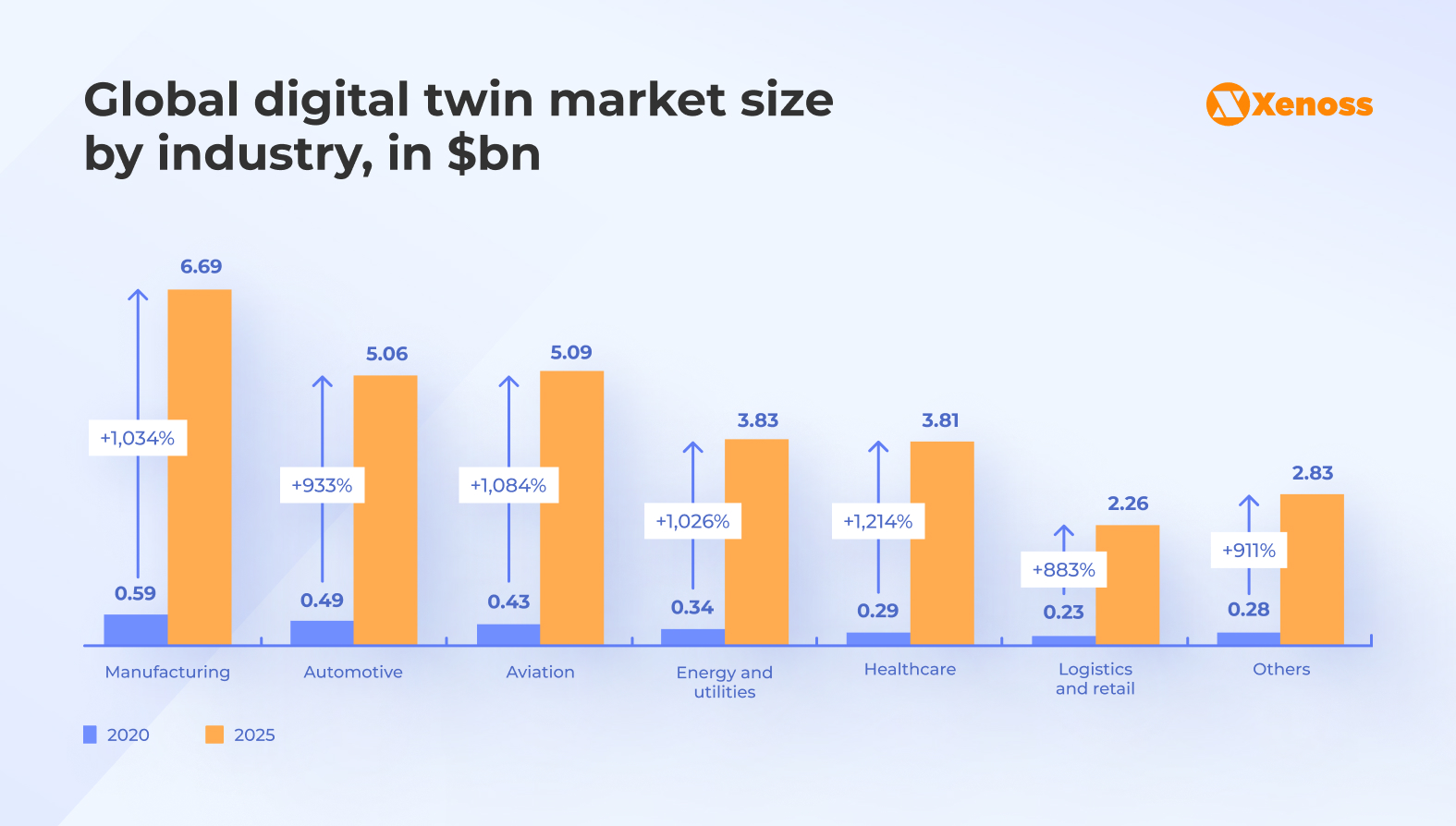

PwC data suggests that AI is making digital twins appear more lucrative to manufacturers: between 2020 and 2025, digital twin adoption in the sector grew by over 1,000%.

How manufacturers apply digital twins: Real-world examples

As factories become increasingly digitized, the value that digital twins bring to facilities keeps growing. McKinsey reports that its clients are using digital twins to fully revamp production schedules and cut monthly costs by up to 7% by reducing overtime requirements.

Digital twins are equally powerful at pinpointing production bottlenecks and supporting facility managers with recommendations on product line sequencing, warehouse storage, and capacity management.

Let’s review four promising digital twin applications and real-world case studies that highlight the impact of this technology.

#1. Improving productivity with real-time production simulations

Manufacturers use digital twins to create digital replicas of factory floors and test responses to production challenges in these environments.

For example, these platforms help factory managers assess the consequences of equipment malfunctions and create response checklists to reduce downtime. Similarly, team leaders can test and measure the impact of changes in maintenance schedules, headcount, and other impactful events.
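The what-if analysis described above can be sketched with a deliberately simple capacity model. The sketch assumes a serial line with no buffers between stations, so shift output is capped by the most constrained station. Station names and cycle times are hypothetical. Real factory twins typically use discrete-event simulation instead.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Station:
    name: str
    cycle_time_min: float  # minutes per unit (hypothetical)


def shift_output(
    stations: list[Station],
    shift_min: float,
    downtime_min: dict[str, float] | None = None,
) -> int:
    """Units a buffer-free serial line can complete in one shift.

    Each station's capacity is its available time divided by its cycle time;
    the line produces as many units as its slowest station allows.
    """
    downtime_min = downtime_min or {}
    capacities = []
    for station in stations:
        available = max(0.0, shift_min - downtime_min.get(station.name, 0.0))
        capacities.append(available / station.cycle_time_min)
    return int(min(capacities))


line = [Station("press", 1.0), Station("weld", 1.5), Station("paint", 1.2)]
baseline = shift_output(line, shift_min=480)
weld_outage = shift_output(line, shift_min=480, downtime_min={"weld": 60})
press_outage = shift_output(line, shift_min=480, downtime_min={"press": 60})
print(baseline, weld_outage, press_outage)  # 320 280 320
```

In this toy model, an hour of downtime at the welding station (the bottleneck) costs 40 units. The same outage at the press costs nothing. Insights like this help managers decide where response checklists matter most.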

Real-world impact: BMW’s iFactory is a high-fidelity mirror of real-life facilities and processes. It fully reflects the company’s production pipeline, logistics, and supply chain, and helps simulate the impact of disruption in these areas.

The automaker is now integrating generative AI into the digital twin to simulate a broader range of scenarios and suggest effective troubleshooting strategies based on its up-to-date component catalog, supplier list, quality assurance checklists, and other data.

#2. Building and testing high-fidelity product prototypes

Aerospace and automotive manufacturers working on high-complexity products have limited room to experiment with and test real-life components. When a company like SpaceX applies the “fail fast” approach to high-stakes manufacturing, R&D costs skyrocket.

The cost of each failed test is estimated at $90-100 million. In 2023, the company spent $2 billion on Starship R&D alone.

Digital twins help large aerospace and automotive manufacturers reduce R&D costs by creating high-fidelity product replicas that enable engineers to test product development decisions and validate new design choices across a range of real-world conditions, from everyday to extreme.

Real-world impact: Airbus engineers use digital twins to simulate how concepts would perform under real-world conditions. The system ingests real-time data from the company’s in-service aircraft and uses it to make predictions.

We’re effectively building each aircraft twice: first in the digital world, and then in the real one.

Airbus Newsroom statement

The digital replicas of Airbus planes enabled the manufacturer to cut time-to-order by spotting quality issues and fixing them before they require time-consuming maintenance interventions.

The company’s digital twin platform, Skywise, now hosts over 12,000 aircraft replicas and helps streamline operations for the company’s 50,000+ employees.

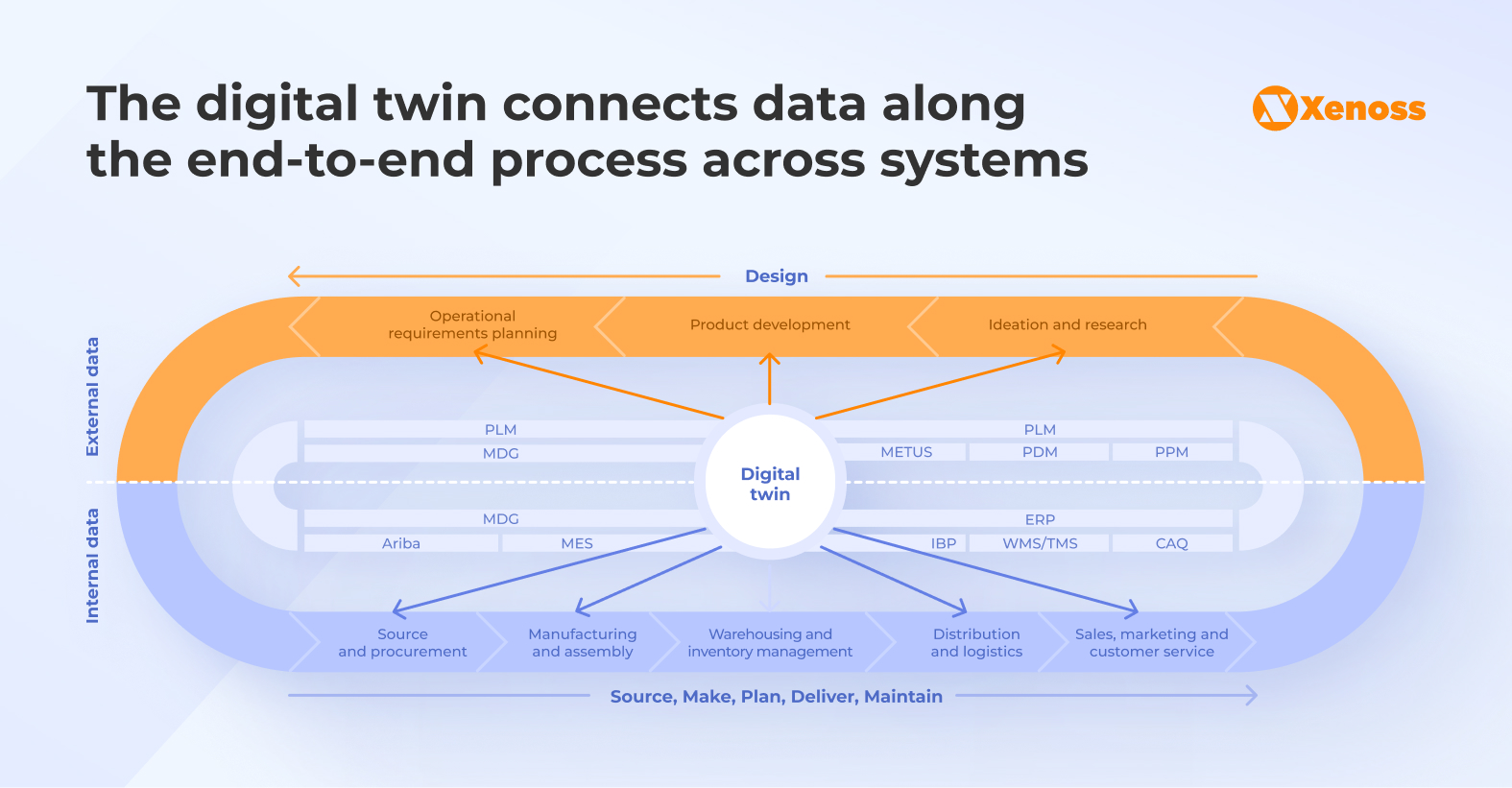

#3. Creating a connected environment in the factory

A manufacturing pipeline is a process with multiple moving parts: component sourcing, product design, equipment maintenance, process scheduling, quality assurance, feedback loops, and more.

Each of these is typically managed by a separate team, supported by dedicated technologies, and frequently spread across multiple facilities. The more complex the process becomes, the harder it is to avoid fragmentation and maintain end-to-end visibility.

Serving as a centralized control tower that breaks down silos and gives teams a big-picture view is perhaps the most promising application of digital twins.

A single platform can give manufacturers access to all relevant data:

- Product development: concept designs, preliminary tests, cost, and production time estimates

- IT systems: a single access point to computer-aided design (CAD) software, warehouse management platforms, advanced planning and scheduling software, supplier databases, and other components of the company’s technology stack

- Supply and procurement data: vendor performance metrics, material lead times, purchase order histories, pricing fluctuations, and supplier quality ratings

- Distribution and logistics logs: shipment tracking records, delivery times, transportation costs, route optimization data, and warehouse inventory movements

- Sales, marketing, and customer service insights: demand forecasts, order patterns, product returns, warranty claims, customer feedback, and market trend analytics
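One way to picture a single access point is a merged view keyed by a shared asset identifier. This minimal sketch assumes every source system exposes records with a common `asset_tag` field. The source names and fields are hypothetical. Real integrations usually need an identifier-mapping step first.

```python
from collections import defaultdict


def build_asset_view(sources: dict[str, list[dict]]) -> dict[str, dict]:
    """Merge records from several systems into one view per asset tag.

    Field names are prefixed with the source system so that, for example,
    a CAD 'version' and an ERP 'version' never overwrite each other.
    """
    view = defaultdict(dict)
    for source_name, records in sources.items():
        for record in records:
            tag = record["asset_tag"]
            for key, value in record.items():
                if key != "asset_tag":
                    view[tag][f"{source_name}.{key}"] = value
    return dict(view)


sources = {
    "cad": [{"asset_tag": "P-101", "drawing": "P101-rev-C"}],
    "erp": [{"asset_tag": "P-101", "supplier": "Acme", "unit_cost": 1200.0}],
    "maintenance": [{"asset_tag": "P-101", "last_service": "2024-03-02"}],
}
print(build_asset_view(sources)["P-101"])
```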

Real-world impact: Digital twins help BASF, the world’s largest chemical manufacturer, break down data silos at its production site in Antwerp.

This is BASF’s second-largest factory in the world, managed by over 3,500 employees and supporting over 50 production pipelines.

For over 20 years, BASF has used a digital twin platform, Smart Sites, to connect data from hundreds of sources, including CAD software, building information modeling (BIM), ERP, and workforce management systems.

The digital mirror of the factory’s structure and operations gives factory teams instant access to data, contributes to faster decision-making, and keeps everyone on the same page.

#4. Generating new data to get insight into production

Besides effectively applying real-world data to accelerate product development, digital twins are a powerful source of synthetic data that drives real-world testing and R&D.

Consider glass melting, an area of manufacturing where optimal production conditions are notoriously hard to achieve. The temperature inside a melting furnace is approximately 1600 ℃, which is higher than the melting point of standard silicon sensors.

Digital twins help glass manufacturers simulate the conditions inside the melting furnace without relying on sensor data and create reliable data using physics, mathematics, and, most recently, ML models.
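A simplified illustration of physics-based synthetic data uses the Vogel-Fulcher-Tammann (VFT) equation. This standard empirical model relates glass viscosity to temperature: log10(η) = A + B / (T - T0). The sketch below uses it to turn a furnace temperature profile into viscosity samples. The constants, temperatures, and noise level are illustrative assumptions, not values for any specific glass. This is not a description of how AGC's COCOA works.

```python
import random

# Illustrative VFT constants (assumed; real values depend on glass composition)
A = -2.6     # log10(Pa·s)
B = 4500.0   # °C
T0 = 250.0   # °C


def vft_viscosity(temp_c: float) -> float:
    """Viscosity in Pa·s from the VFT equation: log10(eta) = A + B / (T - T0)."""
    if temp_c <= T0:
        raise ValueError("the VFT model is only valid above T0")
    return 10 ** (A + B / (temp_c - T0))


def synthetic_samples(
    temps_c: list[float], noise_pct: float = 0.02, seed: int = 42
) -> list[tuple[float, float]]:
    """Generate (temperature, viscosity) pairs with Gaussian noise mimicking measurement scatter."""
    rng = random.Random(seed)  # seeded so the synthetic dataset is reproducible
    return [(t, vft_viscosity(t) * (1 + rng.gauss(0, noise_pct))) for t in temps_c]


# Hypothetical temperature distribution across furnace zones
furnace_profile = [1300.0, 1400.0, 1500.0, 1600.0]
for temp, eta in synthetic_samples(furnace_profile):
    print(f"{temp:.0f} °C -> {eta:.1f} Pa·s")
```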

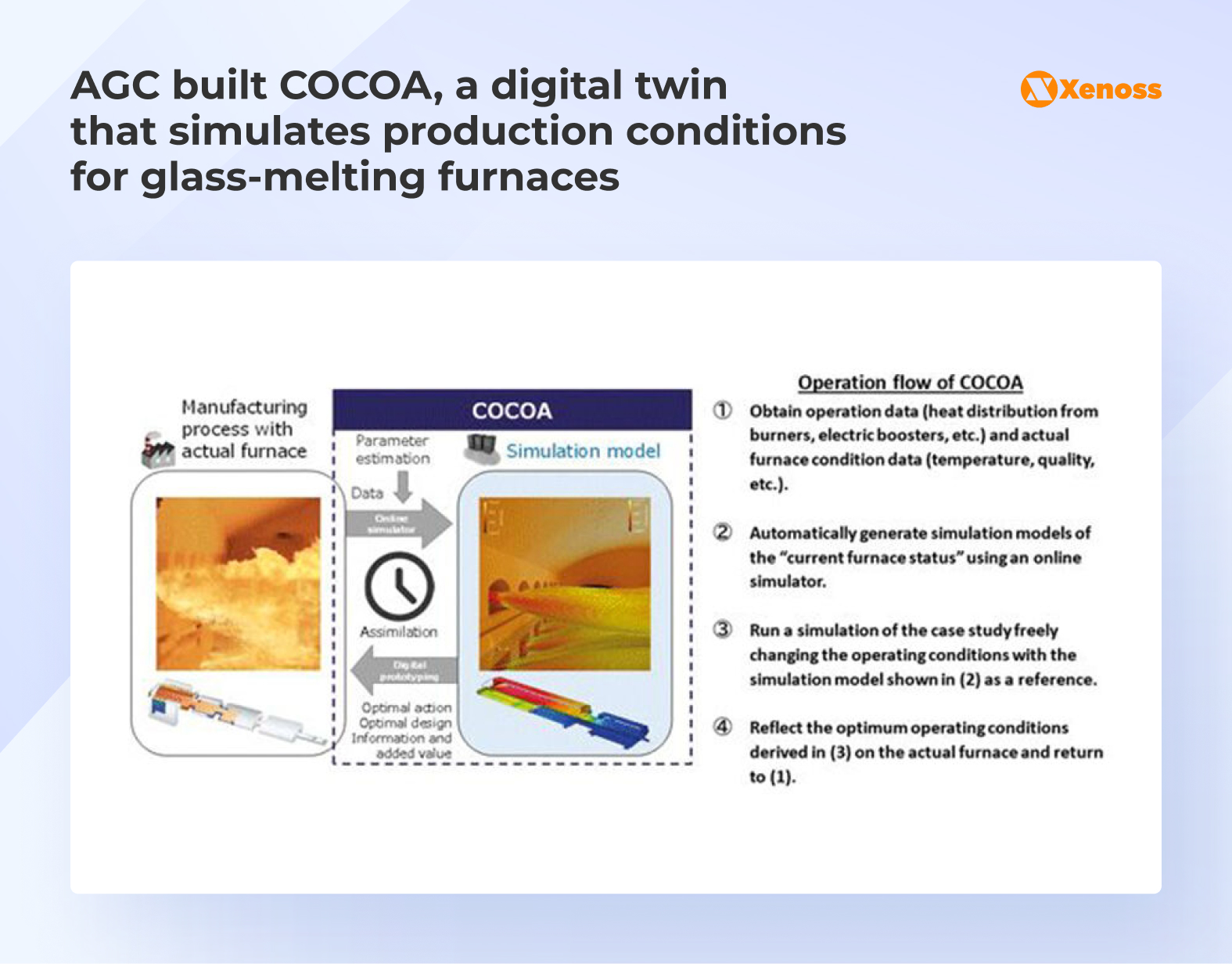

Real-world impact: AGC, a Japan-based glass manufacturer, piloted COCOA, a digital twin model that generates production-ready synthetic data on glass flow properties based on the melting furnace’s temperature distribution.

AGC technicians use this data for preliminary studies of production conditions. Before embracing digital twin technology, the company had to bring in simulation specialists and invest additional time and resources to estimate glass flow accurately. Now AGC can get similarly accurate estimates at a fraction of the cost.

AGC plans to expand the system beyond glass flow and furnace temperature estimation and use it for sustainability monitoring and greenhouse gas (GHG) emission reduction.

Digital twin architecture: A combination of data, technology, and processes

As control towers that provide visibility into processes, R&D, and quality assurance, digital twins sit at the intersection of a manufacturer’s data, the other technologies the factory uses, and the processes embedded within the organization.

To accurately represent the factory’s day-to-day operations, digital twins require a multi-layer architecture that combines secure data ingestion and processing, seamless interaction with the rest of the stack, and frictionless embedding into the organization.

1. The data layer

Inventory, production, demand, and other data types are the backbone of digital twin architecture.

A data pipeline supporting digital twins comprises four components: ingestion, transformation, loading, and application.

Data ingestion

Data ingestion is the gateway that validates, transforms, and routes diverse data streams from sensors, machines, enterprise systems, and manual inputs into a unified structure that the digital twin can process.
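Here is a minimal sketch of such a gateway. It assumes readings arrive as dictionaries with `source`, `metric`, `value`, and `timestamp` keys. The field names are hypothetical, and so is the rule that sends some metrics to streaming and the rest to batch.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

REQUIRED_FIELDS = {"source", "metric", "value", "timestamp"}
STREAMING_METRICS = {"temperature", "vibration", "belt_speed"}  # hypothetical routing rule


@dataclass(frozen=True)
class Reading:
    source: str
    metric: str
    value: float
    timestamp: datetime  # always stored in UTC


def ingest(raw: dict) -> tuple[str, Reading]:
    """Validate a raw payload, coerce it into a Reading, and choose a route."""
    missing = REQUIRED_FIELDS - set(raw)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    ts = datetime.fromisoformat(raw["timestamp"])
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone.utc)  # assumption: naive timestamps are UTC
    reading = Reading(
        source=str(raw["source"]),
        metric=str(raw["metric"]).strip().lower(),
        value=float(raw["value"]),  # raises ValueError on non-numeric values
        timestamp=ts.astimezone(timezone.utc),
    )
    route = "stream" if reading.metric in STREAMING_METRICS else "batch"
    return route, reading


route, reading = ingest(
    {"source": "cnc-3", "metric": "Vibration", "value": "4.2", "timestamp": "2024-05-01T08:00:00+02:00"}
)
print(route, reading.value, reading.timestamp.isoformat())
```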

There are two standard approaches to data ingestion: batch processing and stream processing.

Although stream processing has been gaining traction, batch processing is still the better fit in some cases.

For example, a pharmaceutical manufacturer might use batch processing to compile end-of-day quality control reports that aggregate test results, environmental conditions, and ingredient lot numbers from all production lines.

Regulatory entities like the FDA, which may request these files during an inspection, will value data completeness over instant access, so batch processing is a better fit here.

On the other hand, streaming data ingestion is critical for real-time monitoring, such as tracking temperature fluctuations in injection molding processes, detecting vibration anomalies in CNC machines, or monitoring conveyor belt speeds. Access to immediate insights gives factory managers the room to intervene rapidly and prevent defects or equipment failures.
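The contrast between the two modes fits in a few lines. A batch function aggregates a day of quality-control results. A streaming detector checks each new sensor value against a rolling baseline. The rolling z-score rule, window size, and threshold are assumptions chosen for illustration, not a recommended configuration.

```python
from collections import deque
from statistics import mean, pstdev


def daily_batch_report(records: list[tuple[str, bool]]) -> dict[str, dict[str, int]]:
    """Batch mode: aggregate a full day's (line, passed) test results per production line."""
    report: dict[str, dict[str, int]] = {}
    for line, passed in records:
        stats = report.setdefault(line, {"tested": 0, "passed": 0})
        stats["tested"] += 1
        stats["passed"] += int(passed)
    return report


class StreamingAnomalyDetector:
    """Streaming mode: flag a reading that sits far outside a rolling window of recent values."""

    def __init__(self, window: int = 20, z_limit: float = 3.0, min_baseline: int = 5):
        self.recent = deque(maxlen=window)
        self.z_limit = z_limit
        self.min_baseline = min_baseline

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous, then add it to the rolling window."""
        anomalous = False
        if len(self.recent) >= self.min_baseline:
            baseline = list(self.recent)
            mu, sigma = mean(baseline), pstdev(baseline)
            anomalous = sigma > 0 and abs(value - mu) / sigma > self.z_limit
        self.recent.append(value)
        return anomalous


detector = StreamingAnomalyDetector()
vibration_mm_s = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 5.0]  # hypothetical CNC vibration readings
print([detector.update(v) for v in vibration_mm_s])
print(daily_batch_report([("line-1", True), ("line-1", False), ("line-2", True)]))
```

The batch function only produces a result once the full day's records are in. The detector returns a verdict on every reading, which is what leaves room for a rapid intervention.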

Data transformation

Collecting data from multiple sources exposes organizations to fragmentation because suppliers, technology vendors, and factory teams store their logs in different formats.

To ensure these disparate data points can be viewed in an integrated dashboard and applied to business intelligence decisions, data engineers must double down on data normalization, structuring, and cleaning.

Here are the steps data engineering teams should follow to maintain high data quality standards:

- Data modeling. Engineers define standard schemas and taxonomies that map disparate source systems to a unified data structure, creating consistent naming conventions and hierarchies for equipment from various vendors.

- Normalizing units and scales. All measurements should be converted to standard units. It’s also a good practice to align time zones across global facilities to keep accurate production logs.

- Validation rules. Engineers set acceptable ranges and thresholds for each data type to automatically flag outliers or impossible values.

- Protocols for handling missing data. Teams can choose from several methods to fill data gaps: interpolation, forward filling, flagging for manual review, or rejecting incomplete records.

- Documenting data lineage. Track the origin, transformations, and quality scores of each data element so operators understand the context behind digital twin insights.
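The steps above can be sketched as a single transformation pass. This minimal sketch rests on several hypothetical choices: the tag names, the Fahrenheit-to-Celsius rule, the valid ranges, and forward filling per source. Lineage is kept as a simple list of notes on each output record.

```python
from datetime import datetime, timezone

# Data modeling: hypothetical mapping from vendor-specific tags to a unified schema
TAG_MAP = {"TMP_F": "temperature_c", "Temp(C)": "temperature_c", "SPD_RPM": "spindle_speed_rpm"}
# Validation rules: assumed acceptable ranges per unified field
VALID_RANGES = {"temperature_c": (-40.0, 400.0), "spindle_speed_rpm": (0.0, 30_000.0)}


def transform(rows: list[dict]) -> list[dict]:
    """Map, normalize, validate, gap-fill, and annotate raw rows.

    Each row holds 'source', 'tag', 'value' (float or None), and 'ts' (aware datetime).
    """
    last_good: dict[tuple[str, str], float] = {}
    output = []
    for row in rows:
        field = TAG_MAP.get(row["tag"])
        if field is None:
            continue  # unmapped tag; a real pipeline would log it for review
        key = (row["source"], field)
        lineage = [f"source={row['source']}", f"mapped {row['tag']} -> {field}"]
        value = row["value"]
        if value is None:
            if key not in last_good:
                continue  # missing data with nothing to fill from: reject the record
            value = last_good[key]
            lineage.append("forward-filled")
        elif row["tag"] == "TMP_F":
            value = (value - 32) * 5 / 9  # unit normalization: Fahrenheit to Celsius
            lineage.append("converted F -> C")
        low, high = VALID_RANGES[field]
        flagged = not (low <= value <= high)
        if not flagged:
            last_good[key] = value
        output.append({
            "field": field,
            "value": value,
            "ts": row["ts"].astimezone(timezone.utc),  # align time zones across sites
            "flagged": flagged,
            "lineage": lineage,
        })
    return output


ts = datetime(2024, 5, 1, 8, 0, tzinfo=timezone.utc)
rows = [
    {"source": "press-1", "tag": "TMP_F", "value": 212.0, "ts": ts},
    {"source": "press-1", "tag": "Temp(C)", "value": None, "ts": ts},
    {"source": "press-1", "tag": "Temp(C)", "value": 900.0, "ts": ts},
]
for record in transform(rows):
    print(record["value"], record["flagged"], record["lineage"])
```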

Data loading

Manufacturers typically load ingested and normalized data from digital twins into a centralized data warehouse or data lake architecture designed for industrial analytics.

These repositories lay the foundation for advanced analytics, ML models, and business intelligence tools.
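This minimal sketch of the loading step uses SQLite from Python's standard library as a stand-in for a warehouse. A production deployment would target a cloud warehouse or data lake instead, and the table layout here is an assumption. A composite primary key combined with `INSERT OR REPLACE` makes loads idempotent, so re-running a failed batch does not create duplicate rows.

```python
import sqlite3


def load_readings(conn: sqlite3.Connection, readings: list[tuple[str, str, str, float]]) -> None:
    """Upsert (asset_id, metric, ts, value) rows into a time-series table."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS readings (
            asset_id TEXT NOT NULL,
            metric   TEXT NOT NULL,
            ts       TEXT NOT NULL,  -- ISO 8601 timestamp in UTC
            value    REAL NOT NULL,
            PRIMARY KEY (asset_id, metric, ts)
        )
        """
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT OR REPLACE INTO readings VALUES (?, ?, ?, ?)", readings)


conn = sqlite3.connect(":memory:")
batch = [
    ("pump-07", "vibration", "2024-05-01T06:00:00Z", 2.0),
    ("pump-07", "vibration", "2024-05-01T06:01:00Z", 2.4),
    ("oven-2", "temperature", "2024-05-01T06:00:00Z", 181.5),
]
load_readings(conn, batch)
load_readings(conn, batch)  # retrying the same batch leaves the row count unchanged
print(conn.execute("SELECT COUNT(*) FROM readings").fetchone()[0])
```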

Cloud-based platforms like AWS, Azure, or Google Cloud are popular choices for large manufacturers because they offer scalable storage and computing power to handle the massive volumes of time-series data, sensor readings, and operational metrics generated by digital twins.

On the other hand, some manufacturers prefer hybrid storage setups that keep sensitive operational data in on-premises data centers and use cloud infrastructure for less critical analytics workloads.

In the table below, we recap the trade-offs of both approaches and the use cases each one fits best.

| Storage approach | Key challenges | Other drawbacks | Best suited for |
|---|---|---|---|
| Cloud-based | • Dependency on internet connectivity<br>• Data sovereignty and compliance concerns<br>• Latency for real-time operations | • Vulnerable to network outages<br>• Ongoing subscription costs<br>• Less control over data location<br>• Potential security concerns | • Distributed manufacturing sites<br>• Scalable analytics needs<br>• Limited IT infrastructure<br>• Collaboration across locations |
| Hybrid | • Complex architecture management<br>• Data synchronization between environments<br>• Higher initial investment | • Requires skilled IT staff<br>• Integration complexity<br>• Duplicate infrastructure costs<br>• Higher maintenance overhead | • Sensitive or proprietary data<br>• Mission-critical operations<br>• Strict regulatory requirements<br>• Low-latency control systems<br>• Large enterprises with existing infrastructure |

2. The application layer

Digital twins deliver their full value once, in addition to real-time data access, they offer a layer of capabilities built on top of a robust data pipeline.

Although it’s a good idea to tailor digital twin capabilities to a manufacturer’s needs and use case, below we offer a blueprint with some high-yield features.

- Simulators allow manufacturers to test different scenarios and operational changes in a virtual environment before implementing them in the physical facility. Running preliminary tests on a digital twin reduces the risk of failed real-world tests and cuts down the total cost of adopting organization-wide changes.

- Advanced analytics tools process vast streams of real-time data from connected assets to identify patterns, predict equipment failures, and uncover optimization opportunities.

- Twin management platforms enable centralized control and orchestration of digital twins across multiple facilities, creating a fully unified control tower for manufacturers.

- Low-code capabilities enable domain experts and operators to create and modify twins without extensive programming knowledge. Embedding low-code capabilities, possibly supported by generative AI coding assistants such as Cursor or Microsoft Copilot, into the digital twin platform helps accelerate development and reduce reliance on IT.

- Event management tools automatically detect, prioritize, and route alerts from digital twins to the appropriate personnel, enabling faster responses to anomalies and quicker resolution of critical issues before they cause downtime at the production site.
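The event-management capability can be sketched with a priority queue. Alerts are ordered by severity, first-in-first-out within the same severity, and each severity maps to a recipient. The severity levels and the routing table are hypothetical. Real deployments would also handle escalation and deduplication.

```python
import heapq
import itertools
from dataclasses import dataclass, field

SEVERITY_RANK = {"critical": 0, "warning": 1, "info": 2}  # lower rank = dispatched first
ROUTING = {  # hypothetical recipients per severity
    "critical": "shift_supervisor",
    "warning": "maintenance_team",
    "info": "daily_digest",
}


@dataclass(order=True)
class Alert:
    rank: int
    seq: int  # tie-breaker that preserves arrival order within a severity
    severity: str = field(compare=False)
    asset_id: str = field(compare=False)
    message: str = field(compare=False)


class AlertRouter:
    def __init__(self) -> None:
        self._queue: list[Alert] = []
        self._counter = itertools.count()

    def submit(self, severity: str, asset_id: str, message: str) -> None:
        alert = Alert(SEVERITY_RANK[severity], next(self._counter), severity, asset_id, message)
        heapq.heappush(self._queue, alert)

    def dispatch(self):
        """Yield (recipient, alert) pairs, most severe first."""
        while self._queue:
            alert = heapq.heappop(self._queue)
            yield ROUTING[alert.severity], alert


router = AlertRouter()
router.submit("info", "conveyor-4", "Belt speed logged")
router.submit("critical", "press-2", "Hydraulic pressure drop")
router.submit("warning", "cnc-3", "Vibration above baseline")
router.submit("critical", "oven-1", "Temperature out of range")
for recipient, alert in router.dispatch():
    print(recipient, alert.asset_id, alert.message)
```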

Stacking these capabilities on top of one another allows manufacturers to build complex, use-case-specific features.

For instance, McKinsey shares an account of a manufacturing team that built a time tracker to monitor how long each step in the pipeline is idle and cut down on such delays.
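A time tracker of that kind can be sketched as follows. It assumes the twin logs a start and end timestamp for every job at every step. Idle time is the gap between one job finishing and the next one starting at the same step, with overlapping jobs merged. The step names and timestamps are hypothetical, and this is not McKinsey's implementation.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def idle_time_by_step(events: list[tuple[str, datetime, datetime]]) -> dict[str, timedelta]:
    """Sum the idle gaps between jobs at each step, within the observed period."""
    by_step = defaultdict(list)
    for step, start, end in events:
        by_step[step].append((start, end))
    idle = {}
    for step, intervals in by_step.items():
        intervals.sort()
        total = timedelta(0)
        busy_until = intervals[0][1]
        for start, end in intervals[1:]:
            if start > busy_until:
                total += start - busy_until  # the step waited for work
            busy_until = max(busy_until, end)  # merge overlapping jobs
        idle[step] = total
    return idle


def t(hhmm: str) -> datetime:
    """Build a timestamp on a fixed example day."""
    return datetime.fromisoformat(f"2024-05-01T{hhmm}")


events = [
    ("weld", t("08:00"), t("08:10")),
    ("weld", t("08:15"), t("08:25")),
    ("weld", t("08:25"), t("08:40")),
    ("paint", t("08:00"), t("08:30")),
    ("paint", t("08:20"), t("08:50")),
    ("paint", t("09:00"), t("09:10")),
]
for step, idle in idle_time_by_step(events).items():
    print(step, idle)
```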

3. The process layer

Digital twins are, by definition, separate from the manufacturer’s real-life pipelines. That’s why teams need to put extra effort into bridging the two.

The two milestones site team leaders should strive to reach are:

- Digital twins accurately represent the day-to-day realities of the factory. All data is up-to-date, and employees actively interact with the digital layer to make sure it closely mirrors the factory floor.

- On-site teams put the insights from digital twins and simulators to work, using them to improve productivity and build higher-quality products.
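One way to check the first milestone automatically is a drift check. It compares the twin's recorded configuration with a fresh snapshot from the floor and flags stale synchronization. The attribute names, the snapshot source, and the 24-hour staleness limit are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def find_drift(
    twin_state: dict,
    physical_state: dict,
    last_synced: datetime,
    now: datetime,
    max_age: timedelta = timedelta(hours=24),
) -> list[str]:
    """List attributes that differ between twin and floor, plus a staleness warning."""
    issues = []
    for key in sorted(set(twin_state) | set(physical_state)):
        twin_value = twin_state.get(key)
        floor_value = physical_state.get(key)
        if twin_value != floor_value:
            issues.append(f"{key}: twin={twin_value!r}, floor={floor_value!r}")
    age = now - last_synced
    if age > max_age:
        issues.append(f"stale: last synced {age} ago")
    return issues


now = datetime(2024, 5, 2, 14, 0, tzinfo=timezone.utc)
issues = find_drift(
    twin_state={"spindles": 2, "firmware": "1.4"},
    physical_state={"spindles": 2, "firmware": "1.5", "coolant": "synthetic"},
    last_synced=now - timedelta(hours=30),
    now=now,
)
print("\n".join(issues))
```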

To reach this state of alignment, factory leaders need to commit to upskilling team members who previously relied on manual work, create checklists documenting how real-world production should be augmented with digital twins, and establish task forces to expand digital twin adoption across the organization.

The table below dives into process-related challenges factory managers face when adopting digital twins and mitigation strategies that facilitate adoption.

| Challenge | Mitigation strategies |
|---|---|
| Inconsistent processes across departments create integration difficulties and silos that hinder digital twin effectiveness | - Establish cross-functional governance committees<br>- Document standard operating procedures<br>- Implement change management protocols before deployment |
| Difficulty quantifying benefits makes it hard to justify investments and measure the success of digital twin initiatives | - Define specific KPIs upfront (OEE improvements, downtime reduction, energy savings)<br>- Implement phased pilots with measurable outcomes<br>- Track metrics consistently |
| Operators and managers are hesitant to modify established workflows and adopt new data-driven decision-making approaches | - Involve frontline workers early in design<br>- Provide comprehensive training<br>- Emphasize how digital twins augment rather than replace human expertise |
| Connecting digital twins to legacy MES, ERP, and SCADA systems requires extensive customization and process alignment | - Use middleware and APIs for gradual integration<br>- Prioritize systems with the highest impact<br>- Consider phased implementation rather than full replacement |
| Keeping digital representations synchronized with physical asset changes and process modifications over time | - Establish update protocols when physical changes occur<br>- Assign ownership responsibilities<br>- Schedule regular validation reviews<br>- Automate synchronization where possible |
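Defining KPIs upfront is easier with a concrete formula. Here is a sketch of the standard overall equipment effectiveness (OEE) calculation, availability × performance × quality. The shift figures in the example are hypothetical.

```python
def oee(
    planned_min: float,
    downtime_min: float,
    ideal_cycle_min: float,
    total_units: int,
    good_units: int,
) -> dict[str, float]:
    """Overall equipment effectiveness = availability x performance x quality."""
    run_min = planned_min - downtime_min
    if planned_min <= 0 or run_min <= 0 or total_units <= 0:
        raise ValueError("planned time, run time, and total units must be positive")
    availability = run_min / planned_min                    # share of planned time spent running
    performance = ideal_cycle_min * total_units / run_min   # actual speed vs. ideal speed
    quality = good_units / total_units                      # share of units without defects
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


shift = oee(planned_min=480, downtime_min=48, ideal_cycle_min=1.0, total_units=400, good_units=380)
print({k: round(v, 3) for k, v in shift.items()})
```

Because the intermediate terms cancel, OEE also equals good units × ideal cycle time ÷ planned time (380 × 1.0 ÷ 480 ≈ 0.792 here). This makes a handy cross-check when validating the KPI in a pilot.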

Bottom line

Successful digital twins deliver tangible ROI for manufacturers like BMW, BASF, and Airbus by increasing factory-floor visibility, running R&D simulations before committing to expensive real-world tests, and predicting the impact of workplace bottlenecks.

Even though executives are showing interest in adopting digital twins, challenges such as poor data validation, employee resistance, and difficulties integrating them with the rest of the tech stack are holding them back.

One way to address these challenges is to build a smaller-scale digital twin prototype with a few data sources and application connectors. Limit its adoption to non-mission-critical processes to iron out the data infrastructure, application-layer capabilities, and organizational workflows without risking factory disruption.

Once the simulation starts capturing value, consider gradually expanding the number of data sources and built-in capabilities. High-complexity features like predictive analytics should be introduced once the baseline digital twin is operational and part of factory operations.