This is part 2 of our 3-part series on topic modeling for business applications. In part 1, we explored what topic modeling is and how it differs from classification and clustering. Part 3 will showcase real-world business applications and case studies.

Comparing topic modeling approaches

With massive volumes of unstructured text, choosing the right topic modeling technique is critical for unlocking business value. The market offers several topic modeling algorithms, each with distinct mathematical foundations, computational requirements, and practical advantages. Understanding these differences is essential for effectively extracting actionable insights from your organization’s text data. In this second article of the series, we cover:

- A comparison of the most widely used topic modeling techniques: LDA, NMF, Top2Vec, BERTopic, and LSA/pLSA

- The mathematical foundations, strengths, and limitations of each method

- Practical advice on when to use each model based on text type, business goals, and computational resources

- Real-world examples, including how The New York Times uses LDA in their recommendation system

- How to select the right topic modeling approach or combine methods for maximum business impact

LDA (Latent Dirichlet Allocation)

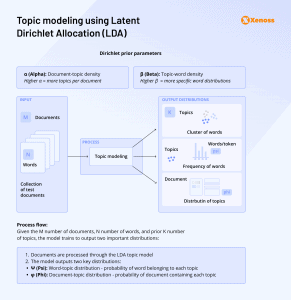

Latent Dirichlet Allocation (LDA) stands as the cornerstone of probabilistic topic modeling, representing documents as mixtures of topics and topics as distributions of words. Named after mathematician Peter Gustav Lejeune Dirichlet, this generative approach operates on two key assumptions: 1) Documents contain multiple topics, and 2) each topic consists of a collection of related words.

LDA’s strength lies in its intuitive framework, which mirrors how documents are typically structured and understood in real-world settings. The algorithm places Dirichlet priors on its distributions, controlled by two critical hyperparameters: alpha, which governs document-topic density (how many topics a document tends to mix), and beta, which governs topic-word density (how many words a topic tends to draw on). These parameters give LDA remarkable flexibility in handling diverse text collections.

During processing, LDA iteratively improves upon initially random topic assignments by calculating two probabilities: the likelihood of a topic appearing in a document and the probability of a word belonging to a topic. After multiple iterations, this process converges into stable probability distributions.
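The iterative procedure described above can be sketched with a toy collapsed Gibbs sampler, which is one common way to fit LDA (many libraries use variational inference instead). This is a minimal illustration under stated assumptions, not a production implementation: the function name `lda_gibbs`, the sample support-ticket documents, and the alpha and beta values are all hypothetical choices made for readability.

```python
import random


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Toy collapsed Gibbs sampler for LDA; docs is a list of token lists."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    vocab_size = len(vocab)

    # Count tables: topics per document, words per topic, total words per topic.
    doc_topic = [[0] * num_topics for _ in docs]
    topic_word = [dict.fromkeys(vocab, 0) for _ in range(num_topics)]
    topic_total = [0] * num_topics

    # Start from a random topic assignment for every token.
    assignments = []
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            z = rng.randrange(num_topics)
            z_doc.append(z)
            doc_topic[d][z] += 1
            topic_word[z][w] += 1
            topic_total[z] += 1
        assignments.append(z_doc)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the token's current assignment from the counts.
                z = assignments[d][i]
                doc_topic[d][z] -= 1
                topic_word[z][w] -= 1
                topic_total[z] -= 1

                # Weight each topic by (topic in document) * (word in topic).
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][w] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                z = rng.choices(range(num_topics), weights=weights)[0]

                # Record the newly sampled assignment.
                assignments[d][i] = z
                doc_topic[d][z] += 1
                topic_word[z][w] += 1
                topic_total[z] += 1

    # Smoothed document-topic distributions; each row sums to 1.
    theta = [
        [(c + alpha) / (len(doc) + num_topics * alpha) for c in counts]
        for counts, doc in zip(doc_topic, docs)
    ]
    return theta, topic_word


# Hypothetical mini-corpus of tokenized support tickets.
docs = [
    "refund payment invoice refund billing".split(),
    "invoice billing payment charge".split(),
    "login password reset account login".split(),
    "account password login locked".split(),
]
theta, topic_word = lda_gibbs(docs, num_topics=2)
for row in theta:
    print([round(p, 2) for p in row])
```

Each printed row is one document's mixture over the two topics. In practice, an established library implementation would normally be preferable to hand-written sampling code.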

Benefits of LDA

What makes LDA particularly valuable for businesses is its scalability: it efficiently handles large document collections. Its probabilistic nature offers transparency into topic assignment uncertainty, allowing analysts to gauge confidence in modeling results. This statistical foundation also enables easy extension to more complex models when needed.

LDA excels in analyzing longer-form content like academic papers, news articles, and detailed customer reviews. It’s particularly effective for document clustering, content recommendation, and trend analysis in established fields with well-defined vocabulary. Organizations commonly employ LDA to organize document collections, power recommendation systems, and identify recurring themes in customer feedback.

Limitations of LDA

Despite its popularity, LDA has limitations worth considering. The algorithm requires pre-specifying the number of topics, which can lead to either overly broad or fractured topics without proper optimization. LDA also struggles with short texts like tweets or brief comments where limited word co-occurrence patterns make topic inference challenging.

Additionally, LDA’s bag-of-words approach disregards word order and context, potentially missing semantic relationships that more advanced embedding-based models capture. Because inference starts from random assignments, results can also vary between runs on identical data unless the random seed is fixed.

For maximum effectiveness, LDA implementations should include coherence score optimization to determine the ideal topic count and thorough text data preprocessing, including stopword removal and lemmatization.
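As a rough illustration of coherence-based selection, the sketch below scores topics with the UMass coherence measure, which rewards top words that tend to appear in the same documents. UMass is only one of several coherence measures, and everything named here (`umass_coherence`, `mean_coherence`, the sample corpus, and the two candidate models' top words) is a hypothetical stand-in for output you would obtain by fitting LDA at several topic counts.

```python
import math
from itertools import combinations


def umass_coherence(top_words, docs):
    """UMass coherence for one topic; higher (closer to 0 or above) is better.

    top_words must be ordered from most to least probable, and every word
    is assumed to occur in at least one document.
    """
    doc_sets = [set(doc) for doc in docs]

    def doc_freq(*words):
        # Number of documents that contain all of the given words.
        return sum(all(w in s for w in words) for s in doc_sets)

    score = 0.0
    for i, j in combinations(range(len(top_words)), 2):
        earlier, later = top_words[i], top_words[j]
        score += math.log((doc_freq(earlier, later) + 1) / doc_freq(earlier))
    return score


def mean_coherence(topics, docs):
    """Average coherence across all topics of one fitted model."""
    return sum(umass_coherence(t, docs) for t in topics) / len(topics)


# Hypothetical preprocessed corpus (stopwords removed, tokens lemmatized).
docs = [
    ["refund", "payment", "invoice"],
    ["invoice", "billing", "payment"],
    ["login", "password", "account"],
    ["account", "password", "reset"],
]

# Hypothetical top words from models fitted with 2 and 3 topics.
candidates = {
    2: [["payment", "invoice", "refund"], ["password", "account", "login"]],
    3: [["payment", "password", "account"], ["invoice", "login"], ["refund", "reset"]],
}

best_k = max(candidates, key=lambda k: mean_coherence(candidates[k], docs))
print(best_k)
```

The same loop extends to any range of topic counts: fit one model per count, extract its top words, and keep the count with the best average score.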

Real-world application of LDA

A prominent real-world application comes from The New York Times, which employs LDA as a cornerstone of their recommendation system, modeling articles as mixtures of underlying topics. Their Algorithmic Recommendations team compares topic distributions between a user’s reading history and potential articles to identify relevant content. While effective, NYT discovered LDA’s limitations—particularly its inability to align with predefined interest categories—which led them to develop a hybrid approach combining LDA with supervised classification for more precise content recommendations.
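To make the idea of comparing topic distributions concrete, here is a minimal sketch that ranks candidate articles by cosine similarity to a reader's averaged topic mixture. It is not The New York Times' actual implementation: the similarity measure, the averaging step, the function names, and the three-topic vectors are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(history, candidates, top_n=2):
    """Rank candidate articles by similarity to the reader's average topic mix."""
    num_topics = len(history[0])
    profile = [sum(doc[k] for doc in history) / len(history) for k in range(num_topics)]
    ranked = sorted(
        candidates,
        key=lambda title: cosine_similarity(profile, candidates[title]),
        reverse=True,
    )
    return ranked[:top_n]


# Hypothetical topic mixtures over (politics, sports, technology).
history = [[0.7, 0.1, 0.2], [0.6, 0.0, 0.4]]
candidates = {
    "election-analysis": [0.8, 0.1, 0.1],
    "transfer-window": [0.05, 0.9, 0.05],
    "chip-export-rules": [0.5, 0.0, 0.5],
}
print(recommend(history, candidates))
```

With these made-up numbers, the politics-heavy and technology-heavy articles rank above the sports article, mirroring the reader's history.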

NMF (Non-negative Matrix Factorization)

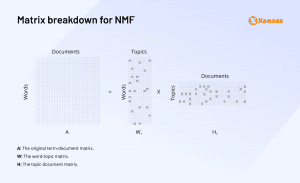

Non-negative Matrix Factorization (NMF) stands as a powerful alternative within the family of traditional topic modeling approaches, offering distinct advantages through its mathematical framework. Unlike LDA’s probabilistic approach, NMF uses linear algebra to decompose high-dimensional data into non-negative, lower-dimensional, and often more interpretable representations.

At its core, NMF works by breaking down a document-term matrix into two simpler matrices: one representing documents by topics and another representing topics by terms. The non-negativity constraint ensures that no value drops below zero, so each topic can only add to a document's representation and never subtract from it. This makes the results more intuitive to interpret than methods that allow negative values, and it tends to produce coherent topics in which related words clearly cluster together.
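The decomposition can be sketched in plain Python using multiplicative update rules, a classic way to fit NMF. This is a toy written for clarity rather than speed, and the helper names, the six-term vocabulary, and the count matrix `V` are assumptions for illustration; real projects would typically rely on a numerical library.

```python
import random


def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(M):
    return [list(col) for col in zip(*M)]


def frobenius_error(V, W, H):
    """Reconstruction error between V and the product W x H."""
    WH = matmul(W, H)
    return sum((v - wh) ** 2 for rv, rwh in zip(V, WH) for v, wh in zip(rv, rwh)) ** 0.5


def nmf(V, num_topics, iterations=200, seed=0, eps=1e-9):
    """Factor V (documents x terms) into W (documents x topics) and H (topics x terms)."""
    rng = random.Random(seed)
    n_docs, n_terms = len(V), len(V[0])
    W = [[rng.random() for _ in range(num_topics)] for _ in range(n_docs)]
    H = [[rng.random() for _ in range(n_terms)] for _ in range(num_topics)]

    for _ in range(iterations):
        # H <- H * (W^T V) / (W^T W H), element-wise.
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * n / (d + eps) for h, n, d in zip(hr, nr, dr)]
             for hr, nr, dr in zip(H, num, den)]
        # W <- W * (V H^T) / (W H H^T), element-wise.
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[w * n / (d + eps) for w, n, d in zip(wr, nr, dr)]
             for wr, nr, dr in zip(W, num, den)]
    return W, H


# Hypothetical document-term counts: two billing tickets, two login tickets.
terms = ["refund", "invoice", "billing", "login", "password", "reset"]
V = [
    [2, 1, 1, 0, 0, 0],
    [1, 2, 1, 0, 0, 0],
    [0, 0, 0, 2, 1, 1],
    [0, 0, 0, 1, 2, 1],
]
W, H = nmf(V, num_topics=2)
for k, row in enumerate(H):
    top = sorted(range(len(terms)), key=lambda j: row[j], reverse=True)[:3]
    print(k, [terms[j] for j in top])
print(round(frobenius_error(V, W, H), 3))
```

Because every update multiplies non-negative numbers, `W` and `H` never go negative, which is the property that keeps the resulting topics easy to read.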

Benefits of NMF

Business analysts frequently choose NMF over other approaches when dealing with shorter texts or when interpretability is paramount. The model excels at identifying clear, distinct topics with less overlap, making it particularly valuable for analyzing customer feedback, support tickets, and product reviews. Studies comparing NMF with LDA on review data consistently show that NMF produces more coherent and interpretable topic distributions.

NMF also offers computational advantages: it typically converges faster than probabilistic models like LDA and often requires less preprocessing. Its ability to handle sparse data efficiently makes it well suited to real-time applications where processing speed matters, such as analyzing incoming customer support tickets or monitoring social media sentiment.

Organizations should consider NMF when they need straightforward topic explanations for stakeholder presentations, when working with shorter documents like tweets or reviews, or when processing time constraints exist. While LDA might offer greater theoretical flexibility, NMF often delivers more immediately actionable insights with clearer separation between discovered topics.

Top2Vec

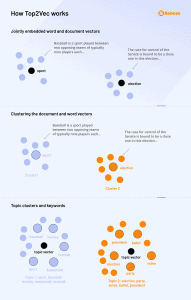

Top2Vec represents a significant evolution in topic modeling by leveraging the power of word and document embeddings. Unlike traditional approaches that rely on word frequency counts, Top2Vec transforms text into rich vector representations that capture semantic relationships between words and documents.

The embedding-based approach offers several key advantages. Top2Vec excels at understanding context and meaning rather than simply counting word occurrences. This semantic awareness allows it to detect nuanced topics even when specific terminology varies across documents. The algorithm automatically determines the optimal number of topics by identifying high-density regions in the semantic space, eliminating the need for manual parameter tuning that plagues older methods.
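One way to picture how an embedding-based model turns dense regions into readable topics: average the document vectors in each cluster to get a topic vector, then list the words whose vectors lie closest to it. The sketch below assumes the clustering has already been done by a density-based algorithm and uses made-up two-dimensional vectors; the function names, labels, and embeddings are hypothetical, and real embeddings have hundreds of dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def topic_words(doc_vectors, labels, word_vectors, top_n=2):
    """For each cluster, average its document vectors and return the nearest words."""
    topics = {}
    for label in sorted(set(labels)):
        members = [v for v, l in zip(doc_vectors, labels) if l == label]
        # The topic vector is the centroid of the cluster's documents.
        centroid = [sum(dim) / len(members) for dim in zip(*members)]
        ranked = sorted(
            word_vectors,
            key=lambda w: cosine(centroid, word_vectors[w]),
            reverse=True,
        )
        topics[label] = ranked[:top_n]
    return topics


# Hypothetical 2-D embeddings that share one semantic space.
doc_vectors = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
labels = [0, 0, 1, 1]  # assumed output of a density-based clusterer
word_vectors = {
    "refund": [1.0, 0.0],
    "invoice": [0.9, 0.2],
    "login": [0.0, 1.0],
    "password": [0.2, 0.9],
}
topics = topic_words(doc_vectors, labels, word_vectors)
print(topics)
```

The number of topics here is simply the number of clusters found, which is why no topic count needs to be supplied in advance.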

Benefits of Top2Vec

Top2Vec particularly shines when analyzing short-form content like social media posts or customer reviews—an area where traditional models like LDA often fall short. Its semantic embeddings capture meaning beyond word frequency, making it ideal for texts with sparse or variable vocabulary. Research comparing Top2Vec with traditional methods shows it produces more coherent topics for these shorter texts, grouping semantically related content even when terminology differs.

For businesses considering implementation, Top2Vec works seamlessly with modern NLP pipelines and integrates well with visualization tools. The model requires minimal preprocessing compared to LDA or NMF: because it works from learned embeddings rather than raw word counts, stopword removal and lemmatization are generally unnecessary. Organizations already using word embeddings for other applications will find Top2Vec a natural extension to their existing infrastructure.

When interpretability and the detection of emerging topics matter more than computational efficiency, Top2Vec offers a compelling alternative to traditional topic modeling approaches.

Limitations of Top2Vec

Implementing Top2Vec involves several considerations. The model demands more computational resources than traditional approaches, particularly for large document collections, and transformer-based embedding models benefit from adequate GPU capacity when processing substantial datasets. Top2Vec offers flexibility in embedding options, supporting Doc2Vec, Universal Sentence Encoder, or BERT embeddings, each with different trade-offs between speed and accuracy.

BERTopic

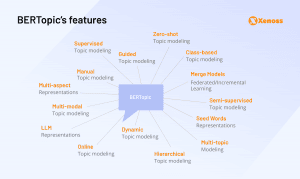

BERTopic represents a major advancement in topic modeling, leveraging transformer-based language models to overcome the limitations of traditional techniques. Unlike LDA or NMF, BERTopic harnesses BERT embeddings and class-based TF-IDF to identify dense clusters within document collections. The algorithm follows a sophisticated four-step process:

- Text embedding: BERTopic converts documents into dense vector embeddings through an embedding backend such as Sentence Transformers, spaCy, or Gensim. With its default English sentence-transformer model (all-MiniLM-L6-v2), each document becomes a 384-dimensional vector that captures deep semantic relationships.

- Dimensionality reduction: UMAP compresses these vectors into a far lower-dimensional space (five dimensions by default) while preserving the relationships between documents.

- Clustering: HDBSCAN identifies high-density regions in the document space, grouping semantically similar content into cohesive topics.

- Topic representation: Class-based TF-IDF extracts the most characteristic terms of each cluster, providing clear, interpretable labels for each topic.
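The final step of the process above, class-based TF-IDF, can be sketched without any external libraries. The idea is to treat each cluster as one long document, then weight a term by how frequent it is inside the cluster relative to how common it is across all clusters. The function name `class_tfidf` and the two sample clusters are assumptions for illustration; the weighting follows the formula described in the BERTopic documentation, but this is a simplified sketch rather than the library's code.

```python
import math
from collections import Counter


def class_tfidf(clusters, top_n=2):
    """clusters maps a cluster id to the tokenized documents assigned to it."""
    # Treat every cluster as one long document and count its terms.
    term_counts = {
        c: Counter(tok for doc in docs for tok in doc)
        for c, docs in clusters.items()
    }
    total_counts = Counter()
    for counts in term_counts.values():
        total_counts.update(counts)
    # Average number of words per cluster.
    avg_words = sum(total_counts.values()) / len(term_counts)

    topics = {}
    for c, counts in term_counts.items():
        n_words = sum(counts.values())
        scores = {
            # Within-cluster frequency times a penalty for corpus-wide terms.
            term: (tf / n_words) * math.log(1 + avg_words / total_counts[term])
            for term, tf in counts.items()
        }
        topics[c] = sorted(scores, key=scores.get, reverse=True)[:top_n]
    return topics


# Hypothetical clusters, as if produced by the embedding and clustering steps.
clusters = {
    "billing": [["refund", "the", "invoice"], ["invoice", "the", "payment", "refund"]],
    "access": [["login", "the", "password"], ["password", "the", "reset", "login"]],
}
topics = class_tfidf(clusters)
print(topics)
```

Note that the filler word "the" is just as frequent as the top terms within each cluster, yet it drops out of the labels because it appears in both clusters.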

This transformer-based approach delivers significant performance advantages. Like Top2Vec, BERTopic handles short texts exceptionally well, addressing the limitations seen in older models such as LDA and NMF, making it ideal for analyzing social media content, customer comments, and other brief communications. Research consistently shows BERTopic outperforming older models in both quantitative metrics and the interpretability of results.

The model automatically determines the optimal number of topics without extensive parameter tuning and generates remarkably coherent and clear topic clusters. This enables businesses to quickly identify emerging customer feedback themes, track changing market sentiments, and organize large document collections more effectively. As numerous comparative studies indicate, BERTopic represents the cutting edge of topic modeling capabilities, particularly for companies dealing with diverse, modern text sources that traditional approaches struggle to interpret effectively.

LSA & pLSA

Latent Semantic Analysis (LSA) emerged in the late 1980s as a groundbreaking matrix factorization technique, establishing the first framework for discovering hidden semantic relationships in text. This pioneering approach uses singular value decomposition (SVD) to transform document-term matrices into lower-dimensional semantic spaces where connections between words and documents become apparent.

Probabilistic Latent Semantic Analysis (pLSA) followed in 1999, introducing statistical rigor through a mixture decomposition derived from latent class models. While LSA relied on linear algebra to reduce the dimensionality of word-document co-occurrence tables, pLSA brought a probabilistic framework that could estimate the likelihood of certain words appearing within specific topics.

Despite newer algorithms like LDA, NMF, and transformer-based models dominating contemporary applications, LSA and pLSA retain significant relevance. Many production systems still implement these methods for concept searching, automated document categorization, and information retrieval tasks where computational efficiency matters more than semantic precision.

Financial services and healthcare organizations particularly value these established models for their interpretability and lower computational demands. LSA remains effective for analyzing longer-form content like academic papers and detailed documentation, while companies processing large-scale datasets often deploy pLSA as a preliminary step before applying more resource-intensive techniques.

The enduring utility of these foundational methods highlights an important principle in text analytics: sometimes, the most established techniques deliver the most practical value.

Selecting the right approach for your business needs

As explored throughout this article, no single topic modeling approach offers a universal solution for all business text analysis challenges. The most effective strategy often involves selecting the appropriate technique based on your specific data characteristics, business objectives, and available computational resources.

For longer documents with well-defined vocabulary, traditional approaches like LDA and NMF often provide reliable results with greater computational efficiency. For shorter texts and applications requiring more nuanced semantic understanding, embedding-based approaches like Top2Vec and transformer-based methods like BERTopic offer superior performance despite their greater computational demands.

Many organizations find success in implementing multiple complementary techniques. For example, they might use LSA or pLSA as an initial processing step for large document collections, then apply more sophisticated models like BERTopic to specific subsets requiring deeper analysis. This hybrid approach balances computational efficiency with analytical depth.

The rapidly evolving field of topic modeling continues to produce new techniques and refinements. Organizations that strategically integrate these approaches into their text analytics pipeline position themselves to extract maximum value from their unstructured text data, transforming information overload into actionable business intelligence.

Ready to learn how businesses apply these techniques in real-world scenarios? Continue to part 3: Business applications and case studies of topic modeling. Missed the foundations? Go back to part 1: Topic modeling for business: Introduction and key distinctions.