Financial crime is a growing concern for financial institutions. Banking leaders are increasing spending on detection tools and KYC algorithms by 10% annually, yet these methods aren’t keeping pace with evolving fraud techniques.

According to PwC, EU-based banks are submitting 9.4% fewer suspicious activity reports despite a steady rise in fraud attempts, suggesting that more crimes are going undetected.

To close this gap, banks are exploring machine learning capabilities to enhance legacy detection systems.

In this post, we examine how malicious actors use AI to develop advanced fraud techniques, the technologies engineering teams can deploy in response, and key challenges to consider when implementing AI-enabled fraud detection.

Impact of financial fraud on banks

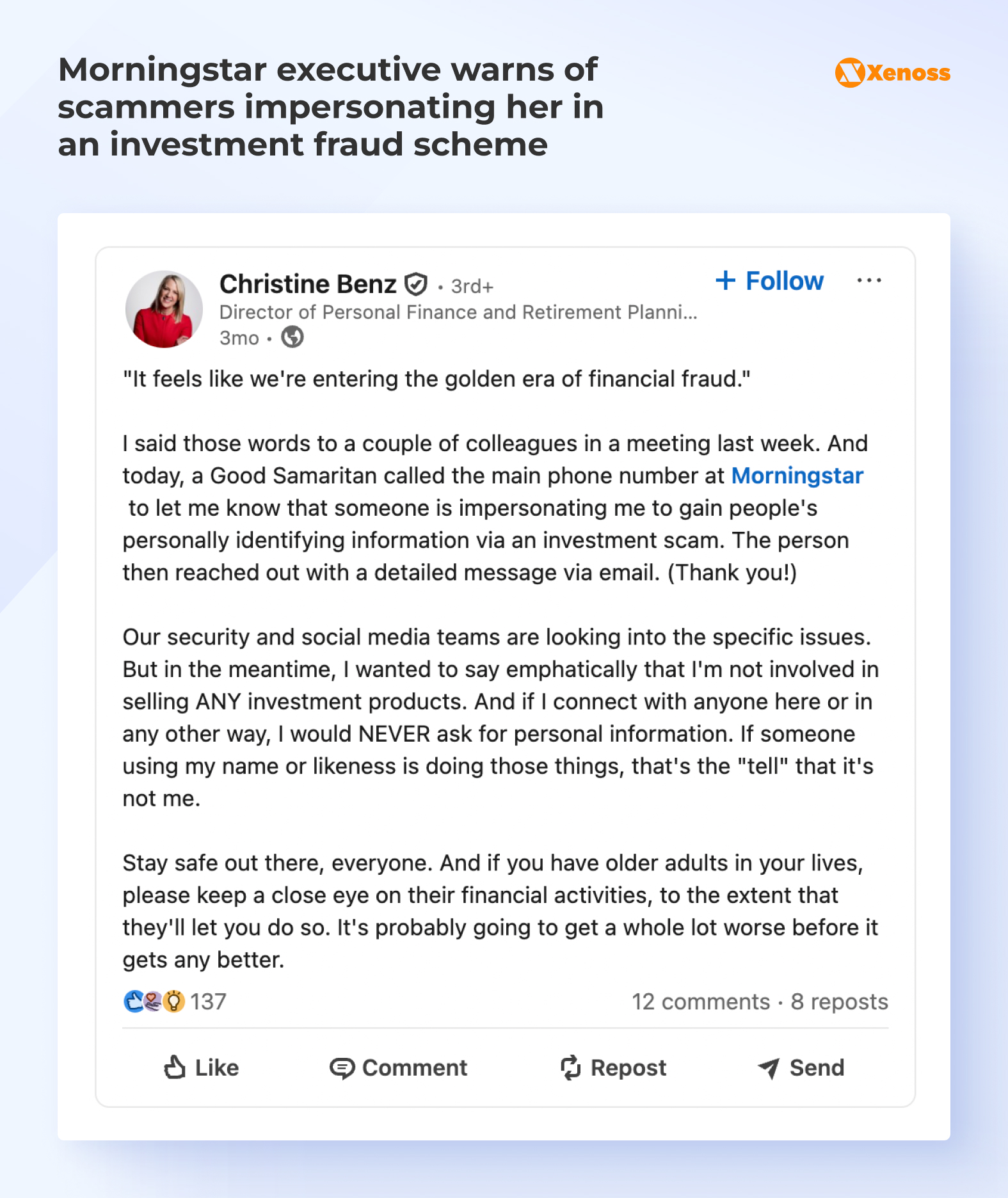

Christine Benz, Director of Personal Finance and Retirement Planning at Morningstar, recently shared on LinkedIn how scammers were using her personal data to lure consumers into bogus investments, just as she was warning her team about impersonation fraud.

Market data reinforces her point: the scale and impact of financial crime are rising sharply.

In the US, consumers lose over $12 billion annually to identity fraud and other scams. In the UK, fraud accounts for 41% of all crime, costing the country over £6.8 billion per year.

As executives brace for more frequent and sophisticated fraud attempts, many are recognizing that existing systems can’t keep pace. Currently, only 23% of banking executives believe they have reliable programs to counter financial fraud risks. In the coming years, concerns about low fraud detection effectiveness are likely to grow as financial crime becomes increasingly AI-assisted and harder to detect.

AI is transforming common types of fraud

Fraud detection teams are under constant pressure to keep pace with rapidly evolving scam techniques. The rise of generative AI in financial crime is blurring the line between bot behavior and authentic user activity, often to the point where the two are nearly impossible to tell apart.

The latest generative models, such as GPT-4o and Sora, make traditional schemes like phone and email phishing more effective and harder to spot, while also enabling entirely new scam techniques.

| Fraud type | How it works | How AI changes it |
|---|---|---|
| Authorized push payment (APP) scams | The victim is persuaded to authorize a transfer to a criminal-controlled account. | GenAI enables highly tailored messages at scale; deepfake “bank or police” calls increase compliance; bots can coach victims in real time. |
| Investment and crypto scams | Fake advisors or platforms convince victims to deposit money into bogus products. | Deepfake endorsements and synthetic “experts” create instant credibility; GenAI produces convincing pitch decks, dashboards, and support chats; narratives can be iterated faster. |
| BEC / invoice fraud | A “vendor” or “exec” asks to change bank details or approve a payment. | Voice cloning and deepfakes help bypass verbal verification; GenAI mimics tone and thread context. |
| Account takeover (ATO) | The attacker takes over a real user account and drains funds or changes details. | AI helps pick the best targets, mimics human behavior to evade rules, and combines synthetic identity elements to keep access. |
| Synthetic identity fraud | A “new person” is stitched together from real and fake identity data to open accounts. | Deepfakes and GenAI-made documents reduce friction in onboarding; easier, cheaper, higher-volume attempts pressure KYC workflows. |
| Document forgery (KYC, loan, claims) | Counterfeit or altered documents are used to pass checks or trigger payouts. | Generative media increases fidelity; rapid variant generation defeats template checks; forged-document activity has reportedly risen sharply. |
| Card-not-present (CNP) fraud | Stolen card details are used for online purchases. | GenAI boosts phishing and social engineering that harvests credentials and supports more efficient “testing” and merchant-specific scripting. |
| Contact-center / call impersonation | Fraudster calls support to reset access, change payout details, or approve transfers. | Voice cloning and conversational agents sustain longer, more believable interactions and run multi-step scripts with less human effort. |
| Mule networks and laundering | Stolen funds are moved through intermediaries to cash out and hide traces. | AI-assisted ops can scale recruiting, messaging, and adaptive routing as accounts get flagged or frozen. |

According to Signicat, deepfake attempts increased by 2,137% between 2021 and 2024. In a separate report, financial executives noted that 50% of all fraud attempts now involve AI, with 90% expressing particular concern about voice cloning.

More concerningly, banks are adopting AI more slowly than the fraudsters themselves. Only 22% of surveyed institutions use any form of machine learning to detect financial crime.

To counter these advanced threats, banks and financial institutions need to embrace AI and predictive analytics, not only to improve detection accuracy but also to ease the burden on financial crime teams, which are now processing a deepfake attempt every 5 minutes on average.

AI technologies banks can use for fraud detection

Real-time predictive analytics for risk scoring

Fraud types it helps detect

- Card-not-present payment fraud

- Authorized push payment scams

- Synthetic identity fraud

- Account takeover–driven transfers

- Merchant or transaction laundering patterns

Predictive analytics for transaction risk scoring is the workhorse of modern fraud detection.

Engineering teams train supervised ML models on datasets that include labeled historical fraud logs, expert annotations, and chargeback outcomes. These models are then deployed to classify new events as normal or suspicious in real time.
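To make the train-then-score loop concrete, here is a minimal sketch of a supervised classifier: a logistic regression fitted with plain batch gradient descent on a handful of labeled transactions, then used to score a new event. The three features, the toy data, and the 0.5 decision threshold are illustrative assumptions; production systems typically use gradient-boosted trees or neural networks trained on far larger labeled datasets.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(
    rows: Sequence[Sequence[float]],
    labels: Sequence[int],
    lr: float = 0.1,
    epochs: int = 5000,
) -> Tuple[List[float], float]:
    """Fit weights and bias by batch gradient descent on log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error for one labeled example (1 = confirmed fraud)
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def fraud_probability(x: Sequence[float], weights: Sequence[float], bias: float) -> float:
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical features: [amount / customer's typical amount, txns in last hour, new device (0/1)]
history = [
    [0.9, 1, 0], [1.1, 2, 0], [0.8, 1, 0], [1.2, 1, 0],  # legitimate
    [6.0, 7, 1], [4.5, 5, 1], [8.0, 9, 0], [5.5, 6, 1],  # labeled fraud (e.g., chargebacks)
]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_logistic(history, labels)
p = fraud_probability([5.0, 6, 1], weights, bias)
print("suspicious" if p >= 0.5 else "normal", round(p, 3))
```

In a real pipeline, training happens offline on historical logs, and only the scoring function runs in the payment path, which keeps real-time latency low.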

Transaction scoring models combine multiple signal types: transaction attributes (amounts, velocity, merchants), customer context (tenure, typical behavior), and channel data (device, session) to reduce false positives and catch subtle fraud patterns.
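The three signal families above are usually flattened into one feature vector per event before scoring. The sketch below shows one hypothetical way to do that for a single payment. The `Payment` and `CustomerProfile` types, their field names, and the one-hour velocity window are assumptions for illustration, not a standard schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Set


@dataclass
class Payment:
    amount: float
    merchant: str
    device_id: str
    timestamp: datetime


@dataclass
class CustomerProfile:
    tenure_days: int
    past_payments: List[Payment] = field(default_factory=list)
    trusted_devices: Set[str] = field(default_factory=set)


def build_features(p: Payment, profile: CustomerProfile) -> Dict[str, float]:
    """Flatten transaction, customer-context, and channel signals into one vector."""
    amounts = [x.amount for x in profile.past_payments]
    typical = median(amounts) if amounts else p.amount
    window_start = p.timestamp - timedelta(hours=1)
    recent = [x for x in profile.past_payments if window_start <= x.timestamp < p.timestamp]
    known_merchants = {x.merchant for x in profile.past_payments}
    return {
        # Transaction attributes: size versus this customer's norm, velocity, merchant novelty
        "amount_vs_typical": p.amount / typical if typical > 0 else 1.0,
        "velocity_1h": float(len(recent)),
        "new_merchant": float(p.merchant not in known_merchants),
        # Customer context
        "tenure_days": float(profile.tenure_days),
        # Channel data
        "new_device": float(p.device_id not in profile.trusted_devices),
    }


now = datetime(2024, 5, 1, 12, 0)
profile = CustomerProfile(
    tenure_days=900,
    past_payments=[Payment(40.0, "grocer", "phone-1", now - timedelta(days=d)) for d in range(1, 6)],
    trusted_devices={"phone-1"},
)
features = build_features(Payment(400.0, "crypto-exchange", "laptop-9", now), profile)
print(features)  # amount_vs_typical=10.0, velocity_1h=0.0, new_merchant=1.0, new_device=1.0
```

No single feature here is conclusive; a large payment from a long-tenured customer on a trusted device looks very different to the model than the same amount from a new device to a new merchant.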

By improving detection at the first line of defense with fewer unnecessary declines, they directly protect both revenue and customer trust.

Real-world example: NatWest

Approach: NatWest, one of the UK’s largest retail and commercial banking groups, upgraded its payment-fraud controls to a real-time transaction risk-scoring platform built on adaptive machine learning models. The system learns normal behavior at the individual-customer level, integrates contextual signals like device profiling, and uses this data to accurately flag anomalous payments.

Outcome: The rollout delivered immediate, measurable gains, including a 135% increase in the value of scams detected and a 75% reduction in scam false positives. Across fraud more broadly, NatWest reported a 57% improvement in the value of fraud detected and a 40% reduction in overall fraud false positives.
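Learning “normal behavior at the individual-customer level” can be illustrated with a per-customer running baseline. The following is not NatWest’s system. It is a minimal sketch, assuming a single signal (payment amount), Welford’s online algorithm for the running mean and variance, and a hypothetical z-score threshold of 4, that scores how far a new payment sits from that customer’s own history.

```python
import math
from collections import defaultdict


class CustomerBaseline:
    """Running mean and variance for one customer (Welford's online algorithm)."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # Sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def zscore(self, x: float) -> float:
        if self.count < 2:
            return 0.0  # Not enough history to judge
        std = math.sqrt(self.m2 / (self.count - 1))
        return 0.0 if std == 0 else (x - self.mean) / std


baselines = defaultdict(CustomerBaseline)


def score_payment(customer_id: str, amount: float, threshold: float = 4.0) -> bool:
    """Flag a payment that deviates strongly from this customer's own history."""
    baseline = baselines[customer_id]
    flagged = abs(baseline.zscore(amount)) > threshold
    if not flagged:
        baseline.update(amount)  # Keep suspected fraud out of the baseline until reviewed
    return flagged


for amt in [50, 55, 48, 52, 60, 45]:
    score_payment("cust-42", amt)
print(score_payment("cust-42", 2500))  # True: far outside this customer's usual range
```

The same 2,500 payment might be routine for a different customer, which is the point of per-customer baselines: the threshold is relative to each person’s history rather than a global amount limit.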

Graph ML and identity resolution

Fraud types it helps detect

- Money mule networks

- Collusive fraud rings

- Shell-company laundering structures

- Linked synthetic identities

- Trade-based laundering networks

Financial fraud teams can use graph analytics to model financial crime as a network of entities (customers, accounts, devices, counterparties) connected by relationships (transfers, shared devices, common addresses, beneficial ownership).
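A minimal sketch of that network view, assuming a toy edge list: accounts and devices are nodes, and transfers or a shared device create edges. Connected components then group entities that are linked directly or indirectly. This is basic network analytics rather than graph ML; the node naming scheme and the four-account threshold are illustrative assumptions, and real graph platforms add edge types, weights, and time windows.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set, Tuple


def build_graph(edges: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    graph: Dict[str, Set[str]] = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)  # Treat relationships as undirected for clustering
    return graph


def connected_components(graph: Dict[str, Set[str]]) -> List[Set[str]]:
    """Breadth-first search from every unvisited node."""
    seen: Set[str] = set()
    components: List[Set[str]] = []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        component: Set[str] = set()
        while queue:
            node = queue.popleft()
            component.add(node)
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components


# Hypothetical relationships: transfers between accounts, and accounts sharing one device
transfers = [("acct:A", "acct:B"), ("acct:B", "acct:C"), ("acct:X", "acct:Y")]
shared_devices = [("acct:C", "device:D1"), ("acct:E", "device:D1"), ("acct:F", "device:D1")]

graph = build_graph(transfers + shared_devices)
for comp in connected_components(graph):
    accounts = sorted(n for n in comp if n.startswith("acct:"))
    if len(accounts) >= 4:  # Illustrative threshold for a possible ring
        print("review cluster:", accounts)
```

Accounts E and F never transact with A, B, or C, yet the shared device places all five in one cluster, which is the kind of link a transaction-by-transaction view would miss.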

Here’s how graph ML improves transaction profiling:

- Entity resolution. Graph ML algorithms deduplicate and link records that represent the same real-world entity across messy, siloed datasets.

- Behavioral mapping. Creating a graph of all actions linked to a single customer helps distinguish normal behavior from suspicious activity.

- Pattern detection. Once a reliable graph exists, graph features and graph ML techniques (including graph embeddings and GNNs) expose coordinated behavior that appears normal in isolation but suspicious when viewed across the network.
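Entity resolution, the first step above, can be sketched with name normalization plus union-find. Records that share a normalized name and date of birth, or an exact phone number, are merged into one entity. The matching keys, field names, and sample records are assumptions for illustration; production systems typically use probabilistic or learned matchers rather than exact keys.

```python
import re
import unicodedata
from typing import Dict, List


def normalize_name(name: str) -> str:
    # Strip accents, lowercase, drop punctuation, sort tokens ("ALVAREZ, Jose" == "José Álvarez")
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode()
    tokens = re.sub(r"[^a-z ]", " ", ascii_name.lower()).split()
    return " ".join(sorted(tokens))


class UnionFind:
    def __init__(self, n: int) -> None:
        self.parent = list(range(n))

    def find(self, i: int) -> int:
        while self.parent[i] != i:
            self.parent[i] = self.parent[self.parent[i]]  # Path halving
            i = self.parent[i]
        return i

    def union(self, i: int, j: int) -> None:
        self.parent[self.find(i)] = self.find(j)


def resolve(records: List[Dict[str, str]]) -> List[List[int]]:
    """Group record indices that refer to the same real-world person."""
    uf = UnionFind(len(records))
    first_seen: Dict[tuple, int] = {}
    for idx, rec in enumerate(records):
        keys = [("name_dob", normalize_name(rec["name"]), rec["dob"])]
        if rec.get("phone"):
            keys.append(("phone", rec["phone"]))
        for key in keys:
            if key in first_seen:
                uf.union(idx, first_seen[key])
            else:
                first_seen[key] = idx
    groups: Dict[int, List[int]] = {}
    for idx in range(len(records)):
        groups.setdefault(uf.find(idx), []).append(idx)
    return list(groups.values())


records = [
    {"name": "José Álvarez", "dob": "1985-03-02", "phone": "+4470000001"},  # core banking
    {"name": "ALVAREZ, Jose", "dob": "1985-03-02", "phone": ""},            # card system
    {"name": "J. Alvarez", "dob": "1985-03-02", "phone": "+4470000001"},    # linked by phone
    {"name": "Maria Chen", "dob": "1979-11-30", "phone": "+4470000002"},
]
print(resolve(records))  # [[0, 1, 2], [3]]
```

Union-find makes the linking transitive: record 1 never shares a phone with record 2, but both end up in the same entity through record 0.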

Real-world example: HSBC

Approach: HSBC, one of the world’s largest multinational banks, adopted graph ML and entity-resolution technology to modernize its financial crime detection stack across AML and fraud use cases.

Engineers unified fragmented internal and external datasets (customers, accounts, counterparties, corporate registries, and transactions) into a single, continuously updated entity graph.

Advanced entity resolution linked records referring to the same real-world person or organization, while network analytics and graph-based features exposed hidden relationships, mule networks, and complex laundering structures that transaction-by-transaction analysis would miss.

Outcome: Following the rollout, HSBC reported £4 million in potential cost savings from replacing its incumbent system while improving analytical depth and investigative efficiency.

By providing investigators with a contextual, network-level view of risk, the bank reduced manual reconciliation effort, accelerated case resolution, and scaled financial crime monitoring more efficiently across regions and business lines.

Unsupervised anomaly detection for anti-money laundering

Fraud types it helps detect

- Novel money laundering typologies

- Suspicious SWIFT and correspondent patterns

- Trafficking- and exploitation-linked flows

- Structuring and smurfing behaviors

- Previously unseen scam “playbooks”

Unsupervised anomaly detection learns baseline “normal” behavior from data without requiring labeled fraud examples.

Semi-supervised approaches combine this with limited labels to improve precision.

Both are valuable in AML, where labeled data is sparse and typologies evolve faster than rule-based systems can adapt.
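As a minimal illustration of learning “normal” without labels, the sketch below scores each account’s daily cash-deposit total with a modified z-score based on the median and the median absolute deviation (MAD). This is a deliberately simple stand-in for the unsupervised models banks actually deploy (such as isolation forests, autoencoders, or clustering). The 0.6745 constant and the 3.5 cutoff follow the Iglewicz and Hoaglin rule of thumb; the account data is hypothetical.

```python
from statistics import median
from typing import Dict, List


def robust_zscores(values: List[float]) -> List[float]:
    """Modified z-scores: 0.6745 * (x - median) / MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]


def flag_outliers(daily_totals: Dict[str, float], cutoff: float = 3.5) -> List[str]:
    # No fraud labels are used: "normal" is simply what most accounts look like
    accounts = list(daily_totals)
    scores = robust_zscores([daily_totals[a] for a in accounts])
    return [a for a, s in zip(accounts, scores) if abs(s) > cutoff]


totals = {"a1": 900, "a2": 1100, "a3": 1000, "a4": 950, "a5": 1050, "a6": 48000}
print(flag_outliers(totals))  # ['a6']
```

The median and MAD are used instead of the mean and standard deviation because a single extreme account would otherwise inflate the baseline it is being compared against.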

In practice, unsupervised anomaly detection surfaces emerging patterns earlier and reduces reliance on brittle rules. By shrinking false positives, it also cuts case queues and the volume of alerts that need human review.

Real-world example: Santander

Approach: Santander, a global banking group based in Spain, integrated an unsupervised anomaly detection solution into its transaction monitoring to enhance AML and financial crime screening across its operations.

Rather than relying on static thresholds and rules, the system models normal behavioral patterns across millions of transactions and flags statistical deviations that could indicate complex criminal activity, particularly typologies that traditional systems struggle with, such as human-trafficking-linked payment patterns and subtle money flows.

The AI ingests historic and ongoing transaction data to establish dynamic behavioral baselines, enabling earlier detection of abnormal sequences that would otherwise blend into noise under legacy rule-based systems.

Outcome: By deploying unsupervised anomaly detection, Santander achieved significant reductions in false positives. In some jurisdictions, the bank saw over 500,000 fewer unnecessary alerts per year.
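Dynamic baselines that keep adapting as new data arrives can be sketched with an exponentially weighted moving average (EWMA) of the mean and variance. This is a simplified technique chosen for illustration, not a description of Santander’s solution; `alpha`, `warmup`, `cutoff`, and the weekly transfer counts are hypothetical.

```python
import math
from typing import Iterable, List, Optional


def ewma_anomalies(
    series: Iterable[float], alpha: float = 0.1, cutoff: float = 4.0, warmup: int = 5
) -> List[int]:
    """Return indices whose value deviates sharply from the evolving EWMA baseline."""
    mean: Optional[float] = None
    var = 0.0
    flagged: List[int] = []
    for i, x in enumerate(series):
        if mean is None:
            mean = x  # Initialize the baseline with the first observation
            continue
        std = math.sqrt(var)
        if i >= warmup and std > 0 and abs(x - mean) / std > cutoff:
            flagged.append(i)
            continue  # Do not let the anomaly drag the baseline toward itself
        # Incremental exponentially weighted updates of mean and variance
        diff = x - mean
        incr = alpha * diff
        mean += incr
        var = (1 - alpha) * (var + diff * incr)
    return flagged


weekly_transfers = [20, 22, 19, 21, 23, 20, 22, 21, 60, 22, 24]
print(ewma_anomalies(weekly_transfers))  # [8]
```

Because older observations decay geometrically, the baseline follows gradual, legitimate changes in a customer’s behavior while still reacting to abrupt jumps.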

NLP for screening, KYC/AML enrichment, and alert triage (names, watchlists, adverse media, narratives)

Fraud types it helps detect

- Sanctions and watchlist evasion

- Identity fraud via aliasing and transliteration

- Hidden beneficial ownership signals in text

- Adverse-media-linked financial crime risk

- High-risk onboarding and KYC inconsistencies

NLP applies language models and text-mining methods to the unstructured data that fraud and compliance teams rely on: names, addresses, corporate registries, adverse media, and investigator notes.

Modern NLP approaches allow teams to learn from historical analyst decisions, generate consistent recommendations, and provide written rationales that speed up alert disposition.

A deeper understanding of context around customer interactions helps fraud detection systems produce fewer false matches, make faster screening decisions, and handle large volumes of multilingual, messy real-world identity data.
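Name screening is where transliteration and aliasing defeat exact string matching. The sketch below is an illustration under simplifying assumptions, not a production screening engine: it strips accents, normalizes token order, and uses the standard-library `difflib.SequenceMatcher` ratio as a similarity score against a toy watchlist. Real systems add phonetic encodings, transliteration tables, and trained matching models; the 0.85 threshold is hypothetical.

```python
import re
import unicodedata
from difflib import SequenceMatcher
from typing import List, Tuple


def normalize(name: str) -> str:
    # Remove diacritics, lowercase, drop punctuation, and sort tokens
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode()
    tokens = re.sub(r"[^a-z ]", " ", ascii_name.lower()).split()
    return " ".join(sorted(tokens))


def screen(name: str, watchlist: List[str], threshold: float = 0.85) -> List[Tuple[str, float]]:
    """Return watchlist entries whose normalized similarity meets the threshold."""
    query = normalize(name)
    hits = []
    for entry in watchlist:
        score = SequenceMatcher(None, query, normalize(entry)).ratio()
        if score >= threshold:
            hits.append((entry, round(score, 2)))
    return sorted(hits, key=lambda h: h[1], reverse=True)


watchlist = ["Mohammed Al-Rashid", "Ivan Petrov", "Li Wei"]
print(screen("AL RASHID, Muhammad", watchlist))  # [('Mohammed Al-Rashid', 0.89)]
```

Token sorting handles “surname, given name” ordering, and the fuzzy ratio absorbs spelling variants such as Muhammad and Mohammed that an exact match would miss.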

Real-world example: Standard Chartered

Approach: Standard Chartered, a major global bank, enhanced its financial crime compliance operations by integrating NLP and machine learning–based name screening and alert-triage technology into its sanctions, watchlist, and adverse-media screening workflows.

The system uses two key components:

- NLP models that interpret names, aliases, addresses, news, and watchlist sources

- Machine learning algorithms that replicate human screening decisions

It continuously learns from historical analyst decisions, enriches alerts with contextual signals, and generates explanations that help compliance teams understand and act on risks more quickly and consistently.

Outcome: After deployment across 40+ markets, the solution delivered dramatic reductions in manual workloads and false positives. The AI-driven screening system automatically resolves up to 95% of false positive alerts, enabling compliance teams to focus on genuinely suspicious matches rather than low-risk noise.
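One hypothetical way to “replicate human screening decisions” is to estimate, from historical analyst dispositions, how often alerts with the same secondary-identifier pattern turned out to be true matches, and to auto-close only patterns that analysts almost always dismissed. This is not Standard Chartered’s method; the two-field pattern, the minimum support of 50, and the 1% cutoff are illustrative assumptions. Every decision carries a written rationale for audit.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pattern = Tuple[bool, bool]  # (date of birth matches, country matches)


def learn_rates(history: List[Tuple[Pattern, bool]]) -> Dict[Pattern, Tuple[int, float]]:
    """Map each pattern to (number of past alerts, share confirmed as true matches)."""
    totals: Counter = Counter()
    confirmed: Counter = Counter()
    for pattern, was_true_match in history:
        totals[pattern] += 1
        confirmed[pattern] += int(was_true_match)
    return {p: (n, confirmed[p] / n) for p, n in totals.items()}


def triage(
    pattern: Pattern,
    rates: Dict[Pattern, Tuple[int, float]],
    min_support: int = 50,
    max_true_rate: float = 0.01,
) -> Tuple[str, str]:
    n, rate = rates.get(pattern, (0, 1.0))  # Unseen patterns are treated as risky
    dob, country = pattern
    if n >= min_support and rate <= max_true_rate:
        return ("auto-close", f"DOB match={dob}, country match={country}: analysts confirmed "
                f"{rate:.1%} of {n} similar alerts; closed as likely false positive.")
    return ("escalate", f"DOB match={dob}, country match={country}: {n} similar past alerts; "
            "routed to an analyst.")


# Hypothetical history: mismatched DOB and country were almost never true matches
history = ([((False, False), False)] * 400 + [((False, False), True)] * 2
           + [((True, True), True)] * 30 + [((True, True), False)] * 20)
rates = learn_rates(history)
print(triage((False, False), rates))
print(triage((True, True), rates))
```

Requiring a minimum number of past alerts keeps rare patterns with little analyst history in the human queue.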

AI agents for investigation automation

Fraud types it helps detect

- Sanctions screening alerts

- AML transaction-screening alerts

- Watchlist and PEP-related matches

- Cross-border payments linked to risk patterns

- High-risk customer and counterparty linkages surfaced during the investigation

Banks and financial institutions are increasingly implementing agentic workflows to handle end-to-end alert management.

AI agents can pull relevant customer and transaction context, evaluate whether an alert is likely a true match or false positive, generate a clear narrative explaining the rationale, and route the case while ensuring full auditability and human oversight.

In operational areas like alert triage and disposition, where volume and false positives overwhelm teams, agentic workflows reduce manual effort, standardize decisions, and accelerate time-to-resolution without weakening governance.
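The agentic flow described above (gather context, assess, write a narrative, route) can be outlined as a pipeline where every step writes to an audit log and anything above a low-risk threshold goes to a human. In this sketch the assessment step is a stub; a real deployment might call an LLM or a trained model there. The `Case` type, step functions, field names, and the 0.2 threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class Case:
    alert_id: str
    alert: Dict[str, object]
    context: Dict[str, object] = field(default_factory=dict)
    audit: List[str] = field(default_factory=list)

    def log(self, step: str, detail: str) -> None:
        ts = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.audit.append(f"{ts} {step}: {detail}")


def gather_context(case: Case, customer_db: Dict[str, Dict[str, object]]) -> None:
    case.context = customer_db.get(str(case.alert["customer_id"]), {})
    case.log("gather_context", f"fields={sorted(case.context)}")


def assess(case: Case) -> float:
    # Stub assessment; a real agent might call an LLM or a trained model here
    score = float(case.alert["match_score"])
    if case.context.get("dob") != case.alert.get("listed_dob"):
        score *= 0.5  # Secondary identifier disagrees with the watchlist entry
    case.log("assess", f"true_match_likelihood={score:.2f}")
    return score


def route(case: Case, likelihood: float, close_below: float = 0.2) -> str:
    # Closed cases stay eligible for human QA sampling; everything else goes to an analyst
    decision = "closed_pending_qa_sample" if likelihood < close_below else "human_review"
    narrative = (f"Alert {case.alert_id}: likelihood {likelihood:.2f} vs threshold "
                 f"{close_below}; decision {decision}.")
    case.log("route", narrative)
    return decision


customer_db = {"c1": {"dob": "1980-01-01", "country": "NO"}}
case = Case("A-1", {"customer_id": "c1", "match_score": 0.3, "listed_dob": "1975-05-05"})
gather_context(case, customer_db)
decision = route(case, assess(case))
print(decision)
print("\n".join(case.audit))
```

Keeping the audit trail inside the case object means the narrative and every intermediate judgment travel with the alert, which is what makes agent decisions reviewable after the fact.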

Real-world example: DNB

Approach: DNB, Norway’s largest financial services group, implemented intelligent AI agents to execute high-volume, compliance-critical work across financial crime and adjacent finance operations.

The company embedded hyper-specialized agents into pre-submission checks on stock transaction data and into AML-driven remediation actions, such as offboarding customers who failed to refresh required identification.

To boost efficiency, DNB augmented these agents with APIs, OCR for document scanning, and ML-based keyword search for customer communications.

Outcome: AI agents are now involved in 230 processes, have returned over 1.5 million hours to the business, and saved €70 million, while eliminating AML errors within the targeted automation scope.

In one AML-related remediation, 90 AI agents processed 500,000 customer accounts to offboard non-compliant customers in time to meet a government deadline.

Challenges and risks of using AI for fraud detection

Despite hundreds of successful implementations of machine learning and generative AI, financial institutions should not underestimate the risks of letting AI agents and detection systems process sensitive customer data.

Understanding these risks helps internal engineering teams develop contingency plans and maintain regulatory compliance.

Overblocking and false positives

Modern fraud detection models rely on anomaly detection and risk scoring across signals such as device fingerprinting, geolocation, transaction velocity, and behavioral deviation.

When these algorithms are tuned conservatively or when downstream decision rules collapse nuanced scores into binary outcomes, they can over-trigger transaction blocks.
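The cost of a conservative threshold can be quantified on a labeled validation set. The sketch below uses made-up scores and outcomes (assumptions for illustration) to show how lowering the blocking threshold raises recall but also the number of legitimate customers blocked.

```python
from typing import List, Tuple


def block_stats(scores: List[float], labels: List[int], threshold: float) -> Tuple[float, float, int]:
    """Return (recall, false-positive rate, legitimate customers blocked) at a threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    positives = sum(labels)
    negatives = len(labels) - positives
    return tp / positives, fp / negatives, fp


# Hypothetical validation data: model scores and confirmed outcomes (1 = fraud)
scores = [0.05, 0.10, 0.15, 0.20, 0.35, 0.40, 0.55, 0.60, 0.70, 0.90]
labels = [0, 0, 0, 0, 0, 1, 0, 1, 1, 1]

for t in (0.3, 0.5, 0.8):
    recall, fpr, blocked = block_stats(scores, labels, t)
    print(f"threshold={t}: recall={recall:.2f}, FPR={fpr:.2f}, legit blocked={blocked}")
```

On this toy data, moving from 0.5 to 0.3 catches the last fraud case but doubles the legitimate customers blocked, a trade-off that a single binary cut-off hides.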

The false positives generated by ML-enabled fraud detection tools may escalate to account freezes, interrupt legitimate access, and strain customer support and dispute handling.

In one such incident, Monzo, a UK-based online bank, blocked a customer’s account after its fraud detection systems flagged a new mobile device attempting access. The customer could not use their card or view their balance until they completed identity verification. To resolve the matter, Monzo paid 8% interest on the full account balance plus an additional £1,000 for the distress caused.

Isolated false positives may not cause significant monetary damage, but at scale, settling customer complaints and managing reputational fallout creates substantial operational and budget strain.

How to address this challenge: Organizations should accept some level of friction when applying transaction monitoring, but thoughtful implementation helps minimize negative impact.

Rather than initiating a full account freeze for a possible fraud attempt, institutions can implement softer verification methods.

Here are a few fallback strategies teams can implement:

- Confirming intent in-app

- Limiting transaction size or destination

- Placing temporary holds while checks run in the background
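Rather than mapping every risk score to a binary allow-or-freeze outcome, teams can map score bands to graduated responses like those listed above. The bands, the `new_payee` rule, and the action names below are hypothetical and would need calibration against each institution’s own loss and complaint data.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    CONFIRM_IN_APP = "confirm intent in-app"
    LIMIT = "limit amount or destination"
    HOLD = "temporary hold while checks run"
    BLOCK = "block and route to a human"


def choose_action(risk_score: float, new_payee: bool) -> Action:
    """Map a 0-1 risk score to a graduated response instead of a binary freeze."""
    if risk_score < 0.3:
        return Action.ALLOW
    if risk_score < 0.6:
        return Action.CONFIRM_IN_APP
    if risk_score < 0.8:
        # Money leaving to an unfamiliar payee is riskier, so cap it rather than only confirm
        return Action.LIMIT if new_payee else Action.CONFIRM_IN_APP
    if risk_score < 0.95:
        return Action.HOLD
    return Action.BLOCK


for score, payee in [(0.1, False), (0.5, True), (0.7, True), (0.9, False), (0.97, True)]:
    print(score, choose_action(score, payee).value)
```

Only the top band ends in a block, and even then the action routes the customer to a person rather than silently freezing the account.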

Operationally, institutions should support customers with clear explanations, predictable timelines, and a fast path to a human when automated checks fail.

Biometric and identity AI can be biased or inaccessible

Biometric checks such as selfie matching or liveness detection promise fast, low-friction identity verification. In practice, they don’t work equally well for everyone. Poor lighting, older devices, physical differences, or accessibility issues can all lead to repeated failures.

These rejections can propagate into onboarding and account recovery flows, disproportionately affecting certain customer segments and creating fairness and accessibility risks.

How to address this challenge: Treat biometrics as a convenience, not a bottleneck. Banks should account for potential malfunctions by offering alternatives that let customers proceed with authentication or transactions.

Fallback paths include:

- document checks

- verified bank credentials

- assisted reviews

To improve customer experience across the authentication process, organizations should communicate upfront that these alternatives exist.

Additionally, financial institutions should monitor biometric check performance to identify failure conditions and adjust flows accordingly.
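Monitoring biometric performance can start with failure rates by segment. The segment labels, the sample log, and the 1.5× alert ratio below are assumptions for illustration; a real program would also check that differences are statistically meaningful before changing flows.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def failure_rates(events: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """events: (segment, passed) pairs taken from biometric check logs."""
    attempts: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for segment, passed in events:
        attempts[segment] += 1
        failures[segment] += int(not passed)
    return {s: failures[s] / attempts[s] for s in attempts}


def segments_to_review(events: Iterable[Tuple[str, bool]], ratio: float = 1.5) -> Dict[str, float]:
    """Return segments whose failure rate exceeds `ratio` times the overall rate."""
    event_list: List[Tuple[str, bool]] = list(events)
    overall = sum(not passed for _, passed in event_list) / len(event_list)
    rates = failure_rates(event_list)
    return {s: r for s, r in rates.items() if r > ratio * overall}


# Hypothetical log: selfie-check outcomes tagged by device age
log = ([("new_device", True)] * 95 + [("new_device", False)] * 5
       + [("old_device", True)] * 70 + [("old_device", False)] * 30)
print(segments_to_review(log))  # {'old_device': 0.3}
```

A segment that keeps showing up here is a signal to offer the document-check or assisted-review path earlier for those customers, rather than letting them retry a check that is unlikely to succeed.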

Data leakage and confidentiality risk when GenAI is used in fraud operations

Generative AI is increasingly used by fraud teams for case summarization, entity extraction, and investigative support, often requiring access to transaction data, internal notes, and SAR-adjacent context.

Without strict controls on data ingress, retention, and model scope, these tools can inadvertently expose regulated or confidential information beyond approved boundaries.

The risk is amplified when GenAI systems are integrated informally or outside established financial crime governance frameworks.

This is a particular challenge for global financial organizations, where employees may use off-the-shelf LLMs to streamline workflows without informing management.
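One common control on data ingress is to redact identifiers before any text leaves the approved boundary for a GenAI tool. The regular expressions below are simplified assumptions (they will miss some formats and over-match others) and are no substitute for a vetted data-loss-prevention service. Card-number candidates are checked with the Luhn algorithm to avoid redacting arbitrary digit strings.

```python
import re

# Simplified, illustrative patterns; not exhaustive for real-world formats
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
IBAN = re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]{4}){2,7}(?: ?[A-Z0-9]{1,4})?\b")
CARD = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    def card_sub(m: re.Match) -> str:
        digits = re.sub(r"\D", "", m.group())
        return "[CARD]" if luhn_valid(digits) else m.group()

    text = EMAIL.sub("[EMAIL]", text)
    text = IBAN.sub("[IBAN]", text)
    return CARD.sub(card_sub, text)


note = ("Customer jane.doe@example.com disputed card 4111 1111 1111 1111; "
        "funds went to GB82 WEST 1234 5698 7654 32.")
print(redact(note))  # Customer [EMAIL] disputed card [CARD]; funds went to [IBAN].
```

Redaction at the boundary complements, rather than replaces, contractual and technical controls on what an approved GenAI workspace may retain.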

How to solve this challenge: Rather than restricting generative AI use and risking productivity slowdowns, successful institutions design GenAI as a controlled workspace. Organizations with access to top-tier engineering talent can build proprietary models trained on approved internal sources and compliant with industry-specific privacy regulations.

Morgan Stanley implemented this approach by deploying AI @ Morgan Stanley Assistant, an internal GenAI tool powered by OpenAI’s GPT-4. The assistant supports 16,000 financial advisors in the bank’s Wealth Management division, letting them query internal research, data, and documents in natural language.

Rather than risk sensitive data leaking through consumer versions of ChatGPT, Morgan Stanley rolled out an enterprise-grade deployment that draws on a library of 100,000 internal documents.

Adversarial AI undermining fraud detection

Fraud prevention systems are increasingly confronting adversarial inputs generated by AI, including deepfake audio and video, synthetic identity documents, and algorithmically generated behavioral patterns.

These artifacts are designed specifically to exploit model assumptions and bypass automated verification layers.

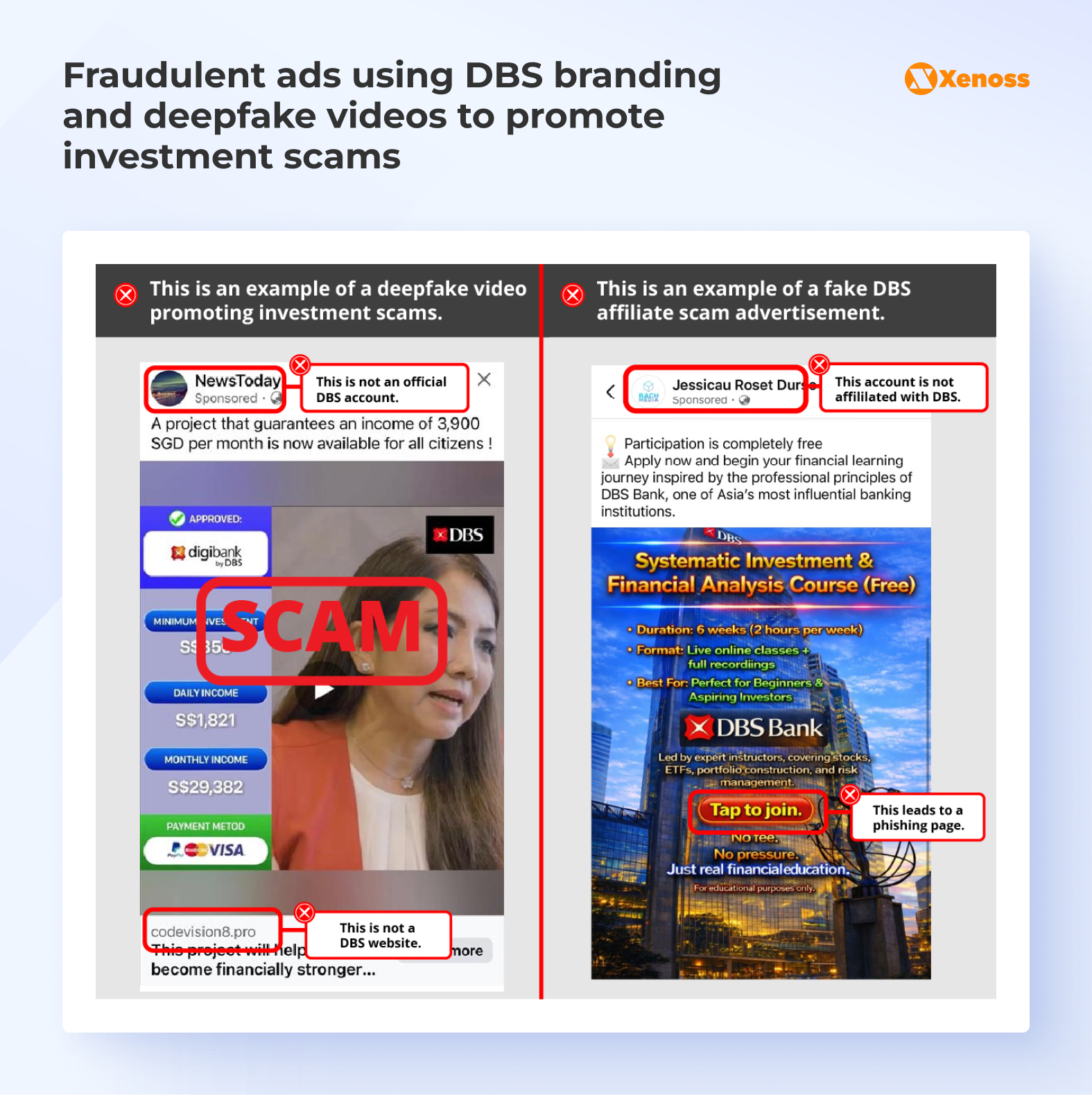

DBS, a Singapore-based bank, faced this challenge directly when scammers created deepfake videos of the bank’s executives to lure customers into investment scams. The bank was forced to issue a public warning to protect customers from engaging with AI-generated content on social media.

This and similar incidents show that traditional trust signals, such as visual identity checks, voice confirmation, and static documents, are losing reliability, forcing detection systems to operate in an increasingly hostile and adaptive threat environment.

How to solve this challenge: As fraudsters exploit generative AI to create complex, hard-to-detect scams, financial crime teams must accept that traditional verification signals like a face, a voice, or a document can now be faked.

One-touch identity checks are no longer reliable. Instead, teams should prioritize layering customer behavioral context over time: understanding how a user typically behaves, which devices they trust, how a transaction compares to their normal patterns, and whether multiple independent signals align.

This approach offers a more robust defense against deepfakes than any single verification checkpoint.
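The layered approach can be sketched as a rule that requires several independent, non-biometric signals to agree, so that one convincingly faked artifact (a face, a voice, a document) cannot carry a high-risk action on its own. The signal names and the “two of three context signals” rule are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    liveness_passed: bool        # Face or voice check; can be defeated by deepfakes
    trusted_device: bool         # Device previously seen on this account
    behavior_typical: bool       # Typing, navigation, and timing match the user's history
    amount_within_pattern: bool  # Transaction fits the customer's normal range


def decide(s: Signals, required: int = 2) -> str:
    """Approve only when enough independent context signals agree."""
    context_votes = sum([s.trusted_device, s.behavior_typical, s.amount_within_pattern])
    if context_votes >= required:
        return "approve"
    # A passed biometric can lift a borderline case to step-up, never to approval
    if context_votes == required - 1 and s.liveness_passed:
        return "step-up verification"
    return "hold for review"


deepfake_attempt = Signals(liveness_passed=True, trusted_device=False,
                           behavior_typical=False, amount_within_pattern=False)
genuine_new_phone = Signals(liveness_passed=True, trusted_device=False,
                            behavior_typical=True, amount_within_pattern=True)
print(decide(deepfake_attempt))   # hold for review
print(decide(genuine_new_phone))  # approve
```

A flawless deepfake that passes liveness still fails here, because the attacker would also need to reproduce the victim’s device history, behavior, and spending pattern.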

Bottom line

As AI becomes more accessible, financial fraud groups are leveraging cutting-edge models to bypass traditional identity controls, execute illegal transactions, and lure bank customers into fraudulent investment schemes.

To stay ahead of malicious actors, financial institutions must intentionally deploy AI in fraud detection.

Supplementing existing transaction scoring and identity controls with tools like graph ML for added context or intelligent AI agents for automation improves both detection accuracy and investigator productivity.

At the same time, given the sector’s sensitive nature, banking teams need to ensure their AI tools remain compliant, carefully validate detection models to reduce false positives, and keep humans in the loop for edge cases. Balancing AI-driven analysis and automation with thoughtful human oversight allows institutions to adopt innovative fraud detection tools while minimizing risk to customers.